Brain Tumor MRI Classification Using a Novel Deep Residual and Regional CNN

Abstract

1. Introduction

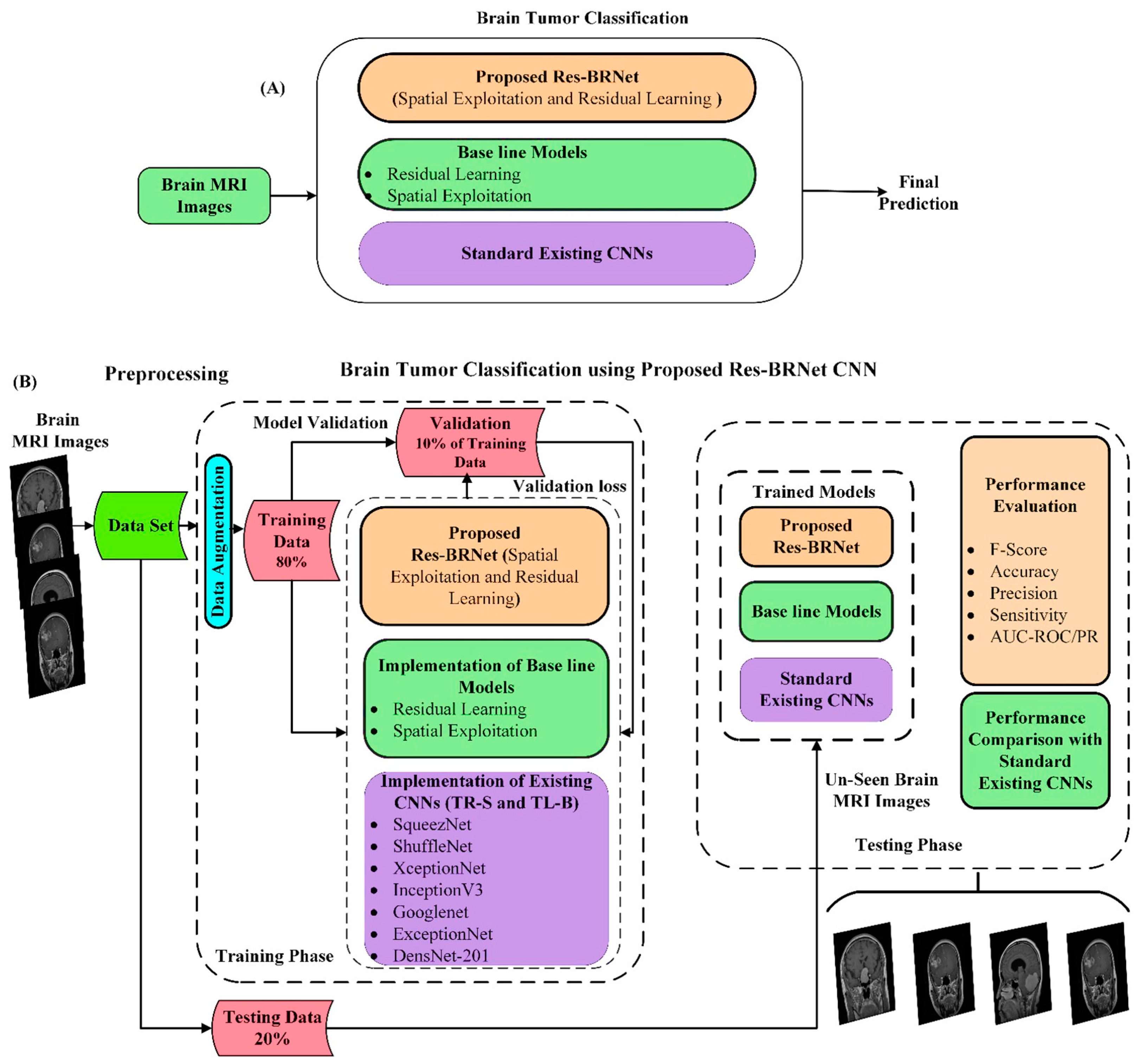

- A novel deep residual and regional CNN architecture, Res-BRNet, has been developed for brain tumor classification.

- The proposed Res-BRNet integrates spatial and residual blocks to learn complex tumor patterns from brain MRIs, which improves its brain tumor classification performance.

- The developed Res-BRNet employs regional and boundary-based operations, in a systematic order, within customized spatial and residual blocks to exploit spatial correlation information and textural variations in brain tumor MRIs.

- The systematic integration of residual and spatial blocks within the proposed Res-BRNet improves its discriminative capability and generalization. The spatial blocks extract homogeneity and boundary-defined features at the abstract level, whereas the residual blocks at the target level learn local and global texture variations of different brain tumors.
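The residual blocks mentioned above rest on the identity-shortcut idea of He et al.: a block outputs y = ReLU(F(x) + x), where F is a small stack of convolutions. The sketch below is a minimal, single-channel, pure-Python illustration of such a block (no batch normalization, no channel dimension). The function names and kernel values are hypothetical and are not taken from the Res-BRNet implementation.

```python
from typing import List

Matrix = List[List[float]]


def conv3x3_same(x: Matrix, kernel: Matrix) -> Matrix:
    """3x3 cross-correlation (what CNN 'convolution' layers compute) with zero
    padding, so the output has the same height and width as the input."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the image
                        acc += kernel[di + 1][dj + 1] * x[ii][jj]
            out[i][j] = acc
    return out


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def add(a: Matrix, b: Matrix) -> Matrix:
    return [[u + v for u, v in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def residual_block(x: Matrix, k1: Matrix, k2: Matrix) -> Matrix:
    """y = ReLU(F(x) + x) with F = conv -> ReLU -> conv (ResNet-style basic block)."""
    f = conv3x3_same(relu(conv3x3_same(x, k1)), k2)
    return relu(add(f, x))  # identity shortcut: x is added back unchanged


x = [[1.0, 2.0, 0.0], [0.0, 3.0, 1.0], [2.0, 0.0, 1.0]]
zero = [[0.0] * 3 for _ in range(3)]
# With all-zero weights F(x) = 0, so the block passes its non-negative input through.
print(residual_block(x, zero, zero))  # -> [[1.0, 2.0, 0.0], [0.0, 3.0, 1.0], [2.0, 0.0, 1.0]]
```

Because the shortcut adds x back unchanged, the block only has to learn the residual F(x); when F contributes nothing useful, the block can stay close to an identity mapping. During backpropagation the addition also gives the gradient a direct path around F, which is the usual explanation for why residual connections ease the vanishing-gradient problem in deep networks.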

2. Materials and Methods

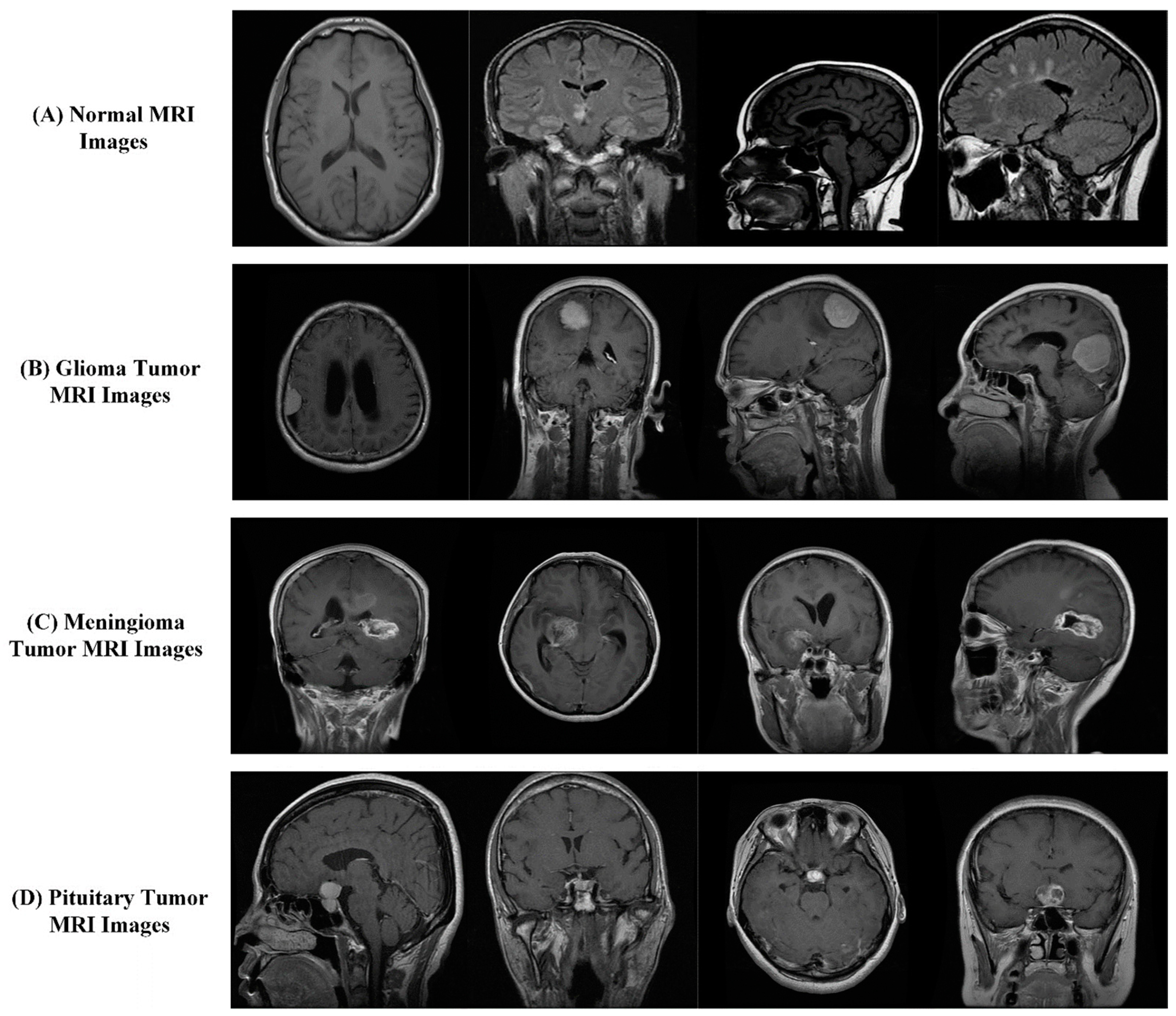

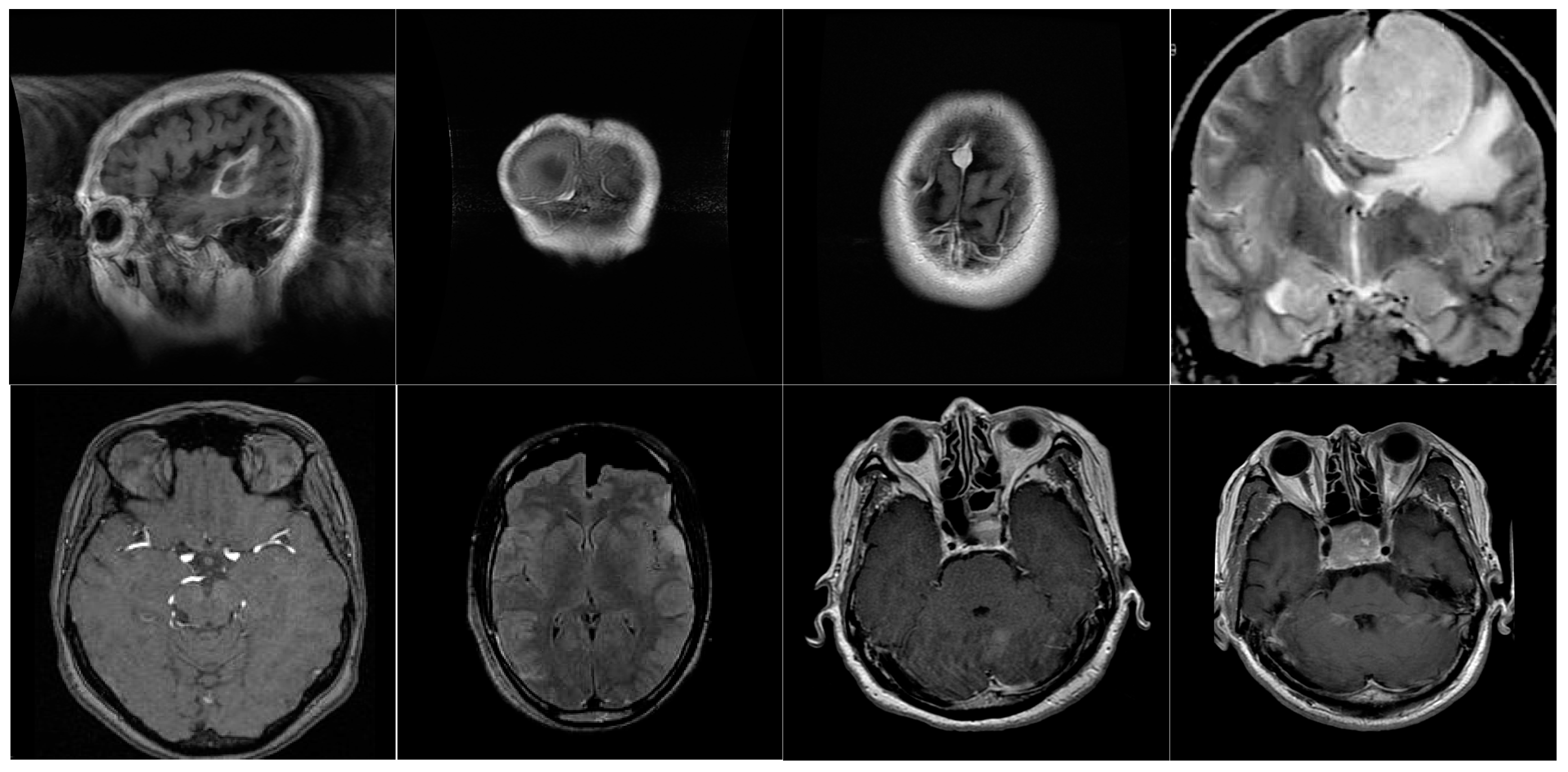

2.1. Dataset

2.2. Data Augmentation

2.3. Performance Metrics

2.4. The Developed Deep Res-BRNet-Based Categorization

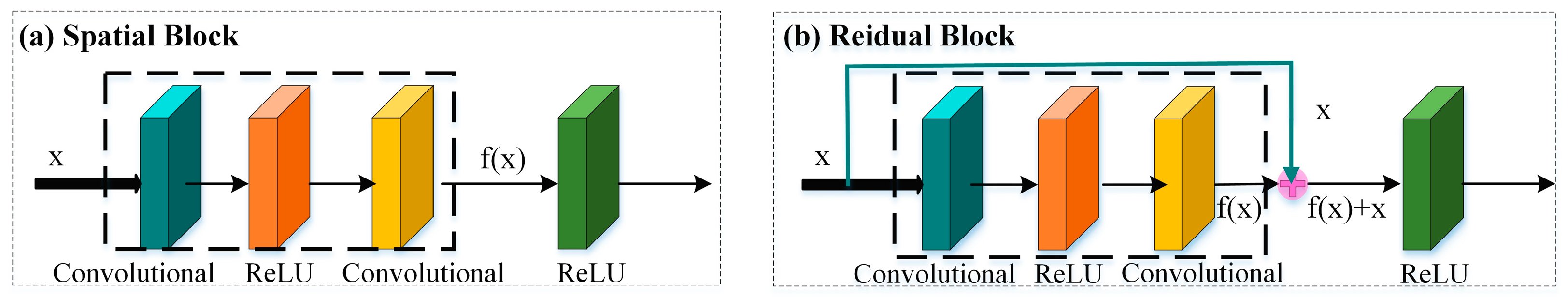

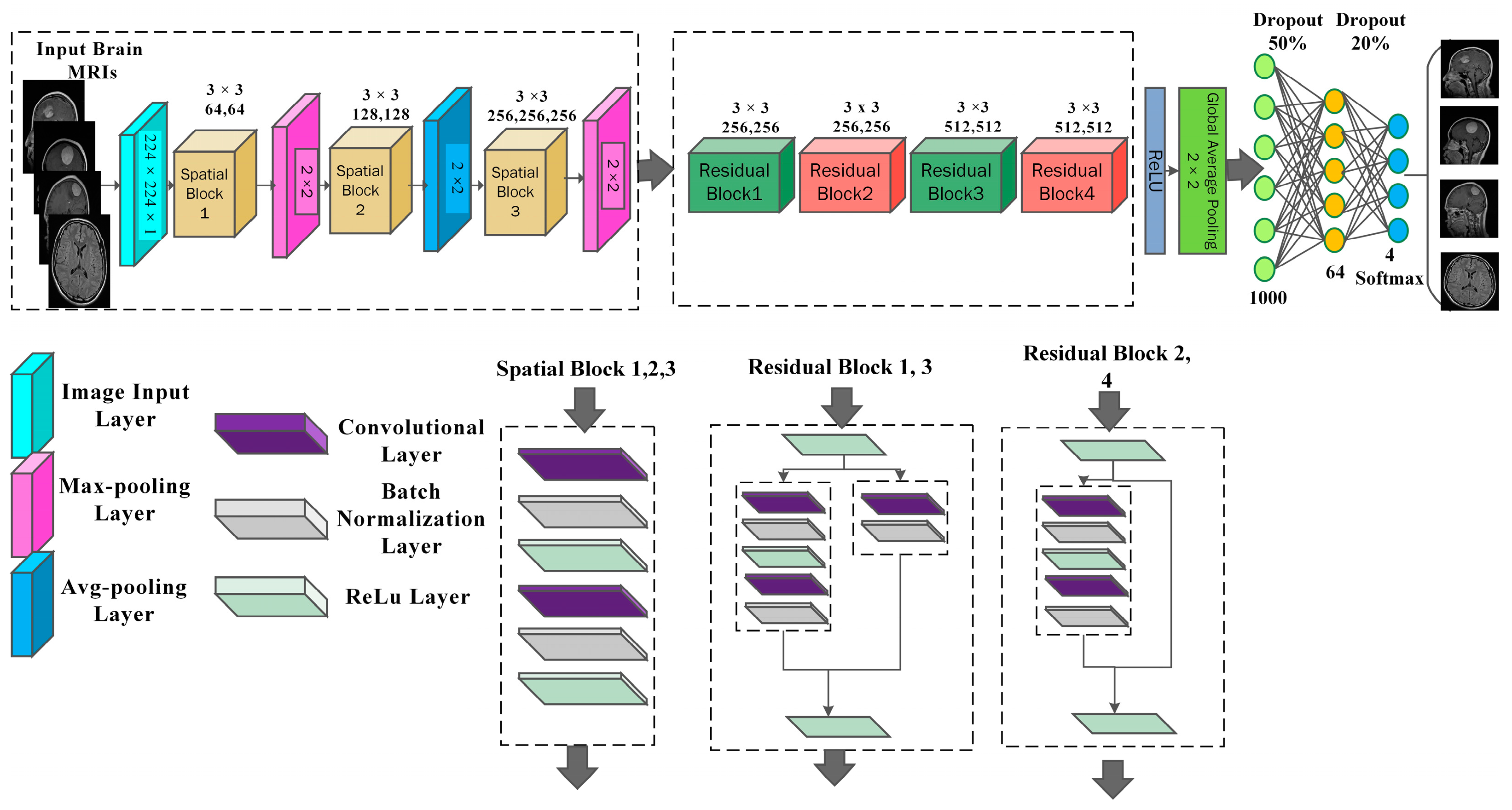

2.5. Structural Details of the Developed Res-BRNet

2.6. Benefits of the Proposed Res-BRNet for Image Content Analysis

- The developed residual and regional CNN architecture is designed to exploit image smoothness and sharpness dynamically, and it can adjust the degree of smoothing and sharpening to the spatial features of each image.

- Combining the spatial block with residual learning improves the model's overall detection ability, because the model captures textural features together with spatial correlations in the MR images.

- The systematic use of boundary and regional operations within the spatial blocks enhances the homogeneity of the different regions. The region operator, implemented with average pooling, smooths regional variations and suppresses noise caused by distortions during MRI acquisition. The boundary operator, implemented with max pooling, helps Res-BRNet capture discriminative local features.

- Residual blocks help the model capture texture and subtle contrast variations, and their skip connections mitigate the vanishing-gradient problem that commonly arises in very deep architectures.

- The pooling operations also down-sample the feature maps, which makes the model more robust to small shifts and distortions in the input image.
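The complementary behavior of the region operator (average pooling) and the boundary operator (max pooling), and the down-sampling both perform, can be seen on a toy 4x4 patch. The sketch below assumes non-overlapping 2x2 windows with stride 2; the window size, stride, and pixel values are illustrative assumptions, and how Res-BRNet merges the two pooled maps is not shown.

```python
from typing import List

Matrix = List[List[float]]


def pool2x2(x: Matrix, mode: str) -> Matrix:
    """Non-overlapping 2x2 pooling with stride 2: halves height and width."""
    if mode not in ("avg", "max"):
        raise ValueError("mode must be 'avg' or 'max'")
    rows = len(x) - len(x) % 2        # ignore an odd trailing row
    cols = len(x[0]) - len(x[0]) % 2  # ignore an odd trailing column
    out = []
    for i in range(0, rows, 2):
        out_row = []
        for j in range(0, cols, 2):
            window = [x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1]]
            # Region operator: the mean smooths intensities inside the window.
            # Boundary operator: the max keeps the strongest local response.
            out_row.append(sum(window) / 4 if mode == "avg" else max(window))
        out.append(out_row)
    return out


# Toy patch: a homogeneous region (10) next to a brighter structure (20),
# with one isolated noise spike (50) in the top-left corner.
patch = [
    [50.0, 10.0, 10.0, 20.0],
    [10.0, 10.0, 10.0, 20.0],
    [10.0, 10.0, 10.0, 20.0],
    [10.0, 10.0, 10.0, 20.0],
]
print(pool2x2(patch, "avg"))  # -> [[20.0, 15.0], [10.0, 15.0]]
print(pool2x2(patch, "max"))  # -> [[50.0, 20.0], [10.0, 20.0]]
```

Average pooling reduces the spike from 50 to 20 and blends the 10/20 transition into 15, which is the smoothing effect; max pooling keeps both the spike and the brighter side of the transition, which preserves sharp local features but also any isolated noise. In both cases the 4x4 input becomes a 2x2 map.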

2.7. Employment of Existing CNNs

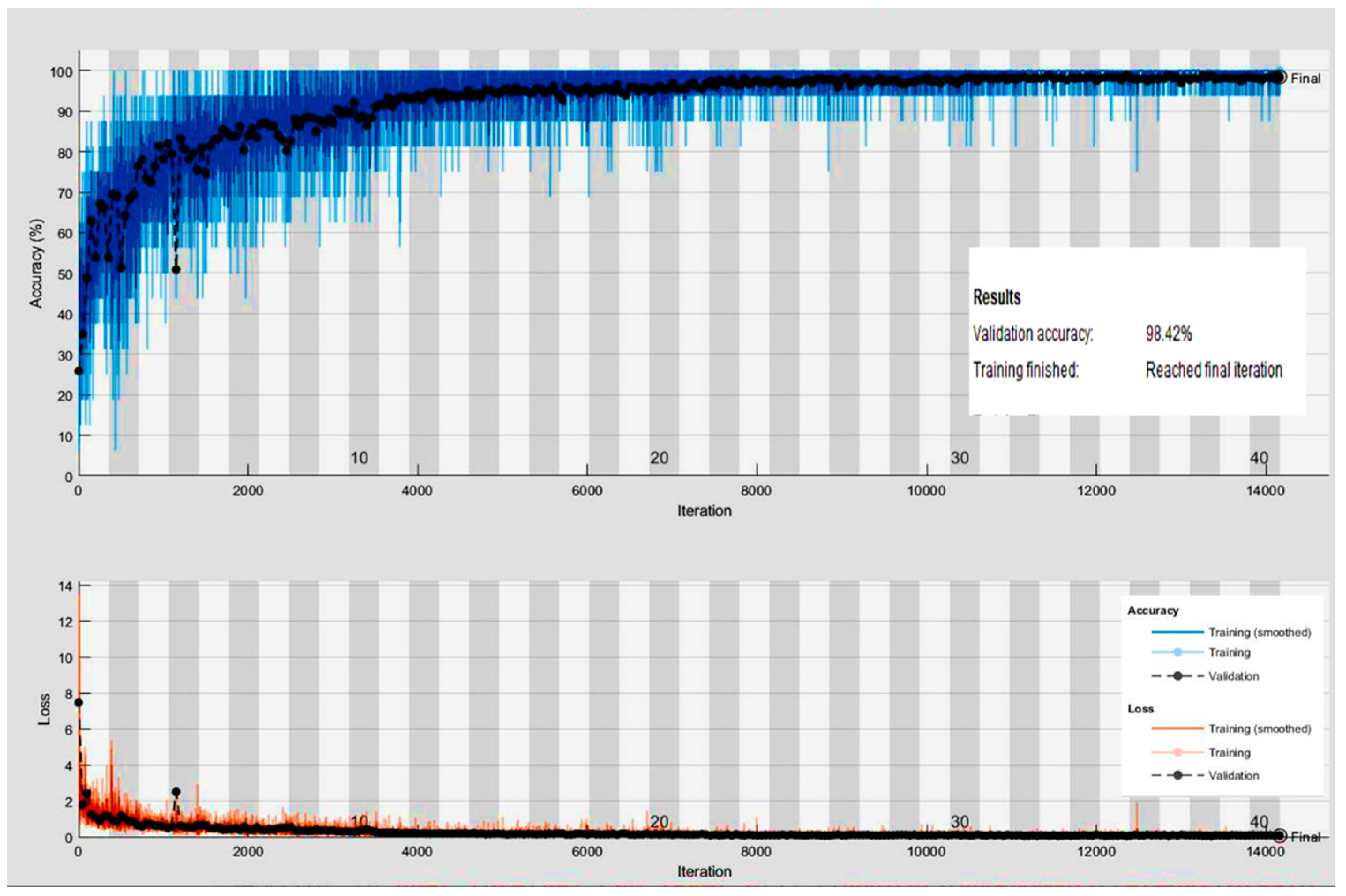

2.8. Implementation Details

3. Results and Discussion

3.1. Efficiency Analysis of the Proposed Res-BRNet

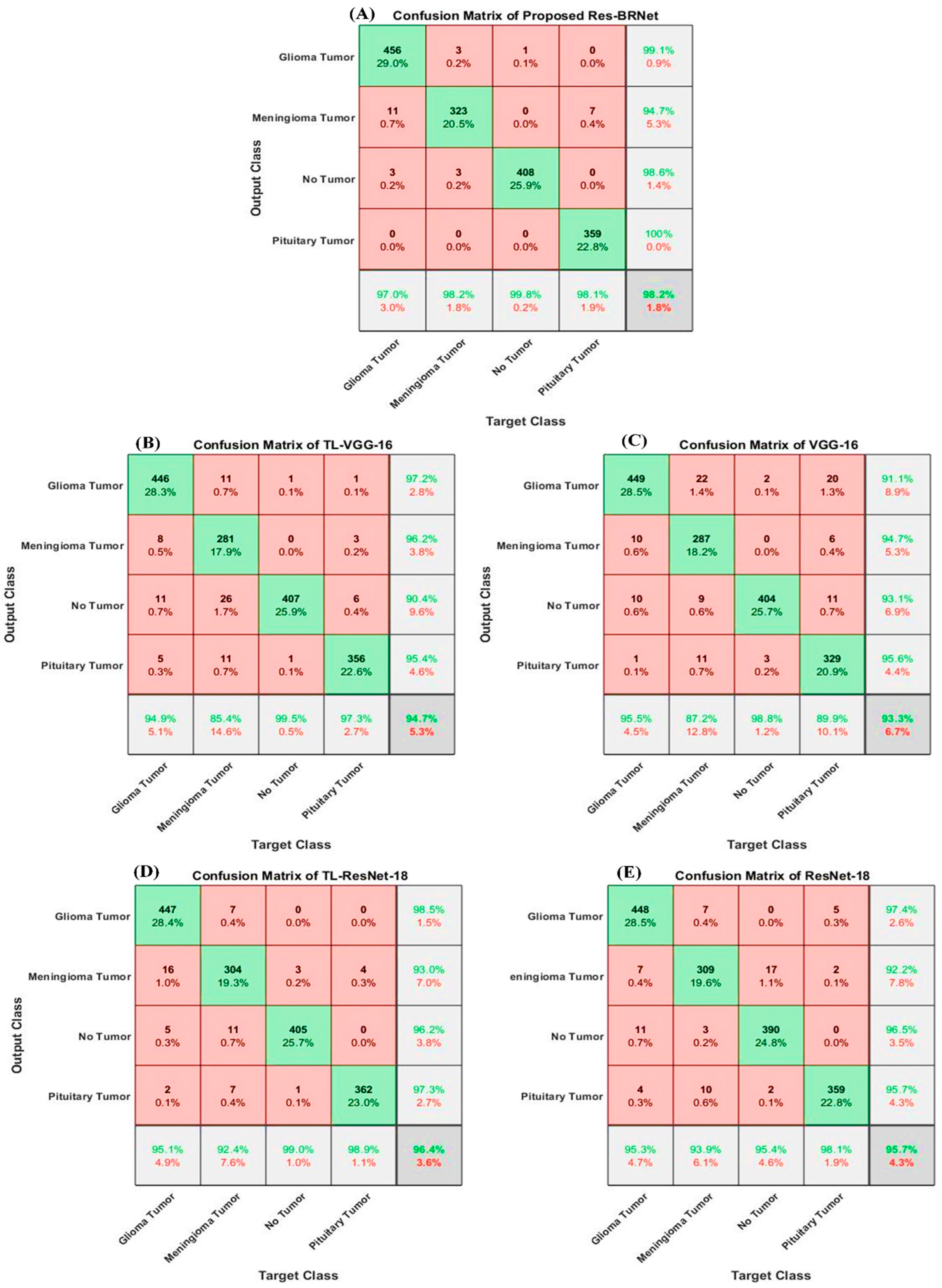

3.1.1. Analysis of Performance with Baseline Methods

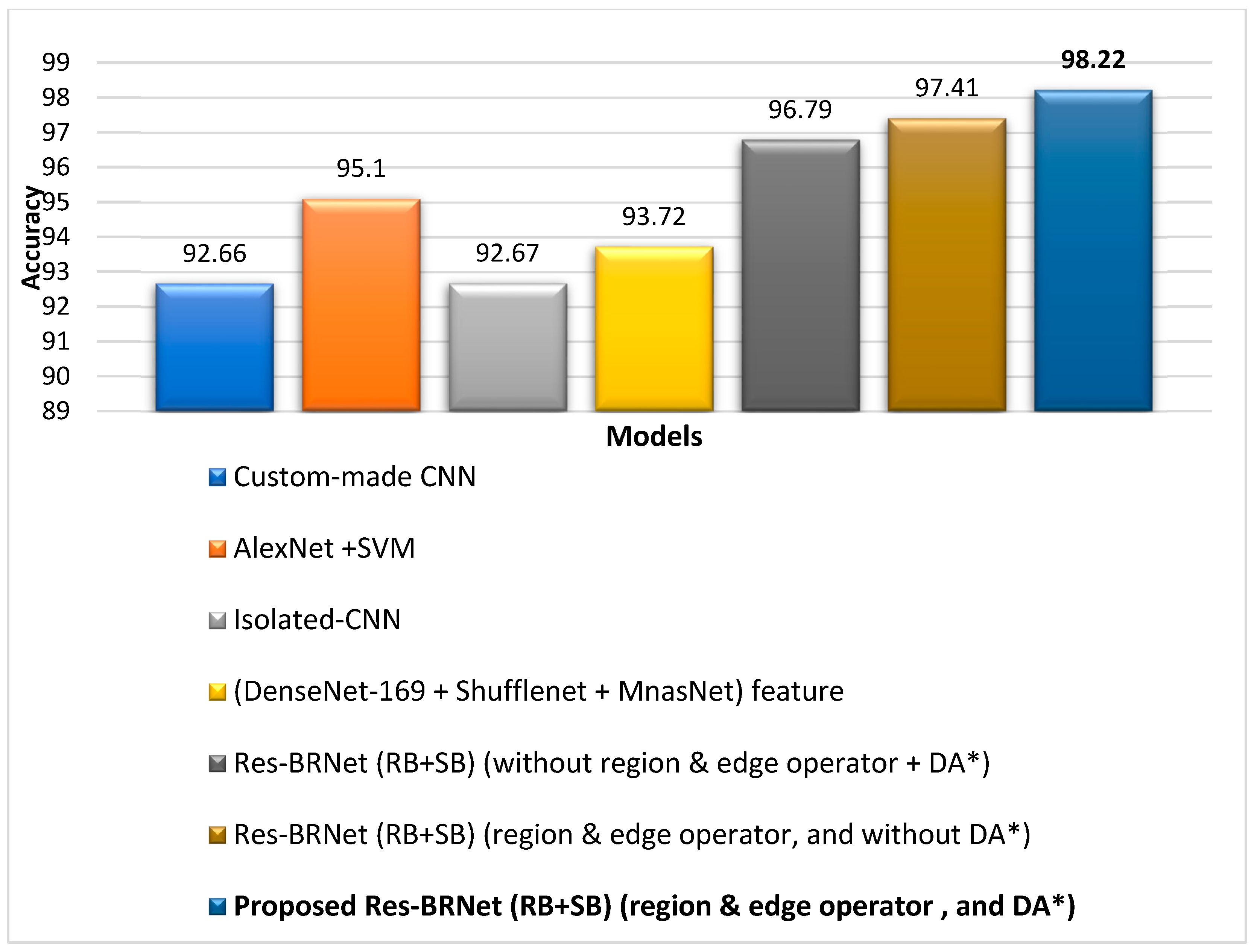

3.1.2. Analysis of Performance with Reported Techniques

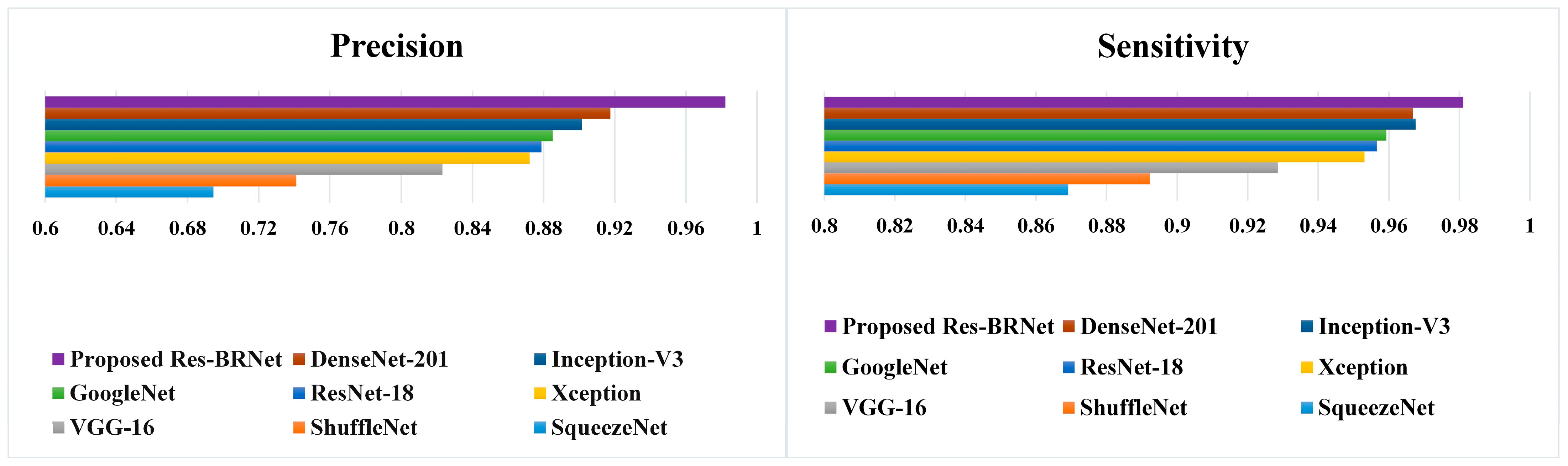

3.1.3. Performance Assessment with Existing CNNs

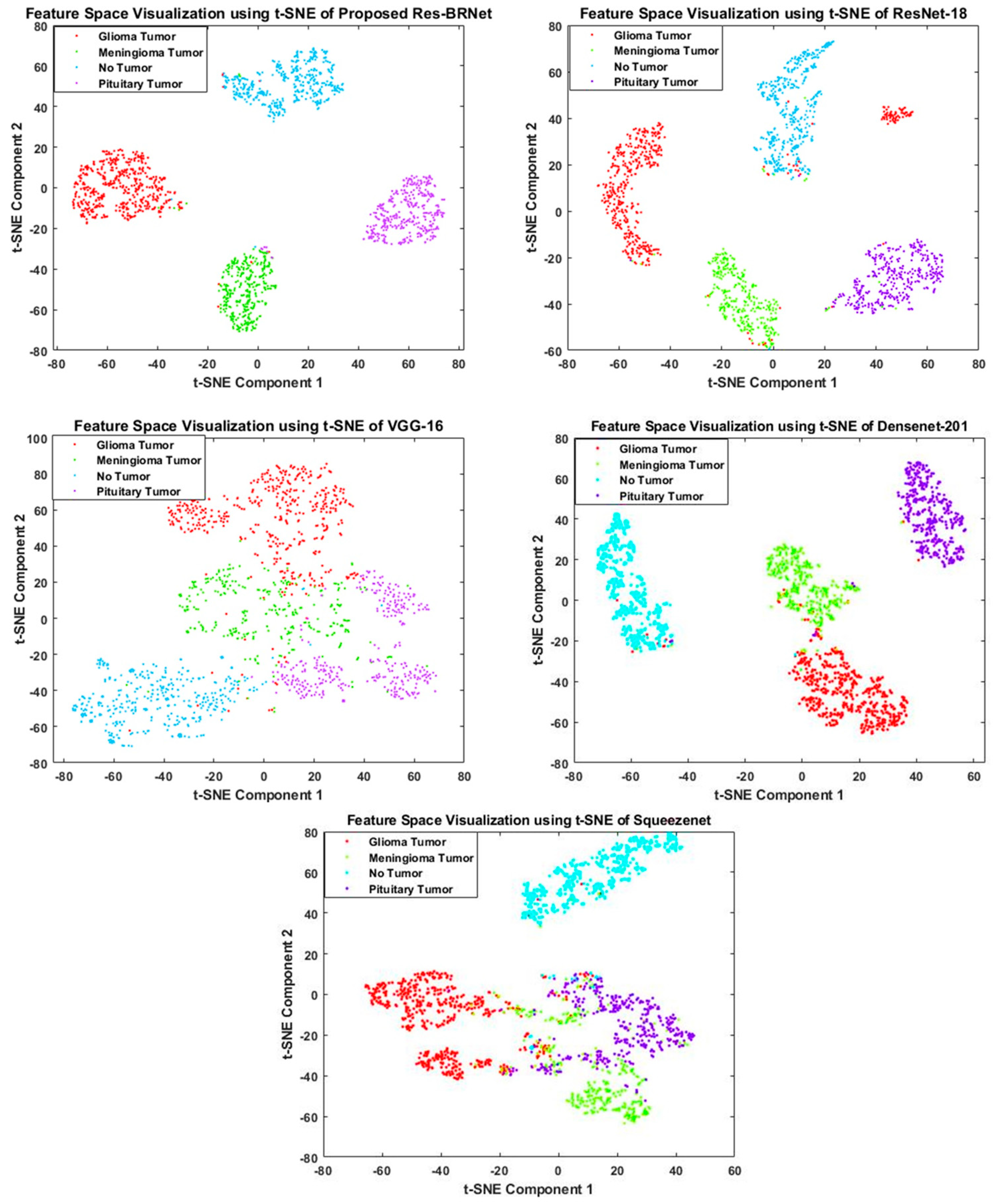

3.1.4. Feature Space-Based Performance Analysis

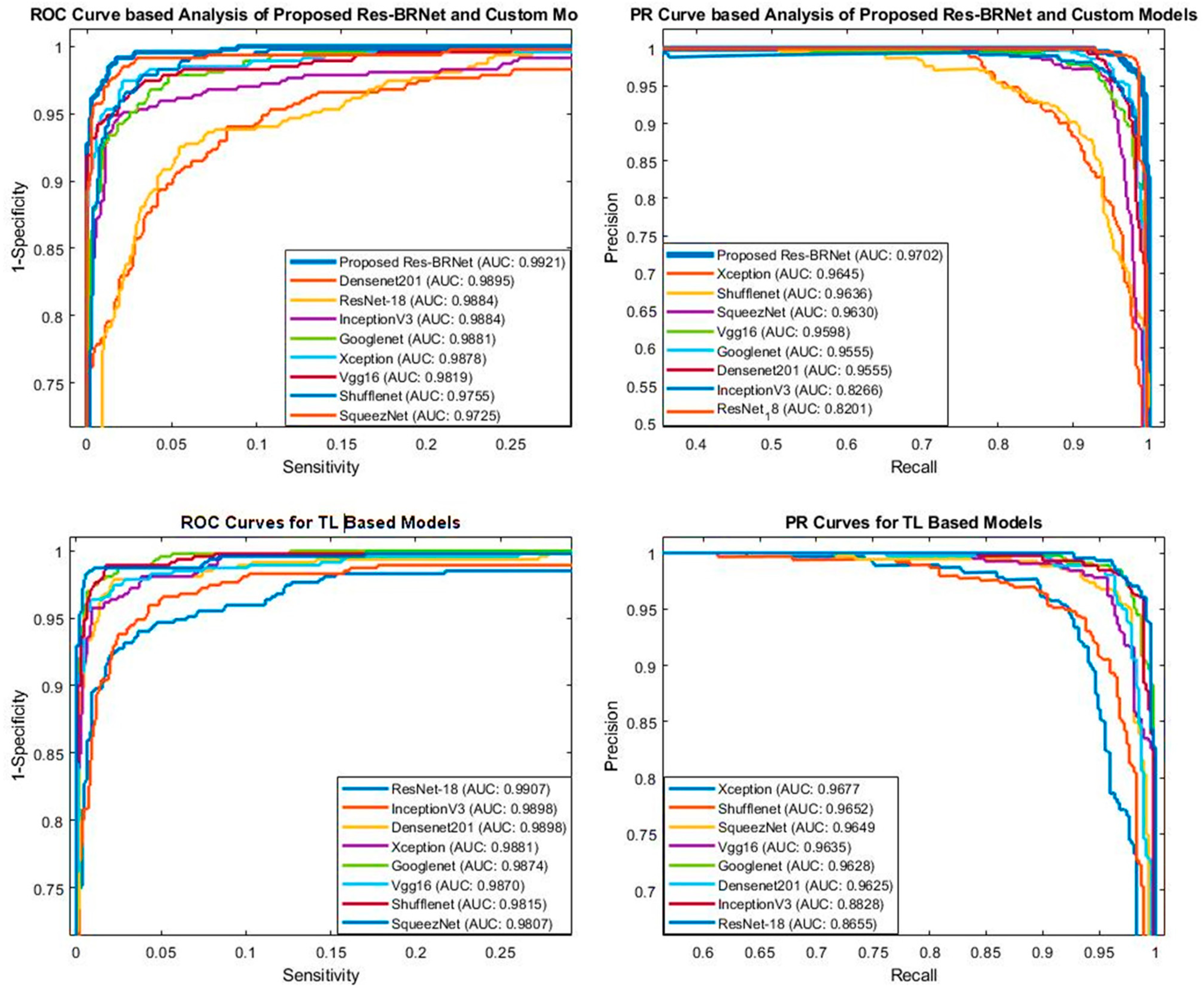

3.1.5. ROC and PR-AUC-Based Analysis

3.1.6. Screening Effectiveness of the Proposed Technique

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Behin, A.; Hoang-Xuan, K.; Carpentier, A.F.; Delattre, J.-Y. Primary brain tumours in adults. Lancet 2003, 361, 323–331.

- Miller, K.D.; Ostrom, Q.T.; Kruchko, C.; Patil, N.; Tihan, T.; Cioffi, G.; Fuchs, H.E.; Waite, K.A.; Jemal, A.; Siegel, R.L.; et al. Brain and other central nervous system tumor statistics. CA. Cancer J. Clin. 2021, 71, 381–406.

- El-Dahshan, E.-S.A.; Mohsen, H.M.; Revett, K.; Salem, A.-B.M. Computer-aided diagnosis of human brain tumor through MRI: A survey and a new algorithm. Expert Syst. Appl. 2014, 41, 5526–5545.

- Iftekharuddin, K.M.; Zheng, J.; Islam, M.A.; Ogg, R.J. Fractal-based brain tumor detection in multimodal MRI. Appl. Math. Comput. 2009, 207, 23–41.

- Zahoor, M.M.; Qureshi, S.A.; Bibi, S.; Khan, S.H.; Khan, A.; Ghafoor, U.; Bhutta, M.R. A New Deep Hybrid Boosted and Ensemble Learning-Based Brain Tumor Analysis Using MRI. Sensors 2022, 22, 2726.

- Khan, A.; Khan, S.H.; Saif, M.; Batool, A.; Sohail, A.; Khan, M.W. A Survey of Deep Learning Techniques for the Analysis of COVID-19 and their usability for Detecting Omicron. arXiv 2022, arXiv:2202.06372.

- Khan, S.H.; Sohail, A.; Khan, A.; Lee, Y.S. Classification and region analysis of COVID-19 infection using lung CT images and deep convolutional neural networks. arXiv 2020, arXiv:2009.08864.

- Asam, M.; Hussain, S.J.; Mohatram, M.; Khan, S.H.; Jamal, T.; Zafar, A.; Khan, A.; Ali, M.U.; Zahoora, U. Detection of Exceptional Malware Variants Using Deep Boosted Feature Spaces and Machine Learning. Appl. Sci. 2021, 11, 10464.

- Zahoor, M.M.; Qureshi, S.A.; Khan, A.; Rehman, A.U.; Rafique, M. A novel dual-channel brain tumor detection system for MR images using dynamic and static features with conventional machine learning techniques. Waves Random Complex Media 2022, 1–20.

- Khan, S.H.; Sohail, A.; Khan, A.; Lee, Y.-S. COVID-19 detection in chest X-ray images using a new channel boosted CNN. arXiv 2020, arXiv:2012.05073.

- Rauf, Z.; Sohail, A.; Khan, S.H.; Khan, A.; Gwak, J.; Maqbool, M. Attention-guided multi-scale deep object detection framework for lymphocyte analysis in IHC histological images. Microscopy 2023, 72, 27–42.

- Zahoora, U.; Khan, A.; Rajarajan, M.; Khan, S.H.; Asam, M.; Jamal, T. Ransomware detection using deep learning based unsupervised feature extraction and a cost sensitive Pareto Ensemble classifier. Sci. Rep. 2022, 12, 15647.

- Khan, S.H.; Iqbal, J.; Hassnain, S.A.; Owais, M.; Mostafa, S.M.; Hadjouni, M.; Mahmoud, A. COVID-19 detection and analysis from lung CT images using novel channel boosted CNNs. Expert Syst. Appl. 2023, 229, 120477.

- Alqahtani, A.; Zahoor, M.M.; Nasrullah, R.; Fareed, A.; Cheema, A.A.; Shahrose, A.; Irfan, M.; Alqhatani, A.; Alsulami, A.A.; Zaffar, M.; et al. Computer Aided COVID-19 Diagnosis in Pandemic Era Using CNN in Chest X-ray Images. Life 2022, 12, 1709.

- Akkus, Z.; Galimzianova, A.; Hoogi, A.; Rubin, D.L.; Erickson, B.J. Deep learning for brain MRI segmentation: State of the art and future directions. J. Digit. Imaging 2017, 30, 449–459.

- Khan, S.H.; Khan, A.; Lee, Y.S.; Hassan, M.; Jeong, W.K. Segmentation of shoulder muscle MRI using a new Region and Edge based Deep Auto-Encoder. Multimed. Tools Appl. 2022, 82, 14963–14984.

- Domingues, I.; Pereira, G.; Martins, P.; Duarte, H.; Santos, J.; Abreu, P.H. Using deep learning techniques in medical imaging: A systematic review of applications on CT and PET. Artif. Intell. Rev. 2020, 53, 4093–4160.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; p. 800.

- Asam, M.; Khan, S.H.; Akbar, A.; Bibi, S.; Jamal, T.; Khan, A.; Ghafoor, U.; Bhutta, M.R. IoT malware detection architecture using a novel channel boosted and squeezed CNN. Sci. Rep. 2022, 12, 15498.

- Gómez-Flores, W.; Pereira, W.C.d.A. A comparative study of pre-trained convolutional neural networks for semantic segmentation of breast tumors in ultrasound. Comput. Biol. Med. 2020, 126, 2419–2428.

- Rawat, W.; Wang, Z. Deep convolutional neural networks for image classification: A comprehensive review. Neural Comput. 2017, 29, 2352–2449.

- Arabahmadi, M.; Farahbakhsh, R.; Rezazadeh, J. Deep Learning for Smart Healthcare—A Survey on Brain Tumor Detection from Medical Imaging. Sensors 2022, 22, 1960.

- Shirazi, A.Z.; Fornaciari, E.; McDonnell, M.D.; Yaghoobi, M.; Cevallos, Y.; Tello-Oquendo, L.; Inca, D.; Gomez, G.A. The Application of Deep Convolutional Neural Networks to Brain Cancer Images: A Survey. J. Pers. Med. 2020, 10, 224.

- Xie, Y.; Zaccagna, F.; Rundo, L.; Testa, C.; Agati, R.; Lodi, R.; Manners, D.N.; Tonon, C. Convolutional Neural Network Techniques for Brain Tumor Classification (from 2015 to 2022): Review, Challenges, and Future Perspectives. Diagnostics 2022, 12, 1850.

- Hoang, Q.T.; Yong, K.-T.; Liu, X.; Mahony, D.; Chaitarvornkit, A.; Cohen, A.; Grootswagers, T. Detecting mild traumatic brain injury for athletes using SSVEP classification: A case study. Biomed. Signal Process. Control 2023, 86, 105274.

- Abdel-Nabi, H.; Ali, M.Z.; Awajan, A. A multi-scale 3-stacked-layer coned U-net framework for tumor segmentation in whole slide images. Biomed. Signal Process. Control 2023, 86, 105273.

- Paul, J.S.; Plassard, A.J.; Landman, B.A.; Fabbri, D. Deep learning for brain tumor classification. Med. Imaging 2017 Biomed. Appl. Mol. Struct. Funct. Imaging 2017, 10137, 1013710.

- Deepak, S.; Ameer, P. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

- Çinar, A.; Yildirim, M. Detection of tumors on brain MRI images using the hybrid convolutional neural network architecture. Med. Hypotheses 2020, 139, 109684.

- Sultan, H.H.; Salem, N.M.; Al-Atabany, W. Multi-Classification of Brain Tumor Images Using Deep Neural Network. IEEE Access 2019, 7, 69215–69225.

- Khawaldeh, S.; Pervaiz, U.; Rafiq, A.; Alkhawaldeh, R.S. Noninvasive grading of glioma tumor using magnetic resonance imaging with convolutional neural networks. Appl. Sci. 2018, 8, 27.

- Khan, M.A.; Ashraf, I.; Alhaisoni, M.; Damaševičius, R.; Scherer, R.; Rehman, A.; Bukhari, S.A.C. Multimodal Brain Tumor Classification Using Deep Learning and Robust Feature Selection: A Machine Learning Application for Radiologists. Diagnostics 2020, 10, 565.

- Özyurt, F.; Sert, E.; Avci, E.; Dogantekin, E. Brain tumor detection based on Convolutional Neural Network with neutrosophic expert maximum fuzzy sure entropy. Measurement 2019, 147, 106830.

- Br35H: Brain Tumor Detection 2020. Kaggle. Available online: https://www.kaggle.com/datasets/ahmedhamada0/brain-tumor-detection (accessed on 8 September 2022).

- Brain Tumor Classification (MRI). Kaggle. Available online: https://www.kaggle.com/datasets/sartajbhuvaji/brain-tumor-classification-mri (accessed on 8 September 2022).

- Cheng, J. Brain Tumor Dataset. 2017. Available online: https://figshare.com/articles/brain_tumor_dataset/1512427 (accessed on 2 February 2022).

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Buckland, M.; Gey, F. The relationship between recall and precision. J. Am. Soc. Inf. Sci. 1994, 45, 12–19.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, ACM, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

- Diebold, F.X.; Mariano, R.S. Comparing predictive accuracy. J. Bus. Econ. Stat. 2002, 20, 134–144.

- Sokolova, M.; Japkowicz, N.; Szpakowicz, S. Beyond Accuracy, F-Score and ROC: A Family of Discriminant Measures for Performance Evaluation; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1015–1021.

- Cortes, C.; Mohri, M. Confidence intervals for the area under the ROC Curve. In Advances in Neural Information Processing Systems 17; MIT: Cambridge, MA, USA, 2005.

- DiCiccio, T.J.; Efron, B. Bootstrap confidence intervals. Stat. Sci. 1996, 11, 189–228.

- Hussain, S.; Khan, A. Coronavirus Disease Analysis using Chest X-ray Images and a Novel Deep Convolutional Neural Network. Photodiagnosis Photodyn. Ther. 2020, 35, 102473.

- Mallick, A.; Roy, S.; Chaudhuri, S.S.; Roy, S. Optimization of Laplace of Gaussian (LoG) filter for enhanced edge detection: A new approach. In Proceedings of the International Conference on Control, Instrumentation, Energy and Communication, CIEC 2014, Calcutta, India, 31 January–2 February 2014; pp. 658–661.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. 2016, 770–778.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Khan, S.H.; Shah, N.S.; Nuzhat, R.; Majid, A.; Alquhayz, H.; Khan, A. Malaria parasite classification framework using a novel channel squeezed and boosted CNN. Reprod. Syst. Sex. Disord. 2022, 71, 271–282.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Zagoruyko, S.; Komodakis, N. Wide Residual Networks. Proc. Br. Mach. Vis. Conf. 2016, 87, 87.1–87.12.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Yadav, S.S.; Jadhav, S.M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data 2019, 6, 113.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Ruder, S. An Overview of Gradient Descent Optimization Algorithms. arXiv 2016, arXiv:1609.04747.

- Irmak, E. Multi-Classification of Brain Tumor MRI Images Using Deep Convolutional Neural Network with Fully Optimized Framework. Iran. J. Sci. Technol. Trans. Electr. Eng. 2021, 45, 1015–1036.

- Senan, E.M.; Jadhav, M.E.; Rassem, T.H.; Aljaloud, A.S.; Mohammed, B.A.; Al-Mekhlafi, Z.G. Early Diagnosis of Brain Tumour MRI Images Using Hybrid Techniques between Deep and Machine Learning. Comput. Math. Methods Med. 2022, 2022, 1–17.

- Alanazi, M.F.; Ali, M.U.; Hussain, S.J.; Zafar, A.; Mohatram, M.; Irfan, M.; AlRuwaili, R.; Alruwaili, M.; Ali, N.H.; Albarrak, A.M. Brain Tumor/Mass Classification Framework Using Magnetic-Resonance-Imaging-Based Isolated and Developed Transfer Deep-Learning Model. Sensors 2022, 22, 372.

- Kang, J.; Ullah, Z.; Gwak, J. MRI-based brain tumor classification using ensemble of deep features and machine learning classifiers. Sensors 2021, 21, 2222.

- Van Der Maaten, L.; Hinton, G. Visualizing Data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Split | Glioma | Meningioma | Pituitary | Normal | Total |
|---|---|---|---|---|---|
| Training (80%) | 1882 | 1316 | 1465 | 1635 | 6298 |
| Validation (10% of training) | 188 | 132 | 146 | 163 | 629 |
| Testing (20%) | 470 | 329 | 366 | 409 | 1574 |
| Total (100%) | 2352 | 1645 | 1831 | 2044 | 7872 |
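The training and testing rows are consistent with a per-class 80/20 split. The short check below reproduces them from the per-class totals; the round-half-up rule is an assumption, since the section does not state how fractional counts were rounded. The validation row is left out: because the Total row equals training plus testing, the validation images appear to be drawn from the training set rather than added to it.

```python
from typing import Dict, Tuple

# Per-class totals from the "Total (100%)" row of the table above.
CLASS_TOTALS: Dict[str, int] = {"Glioma": 2352, "Meningioma": 1645, "Pituitary": 1831, "Normal": 2044}


def split_80_20(total: int) -> Tuple[int, int]:
    """Split one class 80/20, rounding half up with integer arithmetic (assumed rule)."""
    train = (total * 8 + 5) // 10
    return train, total - train


splits = {name: split_80_20(n) for name, n in CLASS_TOTALS.items()}
train_total = sum(train for train, _ in splits.values())
test_total = sum(test for _, test in splits.values())

for name, (train, test) in splits.items():
    print(f"{name:<11} train={train:5d} test={test:4d}")
print(f"{'Total':<11} train={train_total:5d} test={test_total:4d} all={train_total + test_total}")
# Expected totals: train=6298, test=1574, all=7872 (matching the table)
```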

| Symbol | Description |
|---|---|
|  | Output of spatial block |
|  | Output of residual block |
|  | Output of convolutional block |
| k |  |
|  | Output feature map |
|  | Output of average pooling |
|  | Output of max pooling |
|  | Output of dense layer |

Performance Comparison with Baseline Architecture CNNs

| Model | Accuracy % | Sensitivity | Precision | F1-Score |
|---|---|---|---|---|
| VGG-16 (SB + DA *) | 93.32 | 0.9285 | 0.8231 | 0.8719 |
| TL_VGG-16 (SB + DA *) | 94.66 | 0.9426 | 0.8569 | 0.8961 |
| ResNet-18 (SB + DA *) | 95.67 | 0.9566 | 0.8788 | 0.9158 |
| TL_ResNet-18 (RB + DA *) | 96.44 | 0.9641 | 0.8998 | 0.9303 |
| Res-BRNet (RB + SB) (without region and edge operators + DA *) | 96.79 | 0.9661 | 0.9467 | 0.9385 |
| Res-BRNet (RB + SB) (with region and edge operators, without DA *) | 97.59 | 0.9712 | 0.9723 | 0.9446 |
| Proposed Res-BRNet (RB + SB) (with region and edge operators and DA *) | 98.22 | 0.9811 | 0.9822 | 0.9641 |

* DA: data augmentation.
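The accuracy, sensitivity, precision, and F1-score columns are standard confusion-matrix metrics. A minimal sketch for a four-class problem follows, assuming one-vs-rest counts per class and macro averaging over the classes; the averaging scheme is an assumption, as this section does not state it. The confusion matrix is made up for illustration and is not a result from the paper.

```python
from typing import Dict, List


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """cm[i][j] = number of images of true class i predicted as class j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    sens, prec, f1 = [], [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # images of class c that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        recall = tp / (tp + fn) if tp + fn else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        sens.append(recall)
        prec.append(precision)
        f1.append(2 * precision * recall / (precision + recall) if precision + recall else 0.0)
    return {
        "accuracy": correct / total,
        "sensitivity": sum(sens) / n,  # macro-averaged recall
        "precision": sum(prec) / n,    # macro-averaged precision
        "f1": sum(f1) / n,             # mean of per-class F1-scores
    }


# Hypothetical counts; rows/columns: Glioma, Meningioma, Pituitary, Normal.
CM = [
    [8, 2, 0, 0],
    [0, 9, 1, 0],
    [0, 0, 10, 0],
    [1, 0, 0, 9],
]
for name, value in macro_metrics(CM).items():
    print(f"{name:<11} {value:.4f}")
```

In this toy example the mean of the per-class F1-scores (about 0.8997) differs from the harmonic mean of the averaged sensitivity (0.9000) and precision (about 0.9040), which is about 0.9020: a macro-averaged F1-score is in general not the harmonic mean of macro precision and macro recall.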

Performance Comparison with Reported Techniques

| Model | Dataset | Classes | Accuracy % | Sensitivity | Precision | F1-Score ± CI |
|---|---|---|---|---|---|---|
| Custom-made CNN [59] | Figshare | 5 | 92.66 | - | - | - |
| AlexNet + SVM [60] | MRI dataset | 4 | 95.10 | 95.25 | - | - |
| Isolated-CNN [61] | Kaggle | 4 | 92.67 | - | - | - |
| (DenseNet-169 + ShuffleNet + MnasNet) features [62] | BT-large-4c | 4 | 93.72 | - | - | - |
| Proposed Res-BRNet | Figshare + Kaggle | 4 | 98.22 | 0.9811 | 0.9822 | 0.9641 ± 0.0359 |

Performance Comparison with Custom-Made CNNs

| Model | Accuracy % | Sensitivity | Precision | F1-Score ± CI |
|---|---|---|---|---|
| SqueezeNet | 87.16 | 0.8691 | 0.6946 | 0.7671 ± 0.2430 |
| ShuffleNet | 89.45 | 0.8923 | 0.7411 | 0.8047 ± 0.1953 |
| VGG-16 | 93.32 | 0.9285 | 0.8231 | 0.8719 ± 0.1281 |
| Xception | 95.36 | 0.9531 | 0.8721 | 0.9101 ± 0.0899 |
| ResNet-18 | 95.67 | 0.9566 | 0.8788 | 0.9158 ± 0.0842 |
| GoogLeNet | 95.87 | 0.9593 | 0.8851 | 0.9196 ± 0.0804 |
| Inception-V3 | 96.56 | 0.9676 | 0.9015 | 0.9331 ± 0.0669 |
| DenseNet-201 | 97.01 | 0.9668 | 0.9175 | 0.9406 ± 0.0594 |
| Proposed Res-BRNet (with region and edge operators and augmentation) | 98.22 | 0.9811 | 0.9822 | 0.9641 ± 0.0359 |

Performance Comparison with TL-Based CNNs

| Model | Accuracy % | Sensitivity | Precision | F1-Score ± CI |
|---|---|---|---|---|
| TL_SqueezeNet | 90.91 | 0.9108 | 0.7685 | 0.8315 ± 0.1685 |
| TL_ShuffleNet | 92.31 | 0.9155 | 0.8056 | 0.8521 ± 0.1479 |
| TL_VGG-16 | 94.66 | 0.9426 | 0.8569 | 0.8961 ± 0.1039 |
| TL_Xception | 96.37 | 0.9611 | 0.8996 | 0.9285 ± 0.0715 |
| TL_ResNet-18 | 96.44 | 0.9641 | 0.8998 | 0.9303 ± 0.0697 |
| TL_GoogLeNet | 96.37 | 0.9641 | 0.8985 | 0.9291 ± 0.0709 |
| TL_Inception-V3 | 97.26 | 0.9711 | 0.9225 | 0.9459 ± 0.0541 |
| TL_DenseNet-201 | 97.77 | 0.9778 | 0.9349 | 0.9557 ± 0.0443 |
| Proposed Res-BRNet (with region and edge operators and augmentation) | 98.22 | 0.9811 | 0.9822 | 0.9641 ± 0.0359 |

| Improvement | Accuracy % | Sensitivity % | Precision % | F1-Score % |
|---|---|---|---|---|
| TR-SC to TL-B | 0.76–3.75 | 1.1–4.45 | 1.74–7.39 | 1.51–6.44 |
| TR-SC to Proposed Res-BRNet | 1.2–11.06 | 1.43–11.02 | 6.47–28.76 | 2.35–19.07 |
| TL-B to Proposed Res-BRNet | 0.45–7.31 | 1.1–4.17 | 1.74–7.39 | 1.51–6.44 |
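Each improvement range is the smallest and largest difference between the proposed model and a group of baselines, in percentage points for accuracy. The sketch below recomputes the accuracy column from the custom-made and TL-based comparison tables, assuming that TR-SC refers to the CNNs listed without the TL_ prefix and TL-B to the TL-based CNNs. Under that assumption it reproduces the accuracy ranges reported for the proposed Res-BRNet (1.21, shown as 1.2 in the table, to 11.06; and 0.45 to 7.31).

```python
from typing import Dict, Tuple

# Accuracy (%) values copied from the comparison tables above.
FROM_SCRATCH: Dict[str, float] = {
    "SqueezeNet": 87.16, "ShuffleNet": 89.45, "VGG-16": 93.32, "Xception": 95.36,
    "ResNet-18": 95.67, "GoogLeNet": 95.87, "Inception-V3": 96.56, "DenseNet-201": 97.01,
}
TRANSFER_LEARNING: Dict[str, float] = {
    "TL_SqueezeNet": 90.91, "TL_ShuffleNet": 92.31, "TL_VGG-16": 94.66, "TL_Xception": 96.37,
    "TL_ResNet-18": 96.44, "TL_GoogLeNet": 96.37, "TL_Inception-V3": 97.26, "TL_DenseNet-201": 97.77,
}
RES_BRNET_ACCURACY = 98.22


def gain_range(baselines: Dict[str, float], target: float) -> Tuple[float, float]:
    """Smallest and largest accuracy gain (percentage points) of `target` over the baselines."""
    gains = [round(target - acc, 2) for acc in baselines.values()]
    return min(gains), max(gains)


print(gain_range(FROM_SCRATCH, RES_BRNET_ACCURACY))       # -> (1.21, 11.06)
print(gain_range(TRANSFER_LEARNING, RES_BRNET_ACCURACY))  # -> (0.45, 7.31)
```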

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zahoor, M.M.; Khan, S.H.; Alahmadi, T.J.; Alsahfi, T.; Mazroa, A.S.A.; Sakr, H.A.; Alqahtani, S.; Albanyan, A.; Alshemaimri, B.K. Brain Tumor MRI Classification Using a Novel Deep Residual and Regional CNN. Biomedicines 2024, 12, 1395. https://doi.org/10.3390/biomedicines12071395
