Applications of Multimodal Artificial Intelligence in Non-Hodgkin Lymphoma B Cells

Abstract

1. Introduction

2. Multimodal AI

2.1. Different Types of Data Modalities

2.1.1. Imaging Modality

2.1.2. Medical Records

2.1.3. Multi-Omics Data

2.2. The Multimodal AI Framework

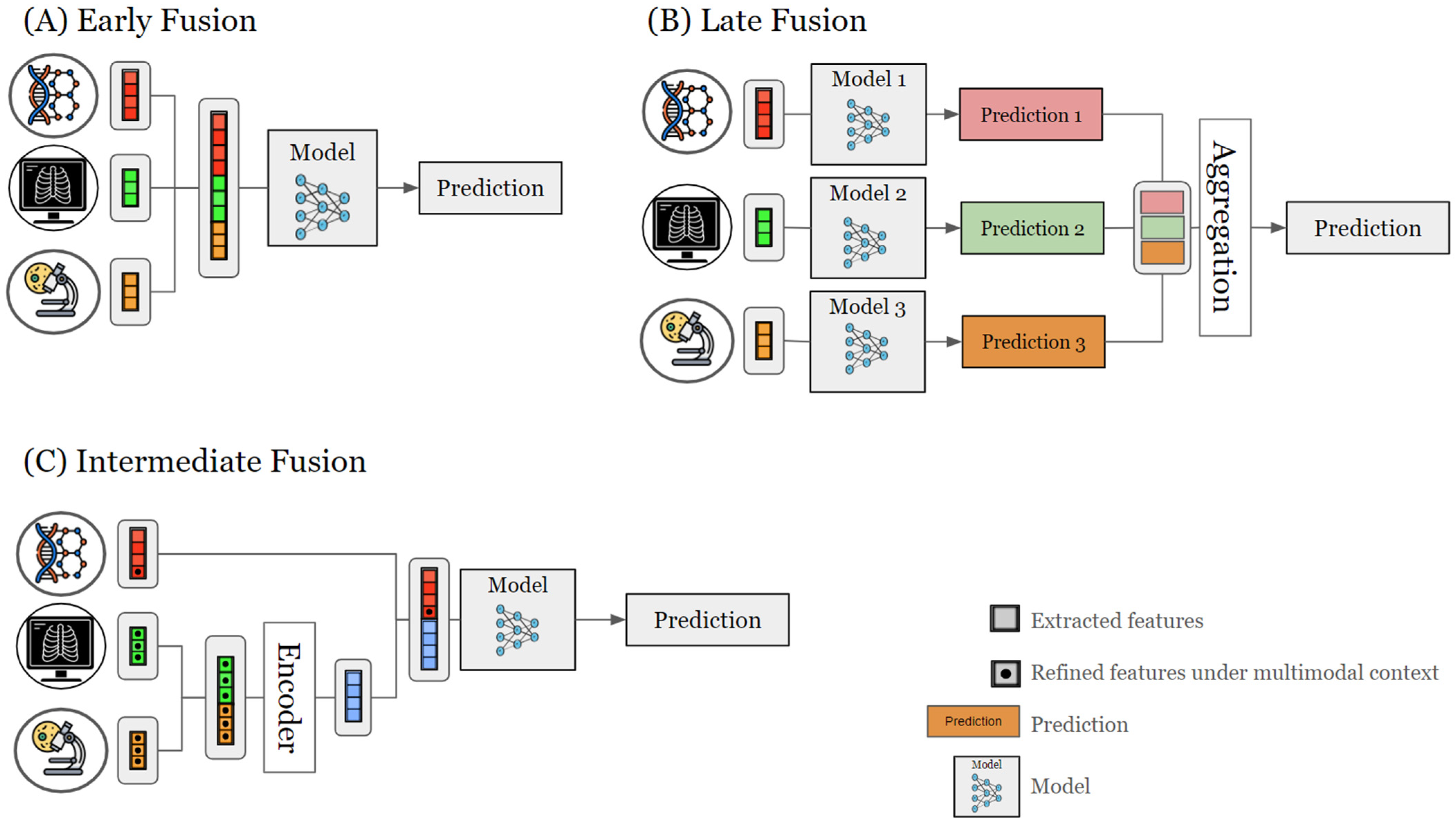

2.3. Data Fusion Strategies

2.3.1. Early Fusion

2.3.2. Late Fusion

2.3.3. Intermediate Fusion

2.4. Learning Methods

2.4.1. Supervised Methods

2.4.2. Unsupervised Methods

2.4.3. Semi-Supervised Methods

2.5. Application of Multimodal AI in Precision Medicine

2.5.1. Liquid Biopsy

2.5.2. Immunotherapy

2.5.3. Surgery

2.5.4. Clinical Trials

3. Case Studies of Multimodal AI in B-NHLs

3.1. Investigating Tumor B-NHLs and TME Ecosystem

3.2. Immune Biomarker Discovery

3.3. Therapy Optimization

3.4. Clinical Investigations

4. Limitations and Challenges

4.1. Data Quality and Availability

4.1.1. Omics

4.1.2. Imaging

4.2. AI Methods and Strategies for Data Fusion

4.3. Clinical Implementation

5. Future Directions

5.1. Cancer Patient Digital Twin

5.2. Virtual Clinical Trial

5.3. Healthcare System

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Gao, F.; Huang, K.; Xing, Y. Artificial intelligence in omics. Genom. Proteom. Bioinform. 2022, 20, 811–813. [Google Scholar] [CrossRef] [PubMed]

- Lipkova, J.; Chen, R.J.; Chen, B.; Lu, M.Y.; Barbieri, M.; Shao, D.; Vaidya, A.J.; Chen, C.; Zhuang, L.; Williamson, D.F.K.; et al. Artificial intelligence for multimodal data integration in oncology. Cancer Cell 2022, 40, 1095–1110. [Google Scholar] [CrossRef] [PubMed]

- Truhn, D.; Eckardt, J.-N.; Ferber, D.; Kather, J.N. Large language models and multimodal foundation models for precision oncology. NPJ Precis. Oncol. 2024, 8, 72. [Google Scholar] [CrossRef] [PubMed]

- Biswas, N.; Chakrabarti, S. Artificial Intelligence (AI)-Based Systems Biology Approaches in Multi-Omics Data Analysis of Cancer. Front. Oncol. 2020, 10, 588221. [Google Scholar] [CrossRef] [PubMed]

- Acosta, J.N.; Falcone, G.J.; Rajpurkar, P.; Topol, E.J. Multimodal biomedical AI. Nat. Med. 2022, 28, 1773–1784. [Google Scholar] [CrossRef] [PubMed]

- Thakur, G.K.; Thakur, A.; Kulkarni, S.; Khan, N.; Khan, S. Deep learning approaches for medical image analysis and diagnosis. Cureus 2024, 16, e59507. [Google Scholar] [CrossRef] [PubMed]

- Vilhekar, R.S.; Rawekar, A. Artificial intelligence in genetics. Cureus 2024, 16, e52035. [Google Scholar] [CrossRef]

- Bai, A.; Si, M.; Xue, P.; Qu, Y.; Jiang, Y. Artificial intelligence performance in detecting lymphoma from medical imaging: A systematic review and meta-analysis. BMC Med. Inform. Decis. Mak. 2024, 24, 13. [Google Scholar] [CrossRef] [PubMed]

- Carreras, J.; Hamoudi, R.; Nakamura, N. Artificial intelligence and classification of mature lymphoid neoplasms. Explor. Target. Anti-Tumor Ther. 2024, 5, 332–348. [Google Scholar] [CrossRef]

- Jiang, P.; Sinha, S.; Aldape, K.; Hannenhalli, S.; Sahinalp, C.; Ruppin, E. Big data in basic and translational cancer research. Nat. Rev. Cancer 2022, 22, 625–639. [Google Scholar] [CrossRef]

- Kline, A.; Wang, H.; Li, Y.; Dennis, S.; Hutch, M.; Xu, Z.; Wang, F.; Cheng, F.; Luo, Y. Multimodal machine learning in precision health: A scoping review. NPJ Digital Med. 2022, 5, 171. [Google Scholar] [CrossRef] [PubMed]

- Habets, P.C.; Thomas, R.M.; Milaneschi, Y.; Jansen, R.; Pool, R.; Peyrot, W.J.; Penninx, B.W.J.H.; Meijer, O.C.; van Wingen, G.A.; Vinkers, C.H. Multimodal Data Integration Advances Longitudinal Prediction of the Naturalistic Course of Depression and Reveals a Multimodal Signature of Remission During 2-Year Follow-up. Biol. Psychiatry 2023, 94, 948–958. [Google Scholar] [CrossRef] [PubMed]

- Steyaert, S.; Pizurica, M.; Nagaraj, D.; Khandelwal, P.; Hernandez-Boussard, T.; Gentles, A.J.; Gevaert, O. Multimodal data fusion for cancer biomarker discovery with deep learning. Nat. Mach. Intell. 2023, 5, 351–362. [Google Scholar] [CrossRef] [PubMed]

- Li, D.; Bledsoe, J.R.; Zeng, Y.; Liu, W.; Hu, Y.; Bi, K.; Liang, A.; Li, S. A deep learning diagnostic platform for diffuse large B-cell lymphoma with high accuracy across multiple hospitals. Nat. Commun. 2020, 11, 6004. [Google Scholar] [CrossRef] [PubMed]

- Tsakiroglou, A.M.; Bacon, C.M.; Shingleton, D.; Slavin, G.; Vogiatzis, P.; Byers, R.; Carey, C.; Fergie, M. Lymphoma triage from H&E using AI for improved clinical management. J. Clin. Pathol. 2023, 0, 1–7. [Google Scholar] [CrossRef]

- Jin, C.; Zhou, D.; Li, J.; Bi, L.; Li, L. Single-cell multi-omics advances in lymphoma research (Review). Oncol. Rep. 2023, 50, 184. [Google Scholar] [CrossRef] [PubMed]

- Liang, X.-J.; Song, X.-Y.; Wu, J.-L.; Liu, D.; Lin, B.-Y.; Zhou, H.-S.; Wang, L. Advances in Multi-Omics Study of Prognostic Biomarkers of Diffuse Large B-Cell Lymphoma. Int. J. Biol. Sci. 2022, 18, 1313–1327. [Google Scholar] [CrossRef] [PubMed]

- Yuan, J.; Zhang, Y.; Wang, X. Application of machine learning in the management of lymphoma: Current practice and future prospects. Digit. Health 2024, 10, 20552076241247964. [Google Scholar] [CrossRef]

- Amin, R.; Braza, M.S. The follicular lymphoma epigenome regulates its microenvironment. J. Exp. Clin. Cancer Res. 2022, 41, 21. [Google Scholar] [CrossRef]

- Profitós-Pelejà, N.; Santos, J.C.; Marín-Niebla, A.; Roué, G.; Ribeiro, M.L. Regulation of B-Cell Receptor Signaling and Its Therapeutic Relevance in Aggressive B-Cell Lymphomas. Cancers 2022, 14, 860. [Google Scholar] [CrossRef]

- Naoi, Y.; Ennishi, D. Understanding the intrinsic biology of diffuse large B-cell lymphoma: Recent advances and future prospects. Int. J. Hematol. 2024; Online ahead of print. [Google Scholar] [CrossRef]

- Juskevicius, D.; Dirnhofer, S.; Tzankov, A. Genetic background and evolution of relapses in aggressive B-cell lymphomas. Haematologica 2017, 102, 1139–1149. [Google Scholar] [CrossRef]

- Gupta, G.; Garg, V.; Mallick, S.; Gogia, A. Current trends in diagnosis and management of follicular lymphoma. Am. J. Blood Res. 2022, 12, 105–124. [Google Scholar]

- Smyth, E.; Cheah, C.Y.; Seymour, J.F. Management of indolent B-cell Lymphomas: A review of approved and emerging targeted therapies. Cancer Treat. Rev. 2023, 113, 102510. [Google Scholar] [CrossRef]

- Spinner, M.A.; Advani, R.H. Current Frontline Treatment of Diffuse Large B-Cell Lymphoma. Oncology 2022, 36, 51–58. [Google Scholar] [CrossRef]

- Tavarozzi, R.; Zacchi, G.; Pietrasanta, D.; Catania, G.; Castellino, A.; Monaco, F.; Gandolfo, C.; Rivela, P.; Sofia, A.; Schiena, N.; et al. Changing Trends in B-Cell Non-Hodgkin Lymphoma Treatment: The Role of Novel Monoclonal Antibodies in Clinical Practice. Cancers 2023, 15, 5397. [Google Scholar] [CrossRef]

- Appelbaum, J.S.; Milano, F. Hematopoietic stem cell transplantation in the era of engineered cell therapy. Curr. Hematol. Malig. Rep. 2018, 13, 484–493. [Google Scholar] [CrossRef]

- Gambles, M.T.; Yang, J.; Kopeček, J. Multi-targeted immunotherapeutics to treat B cell malignancies. J. Control. Release 2023, 358, 232–258. [Google Scholar] [CrossRef]

- Kichloo, A.; Albosta, M.; Dahiya, D.; Guidi, J.C.; Aljadah, M.; Singh, J.; Shaka, H.; Wani, F.; Kumar, A.; Lekkala, M. Systemic adverse effects and toxicities associated with immunotherapy: A review. World J. Clin. Oncol. 2021, 12, 150–163. [Google Scholar] [CrossRef]

- Smeland, K.; Holte, H.; Fagerli, U.-M.; Bersvendsen, H.; Hjermstad, M.J.; Loge, J.H.; Murbrach, K.; Linnsund, M.D.; Fluge, O.; Stenehjem, J.S.; et al. Total late effect burden in long-term lymphoma survivors after high-dose therapy with autologous stem-cell transplant and its effect on health-related quality of life. Haematologica 2022, 107, 2698–2707. [Google Scholar] [CrossRef]

- Roed, M.L.; Severinsen, M.T.; Maksten, E.F.; Jørgensen, L.; Enggaard, H. Cured but not well—Haematological cancer survivors’ experiences of chemotherapy-induced peripheral neuropathy in everyday life: A phenomenological-hermeneutic study. J. Cancer Surviv. 2024; Online ahead of print. [Google Scholar] [CrossRef]

- Nicholas, N.S.; Apollonio, B.; Ramsay, A.G. Tumor microenvironment (TME)-driven immune suppression in B cell malignancy. Biochim. Biophys. Acta 2016, 1863, 471–482. [Google Scholar] [CrossRef]

- Liu, Y.; Zhou, X.; Wang, X. Targeting the tumor microenvironment in B-cell lymphoma: Challenges and opportunities. J. Hematol. Oncol. 2021, 14, 125. [Google Scholar] [CrossRef]

- Amin, R.; Mourcin, F.; Uhel, F.; Pangault, C.; Ruminy, P.; Dupré, L.; Guirriec, M.; Marchand, T.; Fest, T.; Lamy, T.; et al. DC-SIGN-expressing macrophages trigger activation of mannosylated IgM B-cell receptor in follicular lymphoma. Blood 2015, 126, 1911–1920. [Google Scholar] [CrossRef]

- Serganova, I.; Chakraborty, S.; Yamshon, S.; Isshiki, Y.; Bucktrout, R.; Melnick, A.; Béguelin, W.; Zappasodi, R. Epigenetic, Metabolic, and Immune Crosstalk in Germinal-Center-Derived B-Cell Lymphomas: Unveiling New Vulnerabilities for Rational Combination Therapies. Front. Cell Dev. Biol. 2021, 9, 805195. [Google Scholar] [CrossRef]

- Ribatti, D.; Tamma, R.; Annese, T.; Ingravallo, G.; Specchia, G. Macrophages and angiogenesis in human lymphomas. Clin. Exp. Med. 2024, 24, 26. [Google Scholar] [CrossRef]

- Cai, F.; Zhang, J.; Gao, H.; Shen, H. Tumor microenvironment and CAR-T cell immunotherapy in B-cell lymphoma. Eur. J. Haematol. 2024, 112, 223–235. [Google Scholar] [CrossRef]

- Banerjee, T.; Vallurupalli, A. Emerging new cell therapies/immune therapies in B-cell non-Hodgkin’s lymphoma. Curr. Probl. Cancer 2022, 46, 100825. [Google Scholar] [CrossRef]

- Mulder, T.A.; Wahlin, B.E.; Österborg, A.; Palma, M. Targeting the Immune Microenvironment in Lymphomas of B-Cell Origin: From Biology to Clinical Application. Cancers 2019, 11, 915. [Google Scholar] [CrossRef]

- Bock, A.M.; Nowakowski, G.S.; Wang, Y. Bispecific Antibodies for Non-Hodgkin Lymphoma Treatment. Curr. Treat. Options Oncol. 2022, 23, 155–170. [Google Scholar] [CrossRef]

- Chan, J.Y.; Somasundaram, N.; Grigoropoulos, N.; Lim, F.; Poon, M.L.; Jeyasekharan, A.; Yeoh, K.W.; Tan, D.; Lenz, G.; Ong, C.K.; et al. Evolving therapeutic landscape of diffuse large B-cell lymphoma: Challenges and aspirations. Discov. Oncol. 2023, 14, 132. [Google Scholar] [CrossRef]

- Brauge, B.; Dessauge, E.; Creusat, F.; Tarte, K. Modeling the crosstalk between malignant B cells and their microenvironment in B-cell lymphomas: Challenges and opportunities. Front. Immunol. 2023, 14, 1288110. [Google Scholar] [CrossRef]

- Hatic, H.; Sampat, D.; Goyal, G. Immune checkpoint inhibitors in lymphoma: Challenges and opportunities. Ann. Transl. Med. 2021, 9, 1037. [Google Scholar] [CrossRef]

- Huang, S.-C.; Pareek, A.; Seyyedi, S.; Banerjee, I.; Lungren, M.P. Fusion of medical imaging and electronic health records using deep learning: A systematic review and implementation guidelines. npj Digit. Med. 2020, 3, 136. [Google Scholar] [CrossRef]

- Hussain, S.; Mubeen, I.; Ullah, N.; Shah, S.S.U.D.; Khan, B.A.; Zahoor, M.; Ullah, R.; Khan, F.A.; Sultan, M.A. Modern diagnostic imaging technique applications and risk factors in the medical field: A review. Biomed Res. Int. 2022, 2022, 5164970. [Google Scholar] [CrossRef]

- Toma, P.; Granata, C.; Rossi, A.; Garaventa, A. Multimodality imaging of Hodgkin disease and non-Hodgkin lymphomas in children. Radiographics 2007, 27, 1335–1354. [Google Scholar] [CrossRef]

- Lloyd, C.; McHugh, K. The role of radiology in head and neck tumours in children. Cancer Imaging 2010, 10, 49–61. [Google Scholar] [CrossRef]

- Kwee, T.C.; Takahara, T.; Vermoolen, M.A.; Bierings, M.B.; Mali, W.P.; Nievelstein, R.A.J. Whole-body diffusion-weighted imaging for staging malignant lymphoma in children. Pediatr. Radiol. 2010, 40, 1592–1602, quiz 1720. [Google Scholar] [CrossRef]

- Barrington, S.F.; Mikhaeel, N.G.; Kostakoglu, L.; Meignan, M.; Hutchings, M.; Müeller, S.P.; Schwartz, L.H.; Zucca, E.; Fisher, R.I.; Trotman, J.; et al. Role of imaging in the staging and response assessment of lymphoma: Consensus of the International Conference on Malignant Lymphomas Imaging Working Group. J. Clin. Oncol. 2014, 32, 3048–3058. [Google Scholar] [CrossRef]

- Cheson, B.D.; Fisher, R.I.; Barrington, S.F.; Cavalli, F.; Schwartz, L.H.; Zucca, E.; Lister, T.A.; Alliance, Australasian Leukaemia and Lymphoma Group; Eastern Cooperative Oncology Group; European Mantle Cell Lymphoma Consortium; et al. Recommendations for initial evaluation, staging, and response assessment of Hodgkin and non-Hodgkin lymphoma: The Lugano classification. J. Clin. Oncol. 2014, 32, 3059–3068. [Google Scholar] [CrossRef]

- El-Galaly, T.C.; Hutchings, M. Imaging of non-Hodgkin lymphomas: Diagnosis and response-adapted strategies. Cancer Treat. Res. 2015, 165, 125–146. [Google Scholar] [CrossRef]

- Punwani, S.; Taylor, S.A.; Bainbridge, A.; Prakash, V.; Bandula, S.; De Vita, E.; Olsen, O.E.; Hain, S.F.; Stevens, N.; Daw, S.; et al. Pediatric and adolescent lymphoma: Comparison of whole-body STIR half-Fourier RARE MR imaging with an enhanced PET/CT reference for initial staging. Radiology 2010, 255, 182–190. [Google Scholar] [CrossRef]

- Syrykh, C.; Abreu, A.; Amara, N.; Siegfried, A.; Maisongrosse, V.; Frenois, F.X.; Martin, L.; Rossi, C.; Laurent, C.; Brousset, P. Accurate diagnosis of lymphoma on whole-slide histopathology images using deep learning. npj Digital Med. 2020, 3, 63. [Google Scholar] [CrossRef]

- Li, R.; Ma, F.; Gao, J. Integrating multimodal electronic health records for diagnosis prediction. AMIA Annu. Symp. Proc. 2021, 2021, 726–735. [Google Scholar]

- Jensen, P.B.; Jensen, L.J.; Brunak, S. Mining electronic health records: Towards better research applications and clinical care. Nat. Rev. Genet. 2012, 13, 395–405. [Google Scholar] [CrossRef]

- Heo, Y.J.; Hwa, C.; Lee, G.-H.; Park, J.-M.; An, J.-Y. Integrative Multi-Omics Approaches in Cancer Research: From Biological Networks to Clinical Subtypes. Mol. Cells 2021, 44, 433–443. [Google Scholar] [CrossRef]

- Raufaste-Cazavieille, V.; Santiago, R.; Droit, A. Multi-omics analysis: Paving the path toward achieving precision medicine in cancer treatment and immuno-oncology. Front. Mol. Biosci. 2022, 9, 962743. [Google Scholar] [CrossRef]

- Rosenthal, A.; Rimsza, L. Genomics of aggressive B-cell lymphoma. Hematol. Am. Soc. Hematol. Educ. Program. 2018, 2018, 69–74. [Google Scholar] [CrossRef]

- Rapier-Sharman, N.; Clancy, J.; Pickett, B.E. Joint Secondary Transcriptomic Analysis of Non-Hodgkin’s B-Cell Lymphomas Predicts Reliance on Pathways Associated with the Extracellular Matrix and Robust Diagnostic Biomarkers. J. Bioinform. Syst. Biol. 2022, 5, 119–135. [Google Scholar] [CrossRef]

- Pickard, K.; Stephenson, E.; Mitchell, A.; Jardine, L.; Bacon, C.M. Location, location, location: Mapping the lymphoma tumor microenvironment using spatial transcriptomics. Front. Oncol. 2023, 13, 1258245. [Google Scholar] [CrossRef]

- Ducasse, M.; Brown, M.A. Epigenetic aberrations and cancer. Mol. Cancer 2006, 5, 60. [Google Scholar] [CrossRef]

- Gao, D.; Herman, J.G.; Guo, M. The clinical value of aberrant epigenetic changes of DNA damage repair genes in human cancer. Oncotarget 2016, 7, 37331–37346. [Google Scholar] [CrossRef]

- Menyhárt, O.; Győrffy, B. Multi-omics approaches in cancer research with applications in tumor subtyping, prognosis, and diagnosis. Comput. Struct. Biotechnol. J. 2021, 19, 949–960. [Google Scholar] [CrossRef]

- Alfaifi, A.; Refai, M.Y.; Alsaadi, M.; Bahashwan, S.; Malhan, H.; Al-Kahiry, W.; Dammag, E.; Ageel, A.; Mahzary, A.; Albiheyri, R.; et al. Metabolomics: A New Era in the Diagnosis or Prognosis of B-Cell Non-Hodgkin’s Lymphoma. Diagnostics 2023, 13, 861. [Google Scholar] [CrossRef]

- Krzyszczyk, P.; Acevedo, A.; Davidoff, E.J.; Timmins, L.M.; Marrero-Berrios, I.; Patel, M.; White, C.; Lowe, C.; Sherba, J.J.; Hartmanshenn, C.; et al. The growing role of precision and personalized medicine for cancer treatment. Technology 2018, 6, 79–100. [Google Scholar] [CrossRef]

- Olatunji, I.; Cui, F. Multimodal AI for prediction of distant metastasis in carcinoma patients. Front. Bioinform. 2023, 3, 1131021. [Google Scholar] [CrossRef]

- Destito, M.; Marzullo, A.; Leone, R.; Zaffino, P.; Steffanoni, S.; Erbella, F.; Calimeri, F.; Anzalone, N.; De Momi, E.; Ferreri, A.J.M.; et al. Radiomics-Based Machine Learning Model for Predicting Overall and Progression-Free Survival in Rare Cancer: A Case Study for Primary CNS Lymphoma Patients. Bioengineering 2023, 10, 285. [Google Scholar] [CrossRef]

- He, X.; Liu, X.; Zuo, F.; Shi, H.; Jing, J. Artificial intelligence-based multi-omics analysis fuels cancer precision medicine. Semin. Cancer Biol. 2023, 88, 187–200. [Google Scholar] [CrossRef]

- Singh, S.; Kumar, R.; Payra, S.; Singh, S.K. Artificial intelligence and machine learning in pharmacological research: Bridging the gap between data and drug discovery. Cureus 2023, 15, e44359. [Google Scholar] [CrossRef]

- Chango, W.; Lara, J.A.; Cerezo, R.; Romero, C. A review on data fusion in multimodal learning analytics and educational data mining. WIREs Data Min. Knowl. Discov. 2022, 12, e1458. [Google Scholar] [CrossRef]

- Stahlschmidt, S.R.; Ulfenborg, B.; Synnergren, J. Multimodal deep learning for biomedical data fusion: A review. Brief. Bioinform. 2022, 23, bbab569. [Google Scholar] [CrossRef]

- Gadzicki, K.; Khamsehashari, R.; Zetzsche, C. Early vs late fusion in multimodal convolutional neural networks. In Proceedings of the 2020 IEEE 23rd International Conference on Information Fusion (FUSION), Rustenburg, South Africa, 6–9 July 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–6. [Google Scholar]

- Li, W.; Ballard, J.; Zhao, Y.; Long, Q. Knowledge-guided learning methods for integrative analysis of multi-omics data. Comput. Struct. Biotechnol. J. 2024, 23, 1945–1950. [Google Scholar] [CrossRef]

- Kang, M.; Ko, E.; Mersha, T.B. A roadmap for multi-omics data integration using deep learning. Brief. Bioinform. 2022, 23. [Google Scholar] [CrossRef]

- Vahabi, N.; Michailidis, G. Unsupervised Multi-Omics Data Integration Methods: A Comprehensive Review. Front. Genet. 2022, 13, 854752. [Google Scholar] [CrossRef]

- Zhou, Z.-H. A brief introduction to weakly supervised learning. Natl. Sci. Rev. 2018, 5, 44–53. [Google Scholar] [CrossRef]

- Ginghina, O.; Hudita, A.; Zamfir, M.; Spanu, A.; Mardare, M.; Bondoc, I.; Buburuzan, L.; Georgescu, S.E.; Costache, M.; Negrei, C.; et al. Liquid biopsy and artificial intelligence as tools to detect signatures of colorectal malignancies: A modern approach in patient’s stratification. Front. Oncol. 2022, 12, 856575. [Google Scholar] [CrossRef]

- Foser, S.; Maiese, K.; Digumarthy, S.R.; Puig-Butille, J.A.; Rebhan, C. Looking to the future of early detection in cancer: Liquid biopsies, imaging, and artificial intelligence. Clin. Chem. 2024, 70, 27–32. [Google Scholar] [CrossRef]

- Zhang, Y.; Sun, B.; Yu, Y.; Lu, J.; Lou, Y.; Qian, F.; Chen, T.; Zhang, L.; Yang, J.; Zhong, H.; et al. Multimodal fusion of liquid biopsy and CT enhances differential diagnosis of early-stage lung adenocarcinoma. NPJ Precis. Oncol. 2024, 8, 50. [Google Scholar] [CrossRef]

- Klempner, S.J.; Fabrizio, D.; Bane, S.; Reinhart, M.; Peoples, T.; Ali, S.M.; Sokol, E.S.; Frampton, G.; Schrock, A.B.; Anhorn, R.; et al. Tumor mutational burden as a predictive biomarker for response to immune checkpoint inhibitors: A review of current evidence. Oncologist 2020, 25, e147–e159. [Google Scholar] [CrossRef]

- Jardim, D.L.; Goodman, A.; de Melo Gagliato, D.; Kurzrock, R. The challenges of tumor mutational burden as an immunotherapy biomarker. Cancer Cell 2021, 39, 154–173. [Google Scholar] [CrossRef]

- Chang, T.-G.; Cao, Y.; Sfreddo, H.J.; Dhruba, S.R.; Lee, S.-H.; Valero, C.; Yoo, S.-K.; Chowell, D.; Morris, L.G.T.; Ruppin, E. LORIS robustly predicts patient outcomes with immune checkpoint blockade therapy using common clinical, pathologic and genomic features. Nat. Cancer 2024, 1–18. [Google Scholar] [CrossRef]

- Akthar, A.S.; Ferguson, M.K.; Koshy, M.; Vigneswaran, W.T.; Malik, R. Limitations of PET/CT in the Detection of Occult N1 Metastasis in Clinical Stage I(T1-2aN0) Non-Small Cell Lung Cancer for Staging Prior to Stereotactic Body Radiotherapy. Technol. Cancer Res. Treat. 2017, 16, 15–21. [Google Scholar] [CrossRef]

- Sanjeevaiah, A.; Park, H.; Fangman, B.; Porembka, M. Gastric Cancer with Radiographically Occult Metastatic Disease: Biology, Challenges, and Diagnostic Approaches. Cancers 2020, 12, 592. [Google Scholar] [CrossRef]

- Walma, M.; Maggino, L.; Smits, F.J.; Borggreve, A.S.; Daamen, L.A.; Groot, V.P.; Casciani, F.; de Meijer, V.E.; Wessels, F.J.; van der Schelling, G.P.; et al. The Difficulty of Detecting Occult Metastases in Patients with Potentially Resectable Pancreatic Cancer: Development and External Validation of a Preoperative Prediction Model. J. Clin. Med. 2024, 13, 1679. [Google Scholar] [CrossRef]

- Wang, Z.; Liu, Y.; Niu, X. Application of artificial intelligence for improving early detection and prediction of therapeutic outcomes for gastric cancer in the era of precision oncology. Semin. Cancer Biol. 2023, 93, 83–96. [Google Scholar] [CrossRef]

- Yu, Y.; Ouyang, W.; Huang, Y.; Huang, H.; Wang, Z.; Jia, X.; Huang, Z.; Lin, R.; Zhu, Y.; Yalikun, Y.; et al. AI-Based multimodal Multi-tasks analysis reveals tumor molecular heterogeneity, predicts preoperative lymph node metastasis and prognosis in papillary thyroid carcinoma: A retrospective study. Int. J. Surg. 2024. [Google Scholar] [CrossRef]

- Loeffler-Wirth, H.; Kreuz, M.; Hopp, L.; Arakelyan, A.; Haake, A.; Cogliatti, S.B.; Feller, A.C.; Hansmann, M.-L.; Lenze, D.; Möller, P.; et al. A modular transcriptome map of mature B cell lymphomas. Genome Med. 2019, 11, 27. [Google Scholar] [CrossRef]

- Zamò, A.; Gerhard-Hartmann, E.; Ott, G.; Anagnostopoulos, I.; Scott, D.W.; Rosenwald, A.; Rauert-Wunderlich, H. Routine application of the Lymph2Cx assay for the subclassification of aggressive B-cell lymphoma: Report of a prospective real-world series. Virchows Arch. 2022, 481, 935–943. [Google Scholar] [CrossRef]

- Xu-Monette, Z.Y.; Zhang, H.; Zhu, F.; Tzankov, A.; Bhagat, G.; Visco, C.; Dybkaer, K.; Chiu, A.; Tam, W.; Zu, Y.; et al. A refined cell-of-origin classifier with targeted NGS and artificial intelligence shows robust predictive value in DLBCL. Blood Adv. 2020, 4, 3391–3404. [Google Scholar] [CrossRef]

- Radtke, A.J.; Postovalova, E.; Varlamova, A.; Bagaev, A.; Sorokina, M.; Kudryashova, O.; Meerson, M.; Polyakova, M.; Galkin, I.; Svekolkin, V.; et al. Multi-omic profiling of follicular lymphoma reveals changes in tissue architecture and enhanced stromal remodeling in high-risk patients. Cancer Cell 2024, 42, 444–463.e10. [Google Scholar] [CrossRef]

- Sun, R.; Medeiros, L.J.; Young, K.H. Diagnostic and predictive biomarkers for lymphoma diagnosis and treatment in the era of precision medicine. Mod. Pathol. 2016, 29, 1118–1142. [Google Scholar] [CrossRef]

- Steen, C.B.; Luca, B.A.; Esfahani, M.S.; Azizi, A.; Sworder, B.J.; Nabet, B.Y.; Kurtz, D.M.; Liu, C.L.; Khameneh, F.; Advani, R.H.; et al. The landscape of tumor cell states and ecosystems in diffuse large B cell lymphoma. Cancer Cell 2021, 39, 1422–1437.e10. [Google Scholar] [CrossRef]

- Carreras, J.; Roncador, G.; Hamoudi, R. Artificial Intelligence Predicted Overall Survival and Classified Mature B-Cell Neoplasms Based on Immuno-Oncology and Immune Checkpoint Panels. Cancers 2022, 14, 5318. [Google Scholar] [CrossRef]

- Krull, J.E.; Wenzl, K.; Hopper, M.A.; Manske, M.K.; Sarangi, V.; Maurer, M.J.; Larson, M.C.; Mondello, P.; Yang, Z.; Novak, J.P.; et al. Follicular lymphoma B cells exhibit heterogeneous transcriptional states with associated somatic alterations and tumor microenvironments. Cell Rep. Med. 2024, 5, 101443. [Google Scholar] [CrossRef]

- Siddiqui, M.; Rajkumar, S.V. The high cost of cancer drugs and what we can do about it. Mayo Clin. Proc. 2012, 87, 935–943. [Google Scholar] [CrossRef]

- Vokinger, K.N.; Hwang, T.J.; Daniore, P.; Lee, C.C.; Tibau, A.; Grischott, T.; Rosemann, T.J.; Kesselheim, A.S. Analysis of launch and postapproval cancer drug pricing, clinical benefit, and policy implications in the US and europe. JAMA Oncol. 2021, 7, e212026. [Google Scholar] [CrossRef]

- Pan, J.; Wei, X.; Lu, H.; Wu, X.; Li, C.; Hai, X.; Lan, T.; Dong, Q.; Yang, Y.; Jakovljevic, M.; et al. List prices and clinical value of anticancer drugs in China, Japan, and South Korea: A retrospective comparative study. Lancet Reg. Health West. Pac. 2024, 47, 101088. [Google Scholar] [CrossRef]

- Yeh, S.-J.; Yeh, T.-Y.; Chen, B.-S. Systems drug discovery for diffuse large B cell lymphoma based on pathogenic molecular mechanism via big data mining and deep learning method. Int. J. Mol. Sci. 2022, 23, 6732. [Google Scholar] [CrossRef]

- Mosquera Orgueira, A.; Díaz Arías, J.Á.; Serrano Martín, R.; Portela Piñeiro, V.; Cid López, M.; Peleteiro Raíndo, A.; Bao Pérez, L.; González Pérez, M.S.; Pérez Encinas, M.M.; Fraga Rodríguez, M.F.; et al. A prognostic model based on gene expression parameters predicts a better response to bortezomib-containing immunochemotherapy in diffuse large B-cell lymphoma. Front. Oncol. 2023, 13, 1157646. [Google Scholar] [CrossRef]

- Tong, Y.; Udupa, J.K.; Chong, E.; Winchell, N.; Sun, C.; Zou, Y.; Schuster, S.J.; Torigian, D.A. Prediction of lymphoma response to CAR T cells by deep learning-based image analysis. PLoS ONE 2023, 18, e0282573. [Google Scholar] [CrossRef]

- Lee, J.H.; Song, G.-Y.; Lee, J.; Kang, S.-R.; Moon, K.M.; Choi, Y.-D.; Shen, J.; Noh, M.-G.; Yang, D.-H. Prediction of immunochemotherapy response for diffuse large B-cell lymphoma using artificial intelligence digital pathology. J. Pathol. Clin. Res. 2024, 10, e12370. [Google Scholar] [CrossRef]

- Freitas-Ribeiro, S.; Reis, R.L.; Pirraco, R.P. Long-term and short-term preservation strategies for tissue engineering and regenerative medicine products: State of the art and emerging trends. PNAS Nexus 2022, 1, pgac212. [Google Scholar] [CrossRef]

- Azimzadeh, O.; Gomolka, M.; Birschwilks, M.; Saigusa, S.; Grosche, B.; Moertl, S. Advanced omics and radiobiological tissue archives: The future in the past. Appl. Sci. 2021, 11, 11108. [Google Scholar] [CrossRef]

- Wu, S.Z.; Roden, D.L.; Al-Eryani, G.; Bartonicek, N.; Harvey, K.; Cazet, A.S.; Chan, C.-L.; Junankar, S.; Hui, M.N.; Millar, E.A.; et al. Cryopreservation of human cancers conserves tumour heterogeneity for single-cell multi-omics analysis. Genome Med. 2021, 13, 81. [Google Scholar] [CrossRef] [PubMed]

- De-Kayne, R.; Frei, D.; Greenway, R.; Mendes, S.L.; Retel, C.; Feulner, P.G.D. Sequencing platform shifts provide opportunities but pose challenges for combining genomic data sets. Mol. Ecol. Resour. 2021, 21, 653–660. [Google Scholar] [CrossRef] [PubMed]

- Chu, Y.; Corey, D.R. RNA sequencing: Platform selection, experimental design, and data interpretation. Nucleic Acid Ther. 2012, 22, 271–274. [Google Scholar] [CrossRef] [PubMed]

- Allali, I.; Arnold, J.W.; Roach, J.; Cadenas, M.B.; Butz, N.; Hassan, H.M.; Koci, M.; Ballou, A.; Mendoza, M.; Ali, R.; et al. A comparison of sequencing platforms and bioinformatics pipelines for compositional analysis of the gut microbiome. BMC Microbiol. 2017, 17, 194. [Google Scholar] [CrossRef] [PubMed]

- Slatko, B.E.; Gardner, A.F.; Ausubel, F.M. Overview of Next-Generation Sequencing Technologies. Curr. Protoc. Mol. Biol. 2018, 122, e59. [Google Scholar] [CrossRef] [PubMed]

- Schwarze, K.; Buchanan, J.; Fermont, J.M.; Dreau, H.; Tilley, M.W.; Taylor, J.M.; Antoniou, P.; Knight, S.J.L.; Camps, C.; Pentony, M.M.; et al. The complete costs of genome sequencing: A microcosting study in cancer and rare diseases from a single center in the United Kingdom. Genet. Med. 2020, 22, 85–94. [Google Scholar] [CrossRef]

- Gordon, L.G.; White, N.M.; Elliott, T.M.; Nones, K.; Beckhouse, A.G.; Rodriguez-Acevedo, A.J.; Webb, P.M.; Lee, X.J.; Graves, N.; Schofield, D.J. Estimating the costs of genomic sequencing in cancer control. BMC Health Serv. Res. 2020, 20, 492. [Google Scholar] [CrossRef] [PubMed]

- Kim, S.-W.; Roh, J.; Park, C.-S. Immunohistochemistry for pathologists: Protocols, pitfalls, and tips. J. Pathol. Transl. Med. 2016, 50, 411–418. [Google Scholar] [CrossRef]

- Koh, D.-M.; Papanikolaou, N.; Bick, U.; Illing, R.; Kahn, C.E.; Kalpathi-Cramer, J.; Matos, C.; Martí-Bonmatí, L.; Miles, A.; Mun, S.K.; et al. Artificial intelligence and machine learning in cancer imaging. Commun. Med. 2022, 2, 133. [Google Scholar] [CrossRef]

- Jeon, K.; Park, W.Y.; Kahn, C.E.; Nagy, P.; You, S.C.; Yoon, S.H. Advancing medical imaging research through standardization: The path to rapid development, rigorous validation, and robust reproducibility. Investig. Radiol. 2024. [Google Scholar] [CrossRef] [PubMed]

- Willemink, M.J.; Koszek, W.A.; Hardell, C.; Wu, J.; Fleischmann, D.; Harvey, H.; Folio, L.R.; Summers, R.M.; Rubin, D.L.; Lungren, M.P. Preparing medical imaging data for machine learning. Radiology 2020, 295, 4–15. [Google Scholar] [CrossRef]

- Krupa, K.; Bekiesińska-Figatowska, M. Artifacts in magnetic resonance imaging. Pol. J. Radiol. 2015, 80, 93–106. [Google Scholar] [CrossRef] [PubMed]

- Spin-Neto, R.; Costa, C.; Salgado, D.M.; Zambrana, N.R.; Gotfredsen, E.; Wenzel, A. Patient movement characteristics and the impact on CBCT image quality and interpretability. Dentomaxillofac. Radiol. 2018, 47, 20170216. [Google Scholar] [CrossRef]

- Koch, L.M.; Baumgartner, C.F.; Berens, P. Distribution shift detection for the postmarket surveillance of medical AI algorithms: A retrospective simulation study. npj Digital Med. 2024, 7, 120. [Google Scholar] [CrossRef] [PubMed]

- Bhowmik, A.; Monga, N.; Belen, K.; Varela, K.; Sevilimedu, V.; Thakur, S.B.; Martinez, D.F.; Sutton, E.J.; Pinker, K.; Eskreis-Winkler, S. Automated Triage of Screening Breast MRI Examinations in High-Risk Women Using an Ensemble Deep Learning Model. Investig. Radiol. 2023, 58, 710–719. [Google Scholar] [CrossRef]

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A review of machine learning interpretability methods. Entropy 2020, 23, 18. [Google Scholar] [CrossRef] [PubMed]

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial intelligence in healthcare: Transforming the practice of medicine. Future Healthc. J. 2021, 8, e188–e194. [Google Scholar] [CrossRef]

- Zhang, Y.; Sheng, M.; Liu, X.; Wang, R.; Lin, W.; Ren, P.; Wang, X.; Zhao, E.; Song, W. A heterogeneous multi-modal medical data fusion framework supporting hybrid data exploration. Health Inf. Sci. Syst. 2022, 10, 22. [Google Scholar] [CrossRef]

- Chang, Q.; Yan, Z.; Zhou, M.; Qu, H.; He, X.; Zhang, H.; Baskaran, L.; Al’Aref, S.; Li, H.; Zhang, S.; et al. Mining multi-center heterogeneous medical data with distributed synthetic learning. Nat. Commun. 2023, 14, 5510. [Google Scholar] [CrossRef]

- Joshi, G.; Jain, A.; Araveeti, S.R.; Adhikari, S.; Garg, H.; Bhandari, M. FDA-Approved Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices: An Updated Landscape. Electronics 2024, 13, 498. [Google Scholar] [CrossRef]

- FDA Roundup: May 14, 2024|FDA. Available online: https://www.fda.gov/news-events/press-announcements/fda-roundup-may-14-2024 (accessed on 21 June 2024).

- van de Sande, D.; Van Genderen, M.E.; Smit, J.M.; Huiskens, J.; Visser, J.J.; Veen, R.E.R.; van Unen, E.; Ba, O.H.; Gommers, D.; van Bommel, J. Developing, implementing and governing artificial intelligence in medicine: A step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform. 2022, 29, e100495. [Google Scholar] [CrossRef] [PubMed]

- Nilsen, P.; Svedberg, P.; Neher, M.; Nair, M.; Larsson, I.; Petersson, L.; Nygren, J. A framework to guide implementation of AI in health care: Protocol for a cocreation research project. JMIR Res. Protoc. 2023, 12, e50216. [Google Scholar] [CrossRef] [PubMed]

- Benjamens, S.; Dhunnoo, P.; Meskó, B. The state of artificial intelligence-based FDA-approved medical devices and algorithms: An online database. npj Digital Med. 2020, 3, 118.

- Wu, K.; Wu, E.; Theodorou, B.; Liang, W.; Mack, C.; Glass, L.; Sun, J.; Zou, J. Characterizing the Clinical Adoption of Medical AI Devices through U.S. Insurance Claims. NEJM AI 2023, 1, AIoa2300030.

- Khanna, N.N.; Maindarkar, M.A.; Viswanathan, V.; Fernandes, J.F.E.; Paul, S.; Bhagawati, M.; Ahluwalia, P.; Ruzsa, Z.; Sharma, A.; Kolluri, R.; et al. Economics of artificial intelligence in healthcare: Diagnosis vs. treatment. Healthcare 2022, 10, 2493.

- Yadav, N.; Pandey, S.; Gupta, A.; Dudani, P.; Gupta, S.; Rangarajan, K. Data privacy in healthcare: In the era of artificial intelligence. Indian Dermatol. Online J. 2023, 14, 788–792.

- Hantel, A.; Walsh, T.P.; Marron, J.M.; Kehl, K.L.; Sharp, R.; Van Allen, E.; Abel, G.A. Perspectives of oncologists on the ethical implications of using artificial intelligence for cancer care. JAMA Netw. Open 2024, 7, e244077.

- Iserson, K.V. Informed consent for artificial intelligence in emergency medicine: A practical guide. Am. J. Emerg. Med. 2024, 76, 225–230.

- Katsoulakis, E.; Wang, Q.; Wu, H.; Shahriyari, L.; Fletcher, R.; Liu, J.; Achenie, L.; Liu, H.; Jackson, P.; Xiao, Y.; et al. Digital twins for health: A scoping review. npj Digital Med. 2024, 7, 77.

- Björnsson, B.; Borrebaeck, C.; Elander, N.; Gasslander, T.; Gawel, D.R.; Gustafsson, M.; Jörnsten, R.; Lee, E.J.; Li, X.; Lilja, S.; et al. Digital twins to personalize medicine. Genome Med. 2019, 12, 4.

- Stahlberg, E.A.; Abdel-Rahman, M.; Aguilar, B.; Asadpoure, A.; Beckman, R.A.; Borkon, L.L.; Bryan, J.N.; Cebulla, C.M.; Chang, Y.H.; Chatterjee, A.; et al. Exploring approaches for predictive cancer patient digital twins: Opportunities for collaboration and innovation. Front. Digit. Health 2022, 4, 1007784.

- Martin, L.; Hutchens, M.; Hawkins, C.; Radnov, A. How much do clinical trials cost? Nat. Rev. Drug Discov. 2017, 16, 381–382.

- Kostis, J.B.; Dobrzynski, J.M. Limitations of randomized clinical trials. Am. J. Cardiol. 2020, 129, 109–115.

- Bezerra, E.D.; Iqbal, M.; Munoz, J.; Khurana, A.; Wang, Y.; Maurer, M.J.; Bansal, R.; Hathcock, M.A.; Bennani, N.; Murthy, H.S.; et al. Barriers to enrollment in clinical trials of patients with aggressive B-cell NHL that progressed after CAR T-cell therapy. Blood Adv. 2023, 7, 1572–1576.

- Džakula, A.; Relić, D. Health workforce shortage—Doing the right things or doing things right? Croat. Med. J. 2022, 63, 107–109.

- Nashwan, A.J.; Abujaber, A.A. Nursing in the artificial intelligence (AI) era: Optimizing staffing for tomorrow. Cureus 2023, 15, e47275.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Isavand, P.; Aghamiri, S.S.; Amin, R. Applications of Multimodal Artificial Intelligence in Non-Hodgkin Lymphoma B Cells. Biomedicines 2024, 12, 1753. https://doi.org/10.3390/biomedicines12081753