Explainable Artificial Intelligence (XAI) in Insurance

Abstract

1. Introduction

XAI Terminology

2. Fundamental Concepts & Background

2.1. Artificial Intelligence Applications in Insurance

2.2. Explainable Artificial Intelligence

2.3. The Importance of Explainability in Insurance Analytics

3. Methodology

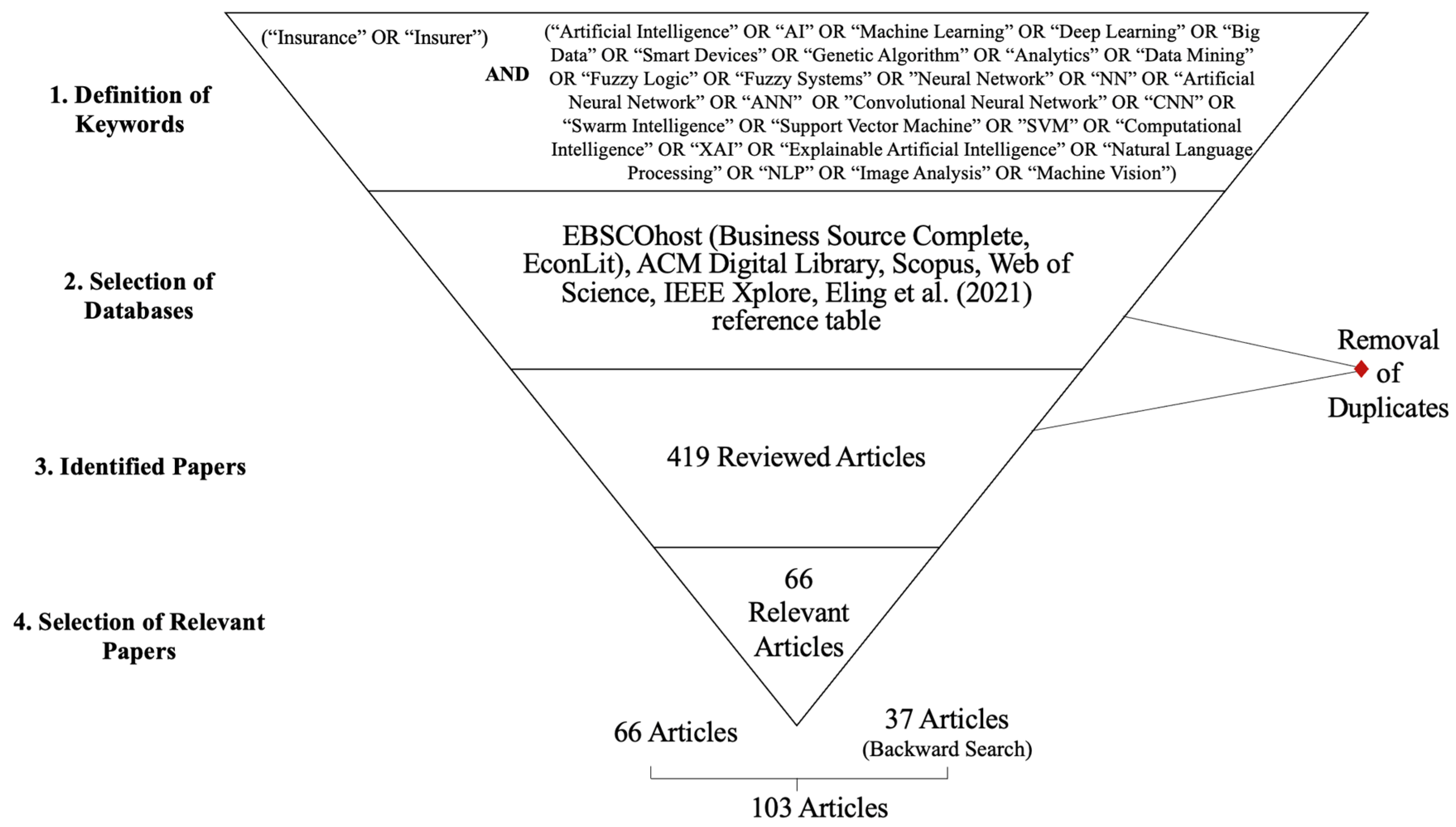

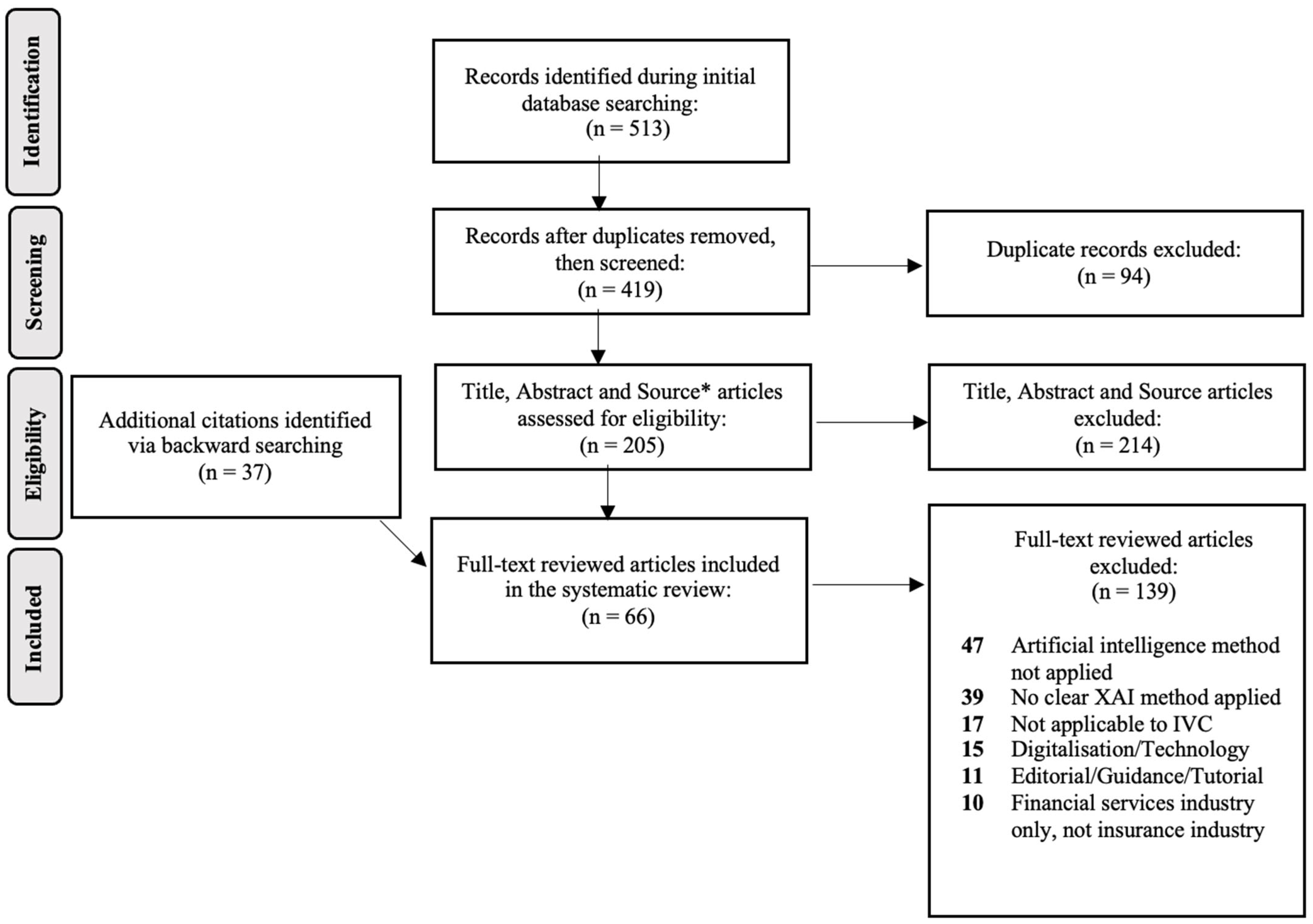

3.1. Literature Search Strategy

- Time Period: Articles (see note 3) published between 1 January 2000 and 31 December 2021 are included,

- Relevancy: The presence of keywords (Table 2) in the abstract is necessary for the article’s inclusion. Additionally, the articles need to be directly relevant to the assessment of AI applications along the IVC (e.g., articles concerned with determining drivers’ behaviour using telematics information, which may later inform insurance companies’ pricing practices, were excluded, as were generalised surveys on AI uses in insurance; see note 4),

- Singularity: Duplicate articles found across the various databases are excluded,

- Accessibility: Only peer-reviewed articles that are available in full text through the aforementioned databases are included (i.e., extended abstracts are not included),

- Language: Only articles published in English are included.
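The inclusion criteria above can be read as a screening filter over candidate records. The following is a minimal sketch only, not the procedure used in the review: the `Record` fields, the `KEYWORDS` tuple and the title-based duplicate check are all hypothetical stand-ins (the actual keywords are those of Table 2), and the manual judgement of relevance to the IVC is not modelled.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical placeholder keywords; the review's actual list is in its Table 2.
KEYWORDS = ("insurance", "machine learning")


@dataclass(frozen=True)
class Record:
    """Hypothetical bibliographic record returned by a database search."""
    title: str
    abstract: str
    published: date
    language: str
    peer_reviewed: bool
    full_text_available: bool


def meets_criteria(rec, keywords=KEYWORDS):
    """Check the time period, keyword, accessibility and language criteria."""
    in_period = date(2000, 1, 1) <= rec.published <= date(2021, 12, 31)
    abstract = rec.abstract.lower()
    has_keyword = any(k.lower() in abstract for k in keywords)
    accessible = rec.peer_reviewed and rec.full_text_available
    in_english = rec.language.lower() == "english"
    return in_period and has_keyword and accessible and in_english


def screen(records, keywords=KEYWORDS):
    """Keep the first copy of each record that satisfies every criterion."""
    seen_titles = set()
    kept = []
    for rec in records:
        key = rec.title.strip().lower()  # assumed duplicate key: normalised title
        if key in seen_titles:
            continue  # Singularity: drop duplicates found across databases
        if meets_criteria(rec, keywords):
            seen_titles.add(key)
            kept.append(rec)
    return kept
```

Calling `screen(records)` on the pooled search results would return the records passing the automatic checks, which would then still need the manual relevance assessment described under Relevancy.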

3.2. Literature Extraction Process

3.3. Limitations of the Research

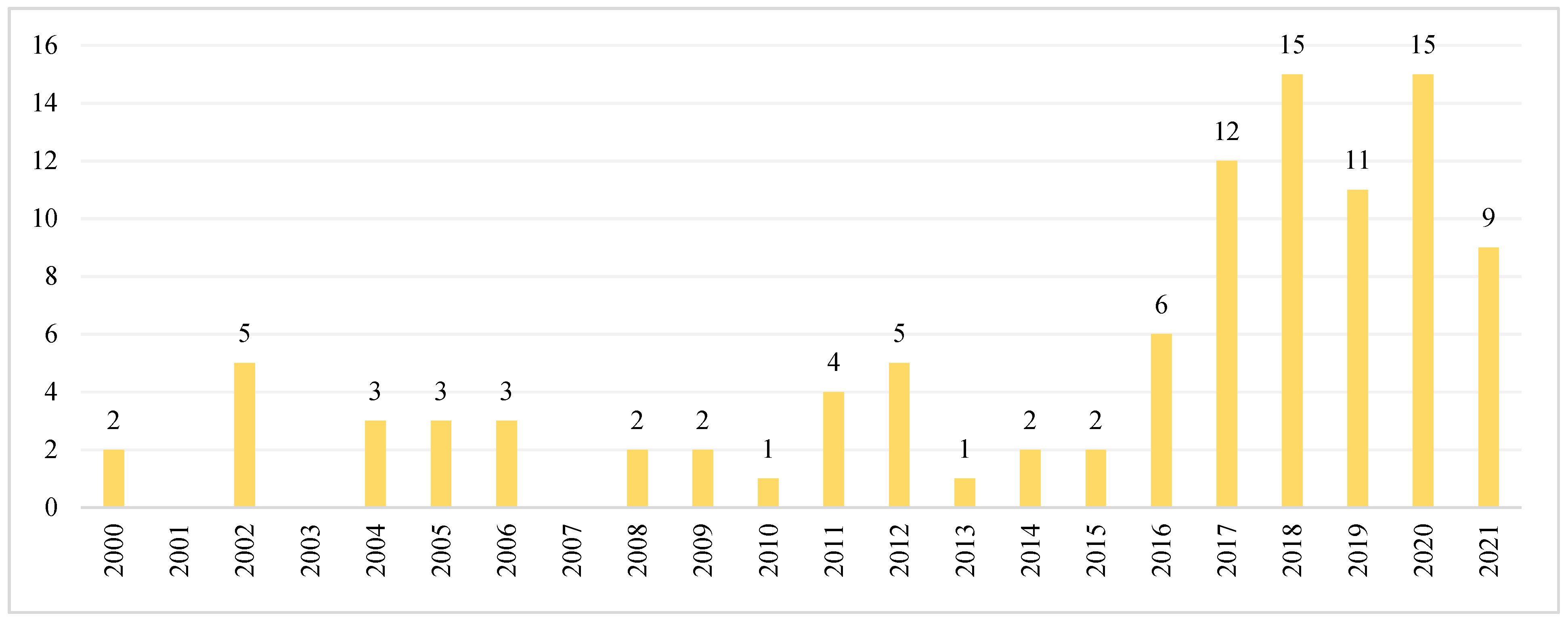

4. Systematic Review Results

4.1. AI Methods and Prediction Tasks

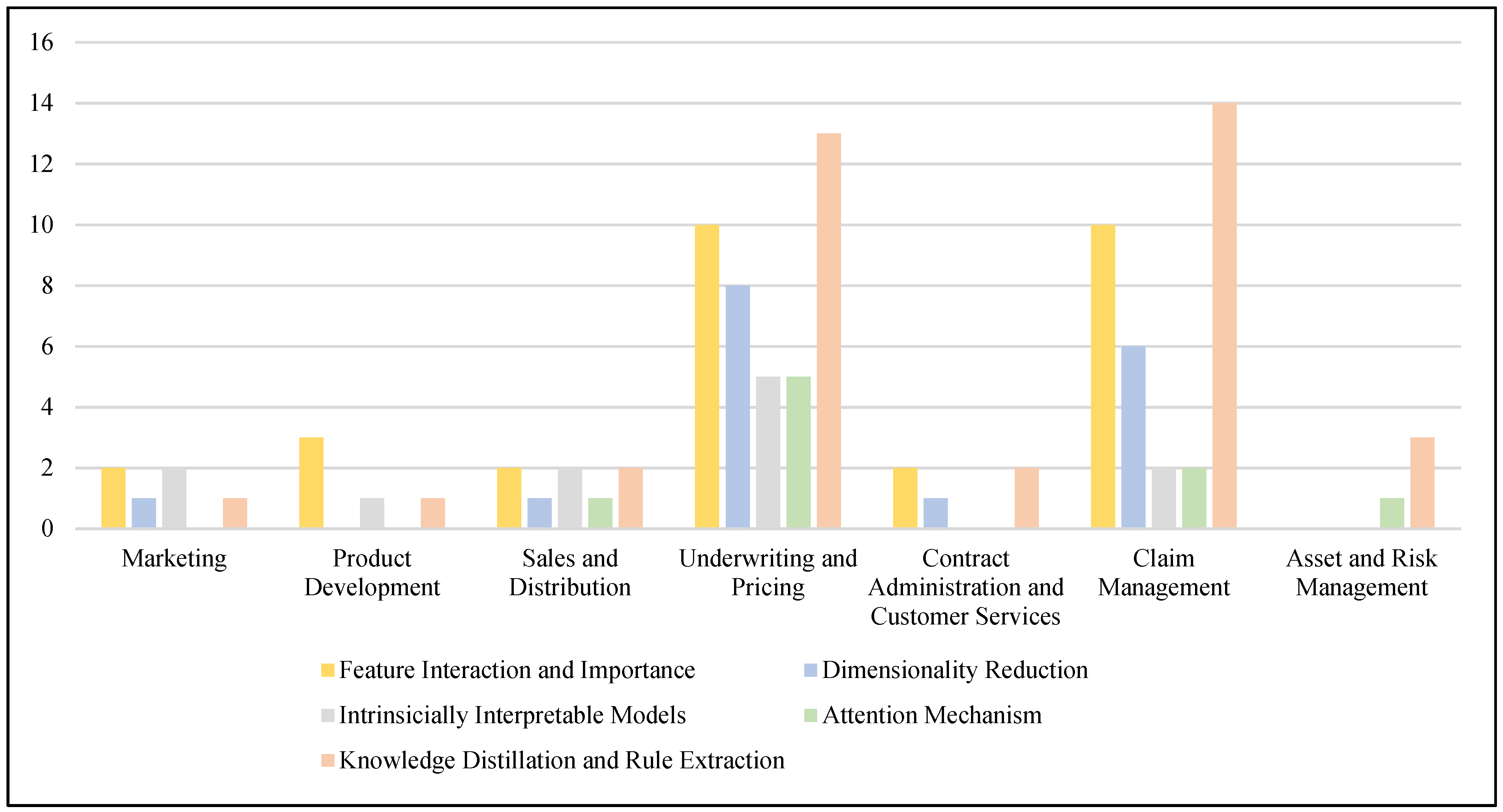

4.2. XAI Categories along the IVC

4.3. Feature Interaction and Importance

4.4. Attention Mechanism

4.5. Dimensionality Reduction

4.6. Knowledge Distillation and Rule Extraction

4.7. Intrinsically Interpretable Models

5. Discussion

5.1. AI’s Application on the Insurance Value Chain

5.2. XAI Definition, Evaluation and Regulatory Compliance

“XAI is the transfer of understanding to AI models’ end-users by highlighting key decision-pathways in the model and allowing for human interpretability at various stages of the model’s decision-process. XAI involves outlining the relationship between model inputs and prediction, meanwhile maintaining predictive accuracy of the model throughout”

5.3. The Relationship between Explanation and Trust

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| AdaBoost | Adaptive Boosting |

| AI | Artificial Intelligence |

| ANN | Artificial Neural Network |

| BN | Bayesian Network |

| BPNN | Back Propagation Neural Network |

| CHAID | Chi-Squared Automatic Interaction Detection |

| CNN | Convolutional Neural Network |

| CPLF | Cost-Sensitive Parallel Learning Framework |

| CRM | Customer Relationship Management |

| DFSVM | Dual Membership Fuzzy Support Vector Machine |

| DL | Deep Learning |

| ESIM | Evolutionary Support Vector Machine Inference Model |

| EvoDM | Evolutionary Data Mining |

| FL | Fuzzy Logic |

| GAM | Generalised Additive Model |

| GLM | Generalised Linear Model |

| HVSVM | Hull Vector Support Vector Machine |

| IoT | Internet of Things |

| IVC | Insurance Value Chain |

| KDD | Knowledge Discovery in Databases |

| LASSO | Least Absolute Shrinkage and Selection Operator |

| MCAM | Markov Chain Approximation Method |

| ML | Machine Learning |

| NB | Naïve Bayes |

| NCA | Neighbourhood Component Analysis |

| NLP | Natural Language Processing |

| NN | Neural Network |

| PCA | Principal Component Analysis |

| RF | Random Forest |

| SBS | Sequential Backward Selection |

| SFS | Sequential Forward Selection |

| SHAP | SHapley Additive exPlanations |

| SOFM | Self-Organising Feature Map |

| SOM | Self-Organising Map |

| UBI | Usage-Based Insurance |

| WEKA | Waikato Environment for Knowledge Analysis |

| XAI | Explainable Artificial Intelligence |

| XGBoost | Extreme Gradient Boosting Algorithms |

Appendix A. XAI Variables

Appendix A.1. Intrinsic vs. Post hoc Interpretability

Appendix A.2. Local vs. Global Interpretability

Appendix A.3. Model-Specific vs. Model-Agnostic Interpretation

| In-Model | Intrinsic | Model-specific |

| Post-Model | Post hoc | Model-agnostic |

Appendix B. Database of Reviewed Articles

Appendix B.1. Journal Articles Included in the Systematic Review

| Reference | Title | Lead Author | Year | Source | Volume | Issue Number |

| Aggour et al. (2006) | Automating the underwriting of insurance applications | Aggour | 2006 | AI Magazine | 27 | 3 |

| Baecke and Bocca (2017) | The value of vehicle telematics data in insurance risk selection processes | Baecke | 2017 | Decision Support Systems | 98 | |

| Baudry and Robert (2019) | A machine learning approach for individual claims reserving in insurance | Baudry | 2019 | Applied Stochastic Models in Business and Industry | 35 | 5 |

| Belhadji et al. (2000) | A model for the detection of insurance fraud | Belhadji | 2000 | The Geneva Papers on Risk and Insurance-Issues and Practice | 25 | 4 |

| Benedek and László (2019) | Identifying Key Fraud Indicators in the Automobile Insurance Industry Using SQL Server Analysis Services | Benedek | 2019 | Studia Universitatis Babes-Bolyai | 64 | 2 |

| Bermúdez et al. (2008) | A Bayesian dichotomous model with asymmetric link for fraud in insurance | Bermúdez | 2008 | Insurance: Mathematics and Economics | 42 | 2 |

| Boodhun and Jayabalan (2018) | Risk prediction in life insurance industry using supervised learning algorithms | Boodhun | 2018 | Complex & Intelligent Systems | 4 | 2 |

| Carfora et al. (2019) | A “pay-how-you-drive” car insurance approach through cluster analysis | Carfora | 2019 | Soft Computing | 23 | 9 |

| Chang and Lai (2021) | A Neural Network-Based Approach in Predicting Consumers’ Intentions of Purchasing Insurance Policies | Chang | 2021 | Acta Informatica Pragensia | 10 | 2 |

| Cheng et al. (2011) | Decision making for contractor insurance deductible using the evolutionary support vector machines inference model | Cheng | 2011 | Expert Systems with Applications | 38 | 6 |

| Cheng et al. (2020) | Optimal insurance strategies: A hybrid deep learning Markov chain approximation approach | Cheng | 2020 | ASTIN Bulletin: The Journal of the IAA | 50 | 2 |

| Christmann (2004) | An approach to model complex high–dimensional insurance data | Christmann | 2004 | Allgemeines Statistisches Archiv | 88 | 4 |

| David (2015) | Auto insurance premium calculation using generalized linear models | David | 2015 | Procedia Economics and Finance | 20 | |

| Delong and Wüthrich (2020) | Neural networks for the joint development of individual payments and claim incurred | Delong | 2020 | Risks | 8 | 2 |

| Denuit and Lang (2004) | Non-life rate-making with Bayesian GAMs | Denuit | 2004 | Insurance: Mathematics and Economics | 35 | 3 |

| Deprez et al. (2017) | Machine learning techniques for mortality modeling | Deprez | 2017 | European Actuarial Journal | 7 | 2 |

| Desik and Behera (2012) | Acquiring Insurance Customer: The CHAID Way | Desik | 2012 | IUP Journal of Knowledge Management | 10 | 3 |

| Desik et al. (2016) | Segmentation-Based Predictive Modeling Approach in Insurance Marketing Strategy | Desik | 2016 | IUP Journal of Business Strategy | 13 | 2 |

| Devriendt et al. (2021) | Sparse regression with multi-type regularized feature modeling | Devriendt | 2021 | Insurance: Mathematics and Economics | 96 | |

| Duval and Pigeon (2019) | Individual loss reserving using a gradient boosting-based approach | Duval | 2019 | Risks | 7 | 3 |

| Fang et al. (2016) | Customer profitability forecasting using Big Data analytics: A case study of the insurance industry | Fang | 2016 | Computers & Industrial Engineering | 101 | |

| Frees and Valdez (2008) | Hierarchical insurance claims modeling | Frees | 2008 | Journal of the American Statistical Association | 103 | 484 |

| Gabrielli (2021) | An individual claims reserving model for reported claims | Gabrielli | 2021 | European Actuarial Journal | 11 | 2 |

| Gan (2013) | Application of data clustering and machine learning in variable annuity valuation | Gan | 2013 | Insurance: Mathematics and Economics | 53 | 3 |

| Gan and Valdez (2017) | Regression modeling for the valuation of large variable annuity portfolios | Gan | 2018 | North American Actuarial Journal | 22 | 1 |

| Ghorbani and Farzai (2018) | Fraud detection in automobile insurance using a data mining based approach | Ghorbani | 2018 | International Journal of Mechatronics, Electrical and Computer Technology (IJMEC) | 8 | 27 |

| Gramegna and Giudici (2020) | Why to buy insurance? An Explainable Artificial Intelligence Approach | Gramegna | 2020 | Risks | 8 | 4 |

| Guelman (2012) | Gradient boosting trees for auto insurance loss cost modeling and prediction | Guelman | 2012 | Expert Systems with Applications | 39 | 3 |

| Gweon et al. (2020) | An effective bias-corrected bagging method for the valuation of large variable annuity portfolios | Gweon | 2020 | ASTIN Bulletin: The Journal of the IAA | 50 | 3 |

| Herland et al. (2018) | The detection of medicare fraud using machine learning methods with excluded provider labels | Herland | 2018 | Journal of Big Data | 5 | 1 |

| Huang and Meng (2019) | Automobile insurance classification ratemaking based on telematics driving data | Huang | 2019 | Decision Support Systems | 127 | |

| Ibiwoye et al. (2012) | Artificial neural network model for predicting insurance insolvency | Ibiwoye | 2012 | International Journal of Management and Business Research | 2 | 1 |

| Jain et al. (2019) | Assessing risk in life insurance using ensemble learning | Jain | 2019 | Journal of Intelligent & Fuzzy Systems | 37 | 2 |

| Jeong et al. (2018) | Association rules for understanding policyholder lapses | Jeong | 2018 | Risks | 6 | 3 |

| Jiang et al. (2018) | Cost-sensitive parallel learning framework for insurance intelligence operation | Jiang | 2018 | IEEE Transactions on Industrial Electronics | 66 | 12 |

| Jin et al. (2021) | A hybrid deep learning method for optimal insurance strategies: Algorithms and convergence analysis | Jin | 2021 | Insurance: Mathematics and Economics | 96 | |

| Johnson and Khoshgoftaar (2019) | Medicare fraud detection using neural networks | Johnson | 2019 | Journal of Big Data | 6 | 1 |

| Joram et al. (2017) | A knowledge-based system for life insurance underwriting | Joram | 2017 | International Journal of Information Technology and Computer Science | 3 | |

| Karamizadeh and Zolfagharifar (2016) | Using the clustering algorithms and rule-based of data mining to identify affecting factors in the profit and loss of third party insurance, insurance company auto | Karamizadeh | 2016 | Indian Journal of Science and Technology | 9 | 7 |

| Kašćelan et al. (2016) | A nonparametric data mining approach for risk prediction in car insurance: a case study from the Montenegrin market | Kašćelan | 2016 | Economic Research-Ekonomska Istraživanja | 29 | 1 |

| Khodairy and Abosamra (2021) | Driving Behavior Classification Based on Oversampled Signals of Smartphone Embedded Sensors Using an Optimized Stacked-LSTM Neural Networks | Khodairy | 2021 | IEEE Access | 9 | |

| Kiermayer and Weiß (2021) | Grouping of contracts in insurance using neural networks | Kiermayer | 2021 | Scandinavian Actuarial Journal | 2021 | 4 |

| Kose et al. (2015) | An interactive machine-learning-based electronic fraud and abuse detection system in healthcare insurance | Kose | 2015 | Applied Soft Computing | 36 | |

| Kwak et al. (2020) | Driver Identification Based on Wavelet Transform Using Driving Patterns | Kwak | 2020 | IEEE Transactions on Industrial Informatics | 17 | 4 |

| Larivière and Van den Poel (2005) | Predicting customer retention and profitability by using random forests and regression forests techniques | Larivière | 2005 | Expert Systems with Applications | 29 | 2 |

| Lee et al. (2020) | Actuarial applications of word embedding models | Lee | 2020 | ASTIN Bulletin: The Journal of the IAA | 50 | 1 |

| Li et al. (2018) | A principle component analysis-based random forest with the potential nearest neighbor method for automobile insurance fraud identification | Li | 2018 | Applied Soft Computing | 70 | |

| Lin (2009) | Using neural networks as a support tool in the decision making for insurance industry | Lin | 2009 | Expert Systems with Applications | 36 | 3 |

| Lin et al. (2017) | An ensemble random forest algorithm for insurance big data analysis | Lin | 2017 | IEEE Access | 5 | |

| Liu et al. (2014) | Using multi-class AdaBoost tree for prediction frequency of auto insurance | Liu | 2014 | Journal of Applied Finance and Banking | 4 | 5 |

| Matloob et al. (2020) | Sequence Mining and Prediction-Based Healthcare Fraud Detection Methodology | Matloob | 2020 | IEEE Access | 8 | |

| Neumann et al. (2019) | Machine Learning-Based Predictions of Customers’ Decisions in Car Insurance | Neumann | 2019 | Applied Artificial Intelligence | 33 | 9 |

| Pathak et al. (2005) | A fuzzy-based algorithm for auditors to detect elements of fraud in settled insurance claims | Pathak | 2005 | Managerial Auditing Journal | 20 | 6 |

| Ravi et al. (2017) | Fuzzy formal concept analysis based opinion mining for CRM in financial services | Ravi | 2017 | Applied Soft Computing | 60 | |

| Sadreddini et al. (2021) | Cancel-for-Any-Reason Insurance Recommendation Using Customer Transaction-Based Clustering | Sadreddini | 2021 | IEEE Access | 9 | |

| Sakthivel and Rajitha (2017) | Artificial intelligence for estimation of future claim frequency in non-life insurance | Sakthivel | 2017 | Global Journal of Pure and Applied Mathematics | 13 | 6 |

| Sevim et al. (2016) | Risk Assessment for Accounting Professional Liability Insurance | Sevim | 2016 | Sosyoekonomi | 24 | 29 |

| Shah and Guez (2009) | Mortality forecasting using neural networks and an application to cause-specific data for insurance purposes | Shah | 2009 | Journal of Forecasting | 28 | 6 |

| Sheehan et al. (2017) | Semi-autonomous vehicle motor insurance: A Bayesian Network risk transfer approach | Sheehan | 2017 | Transportation Research Part C: Emerging Technologies | 82 | |

| Siami et al. (2020) | A mobile telematics pattern recognition framework for driving behavior extraction | Siami | 2020 | IEEE Transactions on Intelligent Transportation Systems | 22 | 3 |

| Smith et al. (2000) | An analysis of customer retention and insurance claim patterns using data mining: A case study | Smith | 2000 | Journal of the Operational Research Society | 51 | 5 |

| Smyth and Jørgensen (2002) | Fitting Tweedie’s compound Poisson model to insurance claims data: dispersion modelling | Smyth | 2002 | ASTIN Bulletin: The Journal of the IAA | 32 | 1 |

| Sun et al. (2018) | Abnormal group-based joint medical fraud detection | Sun | 2018 | IEEE Access | 7 | |

| Tillmanns et al. (2017) | How to separate the wheat from the chaff: Improved variable selection for new customer acquisition | Tillmanns | 2017 | Journal of Marketing | 81 | 2 |

| Vaziri and Beheshtinia (2016) | A holistic fuzzy approach to create competitive advantage via quality management in services industry (case study: life-insurance services) | Vaziri | 2016 | Management Decision | 54 | 8 |

| Viaene et al. (2002) | A comparison of state-of-the-art classification techniques for expert automobile insurance claim fraud detection | Viaene | 2002 | Journal of Risk and Insurance | 69 | 3 |

| Viaene et al. (2004) | A case study of applying boosting Naive Bayes to claim fraud diagnosis | Viaene | 2004 | IEEE Transactions on Knowledge and Data Engineering | 16 | 5 |

| Viaene et al. (2005) | Auto claim fraud detection using Bayesian learning neural networks | Viaene | 2005 | Expert Systems with Applications | 29 | 3 |

| Wang (2020) | Research on the Features of Car Insurance Data Based on Machine Learning | Wang | 2020 | Procedia Computer Science | 166 | |

| Wang and Xu (2018) | Leveraging deep learning with LDA-based text analytics to detect automobile insurance fraud | Wang | 2018 | Decision Support Systems | 105 | |

| Wei and Dan (2019) | Market fluctuation and agricultural insurance forecasting model based on machine learning algorithm of parameter optimization | Wei | 2019 | Journal of Intelligent & Fuzzy Systems | 37 | 5 |

| Wüthrich (2020) | Bias regularization in neural network models for general insurance pricing | Wüthrich | 2020 | European Actuarial Journal | 10 | 1 |

| Yan et al. (2020a) | Research on the UBI Car Insurance Rate Determination Model Based on the CNN-HVSVM Algorithm | Yan | 2020 | IEEE Access | 8 | |

| Yan et al. (2020b) | Improved adaptive genetic algorithm for the vehicle Insurance Fraud Identification Model based on a BP Neural Network | Yan | 2020 | Theoretical Computer Science | 817 | |

| Yang et al. (2006) | Extracting actionable knowledge from decision trees | Yang | 2006 | IEEE Transactions on Knowledge and Data Engineering | 19 | 1 |

| Yang et al. (2018) | Insurance premium prediction via gradient tree-boosted Tweedie compound Poisson models | Yang | 2018 | Journal of Business & Economic Statistics | 36 | 3 |

| Yeo et al. (2002) | A mathematical programming approach to optimise insurance premium pricing within a data mining framework | Yeo | 2002 | Journal of the Operational Research Society | 53 | 11 |

Appendix B.2. Conference Papers Included in the Systematic Review

| Reference | Title | Lead Author | Year | Source |

| Alshamsi (2014) | Predicting car insurance policies using random forest | Alshamsi | 2014 | 2014 10th International Conference on Innovations in Information Technology (IIT) |

| Bian et al. (2018) | Good drivers pay less: A study of usage-based vehicle insurance models | Bian | 2018 | Transportation Research Part A: Policy and Practice |

| Biddle et al. (2018) | Automated Underwriting in Life Insurance: Predictions and Optimisation (Industry Track) | Biddle | 2018 | Australasian Database Conference |

| Bonissone et al. (2002) | Evolutionary optimization of fuzzy decision systems for automated insurance underwriting | Bonissone | 2002 | 2002 IEEE World Congress on Computational Intelligence. 2002 IEEE International Conference on Fuzzy Systems |

| Bove et al. (2021) | Contextualising local explanations for non-expert users: an XAI pricing interface for insurance | Bove | 2021 | IUI Workshops |

| Cao and Zhang (2019) | Using PCA to improve the detection of medical insurance fraud in SOFM neural networks | Cao | 2019 | Proceedings of the 2019 3rd International Conference on Management Engineering, Software Engineering and Service Sciences |

| Dhieb et al. (2019) | Extreme gradient boosting machine learning algorithm for safe auto insurance operations | Dhieb | 2019 | 2019 IEEE International Conference on Vehicular Electronics and Safety (ICVES) |

| Gan and Huang (2017) | A data mining framework for valuing large portfolios of variable annuities | Gan | 2017 | Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining |

| Ghani and Kumar (2011) | Interactive learning for efficiently detecting errors in insurance claims | Ghani | 2011 | Proceedings of the 17th ACM SIGKDD international conference on Knowledge discovery and data mining |

| Kieu et al. (2018) | Distinguishing trajectories from different drivers using incompletely labeled trajectories | Kieu | 2018 | Proceedings of the 27th ACM international conference on information and knowledge management |

| Kowshalya and Nandhini (2018) | Predicting fraudulent claims in automobile insurance | Kowshalya | 2018 | 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT) |

| Kumar et al. (2010) | Data mining to predict and prevent errors in health insurance claims processing | Kumar | 2010 | Proceedings of the 16th ACM SIGKDD international conference on Knowledge discovery and data mining |

| Kyu and Woraratpanya (2020) | Car Damage Detection and Classification | Kyu | 2020 | Proceedings of the 11th International Conference on Advances in Information Technology |

| Lau and Tripathi (2011) | Mine your business—A novel application of association rules for insurance claims analytics | Lau | 2011 | CAS E-Forum. Arlington: Casualty Actuarial Society |

| Liu and Chen (2012) | Application of evolutionary data mining algorithms to insurance fraud prediction | Liu | 2012 | Proceedings of 2012 4th International Conference on Machine Learning and Computing IPCSIT |

| Morik et al. (2002) | End-user access to multiple sources-Incorporating knowledge discovery into knowledge management | Morik | 2002 | International Conference on Practical Aspects of Knowledge Management |

| Samonte et al. (2018) | ICD-9 tagging of clinical notes using topical word embedding | Samonte | 2018 | Proceedings of the 2018 International Conference on Internet and e-Business |

| Sohail et al. (2021) | Feature importance analysis for customer management of insurance products | Sohail | 2021 | 2021 International Joint Conference on Neural Networks (IJCNN) |

| Supraja and Saritha (2017) | Robust fuzzy rule based technique to detect frauds in vehicle insurance | Supraja | 2017 | 2017 International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS) |

| Tao et al. (2012) | Insurance fraud identification research based on fuzzy support vector machine with dual membership | Tao | 2012 | 2012 International Conference on Information Management, Innovation Management and Industrial Engineering |

| Vassiljeva et al. (2017) | Computational intelligence approach for estimation of vehicle insurance risk level | Vassiljeva | 2017 | 2017 International Joint Conference on Neural Networks (IJCNN) |

| Verma et al. (2017) | Fraud detection and frequent pattern matching in insurance claims using data mining techniques | Verma | 2017 | 2017 Tenth International Conference on Contemporary Computing (IC3) |

| Xu et al. (2011) | Random rough subspace based neural network ensemble for insurance fraud detection | Xu | 2011 | 2011 Fourth International Joint Conference on Computational Sciences and Optimization |

| Yan and Bonissone (2006) | Designing a Neural Network Decision System for Automated Insurance Underwriting | Yan | 2006 | Insurance Studies |

| Zahi and Achchab (2019) | Clustering of the population benefiting from health insurance using k-means | Zahi | 2019 | Proceedings of the 4th International Conference on Smart City Applications |

| Zhang and Kong (2020) | Dynamic estimation model of insurance product recommendation based on Naive Bayesian model | Zhang | 2020 | Proceedings of the 2020 International Conference on Cyberspace Innovation of Advanced Technologies |

Notes

| 1 | The five XAI categories used were introduced to XAI literature by Payrovnaziri et al. (2020), adapted from research conducted by Du et al. (2019) and Carvalho et al. (2019). |

| 2 | Searched ‘The ACM Guide to Computing Literature’. |

| 3 | ‘Articles’ throughout this review refers to both academic articles and conference papers. |

| 4 | Several such surveys and reviews are discussed in Section 2.2. |

References

- Adadi, Amina, and Mohammed Berrada. 2018. Peeking inside the black-box: A survey on explainable artificial intelligence (XAI). IEEE Access 6: 52138–60.

- Aggour, Kareem S., Piero P. Bonissone, William E. Cheetham, and Richard P. Messmer. 2006. Automating the underwriting of insurance applications. AI Magazine 27: 36–36.

- Alshamsi, Asma S. 2014. Predicting car insurance policies using random forest. Paper presented at the 2014 10th International Conference on Innovations in Information Technology (IIT), Al Ain, United Arab Emirates, November 9–11.

- Al-Shedivat, Maruan, Avinava Dubey, and Eric P. Xing. 2020. Contextual Explanation Networks. Journal of Machine Learning Research 21: 194:1–94:44.

- Andrew, Jane, and Max Baker. 2021. The general data protection regulation in the age of surveillance capitalism. Journal of Business Ethics 168: 565–78.

- Anjomshoae, Sule, Amro Najjar, Davide Calvaresi, and Kary Främling. 2019. Explainable agents and robots: Results from a systematic literature review. Paper presented at the 18th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2019), Montreal, Canada, May 13–17.

- Antoniadi, Anna Markella, Yuhan Du, Yasmine Guendouz, Lan Wei, Claudia Mazo, Brett A Becker, and Catherine Mooney. 2021. Current challenges and future opportunities for XAI in machine learning-based clinical decision support systems: A systematic review. Applied Sciences 11: 5088.

- Arrieta, Alejandro Barredo, Natalia Díaz-Rodríguez, Javier Del Ser, Adrien Bennetot, Siham Tabik, Alberto Barbado, Salvador García, Sergio Gil-López, Daniel Molina, and Richard Benjamins. 2020. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion 58: 82–115.

- Baecke, Philippe, and Lorenzo Bocca. 2017. The value of vehicle telematics data in insurance risk selection processes. Decision Support Systems 98: 69–79.

- Baehrens, David, Timon Schroeter, Stefan Harmeling, Motoaki Kawanabe, Katja Hansen, and Klaus-Robert Müller. 2010. How to explain individual classification decisions. The Journal of Machine Learning Research 11: 1803–31.

- Balasubramanian, Ramnath, Ari Libarikian, and Doug McElhaney. 2018. Insurance 2030—The Impact of AI on the Future of Insurance. New York: McKinsey & Company.

- Barocas, Solon, and Andrew D Selbst. 2016. Big data’s disparate impact. California Law Review 104: 671.

- Barry, Laurence, and Arthur Charpentier. 2020. Personalization as a promise: Can Big Data change the practice of insurance? Big Data & Society 7: 2053951720935143.

- Baser, Furkan, and Aysen Apaydin. 2010. Calculating insurance claim reserves with hybrid fuzzy least squares regression analysis. Gazi University Journal of Science 23: 163–70.

- Baudry, Maximilien, and Christian Y. Robert. 2019. A machine learning approach for individual claims reserving in insurance. Applied Stochastic Models in Business and Industry 35: 1127–55.

- Bayamlıoğlu, Emre. 2021. The right to contest automated decisions under the General Data Protection Regulation: Beyond the so-called “right to explanation”. Regulation & Governance 16: 1058–78.

- Bean, Randy. 2021. Transforming the Insurance Industry with Big Data, Machine Learning and AI. Forbes. July 6. Available online: https://www.forbes.com/sites/randybean/2021/07/06/transforming-the-insurance-industry-with-big-data-machine-learning-and-ai/?sh=4004a662f8a6 (accessed on 11 August 2021).

- Beck, Hall P., Mary T. Dzindolet, and Linda G. Pierce. 2002. Operators’ automation usage decisions and the sources of misuse and disuse. In Advances in Human Performance and Cognitive Engineering Research. Bingley: Emerald Group Publishing Limited.

- Belhadji, El Bachir, George Dionne, and Faouzi Tarkhani. 2000. A model for the detection of insurance fraud. The Geneva Papers on Risk and Insurance-Issues and Practice 25: 517–38.

- Benedek, Botond, and Ede László. 2019. Identifying Key Fraud Indicators in the Automobile Insurance Industry Using SQL Server Analysis Services. Studia Universitatis Babes-Bolyai 64: 53–71.

- Bermúdez, Lluís, José María Pérez, Mercedes Ayuso, Esther Gómez, and Francisco J. Vázquez. 2008. A Bayesian dichotomous model with asymmetric link for fraud in insurance. Insurance: Mathematics and Economics 42: 779–86.

- Bian, Yiyang, Chen Yang, J. Leon Zhao, and Liang Liang. 2018. Good drivers pay less: A study of usage-based vehicle insurance models. Transportation Research Part A: Policy and Practice 107: 20–34.

- Biddle, Rhys, Shaowu Liu, and Guandong Xu. 2018. Automated Underwriting in Life Insurance: Predictions and Optimisation (Industry Track). Paper presented at Australasian Database Conference, Gold Coast, QLD, Australia, May 24–27.

- Biecek, Przemysław, Marcin Chlebus, Janusz Gajda, Alicja Gosiewska, Anna Kozak, Dominik Ogonowski, Jakub Sztachelski, and Piotr Wojewnik. 2021. Enabling Machine Learning Algorithms for Credit Scoring—Explainable Artificial Intelligence (XAI) methods for clear understanding complex predictive models. arXiv:2104.06735.

- Biran, Or, and Courtenay Cotton. 2017. Explanation and justification in machine learning: A survey. Paper presented at the IJCAI-17 Workshop on Explainable AI (XAI), Melbourne, VIC, Australia, August 19–21.

- Blier-Wong, Christopher, Hélène Cossette, Luc Lamontagne, and Etienne Marceau. 2021. Machine Learning in P&C Insurance: A Review for Pricing and Reserving. Risks 9: 4.

- Bonissone, Piero P., Raj Subbu, and Kareem S. Aggour. 2002. Evolutionary optimization of fuzzy decision systems for automated insurance underwriting. Paper presented at the 2002 IEEE World Congress on Computational Intelligence, 2002 IEEE International Conference on Fuzzy Systems, FUZZ-IEEE’02. Proceedings (Cat. No. 02CH37291), Honolulu, HI, USA, May 12–17.

- Boodhun, Noorhannah, and Manoj Jayabalan. 2018. Risk prediction in life insurance industry using supervised learning algorithms. Complex & Intelligent Systems 4: 145–54.

- Bove, Clara, Jonathan Aigrain, Marie-Jeanne Lesot, Charles Tijus, and Marcin Detyniecki. 2021. Contextualising local explanations for non-expert users: An XAI pricing interface for insurance. Paper presented at the IUI Workshops, College Station, TX, USA, April 13–17.

- Burgt, Joost van der. 2020. Explainable AI in banking. Journal of Digital Banking 4: 344–50.

- Burrell, Jenna. 2016. How the machine ‘thinks’: Understanding opacity in machine learning algorithms. Big Data & Society 3: 2053951715622512.

- Bussmann, Niklas, Paolo Giudici, Dimitri Marinelli, and Jochen Papenbrock. 2020. Explainable AI in fintech risk management. Frontiers in Artificial Intelligence 3: 26.

- Cao, Hongfei, and Runtong Zhang. 2019. Using PCA to improve the detection of medical insurance fraud in SOFM neural networks. Paper presented at the 2019 3rd International Conference on Management Engineering, Software Engineering and Service Sciences, Wuhan, China, January 12–14.

- Carabantes, Manuel. 2020. Black-box artificial intelligence: An epistemological and critical analysis. AI & Society 35: 309–17.

- Carfora, Maria Francesca, Fabio Martinelli, Francesco Mercaldo, Vittoria Nardone, Albina Orlando, Antonella Santone, and Gigliola Vaglini. 2019. A “pay-how-you-drive” car insurance approach through cluster analysis. Soft Computing 23: 2863–75.

- Carvalho, Diogo V., Eduardo M. Pereira, and Jaime S. Cardoso. 2019. Machine learning interpretability: A survey on methods and metrics. Electronics 8: 832.

- Cevolini, Alberto, and Elena Esposito. 2020. From pool to profile: Social consequences of algorithmic prediction in insurance. Big Data & Society 7: 2053951720939228.

- Chang, Wen Teng, and Kee Huong Lai. 2021. A Neural Network-Based Approach in Predicting Consumers’ Intentions of Purchasing Insurance Policies. Acta Informatica Pragensia 10: 138–54.

- Chen, Irene Y., Peter Szolovits, and Marzyeh Ghassemi. 2019. Can AI help reduce disparities in general medical and mental health care? AMA Journal of Ethics 21: 167–79.

- Cheng, Min-Yuan, Hsien-Sheng Peng, Yu-Wei Wu, and Yi-Hung Liao. 2011. Decision making for contractor insurance deductible using the evolutionary support vector machines inference model. Expert Systems with Applications 38: 6547–55.

- Cheng, Xiang, Zhuo Jin, and Hailiang Yang. 2020. Optimal insurance strategies: A hybrid deep learning Markov chain approximation approach. ASTIN Bulletin: The Journal of the IAA 50: 449–77. [Google Scholar] [CrossRef]

- Chi, Oscar Hengxuan, Gregory Denton, and Dogan Gursoy. 2020. Artificially intelligent device use in service delivery: A systematic review, synthesis, and research agenda. Journal of Hospitality Marketing & Management 29: 757–86. [Google Scholar]

- Christmann, Andreas. 2004. An approach to model complex high–dimensional insurance data. Allgemeines Statistisches Archiv 88: 375–96. [Google Scholar] [CrossRef]

- Clinciu, Miruna-Adriana, and Helen Hastie. 2019. A survey of explainable AI terminology. Paper presented at the 1st Workshop on Interactive Natural Language Technology for Explainable Artificial Intelligence (NL4XAI 2019), Tokyo, Japan, October 29–November 1. [Google Scholar]

- Coalition Against Insurance Fraud. 2020. Artificial Intelligence & Insurance Fraud. Washington, DC: Coalition Against Insurance Fraud. Available online: https://insurancefraud.org/wp-content/uploads/Artificial-Intelligence-and-Insurance-Fraud-2020.pdf (accessed on 2 May 2021).

- Colaner, Nathan. 2022. Is explainable artificial intelligence intrinsically valuable? AI & Society 37: 231–38. [Google Scholar]

- Confalonieri, Roberto, Ludovik Coba, Benedikt Wagner, and Tarek R. Besold. 2021. A historical perspective of explainable Artificial Intelligence. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 11: e1391. [Google Scholar] [CrossRef]

- Daniels, Norman. 2011. The ethics of health reform: Why we should care about who is missing coverage. Connecticut Law Review 44: 1057. [Google Scholar]

- Danilevsky, Marina, Kun Qian, Ranit Aharonov, Yannis Katsis, Ban Kawas, and Prithviraj Sen. 2020. A survey of the state of explainable AI for natural language processing. arXiv arXiv:2010.00711. [Google Scholar]

- Das, Arun, and Paul Rad. 2020. Opportunities and challenges in explainable artificial intelligence (xai): A survey. arXiv arXiv:2006.11371. [Google Scholar]

- David, Mihaela. 2015. Auto insurance premium calculation using generalized linear models. Procedia Economics and Finance 20: 147–56. [Google Scholar] [CrossRef]

- Delong, Łukasz, and Mario V. Wüthrich. 2020. Neural networks for the joint development of individual payments and claim incurred. Risks 8: 33. [Google Scholar] [CrossRef]

- Demajo, Lara Marie, Vince Vella, and Alexiei Dingli. 2020. Explainable AI for interpretable credit scoring. arXiv arXiv:2012.03749. [Google Scholar]

- Denuit, Michel, and Stefan Lang. 2004. Non-life rate-making with Bayesian GAMs. Insurance: Mathematics and Economics 35: 627–47. [Google Scholar] [CrossRef]

- Deprez, Philippe, Pavel V. Shevchenko, and Mario V. Wüthrich. 2017. Machine learning techniques for mortality modeling. European Actuarial Journal 7: 337–52. [Google Scholar] [CrossRef]

- Desik, P. H. Anantha, Samarendra Behera, Prashanth Soma, and Nirmala Sundari. 2016. Segmentation-Based Predictive Modeling Approach in Insurance Marketing Strategy. IUP Journal of Business Strategy 13: 35–45. [Google Scholar]

- Desik, P. H. Anantha, and Samarendra Behera. 2012. Acquiring Insurance Customer: The CHAID Way. IUP Journal of Knowledge Management 10: 7–13. [Google Scholar]

- Devriendt, Sander, Katrien Antonio, Tom Reynkens, and Roel Verbelen. 2021. Sparse regression with multi-type regularized feature modeling. Insurance: Mathematics and Economics 96: 248–61. [Google Scholar] [CrossRef]

- Dhieb, Najmeddine, Hakim Ghazzai, Hichem Besbes, and Yehia Massoud. 2019. Extreme gradient boosting machine learning algorithm for safe auto insurance operations. Paper presented at the 2019 IEEE International Conference on Vehicular Electronics and Safety (ICVES), Cairo, Egypt, September 4–6. [Google Scholar]

- Diprose, William K., Nicholas Buist, Ning Hua, Quentin Thurier, George Shand, and Reece Robinson. 2020. Physician understanding, explainability, and trust in a hypothetical machine learning risk calculator. Journal of the American Medical Informatics Association 27: 592–600. [Google Scholar] [CrossRef]

- Doshi-Velez, Finale, and Been Kim. 2017. Towards a rigorous science of interpretable machine learning. arXiv arXiv:1702.08608. [Google Scholar]

- Došilović, Filip Karlo, Mario Brčić, and Nikica Hlupić. 2018. Explainable artificial intelligence: A survey. Paper presented at the 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, May 21–25. [Google Scholar]

- Du, Mengnan, Ninghao Liu, and Xia Hu. 2019. Techniques for interpretable machine learning. Communications of the ACM 63: 68–77. [Google Scholar] [CrossRef]

- Duval, Francis, and Mathieu Pigeon. 2019. Individual loss reserving using a gradient boosting-based approach. Risks 7: 79. [Google Scholar]

- Eckert, Theresa, and Stefan Hüsig. 2021. Innovation portfolio management: A systematic review and research agenda in regards to digital service innovations. Management Review Quarterly 72: 187–230. [Google Scholar] [CrossRef]

- EIOPA. 2021. Artificial Intelligence Governance Principles: Towards Ethical and Trustworthy Artificial Intelligence in the European Insurance Sector. Luxembourg: European Insurance and Occupational Pensions Authority (EIOPA). [Google Scholar]

- Eling, Martin, Davide Nuessle, and Julian Staubli. 2021. The impact of artificial intelligence along the insurance value chain and on the insurability of risks. The Geneva Papers on Risk and Insurance-Issues and Practice 47: 205–41. [Google Scholar] [CrossRef]

- EU. 2016. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 27 June 2020).

- Fang, Kuangnan, Yefei Jiang, and Malin Song. 2016. Customer profitability forecasting using Big Data analytics: A case study of the insurance industry. Computers & Industrial Engineering 101: 554–64. [Google Scholar]

- Felzmann, Heike, Eduard Fosch Villaronga, Christoph Lutz, and Aurelia Tamò-Larrieux. 2019. Transparency you can trust: Transparency requirements for artificial intelligence between legal norms and contextual concerns. Big Data & Society 6: 2053951719860542. [Google Scholar]

- Ferguson, Niall. 2008. The Ascent of Money: A Financial History of the World. London: Penguin. [Google Scholar]

- Floridi, Luciano, Josh Cowls, Monica Beltrametti, Raja Chatila, Patrice Chazerand, Virginia Dignum, Christoph Luetge, Robert Madelin, Ugo Pagallo, and Francesca Rossi. 2018. AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines 28: 689–707. [Google Scholar] [CrossRef]

- Ford, Martin. 2018. Architects of Intelligence: The Truth about AI from the People Building it. Birmingham: Packt Publishing Ltd. [Google Scholar]

- Fox, Maria, Derek Long, and Daniele Magazzeni. 2017. Explainable planning. arXiv arXiv:1709.10256. [Google Scholar]

- Frees, Edward W., and Emiliano A. Valdez. 2008. Hierarchical insurance claims modeling. Journal of the American Statistical Association 103: 1457–69. [Google Scholar] [CrossRef]

- Freitas, Alex A. 2014. Comprehensible classification models: A position paper. ACM SIGKDD Explorations Newsletter 15: 1–10. [Google Scholar] [CrossRef]

- Gabrielli, Andrea. 2021. An individual claims reserving model for reported claims. European Actuarial Journal 11: 541–77. [Google Scholar] [CrossRef]

- Gade, Krishna, Sahin Cem Geyik, Krishnaram Kenthapadi, Varun Mithal, and Ankur Taly. 2019. Explainable AI in industry. Paper presented at the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, August 4–8. [Google Scholar]

- Gan, Guojun, and Emiliano A. Valdez. 2017. Valuation of large variable annuity portfolios: Monte Carlo simulation and synthetic datasets. Dependence Modeling 5: 354–74. [Google Scholar] [CrossRef]

- Gan, Guojun, and Jimmy Xiangji Huang. 2017. A data mining framework for valuing large portfolios of variable annuities. Paper presented at the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Halifax, NS, Canada, August 13–17. [Google Scholar]

- Gan, Guojun. 2013. Application of data clustering and machine learning in variable annuity valuation. Insurance: Mathematics and Economics 53: 795–801. [Google Scholar]

- Ghani, Rayid, and Mohit Kumar. 2011. Interactive learning for efficiently detecting errors in insurance claims. Paper presented at the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, August 21–24. [Google Scholar]

- Ghorbani, Ali, and Sara Farzai. 2018. Fraud detection in automobile insurance using a data mining based approach. International Journal of Mechatronics, Elektrical and Computer Technology (IJMEC) 8: 3764–71. [Google Scholar]

- GlobalData. 2021. Artificial Intelligence (AI) in Insurance—Thematic Research. London: GlobalData. [Google Scholar]

- Goddard, Michelle. 2017. The EU General Data Protection Regulation (GDPR): European regulation that has a global impact. International Journal of Market Research 59: 703–5. [Google Scholar] [CrossRef]

- Goodman, Bryce, and Seth Flaxman. 2017. European Union regulations on algorithmic decision-making and a “right to explanation”. AI Magazine 38: 50–57. [Google Scholar] [CrossRef]

- Gramegna, Alex, and Paolo Giudici. 2020. Why to Buy Insurance? An Explainable Artificial Intelligence Approach. Risks 8: 137. [Google Scholar] [CrossRef]

- Gramespacher, Thomas, and Jan-Alexander Posth. 2021. Employing explainable AI to optimize the return target function of a loan portfolio. Frontiers in Artificial Intelligence 4: 693022. [Google Scholar] [CrossRef]

- Grant, Eric. 2012. The Social and Economic Value of Insurance. Geneva: The Geneva Association (The International Association for the Study of Insurance Economics). Available online: https://www.genevaassociation.org/sites/default/files/research-topics-docu-ment-type/pdf_public/ga2012-the_social_and_economic_value_of_insurance.pdf (accessed on 3 July 2020).

- Grize, Yves-Laurent, Wolfram Fischer, and Christian Lützelschwab. 2020. Machine learning applications in nonlife insurance. Applied Stochastic Models in Business and Industry 36: 523–37. [Google Scholar] [CrossRef]

- Guelman, Leo. 2012. Gradient boosting trees for auto insurance loss cost modeling and prediction. Expert Systems with Applications 39: 3659–67. [Google Scholar] [CrossRef]

- Gweon, Hyukjun, Shu Li, and Rogemar Mamon. 2020. An effective bias-corrected bagging method for the valuation of large variable annuity portfolios. ASTIN Bulletin: The Journal of the IAA 50: 853–71. [Google Scholar] [CrossRef]

- Hadji Misheva, Branka, Ali Hirsa, Joerg Osterrieder, Onkar Kulkarni, and Stephen Fung Lin. 2021. Explainable AI in Credit Risk Management. Credit Risk Management, March 1. [Google Scholar]

- Hawley, Katherine. 2014. Trust, distrust and commitment. Noûs 48: 1–20. [Google Scholar] [CrossRef]

- Henckaerts, Roel, Katrien Antonio, and Marie-Pier Côté. 2020. Model-Agnostic Interpretable and Data-driven suRRogates suited for highly regulated industries. Stat 1050: 14. [Google Scholar]

- Henckaerts, Roel, Marie-Pier Côté, Katrien Antonio, and Roel Verbelen. 2021. Boosting insights in insurance tariff plans with tree-based machine learning methods. North American Actuarial Journal 25: 255–85. [Google Scholar] [CrossRef]

- Herland, Matthew, Taghi M. Khoshgoftaar, and Richard A. Bauder. 2018. Big data fraud detection using multiple medicare data sources. Journal of Big Data 5: 1–21. [Google Scholar] [CrossRef]

- Hinton, Geoffrey, Oriol Vinyals, and Jeff Dean. 2015. Distilling the knowledge in a neural network. arXiv arXiv:1503.02531. [Google Scholar]

- Hoffman, Robert R. 2017. A taxonomy of emergent trusting in the human–machine relationship. In Cognitive Systems Engineering: The Future for a Changing World. Boca Raton: CRC Press, pp. 137–64. [Google Scholar]

- Hoffman, Robert R., Shane T. Mueller, Gary Klein, and Jordan Litman. 2018. Metrics for explainable AI: Challenges and prospects. arXiv arXiv:1812.04608. [Google Scholar]

- Hollis, Aidan, and Jason Strauss. 2007. Privacy, Driving Data and Automobile Insurance: An Economic Analysis. Munich: University Library of Munich. [Google Scholar]

- Honegger, Milo. 2018. Shedding light on black box machine learning algorithms: Development of an axiomatic framework to assess the quality of methods that explain individual predictions. arXiv arXiv:1808.05054. [Google Scholar]

- Huang, Yifan, and Shengwang Meng. 2019. Automobile insurance classification ratemaking based on telematics driving data. Decision Support Systems 127: 113156. [Google Scholar] [CrossRef]

- Ibiwoye, Ade, Olawale Olaniyi Ajibola, and Ashim Babatunde Sogunro. 2012. Artificial neural network model for predicting insurance insolvency. International Journal of Management and Business Research 2: 59–68. [Google Scholar]

- Islam, Sheikh Rabiul, William Eberle, and Sheikh K. Ghafoor. 2020. Towards quantification of explainability in explainable artificial intelligence methods. Paper presented at the Thirty-Third International Flairs Conference, North Miami Beach, FL, USA, May 17–20. [Google Scholar]

- Jacovi, Alon, Ana Marasović, Tim Miller, and Yoav Goldberg. 2021. Formalizing trust in artificial intelligence: Prerequisites, causes and goals of human trust in AI. Paper presented at the 2021 ACM Conference on Fairness, Accountability, and Transparency, Toronto, ON, Canada, March 3–10. [Google Scholar]

- Jain, Rachna, Jafar A. Alzubi, Nikita Jain, and Pawan Joshi. 2019. Assessing risk in life insurance using ensemble learning. Journal of Intelligent & Fuzzy Systems 37: 2969–80. [Google Scholar]

- Jeong, Himchan, Guojun Gan, and Emiliano A. Valdez. 2018. Association rules for understanding policyholder lapses. Risks 6: 69. [Google Scholar] [CrossRef]

- Jiang, Xinxin, Shirui Pan, Guodong Long, Fei Xiong, Jing Jiang, and Chengqi Zhang. 2018. Cost-sensitive parallel learning framework for insurance intelligence operation. IEEE Transactions on Industrial Electronics 66: 9713–23. [Google Scholar] [CrossRef]

- Jin, Zhuo, Hailiang Yang, and George Yin. 2021. A hybrid deep learning method for optimal insurance strategies: Algorithms and convergence analysis. Insurance: Mathematics and Economics 96: 262–75. [Google Scholar] [CrossRef]

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2019. Medicare fraud detection using neural networks. Journal of Big Data 6: 1–35. [Google Scholar] [CrossRef]

- Joram, Mutai K., Bii K. Harrison, and Kiplang’at N. Joseph. 2017. A knowledge-based system for life insurance underwriting. International Journal of Information Technology and Computer Science 3: 40–49. [Google Scholar] [CrossRef][Green Version]

- Josephson, John R., and Susan G. Josephson. 1996. Abductive Inference: Computation, Philosophy, Technology. Cambridge: Cambridge University Press. [Google Scholar]

- Karamizadeh, Faramarz, and Seyed Ahad Zolfagharifar. 2016. Using the clustering algorithms and rule-based of data mining to identify affecting factors in the profit and loss of third party insurance, insurance company auto. Indian Journal of Science and Technology 9: 1–9. [Google Scholar] [CrossRef]

- Kašćelan, Vladimir, Ljiljana Kašćelan, and Milijana Novović Burić. 2016. A nonparametric data mining approach for risk prediction in car insurance: A case study from the Montenegrin market. Economic Research-Ekonomska Istraživanja 29: 545–58. [Google Scholar] [CrossRef]

- Keller, Benno, Martin Eling, Hato Schmeiser, Markus Christen, and Michele Loi. 2018. Big Data and Insurance: Implications for Innovation, Competition and Privacy. Geneva: Geneva Association-International Association for the Study of Insurance. [Google Scholar]

- Kelley, Kevin H., Lisa M. Fontanetta, Mark Heintzman, and Nikki Pereira. 2018. Artificial intelligence: Implications for social inflation and insurance. Risk Management and Insurance Review 21: 373–87. [Google Scholar] [CrossRef]

- Khodairy, Moayed A., and Gibrael Abosamra. 2021. Driving Behavior Classification Based on Oversampled Signals of Smartphone Embedded Sensors Using an Optimized Stacked-LSTM Neural Networks. IEEE Access 9: 4957–72. [Google Scholar] [CrossRef]

- Khuong, Mai Ngoc, and Tran Manh Tuan. 2016. A new neuro-fuzzy inference system for insurance forecasting. Paper presented at the International Conference on Advances in Information and Communication Technology, Bikaner, India, August 12–13. [Google Scholar]

- Kiermayer, Mark, and Christian Weiß. 2021. Grouping of contracts in insurance using neural networks. Scandinavian Actuarial Journal 2021: 295–322. [Google Scholar] [CrossRef]

- Kieu, Tung, Bin Yang, Chenjuan Guo, and Christian S. Jensen. 2018. Distinguishing trajectories from different drivers using incompletely labeled trajectories. Paper presented at the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, October 22–26. [Google Scholar]

- Kim, Hyong, and Errol Gardner. 2015. The Science of Winning in Financial Services-Competing on Analytics: Opportunities to Unlock the Power of Data. Journal of Financial Perspectives 3: 1–34. [Google Scholar]

- Kopitar, Leon, Leona Cilar, Primoz Kocbek, and Gregor Stiglic. 2019. Local vs. global interpretability of machine learning models in type 2 diabetes mellitus screening. In Artificial Intelligence in Medicine: Knowledge Representation and Transparent and Explainable Systems. Berlin: Springer, pp. 108–19. [Google Scholar]

- Kose, Ilker, Mehmet Gokturk, and Kemal Kilic. 2015. An interactive machine-learning-based electronic fraud and abuse detection system in healthcare insurance. Applied Soft Computing 36: 283–99. [Google Scholar] [CrossRef]

- Koster, Harold. 2020. Towards better implementation of the European Union’s anti-money laundering and countering the financing of terrorism framework. Journal of Money Laundering Control 23: 379–86. [Google Scholar] [CrossRef]

- Koster, Olivier, Ruud Kosman, and Joost Visser. 2021. A Checklist for Explainable AI in the Insurance Domain. Paper presented at the International Conference on the Quality of Information and Communications Technology, Algarve, Portugal, September 8–11. [Google Scholar]

- Kowshalya, G., and M. Nandhini. 2018. Predicting fraudulent claims in automobile insurance. Paper presented at the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, April 20–21. [Google Scholar]

- Krafft, Peaks, Meg Young, Michael Katell, Karen Huang, and Ghislain Bugingo. 2020. Defining AI in policy versus practice. Paper presented at the AAAI/ACM Conference on AI, Ethics, and Society, New York, NY, USA, February 7–8. [Google Scholar]

- Kumar, Akshi, Shubham Dikshit, and Victor Hugo C. Albuquerque. 2021. Explainable Artificial Intelligence for Sarcasm Detection in Dialogues. Wireless Communications and Mobile Computing 2021: 2939334. [Google Scholar] [CrossRef]

- Kumar, Mohit, Rayid Ghani, and Zhu-Song Mei. 2010. Data mining to predict and prevent errors in health insurance claims processing. Paper presented at the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Washington, DC, USA, July 24–28. [Google Scholar]

- Kuo, Kevin, and Daniel Lupton. 2020. Towards Explainability of Machine Learning Models in Insurance Pricing. arXiv arXiv:2003.10674. [Google Scholar]

- Kute, Dattatray V., Biswajeet Pradhan, Nagesh Shukla, and Abdullah Alamri. 2021. Deep learning and explainable artificial intelligence techniques applied for detecting money laundering—A critical review. IEEE Access 9: 82300–17. [Google Scholar] [CrossRef]

- Kwak, Byung Il, Mee Lan Han, and Huy Kang Kim. 2020. Driver Identification Based on Wavelet Transform Using Driving Patterns. IEEE Transactions on Industrial Informatics 17: 2400–10. [Google Scholar] [CrossRef]

- Kyu, Phyu Mar, and Kuntpong Woraratpanya. 2020. Car Damage Detection and Classification. Paper presented at the 11th International Conference on Advances in Information Technology, Bangkok, Thailand, July 1–3. [Google Scholar]

- Larivière, Bart, and Dirk Van den Poel. 2005. Predicting customer retention and profitability by using random forests and regression forests techniques. Expert Systems with Applications 29: 472–84. [Google Scholar] [CrossRef]

- Lau, Lucas, and Arun Tripathi. 2011. Mine your business—A novel application of association rules for insurance claims analytics. In CAS E-Forum. Arlington: Casualty Actuarial Society. [Google Scholar]

- Lee, Gee Y., Scott Manski, and Tapabrata Maiti. 2020. Actuarial applications of word embedding models. ASTIN Bulletin: The Journal of the IAA 50: 1–24. [Google Scholar] [CrossRef]

- Li, Yaqi, Chun Yan, Wei Liu, and Maozhen Li. 2018. A principle component analysis-based random forest with the potential nearest neighbor method for automobile insurance fraud identification. Applied Soft Computing 70: 1000–9. [Google Scholar] [CrossRef]

- Liao, Shu-Hsien, Pei-Hui Chu, and Pei-Yuan Hsiao. 2012. Data mining techniques and applications–A decade review from 2000 to 2011. Expert Systems with Applications 39: 11303–11. [Google Scholar] [CrossRef]

- Lin, Chaohsin. 2009. Using neural networks as a support tool in the decision making for insurance industry. Expert Systems with Applications 36: 6914–17. [Google Scholar] [CrossRef]

- Lin, Justin, and Ha-Joon Chang. 2009. Should Industrial Policy in developing countries conform to comparative advantage or defy it? A debate between Justin Lin and Ha-Joon Chang. Development Policy Review 27: 483–502. [Google Scholar] [CrossRef]

- Lin, Weiwei, Ziming Wu, Longxin Lin, Angzhan Wen, and Jin Li. 2017. An ensemble random forest algorithm for insurance big data analysis. IEEE Access 5: 16568–75. [Google Scholar] [CrossRef]

- Lipton, Zachary C. 2018. The Mythos of Model Interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 16: 31–57. [Google Scholar] [CrossRef]

- Liu, Jenn-Long, and Chien-Liang Chen. 2012. Application of evolutionary data mining algorithms to insurance fraud prediction. Paper presented at the 4th International Conference on Machine Learning and Computing IPCSIT, Hong Kong, China, March 10–11. [Google Scholar]

- Liu, Qing, David Pitt, and Xueyuan Wu. 2014. On the prediction of claim duration for income protection insurance policyholders. Annals of Actuarial Science 8: 42–62. [Google Scholar] [CrossRef]

- Lopez, Olivier, and Xavier Milhaud. 2021. Individual reserving and nonparametric estimation of claim amounts subject to large reporting delays. Scandinavian Actuarial Journal 2021: 34–53. [Google Scholar] [CrossRef]

- Lou, Yin, Rich Caruana, Johannes Gehrke, and Giles Hooker. 2013. Accurate intelligible models with pairwise interactions. Paper presented at the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, August 11–14. [Google Scholar]

- Lundberg, Scott M, and Su-In Lee. 2017. A unified approach to interpreting model predictions. Paper presented at the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, December 4–9. [Google Scholar]

- Lundberg, Scott M, Gabriel Erion, Hugh Chen, Alex DeGrave, Jordan M Prutkin, Bala Nair, Ronit Katz, Jonathan Himmelfarb, Nisha Bansal, and Su-In Lee. 2020. From local explanations to global understanding with explainable AI for trees. Nature Machine Intelligence 2: 56–67. [Google Scholar] [CrossRef]

- Ma, Yu-Luen, Xiaoyu Zhu, Xianbiao Hu, and Yi-Chang Chiu. 2018. The use of context-sensitive insurance telematics data in auto insurance rate making. Transportation Research Part A: Policy and Practice 113: 243–58. [Google Scholar] [CrossRef]

- Mascharka, David, Philip Tran, Ryan Soklaski, and Arjun Majumdar. 2018. Transparency by design: Closing the gap between performance and interpretability in visual reasoning. Paper presented at the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, June 18–23. [Google Scholar]

- Matloob, Irum, Shoab Ahmed Khan, and Habib Ur Rahman. 2020. Sequence Mining and Prediction-Based Healthcare Fraud Detection Methodology. IEEE Access 8: 143256–73. [Google Scholar] [CrossRef]

- Mayer, Roger C., James H. Davis, and F. David Schoorman. 1995. An integrative model of organizational trust. Academy of Management Review 20: 709–34. [Google Scholar] [CrossRef]

- Maynard, Trevor, Luca Baldassarre, Yves-Alexandre de Montjoye, Liz McFall, and María Óskarsdóttir. 2022. AI: Coming of age? Annals of Actuarial Science 16: 1–5. [Google Scholar] [CrossRef]

- McFall, Liz, Gert Meyers, and Ine Van Hoyweghen. 2020. The personalisation of insurance: Data, behaviour and innovation. Big Data & Society 7: 2053951720973707. [Google Scholar]

- McKnight, D. Harrison, Vivek Choudhury, and Charles Kacmar. 2002. Developing and validating trust measures for e-commerce: An integrative typology. Information Systems Research 13: 334–59. [Google Scholar] [CrossRef]

- Mehdiyev, Nijat, Constantin Houy, Oliver Gutermuth, Lea Mayer, and Peter Fettke. 2021. Explainable Artificial Intelligence (XAI) Supporting Public Administration Processes–On the Potential of XAI in Tax Audit Processes. Cham: Springer. [Google Scholar]

- Meyerson, Debra, Karl E. Weick, and Roderick M. Kramer. 1996. Swift trust and temporary groups. Trust in Organizations: Frontiers of Theory and Research 166: 195. [Google Scholar]

- Mizgier, Kamil J., Otto Kocsis, and Stephan M. Wagner. 2018. Zurich Insurance uses data analytics to leverage the BI insurance proposition. Interfaces 48: 94–107. [Google Scholar] [CrossRef]

- Mohamadloo, Azam, Ali Ramezankhani, Saeed Zarein-Dolab, Jamshid Salamzadeh, and Fatemeh Mohamadloo. 2017. A systematic review of main factors leading to irrational prescription of medicine. Iranian Journal of Psychiatry and Behavioral Sciences 11: e10242. [Google Scholar] [CrossRef]

- Molnar, Christoph. 2019. Interpretable Machine Learning. Morrisville: Lulu Press. [Google Scholar]

- Moradi, Milad, and Matthias Samwald. 2021. Post-hoc explanation of black-box classifiers using confident itemsets. Expert Systems with Applications 165: 113941. [Google Scholar] [CrossRef]

- Morik, Katharina, Christian Hüppej, and Klaus Unterstein. 2002. End-user access to multiple sources-Incorporating knowledge discovery into knowledge management. Paper presented at the International Conference on Practical Aspects of Knowledge Management, Vienna, Austria, December 2–3. [Google Scholar]

- Motoda, Hiroshi, and Huan Liu. 2002. Feature selection, extraction and construction. Communication of IICM (Institute of Information and Computing Machinery, Taiwan) 5: 2. [Google Scholar]

- Mueller, Shane T., Robert R. Hoffman, William Clancey, Abigail Emrey, and Gary Klein. 2019. Explanation in Human-AI Systems: A literature meta-review, synopsis of key ideas and publications, and bibliography for Explainable AI. arXiv arXiv:1902.01876. [Google Scholar]

- Mullins, Martin, Christopher P. Holland, and Martin Cunneen. 2021. Creating ethics guidelines for artificial intelligence and big data analytics customers: The case of the consumer European insurance market. Patterns 2: 100362. [Google Scholar] [CrossRef]

- NallamReddy, Sundari, Samarandra Behera, Sanjeev Karadagi, and A Desik. 2014. Application of multiple random centroid (MRC) based k-means clustering algorithm in insurance—A review article. Operations Research and Applications: An International Journal 1: 15–21. [Google Scholar]

- Naylor, Michael. 2017. Insurance Transformed: Technological Disruption. Berlin: Springer. [Google Scholar]

- Neumann, Łukasz, Robert M. Nowak, Rafał Okuniewski, and Paweł Wawrzyński. 2019. Machine Learning-Based Predictions of Customers’ Decisions in Car Insurance. Applied Artificial Intelligence 33: 817–28. [Google Scholar] [CrossRef]

- Ngai, Eric W. T., Yong Hu, Yiu Hing Wong, Yijun Chen, and Xin Sun. 2011. The application of data mining techniques in financial fraud detection: A classification framework and an academic review of literature. Decision Support Systems 50: 559–69. [Google Scholar] [CrossRef]

- Ntoutsi, Eirini, Pavlos Fafalios, Ujwal Gadiraju, Vasileios Iosifidis, Wolfgang Nejdl, Maria-Esther Vidal, Salvatore Ruggieri, Franco Turini, Symeon Papadopoulos, and Emmanouil Krasanakis. 2020. Bias in data-driven artificial intelligence systems—An introductory survey. Wiley Interdisciplinary Reviews: Data Mining and Knowledge Discovery 10: e1356. [Google Scholar] [CrossRef]

- OECD, Organisation for Economic Co-operation and Development. 2020. The Impact of Big Data and Artificial Intelligence (AI) in the Insurance Sector. Paris: OECD. Available online: https://www.oecd.org/finance/Impact-Big-Data-AI-in-the-Insurance-Sector.pdf (accessed on 1 September 2021).

- Page, Matthew J., and David Moher. 2017. Evaluations of the uptake and impact of the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) Statement and extensions: A scoping review. Systematic Reviews 6: 1–14. [Google Scholar] [CrossRef] [PubMed]

- Palacio, Sebastian, Adriano Lucieri, Mohsin Munir, Jörn Hees, Sheraz Ahmed, and Andreas Dengel. 2021. XAI Handbook: Towards a Unified Framework for Explainable AI. arXiv arXiv:2105.06677. [Google Scholar]

- Paruchuri, Harish. 2020. The Impact of Machine Learning on the Future of Insurance Industry. American Journal of Trade and Policy 7: 85–90. [Google Scholar] [CrossRef]
