Machine Learning-Based Prediction of Controlled Variables of APC Systems Using Time-Series Data in the Petrochemical Industry

Abstract

1. Introduction

2. Background

2.1. APC System

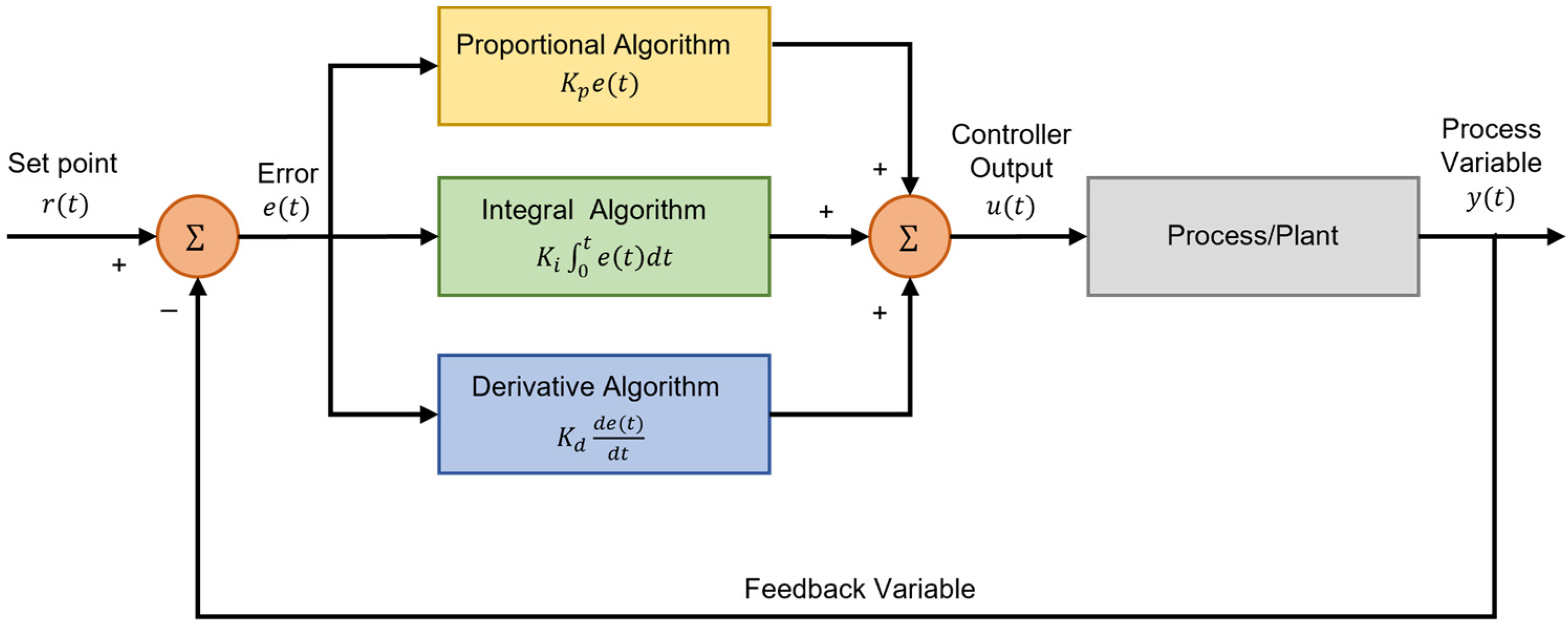

2.1.1. PID System

2.1.2. APC System

2.2. Machine Learning Algorithm

2.2.1. Random Forest

2.2.2. Support Vector Regression

2.2.3. Neural Network

2.2.4. K-Nearest Neighbor

2.2.5. XGBoost

2.2.6. Benefits of Machine Learning Algorithms

3. Proposed Idea

3.1. Overall Architecture

3.2. Time-Series Data and Feature Engineering Method

3.3. Hyper-Parameter Grid

- Maximum number of iterations: 10,000, Activation function: ReLU, Hidden layers: (10)

- Maximum number of iterations: 10,000, Activation function: ReLU, Hidden layers: (10, 10)

- Maximum number of iterations: 10,000, Activation function: ReLU, Hidden layers: (10, 10, 10)

- Maximum number of iterations: 10,000, Activation function: ReLU, Hidden layers: (10, 10, 10, 10)

3.4. Discussion

4. Experimental Results and Simulation Experiments

4.1. Experiment Environment

4.2. Datasets

4.2.1. Experiment #1 (Plant P)

4.2.2. Experiment #2 (Plant N)

4.2.3. Experiment #3 (Plant S)

4.3. Performance Metrics

4.3.1. Mean Absolute Percentage Error (MAPE)

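$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

- $n$ represents the number of observations or data points.

- $y_i$ is the actual value of the variable you want to predict.

- $\hat{y}_i$ is the variable's predicted value.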
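As a minimal sketch of the metric described above, the following Python function computes MAPE from paired actual and predicted values. The function name `mape`, the scaling to a percentage, and the decision to reject zero actual values are assumptions made for illustration, not details taken from the experiments.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, returned as a percentage."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        # The ratio |error / actual| is undefined when an actual value is zero.
        raise ValueError("actual values must be non-zero")
    total = sum(abs((a - p) / a) for a, p in zip(actual, predicted))
    return 100.0 * total / len(actual)


# Hypothetical values, chosen only to illustrate the call.
example = mape([100.0, 200.0], [110.0, 180.0])
```

Because each error is divided by the actual value, MAPE is scale-free, which makes it convenient for comparing controlled variables that have different units or ranges.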

4.3.2. R-Squared
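A minimal sketch of this metric, assuming the common definition of the coefficient of determination as one minus the ratio of the residual sum of squares to the total sum of squares. The function name `r_squared` is hypothetical.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        # A constant target has no variance to explain.
        raise ValueError("actual values must not all be equal")
    return 1.0 - ss_res / ss_tot


# Hypothetical values, chosen only to illustrate the call.
example = r_squared([1.0, 2.0, 3.0], [1.5, 2.0, 2.5])
```

A value of 1 means the predictions match the actual values exactly, while a value of 0 means the model does no better than always predicting the mean.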

4.4. Results and Analysis

4.4.1. Experiment #1

- Minimum value of a CV

- Mean value of a CV

- Maximum value of a CV

- Maximum value of

- Previous value of

4.4.2. Experiment #2

- Minimum value of CV

- Mean value of CV

- Maximum value of CV

- Previous value of

- Standard deviation of CV

- Standard deviation of

- Average amount of change of

- Mean value of

- Maximum value of

4.4.3. Experiment #3

- Minimum value of CV

- Minimum value of

- Previous value of =

- Minimum value of

- Previous value of =

- Mean value of

- Maximum value of

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Feature | Equation for Variable |
|---|---|
| Mean | |
| Standard deviation | |
| Skew | |
| Kurt | |
| Maximum | |
| Minimum | |
| Average Change | |
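The features listed in the table can be sketched in Python as follows. The exact estimators are not specified here, so the choices below are assumptions: population (divide-by-n) moments, non-excess kurtosis, and "average change" taken as the mean of successive differences within the window. The function name `window_features` and the dictionary keys are hypothetical.

```python
import math


def window_features(window):
    """Summary statistics for one window of consecutive samples."""
    n = len(window)
    if n == 0:
        raise ValueError("window must not be empty")
    mean = sum(window) / n
    # Population central moments (assumed estimator).
    m2 = sum((x - mean) ** 2 for x in window) / n
    m3 = sum((x - mean) ** 3 for x in window) / n
    m4 = sum((x - mean) ** 4 for x in window) / n
    std = math.sqrt(m2)
    # Skewness and kurtosis are undefined for a constant window; report 0.0.
    skew = m3 / std ** 3 if std > 0 else 0.0
    kurt = m4 / m2 ** 2 if std > 0 else 0.0
    # Average change: mean of successive differences (assumed definition).
    diffs = [b - a for a, b in zip(window, window[1:])]
    avg_change = sum(diffs) / len(diffs) if diffs else 0.0
    return {
        "mean": mean,
        "std": std,
        "skew": skew,
        "kurt": kurt,
        "max": max(window),
        "min": min(window),
        "avg_change": avg_change,
    }


# Hypothetical window of five samples.
features = window_features([1.0, 2.0, 3.0, 4.0, 5.0])
```

Applying the function to each variable over a sliding window of K samples would yield one feature vector per time step.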

| Machine Learning Model | Hyper-Parameter Grid |
|---|---|
| Neural Network (NN) | • Maximum iterations: 10,000 • Activation function: ReLU • Hidden layers: {(10), (10, 10), (10, 10, 10), (10, 10, 10, 10)} |
| Support Vector Regression (SVR) | • Maximum iterations: 10,000 • Kernel: {rbf, linear} • Penalty factor (C): {0.1, 1, 10} |
| Random Forest (RF) | • Decision tree max depths: {3, 5, 7, 9} • Fraction of features considered at each split: {0.6, 0.8, 1.0} |
| k-Nearest Neighbor (k-NN) | • Number of neighbors: {3, 5, 7, 9} |
| XGBoost (XGB) | • Learning rates: {0.05, 0.1, 0.15, 0.2} • Decision tree max depths: {3, 5, 7, 9} • Number of decision trees: {200, 400, 600, 800} |
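To make the size of the search space concrete, the grid in the table can be enumerated with a short Python sketch. The dictionary keys loosely follow scikit-learn and XGBoost parameter naming, but they are assumptions chosen for illustration, as are the names `GRIDS` and `expand`. No model is trained here.

```python
from itertools import product

# Hyper-parameter grid transcribed from the table; key names are hypothetical.
GRIDS = {
    "NN": {
        "hidden_layer_sizes": [(10,), (10, 10), (10, 10, 10), (10, 10, 10, 10)],
        "activation": ["relu"],
        "max_iter": [10000],
    },
    "SVR": {
        "kernel": ["rbf", "linear"],
        "C": [0.1, 1, 10],
        "max_iter": [10000],
    },
    "RF": {
        "max_depth": [3, 5, 7, 9],
        "max_features": [0.6, 0.8, 1.0],
    },
    "k-NN": {
        "n_neighbors": [3, 5, 7, 9],
    },
    "XGB": {
        "learning_rate": [0.05, 0.1, 0.15, 0.2],
        "max_depth": [3, 5, 7, 9],
        "n_estimators": [200, 400, 600, 800],
    },
}


def expand(grid):
    """Return every combination of a grid as a list of parameter dicts."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


# Number of candidate configurations per model.
counts = {model: len(expand(grid)) for model, grid in GRIDS.items()}
total = sum(counts.values())
```

Under these assumptions the grid holds 4, 6, 12, 4, and 64 configurations for NN, SVR, RF, k-NN, and XGB respectively, or 90 in total before any window size is considered.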

| Laptop Configuration | Specification |
|---|---|
| CPU | Core i5-8250 |
| RAM | 8 GB |
| Operating System | Windows 10 |

| Rank | Model | Parameter | K | R² | MAPE |
|---|---|---|---|---|---|
| 1 | RF | Max Depth: 9, Feature Percentage: 0.8 | 10 | 0.99949 | 0.228 |
| 2 | RF | Max Depth: 9, Feature Percentage: 1.0 | 10 | 0.99949 | 0.2299 |
| 3 | RF | Max Depth: 7, Feature Percentage: 0.8 | 10 | 0.99947 | 0.2281 |
| 4 | RF | Max Depth: 7, Feature Percentage: 1.0 | 10 | 0.99947 | 0.2304 |
| 5 | XGB | Learning Rate: 0.05, Max Depth: 3, Number of decision trees: 400 | 10 | 0.99947 | 0.2376 |
| 6 | XGB | Learning Rate: 0.05, Max Depth: 3, Number of decision trees: 400 | 30 | 0.99947 | 0.2437 |
| 7 | RF | Max Depth: 7, Feature Percentage: 1.0 | 30 | 0.99947 | 0.2309 |
| 8 | XGB | Learning Rate: 0.05, Max Depth: 3, Number of decision trees: 400 | 20 | 0.99946 | 0.2434 |
| 9 | XGB | Learning Rate: 0.05, Max Depth: 3, Number of decision trees: 600 | 10 | 0.99946 | 0.2421 |
| 10 | RF | Max Depth: 7, Feature Percentage: 0.8 | 30 | 0.99946 | 0.2303 |

| Rank | Model | Parameter | K | R² | MAPE |
|---|---|---|---|---|---|
| 1 | RF | Max Depth: 9, Feature Percentage: 1.0 | 20 | 0.99762 | 0.1941 |
| 2 | XGB | Learning Rate: 0.1, Max Depth: 7, Number of decision trees: 400 | 20 | 0.99762 | 0.1844 |
| 3 | XGB | Learning Rate: 0.1, Max Depth: 7, Number of decision trees: 800 | 20 | 0.99762 | 0.1844 |
| 4 | XGB | Learning Rate: 0.1, Max Depth: 7, Number of decision trees: 600 | 20 | 0.99762 | 0.1844 |
| 5 | XGB | Learning Rate: 0.05, Max Depth: 7, Number of decision trees: 600 | 30 | 0.99761 | 0.18 |
| 6 | XGB | Learning Rate: 0.05, Max Depth: 7, Number of decision trees: 800 | 30 | 0.99761 | 0.18 |
| 7 | XGB | Learning Rate: 0.05, Max Depth: 7, Number of decision trees: 400 | 30 | 0.99761 | 0.18 |
| 8 | XGB | Learning Rate: 0.05, Max Depth: 7, Number of decision trees: 200 | 30 | 0.99761 | 0.18 |
| 9 | XGB | Learning Rate: 0.1, Max Depth: 7, Number of decision trees: 200 | 20 | 0.9976 | 0.1852 |
| 10 | XGB | Learning Rate: 0.05, Max Depth: 9, Number of decision trees: 600 | 30 | 0.99759 | 0.1593 |

| Rank | Model | Parameter | K | R² | MAPE |
|---|---|---|---|---|---|
| 1 | NN | Hidden layer: (10,) | 20 | 0.96729 | 9.0095 |
| 2 | NN | Hidden layer: (10, 10) | 20 | 0.96472 | 7.5151 |
| 3 | NN | Hidden layer: (10, 10, 10, 10) | 10 | 0.96457 | 6.0797 |
| 4 | XGB | Learning Rate: 0.15, Max Depth: 3, Number of decision trees: 800 | 20 | 0.96104 | 8.0518 |
| 5 | SVR | C: 1, Kernel: Linear | 10 | 0.96085 | 9.7507 |
| 6 | XGB | Learning Rate: 0.15, Max Depth: 3, Number of decision trees: 600 | 20 | 0.96035 | 8.1201 |
| 7 | XGB | Learning Rate: 0.15, Max Depth: 3, Number of decision trees: 400 | 20 | 0.95979 | 8.1688 |
| 8 | XGB | Learning Rate: 0.1, Max Depth: 3, Number of decision trees: 600 | 10 | 0.95973 | 8.5396 |
| 9 | XGB | Learning Rate: 0.1, Max Depth: 3, Number of decision trees: 800 | 10 | 0.95932 | 8.6739 |
| 10 | XGB | Learning Rate: 0.1, Max Depth: 3, Number of decision trees: 400 | 10 | 0.95917 | 8.3721 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, M.; Yu, Y.; Cheon, Y.; Baek, S.; Kim, Y.; Kim, K.; Jung, H.; Lim, D.; Byun, H.; Lee, C.; et al. Machine Learning-Based Prediction of Controlled Variables of APC Systems Using Time-Series Data in the Petrochemical Industry. Processes 2023, 11, 2091. https://doi.org/10.3390/pr11072091
