Assembly Sequence Validation with Feasibility Testing for Augmented Reality Assisted Assembly Visualization

Abstract

1. Introduction

2. Assumptions Followed in the Assembly Sequence Validation Framework

- All of the components in the given product are rigid, i.e., there will not be any change in their geometrical shape or size during the assembly operation.

- Assembly fixtures or tools hold a part that is not physically stable once it is positioned in its assembled state.

- Friction between parts during the assembly operation is not considered when verifying stability.

- Only rectilinear paths, along the principal axes or along oblique orientations that combine them, are used for assembling parts.

- Rotation of a component during the assembly operation is not considered.

- Tool feasibility during the assembly operation is not considered; only the primary and secondary parts of the product are considered for assembly sequence validation.

3. Improved Geometric Feasibility Testing

3.1. Interference Testing Algorithm

| Algorithm 1: Interference Algorithm |
|---|
| Input: Parts, target, direction, magnitude |
| Output: True/False, overlap volume |
| geo_center, com, bb ← BoundingBox(target) /* centroid, center of mass, bounding-box dimensions */ |
| Create a thin cuboid Ctarget at com with dimensions bb.length × bb.width × 0.2 |
| Ctarget ← Intersection of Ctarget and target |
| Ctarget ← Extrusion of Ctarget along direction by magnitude units |
| for Pi in Parts do |
| if Pi is not equal to target then |
| overlap, volume ← check_overlap(Pi, Ctarget) |
| if overlap is True and volume > 0 then |
| return True, volume /* overlapping */ |
| end if /* volume = 0 means the parts are only touching */ |
| end if |
| end for |
| return False, 0 /* no part overlaps the swept volume */ |
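The interference test can be sketched with axis-aligned boxes standing in for the CAD solids. This is a simplified illustration under stated assumptions, not the authors' implementation: `Box`, `swept_box`, and `overlap_volume` are hypothetical helpers, the thin cross-section of the target is approximated by the target's whole box, and the enclosing swept box is exact only for axis-parallel directions (for an oblique direction it is conservative).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box (hypothetical stand-in for a CAD solid)."""
    lo: tuple  # (x, y, z) of the minimum corner
    hi: tuple  # (x, y, z) of the maximum corner


def swept_box(box, direction, magnitude):
    """Box enclosing `box` while it translates by direction * magnitude."""
    shift = [d * magnitude for d in direction]
    lo = tuple(min(l, l + s) for l, s in zip(box.lo, shift))
    hi = tuple(max(h, h + s) for h, s in zip(box.hi, shift))
    return Box(lo, hi)


def overlap_volume(a, b):
    """Volume shared by two boxes; 0.0 when they only touch or are apart."""
    volume = 1.0
    for axis in range(3):
        extent = min(a.hi[axis], b.hi[axis]) - max(a.lo[axis], b.lo[axis])
        if extent <= 0:
            return 0.0
        volume *= extent
    return volume


def interference(parts, target, direction, magnitude):
    """Return (True, volume) if the swept target overlaps another part."""
    swept = swept_box(target, direction, magnitude)
    for part in parts:
        if part is target:
            continue  # a part never interferes with itself
        volume = overlap_volume(part, swept)
        if volume > 0:
            return True, volume
    return False, 0.0


base = Box((0, 0, 0), (10, 10, 10))
peg = Box((2, 2, 20), (4, 4, 30))       # starts 10 units above the base
side = Box((20, 0, 0), (30, 10, 10))    # off to the side of the base

hit = interference([base, peg, side], peg, (0, 0, -1), 15)
miss = interference([base, peg, side], side, (0, 0, 1), 15)
```

Here `hit` reports an overlap because moving the peg 15 units downward drives it 5 units into the base, while `miss` reports none because the side block moves clear of both other parts. Boxes that share only a face yield zero volume and are treated as touching, not interfering.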

4. Assembly Sequence Validation and Automation

4.1. Assembly Sequence Validation and Automation Algorithm

| Algorithm 2: Assembly Sequence Validation Algorithm |
|---|
| Input: Pi → (xi, yi, zi) ∗ mi → si |
| Output: True or False |
| is_sequence_valid ← False |
| n ← number of parts |
| Parts ← Pi (i ∈ [0, n − 1]) |
| direction ← (xi, yi, zi) (i ∈ [0, n − 1]) |
| magnitude ← mi (i ∈ [0, n − 1]) |
| sequence ← si (i ∈ [0, n − 1]) |
| Sort Parts, with their directions and magnitudes, according to sequence in ascending order |
| for i ← 0 to n − 1 do |
| Set initial location of Parts[i] as direction[i] ∗ magnitude[i] |
| reversed_direction ← (direction[i].x ∗ −1, direction[i].y ∗ −1, direction[i].z ∗ −1) |
| Collision ← Interference(Parts, Parts[i], reversed_direction, magnitude[i]) |
| if Collision is True then |
| is_sequence_valid ← False |
| Stop |
| else |
| Move Parts[i] along reversed_direction by magnitude[i] |
| end if |
| end for |
| is_sequence_valid ← True |
| Stop |
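A minimal sketch of the validation loop follows, again with axis-aligned boxes in place of CAD geometry. Several things here are assumptions rather than details from the paper: the dictionary keys, the helper names `translate`, `sweep`, and `overlaps`, and in particular the choice to test each moving part only against parts that have already been placed, which is one way of making the outcome depend on the order.

```python
def translate(box, vec):
    """Move a box, given as (lo, hi) corner tuples, by the vector `vec`."""
    lo, hi = box
    return (tuple(l + v for l, v in zip(lo, vec)),
            tuple(h + v for h, v in zip(hi, vec)))


def sweep(box, vec):
    """Axis-aligned box enclosing `box` and its copy translated by `vec`."""
    moved = translate(box, vec)
    return (tuple(map(min, box[0], moved[0])),
            tuple(map(max, box[1], moved[1])))


def overlaps(a, b):
    """True only for positive-volume overlap; touching faces do not count."""
    return all(min(a[1][k], b[1][k]) - max(a[0][k], b[0][k]) > 0
               for k in range(3))


def validate_sequence(parts):
    """parts: dicts with 'box' (assembled pose), 'direction', 'magnitude', 'order'."""
    placed = []
    for part in sorted(parts, key=lambda p: p["order"]):
        offset = tuple(d * part["magnitude"] for d in part["direction"])
        start = translate(part["box"], offset)   # initial (disassembled) location
        reverse = tuple(-c for c in offset)      # reversed direction * magnitude
        path = sweep(start, reverse)             # volume covered during the move
        if any(overlaps(path, other) for other in placed):
            return False                         # collision: sequence is invalid
        placed.append(part["box"])               # part reaches its assembled pose
    return True


def demo_parts(pin_order, cover_order):
    """Base block, a pin standing on it, and a cover plate above the pin."""
    return [
        {"box": ((0, 0, 0), (10, 10, 10)), "direction": (0, 0, 0),
         "magnitude": 0, "order": 1},
        {"box": ((4, 4, 10), (6, 6, 20)), "direction": (0, 0, 1),
         "magnitude": 100, "order": pin_order},
        {"box": ((0, 0, 20), (10, 10, 25)), "direction": (0, 0, 1),
         "magnitude": 100, "order": cover_order},
    ]


valid = validate_sequence(demo_parts(pin_order=2, cover_order=3))
blocked = validate_sequence(demo_parts(pin_order=3, cover_order=2))
```

With the pin assembled before the cover, every move is collision-free and `valid` is `True`. Swapping the two makes the pin's downward path pass through the already placed cover, so `blocked` is `False`.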

4.2. Augmented Reality Visualization

- a. CAD models: The user supplies the CAD models of the assembled product to the Unity application. These CAD models serve as the basis for the validation and visualization process.

- b. Validation using API: The Unity application passes the input data, including the CAD models and the assembly sequence, to the assembly sequence validation (ASV) framework. The ASV algorithm processes the input and determines whether the assembly sequence is valid. Its output, returned from Blender, is a Boolean value (true/false) indicating the validity of the sequence, and is stored in a cloud database such as Firebase.

- c. Data exchange with Unity: After validation is completed, the input data and the validation result (true/false) are transferred back to the Unity application through an API (application programming interface).

- d. Visualization in augmented reality: If the assembly sequence is valid, Unity uses the validated input data to visualize the assembly on an augmented reality (AR) device such as HoloLens 2. The AR device overlays virtual components onto the real-world environment, allowing the user to see the virtual assembly in the context of the physical space. This visualization guides the user during the assembly process and clarifies the correct sequence and positioning of the components.
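The data exchange in steps b to d can be pictured as a single record that carries the inputs together with the Boolean result. The sketch below is illustrative only: the field names (`sequence_id`, `parts`, `is_sequence_valid`, `distance_mm`) and both functions are hypothetical, and no actual Firebase or Unity call is shown.

```python
import json


def build_validation_record(sequence_id, parts, is_valid):
    """Bundle the ASV inputs and the Boolean result into one JSON document."""
    return json.dumps({
        "sequence_id": sequence_id,       # hypothetical identifier
        "parts": parts,                   # direction, distance, and order per part
        "is_sequence_valid": is_valid,    # result of the validation step
    })


def steps_for_visualization(record_json):
    """Return part names in assembly order, or an empty list if invalid."""
    record = json.loads(record_json)
    if not record["is_sequence_valid"]:
        return []                         # nothing is visualized for a failed sequence
    ordered = sorted(record["parts"], key=lambda p: p["order"])
    return [p["name"] for p in ordered]


parts = [
    {"name": "Part 4", "direction": [1, 0, 0], "distance_mm": 100, "order": 2},
    {"name": "Part 1", "direction": [0, 0, 0], "distance_mm": 0, "order": 1},
]

accepted = steps_for_visualization(build_validation_record("demo", parts, True))
rejected = steps_for_visualization(build_validation_record("demo", parts, False))
```

Keeping the inputs and the result in one document means the visualization side needs a single read to decide whether to display anything and in which order; `accepted` lists Part 1 before Part 4, and `rejected` is empty.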

5. Case Studies

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


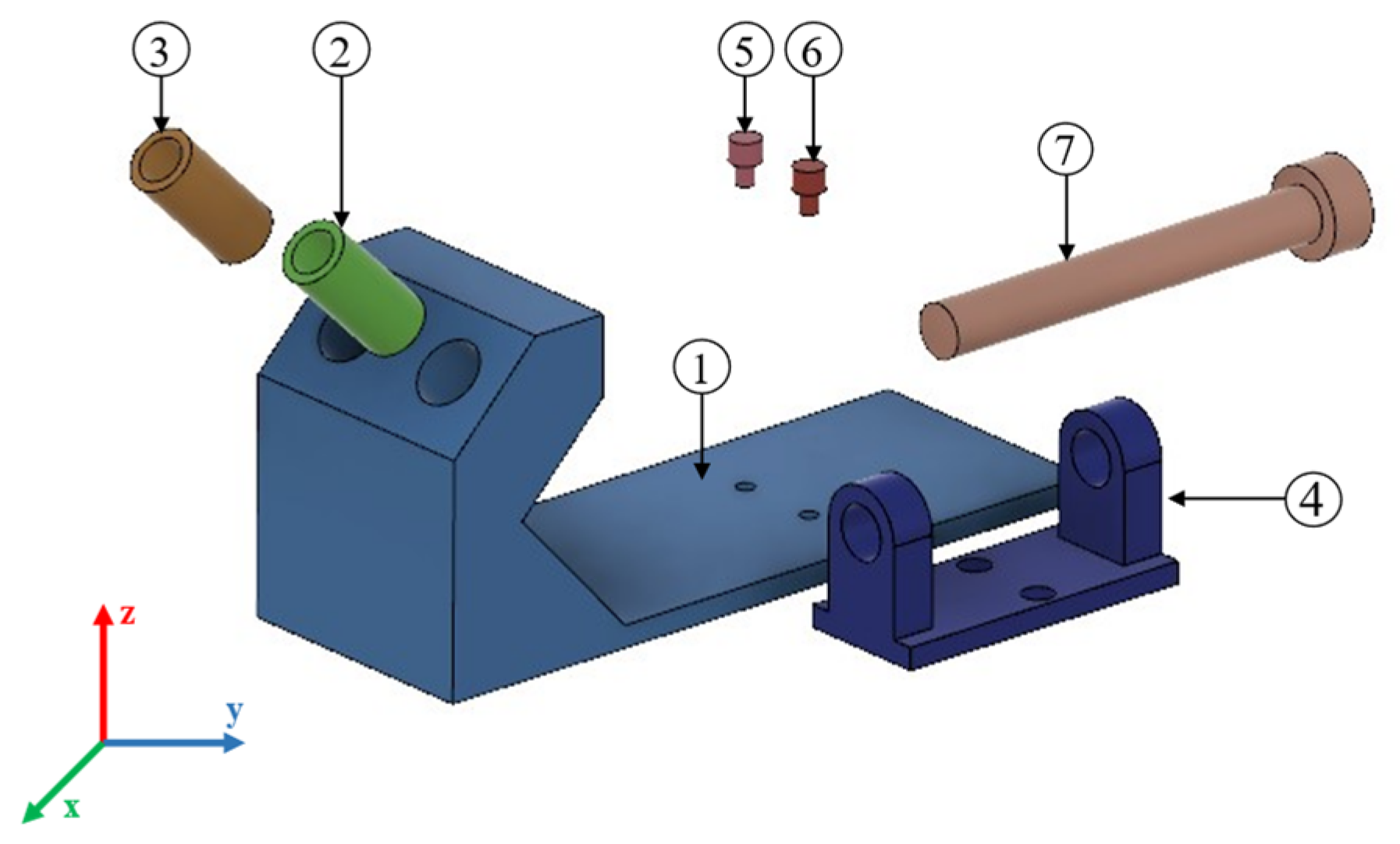

| Part Identity | Direction Vector | Distance for Assembly Operation (mm) | Assembly Order |
|---|---|---|---|
| Part 1 | [0, 0, 0] | [0] | 1 |
| Part 2 | [0, −0.5, 0.5] | [100] | 4 |
| Part 3 | [0, −0.5, 0.5] | [100] | 3 |
| Part 4 | [1, 0, 0] | [100] | 2 |
| Part 5 | [0, 0, 1] | [100] | 6 |
| Part 6 | [0, 0, 1] | [100] | 5 |
| Part 7 | [0, 1, 0] | [100] | 7 |
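As a worked illustration of how such input rows are consumed, the sketch below sorts the parts by assembly order and computes each part's initial offset as direction times distance, as in step 9 of Algorithm 2. The tuple layout and the `initial_offset` helper are assumptions for illustration. The direction vectors are used exactly as tabulated; whether they are normalized first is not stated in the text.

```python
# (part, direction vector, distance in mm, assembly order), copied from the table
rows = [
    ("Part 1", (0, 0, 0), 0, 1),
    ("Part 2", (0, -0.5, 0.5), 100, 4),
    ("Part 3", (0, -0.5, 0.5), 100, 3),
    ("Part 4", (1, 0, 0), 100, 2),
    ("Part 5", (0, 0, 1), 100, 6),
    ("Part 6", (0, 0, 1), 100, 5),
    ("Part 7", (0, 1, 0), 100, 7),
]


def initial_offset(direction, distance_mm):
    """Displacement from the assembled pose to the starting pose."""
    return tuple(component * distance_mm for component in direction)


# Parts listed in the order in which they are assembled
sequence = [name for name, _, _, order in sorted(rows, key=lambda r: r[3])]

# Starting offset of every part relative to its assembled position
offsets = {name: initial_offset(d, m) for name, d, m, _ in rows}
```

The resulting sequence is Part 1, Part 4, Part 3, Part 2, Part 6, Part 5, Part 7, and Part 2 starts at an offset of (0, −50, 50) mm from its assembled position.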

| Part Identity | Direction Vector | Distance for Assembly Operation (mm) | Assembly Order (Sequence 1) | Assembly Order (Sequence 2) |
|---|---|---|---|---|
| Part 1 | [0, 0, 0] | [0] | 1 | 1 |
| Part 2 | [0, −0.5, 0.5] | [100] | 2 | 2 |
| Part 3 | [0, −0.5, 0.5] | [100] | 3 | 3 |
| Part 4 | [1, 0, 0] | [100] | 4 | 7 |
| Part 5 | [0, 0, 1] | [100] | 5 | 4 |
| Part 6 | [0, 0, 1] | [100] | 6 | 5 |
| Part 7 | [0, 1, 0] | [100] | 7 | 6 |

| Part Identity | Direction Vector | Distance for Assembly Operation (mm) | Assembly Order (Sequence 1) | Assembly Order (Sequence 2) |
|---|---|---|---|---|
| Part 1 | [0, 0, 0] | [0] | 1 | 1 |
| Part 2 | [0, 1, 0] | [60] | 2 | 2 |
| Part 3 | [0, 1, 0] | [200] | 3 | 3 |
| Part 4 | [0, 1, 0] | [260] | 4 | 4 |
| Part 5 | [0, 1, 0] | [360] | 5 | 6 |
| Part 6 | [0, 1, 0] | [420] | 6 | 5 |
| Part 7 | [0, −1, 0] | [80] | 7 | 7 |
| Part 8 | [0, −1, 0] | [200] | 8 | 8 |
| Part 9 | [0, −1, 0] | [200] | 10 | 9 |
| Part 10 | [0, −1, 0] | [240] | 9 | 10 |
| Part 11 | [1, −0.5, 0] | [120] | 11 | 11 |

| Assembly | Assembly Orders | Result |
|---|---|---|
| Bench vice assembly | 1–2–3–4–5–6–7 | Valid sequence |
| Bench vice assembly | 1–2–3–7–4–5–6 | Failed due to interference between part 7 and part 4 during part 4 assembly. |
| Transmission assembly | 1–2–3–4–5–6–7–8–10–9–11 | Valid sequence |
| Transmission assembly | 1–2–3–4–6–5–7–8–9–10–11 | Failed due to interference between part 5 and part 6 during part 5 assembly. |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bahubalendruni, M.V.A.R.; Putta, B. Assembly Sequence Validation with Feasibility Testing for Augmented Reality Assisted Assembly Visualization. Processes 2023, 11, 2094. https://doi.org/10.3390/pr11072094
