YOLOv7-Based Anomaly Detection Using Intensity and NG Types in Labeling in Cosmetic Manufacturing Processes

Abstract

1. Introduction

2. Related Work

2.1. Plastic Manufacturing Process

2.2. Supervised Learning

2.3. YOLO

2.4. Anomaly Detection

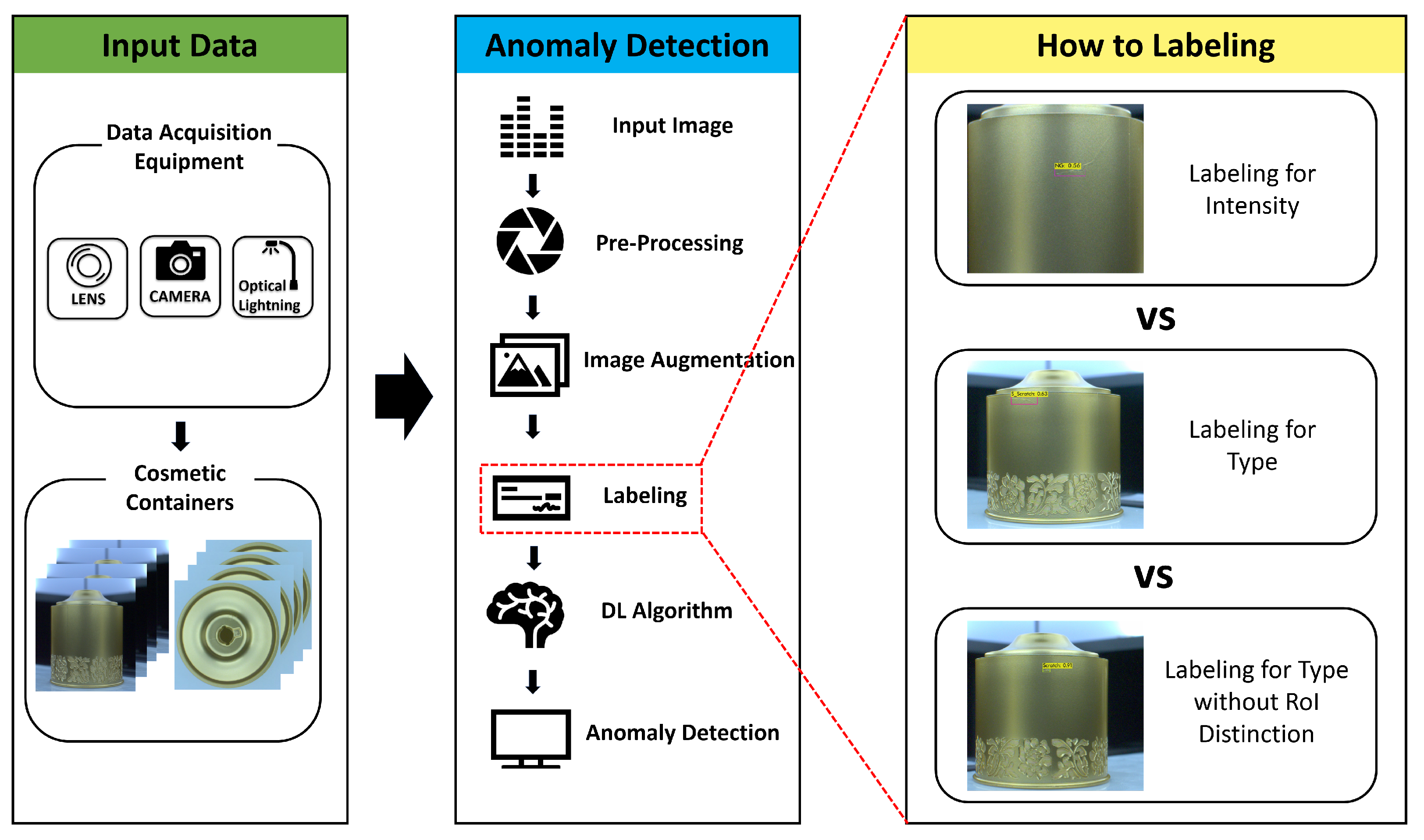

3. YOLOv7-Based Anomaly Detection Using Intensity and NG Types

3.1. System Architecture

3.1.1. Dataset

3.1.2. Meaningful Image Classification

3.1.3. Pre-Processing

- Fourier Transform: The Fourier transform decomposes a signal or image into a sum of fundamental signals and is easy to implement and interpret [35]. Because these fundamental signals are periodic and complex-valued, both the amplitude and the phase of each frequency component can be studied. The Fourier transform is a powerful mathematical tool for analyzing the frequency content of signals and functions. It is applied in many fields, where it gives insight into the fundamental properties of signals, facilitates filtering operations, and enables efficient data compression.

- Wavelet Transform: The wavelet transform is a mathematical tool for analyzing signals and data in both the time and frequency domains. It provides a localized representation of a signal by decomposing it into a series of basis functions called wavelets. Unlike the Fourier transform, which uses fixed sinusoidal basis functions, the wavelet transform uses basis functions that are localized in both time and frequency, allowing more precise analysis of signals with transient or localized features. Wavelets are typically derived from a mother wavelet through scaling and translation. The mother wavelet acts as a building block: dilating or compressing it and shifting it through time yields a set of wavelets with different sizes and positions. Wavelet transforms are used in signal processing, image compression, noise reduction, feature extraction, and time-frequency analysis, and they give more detailed and adaptive representations of signals with localized or time-varying characteristics than traditional Fourier-based methods.

- Normalization: Normalization is a common preprocessing technique that standardizes the scale or range of input data. Transforming the data to a consistent scale and distribution helps improve the performance and convergence of many machine learning algorithms. The values of a feature or set of features are adjusted to fall within a certain range or to follow a certain distribution, which brings features to a similar scale and removes bias or variation present in the original data. Normalization is especially important when features have different units of measure or vary widely in range; without it, features on a larger scale can dominate the learning process and bias the model toward them.
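The three pre-processing operations described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the pipeline used in this work: the function names are hypothetical, the wavelet step uses a single-level averaging Haar transform as the simplest possible wavelet, and the normalization is plain min-max scaling to [0, 1].

```python
import numpy as np


def fourier_magnitude(image: np.ndarray) -> np.ndarray:
    """Centred log-magnitude spectrum of a 2-D grayscale image."""
    # fft2 gives the complex spectrum; fftshift moves the zero frequency
    # (DC) term to the centre of the array.
    spectrum = np.fft.fftshift(np.fft.fft2(image.astype(np.float64)))
    return np.log1p(np.abs(spectrum))


def haar_dwt2(image: np.ndarray):
    """One level of an averaging 2-D Haar transform (assumed wavelet choice).

    Height and width are assumed to be even. Returns the approximation band
    and three detail bands, each half the input size.
    """
    img = image.astype(np.float64)  # avoid uint8 overflow when summing
    a = img[0::2, 0::2]  # top-left pixel of each 2x2 block
    b = img[0::2, 1::2]  # top-right
    c = img[1::2, 0::2]  # bottom-left
    d = img[1::2, 1::2]  # bottom-right
    approx = (a + b + c + d) / 4.0       # local average
    detail_rows = (a + b - c - d) / 4.0  # upper row minus lower row
    detail_cols = (a - b + c - d) / 4.0  # left column minus right column
    detail_diag = (a - b - c + d) / 4.0  # diagonal difference
    return approx, detail_rows, detail_cols, detail_diag


def min_max_normalize(image: np.ndarray) -> np.ndarray:
    """Rescale pixel values linearly to the range [0, 1]."""
    img = image.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        # A constant image has no range to stretch.
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)


# Usage on a synthetic 8 x 8 frame standing in for a camera image.
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(8, 8)).astype(np.uint8)
spectrum = fourier_magnitude(frame)
approx, d_rows, d_cols, d_diag = haar_dwt2(frame)
scaled = min_max_normalize(frame)
```

The Fourier step exposes global frequency content, the Haar step keeps spatial locality in its detail bands, and the normalization step puts all pixel values on a common scale before they reach the detector.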

3.1.4. Image Augmentation

3.1.5. Labeling Processes

3.1.6. Anomaly Detection

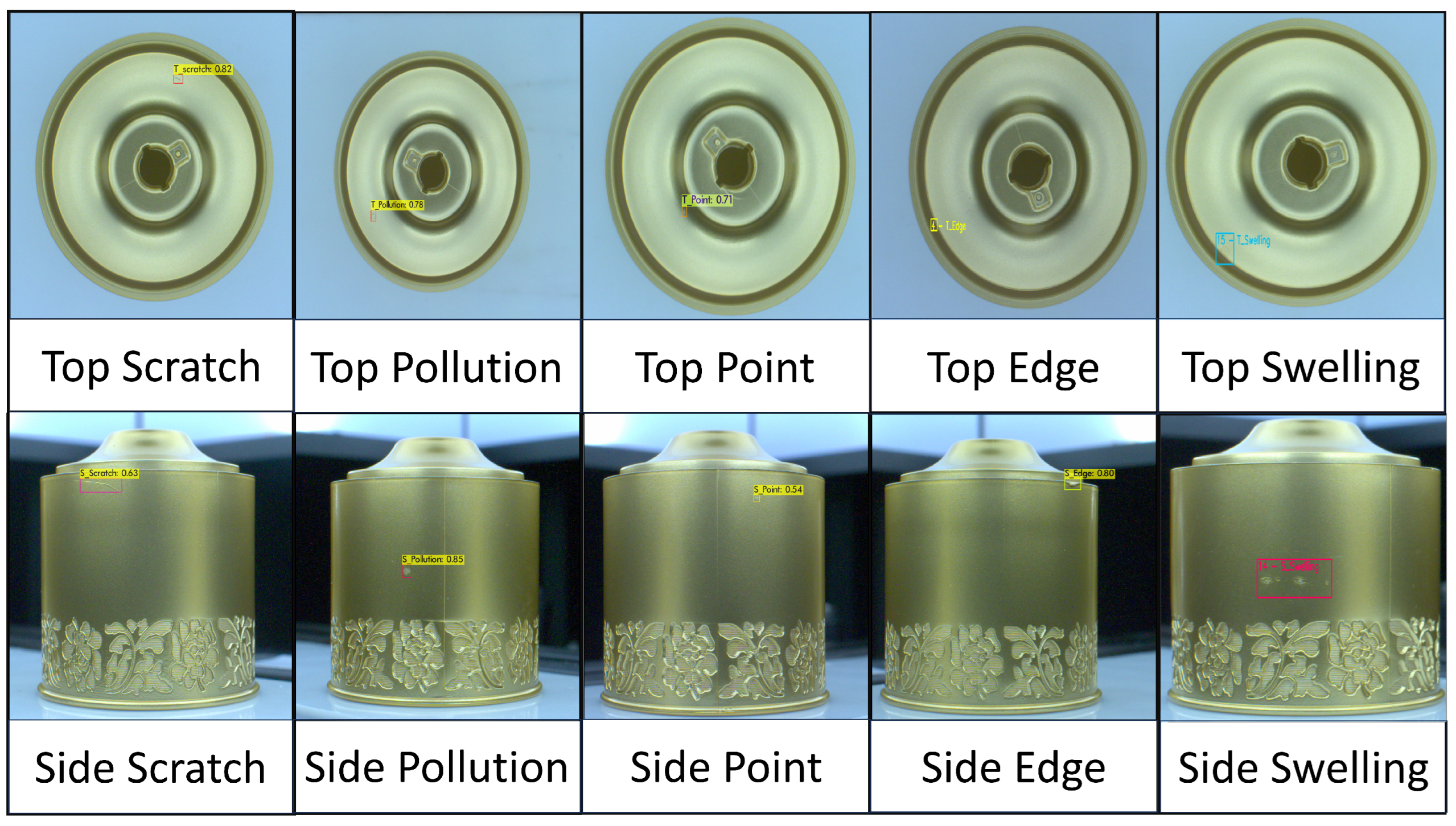

- Scratches: This category refers to surface defects that leave noticeable marks on the top or sides of the container due to abrasions or scratches.

- Pollution: The surface of a container is contaminated or scratched by foreign objects or debris.

- Point: Point anomalies are localized irregularities, such as small dots or spots on the top or sides of a container, that can affect its appearance or function.

- Edge: Edge anomalies include irregularities or deformations along the edge of a container, which can compromise its overall quality or structural integrity.

- Swelling: Swelling is the expansion of the surface of a part. It can occur if the molding temperature is too high, the molding pressure is too high, or the material overheats during the molding time. It can also occur if the product is poorly designed and the material does not cool properly.

- StrongNG: Indicates a larger, more serious issue that requires immediate attention.

- WeakNG: Anomalies that are easily confused with dirt or are relatively minor in nature.
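To make the two labeling schemes concrete, the sketch below writes one annotation in the Darknet/YOLO text format (`class_id x_center y_center width height`, with coordinates normalized to [0, 1]). The class-id order, the helper name `yolo_label_line`, and the example box are assumptions made for illustration; only the category names and the 2464 × 2056 resolution come from this article.

```python
# Hypothetical class lists: the order (and therefore the numeric ids) is an
# assumption, only the category names are taken from the text.
INTENSITY_CLASSES = ["StrongNG", "WeakNG"]
TYPE_CLASSES = ["Scratches", "Pollution", "Point", "Edge", "Swelling"]


def yolo_label_line(class_name, classes, box, image_size):
    """Format one bounding box as a Darknet/YOLO label line.

    box        -- (x_min, y_min, x_max, y_max) in pixels
    image_size -- (width, height) in pixels
    """
    x_min, y_min, x_max, y_max = box
    img_w, img_h = image_size
    class_id = classes.index(class_name)
    # YOLO stores the box centre and size, each divided by the image size.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# The same (made-up) defect box labeled under each scheme.
box = (1000, 900, 1232, 1156)
image_size = (2464, 2056)
by_type = yolo_label_line("Point", TYPE_CLASSES, box, image_size)
by_intensity = yolo_label_line("WeakNG", INTENSITY_CLASSES, box, image_size)
print(by_type)
print(by_intensity)
```

The geometry of the box is identical in both lines; only the leading class id changes, which is exactly the difference between labeling by NG type and labeling by NG intensity.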

4. Experimental Results

4.1. Experimental Environments

4.2. Data Collection and Processing

4.3. Performance Matrix

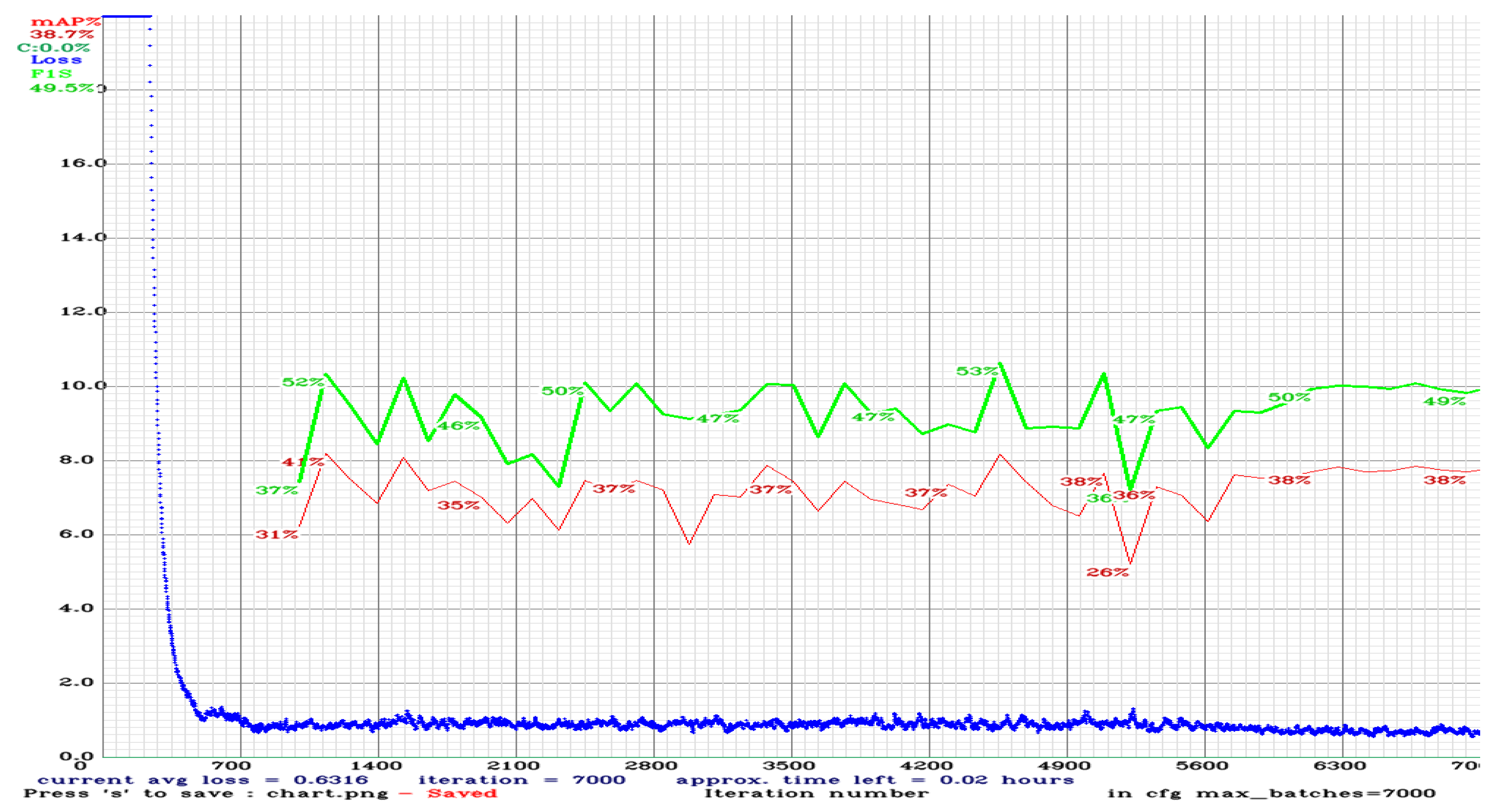

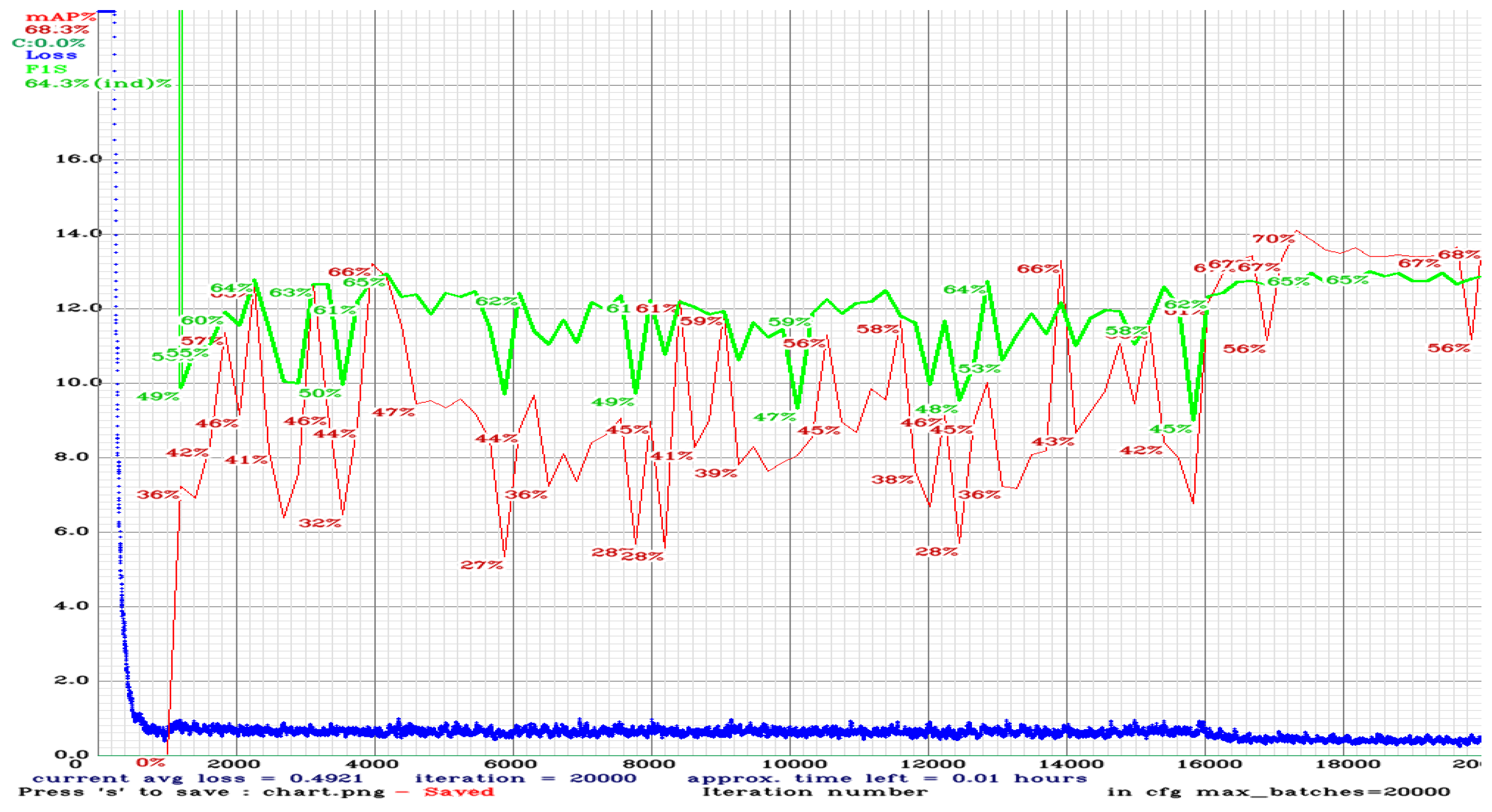

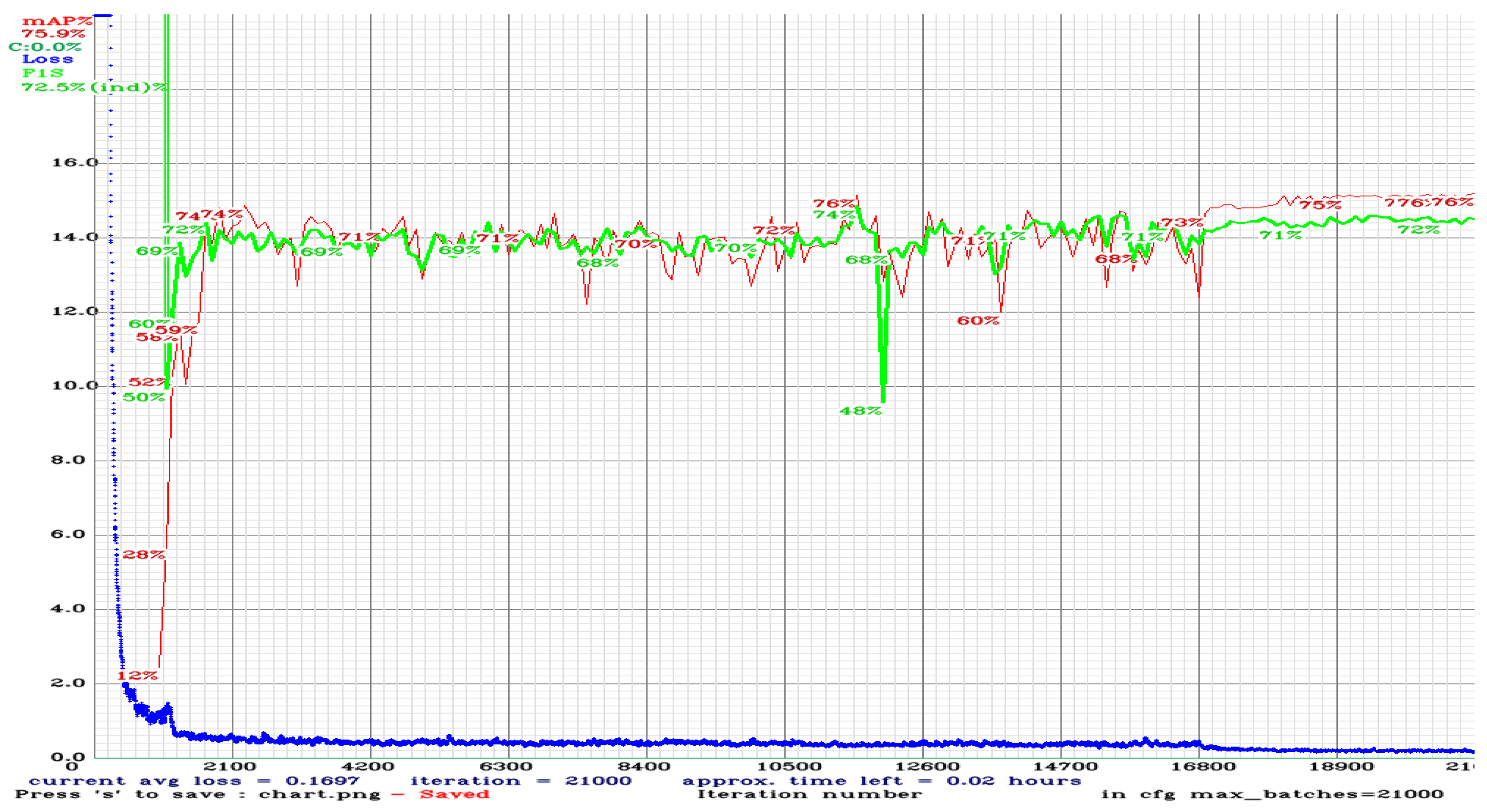

4.4. Results

- Labeling for NG Intensity: Labeling by NG intensity labels anomalies according to their severity. This method quantifies the degree of an anomaly but may lack specificity in identifying its exact type. It provides important information about severity but may not be suitable for accurate classification tasks.

- Labeling for NG Type: Labeling by NG type assigns each anomaly to a specific type or class. This method aims to capture the underlying patterns and characteristics of anomalies so that different anomaly types can be better classified and understood. Labeling by class enables more accurate anomaly detection and classification.

- Labeling for NG Type without RoI Distinction: This method labels anomalies according to their specific type without considering their RoI or location in the image. It primarily aims to classify anomalies accurately by type without explicitly specifying their spatial location.

- Superior labeling performance by NG type without RoI distinction: After thorough evaluation and analysis, we found that NG type labeling without RoI distinction achieved the highest F1 score and mAP of the three labeling methods. The reasons for the superior performance are as follows.

- Precision and recall: This labeling method focuses on accurately classifying anomalies by type, which supports high precision and recall. Anomalies can be classified accurately regardless of their spatial location.

- Flexibility: Because there is no RoI distinction, anomalies can be identified in different areas of the image, and the model can capture anomalies wherever they occur, giving a comprehensive view of the different anomaly types.

- Improved model performance: NG type labeling without RoI distinction provides a clear focus on anomaly types, allowing the model to learn the specific patterns and features associated with each anomaly class. This enhanced learning contributes to better detection and classification performance, resulting in a higher F1 score and mAP.

In anomaly detection, the choice of labeling method has a significant impact on the accuracy and efficiency of the model. After evaluating the different labeling methods, we found that labeling by NG type without RoI distinction outperforms labeling by NG intensity and labeling by NG type in terms of F1 score.
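For reference, the F1 score and mAP used in this comparison follow the standard definitions, sketched below. The function names are hypothetical, and the counts and per-class AP values in the usage lines are made-up illustrative numbers, not results from this study.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Standard precision, recall, and F1 from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


def mean_average_precision(ap_per_class: dict) -> float:
    """mAP as the unweighted mean of the per-class average precision."""
    values = list(ap_per_class.values())
    return sum(values) / len(values)


# Illustrative numbers only (not taken from the experiments).
precision, recall, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
example_map = mean_average_precision(
    {"Scratches": 0.90, "Pollution": 0.80, "Point": 0.70}
)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
print(f"mAP={example_map:.3f}")
```

Because F1 is a harmonic mean, a labeling method scores well only when precision and recall are both high, which is why it is a natural single number for comparing the three labeling schemes.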

4.5. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Okorie, O.; Subramoniam, R.; Charnley, F.; Patsavellas, J.; Widdifield, D.; Salonitis, K. Manufacturing in the time of COVID-19: An assessment of barriers and enablers. IEEE Eng. Manag. Rev. 2020, 48, 167–175.

- Khanfar, A.A.; Iranmanesh, M.; Ghobakhloo, M.; Senali, M.G.; Fathi, M. Applications of blockchain technology in sustainable manufacturing and supply chain management: A systematic review. Sustainability 2021, 13, 7870.

- Hossein, H.; Huang, X.; Silva, E. The human digitalisation journey: Technology first at the expense of humans? Information 2021, 12, 267.

- Yaniel, T.; Nadeau, S.; Landau, K. Classification and quantification of human error in manufacturing: A case study in complex manual assembly. Appl. Sci. 2020, 11, 749.

- Yang, J.; Li, S.; Wang, Z.; Dong, H.; Wang, J.; Tang, S. Using deep learning to detect defects in manufacturing: A comprehensive survey and current challenges. Materials 2020, 13, 5755.

- Andronie, M.; Lazaroiu, G.; Iatagan, M.; Uta, C.; Stefanescu, R.; Cocosatu, M. Artificial intelligence-based decision-making algorithms, internet of things sensing networks, and deep learning-assisted smart process management in cyber-physical production systems. Electronics 2021, 10, 2497.

- Li, R.; Zhao, S.; Yang, B. Research on the application status of machine vision technology in furniture manufacturing process. Appl. Sci. 2023, 13, 2434.

- Rizvi, A.T.; Haleem, A.; Bahl, S.; Javaid, M. Artificial intelligence (AI) and its applications in Indian manufacturing: A review. J. Curr. Adv. Mech. Eng. 2020, 2021, 825–835.

- Kim, D.; Han, Y.-H.; Jeong, J. Design and Implementation of Real-time Anomaly Detection System based on YOLOv4. Jwseas Trans. Electron. 2022, 13, 130–136.

- Dowlatshahi, S. An application of design of experiments for optimization of plastic injection molding processes. J. Manuf. Technol. Manag. 2004, 15, 245–254.

- Chen, W.-S.; Yu, F.; Wu, S. A robust design for plastic injection molding applying Taguchi method and PCA. J. Sci. Eng. Technol. 2011, 7, 1–8.

- Seow, L.W.; Lam, Y.C. Optimizing flow in plastic injection molding. J. Mater. Process. Technol. 1997, 72, 333–341.

- Dang, X.-P. General frameworks for optimization of plastic injection molding process parameters. J. Simul. Model. Pract. Theory 2014, 41, 15–27.

- Song, H.; Jiang, Z.; Men, A.; Yang, B. A hybrid semi-supervised anomaly detection model for high-dimensional data. Int. J. Sci. Res. (IJSR) 2020, 381–386.

- Dai, J.; Li, T.; Xuan, Z.; Feng, Z. Automated Defect Analysis System for Industrial Computerized Tomography Images of Solid Rocket Motor Grains Based on YOLO-V4 Model. Electronics 2022, 11, 3215.

- Libbrecht, M.W.; Noble, W.S. Machine learning applications in genetics and genomics. Nature Reviews Genetics 2015, 16, 321–332.

- Cui, Y.; Liu, Z.; Lian, S. A Survey on Unsupervised Industrial Anomaly Detection Algorithms. arXiv 2022, arXiv:2204.11161.

- Jiang, J.-R.; Kao, J.-B.; Li, Y.-L. Semi-supervised time series anomaly detection based on statistics and deep learning. Appl. Sci. 2021, 11, 6698.

- Liu, L.; Wu, Y.; Wei, W.; Cao, W.; Sahin, S.; Zhang, Q. Benchmarking Deep Learning Frameworks: Design Considerations, Metrics and Beyond. In Proceedings of the 2018 IEEE 38th International Conference on Distributed Computing Systems (ICDCS), Vienna, Austria, 2–6 July 2018.

- Kantak, S.; Kadam, P.; Sarvaiya, K.; Keni, S.; Kubal, K. Object Detection–Trained YOLOv4. Int. J. Res. Eng. Sci. (IJRES) 2021, 9, 29–34.

- Du, J. Understanding of object detection based on CNN family and YOLO. J. Phys. Conf. Ser. 2018, 1004, 266071.

- Rajendran, G.B.; Kumarasamy, U.M.; Zarro, C.; Divakarachari, P.B.; Ullo, S.L. Land-use and land-cover classification using a human group-based particle swarm optimization algorithm with an LSTM Classifier on hybrid pre-processing remote-sensing images. Remote Sens. 2020, 12, 4145.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Adibhatla, V.A.; Huang, Y.C.; Chang, M.C.; Kuo, H.C.; Utekar, A.; Chih, H.C.; Abbod, M.F.; Shieh, J.S. Unsupervised Anomaly Detection in Printed Circuit Boards through Student–Teacher Feature Pyramid Matching. Electronics 2021, 10, 3177.

- Sundaram, S.; Zeid, A. Artificial intelligence-based smart quality inspection for manufacturing. Micromachines 2023, 14, 570.

- Liu, J.; Guo, J.; Orlik, P.; Shibata, M.; Nakahara, D.; Mii, S.; Takac, M. Anomaly detection in manufacturing systems using structured neural networks. In Proceedings of the 2018 13th World Congress on Intelligent Control and Automation (WCICA), Changsha, China, 4–8 July 2018.

- Alexey, A.; Darknet, B.; AlexeyAB. AlexeyAB/Darknet: Yolov7. 2020. Available online: https://github.com/AlexeyAB/darknet (accessed on 10 February 2022).

- Gonzalez, R.F.T.; Woods, R. Digital Image Preprocessing; Prentice Hall: Upper Saddle River, NJ, USA, 2007; p. 996.

- Milanfar, P. A tour of modern image filtering: New insights and methods, both practical and theoretical. Signal Process 2013, 30, 106–128.

- Guo, J.; Wang, S.; Xu, Q. Saliency Guided DNL-Yolo for Optical Remote Sensing Images for Off-Shore Ship Detection. Appl. Sci. 2022, 12, 2629.

- Zheng, X.; Wang, M.; Ordieres-Meré, J. Comparison of data preprocessing approaches for applying deep learning to human activity recognition in the context of industry 4.0. Sensors 2018, 18, 2146.

- Wallis, R. An approach to the space variant restoration and enhancement of images. Signal Process 2013, 6, 106–128.

- Di Pasquale, V.; Franciosi, C.; Lambiase, A.; Miranda, S. Methodology for the analysis and quantification of human error probability in manufacturing systems. In Proceedings of the 2016 IEEE Student Conference on Research and Development, Kuala Lumpur, Malaysia, 13–14 December 2016.

- Chapeau-Blondeau, F.; Belin, E. Fourier-transform quantum phase estimation with quantum phase noise. Signal Process. 2020, 170, 107441.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

| Side | Top | Distance between Products |
|---|---|---|
| 30.15 × 28.11 (mm) | 25.86 × 25.86 (mm) | 80 (mm) |

| OpenCV | CUDA | cuDNN | Framework | Working Distance | Conveyor Belt Speed |
|---|---|---|---|---|---|
| v6.4.16 | v11.1 | v8.2.0 | Darknet | Top: 135 mm; Side: 145 mm | 150 mm/s |

| Component | Item | Specification |
|---|---|---|
| Lens | Lens Mount | C Mount |
| | Resolution | 2464 × 2056 |
| | Pixel Size | 3.45 × 3.45 |
| | Optical Format | 2/3″ |
| Camera | Name | MG-A500M-22 |
| | Sensor Format | 2/3″ |
| | Mono/Color | Mono |
| | Dimension | 29 × 29 × 40 (mm) |
| IPC | Processor | Intel Xeon Gold 5220R 6-Core Processor 2.20 GHz |
| | RAM | 90 GB |
| | GPU | Tesla V100-SXM2-32 GB |
| Optical Lighting | Name | ADQL4-300 |
| | LED Count | 84 EA |
| | Spec | 300 × 300 × 100 (mm) |

| Labeling Methods | Threshold | Total Number of Images | TN | FN | TN Rate | FN Rate |
|---|---|---|---|---|---|---|
| Intensity | 0.5 | 939 | 69 | 68 | 7.35% | 7.24% |
| Intensity | 0.5 | 175 | 34 | 1 | 17.13% | 1.71% |
| Intensity | 0.5 | 480 | 55 | 1 | 11.46% | 0.00% |
| Intensity | 0.5 | 615 | 135 | 0 | 21.95% | 0.00% |
| Intensity | 0.5 | 1320 | 169 | 0 | 12.80% | 0.00% |

| Labeling Methods | Threshold | Total Number of Images | TN | FN | TN Rate | FN Rate |
|---|---|---|---|---|---|---|
| Type | 0.5 | 1266 | 66 | 1 | 5.21% | 0.08% |
| Type | 0.5 | 485 | 44 | 1 | 7.59% | 0.17% |
| Type | 0.5 | 615 | 35 | 248 | 2.74% | 19.42% |
| Type | 0.5 | 4640 | 200 | 8 | 4.31% | 0.17% |
| Type | 0.5 | 1315 | 51 | 0 | 3.88% | 0.00% |

| Labeling Methods | Threshold | Total Number of Images | TN | FN | TN Rate | FN Rate |
|---|---|---|---|---|---|---|
| Type without RoI | 0.5 | 1295 | 66 | 1 | 5.10% | 0.08% |
| Type without RoI | 0.5 | 195 | 20 | 44 | 2.74% | 19.42% |
| Type without RoI | 0.5 | 580 | 44 | 1 | 7.59% | 0.17% |
| Type without RoI | 0.5 | 824 | 45 | 0 | 5.46% | 0.00% |
| Type without RoI | 0.5 | 306 | 5 | 2 | 1.63% | 0.00% |
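The rate columns in the first row of each table above are consistent with dividing the count by the total number of images and expressing the result as a percentage. This reading is inferred from the numbers rather than stated in the text, and the helper name `rate_percent` is hypothetical; a minimal sketch of the arithmetic:

```python
def rate_percent(count: int, total_images: int) -> float:
    """Share of images, in percent, rounded to two decimals (assumed definition)."""
    return round(100.0 * count / total_images, 2)


# First row of each table: (total images, TN count, FN count).
first_rows = {
    "Intensity": (939, 69, 68),
    "Type": (1266, 66, 1),
    "Type without RoI": (1295, 66, 1),
}

rates = {
    method: (rate_percent(tn, total), rate_percent(fn, total))
    for method, (total, tn, fn) in first_rows.items()
}
for method, (tn_rate, fn_rate) in rates.items():
    print(f"{method}: TN rate {tn_rate}%, FN rate {fn_rate}%")
```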

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Beak, S.; Han, Y.-H.; Moon, Y.; Lee, J.; Jeong, J. YOLOv7-Based Anomaly Detection Using Intensity and NG Types in Labeling in Cosmetic Manufacturing Processes. Processes 2023, 11, 2266. https://doi.org/10.3390/pr11082266