AI-Based Integrated Smart Process Sensor for Emulsion Control in Industrial Application

Abstract

1. Introduction

2. Materials and Methods

2.1. Sensor Development Strategy

- Optical access to the product, allowing a large number of different formulations to be optically resolved and evaluated;

- No influence of the integrated intelligent sensor on process performance;

- Simple integration into the industrial plant and user-friendliness;

- Robust design.

- Optical sensor and flow cell design;

- Automated droplet size analysis;

- Integration into the production plant.

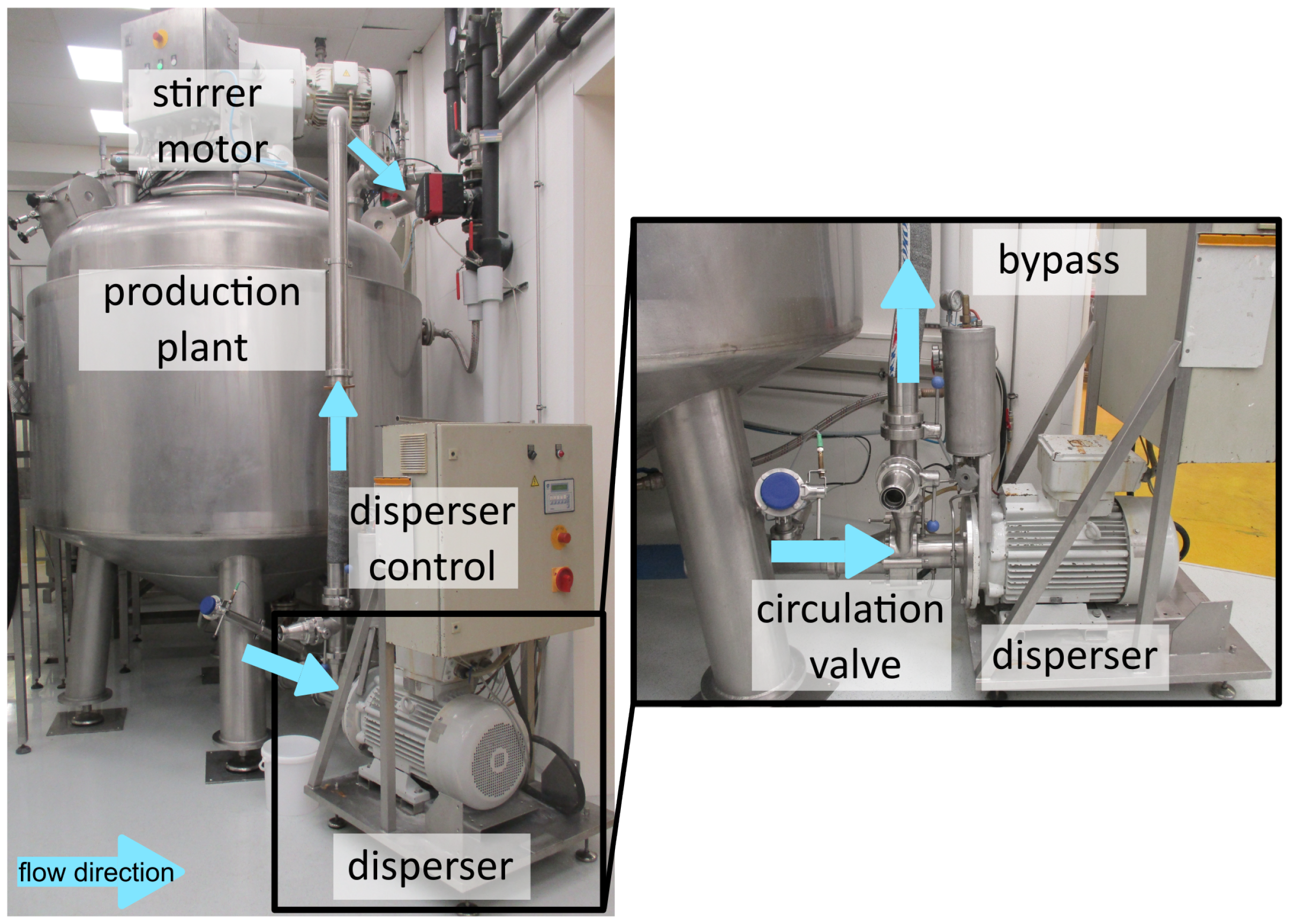

2.2. Industrial Emulsification Process

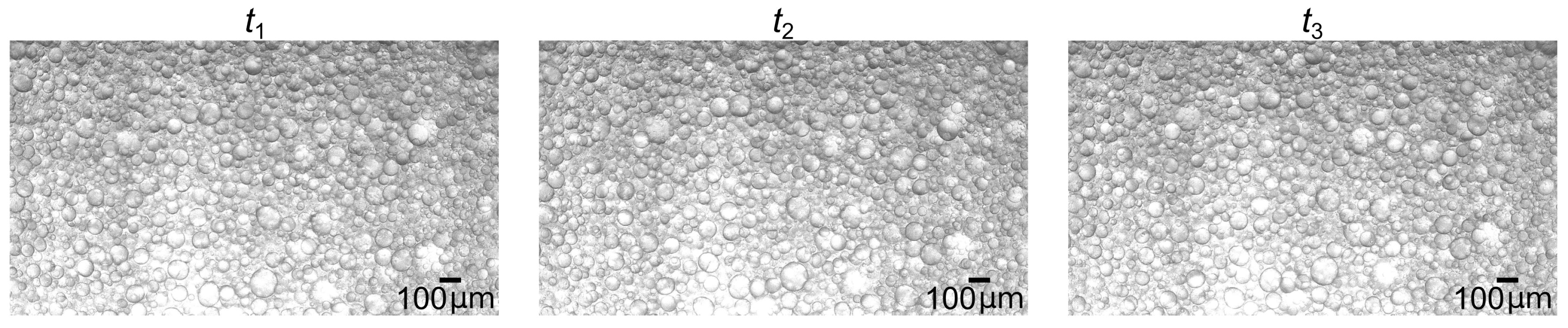

2.3. AI-Based Droplet Size Determination

2.4. Validation of Droplet Size Determination

3. Results

3.1. Optical Smart Process Sensor

- Camera unit, including the processor board, optical sensor, and focusable lens;

- Optical measurement flow cell;

- LED illumination unit.

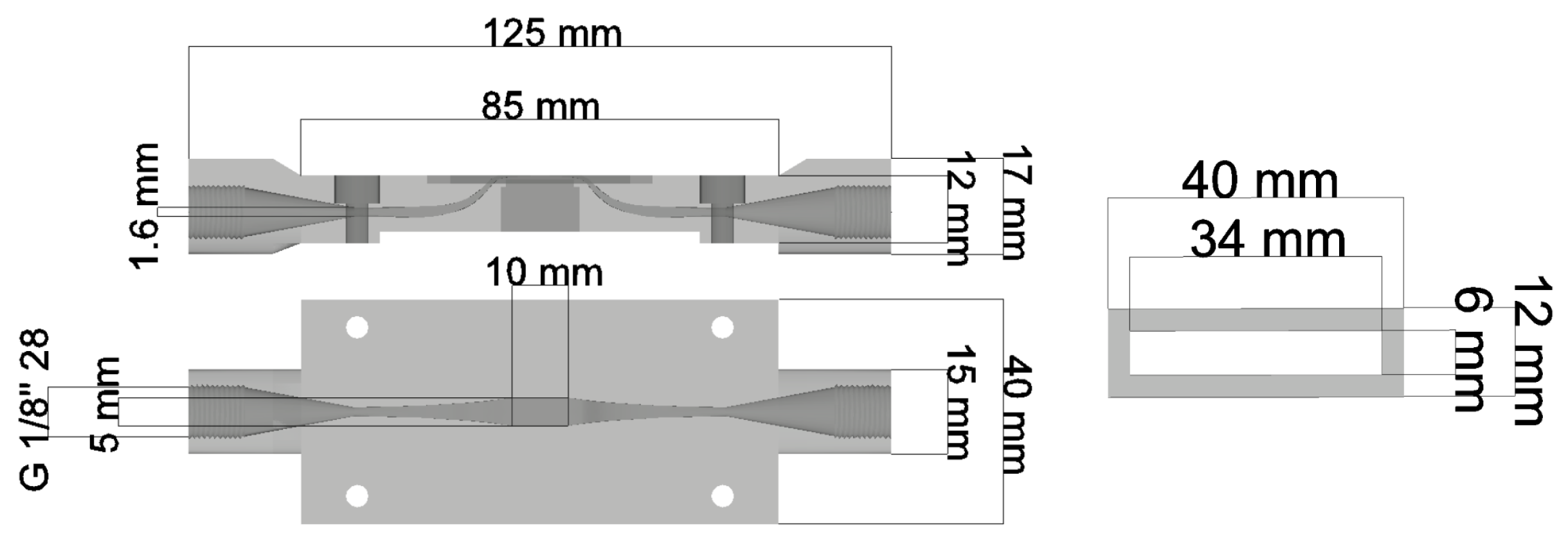

3.1.1. Optical Measurement Flow Cell

- Reduction of complexity;

- Reduction of components to simplify handling and minimize leakages;

- Minimization of the distance between the emulsion and the camera lens;

- Applicability for the evaluation of industrial emulsions;

- Integration into the industrial system, with a focus on fluid connections.

3.1.2. Camera System and Illumination Strategy

- Adequate magnification and visualization of the emulsion droplets;

- Definable and reproducible focusing;

- Exposure time, exposure mode, and light temperature;

- Hardware of the embedded processor for real-time evaluation;

- Housing concept and gasket.

- Power supply unit for input voltage of 9–60 V DC;

- Interfaces to sensor board and electronic focus module;

- Ethernet PHY;

- USB interface;

- Digital inputs and outputs for triggering and signaling;

- LED driver for controlling the lighting.

3.1.3. AI-Based Evaluation for Edge Device

1. Reading the video and extracting one frame per second;

2. Cutting the individual images into six sub-images;

3. Optional: adjustment of contrast;

4. Starting AI-based droplet detection using YOLO and size determination using HC;

5. Saving the determined droplet diameters and statistical parameters in a .csv file;

6. Checking whether a new video is available in the folder and, if so, restarting at Step 1.
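The evaluation steps listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the 2 × 3 tile layout, the linear contrast stretch, the choice of statistics, and the CSV layout are all assumed, and `detect` is a hypothetical callable standing in for the YOLO detection and HC size determination.

```python
import csv
import io
import statistics
from typing import Callable, Dict, List, Sequence

Image = List[List[int]]                    # grayscale image as rows of pixel values
Detector = Callable[[Image], List[float]]  # hypothetical: sub-image -> droplet diameters


def frames_to_sample(total_frames: int, fps: float) -> List[int]:
    """Return the indices of one frame per second of video."""
    step = max(1, round(fps))
    return list(range(0, total_frames, step))


def split_into_tiles(image: Image, rows: int = 2, cols: int = 3) -> List[Image]:
    """Cut an image into rows * cols sub-images (the 2 x 3 layout is assumed)."""
    tile_h, tile_w = len(image) // rows, len(image[0]) // cols
    return [
        [line[c * tile_w:(c + 1) * tile_w] for line in image[r * tile_h:(r + 1) * tile_h]]
        for r in range(rows)
        for c in range(cols)
    ]


def stretch_contrast(image: Image) -> Image:
    """Optional step: linearly rescale pixel values to the range 0..255."""
    lo = min(min(line) for line in image)
    hi = max(max(line) for line in image)
    if hi == lo:  # flat image, nothing to stretch
        return [list(line) for line in image]
    return [[(p - lo) * 255 // (hi - lo) for p in line] for line in image]


def analyze_frame(image: Image, detect: Detector, adjust_contrast: bool = False) -> List[float]:
    """Run the (hypothetical) detector on every sub-image and pool the diameters."""
    diameters: List[float] = []
    for tile in split_into_tiles(image):
        if adjust_contrast:
            tile = stretch_contrast(tile)
        diameters.extend(detect(tile))
    return diameters


def summarize(diameters: Sequence[float]) -> Dict[str, float]:
    """Example statistics; requires at least two diameters."""
    q1, median, q3 = statistics.quantiles(diameters, n=4)
    return {
        "count": len(diameters),
        "mean": statistics.fmean(diameters),
        "median": median,
        "iqr": q3 - q1,
    }


def write_csv(diameters: Sequence[float], stream) -> None:
    """Write the diameters, then a block of statistics, to an open text stream."""
    writer = csv.writer(stream)
    writer.writerow(["diameter"])
    writer.writerows([d] for d in diameters)
    writer.writerow([])
    writer.writerow(["statistic", "value"])
    for name, value in summarize(diameters).items():
        writer.writerow([name, value])
```

In a loop over incoming videos, `frames_to_sample` would select the frames to decode, `analyze_frame` would be called once per selected frame, and `write_csv` would store the pooled result before the folder is checked again. Keeping the detector behind a callable lets the same skeleton run with a stub on a workstation and with the deployed model on the edge device.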

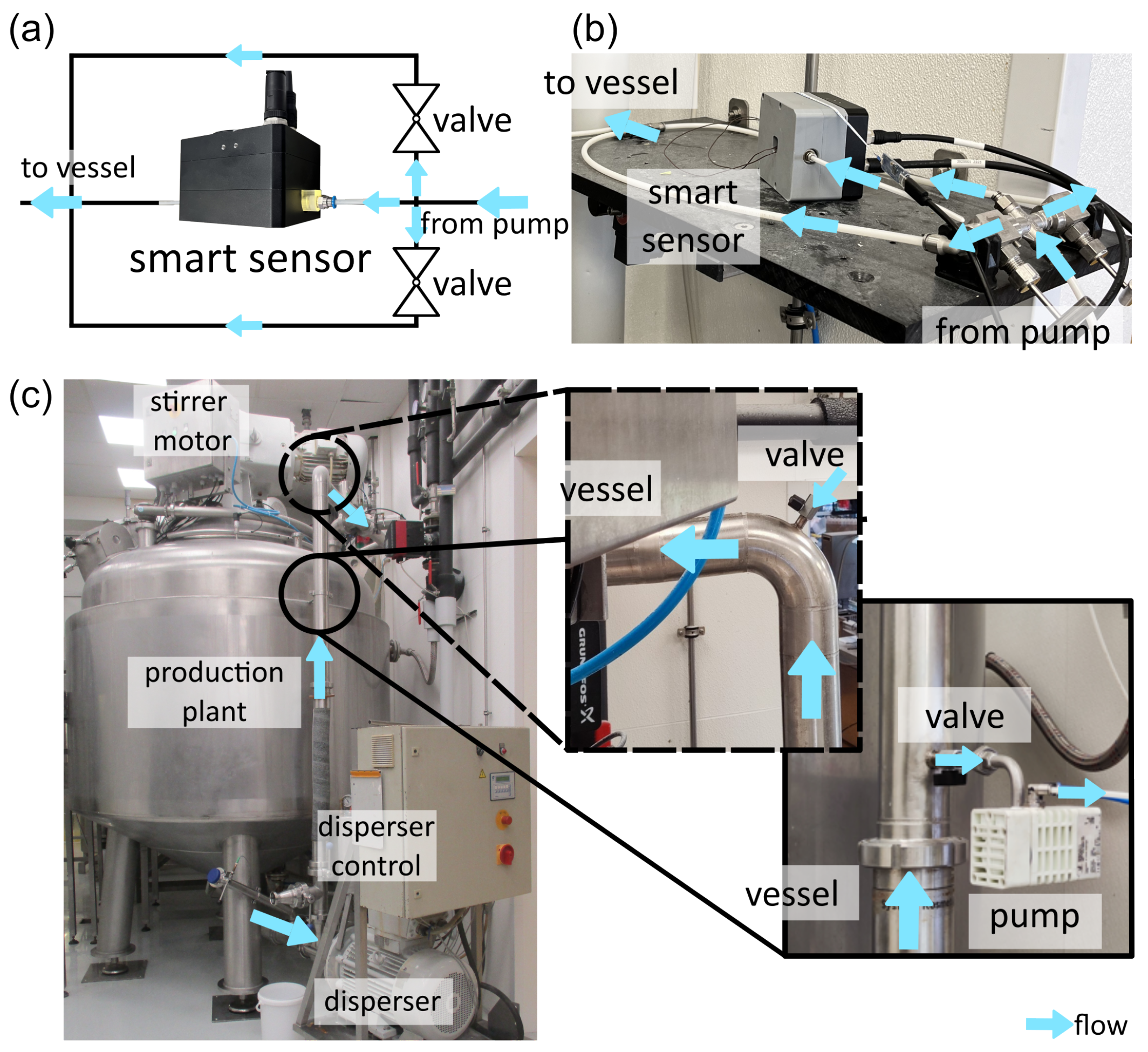

3.2. Sensor Integration

- Installation of a bypass system with flow control;

- Representative sampling;

- GMP-based hygiene requirements with regard to cleaning/maintenance;

- Connection to the system control unit;

- Reduction of vibrations on the measurement system as well as simple maintenance and servicing.

3.3. Validation of Measurement System

4. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial Intelligence |

| App | Application Software |

| CCD | Charge-Coupled Device |

| CPU | Central Processing Unit |

| CS | Confidence Score |

| CRI | Color Rendering Index |

| DC | Direct Current |

| DSD | Droplet Size Distribution |

| GMP | Good Manufacturing Practice |

| GPO | General Purpose Output |

| GPU | Graphics Processing Unit |

| H | Height |

| HC | Hough Circle |

| IQR | Interquartile Range |

| L | Length |

| LED | Light-Emitting Diode |

| ML | Machine Learning |

| NPU | Neural Processing Unit |

| PAN | Path Aggregation Network |

| PDF | Probability Density Function |

| PHY | Physical Layer |

| PVM | Particle Vision Microscope |

| RAM | Random-Access Memory |

| SOM | System-on-Module |

| SPP | Spatial Pyramid Pooling |

| TFLITE | TensorFlow Lite |

| TOPS | Trillions or Tera Operations per Second |

| TRL | Technology Readiness Level |

| USB | Universal Serial Bus |

| W | Width |

| v4 | Version 4 |

| YOLO | You Only Look Once |

Appendix A

| No. of Components (Total) | Channel Dimension/mm × mm × mm *1 | Fluid Connection | Change *2 |
|---|---|---|---|
| 7 | 3 × 3 × 0.025–0.250 | 1/ | - |
| 4 | 3 × 3 × 0.025 | Hose nozzle | Reduction in complexity; changed fluid connection for faster testing in the test setup |
| 3 | 3 × 5 × 0.025 | 5 mm/M5 inside camera housing | Wider channel; changed fluid connection and screw fitting for camera/plant integration |
| 3 | 3 × 5 × 0.025 | 8 mm/M8 outside camera housing | Fluid connection outside camera housing; multiple threads for longer lifetime |

| Properties | Sony IMX327 |
|---|---|
| Resolution/pixel | 1920 × 1080 (2 MP) |
| Sensor size/inch | 1/2.8 |
| Pixel size/µm | 2.9 |
| Max. image diagonal/mm | 6.46 |
| Shutter/- | Rolling |
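The sensor figures in the table can be related to one another with a short calculation. The sketch below assumes the pixel size is 2.9 µm and computes the size of the 1920 × 1080 pixel area. The function `object_pixel_size_um` and its `magnification` parameter are hypothetical, since the optical magnification is not given here.

```python
import math
from typing import Tuple

PIXEL_PITCH_UM = 2.9          # pixel size from the table, assumed to be in micrometres
RESOLUTION_PX = (1920, 1080)  # resolution from the table


def active_area_mm(resolution_px: Tuple[int, int], pitch_um: float) -> Tuple[float, float]:
    """Width and height in mm of the pixel area covered by the given resolution."""
    width_px, height_px = resolution_px
    return width_px * pitch_um / 1000.0, height_px * pitch_um / 1000.0


def diagonal_mm(resolution_px: Tuple[int, int], pitch_um: float) -> float:
    """Diagonal in mm of the pixel area covered by the given resolution."""
    return math.hypot(*active_area_mm(resolution_px, pitch_um))


def object_pixel_size_um(pitch_um: float, magnification: float) -> float:
    """Size of one pixel in the object plane (magnification is a hypothetical input)."""
    return pitch_um / magnification
```

With the table values, the pixel area is about 5.57 mm × 3.13 mm with a diagonal of about 6.39 mm, slightly below the listed maximum image diagonal of 6.46 mm. The difference may stem from the listed diagonal referring to a somewhat larger pixel area than the 1920 × 1080 output; that reading is an assumption.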

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Burke, I.; Salzer, S.; Stein, S.; Olusanya, T.O.O.; Thiel, O.F.; Kockmann, N. AI-Based Integrated Smart Process Sensor for Emulsion Control in Industrial Application. Processes 2024, 12, 1821. https://doi.org/10.3390/pr12091821