Abstract

We propose a new optimal derivative-free iterative scheme without memory for solving non-linear equations. Many iterative schemes in the literature either diverge or fail to work when $f'(x) = 0$; our proposed scheme works even in these cases. In addition, we extend the same idea to iterative methods with memory with the help of self-accelerating parameters estimated from the current and previous approximations. As a result, the order of convergence increases from four to seven without any additional functional evaluation. To confirm the theoretical results, numerical examples and comparisons with some existing methods are included, which indicate that our scheme is more efficient than the existing schemes. Furthermore, basins of attraction are included to give a clear picture of the convergence of the proposed method as well as of some existing methods.

Keywords:

non-linear equation; iterative method with memory; R-order of convergence; basin of attraction

MSC:

65H05; 65H99

1. Introduction

Many problems in computational sciences and other disciplines can be modelled by a non-linear equation or a system of non-linear equations. In particular, a large number of problems in applied mathematics and engineering are solved by finding the solutions of such equations. In the literature, several iterative methods have been designed by different procedures to approximate the simple roots of a non-linear equation,

$$f(x) = 0, \tag{1}$$

where $f : I \subseteq \mathbb{R} \to \mathbb{R}$ is a real function defined in an open interval I. To find the roots of Equation (1), we turn to iterative schemes. Many iterative methods of different convergence orders already exist in the literature (see [1,2] and the references therein) to approximate the roots of Equation (1). Among them, the best-known one-point iterative method without memory is the quadratically convergent Newton–Raphson scheme [3] given by

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \quad n = 0, 1, 2, \ldots$$

One drawback of this method is that it fails when $f'(x_n) = 0$, which restricts its applications. The main objective in designing iterative methods for this kind of problem is to obtain the highest order of convergence at the least computational cost. Therefore, many researchers are interested in constructing optimal multipoint methods [4] without memory, in the sense of the Kung–Traub conjecture [5], which states that multipoint iterative methods without memory requiring $n$ functional evaluations per iteration have a convergence order of at most $2^{n-1}$. Among them, an optimal fourth-order iterative method was developed by Kou et al. [6] defined by

Further, Kansal et al. proposed an optimal fourth-order iterative method [7] in parameters and defined by

Soleymani developed an optimal fourth-order method [8] given by

Furthermore, an optimal-order method was proposed by Chun et al. [9] given by

On the other hand, it is sometimes possible to increase the order of convergence without any new function evaluation by means of acceleration parameter(s) that appear in the error equation of multipoint methods without memory. Traub [3] slightly altered Steffensen’s method [10] and presented the first method with memory as follows:

This method has an order of convergence of 2.414. With a better self-accelerating parameter, the order of convergence can be expected to increase further.
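To illustrate how a self-accelerating parameter is reused from one iteration to the next, the following Python sketch implements a Steffensen-type iteration with memory in the spirit of Traub's method. The update $\gamma_{n+1} = -1/f[x_{n+1}, x_n]$, the function name `traub_with_memory`, the starting value `gamma0` and the test function are assumptions made for illustration; they are not taken from the displayed formula.

```python
def traub_with_memory(f, x0, gamma0=-0.01, tol=1e-12, max_iter=50):
    """Steffensen-type iteration with a self-accelerating parameter gamma.

    Assumed form (illustrative): w_n = x_n + gamma_n f(x_n),
    x_{n+1} = x_n - f(x_n) / f[x_n, w_n], gamma_{n+1} = -1 / f[x_{n+1}, x_n].
    Returns (approximate root, iterations used).
    """
    x, gamma = x0, gamma0
    fx = f(x)
    for k in range(1, max_iter + 1):
        if fx == 0.0:
            return x, k - 1
        w = x + gamma * fx                  # auxiliary point
        denom = f(w) - fx
        if denom == 0.0:                    # f[x_n, w_n] not computable in floating point
            return x, k - 1
        x_new = x - fx * (w - x) / denom    # x_n - f(x_n) / f[x_n, w_n]
        fx_new = f(x_new)
        if fx_new == 0.0 or abs(x_new - x) < tol or fx_new == fx:
            return x_new, k
        # Memory step: only already computed values are reused, so the cost
        # stays at two new function evaluations per iteration.
        gamma = -(x_new - x) / (fx_new - fx)
        x, fx = x_new, fx_new
    return x, max_iter


if __name__ == "__main__":
    # Hypothetical test problem: x^3 + 4x^2 - 10 = 0 with x0 = 1.5
    root, iters = traub_with_memory(lambda x: x**3 + 4 * x**2 - 10, 1.5)
    print(root, iters)
```

The parameter is recomputed from values that the iteration has already produced, which is why the order can rise above two without extra evaluations.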

Following in the steps of Traub, many authors have constructed higher-order methods with and without memory. Among many others, Chicharro et al. [11] presented a bi-parametric family of order four and then developed a family of methods with memory having a higher order of convergence without increasing the number of functional evaluations per iteration. In [12], the authors presented a derivative-free form of King’s family with memory. The authors in [13] developed a tri-parametric derivative-free family of Hansen–Patrick-type methods which requires only three functional evaluations to achieve optimal fourth-order convergence. They then extended the idea to methods with memory, as a result of which the R-order of convergence increased from four to seven without any additional functional evaluation.

The development of such methods has increased over the years. Some applications of these iterative methods can be seen in [14,15,16,17]. Thus, by taking into consideration these developments, we further attempt to propose an iterative method without memory and then convert it into a more efficient method with memory such that the order of convergence is increased without any further functional evaluation.

However, another important aspect of an iterative scheme is its stability, that is, how strongly the behaviour of the scheme depends on the initial guess used. In this regard, a comparison between iterative methods by using the basins of attraction was developed by Ardelean [18]. This motivates us to work on the optimal-order methods and their with-memory variants along with their basins of attraction.

The rest of the paper is organized as follows. Section 2 contains the development of a new iterative method without memory and the proof of its order of convergence. Section 3 covers the inclusion of memory to develop a new iterative method with memory and its error analysis. Numerical results for the proposed methods and comparisons with some of the existing methods to illustrate our theoretical results are given in Section 4. Section 5 depicts the convergence of the methods using basins of attraction. Lastly, Section 6 collates the conclusions.

R-Order of Convergence

For finding the R-order convergence [19] of our proposed method with memory, we make use of the following Theorem 1 given by Traub.

Theorem 1.

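Suppose that $(IM)$ is an iterative method with memory that generates a sequence $\{x_k\}$ (converging to the root ξ) of approximations to ξ, and let $e_k = x_k - \xi$. If there exists a non-zero constant ζ and non-negative numbers $s_i$, $0 \le i \le m$, such that the inequality,

$$|e_{k+1}| \le \zeta \prod_{i=0}^{m} |e_{k-i}|^{s_i}$$

holds, then the R-order of convergence of the iterative method $(IM)$ satisfies the inequality,

$$O_R((IM), \xi) \ge s^{*},$$

where $s^{*}$ is the unique positive root of the equation,

$$s^{m+1} - \sum_{i=0}^{m} s_i\, s^{m-i} = 0.$$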
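As a small worked example of Theorem 1, the bound can be computed numerically. Writing the exponents of the error inequality as $s_0, \ldots, s_m$ (notation assumed here), the sketch below finds the unique positive root of $s^{m+1} - \sum_{i=0}^{m} s_i s^{m-i} = 0$ by bisection. The function name `r_order_lower_bound` is hypothetical. For an error relation of the form $|e_{k+1}| \le \zeta |e_k|^2 |e_{k-1}|$, which is the form usually associated with Traub's method with memory and is assumed here, the root is $1 + \sqrt{2} \approx 2.414$.

```python
def r_order_lower_bound(exponents, tol=1e-14):
    """Unique positive root of s^(m+1) - sum_i s_i * s^(m-i) = 0.

    `exponents` is the list [s_0, s_1, ..., s_m] of non-negative exponents
    from the error inequality |e_{k+1}| <= zeta * prod_i |e_{k-i}|^{s_i}.
    """
    m = len(exponents) - 1

    def p(s):
        return s ** (m + 1) - sum(s_i * s ** (m - i) for i, s_i in enumerate(exponents))

    lo, hi = 0.0, 1.0
    while p(hi) <= 0.0:          # bracket the root: p is positive for large s
        hi *= 2.0
    while hi - lo > tol:         # plain bisection on the sign of p
        mid = 0.5 * (lo + hi)
        if p(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(r_order_lower_bound([2, 1]))   # e_{k+1} ~ e_k^2 e_{k-1}
    print(r_order_lower_bound([1, 1]))   # e_{k+1} ~ e_k e_{k-1} (secant-type relation)
```

Bisection is used instead of a closed formula so that the same helper works for any number of remembered iterates.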

2. Iterative Method without Memory and Its Convergence Analysis

In this section, we construct a new two-point derivative-free optimal scheme without memory, which is extended to a scheme with memory in Section 3.

If the well-known Steffensen’s method is combined with Newton’s method, we obtain the following fourth-order scheme:

where . To avoid the computation of , the authors in [20] approximated it by the derivative of the following first-degree Padé approximant:

where , and are real parameters to be determined satisfying the following conditions:

Using these conditions, the derivative of the Padé approximant evaluated in is given as

Using (14) in the second step of (9), they presented the following scheme:

where . This scheme is optimal in the sense of the Kung–Traub conjecture having an order of convergence of four with three functional evaluations per iteration, , and .
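The construction described above can be sketched in Python. Imposing the three interpolation conditions on a first-degree Padé approximant through $x_n$, $y_n$ and an auxiliary point $w_n = x_n + f(x_n)$ leads to the derivative estimate $f[x_n, y_n]\, f[y_n, w_n] / f[x_n, w_n]$. Since the displayed formulas are not reproduced in this text, this closed form, the symbol $w_n$, the function name and the test function below are a reconstruction under assumptions and should not be read as the exact scheme of [20].

```python
import math


def pade_derivative_free_solve(f, x0, tol=1e-12, max_iter=20):
    """Two-step derivative-free iteration (assumed form, see the lead-in).

    Step 1 (Steffensen): y = x - f(x)^2 / (f(w) - f(x)),  w = x + f(x)
    Step 2 (Newton-like): x_next = y - f(y) * f[x,w] / (f[x,y] * f[y,w])
    Uses three function evaluations per iteration: f(x), f(w), f(y).
    Returns (approximate root, iterations used).
    """
    x = x0
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x, k
        w = x + fx
        fw = f(w)
        if fw == fx:
            raise ZeroDivisionError("f[x, w] vanished; try another starting point")
        y = x - fx * fx / (fw - fx)
        fy = f(y)
        if fy == 0.0 or y == x or y == w:
            return y, k + 1
        dd_xw = (fw - fx) / (w - x)      # divided difference f[x, w]
        dd_xy = (fy - fx) / (y - x)      # divided difference f[x, y]
        dd_yw = (fw - fy) / (w - y)      # divided difference f[y, w]
        if dd_xy == 0.0 or dd_yw == 0.0:
            raise ZeroDivisionError("Pade derivative estimate vanished")
        x = y - fy * dd_xw / (dd_xy * dd_yw)
    return x, max_iter


if __name__ == "__main__":
    # Hypothetical test problem: cos(x) - x = 0 with x0 = 1
    root, iters = pade_derivative_free_solve(lambda x: math.cos(x) - x, 1.0)
    print(root, iters)
```

No derivative of $f$ is evaluated anywhere in the sketch; the second step only reuses the three function values from the first.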

Now, in order to extend the method with memory, we devise the idea of introducing two parameters and in (15) and we present a modification in this method as follows:

where .

This modified scheme yields the optimal order of convergence 4 having three functional evaluations per iteration, , and .

Next, we establish the convergence results for our proposed family without memory given by Equation (16).

Theorem 2.

Suppose that is a real function suitably differentiable in a domain D. If is a simple root of and an initial guess is sufficiently close to ξ, then the iterative method given by Equation (16), converges to ξ with convergence order having the following error relation,

where , ξ is a simple root of and

Proof.

Expanding about by the Taylor series, we have

Using Equation (17) in the first step of Equation (16), we have

In addition, the Taylor’s expansion of is

Using Equations (17)–(19), we have

Finally, putting Equation (20) into the second step of Equation (16), we obtain

which is the error equation for the proposed optimal scheme given by Equation (16) with a convergence order of four. This completes the proof. □

3. Iterative Method with Memory and Its Convergence Analysis

Now, we present an extension to the method given by Equation (16) by the inclusion of memory to improve the convergence order without the addition of any new functional evaluations.

It can be seen from the error relation given in Equation (21) that the order of convergence of the proposed family given by Equation (16) is 4 if and . Therefore, if and , then the order of convergence of our proposed family can be improved. These values cannot be used directly, however, because the values of and are not available in practice. Instead, we can use approximations calculated from already available information [21]. Hence, the main idea in constructing the methods with memory consists of the calculation of parameters and as the iteration proceeds by the formulae,

for Further, it is also assumed that the initial estimates and must be chosen before starting the iterations. Thus, we give an estimation for and given by

where and are Newton’s interpolating polynomials of the third- and fourth-degrees, respectively, which are set through the best available nodal points, for and for .
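The following Python sketch shows, in generic form, how a Newton interpolating polynomial through already available nodes can be built from divided differences and differentiated at a point. It does not reproduce the parameter formulae of this section: the function names and the sample nodes are hypothetical, and the final line shows only one choice often seen in the literature on Steffensen-type methods with memory (a parameter of the form $-1/N'(x)$), stated here as an assumption.

```python
def divided_differences(xs, fs):
    """Coefficients c_0..c_n of the Newton form through the points (xs[i], fs[i])."""
    coef = list(fs)
    n = len(xs)
    for j in range(1, n):
        for i in range(n - 1, j - 1, -1):
            coef[i] = (coef[i] - coef[i - 1]) / (xs[i] - xs[i - j])
    return coef


def newton_poly_with_derivative(xs, coef, t):
    """Evaluate N(t) and N'(t) by a Horner-type recurrence on the Newton form."""
    p = coef[-1]
    dp = 0.0
    for i in range(len(coef) - 2, -1, -1):
        dp = dp * (t - xs[i]) + p          # derivative update uses the old p
        p = p * (t - xs[i]) + coef[i]
    return p, dp


if __name__ == "__main__":
    # Hypothetical nodes: four points of f(x) = x^3 give an exact cubic N_3
    nodes = [0.0, 1.0, 2.0, 4.0]
    values = [x**3 for x in nodes]
    c = divided_differences(nodes, values)
    N, dN = newton_poly_with_derivative(nodes, c, 3.0)
    gamma = -1.0 / dN                      # assumed form of a self-accelerating parameter
    print(N, dN, gamma)
```

Because the nodes are values the iteration has already computed, building the polynomial costs arithmetic only and no new function evaluations.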

Thus, by replacing by and by in the method given by Equation (16), we obtain a new family with memory as follows:

Next, we establish the convergence results for our proposed family with memory given by Equation (23).

Theorem 3.

Suppose that is a real function suitably differentiable in a domain D. If is a simple root of and an initial guess is sufficiently close to ξ, then the iterative method given by Equation (23) converges to ξ with a convergence order of at least 7.

Proof.

Let be a sequence of approximations generated by an iterative method . If this sequence converges to zero of f with the R-order of , then we can write

where tends to the asymptotic error constant of , when . Thus,

Let the iterative sequences and have R-orders and , respectively. Therefore, we obtain

and

Using (26), (27) and a lemma stated in [13], we obtain

In view of our proposed family of methods without memory given by Equation (16), we have the following error relations,

where .

According to the error relations given by Equations (29)–(31) with self-accelerating parameters, and , we can write the corresponding error relations for the methods given by Equation (23) with memory as follows:

where depending on iteration index since and are re-calculated in each step. Now using Equations (28) and (32)–(34), we obtain the following relations:

Now, comparing the error exponents of on the right-hand side of the pairs given by Equations (26) with (35), (27) with (36) and (25) with (37), respectively, we obtain the following system of equations:

Solving this system of equations, we obtain a non-trivial solution as . Hence, we can conclude that the lower bound of the R-order of our proposed family with memory given by Equation (23) is seven. This completes our proof. □

4. Numerical Results

In this section, the numerical results of our proposed scheme are examined and compared with those of some existing schemes, both with and without memory. All calculations were performed in Mathematica 11.1 using multiple-precision arithmetic on a machine with an Intel(R) Core(TM) i5-1035G1 CPU @ 1.00 GHz 1.20 GHz processor and a 64-bit Windows 11 operating system. We suppose that the initial values of (or ) and (or ) are selected prior to performing the iterations and that a suitable is given.

The functions used for our computations are given in Table 1.

Table 1.

Test functions along with their roots and initial guesses taken.

To check the theoretical order of convergence, the computational order of convergence (COC) [22] is calculated using the following formula,

considering the last three approximations in the iterative procedure. The errors of approximations to the respective zeros of the test functions, and COC are displayed in Table 2 and Table 3.
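A COC estimate from the last three approximations can be sketched as follows. The formula itself is not reproduced in this text, so the sketch assumes one commonly used variant based on residuals, $\ln|f(x_{n})/f(x_{n-1})| \,/\, \ln|f(x_{n-1})/f(x_{n-2})|$; the function name `coc` and the Newton iterates used as sample data are hypothetical. In double precision only a few iterates are usable, which is one reason multiple-precision arithmetic is used for high-order methods.

```python
import math


def coc(f, approximations):
    """Computational order of convergence from the last three approximations.

    Assumed variant: ln|f(x_n)/f(x_{n-1})| / ln|f(x_{n-1})/f(x_{n-2})|.
    """
    x_a, x_b, x_c = approximations[-3:]
    return math.log(abs(f(x_c) / f(x_b))) / math.log(abs(f(x_b) / f(x_a)))


def newton_iterates(f, df, x0, steps):
    """Sample data only: a list [x_0, ..., x_steps] of Newton iterates."""
    xs = [x0]
    for _ in range(steps):
        xs.append(xs[-1] - f(xs[-1]) / df(xs[-1]))
    return xs


if __name__ == "__main__":
    f = lambda x: x * x - 2.0
    xs = newton_iterates(f, lambda x: 2.0 * x, 1.5, 3)
    print(coc(f, xs))   # close to 2 for Newton's method
```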

Table 2.

Comparison of the different methods without memory.

Table 3.

Comparison of the different methods with memory.

We consider the following existing methods for the comparisons:

Soleymani et al. method () without memory [23]:

Cordero et al. method () without memory [20]:

Chun method () without memory [24]:

Cordero et al. method () with memory [25]:

where and are as defined in Section 3.

Furthermore, we consider some real-life problems, which are as follows:

Example 1.

Fractional conversion in a chemical reactor [27],

Here, x denotes the fractional conversion of quantities in a chemical reactor. If x is less than zero or greater than one, then the above fractional conversion will be of no physical meaning. Hence, x is taken to be bounded in the region . Moreover, the desired root is .

Example 2.

The path traversed by an electron in the air gap between two parallel plates considering the multi-factor effect is given by

where and are the position and velocity of the electron at time , m and are the mass and charge of the electron at rest and is the RF electric field between the plates. If particular parameters are chosen, Equation (45) can be simplified as

The desired root of Equation (46) is .

We also implemented our proposed schemes given by Equations (16) and (23) on the above-mentioned problems. Table 4 and Table 5 demonstrate the corresponding results. Further, Table 2 demonstrates COC for our proposed method without memory () given by Equation (16), the method given by Equation (39) denoted as , the method given by Equation (40) denoted as , and the method given by Equation (41) denoted as , respectively. Table 3 demonstrates COC for our proposed method with memory () given by Equation (23), the method given by Equation (42) denoted as , and the method given by Equation (43) by taking denoted as and denoted by , respectively.

Table 4.

Comparison of the different methods without memory for real-life problems.

Table 5.

Comparison of the different methods with memory for real-life problems.

It can be seen from Table 2 and Table 3 that for the function , fails to provide a solution and requires more than three iterations to converge to the root. Furthermore, converges to the desired root with an error of approximations much lower than and . For the function , , and fail to provide a solution and and do not converge to the desired solution within three iterations. has a somewhat complex structure, and as a consequence takes more time than our method in most of the cases to converge to the root. Furthermore, and converge to the root taking more time than and , respectively. has a drawback of its derivative, so it will not work on points at which the function is zero or close to zero.

Furthermore, for functions , and , the proposed methods and converge to the required root with minimum error compared to the existing methods.

Hence, we can conclude that our methods work on several functions to obtain roots, whereas the existing methods have some limitations.

Remark 1.

The proposed schemes given by Equations (16) and (23) have been compared with some existing methods. The computational results in Tables 2–5 show that our proposed schemes give results in many cases where the existing methods fail, in terms of both COC and errors. Our methods also display a noticeable decrease in approximation errors, as shown in these tables.

5. Basins of Attraction

The basins of attraction of the root of is the set of all initial points in the complex plane that converge to on the application of the given iterative scheme. Our objective is to make use of the basins of attraction to examine the comparison of several root-finding iterative methods in the complex plane in terms of convergence and stability.

On this front, we take a grid of the rectangle . A colour is assigned to each point on the basis of the convergence of the corresponding method starting from to the simple root and if the method diverges, a black colour is assigned to that point. Thus, distinct colours are assigned to the distinct roots of the corresponding problem. It was decided that an initial point converges to a root when . Then, point is said to belong to the basins of attraction of . Likewise, the method beginning from the initial point is said to diverge if no root is located in a maximum of 25 iterations. We have used MATLAB R2022a software [28] to draw the presented basins of attraction.

Furthermore, Table 6 lists the average number of iterations denoted by Avg_Iter and the percentage of non-converging points denoted by of the methods to generate the basins of attraction.
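The procedure for generating a basin picture and the two summary quantities can be sketched in Python. Only the limit of 25 iterations is taken from the text; the rectangle $[-2, 2] \times [-2, 2]$, the grid size, the tolerance `1e-3`, the test polynomial $z^3 - 1$ and the use of Newton's method as a stand-in iteration are all assumptions made for illustration. The sketch also assumes that Avg_Iter is averaged over all grid points, with non-converging points counted as 25 iterations.

```python
import cmath

# Hypothetical test problem: the three roots of z^3 - 1
ROOTS = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]


def newton_step(z):
    """Stand-in iteration (Newton's method) for z^3 - 1; replace with any scheme."""
    return z - (z ** 3 - 1) / (3 * z ** 2)


def basins(step, roots, lo=-2.0, hi=2.0, n=101, tol=1e-3, max_iter=25):
    """Label each grid point by the root it reaches (-1 = no convergence).

    Returns (labels, average iterations per point, percentage of
    non-converging points).
    """
    labels, iter_sum, failures = [], 0, 0
    for i in range(n):
        row = []
        for j in range(n):
            z = complex(lo + (hi - lo) * j / (n - 1), lo + (hi - lo) * i / (n - 1))
            label, used = -1, max_iter
            for k in range(1, max_iter + 1):
                try:
                    z = step(z)
                except (ZeroDivisionError, OverflowError):
                    break                      # treated as divergence (black point)
                hits = [r for r, root in enumerate(roots) if abs(z - root) < tol]
                if hits:
                    label, used = hits[0], k
                    break
            row.append(label)
            iter_sum += used
            failures += label < 0
        labels.append(row)
    total = n * n
    return labels, iter_sum / total, 100.0 * failures / total


if __name__ == "__main__":
    _, avg_iter, pct_fail = basins(newton_step, ROOTS, n=41)
    print(avg_iter, pct_fail)
```

Each label can then be mapped to a colour, with -1 drawn in black, to obtain a picture of the kind described above.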

Table 6.

Comparison of the different methods without and with memory in terms of Avg_Iter and .

To carry out the desired comparisons, we considered the test problems given below:

Problem 1.

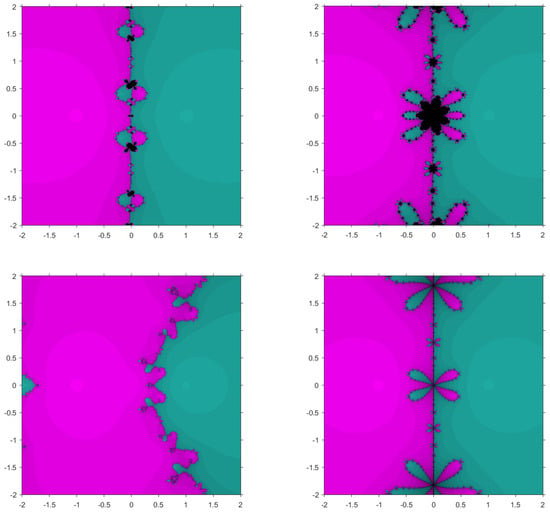

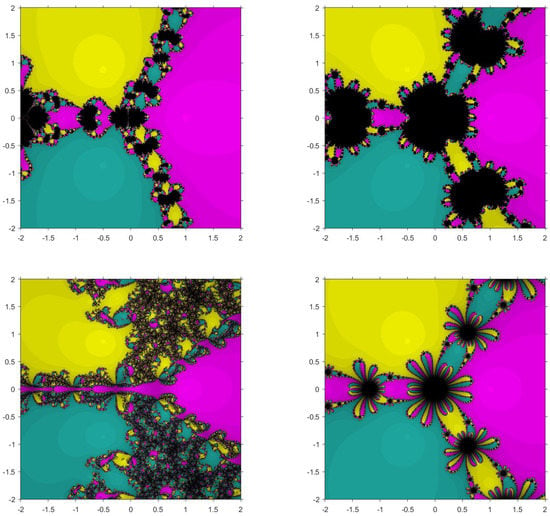

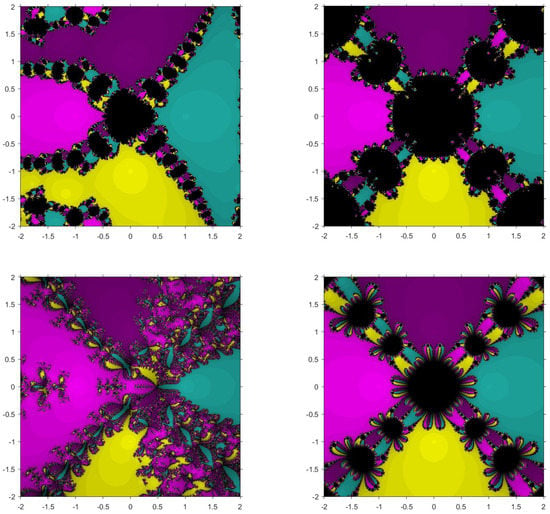

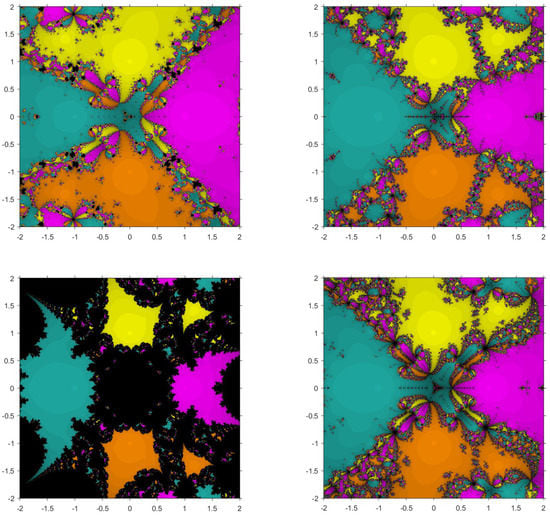

The first function considered is . The roots of this function are 1 and . The basins corresponding to our proposed method and the existing methods are shown in Figure 1 and Figure 2. From Table 6, it can be seen that the proposed methods, and converge to the root in fewer iterations. Furthermore, from the figures, it is observed that converges to the root with no diverging points but the existing methods have some points painted as black. , in particular has very small basins.

Figure 1.

Basins of attraction for , , , and , respectively, for .

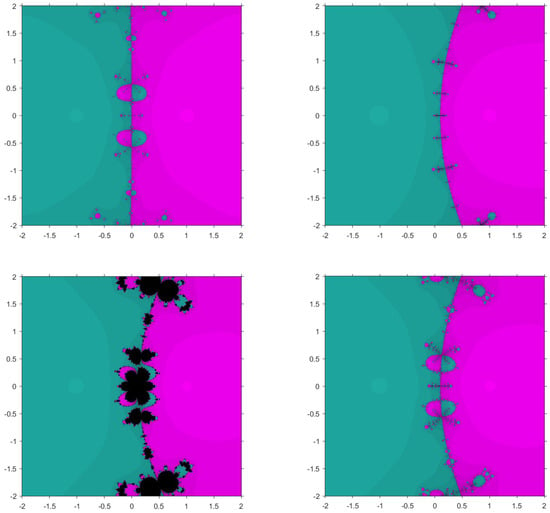

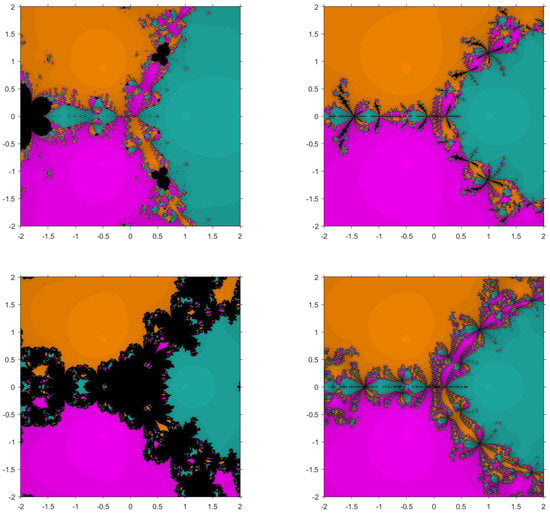

Figure 2.

Basins of attraction for , , , and , respectively, for .

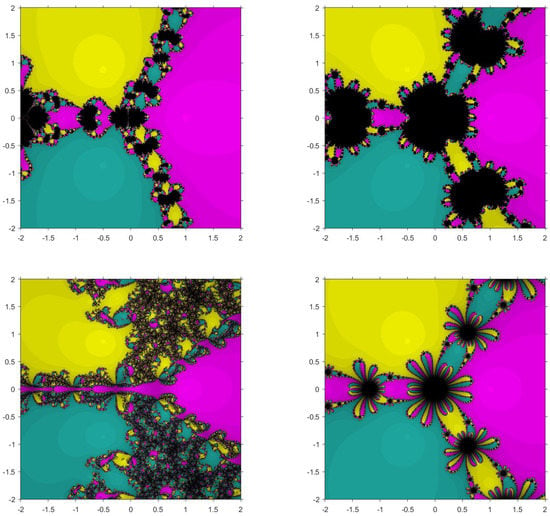

Problem 2.

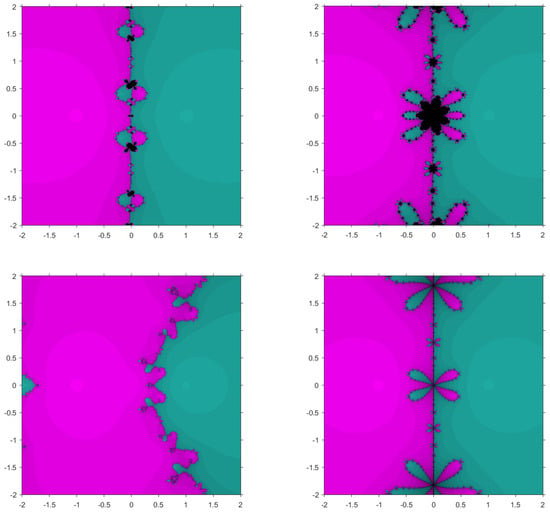

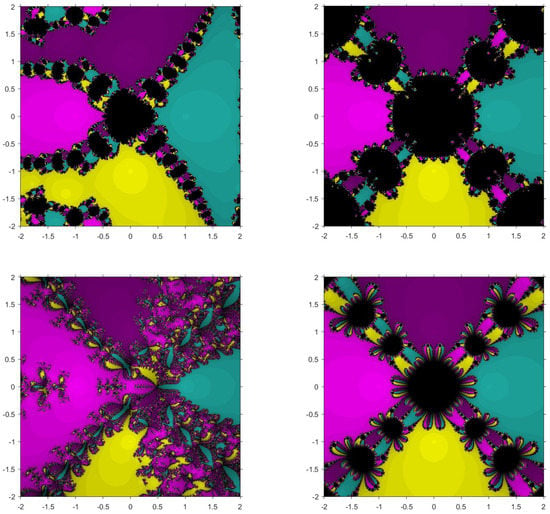

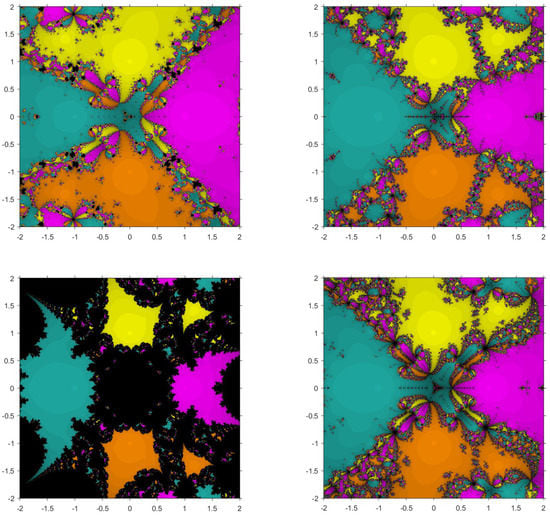

The second function taken is with roots , and . Figure 3 and Figure 4 show the basins for in which it can be seen that , and have wider regions of divergence. Moreover, the average number of iterations taken by the proposed methods is less in each case compared to the existing methods.

Figure 3.

Basins of attraction for , , , and , respectively, for .

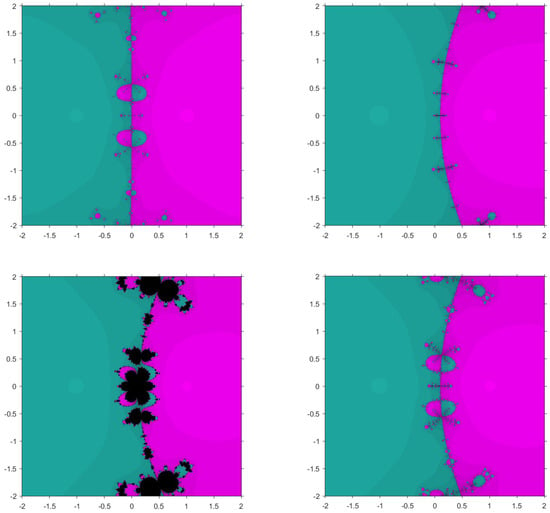

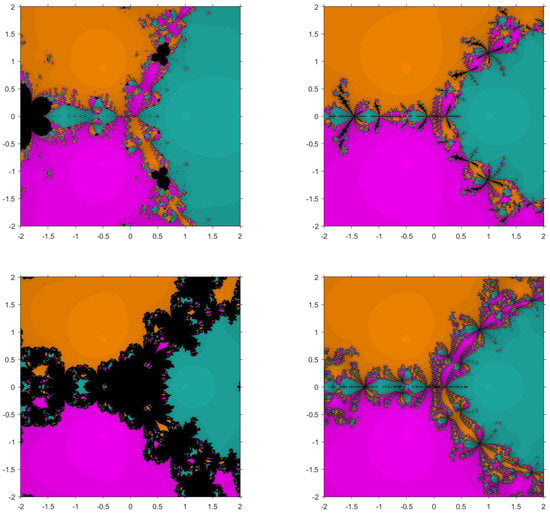

Figure 4.

Basins of attraction for , , , and , respectively, for .

Problem 3.

The third function considered is with roots and . Figure 5 and Figure 6 show that , and have smaller basins. Although and have some diverging points, they converge in fewer iterations than the existing methods.

Figure 5.

Basins of attraction for , , , and , respectively, for .

Figure 6.

Basins of attraction for , , , and , respectively, for .

From Figures 1–6, it can be observed that has larger basins in comparison to and in all cases. The basins for are very small in comparison to in all cases. In addition, from Table 6, we observe that the average number of iterations taken by the methods is more than and for , the iterations required are more than .

Remark 3.

One can see from Figures 1–6 and Table 6 that our proposed methods have larger basins of attraction in comparison to the existing ones. In addition, there is a marginal increase in the average number of iterations per point of the existing methods. Consequently, with our proposed methods, the chances of non-convergence to the root are lower than with the existing methods.

6. Conclusions

We have proposed a new fourth-order optimal method without memory. In order to increase the order of convergence, we have extended the proposed method without memory to a method with memory by means of two self-accelerating parameters, without the addition of any new functional evaluations. Consequently, the order of convergence increased from four to seven. Computational results demonstrate that the proposed methods converge to the root at a higher rate in comparison to other methods of the same order at the considered point. In addition, our proposed schemes give results in many of the cases where the existing methods fail in terms of COC and errors. Moreover, we have also presented the basins of attraction for the proposed method as well as some existing methods, which indicate that the chances of non-convergence to the root are much lower for our proposed methods than for the existing methods.

Author Contributions

M.K.: Conceptualization; methodology; validation; H.S.: writing—original draft preparation; M.K. and R.B.: writing—review and editing, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, Saudi Arabia, under grant no. (KEP-MSc-58-130-43). The authors, therefore, acknowledge with thanks DSR for technical and financial support.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to sincerely thank the reviewers for their valuable suggestions, which significantly improved the readability of the paper. The second author gratefully acknowledges technical support from the Seed Money Project (TU/DORSP/57/7290) to support this research work of TIET, Punjab.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Argyros, I.K.; Kansal, M.; Kanwar, V.; Bajaj, S. Higher-order derivative-free families of Chebyshev-Halley type methods with or without memory for solving nonlinear equations. Appl. Math. Comput. 2017, 315, 224–245.

2. Jain, P.; Chand, P.B. Derivative free iterative methods with memory having R-order of convergence. Int. J. Nonlinear Sci. Numer. Simul. 2020, 21, 641–648.

3. Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

4. Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

5. Kung, H.T.; Traub, J.F. Optimal order of one-point and multi-point iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

6. Kou, J.; Li, Y.; Wang, X. A composite fourth-order iterative method for solving non-linear equations. Appl. Math. Comput. 2007, 184, 471–475.

7. Kansal, M.; Kanwar, V.; Bhatia, S. New modifications of Hansen-Patrick’s family with optimal fourth and eighth orders of convergence. Appl. Math. Comput. 2015, 269, 507–519.

8. Soleymani, F. Novel computational iterative methods with optimal order for nonlinear equations. Adv. Numer. Anal. 2011, 2011, 270903.

9. Chun, C.; Lee, M.Y.; Neta, B.; Džunić, J. On optimal fourth-order iterative methods free from second derivative and their dynamics. Appl. Math. Comput. 2012, 218, 6427–6438.

10. Zheng, Q.; Li, J.; Huang, F. An optimal Steffensen-type family for solving nonlinear equations. Appl. Math. Comput. 2011, 217, 9592–9597.

11. Chicharro, F.I.; Cordero, A.; Torregrosa, J.R.; Vassileva, M.P. King-type derivative-free iterative families: Real and memory dynamics. Complexity 2017, 2017, 2713145.

12. Sharifi, S.; Siegmund, S.; Salimi, M. Solving nonlinear equations by a derivative-free form of the King’s family with memory. Calcolo 2016, 53, 201–215.

13. Kansal, M.; Kanwar, V.; Bhatia, S. Efficient derivative-free variants of Hansen-Patrick’s family with memory for solving nonlinear equations. Numer. Algorithms 2016, 73, 1017–1036.

14. Jagan, K.; Sivasankaran, S. Soret & Dufour and triple stratification effect on MHD flow with velocity slip towards a stretching cylinder. Math. Comput. Appl. 2022, 27, 25.

15. Qasem, S.A.; Sivasankaran, S.; Siri, Z.; Othman, W.A. Effect of thermal radiation on natural convection of a nanofluid in a square cavity with a solid body. Therm. Sci. 2021, 25, 1949–1961.

16. Asmadi, M.S.; Kasmani, R.; Siri, Z.; Sivasankaran, S. Upper-Convected Maxwell fluid analysis over a horizontal wedge using Cattaneo-Christov heat flux model. Therm. Sci. 2021, 25, 1013–1021.

17. Kasmani, R.M.; Sivasankaran, S.; Bhuvaneswari, M.; Siri, Z. Effect of chemical reaction on convective heat transfer of boundary layer flow in nanofluid over a wedge with heat generation/absorption and suction. J. Appl. Fluid Mech. 2015, 9, 379–388.

18. Ardelean, G. A comparison between iterative methods by using the basins of attraction. Appl. Math. Comput. 2011, 218, 88–95.

19. Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

20. Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A new technique to obtain derivative-free optimal iterative methods for solving nonlinear equations. J. Comput. Appl. Math. 2013, 252, 95–102.

21. Soleymani, F.; Lotfi, T.; Tavakoli, E.; Haghani, F.K. Several iterative methods with memory using self-accelerators. Appl. Math. Comput. 2015, 254, 452–458.

22. Jay, L.O. A note on Q-order of convergence. BIT Numer. Math. 2001, 41, 422–429.

23. Soleymani, F.; Sharma, R.; Li, X.; Tohidi, E. An optimized derivative-free form of the Potra-Pták method. Math. Comput. Modell. 2012, 56, 97–104.

24. Chun, C. Some variants of King’s fourth-order family of methods for nonlinear equations. Appl. Math. Comput. 2007, 190, 57–62.

25. Cordero, A.; Lotfi, T.; Bakhtiari, P.; Torregrosa, J.R. An efficient two-parametric family with memory for nonlinear equations. Numer. Algorithms 2015, 68, 323–335.

26. Džunić, J. On efficient two-parameter methods for solving nonlinear equations. Numer. Algorithms 2013, 63, 549–569.

27. Shacham, M. Numerical solution of constrained nonlinear algebraic equations. Int. J. Numer. Methods Eng. 1986, 23, 1455–1481.

28. Zachary, J.L. Introduction to Scientific Programming: Computational Problem Solving Using Maple and C; Springer: New York, NY, USA, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).