Abstract

Barrett’s esophagus (BE) represents a pre-malignant condition characterized by abnormal cellular proliferation in the distal esophagus. A timely and accurate diagnosis of BE is imperative to prevent its progression to esophageal adenocarcinoma, a malignancy associated with a significantly reduced survival rate. In this digital age, deep learning (DL) has emerged as a powerful tool for medical image analysis and diagnostic applications, showcasing vast potential across various medical disciplines. In this comprehensive review, we meticulously assess 33 primary studies employing varied DL techniques, predominantly featuring convolutional neural networks (CNNs), for the diagnosis and understanding of BE. Our primary focus revolves around evaluating the current applications of DL in BE diagnosis, encompassing tasks such as image segmentation and classification, as well as their potential impact and implications in real-world clinical settings. While the applications of DL in BE diagnosis exhibit promising results, they are not without challenges, such as dataset issues and the “black box” nature of models. We discuss these challenges in the concluding section. Essentially, while DL holds tremendous potential to revolutionize BE diagnosis, addressing these challenges is paramount to harnessing its full capacity and ensuring its widespread application in clinical practice.

1. Introduction

Barrett’s esophagus (BE) is a pathological condition in which the squamous epithelium of the lower esophagus is replaced by intestinal-type columnar epithelium [1,2]. It is often considered a pre-cancerous change for esophageal adenocarcinoma (EAC), with an annual progression rate from BE to EAC estimated at 0.12–0.13% [3,4]. Early detection and intervention for BE or EAC can result in a five-year survival rate of up to 80%, whereas in the late stages it drops to only 13% [5,6]. Currently, the diagnosis of BE depends primarily on pathological examination of biopsies taken under endoscopy. However, even for experienced endoscopists and pathologists working with good equipment, early-stage BE lesions pose a considerable diagnostic challenge [7,8]. The Seattle Biopsy Protocol is commonly recommended for handling abnormalities detected during endoscopic inspection. Although this protocol suggests four biopsies for every 1 cm, a diagnosis may still be missed because of insufficient sample volume [9,10].

Deep learning (DL) has fundamentally changed our approach to analyzing and understanding visual information in recent years. As a subfield of machine learning, the essence of deep learning lies in utilizing neural network models to learn and abstract features from large amounts of data [11,12]. In particular, deep learning has achieved significant breakthroughs in image recognition, analysis, and processing, surpassing human expert levels in specific tasks [13]. In medical image diagnosis, deep learning models can learn typical pathological features from many images, thus potentially identifying BE lesions at an early stage, even those that are minuscule and difficult for the human eye to detect [12,14,15]. Through such means, deep learning may significantly enhance the early diagnosis rate of BE, improving patient prognosis and quality of life.

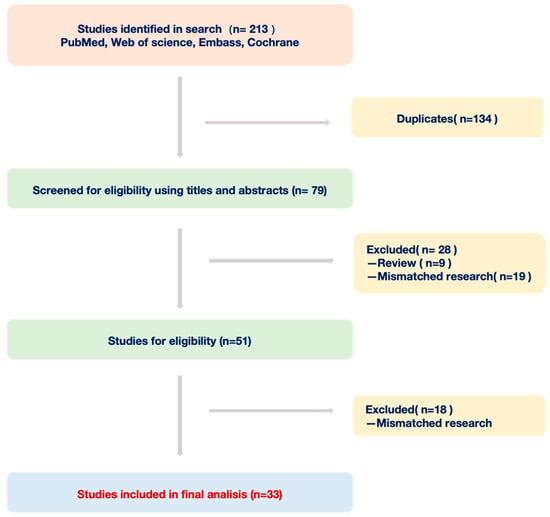

In this review, we combined subject-heading and free-text searches to identify pertinent studies on deep learning and Barrett’s esophagus. Our primary data sources were four public databases: PubMed, Embase, Web of Science, and Cochrane Library. We assessed the literature from the past decade against a predefined set of inclusion and exclusion criteria. Inclusion criteria: (1) studies primarily addressing the application of deep learning in Barrett’s esophagus diagnosis; (2) articles presenting original data and specific research findings; (3) publications within the last ten years. Exclusion criteria: (1) duplicates or multiple versions of the same study; (2) commentaries, expert opinions, case reports, or any non-original research articles; (3) studies not directly relevant to deep learning or Barrett’s esophagus. Following this screening process, we narrowed an initial 213 articles down to 33 original studies for in-depth analysis (Figure 1). Through this review, we summarize the current applications of deep learning in BE diagnosis and discuss its prospects in future clinical settings.

Figure 1.

Workflow of documents from four databases.

2. Application of Deep Learning to Assist Endoscopic Diagnosis

Endoscopy plays a pivotal role in diagnosing BE, enabling gastroenterologists to view the entire inner wall of the esophagus and to sample specific tissues for pathological examination as needed. This review includes 21 original studies that used endoscopic images or videos to construct deep neural networks to aid diagnosis (Table 1).

Table 1.

Twenty-one studies on deep learning-assisted endoscopic diagnosis of BE.

Diagnosing diseases first considers the distinction between the pathological site and the surrounding normal tissues. Liu, G., et al. constructed and verified a convolutional neural network (CNN) with two subnetworks using 1272 white-light endoscopic images from a single-center retrospective study, completing the classification task of normal vs. pre-cancer vs. cancer. The final model performance showed an accuracy of 85.83%, a sensitivity of 94.23%, and a specificity of 94.67%. One subnetwork was designed to extract color and global features. In contrast, the other extracted texture and detailed features to increase the model’s interpretability [25]. Leandro A. Passos and his team used the infinite restricted Boltzmann machines to classify esophageal lesions (BE) from other conditions based on the publicly available endoscopy images from the “MICCAI 2015 EndoVis Challenge” dataset. In this study, the maximum accuracy reached 67% [19]. In theory, video provides more dimensional information than images, potentially enhancing prediction effectiveness. Pulido, J.V., et al. retrospectively collected 1057 probe-based confocal laser endomicroscopy (pCLE) videos from 78 patients and constructed models based on AttnPooling and MultiAttnPooling architecture, completing the classification task of normal vs. non-dysplastic BE (NDBE) vs. dysplastic BE/cancer. The final model performance for AttnPooling showed a sensitivity of 90% and a specificity of 88%, whereas for MultiAttnPooling, the sensitivity and specificity were 92% and 84%, respectively [28]. Van der Putten J. and colleagues discovered that during the process of creating endoscopic videos, there might be some invalid frames due to various reasons, affecting lesion detection. Therefore, a method was proposed that initializes frame-based classification and then employs the Hidden Markov Model to incorporate temporal information, enhancing the sensitivity of the classification by 10% [20].
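The idea of refining frame-based predictions with temporal information can be illustrated with a small sketch. The code below is not the method of [20]; it is a generic two-state hidden Markov model decoded with the Viterbi algorithm, written under several assumptions: the states are 0 (no lesion) and 1 (lesion), the per-frame classifier probabilities are used directly as emission scores, and the function name `viterbi_smooth` and the parameters `stay_prob` and `prior` are hypothetical.

```python
import math


def viterbi_smooth(frame_probs, stay_prob=0.9, prior=0.5):
    """Return the most likely 0/1 label sequence for per-frame lesion probabilities.

    frame_probs: classifier outputs P(lesion) for consecutive video frames.
    stay_prob:   assumed probability that the label does not change between frames.
    prior:       assumed probability that the first frame shows a lesion.
    """
    if not frame_probs:
        return []
    eps = 1e-9
    log_stay, log_switch = math.log(stay_prob), math.log(1.0 - stay_prob)
    log_trans = [[log_stay, log_switch], [log_switch, log_stay]]

    def log_emit(p):
        # Clip to avoid log(0); index 0 = no lesion, index 1 = lesion.
        p = min(max(p, eps), 1.0 - eps)
        return [math.log(1.0 - p), math.log(p)]

    emit = log_emit(frame_probs[0])
    score = [math.log(1.0 - prior) + emit[0], math.log(prior) + emit[1]]
    back = []  # back[t][s] = best predecessor of state s at frame t + 1
    for p in frame_probs[1:]:
        emit = log_emit(p)
        new_score, pointers = [], []
        for s in (0, 1):
            candidates = [score[r] + log_trans[r][s] for r in (0, 1)]
            best = 0 if candidates[0] >= candidates[1] else 1
            pointers.append(best)
            new_score.append(candidates[best] + emit[s])
        score = new_score
        back.append(pointers)

    # Trace the best path backwards from the final frame.
    state = 0 if score[0] >= score[1] else 1
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path
```

With a high `stay_prob`, a single low-confidence frame inside a run of confident lesion frames is relabeled to match its neighbors, which is the kind of correction that temporal modeling is meant to provide for invalid or blurred frames.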

Apart from distinguishing normal tissues from BE, some researchers have also paid attention to differentiating esophagitis from BE. Kumar, A.C., et al. attempted combinations of five CNN architectures and six classifiers using 1663 endoscopic images from public databases. The best-performing model was a fine-tuned ResNet50 with transfer learning, achieving an AUC of 0.962 [35]. Additionally, Villagrana-Bañuelos, K.E., et al. subdivided esophagitis and accomplished a four-classification task: normal vs. BE vs. esophagitis-a vs. esophagitis-b-d. They used 1561 endoscopic images from public databases to construct a model based on the VGG architecture, with the final model’s AUC being normal: 0.95, BE: 0.96, esophagitis-a: 0.86, and esophagitis-b-d: 0.83 [36].

Since BE is a known precursor to EAC, the transition from BE to EAC is a clinical concern. Ebigbo, A., et al. utilized 248 endoscopic images from a public database to classify BE and EAC. Using the ResNet model, they achieved a sensitivity of 97% and a specificity of 88% on the Augsburg dataset, and a sensitivity of 92% and a specificity of 100% on the MICCAI dataset [18]. Ghatwary, N., et al. aimed to build an object detection model based on endoscopic images. After trials of multiple models, they chose the VGG-based single-shot multibox detector as the prediction model. The model was trained on endoscopic images from a public database of 100 cases, completing the BE vs. EAC classification task and achieving a sensitivity of 96% and a specificity of 92% [22]. Hou, W., et al. proposed an end-to-end network with an attentive hierarchical aggregation module and a self-distillation mechanism, achieving an AUC of 0.9629. This model enhances classification performance without sacrificing inference speed, thereby achieving real-time inference [31]. Additionally, de Souza, L.A., Jr., et al. constructed a model for the same task using a convolutional neural network combined with a generative adversarial network (GAN). Their choice of GANs was driven by the limited size of medical imaging datasets and the potential of GANs for data augmentation, especially for generating synthetic yet realistic medical images. This approach achieved an accuracy of 90% for the patch-based method and 85% for the image-based approach [24]. Explainable artificial intelligence, which traces how a deep learning model operates and offers insight into why its outputs are correct or wrong, remains an ongoing pursuit. De Souza, L.A., Jr., et al. used five different explanation techniques (saliency, guided backpropagation, integrated gradients, input × gradients, and DeepLIFT) to analyze four commonly used deep neural networks (AlexNet, SqueezeNet, ResNet50, and VGG16) built for BE vs. EAC classification tasks through endoscopic images, demonstrating the correlation between computational learning and expert insights [33].
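Two of the explanation techniques named above, saliency and input × gradients, can be illustrated on a model small enough to differentiate by hand. The sketch below is an assumption-laden toy, not the setup of [33]: it uses a logistic model p = sigmoid(w·x + b) in place of a deep network, and the function name `explain_logistic` is hypothetical. For this model the gradient of p with respect to each input feature is p(1 − p)·w_i.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def explain_logistic(x, w, b):
    """Return (prediction, saliency, input_x_gradient) for a logistic model.

    saliency[i]         = |d p / d x_i|       (how sensitive p is to feature i)
    input_x_gradient[i] = x_i * d p / d x_i   (sensitivity weighted by the input)
    """
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = sigmoid(z)
    # Analytic gradient of the sigmoid output with respect to each input.
    grad = [p * (1.0 - p) * wi for wi in w]
    saliency = [abs(g) for g in grad]
    input_x_gradient = [xi * g for xi, g in zip(x, grad)]
    return p, saliency, input_x_gradient


# Toy example: the second feature has the largest weight but is zero in this input.
p, sal, ixg = explain_logistic(x=[1.0, 0.0, 2.0], w=[0.5, -1.0, 0.25], b=-1.0)
```

In this toy input the second feature has the highest saliency yet an input × gradient attribution of zero, because the feature is absent from the input. The two techniques can therefore rank features differently, which is one reason for comparing several explanation methods side by side.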

Within the subcategories of BE, several researchers have modeled the presence or absence of dysplasia. Van der Putten, J., et al. collected prospective data sets from three centers. They constructed a model using the ResNet architecture with 40 endoscopic images, thereby completing the classification task of neoplastic BE vs. NDBE. The model achieved a final accuracy of 98%. Its innovation lies in the fact that the deep learning model can assist clinicians in determining the optimal location for pathological biopsies [21]. The team published another phased model for the same problem in the same year. In the first phase, the extraction of features was completed through an editor structure, and in the second phase, the classification task of NDBE vs. dysplastic BE was achieved through a ResNet-based structure. They explored the effect of pre-training on model performance and which dataset would provide the best performance when used for pre-training. The results indicated that pre-training based on the GastroNet dataset yielded the best performance, with an AUC reaching 0.91 (the other two were 0.82 and 0.90, respectively) [23]. The following year, van der Putten, J., et al. incorporated five datasets for modeling, aiming to address this problem better. The T1 dataset contained 494,355 gastrointestinal organ images for pre-training; the T2 dataset consisted of 1247 endoscopic images for model training; the T3 dataset, containing 297 endoscopic images, was used for model parameter adjustment; and finally, the T4 and T5 datasets, together composed of 160 endoscopic images, served as an external test set to evaluate model performance.
The multi-stage model they constructed completed the segmentation task using a U-Net architecture and the classification task using a ResNet architecture, ultimately achieving an accuracy, sensitivity, and specificity of 90% [27]. The ultimate purpose of model construction is to serve clinical practice. De Groof AJ and others built a classification model for NDBE vs. neoplastic BE based on the ResNet/U-Net architecture model. They validated it using a prospective, multicenter dataset, achieving an accuracy of 90%, sensitivity of 91%, and specificity of 89%. Worth mentioning is that this team attempted to apply the model to clinical practice with 20 patients after its construction to demonstrate the consistency of computer-assisted detection (CAD) predictions and diagnoses [26]. Jisu, H., et al. conducted a study based on 262 endoscopic images targeting subtypes with BE. Using a conventional CNN model, they accomplished a tri-classification task of intestinal metaplasia, gastric metaplasia, and neoplasia, achieving an accuracy of 80.77% [16].

Compared to standard endoscopy, narrow-band imaging (NBI) endoscopy enhances the visualization of the mucosa and vasculature [37,38,39,40]. Struyvenberg, M.R., et al. initially conducted pre-training using the GastroNet database. Subsequently, they trained using another dataset composed of 1247 white-light endoscopic images. Following this, they employed the third dataset containing NBI endoscopic images, undertaking additional training and validation within an internal center. Lastly, they used the fourth dataset, composed of NBI videos, for external validation. When performing the classification task of neoplastic BE vs. NDBE, the prediction results for image information were a sensitivity of 88% and a specificity of 78%. For video information, the prediction results were a sensitivity of 85% and a specificity of 83% [29]. Additionally, Kusters, C.H.J., et al., based on the EfficientNet-b4 architecture, performed predictions for NBI data using datasets from seven centers. They conducted training on image data and validated and tested it on video data. For completing the neoplastic BE vs. NDBE classification task, the highest achieved AUC value was 0.985 [34].

Determining the extent of BE lesions is crucial for diagnosis, treatment monitoring, and prognosis prediction [41,42]. Pan, W., et al., based on the architecture of fully convolutional networks, constructed a model to accomplish the segmentation task of delineating the boundary between BE and normal esophageal epithelium, utilizing 443 endoscopic images from a single-center prospective study. The performance of their model was evaluated by the intersection over union: 0.56 (at the gastroesophageal junction) and 0.82 (at the squamous-columnar junction) [30]. De Groof J. and others conducted esophageal lesion boundary detection using 40 endoscopic images. They used a pre-trained Inception v3 to build the model, and the final segmentation score was 47.5%. Although the performance was not as good as that of human experts, this study focused on the detection of esophageal lesion boundaries [17]. Furthermore, Ali, S., et al. created an automated model for the quantification and segmentation of BE using endoscopic images and videos, with an accuracy of 98.4% [32].
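The intersection over union used to evaluate segmentation models, together with the closely related Dice coefficient, can be computed from a predicted mask and a reference mask. The following is a minimal sketch assuming binary masks stored as nested lists of 0/1 values; the function name `iou_and_dice` and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def iou_and_dice(pred_mask, true_mask):
    """Compute intersection over union and Dice coefficient for two binary masks.

    Both masks are lists of rows with values 0 (background) or 1 (lesion)
    and are assumed to have the same shape.
    """
    intersection = union = pred_sum = true_sum = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            p, t = bool(p), bool(t)
            intersection += p and t   # pixel marked in both masks
            union += p or t           # pixel marked in at least one mask
            pred_sum += p
            true_sum += t
    # Assumed convention: two empty masks count as a perfect match.
    iou = intersection / union if union else 1.0
    total = pred_sum + true_sum
    dice = 2 * intersection / total if total else 1.0
    return iou, dice


pred = [[1, 1, 0],
        [0, 1, 0]]
true = [[1, 0, 0],
        [0, 1, 1]]
iou, dice = iou_and_dice(pred, true)  # 2 shared pixels, 4 in the union
```

For any pair of masks the two scores are linked by Dice = 2·IoU / (1 + IoU), so Dice is never lower than IoU. This matters when comparing studies that report different metrics.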

These studies employ deep learning technologies based on endoscopic images or video information to diagnose BE. They primarily cover three key areas: disease classification, severity grading of disease, and lesion segmentation. They provide auxiliary diagnostic means with significant potential for clinical application.

3. Applications of Deep Learning to Assist Pathological Diagnosis

With the rapid advancements in digitization and artificial intelligence, digital pathology has emerged as an integral component of modern medicine, providing clinicians with a more precise and quicker method of diagnosis [43,44,45,46]. At its core, digital pathology utilizes complex algorithms and big data to analyze images of pathological tissues, aiming to identify and assess lesions. In the diagnosis of BE, digital pathology is increasingly prevalent, with a series of research studies deeply exploring this application [47,48]. The primary objective of these studies is to leverage deep learning technologies for more accurate identification and categorization of BE-related lesions, thereby enhancing the precision and timeliness of BE diagnosis [49,50]. In the following sections, we will discuss the specific content of seven research studies (Table 2).

Table 2.

Seven studies on deep learning-assisted pathological diagnosis of BE.

Pathology represents the gold standard for diagnosis, potentially offering more comprehensive and microscopic histological information in theory than endoscopy. As the precursor of EAC, BE presents a unique opportunity to understand the factors driving the transition from pre-cancerous conditions to cancer [3,58]. Cell detection is usually the first crucial step in the automated image analysis of pathological slides [59,60]. Law, J., et al. developed a model based on SE2-U-Net, using pathological images from multiple public datasets to classify cells and non-cells in pathological sections [53].

Subsequently, the task of pathological diagnosis and classification comes into play. Tomita, N., et al. built a deep learning network model based on an attention-driven CNN. They used 123 retrospective case images from a single center for training, validation, and prediction, accomplishing a four-category task: normal vs. NDBE vs. dysplastic BE vs. EAC. The final model performance indicators were: AUC for normal: 0.751; NDBE: 0.897; dysplastic BE: 0.817; and EAC: 0.795 [51]. This model can accurately identify cancerous and pre-cancerous esophageal tissues on microscopic images without training annotations for areas of interest, significantly reducing the workload in pathological image analysis. Sali, R., et al. used 387 pathological images to construct a model based on the ResNet architecture to accomplish a three-category task: normal vs. NDBE vs. dysplastic BE. During the experiments, they compared the impact of full supervision, weak supervision, and unsupervised learning on model performance. The results indicated that the appropriate setting of unsupervised feature representation methods could extract more relevant image features from whole-slide images [52]. Guleria, S., et al. conducted a modeling study where they compared pCLE with pathological images. Their findings demonstrated that a comprehensive three-category classification task, distinguishing between normal, NDBE, and dysplasia/cancer, can be successfully achieved using multiple retrospective datasets [57].

Moreover, the risk of BE transforming into EAC increases with the progression of atypical hyperplasia. However, the histological diagnosis of atypical hyperplasia, particularly low-grade atypical hyperplasia, presents challenges, leading to a lack of inter-observer consensus among pathologists [61]. Therefore, Codipilly, D.C., et al. used 587 patient pathological slices to build a model based on the ResNet architecture, accomplishing a three-category task: NDBE vs. low-grade dysplasia vs. high-grade dysplasia [54]. In the same task, Faghani, S., et al. constructed a two-stage model, with the first stage identifying and segmenting using YOLO [56]. The output of the first stage was then used as input for the second stage, which, in conjunction with the ResNet architecture, completed the three-category task [56].

Mass spectrometry imaging (MSI) can obtain spatially resolved molecular spectra from tissue slices without labeling. Beuque, M., et al. combined MSI with standard pathological slice data to construct a model with three modules that can complete tasks: Task 1: epithelial vs. stroma; Task 2: dysplastic grade; and Task 3: progression of dysplasia. The inclusion of more information may contribute to a more precise classification [55].

The application of deep learning to aid pathological diagnosis has been extensively studied. Research focuses on utilizing complex algorithms and big data to analyze pathological tissue images for more accurate and timely identification and classification of lesions. Various studies have made progress, including cell vs. non-cell classification, multi-category tasks for BE, and more precise diagnoses integrating mass spectrometry imaging. These research findings provide robust support for improving the accuracy and efficiency of pathological diagnosis.

4. Applications of Deep Learning to Assist Other Diagnostic Methods

In addition to traditional endoscopy and conventional pathological sampling, some researchers have developed deep neural network models for other diagnostic methods (Table 3). Volumetric laser endomicroscopy (VLE) is a novel imaging technique. During the examination, a balloon is inflated in the esophagus, and second-generation optical coherence tomography (OCT) is used to capture a full circumferential scan of the esophageal wall (approximately 6 cm) in about 90 s, reaching a depth of up to 3 mm [62]. This method can acquire information from deep tissues, but interpreting the grayscale images is challenging for human experts. To address this, Fonollà, et al. used VLE image data to distinguish between NDBE and high-grade dysplasia (HGD) using the deep learning model VGG-16, achieving a maximum AUC value of 0.96 [63]. Van der Putten, J., et al., utilizing prospective single-center data and based on the FusionNet/DenseNet architecture, completed a binary classification task of NDBE vs. HGD, achieving an AUC of 0.93 [64]. Additionally, based on OCT information, Z. Yang and others proposed a bilateral connectivity-based neural network for in vivo human esophageal OCT layer segmentation. This marked the development of the first end-to-end learning method specifically designed for automatic epithelial cell segmentation in vivo in human esophageal OCT images [65].

Table 3.

Five studies of deep learning assisting other diagnoses of BE.

An exciting study was conducted by Gehrung, M., et al., in which an endoscopic brush-like sponge was used to collect epithelial cells. H&E staining and immunohistochemical staining of trefoil factor 3 were performed to obtain the image information input for the model. The model, built based on the VGG-16 architecture, was used to distinguish between BE and others, with an AUC reaching up to 0.88 [66]. Other researchers discovered that when light passes through tissue, it is absorbed by endogenous chromophores such as hemoglobin and scattered by endogenous structures such as organelles and nuclei. Biochemical and structural changes associated with diseases in the epithelial layer alter the distribution and abundance of absorbers and scatterers, leading to subtle changes in the spectral characteristics of the light exiting the tissue. Spectral imaging techniques can capture this rich endogenous contrast to reveal potential pathological changes [68,69]. Waterhouse, D.J., et al. modeled the spectral signals and completed the NDBE vs. EAC classification task with prospective data. The first clinical human trial demonstrated the potential of spectral endoscopy to reveal disease-related vascular changes and provide a highly contrasting depiction of esophageal tumor formation [67].

Compared to human experts, artificial intelligence has a more remarkable ability to capture patterns in large datasets, especially the potential information between data that is hard to discern with the naked eye. Therefore, one possible future research direction is to focus on collecting more information through various means and using it to assist in diagnosis.

5. Public Databases and Model Evaluation Metrics

In the literature we reviewed, the commonly used public datasets mainly include the following: Firstly, the HyperKvasir dataset was collected at Bærum Hospital in Norway and is derived from gastroscopy and colonoscopy examinations. Part of the data has been annotated by experienced gastroendoscopy physicians. This dataset covers 110,079 images and 374 videos, showcasing anatomical landmarks, various pathological conditions, and normal findings. Secondly, GastroNet is composed of 494,364 endoscopic images from 15,286 patients, covering organs including the colon, stomach, duodenum, and esophagus. Thirdly, the ImageNet database encompasses over 14 million manually annotated high-resolution images, covering more than 22,000 categories of objects and entities. Fourthly, the MICCAI 2015 dataset includes 100 lower esophageal endoscopic images captured from 39 individuals. Out of them, 22 were diagnosed with Barrett’s esophagus, and 17 showed early signs of esophageal adenocarcinoma. Fifthly, the Augsburg dataset consists of 76 endoscopic images from patients with BE (42 samples) and early adenocarcinoma (34 samples). These databases have significant application value in the fields of medical imaging and computer vision research. Specifically, HyperKvasir, GastroNet, and ImageNet are frequently used as sources for pre-training models, while MICCAI 2015 and Augsburg are often utilized in formal model training and external validation.

In the model performance evaluations mentioned above, for classification tasks, accuracy, sensitivity, specificity, F1 score, and area under the receiver operating characteristic curve are commonly employed as evaluation metrics. For segmentation tasks, aside from accuracy, some researchers also use metrics such as intersection over union and dice coefficient to assess segmentation performance.
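The classification metrics listed above all derive from the confusion matrix, and the area under the ROC curve can be computed from ranked scores. The sketch below assumes binary labels coded as 1 (positive) and 0 (negative); the function names `binary_metrics` and `roc_auc` are hypothetical, and the AUC is computed through its pairwise-ranking interpretation rather than by integrating a curve.

```python
def safe_div(num, den):
    """Divide, returning NaN when the denominator is zero."""
    return num / den if den else float("nan")


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity, and F1 from 0/1 labels and predictions."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:  # true label 1, predicted 0
            fn += 1
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "sensitivity": safe_div(tp, tp + fn),   # true positive rate (recall)
        "specificity": safe_div(tn, tn + fp),   # true negative rate
        "f1": safe_div(2 * tp, 2 * tp + fp + fn),
    }


def roc_auc(y_true, scores):
    """AUC as the probability that a positive case outranks a negative case.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return safe_div(wins, len(pos) * len(neg))
```

Accuracy depends on the proportion of positive cases in the test set, whereas sensitivity and specificity do not. The studies summarized here use datasets with very different class balances, so comparisons across studies should rely on sensitivity, specificity, or AUC rather than accuracy alone.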

6. Discussion

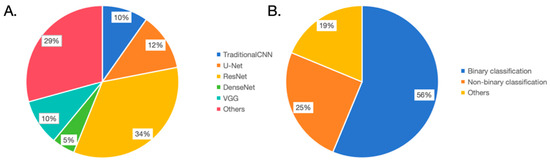

In this review, we conducted a literature search across multiple databases, culminating in the inclusion of 33 primary studies. The objective was to summarize the current role of deep learning in aiding the diagnosis of BE. The data used for modeling fall into three categories. First, endoscopic data, including images and videos, are used for prediction modeling. Second, pathological images (e.g., H&E staining or IHC) are modeled for prediction. Third, other auxiliary diagnostic information (such as OCT) is utilized for predictive modeling. Regarding the types of diagnostic tasks, there are two primary categories: classification tasks, which encompass binary and ternary classification tasks distinguishing BE from normal tissues or tumorous tissues, and segmentation tasks, which focus on the segmentation of epithelial tissues or individual cells (Figure 2B). In some of the aforementioned studies, researchers separated segmentation and classification into two distinct phases. First, the abnormal esophageal region is segmented; the segmented region is then classified. This phased approach breaks a complex task into multiple stages, each handled by a dedicated model. The model at each stage produces an intermediate result that is passed to the model of the next stage, until the entire task is complete [70,71,72].
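The phased approach can be sketched as a chain of functions in which each stage consumes the output of the previous one. Everything in the example below is a hypothetical stand-in: a simple intensity threshold plays the role of the segmentation network, a mean-intensity rule plays the role of the classification network, and the labels carry no clinical meaning. Only the data flow between the stages is the point.

```python
def segment(image, threshold=0.5):
    """Stage 1 (stand-in for a segmentation model): mark pixels above a threshold."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def extract_region(image, mask):
    """Keep only the pixel values that fall inside the segmented region."""
    return [px for row, mask_row in zip(image, mask)
            for px, m in zip(row, mask_row) if m]


def classify(region_pixels, cutoff=0.8):
    """Stage 2 (stand-in for a classification model): label the segmented region."""
    if not region_pixels:
        return "no_region"
    mean_value = sum(region_pixels) / len(region_pixels)
    return "high_intensity" if mean_value >= cutoff else "low_intensity"


def run_pipeline(image):
    """Run both stages in sequence and return the intermediate and final results."""
    mask = segment(image)                   # intermediate result of stage 1
    region = extract_region(image, mask)    # input to stage 2
    return {"mask": mask, "label": classify(region)}


result = run_pipeline([[0.1, 0.9],
                       [0.95, 0.2]])
```

Because the second stage only sees what the first stage passes on, an error in segmentation propagates to classification. Reporting the intermediate result alongside the final label, as `run_pipeline` does, makes such errors easier to trace.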

Figure 2.

Overview of the model structure and task types. (A). In terms of model structure usage frequency, U-Net: 34%, VGG: 29%, Traditional CNN: 12%, ResNet: 10%, DenseNet: 5%, and others: 10%. (B). In task types, 56% of the studies focus on binary classification, 25% on non-binary classification, and 19% on other task types.

Most of these 33 studies reported strong model performance, although a few reported modest results, such as a maximum accuracy of 67% [19] and a segmentation score of 47.5% [17]. On balance, we believe that deep learning holds promising potential for augmenting the diagnosis of BE.

From an architectural perspective, researchers chose different foundational model architectures for various tasks or data types and made adaptive improvements (Figure 2A). For classification tasks, image data was predominantly processed using CNN architectures such as VGG, AlexNet, and ResNet. AlexNet, designed in 2012, was pivotal in the ImageNet image recognition challenge, establishing the efficacy of deep convolutional neural networks in large-scale image classification tasks [73]. In this review, studies by de Souza LA Jr. and Kumar A. C. employed this architecture [33,35]. VGG, proposed by the Visual Geometry Group at the University of Oxford in 2014, secured the second position in the ImageNet classification challenge. Despite not winning, VGG showcased the potential of deep convolutional networks, particularly through increased depth [74]. This review includes five studies involving this architecture [22,33,36,63,66]. ResNet, designed by Microsoft Research in 2015, became widely adopted due to its residual connections, which mitigate the vanishing gradient problem in deep network training [75]. This review includes 14 studies utilizing ResNet, revealing a preference for its stable performance in classification tasks [18,20,21,23,26,27,29,31,33,35,52,54,55,56]. DenseNet, introduced in 2017, uses dense connections to enhance feature reuse and gradient flow [76]. In this review, research by van der Putten J. and Kumar A. C. tried this architecture [35,64]. For segmentation tasks, most studies adopted the U-Net architecture with modifications. U-Net, proposed in 2015 by researchers at the University of Freiburg, was specifically designed for biomedical image segmentation [77]. This review found four studies using U-Net [26,27,29,53]. We argue that modeling should not be confined to one architecture but should explore diverse methods and select the most effective model, as exemplified by Kumar A. C. and colleagues [35].

The introduction of deep learning in medical diagnosis has brought substantial changes to the field. However, its intended role must be clear: it is a supplementary tool that complements and enhances doctors’ expertise, not a replacement for them. Human intuition, experience, and years of training cannot simply be replaced by machines. Instead, deep learning should be viewed as a tool that provides more accurate and faster data analysis to assist doctors in making better decisions. In our review, we noted that some researchers have compared deep learning models with human experts. The results show that, on certain metrics, the performance of deep learning models is comparable to expert diagnosis (Table 4). This has mainly been verified in scenarios where tasks are clearly defined and the data set quality is high, and it further supports the potential value of deep learning in the medical field. For instance, for some complex image analysis tasks, models can quickly identify potential abnormal areas, helping doctors narrow the scope of examination.

Table 4.

Human-machine performance comparison.

Although deep learning has made notable strides in diagnosing Barrett’s esophagus, we must recognize its inherent challenges and shortcomings. In the following paragraphs, we examine these shortcomings and explore potential countermeasures for more reliable diagnostic methods. First, model performance can be hampered by data limitations and inherent model characteristics. Most researchers utilized retrospective single-center data, which may lead to overfitting and compromise generalization. To address this, we recommend prospective, multi-center data for training and validation to ensure data quality and diversity. However, such data collaboration brings its own challenges. One is data privacy and security. Medical data often contains sensitive information about patients, and when data is shared across centers, it is essential to ensure that it is not misused or leaked. Researchers can consider techniques such as data de-identification and anonymization to protect privacy and security during data sharing. Another is data heterogeneity. Different centers may adopt different standards for data collection, storage, and processing, and the resulting heterogeneity may affect model performance. To resolve this issue, normalization and standardization should be performed before the data is integrated, so that the data is consistent. A third is the set of legal and ethical concerns related to data sharing. Researchers must obtain appropriate ethical review and patient consent before sharing data and must comply with the relevant legal requirements.
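The normalization step recommended for multi-center data can take many forms. As one illustrative possibility, the sketch below standardizes a numeric feature (for example, mean image brightness) separately within each center, so that every center ends up with zero mean and unit variance. The function name `standardize_per_center` and the record layout are hypothetical, and per-center z-scoring is an assumed choice rather than a method reported by the reviewed studies.

```python
import math


def standardize_per_center(records):
    """Z-score a feature within each center.

    records: list of (center_id, value) pairs.
    Returns a list of (center_id, standardized_value) in the same order.
    """
    # Group the raw values by center.
    by_center = {}
    for center, value in records:
        by_center.setdefault(center, []).append(value)

    # Compute the mean and (population) standard deviation per center.
    stats = {}
    for center, values in by_center.items():
        mean = sum(values) / len(values)
        variance = sum((v - mean) ** 2 for v in values) / len(values)
        stats[center] = (mean, math.sqrt(variance))

    standardized = []
    for center, value in records:
        mean, std = stats[center]
        # A center with no spread carries no scale information; map it to 0.
        standardized.append((center, (value - mean) / std if std > 0 else 0.0))
    return standardized


records = [("A", 10.0), ("A", 20.0), ("B", 100.0), ("B", 300.0)]
scaled = standardize_per_center(records)
```

Per-center standardization removes systematic offsets between centers, but it would also remove genuine differences in patient populations, so whether it is appropriate depends on the data and should be checked against a held-out center.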

Secondly, multidimensional data modeling remains underused in this area. Multidimensional information modeling has demonstrated potential for enhancing the predictive performance of clinical models across various fields. This approach integrates multiple data features and sources of information, aiming to construct a more comprehensive and accurate model [78,79,80]. For classification, it can take into account factors such as a patient’s medical history or the results of multiple related examinations. Successful applications have been reported in areas such as lung cancer and breast cancer [81,82,83]. We believe this will be a promising research direction.
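One simple way to picture multidimensional modeling is feature-level fusion, in which image-derived features and clinical variables are concatenated and passed to a single classifier. The sketch below uses a logistic model for this purpose. It is only an assumption-laden illustration: the function `fuse_and_score`, the feature choices, and the weights are all hypothetical, and none of them come from the studies cited here.

```python
import math


def fuse_and_score(image_features, clinical_features, weights, bias):
    """Concatenate two feature groups and return a logistic probability."""
    fused = list(image_features) + list(clinical_features)
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    logit = bias + sum(w * x for w, x in zip(weights, fused))
    return 1.0 / (1.0 + math.exp(-logit))


# Hypothetical inputs: one CNN-derived score plus two clinical variables
image_features = [0.8]            # e.g. a model's lesion score in [0, 1]
clinical_low = [0.0, 0.0]         # e.g. no relevant history, normal prior exam
clinical_high = [1.0, 1.0]        # e.g. relevant history, abnormal prior exam
weights = [2.0, 0.7, 0.5]         # illustrative values, not fitted to data
bias = -1.5

risk_low = fuse_and_score(image_features, clinical_low, weights, bias)
risk_high = fuse_and_score(image_features, clinical_high, weights, bias)
```

With positive weights on the clinical variables, the same image score yields a higher predicted probability when the clinical context is more suspicious, which is the behavior a fused model is meant to capture.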

Thirdly, the quality of the endoscopic system influences the final image quality, which in turn affects the accuracy of deep learning models. High-resolution sensors capture more detail and thereby provide richer information for deep learning models, while sensors with a wide dynamic range help obtain clear images under various lighting conditions. A high-quality illumination system allows both doctors and algorithms to see tissue detail clearly. Uniform, shadow-free illumination helps emphasize abnormal areas, making it easier for the model to detect lesions, whereas insufficient or uneven lighting might obscure crucial information or cause color distortion. Among the studies we reviewed, few provided detailed technical specifications of their endoscopic systems. This omission is a potential limitation, because different systems may produce images of varying quality and thus lead to differences in model performance. We therefore recommend that future studies explicitly report the technical specifications of the endoscopic system used. This would not only enhance the transparency of the research but also clarify the equipment conditions under which deep learning models perform best.

Furthermore, the interpretability of deep learning models remains a major challenge. Deep learning models are often regarded as “black boxes,” which constitutes a significant barrier to the clinical application of deep neural networks [84,85]. Despite their impressive performance in diagnosing Barrett’s esophagus, the internal mechanisms of these models are highly intricate, making it difficult to explain the basis for a model’s decisions. In medical diagnosis, interpretability is especially critical, as both medical practitioners and patients need to understand how the model arrives at its diagnostic conclusions. As a proactive effort in this direction, de Souza et al. evaluated five explanation techniques in their study: saliency, guided backpropagation, integrated gradients, input × gradient, and DeepLIFT [33]. Nonetheless, the problem remains far from solved.
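To make two of these explanation techniques concrete, the sketch below computes saliency (the absolute gradient of the output with respect to each input) and the input-times-gradient attribution (the gradient multiplied element-wise by the input) for a small logistic model, where the gradient has a closed form. This toy model and its weights are hypothetical stand-ins; the study cited above applied such methods to convolutional networks, which this example does not reproduce.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def attributions(x, w, b):
    """Return (probability, saliency, input-times-gradient) for a logistic model."""
    p = sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))
    # For p = sigmoid(w.x + b), the gradient is dp/dx_i = p * (1 - p) * w_i
    grad = [p * (1.0 - p) * wi for wi in w]
    saliency = [abs(g) for g in grad]
    input_x_grad = [xi * g for xi, g in zip(x, grad)]
    return p, saliency, input_x_grad


# Hypothetical three-feature input and weights
x = [1.0, 2.0, 0.0]
w = [0.5, -0.25, 3.0]
p, saliency, input_x_grad = attributions(x, w, 0.0)
```

Here the third feature has the largest saliency because its weight is largest, yet its input-times-gradient attribution is zero because the feature itself is zero for this input. The two methods can therefore rank the same features differently, which is one reason explanation outputs need careful interpretation.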

7. Conclusions

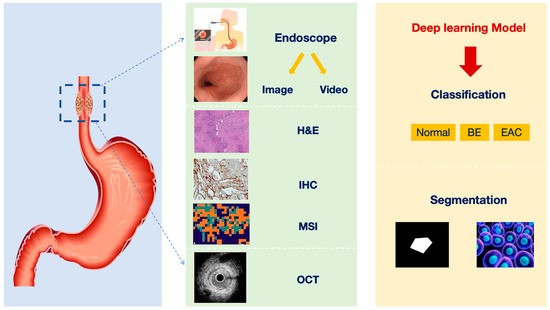

Deep learning has played a pivotal role and demonstrated considerable potential in diagnosing BE (Figure 3). Its applications range from basic image classification to more complex segmentation tasks and advanced lesion detection. Leveraging network architectures such as U-Net and ResNet, deep learning has achieved notable success in large-scale data processing, pattern recognition, and precise localization. Some studies have gone further, applying deep learning to spectral images or OCT images to uncover additional pathological changes, which opens new possibilities for the early diagnosis of BE. Despite these advances, challenges remain to be resolved, particularly those related to model interpretability and credibility. Nevertheless, with continued algorithm optimization and the application of new technologies, such as advanced model explanation techniques, there is reason to believe that deep learning will play an even more significant role in the diagnosis and study of BE. This should enable earlier and more accurate diagnoses, ultimately leading to better treatment options and quality of life for patients.

Figure 3. Overview of the current status of deep learning technology-assisted diagnosis of BE (H&E: hematoxylin-eosin; IHC: immunohistochemistry; MSI: mass spectrometry imaging; OCT: optical coherence tomography; BE: Barrett’s esophagus; EAC: esophageal adenocarcinoma).

Author Contributions

Conceptualization, D.T., L.C., R.C. and L.W. Methodology, Q.S. Formal analysis, Y.G. and H.Z. Investigation, L.L. and J.L. Data curation, S.L. and B.L. Writing—original draft preparation, R.C. and L.W. Writing—review and editing, D.T. and L.C. Visualization, R.L. and S.L. Supervision, D.T. and L.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the 1•3•5 project for disciplines of excellence—Clinical Research Incubation Project, West China Hospital, Sichuan University (2018HXFH020); Regional Innovation and Collaboration projects of the Sichuan Provincial Department of Science and Technology (2021YFQ0026); National Natural Science Foundation Regional Innovation and Development (U20A20394); and 2023 Clinical Research Fund of West China Hospital, Sichuan University (No. 27).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dent, J. Barrett’s Esophagus: A Historical Perspective, an Update on Core Practicalities and Predictions on Future Evolutions of Management. J. Gastroenterol. Hepatol. 2011, 26, 11–30.

- Lagergren, J.; Lagergren, P. Oesophageal Cancer. BMJ 2010, 341, c6280.

- Hvid-Jensen, F.; Pedersen, L.; Drewes, A.M.; Sørensen, H.T.; Funch-Jensen, P. Incidence of Adenocarcinoma among Patients with Barrett’s Esophagus. N. Engl. J. Med. 2011, 365, 1375–1383.

- Bhat, S.; Coleman, H.G.; Yousef, F.; Johnston, B.T.; McManus, D.T.; Gavin, A.T.; Murray, L.J. Risk of Malignant Progression in Barrett’s Esophagus Patients: Results from a Large Population-Based Study. J. Natl. Cancer Inst. 2011, 103, 1049–1057.

- Pohl, H.; Sirovich, B.; Welch, H.G. Esophageal Adenocarcinoma Incidence: Are We Reaching the Peak? Cancer Epidemiol. Biomark. Prev. 2010, 19, 1468–1470.

- Rice, T.W.; Ishwaran, H.; Hofstetter, W.L.; Kelsen, D.P.; Apperson-Hansen, C.; Blackstone, E.H.; Worldwide Esophageal Cancer Collaboration Investigators. Recommendations for Pathologic Staging (pTNM) of Cancer of the Esophagus and Esophagogastric Junction for the 8th Edition AJCC/UICC Staging Manuals. Dis. Esophagus 2016, 29, 897–905.

- Ishihara, R.; Takeuchi, Y.; Chatani, R.; Kidu, T.; Inoue, T.; Hanaoka, N.; Yamamoto, S.; Higashino, K.; Uedo, N.; Iishi, H.; et al. Prospective Evaluation of Narrow-Band Imaging Endoscopy for Screening of Esophageal Squamous Mucosal High-Grade Neoplasia in Experienced and Less Experienced Endoscopists. Dis. Esophagus 2010, 23, 480–486.

- di Pietro, M.; Canto, M.I.; Fitzgerald, R.C. Endoscopic Management of Early Adenocarcinoma and Squamous Cell Carcinoma of the Esophagus: Screening, Diagnosis, and Therapy. Gastroenterology 2018, 154, 421–436.

- Abrams, J.A.; Kapel, R.C.; Lindberg, G.M.; Saboorian, M.H.; Genta, R.M.; Neugut, A.I.; Lightdale, C.J. Adherence to Biopsy Guidelines for Barrett’s Esophagus Surveillance in the Community Setting in the United States. Clin. Gastroenterol. Hepatol. 2009, 7, 736–742, quiz 710.

- Sharma, P.; Brill, J.; Canto, M.; DeMarco, D.; Fennerty, B.; Gupta, N.; Laine, L.; Lieberman, D.; Lightdale, C.; Montgomery, E.; et al. White Paper AGA: Advanced Imaging in Barrett’s Esophagus. Clin. Gastroenterol. Hepatol. 2015, 13, 2209–2218.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Hop, P.; Allgood, B.; Yu, J. Geometric Deep Learning Autonomously Learns Chemical Features That Outperform Those Engineered by Domain Experts. Mol. Pharm. 2018, 15, 4371–4377.

- Chan, H.-P.; Samala, R.K.; Hadjiiski, L.M.; Zhou, C. Deep Learning in Medical Image Analysis. Adv. Exp. Med. Biol. 2020, 1213, 3–21.

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A Guide to Deep Learning in Healthcare. Nat. Med. 2019, 25, 24–29.

- Hong, J.; Park, B.-Y.; Park, H. Convolutional Neural Network Classifier for Distinguishing Barrett’s Esophagus and Neoplasia Endomicroscopy Images. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; Volume 2017, pp. 2892–2895.

- de Groof, J.; van der Putten, J.; van der Sommen, F.; Zinger, S.; Curvers, W.; Bisschops, R.; Pech, O.; Meining, A.; Neuhaus, H.; Schoon, E.J.; et al. The ARGOS Project: Evaluation of Results of a Clinically-Inspired Algorithm vs. a Deep Learning Algorithm for the Detection and Delineation of Barrett’s Neoplasia. Gastroenterology 2018, 154, S-1368.

- Ebigbo, A.; Mendel, R.; Probst, A.; Manzeneder, J.; De Souza, L.A.; Papa, J.P.; Palm, C.; Messmann, H. Computer-Aided Diagnosis Using Deep Learning in the Evaluation of Early Oesophageal Adenocarcinoma. Gut 2019, 68, 1143–1145.

- Passos, L.A.; de Souza, L.A.; Mendel, R.; Ebigbo, A.; Probst, A.; Messmann, H.; Palm, C.; Papa, J.P. Barrett’s Esophagus Analysis Using Infinity Restricted Boltzmann Machines. J. Vis. Commun. Image Represent. 2019, 59, 475–485.

- van der Putten, J.; de Groof, J.; van der Sommen, F.; Struyvenberg, M.; Zinger, S.; Curvers, W.; Schoon, E.; Bergman, J.; de With, P.H.N. Informative Frame Classification of Endoscopic Videos Using Convolutional Neural Networks and Hidden Markov Models. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: New York, NY, USA, 2019; pp. 380–384.

- Putten, J.V.D.; Bergman, J.; De With, P.H.N.; Wildeboer, R.; Groof, J.D.; Sloun, R.V.; Struyvenberg, M.; Sommen, F.V.D.; Zinger, S.; Curvers, W.; et al. Deep Learning Biopsy Marking of Early Neoplasia in Barrett’s Esophagus by Combining WLE and BLI Modalities. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1127–1131.

- Ghatwary, N.; Zolgharni, M.; Ye, X. Early Esophageal Adenocarcinoma Detection Using Deep Learning Methods. Int. J. CARS 2019, 14, 611–621.

- Van Der Putten, J.; Struyvenberg, M.; De Groof, J.; Curvers, W.; Schoon, E.; Baldaque-Silva, F.; Bergman, J.; Van Der Sommen, F.; De With, P.H.N. Endoscopy-Driven Pretraining for Classification of Dysplasia in Barrett’s Esophagus with Endoscopic Narrow-Band Imaging Zoom Videos. Appl. Sci. 2020, 10, 3407.

- De Souza, L.A.; Passos, L.A.; Mendel, R.; Ebigbo, A.; Probst, A.; Messmann, H.; Palm, C.; Papa, J.P. Assisting Barrett’s Esophagus Identification Using Endoscopic Data Augmentation Based on Generative Adversarial Networks. Comput. Biol. Med. 2020, 126, 104029.

- Liu, G.; Hua, J.; Wu, Z.; Meng, T.; Sun, M.; Huang, P.; He, X.; Sun, W.; Li, X.; Chen, Y. Automatic Classification of Esophageal Lesions in Endoscopic Images Using a Convolutional Neural Network. Ann. Transl. Med. 2020, 8, 486.

- De Groof, A.J.; Struyvenberg, M.R.; Fockens, K.N.; Van Der Putten, J.; Van Der Sommen, F.; Boers, T.G.; Zinger, S.; Bisschops, R.; De With, P.H.; Pouw, R.E.; et al. Deep Learning Algorithm Detection of Barrett’s Neoplasia with High Accuracy during Live Endoscopic Procedures: A Pilot Study (with Video). Gastrointest. Endosc. 2020, 91, 1242–1250.

- Van Der Putten, J.; De Groof, J.; Struyvenberg, M.; Boers, T.; Fockens, K.; Curvers, W.; Schoon, E.; Bergman, J.; Van Der Sommen, F.; De With, P.H.N. Multi-Stage Domain-Specific Pretraining for Improved Detection and Localization of Barrett’s Neoplasia: A Comprehensive Clinically Validated Study. Artif. Intell. Med. 2020, 107, 101914.

- Pulido, J.V.; Guleria, S.; Ehsan, L.; Shah, T.; Syed, S.; Brown, D.E. Screening for Barrett’s Esophagus with Probe-Based Confocal Laser Endomicroscopy Videos. In Proceedings of the 2020 IEEE 17th International Symposium on Biomedical Imaging (ISBI), Iowa City, IA, USA, 3–7 April 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1659–1663.

- Struyvenberg, M.R.; De Groof, A.J.; Van Der Putten, J.; Van Der Sommen, F.; Baldaque-Silva, F.; Omae, M.; Pouw, R.; Bisschops, R.; Vieth, M.; Schoon, E.J.; et al. A Computer-Assisted Algorithm for Narrow-Band Imaging-Based Tissue Characterization in Barrett’s Esophagus. Gastrointest. Endosc. 2021, 93, 89–98.

- Pan, W.; Li, X.; Wang, W.; Zhou, L.; Wu, J.; Ren, T.; Liu, C.; Lv, M.; Su, S.; Tang, Y. Identification of Barrett’s Esophagus in Endoscopic Images Using Deep Learning. BMC Gastroenterol. 2021, 21, 479.

- Hou, W.; Wang, L.; Cai, S.; Lin, Z.; Yu, R.; Qin, J. Early Neoplasia Identification in Barrett’s Esophagus via Attentive Hierarchical Aggregation and Self-Distillation. Med. Image Anal. 2021, 72, 102092.

- Ali, S.; Bailey, A.; Ash, S.; Haghighat, M.; Leedham, S.J.; Lu, X.; East, J.E.; Rittscher, J.; Braden, B.; Allan, P.; et al. A Pilot Study on Automatic Three-Dimensional Quantification of Barrett’s Esophagus for Risk Stratification and Therapy Monitoring. Gastroenterology 2021, 161, 865–878.e8.

- De Souza, L.A.; Mendel, R.; Strasser, S.; Ebigbo, A.; Probst, A.; Messmann, H.; Papa, J.P.; Palm, C. Convolutional Neural Networks for the Evaluation of Cancer in Barrett’s Esophagus: Explainable AI to Lighten up the Black-Box. Comput. Biol. Med. 2021, 135, 104578.

- Kusters, C.H.J.; Boers, T.G.W.; Jukema, J.B.; Jong, M.R.; Fockens, K.N.; De Groof, A.J.; Bergman, J.J.; Der Sommen, F.V.; De With, P.H.N. A CAD System for Real-Time Characterization of Neoplasia in Barrett’s Esophagus NBI Videos. In Cancer Prevention Through Early Detection; Lecture Notes in Computer Science; Ali, S., Van Der Sommen, F., Papież, B.W., Van Eijnatten, M., Jin, Y., Kolenbrander, I., Eds.; Springer Nature: Cham, Switzerland, 2022; Volume 13581, pp. 89–98. ISBN 978-3-031-17978-5.

- Kumar, A.C.; Mubarak, D.M.N. Classification of Early Stages of Esophageal Cancer Using Transfer Learning. IRBM 2022, 43, 251–258.

- Villagrana-Bañuelos, K.E.; Alcalá-Rmz, V.; Celaya-Padilla, J.M.; Galván-Tejada, J.I.; Gamboa-Rosales, H.; Galván-Tejada, C.E. Towards Esophagitis and Barret’s Esophagus Endoscopic Images Classification: An Approach with Deep Learning Techniques. In Proceedings of the International Conference on Ubiquitous Computing & Ambient Intelligence (UCAmI 2022); Lecture Notes in Networks and Systems; Bravo, J., Ochoa, S., Favela, J., Eds.; Springer International Publishing: Cham, Switzerland, 2023; Volume 594, pp. 169–180. ISBN 978-3-031-21332-8.

- Herrero, L.A.; Curvers, W.L.; Bansal, A.; Wani, S.; Kara, M.; Schenk, E.; Schoon, E.J.; Lynch, C.R.; Rastogi, A.; Pondugula, K.; et al. Zooming in on Barrett Oesophagus Using Narrow-Band Imaging: An International Observer Agreement Study. Eur. J. Gastroenterol. Hepatol. 2009, 21, 1068.

- Baldaque-Silva, F.; Marques, M.; Lunet, N.; Themudo, G.; Goda, K.; Toth, E.; Soares, J.; Bastos, P.; Ramalho, R.; Pereira, P.; et al. Endoscopic Assessment and Grading of Barrett’s Esophagus Using Magnification Endoscopy and Narrow Band Imaging: Impact of Structured Learning and Experience on the Accuracy of the Amsterdam Classification System. Scand. J. Gastroenterol. 2013, 48, 160–167.

- Nogales, O.; Caballero-Marcos, A.; Clemente-Sánchez, A.; García-Lledó, J.; Pérez-Carazo, L.; Merino, B.; Carbonell, C.; López-Ibáñez, M.; González-Asanza, C. Usefulness of Non-Magnifying Narrow Band Imaging in EVIS EXERA III Video Systems and High-Definition Endoscopes to Diagnose Dysplasia in Barrett’s Esophagus Using the Barrett International NBI Group (BING) Classification. Dig. Dis. Sci. 2017, 62, 2840–2846.

- Kara, M.A.; Ennahachi, M.; Fockens, P.; ten Kate, F.J.W.; Bergman, J.J.G.H.M. Detection and Classification of the Mucosal and Vascular Patterns (Mucosal Morphology) in Barrett’s Esophagus by Using Narrow Band Imaging. Gastrointest. Endosc. 2006, 64, 155–166.

- Shaheen, N.J.; Falk, G.W.; Iyer, P.G.; Gerson, L.B. ACG Clinical Guideline: Diagnosis and Management of Barrett’s Esophagus. Off. J. Am. Coll. Gastroenterol. ACG 2016, 111, 30.

- Belghazi, K.; Bergman, J.; Pouw, R.E. Endoscopic Resection and Radiofrequency Ablation for Early Esophageal Neoplasia. Dig. Dis. 2016, 34, 469–475.

- Bera, K.; Schalper, K.A.; Rimm, D.L.; Velcheti, V.; Madabhushi, A. Artificial Intelligence in Digital Pathology—New Tools for Diagnosis and Precision Oncology. Nat. Rev. Clin. Oncol. 2019, 16, 703–715.

- Reis-Filho, J.S.; Kather, J.N. Overcoming the Challenges to Implementation of Artificial Intelligence in Pathology. J. Natl. Cancer Inst. 2023, 115, 608–612.

- Zuraw, A.; Aeffner, F. Whole-Slide Imaging, Tissue Image Analysis, and Artificial Intelligence in Veterinary Pathology: An Updated Introduction and Review. Vet. Pathol. 2022, 59, 6–25.

- Niazi, M.K.K.; Parwani, A.V.; Gurcan, M.N. Digital Pathology and Artificial Intelligence. Lancet Oncol. 2019, 20, e253–e261.

- Kim, I.; Kang, K.; Song, Y.; Kim, T.-J. Application of Artificial Intelligence in Pathology: Trends and Challenges. Diagnostics 2022, 12, 2794.

- Jiang, Y.; Yang, M.; Wang, S.; Li, X.; Sun, Y. Emerging Role of Deep Learning-Based Artificial Intelligence in Tumor Pathology. Cancer Commun. 2020, 40, 154–166.

- Baxi, V.; Edwards, R.; Montalto, M.; Saha, S. Digital Pathology and Artificial Intelligence in Translational Medicine and Clinical Practice. Mod. Pathol. 2022, 35, 23–32.

- Cheng, J.Y.; Abel, J.T.; Balis, U.G.J.; McClintock, D.S.; Pantanowitz, L. Challenges in the Development, Deployment, and Regulation of Artificial Intelligence in Anatomic Pathology. Am. J. Pathol. 2021, 191, 1684–1692.

- Tomita, N.; Abdollahi, B.; Wei, J.; Ren, B.; Suriawinata, A.; Hassanpour, S. Attention-Based Deep Neural Networks for Detection of Cancerous and Precancerous Esophagus Tissue on Histopathological Slides. JAMA Netw. Open 2019, 2, e1914645.

- Sali, R.; Moradinasab, N.; Guleria, S.; Ehsan, L.; Fernandes, P.; Shah, T.U.; Syed, S.; Brown, D.E. Deep Learning for Whole-Slide Tissue Histopathology Classification: A Comparative Study in the Identification of Dysplastic and Non-Dysplastic Barrett’s Esophagus. JPM 2020, 10, 141.

- Law, J.; Paulson, T.G.; Sanchez, C.A.; Galipeau, P.C.; Jansen, M.; Stachler, M.D.; Maley, C.C.; Yuan, Y. Wisdom of the Crowd for Early Detection in Barrett’s Esophagus. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 531–535.

- Codipilly, D.C.; Vogelsang, D.; Agarwal, S.; Dhaliwal, L.; Johnson, M.L.; Lansing, R.; Hagen, C.E.; Lewis, J.T.; Leggett, C.L.; Katzka, D.A.; et al. ID: 3524488 Utilization of a Deep Learning Artificial Intelligence Model in the Histologic Diagnosis of Dysplasia in Barrett’s Esophagus. Gastrointest. Endosc. 2021, 93, AB288–AB289.

- Beuque, M.; Martin-Lorenzo, M.; Balluff, B.; Woodruff, H.C.; Lucas, M.; De Bruin, D.M.; Van Timmeren, J.E.; de Boer, O.J.; Heeren, R.M.; Meijer, S.L.; et al. Machine Learning for Grading and Prognosis of Esophageal Dysplasia Using Mass Spectrometry and Histological Imaging. Comput. Biol. Med. 2021, 138, 104918.

- Faghani, S.; Codipilly, D.C.; Vogelsang, D.; Moassefi, M.; Rouzrokh, P.; Khosravi, B.; Agarwal, S.; Dhaliwal, L.; Katzka, D.A.; Hagen, C.; et al. Development of a Deep Learning Model for the Histologic Diagnosis of Dysplasia in Barrett’s Esophagus. Gastrointest. Endosc. 2022, 96, 918–925.e3.

- Guleria, S.; Shah, T.U.; Pulido, J.V.; Fasullo, M.; Ehsan, L.; Lippman, R.; Sali, R.; Mutha, P.; Cheng, L.; Brown, D.E.; et al. Deep Learning Systems Detect Dysplasia with Human-like Accuracy Using Histopathology and Probe-Based Confocal Laser Endomicroscopy. Sci. Rep. 2021, 11, 5086.

- Singh, T.; Sanghi, V.; Thota, P.N. Current Management of Barrett Esophagus and Esophageal Adenocarcinoma. Clevel. Clin. J. Med. 2019, 86, 724–732.

- Chen, F.; Zhuang, X.; Lin, L.; Yu, P.; Wang, Y.; Shi, Y.; Hu, G.; Sun, Y. New Horizons in Tumor Microenvironment Biology: Challenges and Opportunities. BMC Med. 2015, 13, 45.

- AbdulJabbar, K.; Raza, S.E.A.; Rosenthal, R.; Jamal-Hanjani, M.; Veeriah, S.; Akarca, A.; Lund, T.; Moore, D.A.; Salgado, R.; Al Bakir, M.; et al. Geospatial Immune Variability Illuminates Differential Evolution of Lung Adenocarcinoma. Nat. Med. 2020, 26, 1054–1062.

- Odze, R.D. Diagnosis and Grading of Dysplasia in Barrett’s Oesophagus. J. Clin. Pathol. 2006, 59, 1029–1038.

- Gonzalo, N.; Tearney, G.J.; Serruys, P.W.; van Soest, G.; Okamura, T.; García-García, H.M.; Jan van Geuns, R.; van der Ent, M.; Ligthart, J.; Bouma, B.E.; et al. Second-Generation Optical Coherence Tomography in Clinical Practice. High-Speed Data Acquisition Is Highly Reproducible in Patients Undergoing Percutaneous Coronary Intervention. Rev. Española Cardiol. 2010, 63, 893–903.

- Fonolla, R.; Scheeve, T.; Struyvenberg, M.R.; Curvers, W.L.; de Groof, A.J.; van der Sommen, F.; Schoon, E.J.; Bergman, J.J.G.H.M.; de With, P.H.N. Ensemble of Deep Convolutional Neural Networks for Classification of Early Barrett’s Neoplasia Using Volumetric Laser Endomicroscopy. Appl. Sci. 2019, 9, 2183.

- Van Der Putten, J.; Struyvenberg, M.; De Groof, J.; Scheeve, T.; Curvers, W.; Schoon, E.; Bergman, J.J.G.H.M.; De With, P.H.N.; Van Der Sommen, F. Deep Principal Dimension Encoding for the Classification of Early Neoplasia in Barrett’s Esophagus with Volumetric Laser Endomicroscopy. Comput. Med. Imaging Graph. 2020, 80, 101701.

- Yang, Z.; Soltanian-Zadeh, S.; Chu, K.K.; Zhang, H.; Moussa, L.; Watts, A.E.; Shaheen, N.J.; Wax, A.; Farsiu, S. Connectivity-Based Deep Learning Approach for Segmentation of the Epithelium in in Vivo Human Esophageal OCT Images. Biomed. Opt. Express 2021, 12, 6326.

- Gehrung, M.; Crispin-Ortuzar, M.; Berman, A.G.; O’Donovan, M.; Fitzgerald, R.C.; Markowetz, F. Triage-Driven Diagnosis of Barrett’s Esophagus for Early Detection of Esophageal Adenocarcinoma Using Deep Learning. Nat. Med. 2021, 27, 833–841.

- Waterhouse, D.J.; Januszewicz, W.; Ali, S.; Fitzgerald, R.C.; Di Pietro, M.; Bohndiek, S.E. Spectral Endoscopy Enhances Contrast for Neoplasia in Surveillance of Barrett’s Esophagus. Cancer Res. 2021, 81, 3415–3425.

- Lu, G.; Fei, B. Medical Hyperspectral Imaging: A Review. J. Biomed. Opt. 2014, 19, 10901.

- Halicek, M.; Fabelo, H.; Ortega, S.; Callico, G.M.; Fei, B. In-Vivo and Ex-Vivo Tissue Analysis through Hyperspectral Imaging Techniques: Revealing the Invisible Features of Cancer. Cancers 2019, 11, 756.

- Barragán-Montero, A.; Javaid, U.; Valdés, G.; Nguyen, D.; Desbordes, P.; Macq, B.; Willems, S.; Vandewinckele, L.; Holmström, M.; Löfman, F.; et al. Artificial Intelligence and Machine Learning for Medical Imaging: A Technology Review. Phys. Med. 2021, 83, 242–256.

- Handelman, G.S.; Kok, H.K.; Chandra, R.V.; Razavi, A.H.; Lee, M.J.; Asadi, H. eDoctor: Machine Learning and the Future of Medicine. J. Intern. Med. 2018, 284, 603–619.

- Deo, R.C. Machine Learning in Medicine. Circulation 2015, 132, 1920–1930.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2012; Volume 25.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2261–2269.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015.

- Liu, Z.; Wang, S.; Dong, D.; Wei, J.; Fang, C.; Zhou, X.; Sun, K.; Li, L.; Li, B.; Wang, M.; et al. The Applications of Radiomics in Precision Diagnosis and Treatment of Oncology: Opportunities and Challenges. Theranostics 2019, 9, 1303–1322.

- Bi, W.L.; Hosny, A.; Schabath, M.B.; Giger, M.L.; Birkbak, N.J.; Mehrtash, A.; Allison, T.; Arnaout, O.; Abbosh, C.; Dunn, I.F.; et al. Artificial Intelligence in Cancer Imaging: Clinical Challenges and Applications. CA Cancer J. Clin. 2019, 69, 127–157.

- Wu, S.; Roberts, K.; Datta, S.; Du, J.; Ji, Z.; Si, Y.; Soni, S.; Wang, Q.; Wei, Q.; Xiang, Y.; et al. Deep Learning in Clinical Natural Language Processing: A Methodical Review. J. Am. Med. Inform. Assoc. 2020, 27, 457–470.

- Huang, S.; Yang, J.; Shen, N.; Xu, Q.; Zhao, Q. Artificial Intelligence in Lung Cancer Diagnosis and Prognosis: Current Application and Future Perspective. Semin. Cancer Biol. 2023, 89, 30–37.

- Radak, M.; Lafta, H.Y.; Fallahi, H. Machine Learning and Deep Learning Techniques for Breast Cancer Diagnosis and Classification: A Comprehensive Review of Medical Imaging Studies. J. Cancer Res. Clin. Oncol. 2023, 149, 10473–10491.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.W.; Karthikesalingam, A.; King, D.; Ashrafian, H.; Darzi, A. Diagnostic Accuracy of Deep Learning in Medical Imaging: A Systematic Review and Meta-Analysis. NPJ Digit. Med. 2021, 4, 65.

- van der Velden, B.H.M.; Kuijf, H.J.; Gilhuijs, K.G.A.; Viergever, M.A. Explainable Artificial Intelligence (XAI) in Deep Learning-Based Medical Image Analysis. Med. Image Anal. 2022, 79, 102470.

- Handelman, G.S.; Kok, H.K.; Chandra, R.V.; Razavi, A.H.; Huang, S.; Brooks, M.; Lee, M.J.; Asadi, H. Peering Into the Black Box of Artificial Intelligence: Evaluation Metrics of Machine Learning Methods. AJR Am. J. Roentgenol. 2019, 212, 38–43.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).