Abstract

Remote photoplethysmography (rPPG) is a promising contactless technology that uses videos of faces to extract health parameters, such as heart rate. Several methods for transforming red, green, and blue (RGB) video signals into rPPG signals have been introduced in the existing literature. The RGB signals represent variations in the reflected luminance from the skin surface of an individual over a given period of time. These methods attempt to find the best combination of color channels to reconstruct an rPPG signal. Usually, rPPG methods use a combination of preprocessed color channels to convert the three RGB signals into one rPPG signal that is most influenced by blood volume changes. This study examined simple yet effective methods to convert RGB to rPPG, relying only on RGB signals without applying complex mathematical models or machine learning algorithms. A new method, GRGB rPPG, was proposed that outperformed most machine-learning-based rPPG methods and was robust to indoor lighting and participant motion. Moreover, the proposed method estimated the heart rate better than well-established rPPG methods. This paper also discusses the results and provides recommendations for further research.

1. Introduction

Variations in blood volume or blood flow occur with every heartbeat [1,2]. Photoplethysmography (PPG) is the measurement of those changes that are related to the pulsating blood volume in the skin tissue [1,2]. Remote photoplethysmography (rPPG) is PPG without skin contact. The technology is very promising for large populations; rPPG signals can be captured with a simple red–green–blue (RGB) camera, which means that smartphones or laptops can be used to record them. For most people, smartphones and laptops with integrated cameras are widely available and much more accessible than pulse oximeters or cuff-based blood pressure monitors [3,4]. Several health parameters, such as heart rate (HR), heart rate variability (HRV), and blood pressure (BP), can be determined from a PPG signal [5,6].

As cardiovascular diseases (CVDs) are the leading cause of death worldwide [7], cardiovascular health parameters can help detect or assess CVDs at an early stage. Thus, large populations could benefit from regular assessment of cardiovascular health parameters if it could be performed with consumer-grade RGB cameras, which could then serve as measurement devices outside the clinical environment and become part of the patient’s routine life [8,9]. Moreover, in the context of coronavirus disease 2019, rPPG is very promising as it allows contactless measurement of cardiovascular health parameters. Furthermore, patients can perform the measurements from home without any additional risk of infection. To determine cardiovascular health parameters accurately, it is necessary to obtain a high-quality rPPG signal. For these reasons, researchers have recently investigated a variety of rPPG methods.

The reflected light from some regions of the skin of the human face, such as the forehead and cheeks, is affected by blood flow under the skin. These blood flow changes can be recorded with an RGB camera, followed by the transformation of an RGB signal into an rPPG signal. However, there is a disagreement in recent literature about how this is best done. Several approaches have been proposed and compared for different behavioral and environmental conditions. Several studies have compared different rPPG methods using various datasets for HR estimation [10,11,12]. However, it is often not possible to prove why one rPPG method is better than another; only the results can be compared. Researchers have tried approaches, such as different decomposition methods (e.g., principal component analysis (PCA) and independent component analysis (ICA)), transformations in other color models, and varying color channel normalization techniques. These methods perform differently on each applied dataset [10].

Note that the contact-based PPG signal is typically recorded with a pulse oximeter consisting of a light source and a photodetector. An advantage here is that the influence of environmental conditions is negligible due to direct skin contact; therefore, the heart rate is easily detected with high accuracy. In contrast, rPPG requires capturing video of the face and processing it to extract the blood flow information and map it to an rPPG signal. Recently, increasingly complex and advanced signal processing techniques have been developed to obtain high-quality rPPG signals. This adds complexity to the processing and increases the computational power required by camera-based applications. In the present study, we attempted to produce a new simple but efficient rPPG method based on raw RGB video signals without the need for complex statistical modeling and machine learning algorithms. We then compared the proposed method with well-established methods to examine its performance.

2. Methodology

2.1. Hypothesis

Based on the literature, the green color channel has been verified to have the highest similarity to a PPG signal in comparison to the red and blue color channels [13]. Furthermore, it is assumed that the light intensity fluctuations caused by skin movements influence all color channels equally. Therefore, this study hypothesized that the ratio of the green-to-red (GR) channel and the ratio of the green-to-blue (GB) channel, or the sum of these ratios (GR + GB), may improve the quality of the constructed rPPG signals.
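The reasoning behind this hypothesis can be made explicit with a simplified model. As an illustrative assumption (the symbols $I(t)$, $c_R(t)$, $c_G(t)$, and $c_B(t)$ are introduced here only for this sketch), suppose each channel is the product of a common, motion-induced intensity factor $I(t)$ and a channel-specific term that carries the pulse:

$$R(t) = I(t)\,c_R(t), \qquad G(t) = I(t)\,c_G(t), \qquad B(t) = I(t)\,c_B(t).$$

The two ratios then become

$$\frac{G(t)}{R(t)} = \frac{c_G(t)}{c_R(t)}, \qquad \frac{G(t)}{B(t)} = \frac{c_G(t)}{c_B(t)},$$

so the common factor $I(t)$ cancels in each ratio and therefore also in their sum. Under this idealized model, the ratios retain only the channel-specific variations; in real recordings the equal-influence assumption is likely to hold only approximately.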

2.2. Dataset

The LGI-PPGI dataset from Pilz et al. [14] was used for the evaluation. It includes videos of the participants’ faces together with the reference fingertip PPG signal. Videos from six participants are publicly accessible, each recorded in four distinct settings, as follows:

- Resting: indoor participant, sitting, head barely moving.

- Gym: on a bicycle ergometer, participant exercising indoors.

- Talk: urban setting with daylight and conversation.

- Rotation: the participant makes arbitrary head motions in an indoor setting.

Each participant had one recording of all four video settings. In each video, the participant’s face is at the center of the video. Of the six participants, five were men and one was a woman. The duration was more than 1 min and the resolution was for each video. The average sampling rate was 60 Hz for the pulse oximeter and 25 Hz for the RGB camera. Six windows per video were constructed by simply using the first minute of each video.

2.3. Pipeline and rPPG Methods

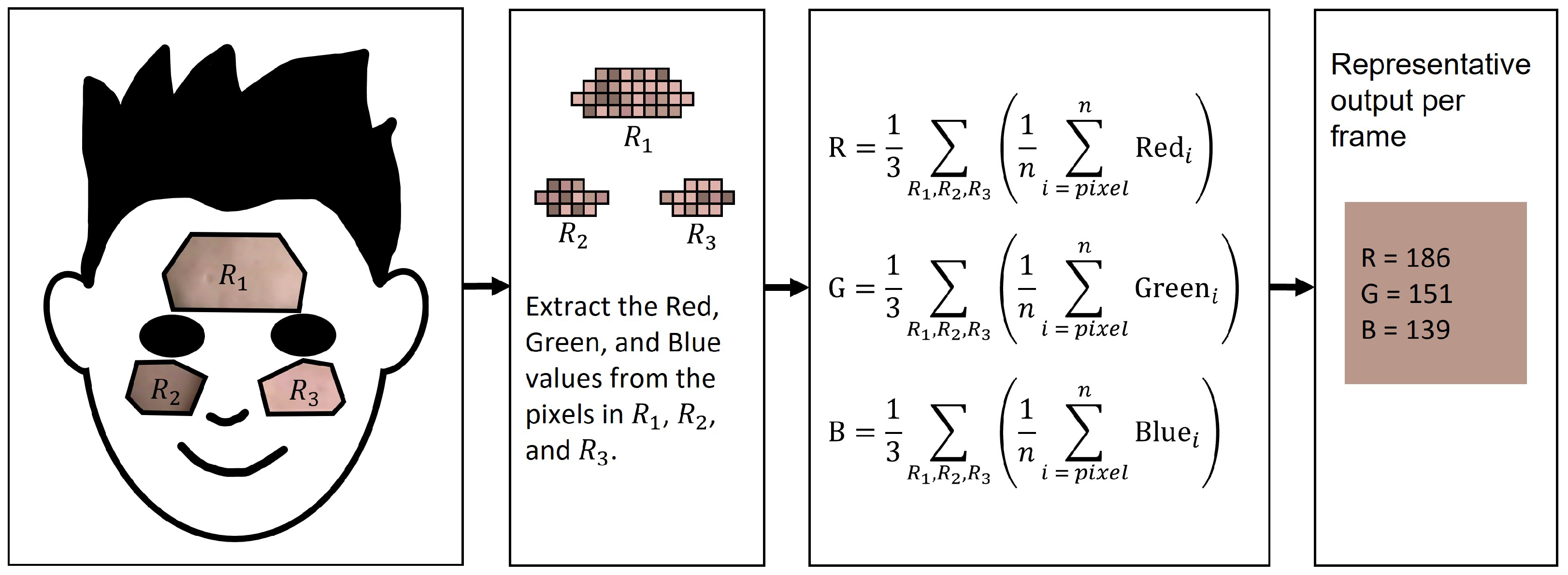

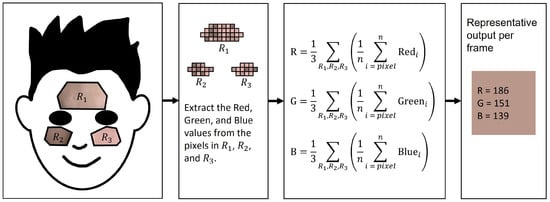

The RGB signal was obtained from the average RGB values of the pixels in the three regions of interest (ROIs). The ROIs were the forehead, left cheek, and right cheek in each frame, which were determined with landmarks from the MediaPipe Face Mesh [15]. The forehead and cheeks were found to be the most promising ROIs for rPPG in two independent ROI studies conducted by Kwon et al. [16] and Kim et al. [17]. The exact landmarks were (107, 66, 69, 109, 10, 338, 299, 296, 336, 9) for the forehead, (118, 119, 100, 126, 209, 49, 129, 203, 205, 50) for the left cheek, and (347, 348, 329, 355, 429, 279, 358, 423, 425, 280) for the right cheek, as shown in Figure 1.

Figure 1.

Graphical abstract of the pipeline from a frame to the R, G, and B values. The process repeats for each frame from the 1-min video resulting in the three time series: R, G, and B. i = pixel, n = number of pixels in ROI, , , = Regions of interests.
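The averaging step summarized in Figure 1 can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: the function names `roi_mean_rgb` and `rgb_time_series` are hypothetical, the ROI is assumed to be supplied as a boolean pixel mask (for example, one rasterized from the landmark polygons), and the three ROIs are assumed to be pooled into a single mask.

```python
import numpy as np


def roi_mean_rgb(frame: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Average the R, G, and B values over the pixels selected by a mask.

    frame: (height, width, 3) array in RGB order.
    mask:  (height, width) boolean array, True inside the ROI(s).
    """
    # Boolean indexing yields an (n_pixels, 3) array; average over pixels.
    return frame[mask].mean(axis=0)


def rgb_time_series(frames, masks) -> np.ndarray:
    """Stack the per-frame ROI means into a (num_frames, 3) array."""
    return np.stack([roi_mean_rgb(f, m) for f, m in zip(frames, masks)])
```

Each row of the returned array is one sample of the R, G, and B time series. Whether the ROIs are pooled or averaged separately first is a design choice that this sketch does not settle.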

Each video consists of a time series of frames. Each frame is composed of a number of pixels (e.g., full HD: $1920 \times 1080$ pixels). The proportions of the three main colors red, green, and blue define a color. This RGB scheme is based on additive color mixing and is only one of the numerous color models available; however, it is very popular, and a large number of video recordings are based on it.

This study aimed to observe the conversion of an RGB signal to an rPPG signal. We did not optimize the entire pipeline for optimal beats per minute (BPM) estimation. A detrend or a Butterworth bandpass filter, for example, can improve the rPPG signal [18,19]. The pipeline consisted of only one filter and did not use other optimization options. Our three proposed rPPG methods are summarized in Table 1. The RGB channels were divided into six 10-s, non-overlapping windows. For each window, a Butterworth bandpass filter of order six, ranging from 0.65 Hz to 4 Hz, was applied.
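The windowing and filtering step can be sketched as below. This is an illustration under stated assumptions, not the exact code of the study: the helper names `split_windows` and `bandpass` are hypothetical, the filter is applied forward and backward (zero phase), and the order passed to `scipy.signal.butter` is taken to be six even though SciPy doubles the effective order for bandpass designs.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 25.0  # camera frame rate in Hz, as reported for the dataset


def split_windows(rgb: np.ndarray, fs: float = FS, win_s: float = 10.0) -> np.ndarray:
    """Cut a (num_samples, 3) series into non-overlapping windows.

    Returns an array of shape (num_windows, window_length, 3); samples
    that do not fill a complete window are dropped.
    """
    win_len = int(round(win_s * fs))
    n_win = rgb.shape[0] // win_len
    return rgb[: n_win * win_len].reshape(n_win, win_len, rgb.shape[1])


def bandpass(x: np.ndarray, fs: float = FS, low: float = 0.65,
             high: float = 4.0, order: int = 6) -> np.ndarray:
    """Apply a Butterworth bandpass along the time axis (axis 0)."""
    # Second-order sections are numerically safer than (b, a) coefficients.
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    # Assumption: zero-phase filtering (one forward and one backward pass).
    return sosfiltfilt(sos, x, axis=0)
```

With a 60-s recording at 25 Hz, `split_windows` returns six windows of 250 samples each. The passband of 0.65 Hz to 4 Hz corresponds to heart rates between 39 and 240 BPM.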

Table 1.

Summary of the introduced rPPG methods. Note: B = blue channel, G = green channel, R = red channel.
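Following the hypothesis in Section 2.1, the three proposed methods are the green-to-red ratio, the green-to-blue ratio, and the sum of the two. A minimal sketch is given below; the function names `gr`, `gb`, and `grgb` are hypothetical, and the input is assumed to be the unfiltered per-frame ROI means with strictly positive values.

```python
import numpy as np


def gr(rgb: np.ndarray) -> np.ndarray:
    """Green-to-red ratio per sample; rgb has shape (num_samples, 3)."""
    return rgb[:, 1] / rgb[:, 0]


def gb(rgb: np.ndarray) -> np.ndarray:
    """Green-to-blue ratio per sample."""
    return rgb[:, 1] / rgb[:, 2]


def grgb(rgb: np.ndarray) -> np.ndarray:
    """Sum of the green-to-red and green-to-blue ratios."""
    return gr(rgb) + gb(rgb)
```

Because each ratio is computed sample by sample, scaling all three channels of a frame by the same factor leaves the output unchanged, which is the property the hypothesis relies on.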

We compared our rPPG methods with well-established rPPG methods: GREEN [13], ICA [20], PCA [21], the chrominance-based method (CHROM) [22], blood volume pulse (PBV) [23], plane orthogonal to the skin (POS) [11], local group invariance (LGI) [14], and orthogonal matrix image transformation (OMIT) [24]. All mentioned rPPG methods are implemented in the Python toolbox pyVHR from Boccignone et al. [25]. It is important to note that PCA and ICA are blind-source separation-based rPPG algorithms. In this study, the rPPG signal was the second component of ICA and PCA.

The RGB signal then consists of the average value of the ROI, resulting in three time series (red, green, and blue). Both the time-dependent representation $x_{RGB}(t) = [R(t), G(t), B(t)]^\top$ and the matrix representation $X_{RGB}$ are used.

The GREEN [13] rPPG method uses the green channel to construct the rPPG signal. Of the three RGB color channels, the green channel has the highest similarity with the PPG signal. This is based on the observation that hemoglobin, a protein involved in the transportation of oxygen, reaches its maximum level of absorption near green light [26]. The resulting rPPG signal is equal to the G color channel:

$$x_{rPPG}(t) = G(t)$$

The RGB signal is subjected to ICA [20] to recover three different source signals. Cardoso [27] invented the joint approximate diagonalization of eigenmatrices (JADE) technique, which was applied to the ICA method. Tensor methods are used in the ICA approach, which involves the joint diagonalization of cumulant matrices and the use of fourth-order cumulant tensors. The solution approximates the statistical independence of the sources (to the fourth order). Although the ICA components are not ordered, the second component usually contains a substantial rPPG signal and is used in the ICA rPPG method. The temporal RGB traces $x_{RGB}(t)$ are expressed as follows:

$$x_{RGB}(t) = A\,s(t)$$

where A is a memoryless mixture matrix of the latent sources $s(t)$. The source recovery problem can be recast as the estimation of a demixing matrix $W \approx A^{-1}$, such that $\hat{s}(t) = W\,x_{RGB}(t)$. This is a blind source separation problem that was solved by Cardoso [27]. The highest correlation with the PPG signal was found in the second component:

$$x_{rPPG}(t) = \hat{s}_2(t)$$

PCA is a technique that is frequently used for data reduction in pattern recognition and signal processing. PCA can also be used to obtain an rPPG signal from an RGB signal [21]. PCA and ICA try to find the most periodic signals. However, movement or other disturbances can also be periodic.

In PCA, the idea is to find N linearly transformed components of the RGB signal $x_{RGB}(t)$ that capture the maximum possible amount of variance. The principal components are given by

$$y(t) = W\,x_{RGB}(t)$$

where W represents the de-mixing matrix, which is estimated by PCA such that the greatest possible variance lies on the first coordinate. For the rPPG signal, the second component [21] is often used:

$$x_{rPPG}(t) = y_2(t)$$
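A PCA-based extraction can be sketched with a singular value decomposition of the mean-centered RGB series. This is a minimal sketch with hypothetical function names (`pca_components`, `pca_rppg`); it is not the pyVHR implementation, and the sign of each component is arbitrary.

```python
import numpy as np


def pca_components(rgb: np.ndarray) -> np.ndarray:
    """Return principal-component time series, ordered by decreasing variance.

    rgb has shape (num_samples, 3); the result has the same shape.
    """
    centered = rgb - rgb.mean(axis=0)  # remove the per-channel mean
    # Rows of vt are the principal directions in RGB space.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt.T


def pca_rppg(rgb: np.ndarray) -> np.ndarray:
    """Use the second principal component as the rPPG signal."""
    return pca_components(rgb)[:, 1]
```

If a strong periodic disturbance dominates the variance, it occupies the first component, which is one intuition for why the second component is often chosen.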

The OMIT method calculates the rPPG signal by generating an orthogonal matrix with linearly uncorrelated components representing the orthogonal components in the RGB signal, relying on matrix decomposition.

The QR factorization [28] method is used to find linear least-squares solutions in the RGB space with Householder reflections [29]. The resulting equation is:

$$X_{RGB} = Q\,T$$

where Q is the orthogonal basis for $X_{RGB}$ and T is an upper-right triangular, invertible matrix that displays the relationships between the columns in Q.

The CHROM [22] method removes noise caused by light reflection through color difference channel normalization. The method is based on the idea that the ratio of two normalized color channels is not affected by motion relative to the light source, because the resulting intensity changes affect all channels equally. From the RGB signal, the method builds two orthogonal chrominance signals: $X_{CHROM} = 3R - 2G$ and $Y_{CHROM} = 1.5R + G - 1.5B$. The resulting rPPG signal is given by:

$$x_{rPPG}(t) = X_{CHROM}(t) - \alpha\,Y_{CHROM}(t)$$

where $\alpha = \sigma(X_{CHROM}) / \sigma(Y_{CHROM})$ and $\sigma(\cdot)$ is the standard deviation.
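The chrominance projection can be illustrated with a short single-window sketch. It assumes the commonly cited chrominance signals $3R - 2G$ and $1.5R + G - 1.5B$ and a division of each channel by its temporal mean; the function name `chrom_rppg` is hypothetical, and the band-pass filtering used in the original CHROM publication is omitted here.

```python
import numpy as np


def chrom_rppg(rgb: np.ndarray) -> np.ndarray:
    """Single-window CHROM-style projection; rgb has shape (num_samples, 3)."""
    # Assumption: normalize each channel by its temporal mean in the window.
    norm = rgb / rgb.mean(axis=0)
    r, g, b = norm[:, 0], norm[:, 1], norm[:, 2]
    x = 3.0 * r - 2.0 * g            # first chrominance signal
    y = 1.5 * r + g - 1.5 * b        # second chrominance signal
    alpha = np.std(x) / np.std(y)    # ratio of standard deviations
    return x - alpha * y
```

In both chrominance signals, the channel weights sum to one, so an intensity change shared by all normalized channels appears identically in both and is largely removed by the weighted difference.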

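The POS method [11] uses the plane orthogonal to the skin tone in the RGB signal to calculate rPPG. The resulting rPPG signal is given by:

$$x_{rPPG}(t) = X_{POS}(t) + \alpha\,Y_{POS}(t)$$

where $X_{POS} = G - B$, $Y_{POS} = G + B - 2R$, and $\alpha = \sigma(X_{POS}) / \sigma(Y_{POS})$ is calculated as in CHROM.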
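A simplified POS-style projection for a single window is sketched below. It assumes the projection signals $G - B$ and $G + B - 2R$ on temporally normalized channels; the function name `pos_rppg` is hypothetical, and the sliding-window overlap-add procedure of the original POS algorithm [11] is deliberately left out.

```python
import numpy as np


def pos_rppg(rgb: np.ndarray) -> np.ndarray:
    """Single-window POS-style projection; rgb has shape (num_samples, 3)."""
    # Temporal normalization: divide each channel by its mean in the window.
    norm = rgb / rgb.mean(axis=0)
    r, g, b = norm[:, 0], norm[:, 1], norm[:, 2]
    x = g - b                        # first projection signal
    y = g + b - 2.0 * r              # second projection signal
    alpha = np.std(x) / np.std(y)    # ratio of standard deviations
    return x + alpha * y
```

In both projection signals, the channel weights sum to zero, so an intensity change shared by all normalized channels cancels before the two signals are combined.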

To distinguish pulse-induced color changes from motion noise, the PBV [23] approach derives the rPPG signal in the RGB data with blood volume pulse fluctuations. Differences in a normalized RGB space along a very accurate vector result from the different absorption spectra of arterial blood and bloodless skin. The blood volume pulse vector results from the vector representation of the preprocessed color channels.

For a specific light spectrum and specific transfer properties of the optical filters in the camera, the blood volume pulse vector can be identified. An rPPG algorithm with high motion robustness can be created using this “signature”. The rPPG signal is calculated by the projection:

where M is the orthogonal matrix:

where k is a normalization factor.

The LGI [14] method calculates an rPPG signal with a robust algorithm using local transformations. The rPPG signal is computed by:

$$x_{rPPG}(t) = D\,x_{RGB}(t)$$

where D is:

$$D = I - U U^\top$$

and where U is the result of a singular value decomposition of the RGB signal. The identity matrix is I.
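One possible reading of this projection is sketched below. It assumes that U is the first left singular vector of the 3-by-T RGB matrix, that the projector is the identity minus the outer product of U with itself, and that the green row of the projected signal is taken as the rPPG signal. The function name `lgi_rppg` is hypothetical, and all three choices are assumptions rather than details confirmed by the text.

```python
import numpy as np


def lgi_rppg(rgb: np.ndarray) -> np.ndarray:
    """LGI-style projection; rgb has shape (num_samples, 3)."""
    x = rgb.T                                   # shape (3, num_samples)
    u, _, _ = np.linalg.svd(x, full_matrices=False)
    u1 = u[:, :1]                               # dominant direction, shape (3, 1)
    d = np.eye(3) - u1 @ u1.T                   # projector removing that direction
    y = d @ x                                   # projected RGB signal
    return y[1]                                 # assumption: keep the green row
```

The projector removes the direction in RGB space that carries the most energy, which for face video is typically dominated by overall intensity rather than by the pulse.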

It can be stated that every established method, besides GREEN [13], requires more calculation steps than the simple ratio-based methods presented in this paper.

2.4. Evaluation Metric

To determine the absolute BPM difference (|ΔBPM|), a power spectrum (PS) analysis was conducted. A PS is commonly defined as the fast Fourier transform of the autocorrelation function [30]. The outcome is shown as a plot of signal power against frequency. Since the frequency of the peak in the PS plot corresponds to the HR, this analysis is often performed for PPG and rPPG signals. The resulting |ΔBPM| is the difference between the peak frequencies, expressed in BPM, of the PS of the estimated rPPG signal and the PS of the reference fingertip signal, each computed from a normalized 10-second window.
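The metric can be sketched as follows. The power spectrum is computed here as the squared magnitude of the FFT, which is equivalent to the Fourier transform of the autocorrelation by the Wiener–Khinchin theorem. The function names `peak_bpm` and `abs_bpm_diff` are hypothetical, and restricting the peak search to 0.65 Hz to 4 Hz (the filter passband) is an assumption of this sketch.

```python
import numpy as np


def peak_bpm(signal, fs: float, low: float = 0.65, high: float = 4.0) -> float:
    """Return the frequency of the power-spectrum peak, in beats per minute."""
    x = np.asarray(signal, dtype=float)
    x = (x - x.mean()) / x.std()                 # normalize the window
    power = np.abs(np.fft.rfft(x)) ** 2          # periodogram
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    band = (freqs >= low) & (freqs <= high)      # assumed search band
    return 60.0 * freqs[band][np.argmax(power[band])]


def abs_bpm_diff(rppg, fs_rppg: float, ppg, fs_ppg: float) -> float:
    """Absolute difference between the rPPG and reference PPG peak rates."""
    return abs(peak_bpm(rppg, fs_rppg) - peak_bpm(ppg, fs_ppg))
```

Because the two signals are compared in the frequency domain, their different sampling rates (25 Hz and 60 Hz) need no resampling. Without zero-padding or interpolation, a 10-second window gives a frequency resolution of 0.1 Hz, so differences computed this way come in steps of 6 BPM.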

3. Results

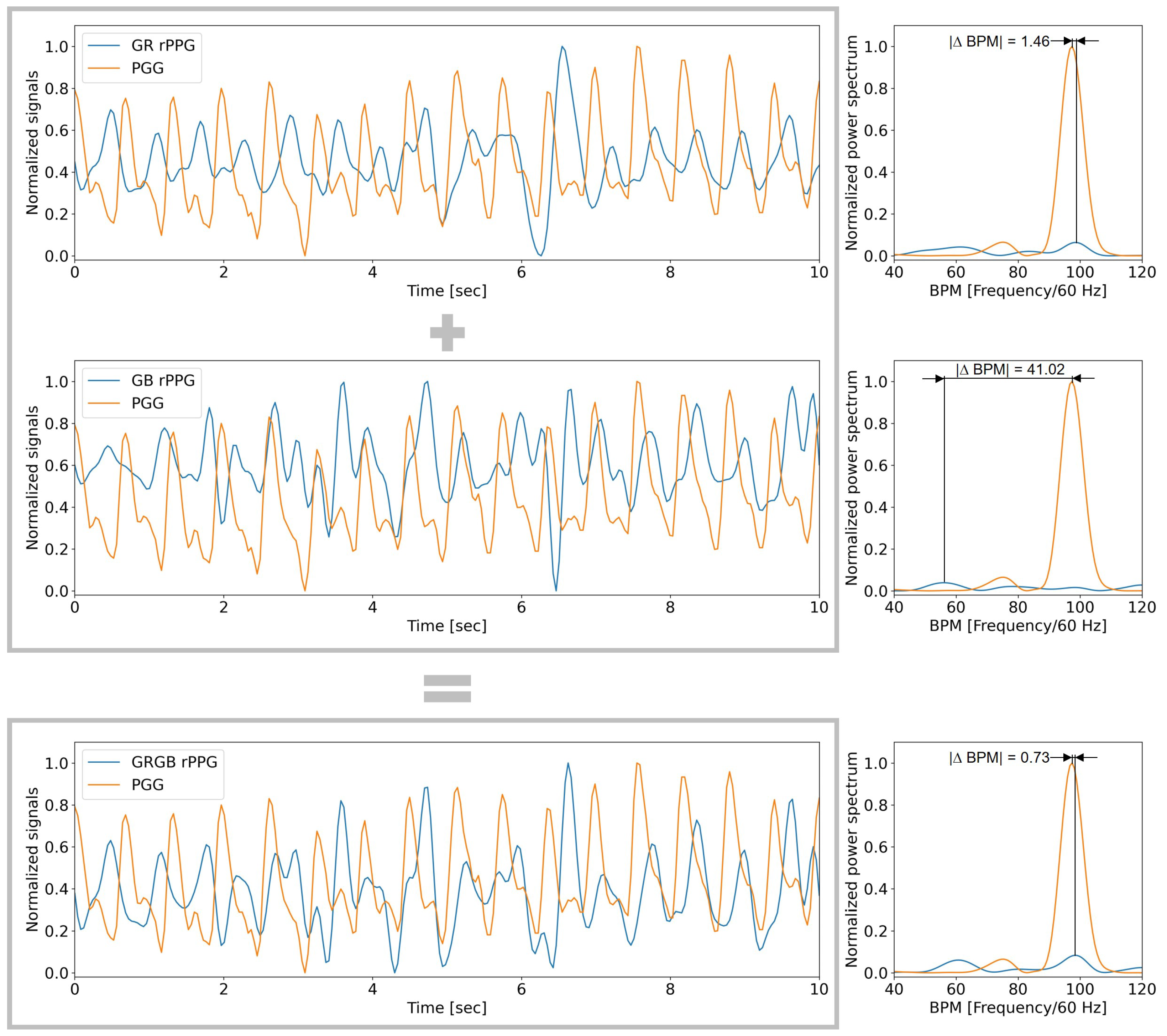

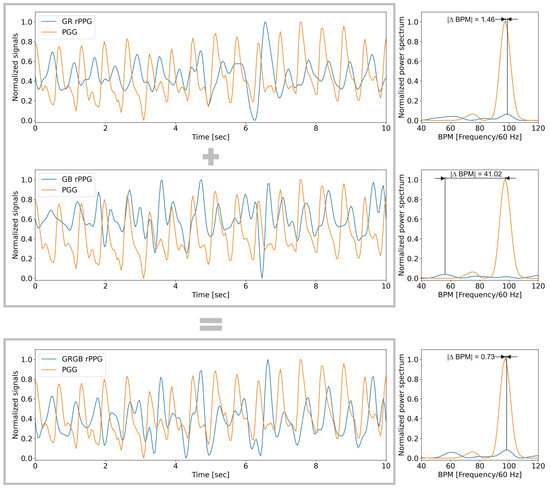

Figure 2 shows an example of the 10-second window of the rPPG signal from the proposed methods. In the figure, the systolic peaks are recognizable. However, it is not easy to visually compare the results; therefore, the power spectrum is plotted on the right side of each signal. The heart rate derived from rPPG constructed using the GR method was close to the heart rate calculated via the fingertip PPG signal, with , while the rPPG constructed using the GB method achieved . Interestingly, the GRGB achieved .

Figure 2.

Representative normalized rPPG signals of the three proposed methods from a 10-second window in the Gym video setting of the participant, David, and the reference fingertip PPG signal. The combination of the GR and GB method results in the GRGB method.

The ’Gym’ video setting is one of the more challenging settings for determining heart rate. Compared to the other video settings, additional noise sources include many small facial movements caused by the physical activity, sweat on the participant’s face, and a heart rate that is not necessarily constant over a 10-second window. The effect of these noise sources can be seen in Figure 2, where the heart rate peak of the rPPG signal does not stand out from the surrounding peaks. However, by combining the GB and GR methods, the overall result is improved.

In the Resting video setting, the OMIT method performed best, followed by GRGB, CHROM, and LGI, which performed equally well. In the Gym video setting, the GRGB method performed best, followed by POS. These two methods set themselves apart here and showed particular motion robustness. Together with the Rotation video setting, Gym belongs to the video settings with the highest |ΔBPM| on average. In these two video settings, it can be seen that the GRGB method performed significantly better than the GR or GB methods individually, as shown in Table 2.

Table 2.

Average |ΔBPM| for each video setting and rPPG method. A small |ΔBPM| means that the calculated BPM from the PPG and rPPG signals are in high agreement.

The light conditions in the ’Talk’ video setting are unique because the scene is lit by natural light. In this case, our methods, which are based on simple fractions without any color channel normalization, showed modest performance. Here, the CHROM method performed best, followed by POS. Again, as can be seen in Table 2, GRGB performed better than GR or GB individually, although not as clearly as in the Gym or Rotation video settings.

The Rotation video setting had the highest |ΔBPM| on average and stood out with a particularly high level of head movements. Here, our proposed GRGB method also showed the best performance. This can be interpreted as confirmation of its high level of motion robustness. The POS method showed the second-best results in the Rotation video setting.

Overall, the GRGB method outperformed all of the other methods. Surprisingly, in the Gym video setting, the GRGB method was the best and showed high motion robustness. The GR method also performed well. In this study, the three best-performing methods are POS, LGI, and GRGB.

4. Discussion

It was also demonstrated that the GR method performed better than the green channel alone for every video setting. This can be interpreted as confirmation of the present study’s hypothesis. The sum of the two-color channel ratio applied in the GRGB method shows superior performance, although the single-ratio GB always performed worse than the GR. POS also leads to good results in our comparison, but the GRGB is simpler and less computationally complex. The time complexity of POS is while GRGB’s time complexity is . POS requires multiple calculation steps, including temporal normalization for the chosen window length.

In the video recordings with a lot of motion and indoor lighting (e.g., Gym and Rotation), the GRGB method performed particularly well. Our GRGB method outperformed all the rPPG methods evaluated in this study. All of our proposed methods would produce the same rPPG signal at other RGB window lengths. Other methods, such as PCA or ICA, depend on the length of the signal used and eventually produce different results for different window lengths. In the existing literature, more than eight studies have introduced an rPPG method; at least seven of these studies reported results for indoor lighting conditions, which are worse than our simple GRGB method. These eight rPPG methods all have the same goals, and it is unclear under which conditions they perform best. More research is needed to understand which methods have better performance in specific environmental settings and under specific movement conditions. The LGI-PPGI dataset from Pilz et al. [14] is particularly suitable for investigation because it includes four different settings. For each video setting, there is a new ranking that, to the best of our knowledge, is not predictable. However, this dataset has limitations, as it consists mainly of Caucasian participants.

The literature almost unanimously ascertains that darker skin tends to make rPPG methods more error-prone [31,32]. However, there is no threshold value for melanin content at which the method’s performance decreases drastically [31]. Thus, the evaluated rPPG methods may perform differently for darker skin tones [33]. Therefore, more research should be conducted, ideally using a dataset that is representative of the general population in terms of skin tone. Furthermore, the participants tended to be young and healthy. Age could be a factor for algorithm potential bias [34]. It would also be interesting to compare participants who suffer from CVDs. Further investigation is needed to determine the possible influencing factors and to gain a deeper understanding of the relationship between the three color channels and the rPPG signal.

The GRGB method runs only one simple mathematical equation, making it an optimal rPPG approach for real-time applications, especially for artificial lighting conditions. The applied dataset consists of six people, each with four video settings. A higher number of participants or more video material could help increase this study’s significance. This study showed that a simple ratio of the green and red channels is an acceptable rPPG method for ideal environmental conditions (e.g., indoor lighting and minimal movements).

In addition to the environmental conditions during recording, the technical data of the videos are also relevant, such as resolution and frames per second. In the dataset used, the resolution is relatively low at , but it must be noted that the average values of the color channel in the ROIs per frame are used, as shown in Figure 1. Therefore, it can be assumed that higher resolutions can only slightly improve the result. In contrast, the frame rate probably has a stronger influence, as oximeters often work with higher frequencies. However, it must be taken into account that we have limited ourselves to a consumer-grade web camera. In summary, the video quality used is rather at the lower end of modern webcams, and the results could possibly be improved by a better web camera.

An increase in the complexity of rPPG methods can be observed in the literature. More specific methods are presented that are more suitable for specific scenarios or datasets. We greatly appreciate the work of the researchers who have investigated the current state of rPPG. The study discussed in this paper challenges the status quo and shows that much simpler methods can also produce sophisticated results.

We have provided the following recommendations for future research:

1. Examining the proposed GRGB method as a benchmark rPPG method.

2. Exploring the illumination changes. The lighting used in indoor settings is often not described well enough. To understand the rPPG method more deeply, it is essential to study the light that illuminates the face (e.g., spectrum or angle to the face).

3. Investigating the impact of ROI. Any movement of the subject can introduce artifacts into the extracted ROI, making it difficult to separate the signal from the noise. For video settings with little movement and constant light, we recommend focusing more on ROI selection.

4. Collecting videos from participants with different skin colors. We recommend testing all the examined algorithms on participants of different ethnicities and age ranges.

5. Conclusions

This study showed that a ratio-based RGB method is sufficient for constructing rPPG signals for HR estimation. Three ratio-based RGB methods were investigated: GR, GB, and GRGB. Both GR and GB provided HR estimations comparable to those of complex rPPG methods. Interestingly, the GRGB method achieved optimal performance compared to techniques with more sophisticated image processing algorithms capable of accurately detecting and tracking blood flow changes in real time. The proposed low-complexity and easy-to-implement method paves the way for the future of rPPG technology, with the aim of making rPPG construction fast, accurate, robust, and accessible to a broader range of users.

Author Contributions

M.E. designed and led the study. F.H., C.M., and M.E. conceived the study. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset used is available at https://github.com/partofthestars/LGI-PPGI-DB (accessed on 1 January 2022). The applied rPPG methods are part of the pyVHR toolbox from https://github.com/phuselab/pyVHR (accessed on 1 January 2022).

Conflicts of Interest

The authors declare that there are no competing interests.

References

1. Elgendi, M. PPG Signal Analysis; Taylor & Francis (CRC Press): Boca Raton, FL, USA, 2020.

2. Elgendi, M. On the analysis of fingertip photoplethysmogram signals. Curr. Cardiol. Rev. 2012, 8, 14–25.

3. Haugg, F.; Elgendi, M.; Menon, C. Assessment of blood pressure using only a smartphone and machine learning techniques: A systematic review. Front. Cardiovasc. Med. 2022, 9, 894224.

4. Frey, L.; Menon, C.; Elgendi, M. Blood pressure measurement using only a smartphone. NPJ Digit. Med. 2022, 5, 86.

5. Lin, W.-H.; Wu, D.; Li, C.; Zhang, H.; Zhang, Y.-T. Comparison of heart rate variability from ppg with that from ecg. In The International Conference on Health Informatics; Zhang, Y.-T., Ed.; Springer International Publishing: Cham, Switzerland, 2014; pp. 213–215.

6. Elgendi, M.; Fletcher, R.; Liang, Y.; Howard, N.; Lovell, N.H.; Abbott, D.; Lim, K.; Ward, R. The use of photoplethysmography for assessing hypertension. NPJ Digit. Med. 2019, 2, 60.

7. WHO: Cardiovascular Diseases. 2022. Available online: https://www.who.int/health-topics/cardiovascular-diseases (accessed on 19 July 2022).

8. Rocque, G.B.; Rosenberg, A.R. Improving outcomes demands patient-centred interventions and equitable delivery. Nat. Rev. Clin. Oncol. 2022, 19, 569–570.

9. Subbiah, V. The next generation of evidence-based medicine. Nat. Med. 2023, 29, 49–58.

10. Boccignone, G.; Conte, D.; Cuculo, V.; d’Amelio, A.; Grossi, G.; Lanzarotti, R. An open framework for remote-ppg methods and their assessment. IEEE Access 2020, 8, 216083–216103.

11. Wang, W.; den Brinker, A.C.; Stuijk, S.; de Haan, G. Algorithmic principles of remote ppg. IEEE Trans. Biomed. Eng. 2016, 64, 1479–1491.

12. Haugg, F.; Elgendi, M.; Menon, C. Effectiveness of remote ppg construction methods: A preliminary analysis. Bioengineering 2022, 9, 485.

13. Verkruysse, W.; Svaasand, L.O.; Nelson, J.S. Remote plethysmographic imaging using ambient light. Opt. Express 2008, 16, 21434–21445.

14. Pilz, C.S.; Zaunseder, S.; Krajewski, J.; Blazek, V. Local group invariance for heart rate estimation from face videos in the wild. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1335–13358.

15. Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time facial surface geometry from monocular video on mobile gpus. In Proceedings of the CVPR Workshop on Computer Vision for Augmented and Virtual Reality 2019, Long Beach, CA, USA, 17 June 2019.

16. Kwon, S.; Kim, J.; Lee, D.; Park, K. Roi analysis for remote photoplethysmography on facial video. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 4938–4941.

17. Kim, D.-Y.; Lee, K.; Sohn, C.-B. Assessment of roi selection for facial video-based rppg. Sensors 2021, 21, 7923.

18. Tarvainen, M.; Rantaaho, P.; Karjalainen, P. An advanced detrending method with application to hrv analysis. IEEE Trans. Biomed. Eng. 2002, 49, 172–175.

19. Kim, J.; Ahn, J. Design of an optimal digital iir filter for heart rate variability by photoplethysmogram. Int. J. Eng. Res. Technol. 2018, 11, 2009–2021.

20. Poh, M.-Z.; McDuff, D.J.; Picard, R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 2010, 18, 10762–10774.

21. Lewandowska, M.; Rumiński, J.; Kocejko, T.; Nowak, J. Measuring pulse rate with a webcam — a non-contact method for evaluating cardiac activity. In Proceedings of the 2011 Federated Conference on Computer Science and Information Systems (FedCSIS), Szczecin, Poland, 18–21 September 2011; pp. 405–410.

22. de Haan, G.; Jeanne, V. Robust pulse rate from chrominance-based rppg. IEEE Trans. Biomed. Eng. 2013, 60, 2878–2886.

23. de Haan, G.; van Leest, A. Improved motion robustness of remote-PPG by using the blood volume pulse signature. Physiol. Meas. 2014, 35, 1913–1926.

24. Casado, C.; López, M. Face2ppg: An unsupervised pipeline for blood volume pulse extraction from faces. arXiv 2022, arXiv:2202.04101.

25. Boccignone, G.; Conte, D.; Cuculo, V.; D’Amelio, A.; Grossi, G.; Lanzarotti, R.; Mortara, E. pyVHR: A python framework for remote photoplethysmography. Peerj Comput. Sci. 2022, 8, e929.

26. Van Kampen, E.J.; Zijlstra, W.G. Determination of hemoglobin and its derivatives. Adv. Clin. Chem. 1965, 8, 141–187.

27. Cardoso, J.F. High-order contrasts for independent component analysis. Neural Comput. 1999, 11, 157–192.

28. Francis, J.G.F. The QR Transformation—Part 2. Comput. J. 1962, 4, 332–345.

29. Householder, A.S. Unitary triangularization of a nonsymmetric matrix. J. ACM 1958, 5, 339–342.

30. Semmlow, J. Signals and Systems for Bioengineers; Elsevier: Amsterdam, The Netherlands, 2012.

31. Dasari, A.; Prakash, S.K.A.; Jeni, L.A.; Tucker, C.S. Evaluation of biases in remote photoplethysmography methods. NPJ Digit. Med. 2021, 4, 91.

32. Nowara, E.M.; McDuff, D.; Veeraraghavan, A. A meta-analysis of the impact of skin tone and gender on non-contact photoplethysmography measurements. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 14–19 June 2020.

33. Sinaki, F.Y.; Ward, R.; Abbott, D.; Allen, J.; Fletcher, R.R.; Menon, C.; Elgendi, M. Ethnic disparities in publicly-available pulse oximetry databases. Commun. Med. 2022, 2, 59.

34. Elgendi, M.; Fletcher, R.R.; Tomar, H.; Allen, J.; Ward, R.; Menon, C. The striking need for age diverse pulse oximeter databases. Front. Med. 2021, 8, 782422.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).