Detection of Cardiovascular Disease from Clinical Parameters Using a One-Dimensional Convolutional Neural Network

Abstract

1. Introduction

- A. Proposing a heart disease diagnosis model based on a 1D CNN, trained on a large dataset of clinical parameters;

- B. Analyzing how the 1D CNN behaves when the dataset is imbalanced;

- C. Comparing the model with contemporary ML algorithms using performance evaluation metrics;

- D. Making recommendations, including tuned hyperparameters for the 1D CNN and feature optimization algorithms, for developing a system that diagnoses heart disease in its early stages.

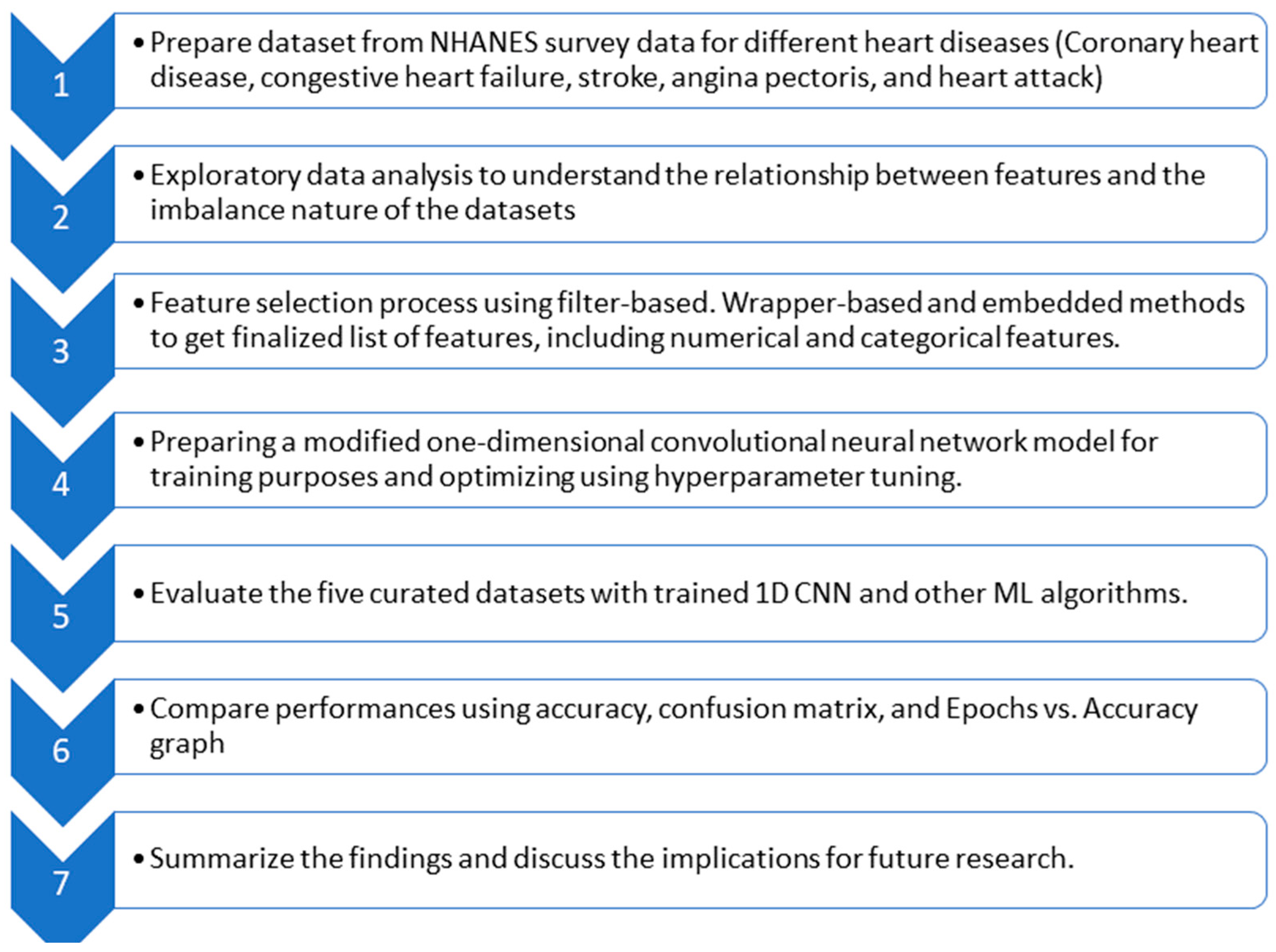

2. Proposed Technique

2.1. Dataset Selection and Preparation

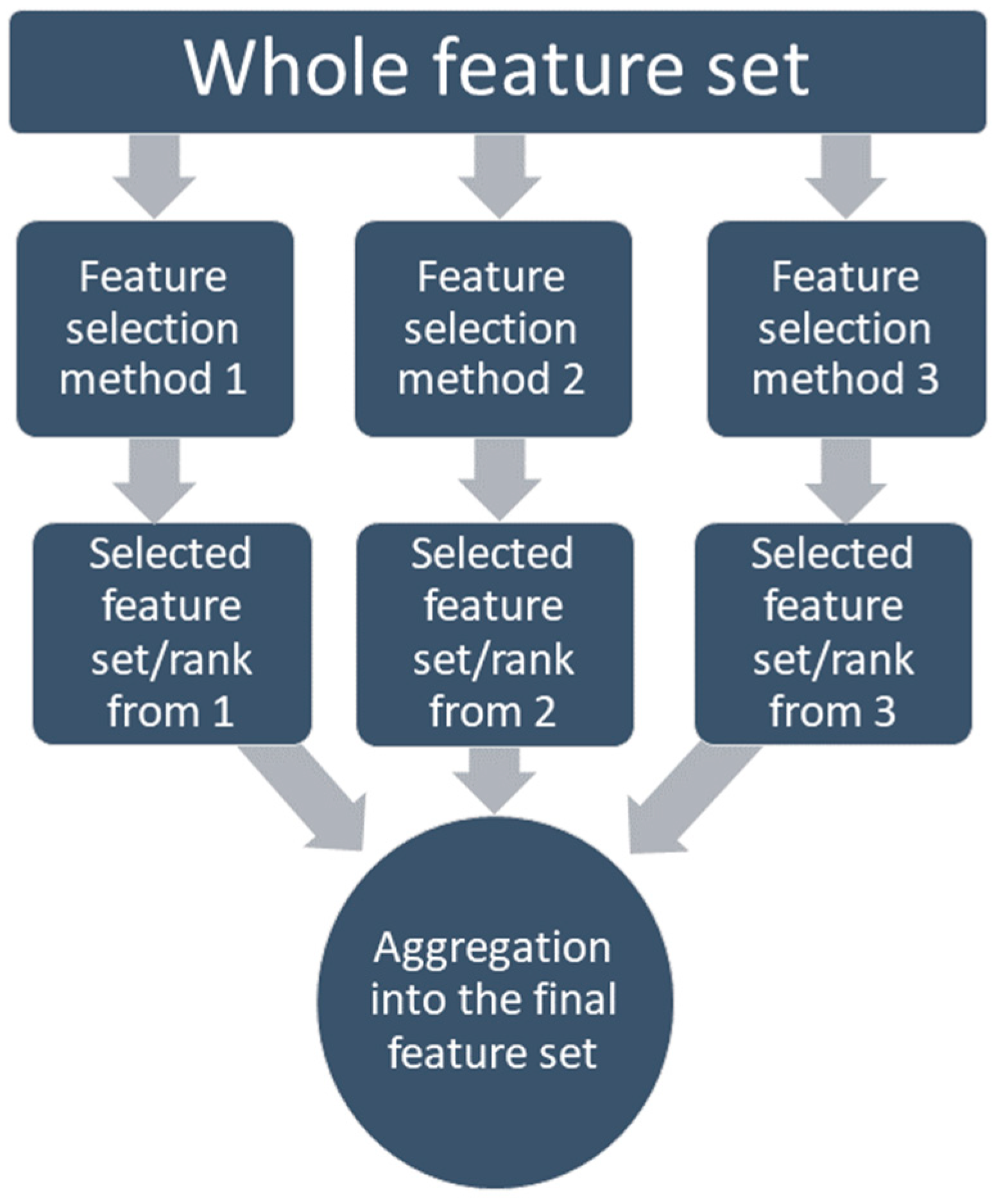

2.2. Feature Selection

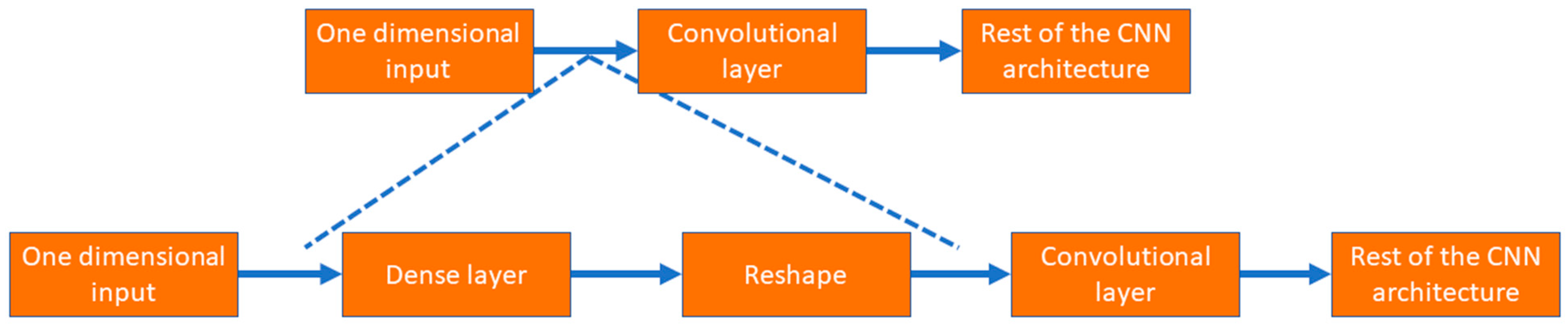

2.3. Convolutional Neural Network

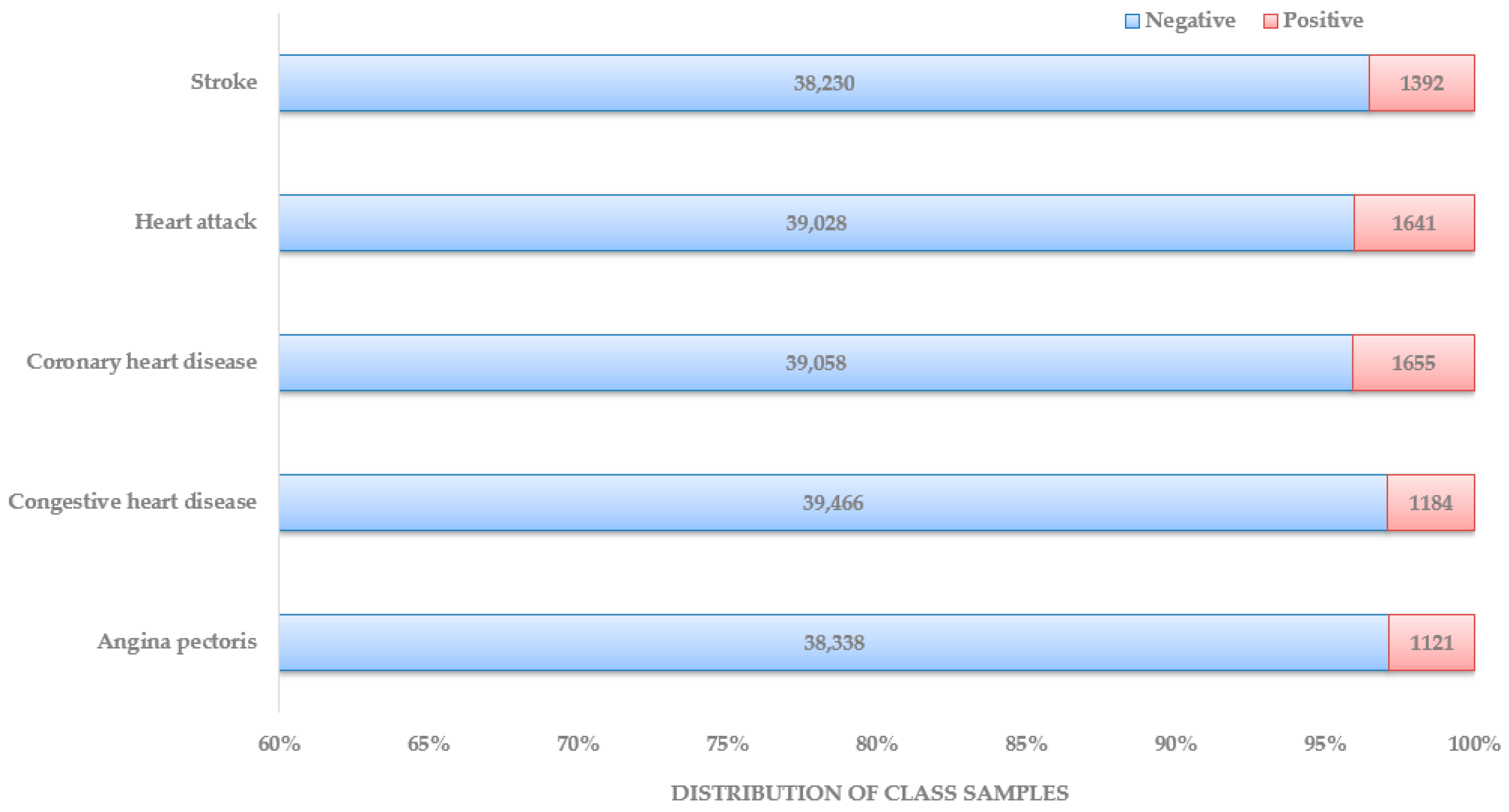

3. Data Analysis

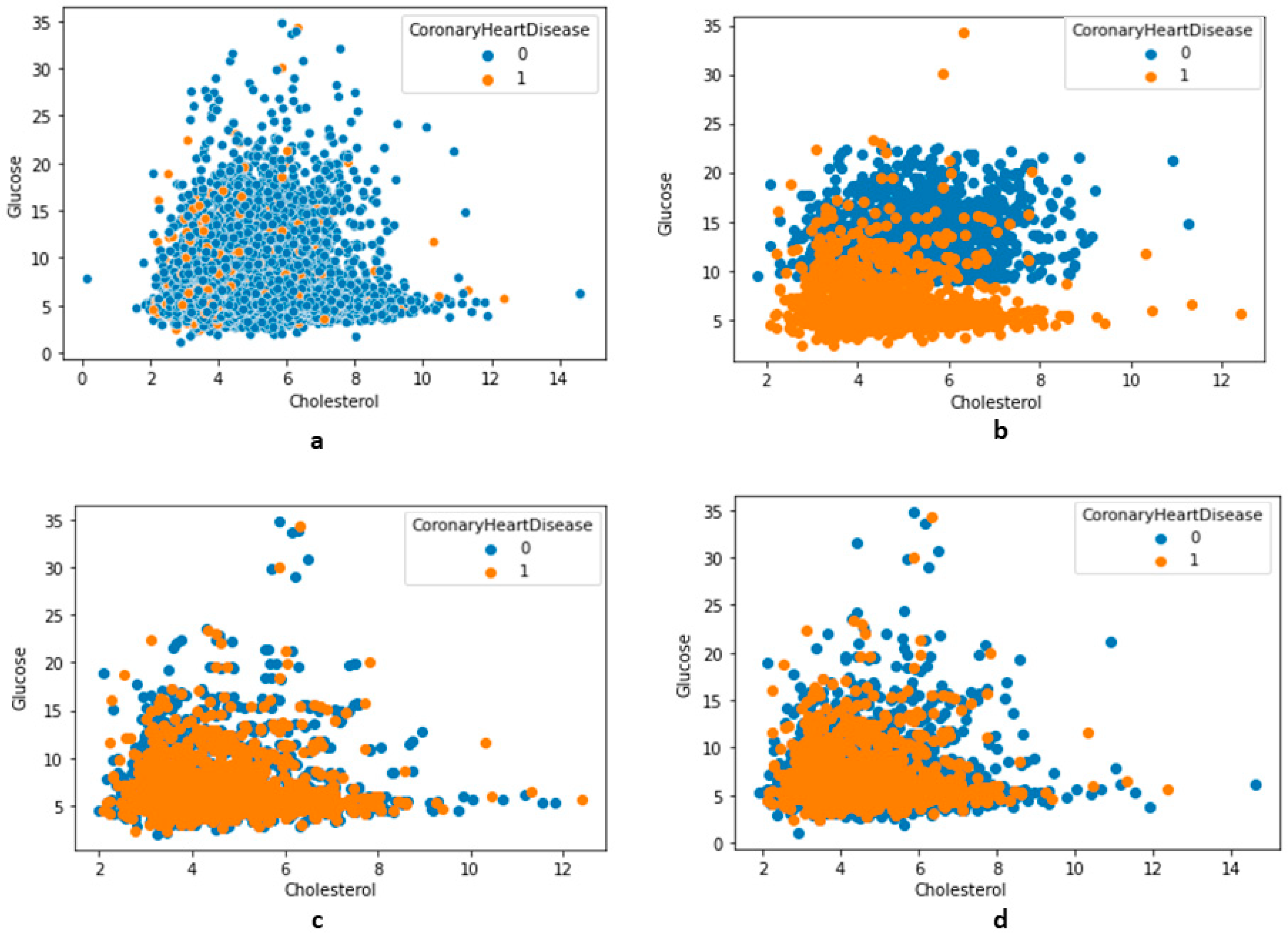

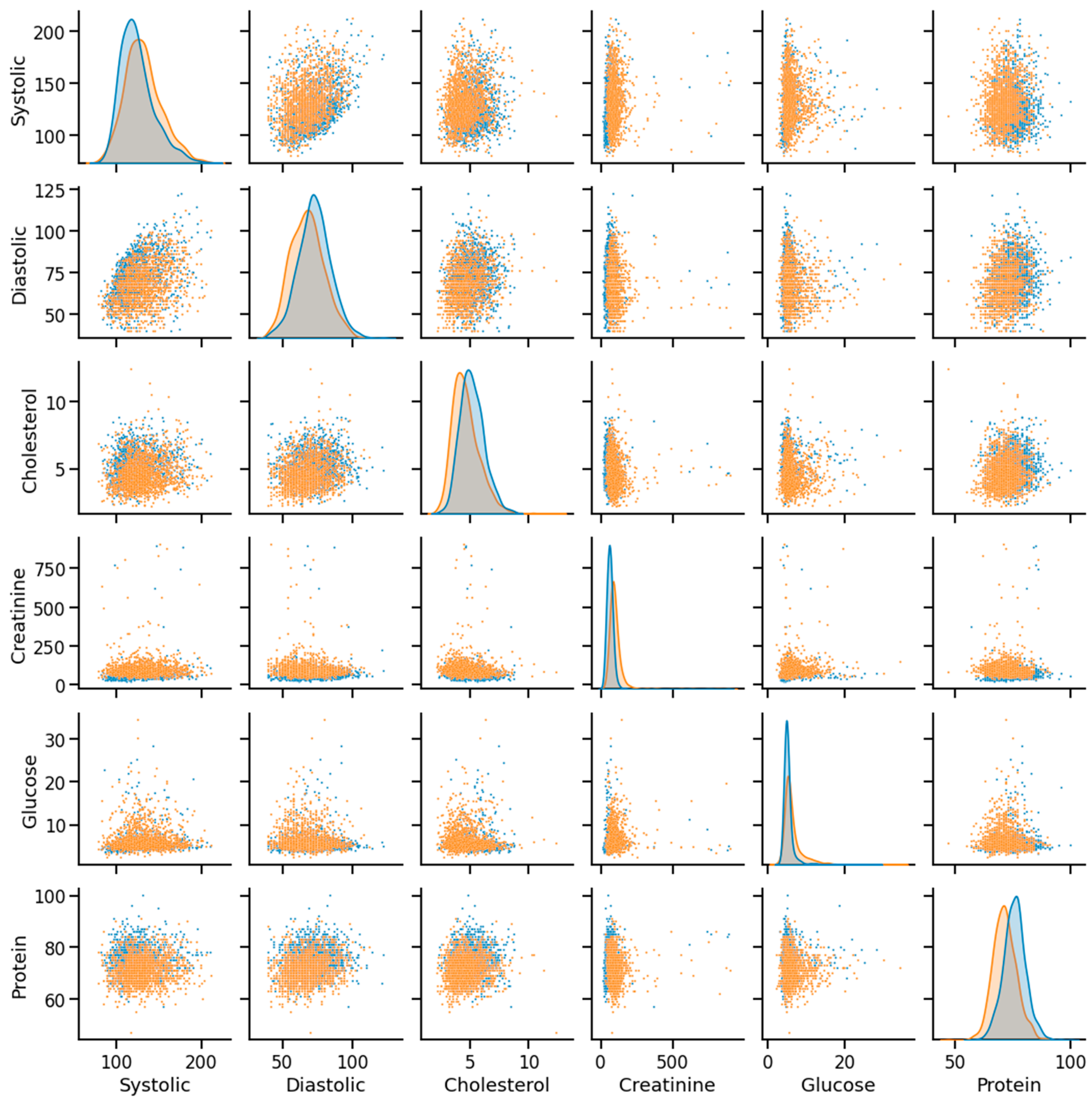

3.1. Data Imbalance and Exploratory Analysis

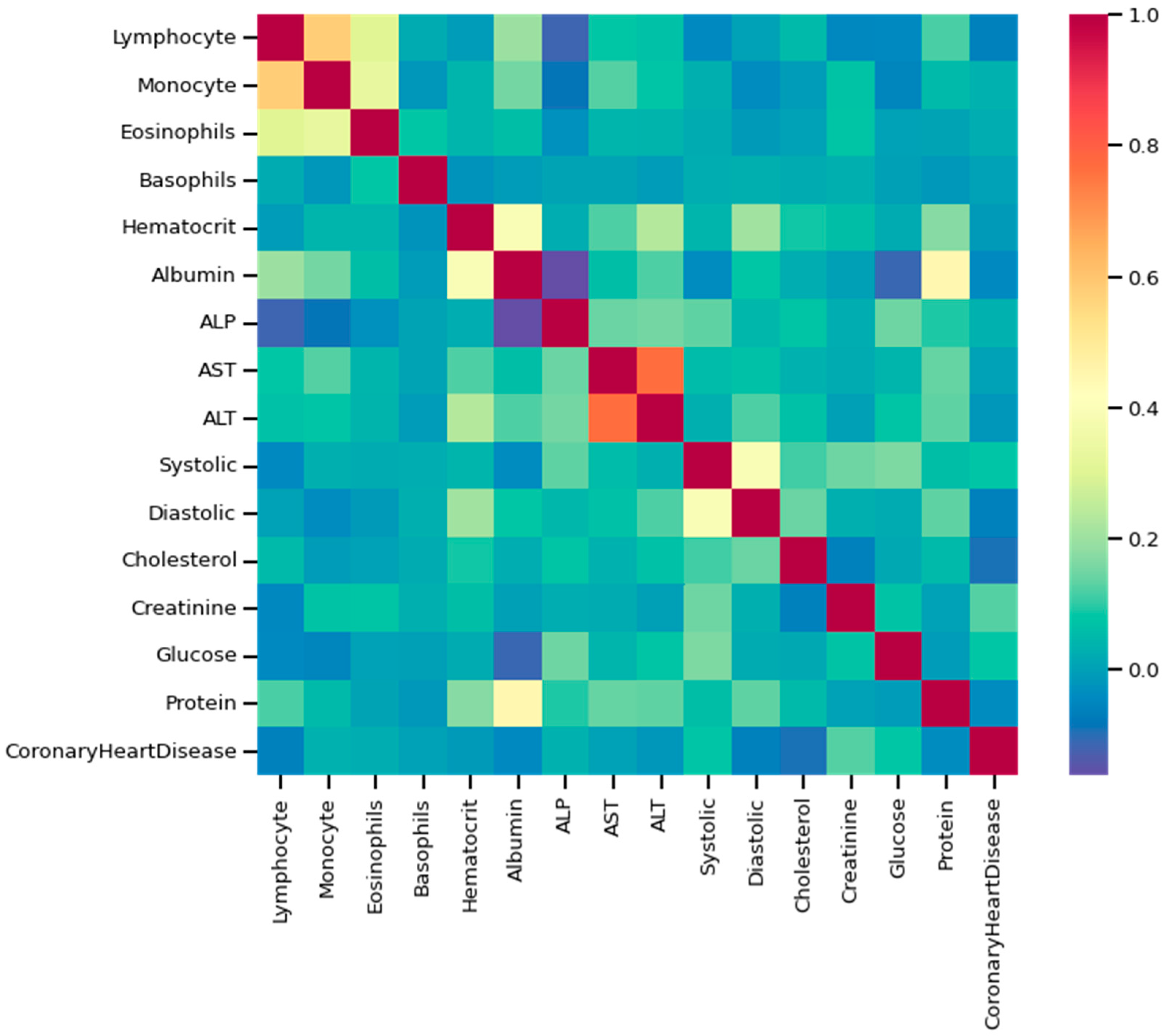

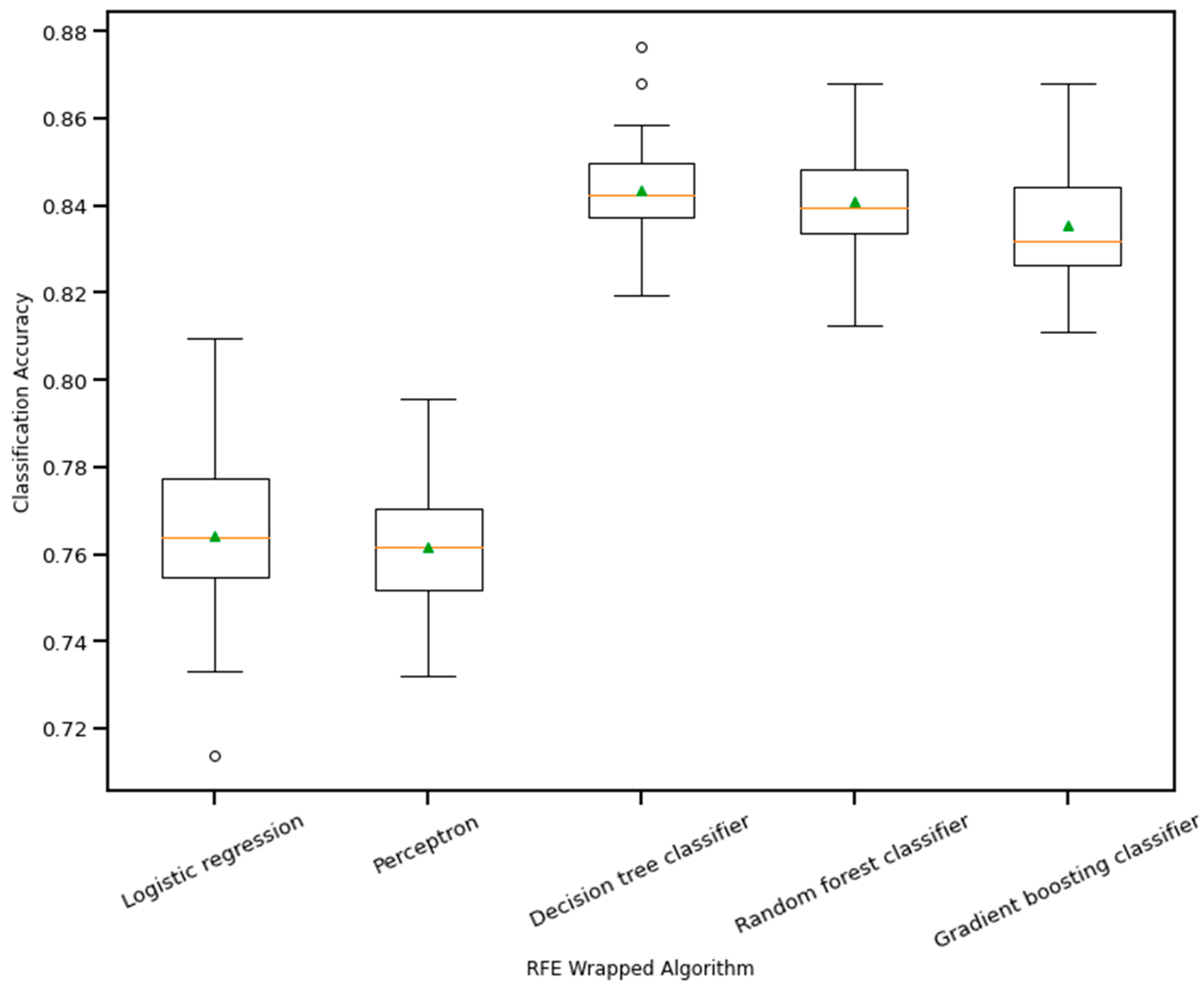

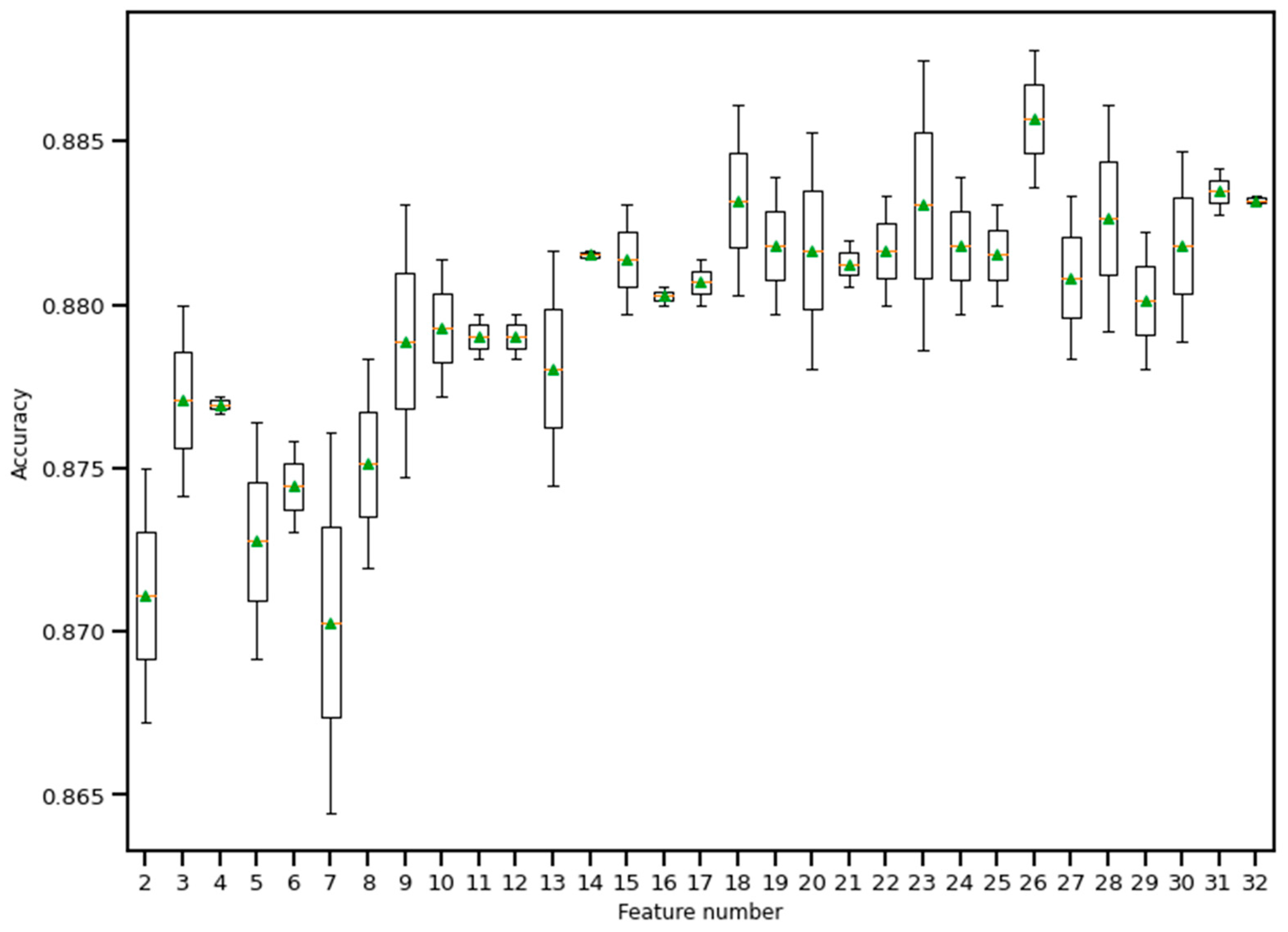

3.2. Feature Selection Process

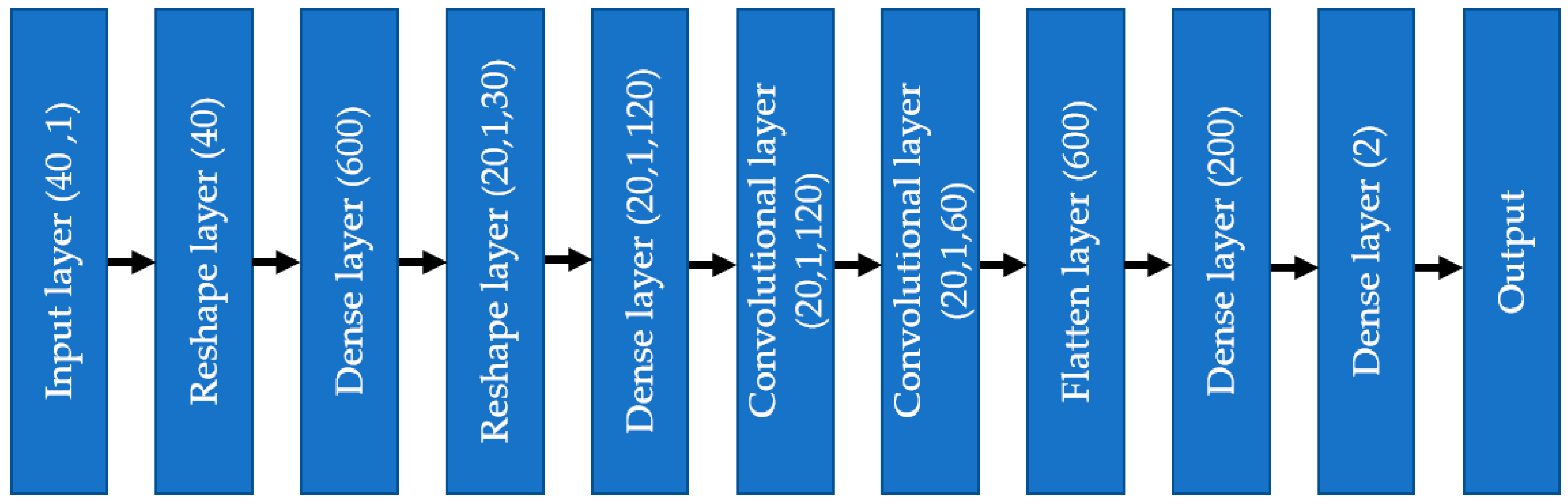

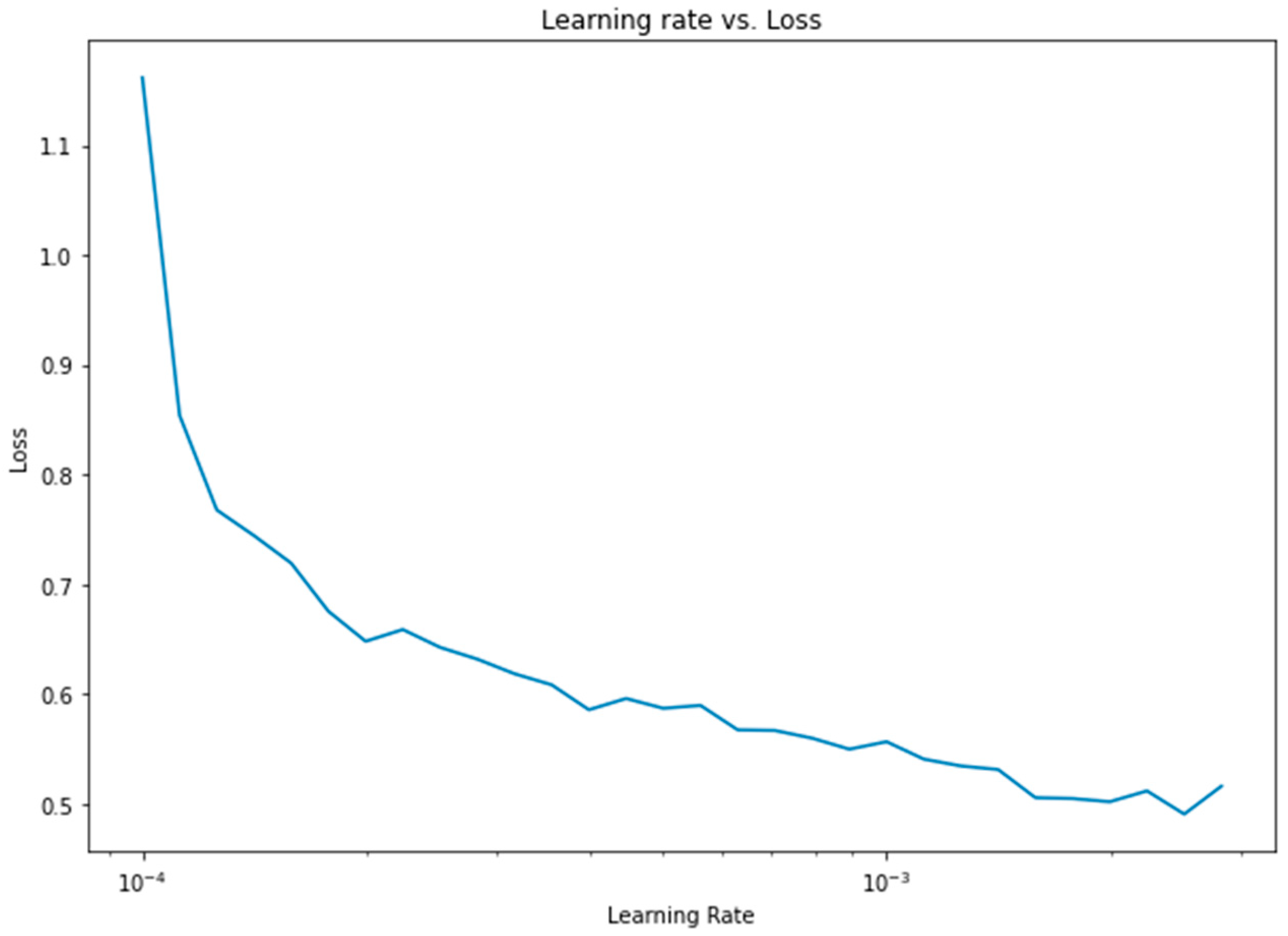

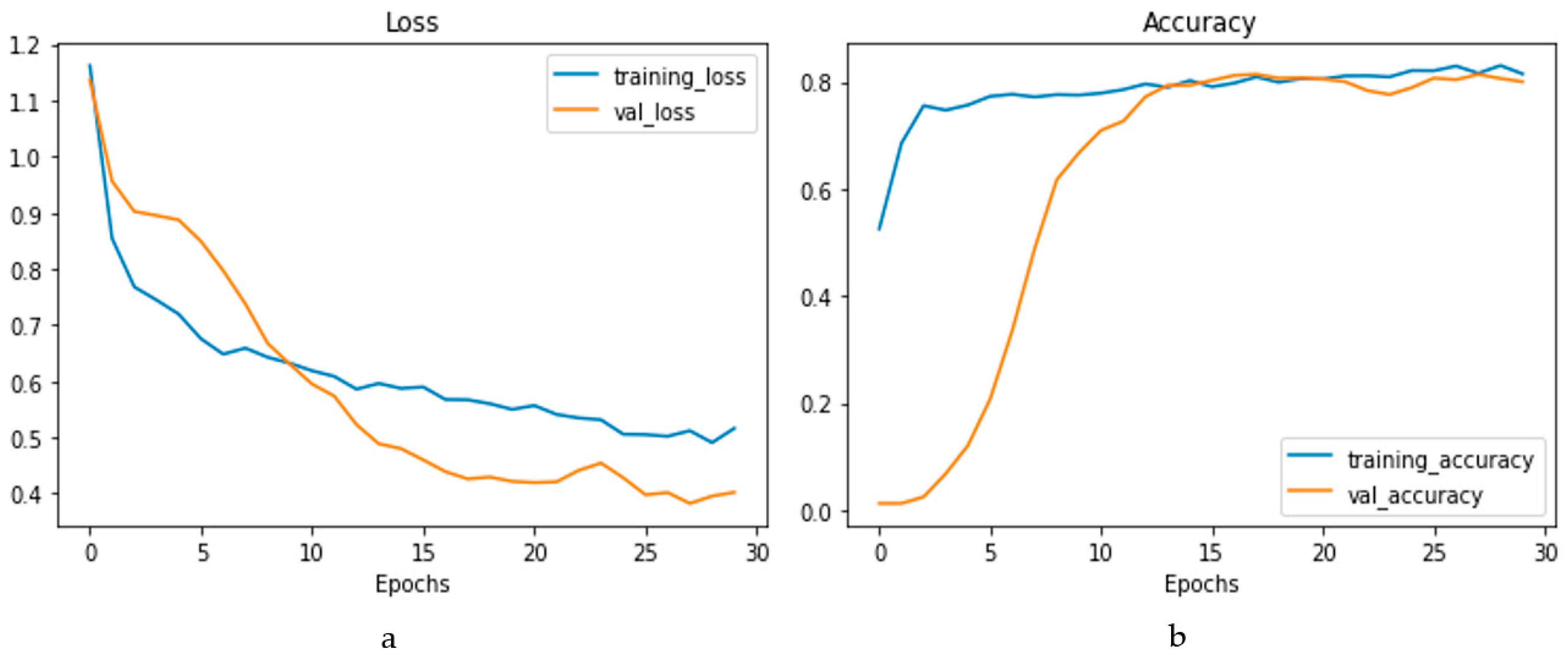

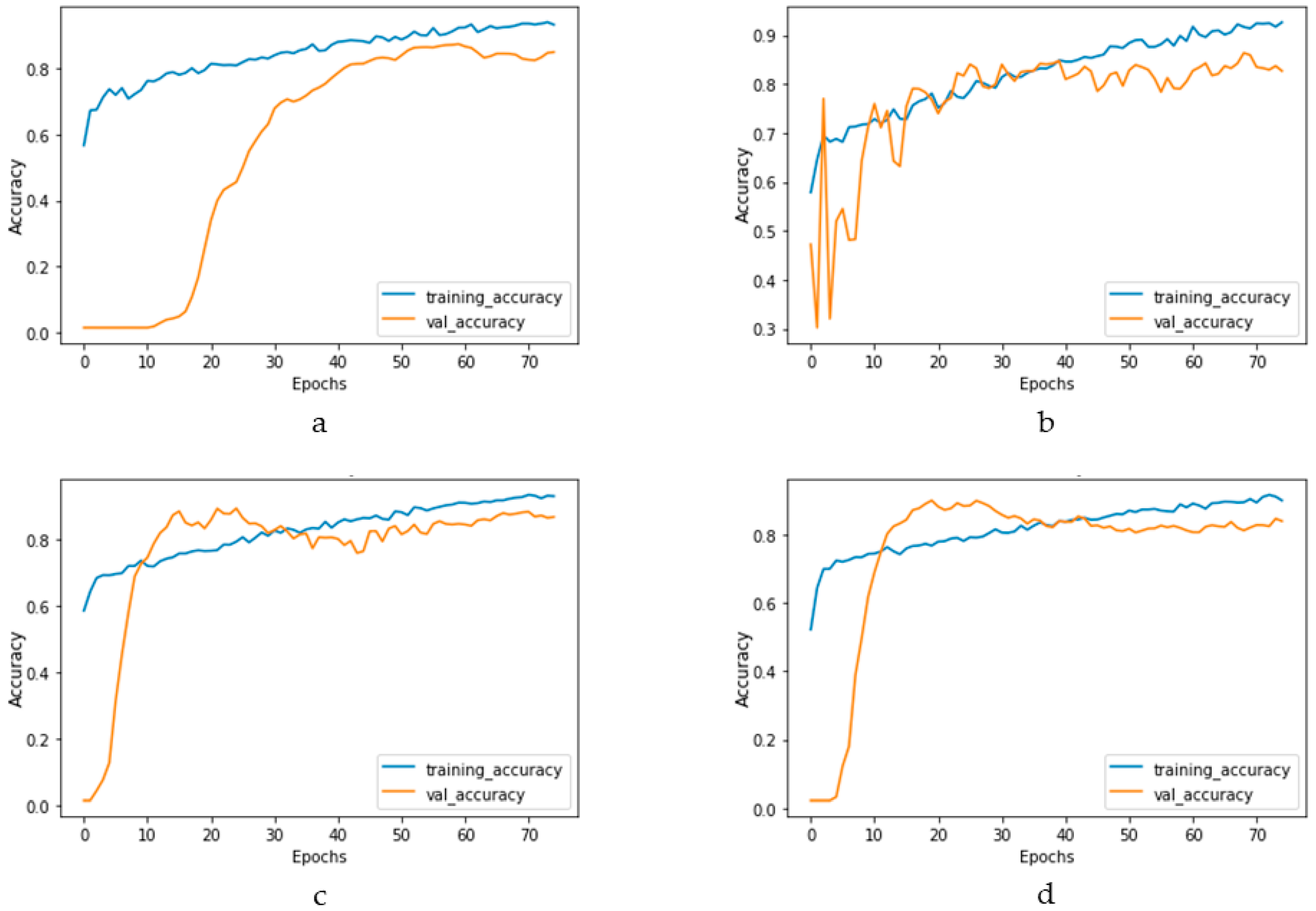

3.3. The 1D CNN Model

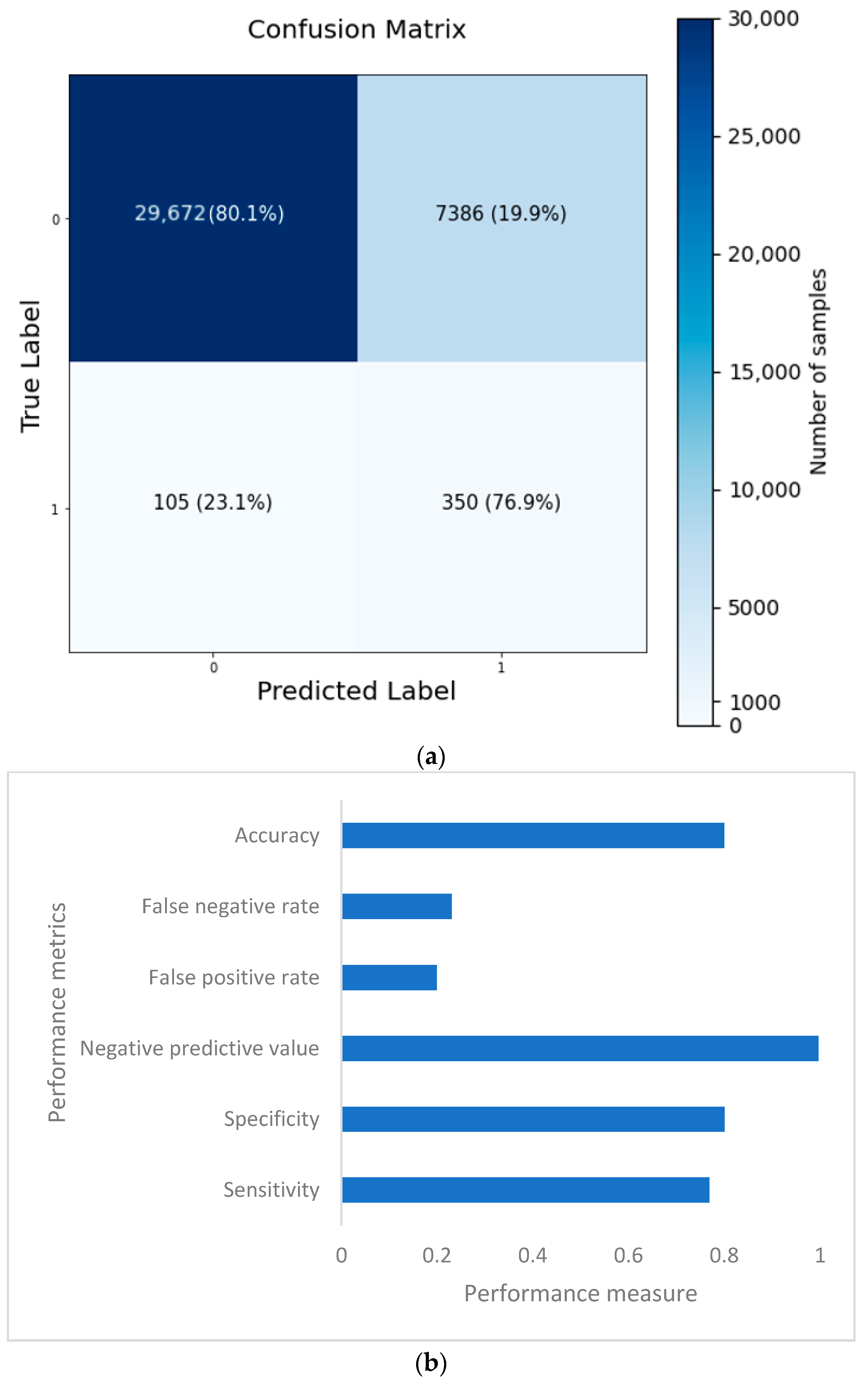

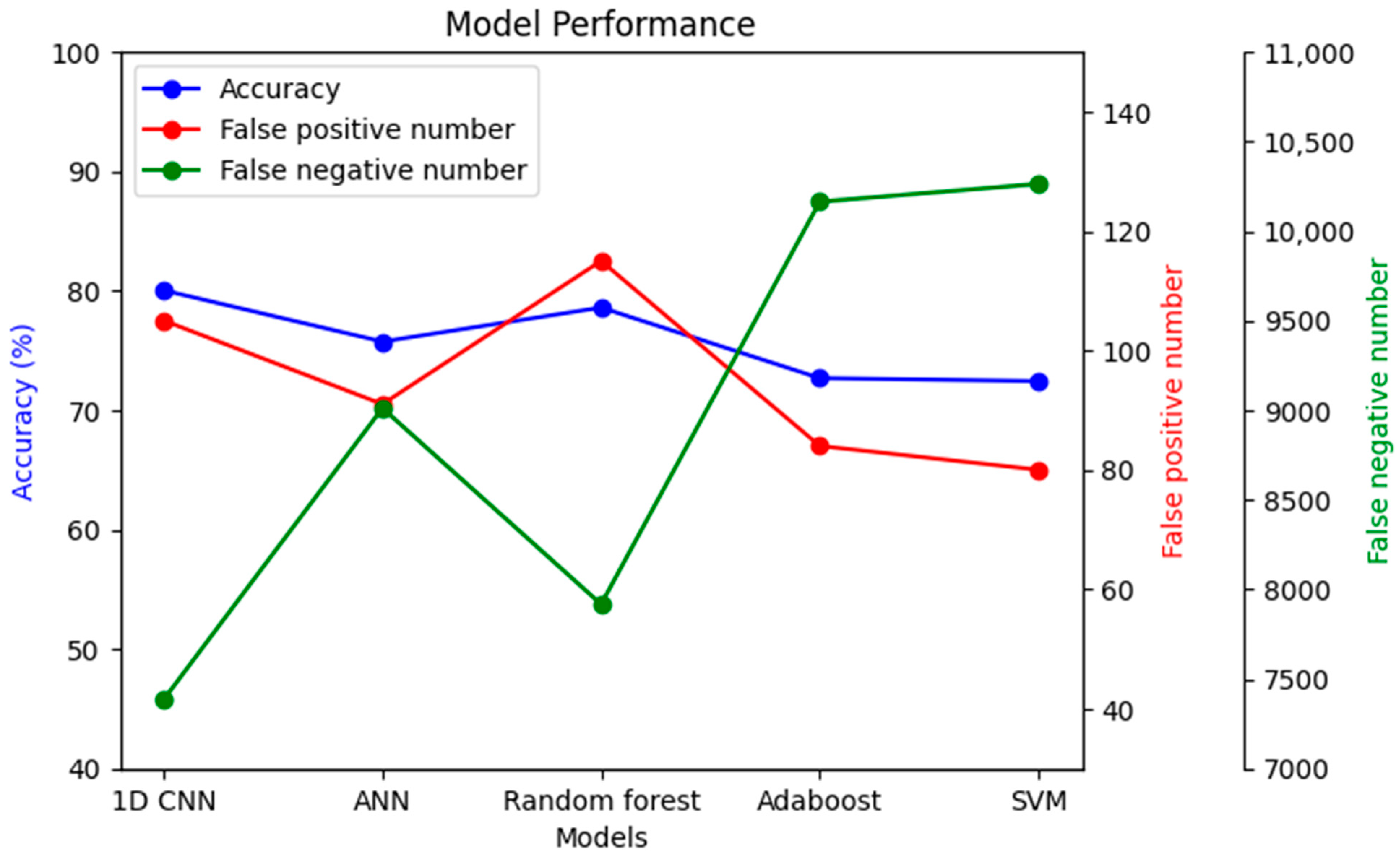

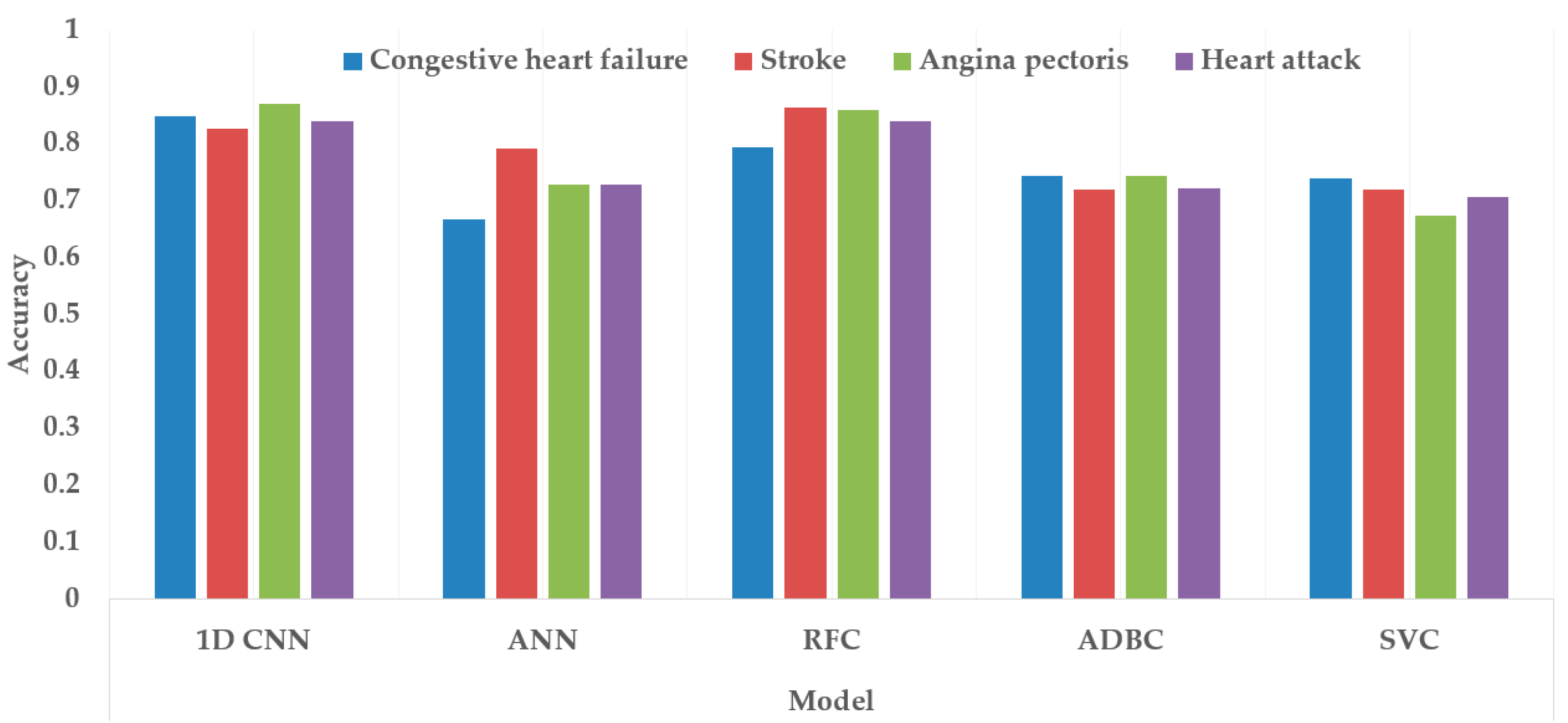

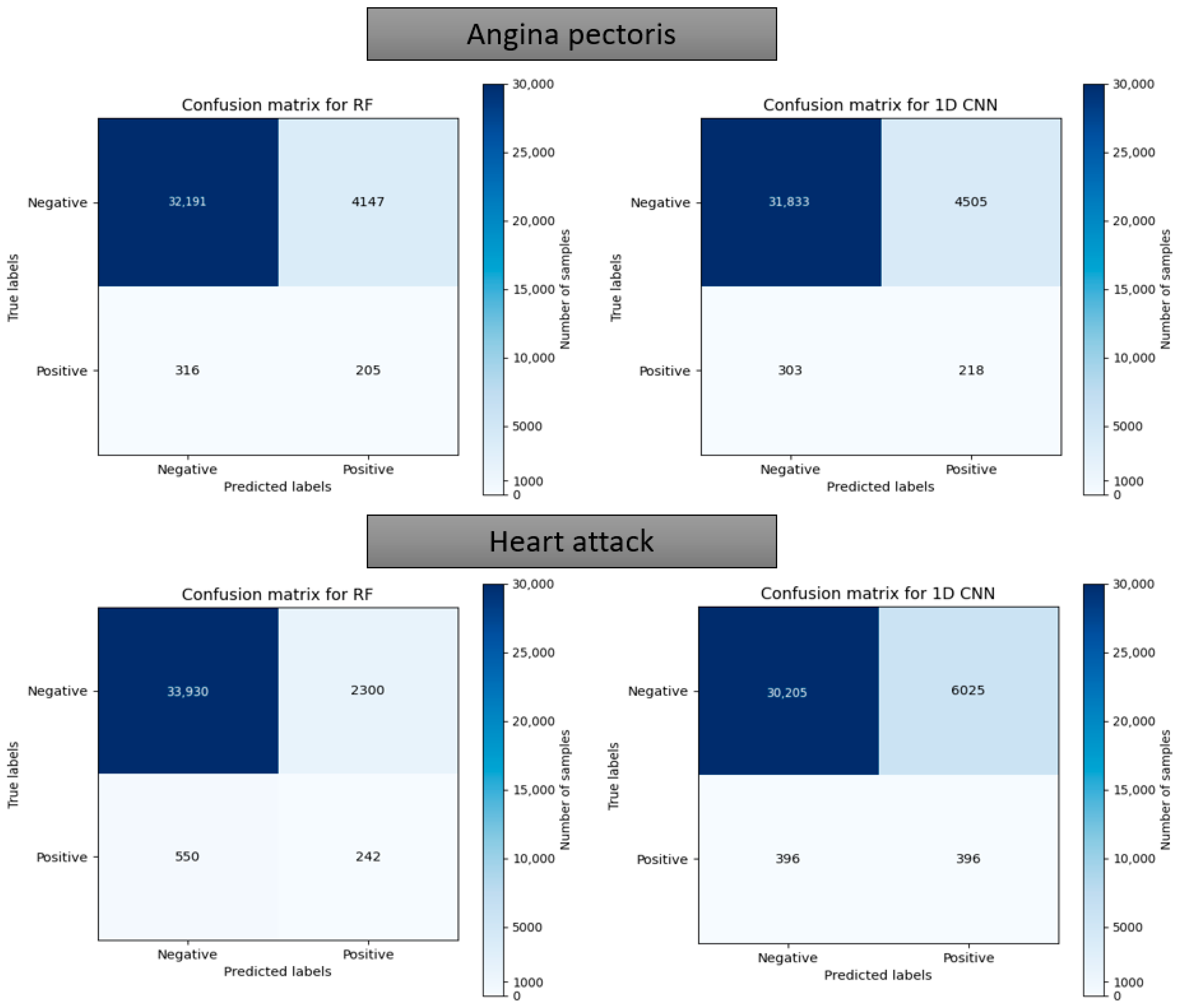

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bui, A.L.; Horwich, T.B.; Fonarow, G.C. Epidemiology and risk profile of heart failure. Nat. Rev. Cardiol. 2011, 8, 30–41. [Google Scholar] [CrossRef] [PubMed]

- Polat, K.; Güneş, S. Artificial immune recognition system with fuzzy resource allocation mechanism classifier, principal com-ponent analysis and FFT method based new hybrid automated identification system for classification of EEG signals. Expert Syst. Appl. 2008, 34, 2039–2048. [Google Scholar] [CrossRef]

- Durairaj, M.; Ramasamy, N. A comparison of the perceptive approaches for preprocessing the data set for predicting fertility success rate. Int. J. Control Theory Appl 2016, 9, 255–260. [Google Scholar]

- Goldberg, R. Coronary heart disease: Epidemiology and risk factors. In Prevention of Coronary Heart Disease, 1st ed.; Little, Brown and Company: Boston, MA, USA; Toronto, ON, Canada; London, UK, 2004; pp. 3–40. [Google Scholar]

- Tsao, C.W.; Aday, A.W.; Almarzooq, Z.I.; Alonso, A.; Beaton, A.Z.; Bittencourt, M.S.; Boehme, A.K.; Buxton, A.E.; Carson, A.P.; Commodore-Mensah, Y.; et al. Heart Disease and Stroke Statistics—2022 Update: A Report From the American Heart Association. Circulation 2022, 145, e153–e639. [Google Scholar] [CrossRef]

- Alizadehsani, R.; Habibi, J.; Sani, Z.A.; Mashayekhi, H.; Boghrati, R.; Ghandeharioun, A.; Bahadorian, B. Diagnosis of Coronary Artery Disease Using Data Mining Based on Lab Data and Echo Features. J. Med. Bioeng. 2012, 1, 26–29. [Google Scholar] [CrossRef]

- Arabasadi, Z.; Alizadehsani, R.; Roshanzamir, M.; Moosaei, H.; Yarifard, A.A. Computer aided decision making for heart disease detection using hybrid neural network-Genetic algorithm. Comput. Methods Programs Biomed. 2017, 141, 19–26. [Google Scholar] [CrossRef]

- Samuel, O.W.; Asogbon, G.M.; Sangaiah, A.K.; Fang, P.; Li, G. An integrated decision support system based on ANN and Fuzzy_AHP for heart failure risk prediction. Expert Syst. Appl. 2017, 68, 163–172. [Google Scholar] [CrossRef]

- Vanisree, K.V.; Singaraju, J. Decision Support System for Congenital Heart Disease Diagnosis based on Signs and Symptoms using Neural Networks. Int. J. Comput. Appl. 2011, 19, 6–12. [Google Scholar] [CrossRef]

- Patil, S.B.; Kumaraswamy, Y. Intelligent and effective heart attack prediction system using data mining and artificial neural network. Eur. J. Sci. Res. 2009, 31, 642–656. [Google Scholar]

- Hamilton-Craig, C.R.; Friedman, D.; Achenbach, S. Cardiac computed tomography—Evidence, limitations and clinical application. Heart Lung Circ. 2012, 21, 70–81. [Google Scholar] [CrossRef]

- Kitagawa, K.; Sakuma, H.; Nagata, M.; Okuda, S.; Hirano, M.; Tanimoto, A.; Matsusako, M.; Lima, J.A.C.; Kuribayashi, S.; Takeda, K. Diagnostic accuracy of stress myocardial perfusion MRI and late gadolinium-enhanced MRI for detecting flow-limiting coronary artery disease: A multicenter study. Eur. Radiol. 2008, 18, 2808–2816. [Google Scholar] [CrossRef]

- Cardinale, L.; Priola, A.M.; Moretti, F.; Volpicelli, G. Effectiveness of chest radiography, lung ultrasound and thoracic computed tomography in the diagnosis of congestive heart failure. World J. Radiol. 2014, 6, 230–237. [Google Scholar] [CrossRef]

- Mamun, K.; Rahman, M.M.; Sherif, A. Advancement in the Cuffless and Noninvasive Measurement of Blood Pressure: A Re-view of the Literature and Open Challenges. Bioengineering 2022, 10, 27. [Google Scholar] [CrossRef]

- Mamun, M.M.R.K.; Alouani, A. Using feature optimization and fuzzy logic to detect hypertensive heart diseases. In Proceedings of the 6th World Congress on Electrical Engineering and Computer Systems and Sciences (EECSS’20), Virtual Conference, 13–15 August 2020. [Google Scholar] [CrossRef]

- Mamunm Khan, M.M.R.; Alouani, A. Diagnosis of STEMI and Non-STEMI Heart Attack using Nature-inspired Swarm Intel-ligence and Deep Learning Techniques. J. Biomed. Eng. Biosci. 2020, 6, 1–8. [Google Scholar]

- Mamunm Khan, M.M.R.; Alouani, A.T. Myocardial Infarction Detection using Multi Biomedical Sensors. In Proceedings of the BICOB 2018, Las Vegas, NV, USA, 19–21 March 2018; pp. 117–122. [Google Scholar]

- Mamun, M.M.R.K.; Alouani, A. Arrhythmia Classification Using Hybrid Feature Selection Approach and Ensemble Learning Technique. In Proceedings of the 2021 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Toronto, ON, Canada, 12–17 September 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Sultana, Z.; Khan, A.R.; Jahan, N. Early Breast Cancer Detection Utilizing Artificial Neural Network. WSEAS Trans. Biol. Biomed. 2021, 18, 32–42. [Google Scholar] [CrossRef]

- Alshayeji, M.H.; Ellethy, H.; Abed, S.; Gupta, R. Computer-aided detection of breast cancer on the Wisconsin dataset: An artificial neural networks approach. Biomed. Signal Process. Control 2021, 71, 103141. [Google Scholar] [CrossRef]

- Bhattacherjee, A.; Roy, S.; Paul, S.; Roy, P.; Kausar, N.; Dey, N. Classification approach for breast cancer detection using back propagation neural network: A study. In Biomedical Image Analysis and Mining Techniques for Improved Health Outcomes; IGI Global: Hershey, PA, USA, 2015; pp. 1410–1421. [Google Scholar]

- Assegie, T.A. An optimized K-Nearest Neighbor based breast cancer detection. J. Robot. Control 2021, 2, 115–118. [Google Scholar] [CrossRef]

- Austin, P.C.; Tu, J.V.; Ho, J.E.; Levy, D.; Lee, D.S. Using methods from the data-mining and machine-learning literature for disease classification and prediction: A case study examining classification of heart failure subtypes. J. Clin. Epidemiol. 2013, 66, 398–407. [Google Scholar] [CrossRef]

- Zheng, B.; Yoon, S.W.; Lam, S.S. Breast cancer diagnosis based on feature extraction using a hybrid of K-means and support vector machine algorithms. Expert Syst. Appl. 2014, 41, 1476–1482. [Google Scholar] [CrossRef]

- Charleonnan, A.; Fufaung, T.; Niyomwong, T.; Chokchueypattanakit, W.; Suwannawach, S.; Ninchawee, N. Predictive ana-lytics for chronic kidney disease using machine learning techniques. In Proceedings of the 2016 Management and Innovation Technology International Conference (MITicon), Bang-San, Thailand, 12–14 October 2016; pp. MIT-80–MIT-83. [Google Scholar]

- Ashiquzzaman, A.; Tushar, A.K.; Islam, R.; Shon, D.; Im, K.; Park, J.-H.; Lim, D.-S.; Kim, J. Reduction of Overfitting in Diabetes Prediction Using Deep Learning Neural Network. In IT Convergence and Security 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 35–43. [Google Scholar] [CrossRef]

- Yahyaoui, A.; Jamil, A.; Rasheed, J.; Yesiltepe, M. A Decision Support System for Diabetes Prediction Using Machine Learning and Deep Learning Techniques. In Proceedings of the 2019 1st International Informatics and Software Engineering Conference (UBMYK), Ankara, Turkey, 6–7 November 2019; pp. 1–4. [Google Scholar] [CrossRef]

- Kandhasamy, J.P.; Balamurali, S. Performance Analysis of Classifier Models to Predict Diabetes Mellitus. Procedia Comput. Sci. 2015, 47, 45–51. [Google Scholar] [CrossRef]

- Tan, Y.; Shi, Y.; Tuba, M. Data Mining and Big Data: 5th International Conference, DMBD 2020, Belgrade, Serbia, 14–20 July 2020; Springer: Singapore, 2020; Volume 1234. [Google Scholar]

- Asri, H.; Mousannif, H.; Al Moatassime, H.; Noel, T. Using Machine Learning Algorithms for Breast Cancer Risk Prediction and Diagnosis. Procedia Comput. Sci. 2016, 83, 1064–1069. [Google Scholar] [CrossRef]

- Mamun Khan, M.M.R. Significance of Features from Biomedical Signals in Heart Health Monitoring. BioMed 2022, 2, 391–408. [Google Scholar] [CrossRef]

- Qteat, H.; Arab American University; Awad, M. Using Hybrid Model of Particle Swarm Optimization and Multi-Layer Perceptron Neural Networks for Classification of Diabetes. Int. J. Intell. Eng. Syst. 2021, 14, 11–22. [Google Scholar] [CrossRef]

- Chaki, J.; Ganesh, S.T.; Cidham, S.; Theertan, S.A. Machine learning and artificial intelligence based Diabetes Mellitus detection and self-management: A systematic review. J. King Saud Univ. Comput. Inf. Sci. 2020, 34, 3204–3225. [Google Scholar] [CrossRef]

- Sriram, T.; Rao, M.V.; Narayana, G.; Kaladhar, D.; Vital, T.P.R. Intelligent Parkinson disease prediction using machine learning algorithms. Int. J. Eng. Innov. Technol 2013, 3, 212–215. [Google Scholar]

- Fernández-Edreira, D.; Liñares-Blanco, J.; Fernandez-Lozano, C. Machine Learning analysis of the human infant gut micro-biome identifies influential species in type 1 diabetes. Expert Syst. Appl. 2021, 185, 115648. [Google Scholar] [CrossRef]

- Esmaeilzadeh, S.; Yang, Y.; Adeli, E. End-to-end parkinson disease diagnosis using brain mr-images by 3d-cnn. arXiv 2018, arXiv:1806.05233. [Google Scholar]

- Fitriyani, N.L.; Syafrudin, M.; Alfian, G.; Rhee, J. Development of disease prediction model based on ensemble learning ap-proach for diabetes and hypertension. IEEE Access 2019, 7, 144777–144789. [Google Scholar] [CrossRef]

- Beheshti, Z.; Shamsuddin, S.M.H.; Beheshti, E.; Yuhaniz, S.S. Enhancement of artificial neural network learning using cen-tripetal accelerated particle swarm optimization for medical diseases diagnosis. Soft Comput. 2013, 18, 2253–2270. [Google Scholar] [CrossRef]

- Hedeshi, N.G.; Abadeh, M.S. Coronary Artery Disease Detection Using a Fuzzy-Boosting PSO Approach. Comput. Intell. Neurosci. 2014, 2014, 783734. [Google Scholar] [CrossRef]

- Eshtay, M.; Faris, H.; Obeid, N. Improving Extreme Learning Machine by Competitive Swarm Optimization and its application for medical diagnosis problems. Expert Syst. Appl. 2018, 104, 134–152. [Google Scholar] [CrossRef]

- Karaolis, M.A.; Moutiris, J.A.; Hadjipanayi, D.; Pattichis, C.S. Assessment of the Risk Factors of Coronary Heart Events Based on Data Mining With Decision Trees. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 559–566. [Google Scholar] [CrossRef] [PubMed]

- Tay, D.; Poh, C.L.; Goh, C.; Kitney, R.I. A biological continuum based approach for efficient clinical classification. J. Biomed. Inform. 2014, 47, 28–38. [Google Scholar] [CrossRef] [PubMed]

- Alizadehsani, R.; Habibi, J.; Hosseini, M.J.; Mashayekhi, H.; Boghrati, R.; Ghandeharioun, A.; Bahadorian, B.; Sani, Z.A. A data mining approach for diagnosis of coronary artery disease. Comput. Methods Programs Biomed. 2013, 111, 52–61. [Google Scholar] [CrossRef] [PubMed]

- Bemando, C.; Miranda, E.; Aryuni, M. Machine-Learning-Based Prediction Models of Coronary Heart Disease Using Naïve Bayes and Random Forest Algorithms. In Proceedings of the 2021 International Conference on Software Engineering & Computer Systems and 4th International Conference on Computational Science and Information Management (ICSECS-ICOCSIM), Pekan, Malaysia, 24–26 August 2021; pp. 232–237. [Google Scholar] [CrossRef]

- Kumar, R.P.R.; Polepaka, S. Performance Comparison of Random Forest Classifier and Convolution Neural Network in Predicting Heart Diseases. In Proceedings of the Third International Conference on Computational Intelligence and Informatics, Hyderabad, India, 28–29 December 2018; Springer: Berlin/Heidelberg, Germany, 2020; pp. 683–691. [Google Scholar] [CrossRef]

- Singh, H.; Navaneeth, N.; Pillai, G. Multisurface Proximal SVM Based Decision Trees for Heart Disease Classification. In Proceedings of the TENCON 2019–2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 13–18. [Google Scholar] [CrossRef]

- Desai, S.D.; Giraddi, S.; Narayankar, P.; Pudakalakatti, N.R.; Sulegaon, S. Back-Propagation Neural Network Versus Logistic Regression in Heart Disease Classification. In Advanced Computing and Communication Technologies; Springer: Berlin/Heidelberg, Germany, 2018; pp. 133–144. [Google Scholar] [CrossRef]

- Patil, D.D.; Singh, R.P.; Thakare, V.M.; Gulve, A.K. Analysis of ECG Arrhythmia for Heart Disease Detection using SVM and Cuckoo Search Optimized Neural Network. Int. J. Eng. Technol. 2018, 7, 27–33. [Google Scholar] [CrossRef]

- Acharya, U.R.; Oh, S.L.; Hagiwara, Y.; Tan, J.H.; Adam, M.; Gertych, A.; San Tan, R. A deep convolutional neural network model to classify heartbeats. Comput. Biol. Med. 2017, 89, 389–396. [Google Scholar] [CrossRef]

- Yang, W.; Si, Y.; Wang, D.; Guo, B. Automatic recognition of arrhythmia based on principal component analysis network and linear support vector machine. Comput. Biol. Med. 2018, 101, 22–32. [Google Scholar] [CrossRef]

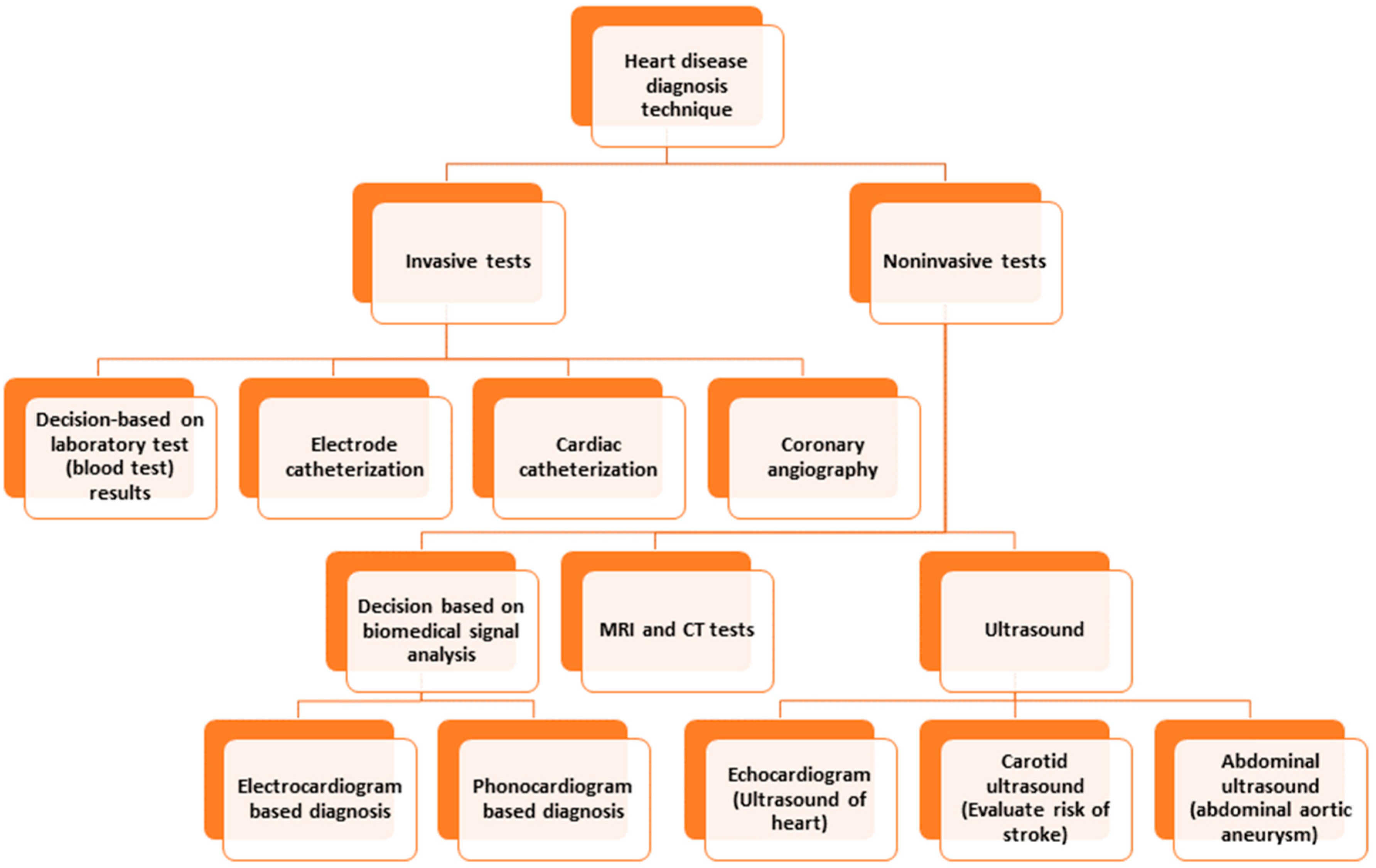

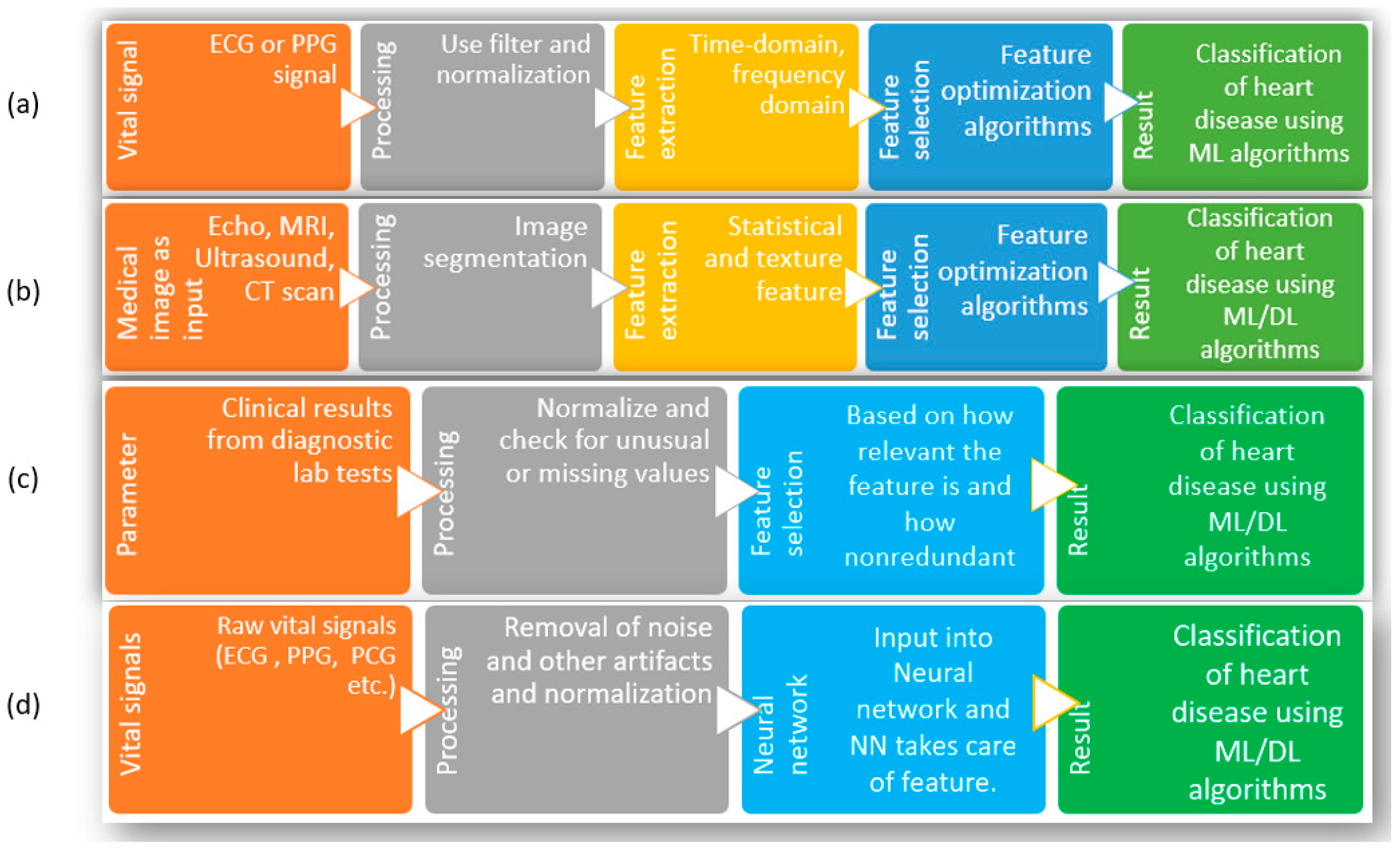

- Mamun, K.; Rahman, M.M.; Alouani, A. Automatic Detection of Heart Diseases Using Biomedical Signals: A Literature Review of Current Status and Limitations. In Advances in Information and Communication; Springer: Berlin/Heidelberg, Germany, 2022; pp. 420–440. [Google Scholar]

- Alizadehsani, R.; Hosseini, M.J.; Khosravi, A.; Khozeimeh, F.; Roshanzamir, M.; Sarrafzadegan, N.; Nahavandi, S. Non-invasive detection of coronary artery disease in high-risk patients based on the stenosis prediction of separate coronary arteries. Comput. Methods Programs Biomed. 2018, 162, 119–127. [Google Scholar] [CrossRef]

- Manogaran, G.; Varatharajan, R.; Priyan, M.K. Hybrid Recommendation System for Heart Disease Diagnosis based on Multiple Kernel Learning with Adaptive Neuro-Fuzzy Inference System. Multimed. Tools Appl. 2017, 77, 4379–4399. [Google Scholar] [CrossRef]

- Pirgazi, J.; Sorkhi, A.G.; Mobarkeh, M.I. An Accurate Heart Disease Prognosis Using Machine Intelligence and IoMT. Wirel. Commun. Mob. Comput. 2022, 2022, 9060340. [Google Scholar] [CrossRef]

- Paul, A.K.; Shill, P.C.; Rabin, R.I.; Murase, K. Adaptive weighted fuzzy rule-based system for the risk level assessment of heart disease. Appl. Intell. 2017, 48, 1739–1756. [Google Scholar] [CrossRef]

- Das, R.; Turkoglu, I.; Sengur, A. Effective diagnosis of heart disease through neural networks ensembles. Expert Syst. Appl. 2009, 36, 7675–7680. [Google Scholar] [CrossRef]

- Olaniyi, E.O.; Oyedotun, O.K.; Adnan, K. Heart Diseases Diagnosis Using Neural Networks Arbitration. Int. J. Intell. Syst. Appl. 2015, 7, 72. [Google Scholar] [CrossRef]

- Kahramanli, H.; Allahverdi, N. Design of a hybrid system for the diabetes and heart diseases. Expert Syst. Appl. 2008, 35, 82–89. [Google Scholar] [CrossRef]

- Gudadhe, M.; Wankhade, K.; Dongre, S. Decision support system for heart disease based on support vector machine and ar-tificial neural network. In Proceedings of the 2010 International Conference on Computer and Communication Technology (ICCCT), Allahabad, India, 17–19 September 2010; pp. 741–745. [Google Scholar]

- An, Y.; Huang, N.; Chen, X.; Wu, F.; Wang, J. High-Risk Prediction of Cardiovascular Diseases via Attention-Based Deep Neural Networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2019, 18, 1093–1105. [Google Scholar] [CrossRef]

- Swathy, M.; Saruladha, K. A comparative study of classification and prediction of Cardio-Vascular Diseases (CVD) using Machine Learning and Deep Learning techniques. ICT Express 2021, 8, 109–116. [Google Scholar] [CrossRef]

- Dezaki, F.T.; Liao, Z.; Luong, C.; Girgis, H.; Dhungel, N.; Abdi, A.H.; Behnami, D.; Gin, K.; Rohling, R.; Abolmaesumi, P.; et al. Cardiac Phase Detection in Echocardiograms With Densely Gated Recurrent Neural Networks and Global Extrema Loss. IEEE Trans. Med. Imaging 2018, 38, 1821–1832. [Google Scholar] [CrossRef]

- Ahmed, A.A.; Ali, W.; Abdullah, T.A.; Malebary, S.J. Classifying cardiac arrhythmia from ECG signal using 1D CNN deep learning model. Mathematics 2023, 11, 562. [Google Scholar] [CrossRef]

- Wiharto, W.; Kusnanto, H.; Herianto, H. Intelligence System for Diagnosis Level of Coronary Heart Disease with K-Star Algorithm. Health Inform. Res. 2016, 22, 30–38. [Google Scholar] [CrossRef] [PubMed]

- Liu, N.; Lin, Z.; Cao, J.; Koh, Z.; Zhang, T.; Huang, G.-B.; Ser, W.; Ong, M.E.H. An Intelligent Scoring System and Its Application to Cardiac Arrest Prediction. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 1324–1331. [Google Scholar] [CrossRef] [PubMed]

- Haq, A.U.; Li, J.P.; Memon, M.H.; Nazir, S.; Sun, R. A Hybrid Intelligent System Framework for the Prediction of Heart Disease Using Machine Learning Algorithms. Mob. Inf. Syst. 2018, 2018, 3860146. [Google Scholar] [CrossRef]

- Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2020, 151, 107398. [Google Scholar] [CrossRef]

- Detrano, R.; Janosi, A.; Steinbrunn, W.; Pfisterer, M.; Schmid, J.-J.; Sandhu, S.; Guppy, K.H.; Lee, S.; Froelicher, V. International application of a new probability algorithm for the diagnosis of coronary artery disease. Am. J. Cardiol. 1989, 64, 304–310. [Google Scholar] [CrossRef]

- Blake, C. UCI Repository of Machine Learning Databases. Available online: http://www.ics.uci.edu/~mlearn/MLRepository.html (accessed on 20 January 2023).

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank. PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220. [Google Scholar] [CrossRef]

- Johnson, A.E.W.; Pollard, T.J.; Shen, L.; Lehman, L.-W.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 160035. [Google Scholar] [CrossRef]

- Lee, J.; Scott, D.J.; Villarroel, M.; Clifford, G.D.; Saeed, M.; Mark, R.G. Open-access MIMIC-II database for intensive care re-search. In Proceedings of the 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; pp. 8315–8318. [Google Scholar]

- Centers for Disease Control and Prevention (CDC). National Health and Nutrition Examination Survey Data; US Department of Health and Human Services, Centers for Disease Control and Prevention: Hyattsville, MD, USA, 2020.

- Guyon, I.; Gunn, S.; Nikravesh, M.; Zadeh, L.A. Feature extraction: Foundations and applications. In Feature Extraction: Foundations and Applications; Springer: Berlin/Heidelberg, Germany, 2008; Volume 207. [Google Scholar]

- Bolón-Canedo, V.; Sánchez-Maroño, N.; Alonso-Betanzos, A. A review of feature selection methods on synthetic data. Knowl. Inf. Syst. 2013, 34, 483–519. [Google Scholar] [CrossRef]

- Kononenko, I. Estimating Attributes: Analysis and Extensions of RELIEF. In European Conference on Machine Learning; Springer: Berlin/Heidelberg, Germany, 1994; pp. 171–182. [Google Scholar]

- Kira, K.; Rendell, L.A. A Practical Approach to Feature Selection. In Machine Learning Proceedings 1992; Elsevier: Amsterdam, The Netherlands, 1992; pp. 249–256. [Google Scholar] [CrossRef]

- Jeon, H.; Oh, S. Hybrid-Recursive Feature Elimination for Efficient Feature Selection. Appl. Sci. 2020, 10, 3211. [Google Scholar] [CrossRef]

- Schwarz, D.F.; König, I.R.; Ziegler, A. On safari to Random Jungle: A fast implementation of Random Forests for high-dimensional data. Bioinformatics 2010, 26, 1752–1758. [Google Scholar] [CrossRef]

- Kubus, M. The Problem of Redundant Variables in Random Forests. Acta Univ. Lodz. Folia Oeconomica 2019, 6, 7–16. [Google Scholar] [CrossRef]

- Signorino, C.S.; Kirchner, A. Using LASSO to Model Interactions and Nonlinearities in Survey Data. Surv. Pr. 2018, 11, 1–10. [Google Scholar] [CrossRef]

- Barrera-Gómez, J.; Agier, L.; Portengen, L.; Chadeau-Hyam, M.; Giorgis-Allemand, L.; Siroux, V.; Robinson, O.; Vlaanderen, J.; González, J.R.; Nieuwenhuijsen, M.; et al. A systematic comparison of statistical methods to detect interactions in exposome-health associations. Environ. Health 2017, 16, 1–13. [Google Scholar] [CrossRef]

- Cortez, P.; Pereira, P.J.; Mendes, R. Multi-step time series prediction intervals using neuroevolution. Neural Comput. Appl. 2019, 32, 8939–8953. [Google Scholar] [CrossRef]

- Sarkar, S.; Zhu, X.; Melnykov, V.; Ingrassia, S. On parsimonious models for modeling matrix data. Comput. Stat. Data Anal. 2020, 142, 247–257. [Google Scholar] [CrossRef]

- YWah, B.; Ibrahim, N.; Hamid, H.A.; Abdul-Rahman, S.; Fong, S. Feature Selection Methods: Case of Filter and Wrapper Ap-proaches for Maximising Classification Accuracy. Pertanika J. Sci. Technol. 2018, 26, 329–340. [Google Scholar]

- Aphinyanaphongs, Y.; Fu, L.D.; Li, Z.; Peskin, E.R.; Efstathiadis, E.; Aliferis, C.F.; Statnikov, A. A comprehensive empirical comparison of modern supervised classification and feature selection methods for text categorization. J. Assoc. Inf. Sci. Technol. 2014, 65, 1964–1987. [Google Scholar] [CrossRef]

- Forman, G. An extensive empirical study of feature selection metrics for text classification. J. Mach. Learn. Res. 2003, 3, 1289–1305. [Google Scholar]

- Pudjihartono, N.; Fadason, T.; Kempa-Liehr, A.W.; O’Sullivan, J.M. A review of feature selection methods for machine learn-ing-based disease risk prediction. Front. Bioinform. 2022, 2, 927312. [Google Scholar] [CrossRef]

- Hoque, N.; Singh, M.; Bhattacharyya, D.K. EFS-MI: An ensemble feature selection method for classification. Complex Intell. Syst. 2017, 4, 105–118. [Google Scholar] [CrossRef]

- Wang, J.; Xu, J.; Zhao, C.; Peng, Y.; Wang, H. An ensemble feature selection method for high-dimensional data based on sort aggregation. Syst. Sci. Control Eng. 2019, 7, 32–39. [Google Scholar] [CrossRef]

- Tsai, C.; Sung, Y. Ensemble feature selection in high dimension, low sample size datasets: Parallel and serial combination ap-proaches. Knowl.-Based Syst. 2020, 203, 106097. [Google Scholar] [CrossRef]

- Mao, Y.; Chen, Y.; Hackmann, G.; Chen, M.; Lu, C.; Kollef, M.; Bailey, T.C. Medical Data Mining for Early Deterioration Warning in General Hospital Wards. In Proceedings of the 2011 IEEE 11th International Conference on Data Mining Workshops, Vancouver, BC, Canada, 11 December 2011; pp. 1042–1049. [Google Scholar] [CrossRef]

- Eren, L.; Ince, T.; Kiranyaz, S. A Generic Intelligent Bearing Fault Diagnosis System Using Compact Adaptive 1D CNN Classifier. J. Signal Process. Syst. 2018, 91, 179–189. [Google Scholar] [CrossRef]

- Abdeljaber, O.; Sassi, S.; Avci, O.; Kiranyaz, S.; Ibrahim, A.A.; Gabbouj, M. Fault Detection and Severity Identification of Ball Bearings by Online Condition Monitoring. IEEE Trans. Ind. Electron. 2018, 66, 8136–8147. [Google Scholar] [CrossRef]

- Kiranyaz, S.; Gastli, A.; Ben-Brahim, L.; Al-Emadi, N.; Gabbouj, M. Real-Time Fault Detection and Identification for MMC Using 1-D Convolutional Neural Networks. IEEE Trans. Ind. Electron. 2018, 66, 8760–8771. [Google Scholar] [CrossRef]

- Ince, T.; Kiranyaz, S.; Eren, L.; Askar, M.; Gabbouj, M. Real-Time Motor Fault Detection by 1-D Convolutional Neural Networks. IEEE Trans. Ind. Electron. 2016, 63, 7067–7075. [Google Scholar] [CrossRef]

- Abdeljaber, O.; Avci, O.; Kiranyaz, M.S.; Boashash, B.; Sodano, H.; Inman, D.J. 1-D CNNs for structural damage detection: Verification on a structural health monitoring benchmark data. Neurocomputing 2018, 275, 1308–1317. [Google Scholar] [CrossRef]

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Boashash, B.; Sodano, H.; Inman, D.J. Efficiency validation of one dimensional convo-lutional neural networks for structural damage detection using a SHM benchmark data. In Proceedings of the 25th International Congress on Sound and Vibration 2018, Hiroshima, Japan, 8–12 July 2018; pp. 4600–4607. [Google Scholar]

- Abdeljaber, O.; Avci, O.; Kiranyaz, S.; Gabbouj, M.; Inman, D.J. Real-time vibration-based structural damage detection using one-dimensional convolutional neural networks. J. Sound Vib. 2017, 388, 154–170. [Google Scholar] [CrossRef]

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Inman, D. Structural Damage Detection in Real Time: Implementation of 1D Convolutional Neural Networks for SHM Applications. In Structural Health Monitoring & Damage Detection; Springer: Berlin/Heidelberg, Germany, 2017; pp. 49–54. [Google Scholar] [CrossRef]

- Eren, L. Bearing Fault Detection by One-Dimensional Convolutional Neural Networks. Math. Probl. Eng. 2017, 2017, 8617315. [Google Scholar] [CrossRef]

- Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Hussein, M.; Inman, D.J. Wireless and real-time structural damage detection: A novel decentralized method for wireless sensor networks. J. Sound Vib. 2018, 424, 158–172. [Google Scholar] [CrossRef]

- Kiranyaz, S.; Ince, T.; Gabbouj, M. Personalized Monitoring and Advance Warning System for Cardiac Arrhythmias. Sci. Rep. 2017, 7, 9270. [Google Scholar] [CrossRef]

- Kiranyaz, S.; Ince, T.; Gabbouj, M. Real-Time Patient-Specific ECG Classification by 1-D Convolutional Neural Networks. IEEE Trans. Biomed. Eng. 2015, 63, 664–675. [Google Scholar] [CrossRef]

- Kiranyaz, S.; Ince, T.; Hamila, R.; Gabbouj, M. Convolutional Neural Networks for patient-specific ECG classification. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2608–2611. [Google Scholar] [CrossRef]

- Ahsan, M.; Siddique, Z. Machine learning-based heart disease diagnosis: A systematic literature review. Artif. Intell. Med. 2022, 128, 102289. [Google Scholar] [CrossRef] [PubMed]

- Zhu, H.; Song, S.; Xu, L.; Song, A.; Yang, B. Segmentation of Coronary Arteries Images Using Spatio-temporal Feature Fusion Network with Combo Loss. Cardiovasc. Eng. Technol. 2021, 13, 407–418. [Google Scholar] [CrossRef]

- Yang, P.; Wang, D.; Zhao, W.-B.; Fu, L.-H.; Du, J.-L.; Su, H. Ensemble of kernel extreme learning machine based random forest classifiers for automatic heartbeat classification. Biomed. Signal Process. Control 2020, 63, 102138. [Google Scholar] [CrossRef]

- Lu, Y.; Jiang, M.; Wei, L.; Zhang, J.; Wang, Z.; Wei, B.; Xia, L. Automated arrhythmia classification using depthwise separable convolutional neural network with focal loss. Biomed. Signal Process. Control 2021, 69, 102843. [Google Scholar] [CrossRef]

- Mani, I.; Zhang, I. kNN approach to unbalanced data distributions: A case study involving information extraction. In Proceedings of the International Conference on Machine Learning (ICML 2003), Workshop on Learning from Imbalanced Data Sets, Washington, DC, USA, 21 August 2003; pp. 1–7. [Google Scholar]

- Hart, P. The condensed nearest neighbor rule (Corresp.). IEEE Trans. Inf. Theory 1968, 14, 515–516. [Google Scholar] [CrossRef]

- Radovic, M.; Ghalwash, M.; Filipovic, N.; Obradovic, Z. Minimum redundancy maximum relevance feature selection approach for temporal gene expression data. BMC Bioinform. 2017, 18, 9. [Google Scholar] [CrossRef]

- Zhao, Z.; Anand, R.; Wang, M. Maximum Relevance and Minimum Redundancy Feature Selection Methods for a Marketing Machine Learning Platform. In Proceedings of the 2019 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Washington, DC, USA, 5–8 October 2019; pp. 442–452. [Google Scholar] [CrossRef]

- Mamun, M.M.R.K. Cuff-less blood pressure measurement based on hybrid feature selection algorithm and multi-penalty regularized regression technique. Biomed. Phys. Eng. Express. 2021, 7, 065030. [Google Scholar] [CrossRef]

| Variable Name | Description | Target | Code Value | Code Value Description |
|---|---|---|---|---|
| SEQN | Respondent sequence number | All age groups | NA | NA |
| PEASCCT1 | Blood pressure comment | All age groups | 1, 2, 3 | Safety exclusion, SP refusal, time constraint (Age was divided into three groups named 1, 2, and 3) |
| BPXCHR | 60 s HR (30 s HR × 2) | 0–7 years | 60–180 beats per minute | Range of heart rate values in beats per minute |
| BPAARM | Arm selected | 8 years and above | 1, 2, 8 | Right (1), left (2), could not obtain (8) |
| BPACSZ | Coded cuff size | 8 years and above | 1, 2, 3, 4, 5 | Infant, Child, Adult, Large, Thigh (each number in code value represents one age group) |
| BPXPLS | 60 s pulse (30 s pulse × 2) | 8 years and above | 34–136 | Range of number of pulses in 60 s |
| BPXPULS | Pulse regular or irregular? | All age groups | 1, 2 | Regular (1), irregular (2) |
| BPXPTY | Pulse type | 8 years and above | 1, 2, 8 | Radial (1), brachial (2), could not obtain (8) |
| BPXML1 | MIL: maximum inflation level (mmHg) | 8 years and above | 100–260 | Range of values of blood pressure in mmHg |
| BPXSY1 | Systolic: blood pressure (1st reading) mmHg | 8 years and above | 72–228 | Range of values of blood pressure in mmHg |
| BPXDI1 | Diastolic: blood pressure (1st reading) mmHg | 8 years and above | 0–136 | Range of values of blood pressure in mmHg |
| BPAEN1 | Enhancement used, 1st reading | 8 years and above | 1, 2, 8 | Yes (1), no (2), could not obtain (8) |
| BPXSY2 | Systolic: blood pressure (2nd reading) mmHg | 8 years and above | 72–236 | Range of values of blood pressure in mmHg |
| BPXDI2 | Diastolic: blood pressure (2nd reading) mmHg | 8 years and above | 0–136 | Range of values of blood pressure in mmHg |
| BPAEN2 | Enhancement used, 2nd reading | 8 years and above | 1, 2, 8 | Yes (1), no (2), could not obtain (8) |
| BPXSY3 | Systolic: blood pressure (3rd reading) mmHg | 8 years and above | 72–238 | Range of values of blood pressure in mmHg |
| BPXDI3 | Diastolic: blood pressure (3rd reading) mmHg | 8 years and above | 0–134 | Range of values of blood pressure in mmHg |
| BPAEN3 | Enhancement used, 3rd reading | 8 years and above | 1, 2, 8 | Yes (1), no (2), could not obtain (8) |
| BPXSY4 | Systolic: blood pressure (4th reading) mmHg | 8 years and above | 72–234 | Range of values of blood pressure in mmHg |
| BPXDI4 | Diastolic: blood pressure (4th reading) mmHg | 8 years and above | 0–118 | Range of values of blood pressure in mmHg |
| BPAEN4 | Enhancement used, 4th reading | 8 years and above | 1, 2, 8 | Yes (1), no (2), could not obtain (8) |

| Parameter | Age (Years) | Systolic (mmHg) | Diastolic (mmHg) | Weight (kg) | Height (cm) | Cholesterol (mg/dL) | Creatinine (mg/dL) | Glucose (mg/dL) | Protein (mg/dL) |
|---|---|---|---|---|---|---|---|---|---|
| Mean | 48.98 | 124.18 | 71.00 | 81.26 | 167.34 | 5.06 | 78.36 | 5.60 | 72.00 |
| STD | 17.83 | 19.15 | 11.72 | 20.84 | 10.11 | 1.08 | 35.34 | 2.04 | 4.89 |
| Min | 20.00 | 66.00 | 40.00 | 32.30 | 129.70 | 0.16 | 17.70 | 1.05 | 47.00 |
| 25th percentile | 34.00 | 111.00 | 64.00 | 66.60 | 160.00 | 4.29 | 61.88 | 4.72 | 69.00 |
| 50th percentile | 48.00 | 122.00 | 71.00 | 78.40 | 167.00 | 4.97 | 73.37 | 5.11 | 72.00 |
| 75th percentile | 63.00 | 134.00 | 78.00 | 92.40 | 174.60 | 5.71 | 88.40 | 5.66 | 75.00 |
| Max | 85.00 | 270.00 | 132.00 | 223.00 | 204.50 | 14.61 | 946.76 | 34.75 | 113.00 |
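The mean and STD rows of the table above are the quantities needed to put features of very different ranges on a common scale. The sketch below shows z-score standardization for three of the columns. It is an illustration only: the source does not state which scaling, if any, was applied before training, and the names `STATS` and `z_score` are hypothetical.

```python
# Mean and standard deviation copied from the summary table above.
# Assumption: z-score scaling is used here purely for illustration.
STATS = {
    "age": (48.98, 17.83),        # years
    "systolic": (124.18, 19.15),  # mmHg
    "diastolic": (71.00, 11.72),  # mmHg
}


def z_score(feature: str, value: float) -> float:
    """Return how many standard deviations `value` lies from the column mean."""
    mean, std = STATS[feature]
    return (value - mean) / std


# Standardize the 75th-percentile values listed in the table.
upper_quartile = {"age": 63.0, "systolic": 134.0, "diastolic": 78.0}
scaled = {name: round(z_score(name, v), 2) for name, v in upper_quartile.items()}
```

After scaling, the three upper-quartile values land within about one standard deviation of zero, whereas the raw values differ by an order of magnitude between columns.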

| No | Feature | Feature Type | Description |
|---|---|---|---|
| 1 | Age | Numerical | Age of participant (years) |
| 2 | Systolic | Numerical | Systolic blood pressure (mmHg) |
| 3 | Diastolic | Numerical | Diastolic blood pressure (mmHg) |
| 4 | Weight | Numerical | Weight of participant (kg) |
| 5 | White-Blood-Cells | Numerical | White blood cell count (1000 cells/μL) |
| 6 | Lymphocyte | Numerical | Lymphocyte percent (%) |
| 7 | Monocyte | Numerical | Monocyte percent (%) |
| 8 | Red-Blood-Cells | Numerical | Red blood cell count (million cells/μL) |
| 9 | Platelet-count | Numerical | Platelet count (1000 cells/μL) |
| 10 | Red-Cell-Distribution-Width | Numerical | Red cell distribution width (%) |
| 11 | Albumin | Numerical | Albumin, urine (mg/L) |
| 12 | ALP | Numerical | Alkaline phosphatase (IU/L) |
| 13 | ALT | Numerical | Alanine aminotransferase (IU/L) |
| 14 | Cholesterol | Numerical | Cholesterol (mg/dL) |
| 15 | Creatinine | Numerical | Creatinine (mg/dL) |
| 16 | Glucose | Numerical | Glucose, serum (mg/dL) |
| 17 | GGT | Numerical | Gamma-glutamyl transferase (U/L) |
| 18 | Iron | Numerical | Iron, refrigerated serum (μg/dL) |
| 19 | LDH | Numerical | Lactate dehydrogenase (IU/L) |
| 20 | Triglycerides | Numerical | Triglycerides, refrigerated (mg/dL) |
| 21 | Uric.Acid | Numerical | Uric acid (mg/dL) |
| 22 | Total-Cholesterol | Numerical | Total cholesterol (mg/dL) |
| 23 | HDL | Numerical | Direct HDL-cholesterol (mg/dL) |
| 24 | Glycohemoglobin | Numerical | Glycohemoglobin (%) |
| 25 | Gender | Categorical | Gender of the participant |
| 26 | Diabetes | Categorical | Diagnosed with diabetes |
| 27 | Blood rel Diabetes | Categorical | Does a blood relative have diabetes |
| 28 | Blood rel stroke | Categorical | Has a blood relative had a stroke |
| 29 | Vigorous work | Categorical | Vigorous work activity |
| 30 | Moderate work | Categorical | Moderate work activity |
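To make concrete how a one-dimensional convolution can operate on a record built from the 30 features listed above, the following NumPy sketch runs one convolution, ReLU, and max-pooling stage over a single 30-element vector. It is a minimal illustration, not the architecture used in the paper: the filter count (8), kernel size (3), pool size (2), the random input, and the helper names are all assumptions.

```python
import numpy as np

N_FEATURES = 30  # number of rows in the feature table above


def conv1d_valid(x, kernels, bias):
    """Slide each kernel over x without padding.

    x: (length,), kernels: (n_filters, k), bias: (n_filters,)
    returns: (n_filters, length - k + 1)
    """
    windows = np.lib.stride_tricks.sliding_window_view(x, kernels.shape[1])
    return kernels @ windows.T + bias[:, None]


def relu(a):
    return np.maximum(a, 0.0)


def max_pool1d(a, size):
    """Non-overlapping max pooling along the last axis."""
    n = a.shape[1] // size
    return a[:, : n * size].reshape(a.shape[0], n, size).max(axis=2)


rng = np.random.default_rng(0)
x = rng.normal(size=N_FEATURES)   # stand-in for one standardized patient record
kernels = rng.normal(size=(8, 3)) # hypothetical: 8 filters of width 3
bias = np.zeros(8)

conv_out = conv1d_valid(x, kernels, bias)      # shape (8, 28)
feature_maps = max_pool1d(relu(conv_out), 2)   # shape (8, 14)
```

Because tabular features have no natural ordering, each kernel here only mixes features that happen to be adjacent in the column order; a trained model would learn the kernel weights rather than draw them at random, and would typically flatten `feature_maps` into dense layers for the final classification.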

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khan Mamun, M.M.R.; Elfouly, T. Detection of Cardiovascular Disease from Clinical Parameters Using a One-Dimensional Convolutional Neural Network. Bioengineering 2023, 10, 796. https://doi.org/10.3390/bioengineering10070796
