A Novel Asynchronous Brain Signals-Based Driver–Vehicle Interface for Brain-Controlled Vehicles

Abstract

1. Introduction

- (1) This work is the first to use P300 potentials to develop a driver–vehicle interface (DVI) that enables users to control a vehicle continuously and asynchronously.

- (2) We proposed a control command decoding algorithm, including an asynchronous function, for the P300-based DVI.

- (3) We tested and validated the feasibility of using the P300-based DVI to control a brain-controlled vehicle in a driver-in-the-loop online experiment.
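The asynchronous function named in contribution (2) is not detailed in this section. Purely as an illustration, the sketch below shows one common, generic way asynchronous P300 decoders separate control from idle states: average each command's classifier score over the stimulation rounds collected so far and issue a command only if the best average clears a threshold. This is not the authors' algorithm. The command names, the `decode_async` function, the score source and the `threshold` value are all hypothetical. The detection windows reported later (1.08 s, 2.16 s, 3.24 s) are multiples of 1.08 s; reading them as one, two and three accumulated rounds is our assumption.

```python
from typing import Optional, Sequence

# Hypothetical command set, for illustration only (not the DVI's actual commands).
COMMANDS = ("turn_left", "turn_right", "speed_up", "slow_down")


def decode_async(
    round_scores: Sequence[Sequence[float]], threshold: float
) -> Optional[str]:
    """Pick a command, or return None for the idle (non-control) state.

    round_scores[r][k] is a classifier score (for example an SVM decision
    value) for command k in stimulation round r; higher means more P300-like.
    """
    if not round_scores:
        return None  # nothing accumulated yet: stay idle
    for row in round_scores:
        if len(row) != len(COMMANDS):
            raise ValueError("each round needs one score per command")
    n_rounds = len(round_scores)
    # Average each command's score across the accumulated rounds.
    avg = [sum(row[k] for row in round_scores) / n_rounds
           for k in range(len(COMMANDS))]
    best = max(range(len(COMMANDS)), key=avg.__getitem__)
    # Asynchronous step: issue a command only when the best averaged score
    # clears the threshold; otherwise treat the window as idle.
    return COMMANDS[best] if avg[best] >= threshold else None


# Two rounds in which the second command stands out.
print(decode_async([[0.1, 0.9, 0.0, -0.2], [0.0, 0.7, 0.1, -0.1]], 0.5))
# One round with no clear winner: treated as idle.
print(decode_async([[0.1, 0.2, 0.0, -0.2]], 0.5))
```

Raising the threshold trades fewer false commands during idle periods for more missed commands in the control state; accumulating more rounds generally sharpens the averaged scores at the cost of a longer detection time.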

2. Materials and Methods

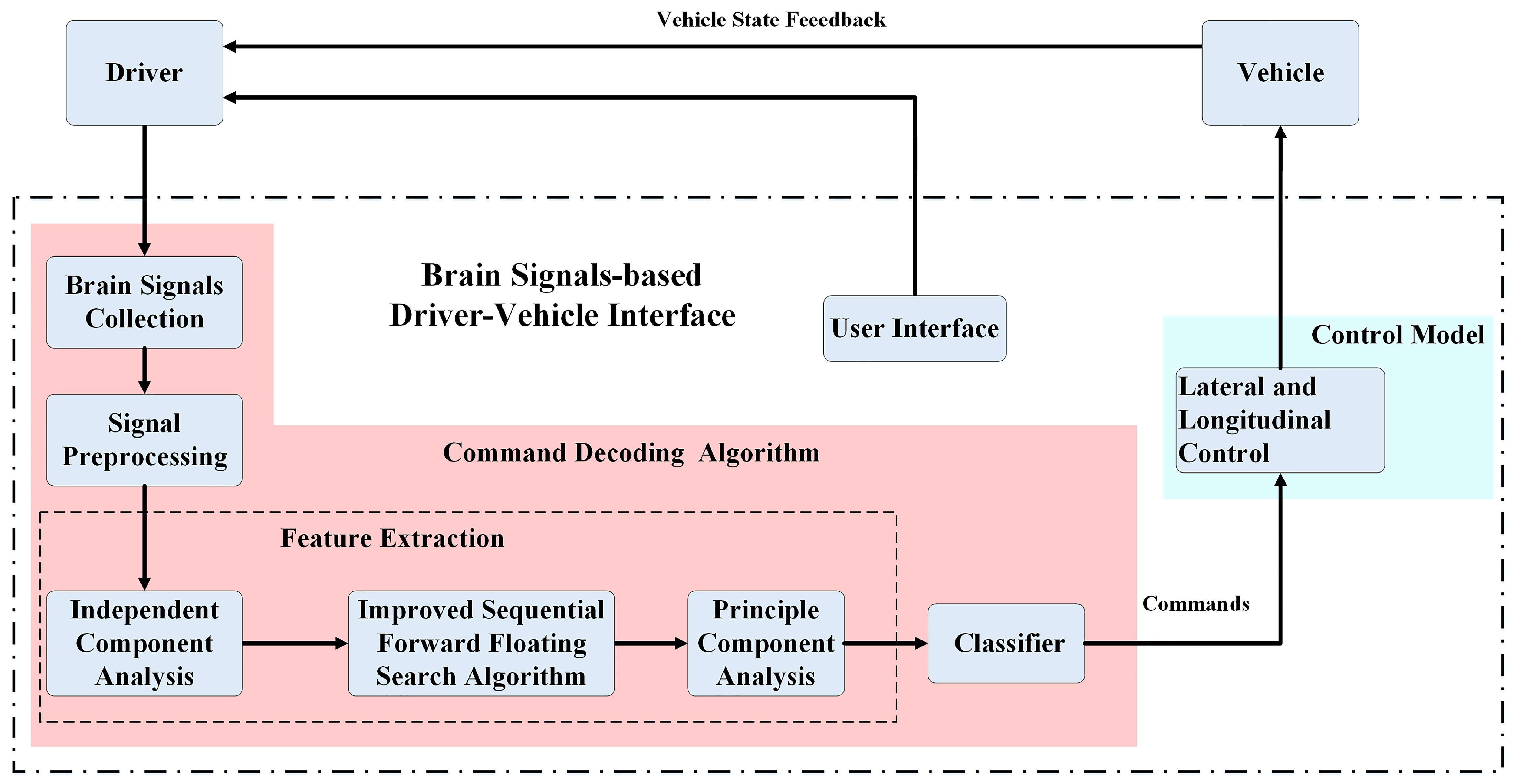

2.1. System Architecture

2.2. User Interface

2.3. Command Decoding Algorithm

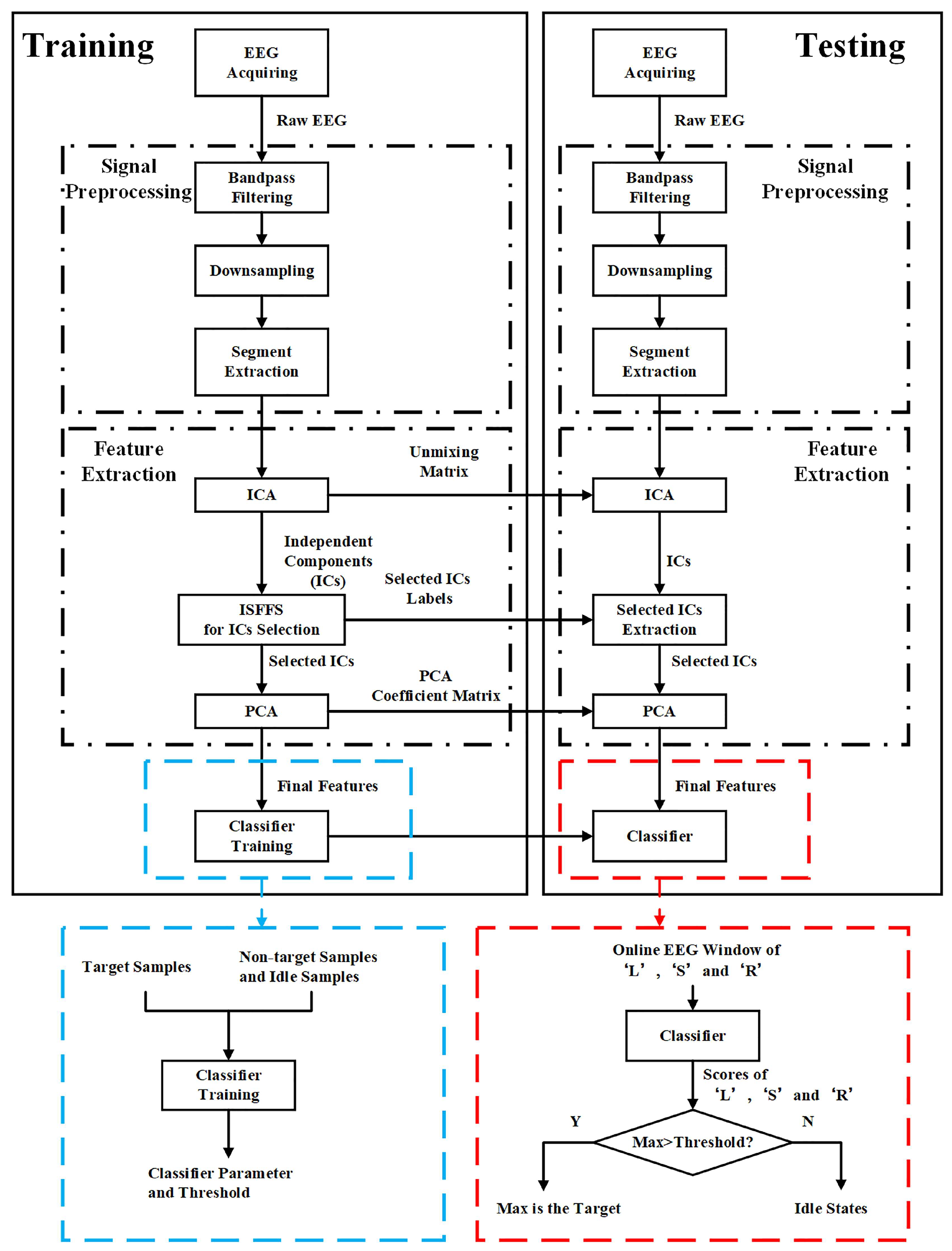

2.3.1. Training Phase

2.3.2. Testing Phase

2.4. Control Model

3. Experiment

3.1. Subjects

3.2. Experimental Platform

3.3. Experimental Procedure

4. Results and Discussion

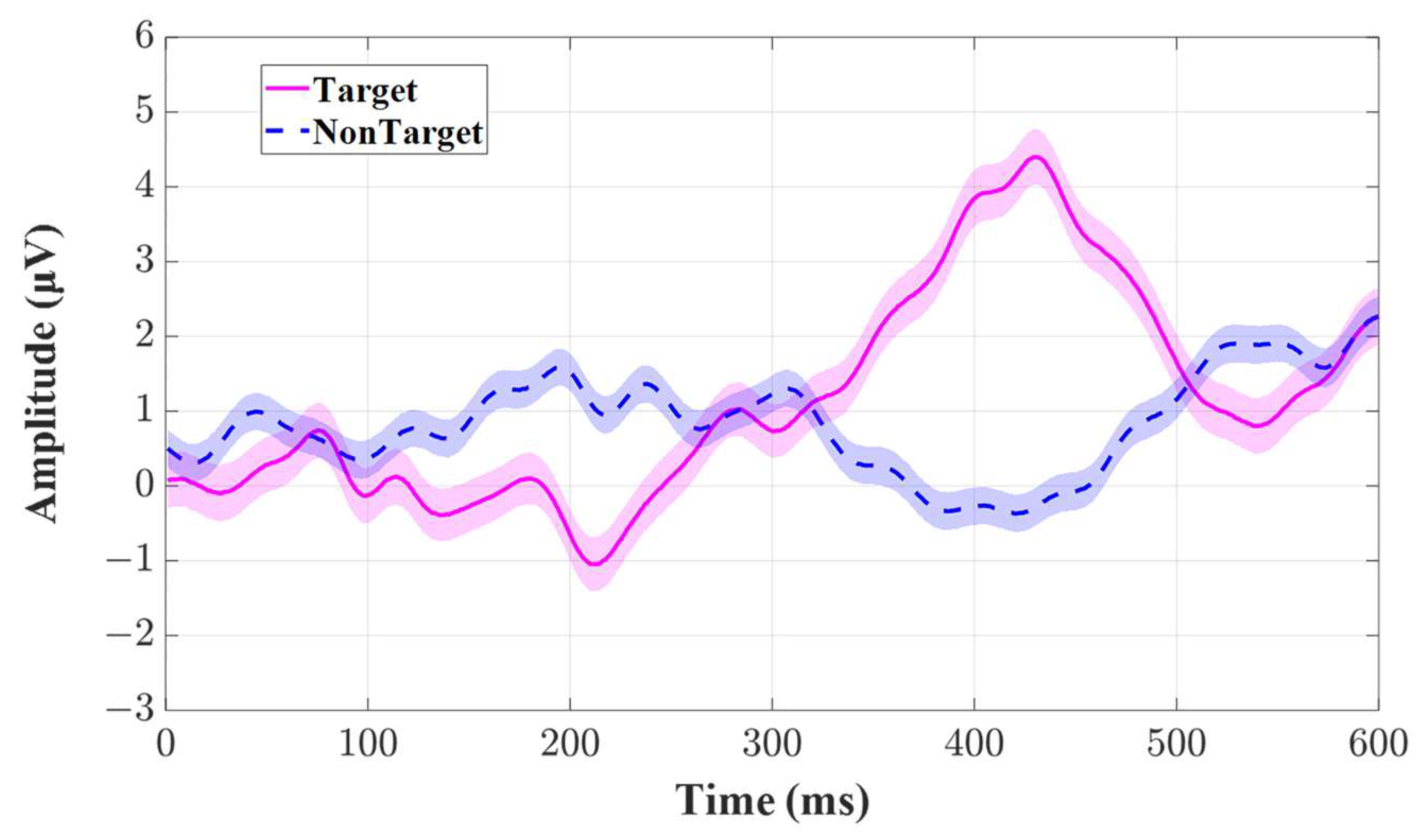

4.1. EEG Response under the Paradigm

4.2. Performance of the Proposed DVI

4.3. Performance of Brain-Controlled Vehicles Based on the Proposed Novel Asynchronous Brain Signals-Based DVI

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Bi, L.; Fan, X.-A.; Jie, K.; Teng, T.; Ding, H.; Liu, Y. Using a head-up display-based steady-state visually evoked potential brain–computer interface to control a simulated vehicle. IEEE Trans. Intell. Transp. Syst. 2014, 15, 959–966.

- [2] Vicente, F.; Huang, Z.; Xiong, X.; De la Torre, F.; Zhang, W.; Levi, D. Driver gaze tracking and eyes off the road detection system. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2014–2027.

- [3] McMullen, D.P.; Hotson, G.; Katyal, K.D.; Wester, B.A.; Fifer, M.S.; McGee, T.G.; Harris, A.; Johannes, M.S.; Vogelstein, R.J.; Ravitz, A.D. Demonstration of a Semi-Autonomous Hybrid Brain-Machine Interface using Human Intracranial EEG, Eye Tracking, and Computer Vision to Control a Robotic Upper Limb Prosthetic. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 784–796.

- [4] Meena, Y.K.; Cecotti, H.; Wong-Lin, K.; Dutta, A.; Prasad, G. Toward Optimization of Gaze-Controlled Human-Computer Interaction: Application to Hindi Virtual Keyboard for Stroke Patients. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 911.

- [5] Lee, J.D.; Caven, B.; Haake, S.; Brown, T.L. Speech-based interaction with in-vehicle computers: The effect of speech-based e-mail on drivers’ attention to the roadway. Hum. Factors 2001, 43, 631–640.

- [6] Li, W.; Zhou, Y.; Poh, N.; Zhou, F.; Liao, Q. Feature denoising using joint sparse representation for in-car speech recognition. IEEE Signal Process. Lett. 2013, 20, 681–684.

- [7] Huo, X.; Park, H.; Kim, J.; Ghovanloo, M. A Dual-Mode Human Computer Interface Combining Speech and Tongue Motion for People with Severe Disabilities. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 979–991.

- [8] He, T.; Bi, L.; Lian, J.; Sun, H. A brain signals-based interface between drivers and in-vehicle devices. In Proceedings of the Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 1333–1337.

- [9] Thurlings, M.E.; Van Erp, J.B.; Brouwer, A.-M.; Werkhoven, P. Controlling a Tactile ERP–BCI in a Dual Task. IEEE Trans. Comput. Intell. AI Games 2013, 5, 129–140.

- [10] Allison, B.Z.; Dunne, S.; Leeb, R.; Millán, J.D.R.; Nijholt, A. Towards Practical Brain-Computer Interfaces: Bridging the Gap from Research to Real-World Applications; Springer Publishing Company Inc.: Berlin/Heidelberg, Germany, 2012; pp. 1–30.

- [11] Wang, R.; Liu, Y.; Shi, J.; Peng, B.; Fei, W.; Bi, L. Sound Target Detection Under Noisy Environment Using Brain-Computer Interface. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 31, 229–237.

- [12] Mammone, N.; De Salvo, S.; Bonanno, L.; Ieracitano, C.; Marino, S.; Marra, A.; Bramanti, A.; Morabito, F.C. Brain Network Analysis of Compressive Sensed High-Density EEG Signals in AD and MCI Subjects. IEEE Trans. Ind. Inform. 2019, 15, 527–536.

- [13] Yang, C.; Wu, H.; Li, Z.; He, W.; Wang, N.; Su, C.-Y. Mind control of a robotic arm with visual fusion technology. IEEE Trans. Ind. Inform. 2017, 14, 3822–3830.

- [14] Göhring, D.; Latotzky, D.; Wang, M.; Rojas, R. Semi-autonomous car control using brain computer interfaces. Intell. Auton. Syst. 2013, 12, 393–408.

- [15] Bi, L.; Fan, X.-A.; Luo, N.; Jie, K.; Li, Y.; Liu, Y. A head-up display-based P300 brain–computer interface for destination selection. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1996–2001.

- [16] Fan, X.-A.; Bi, L.; Teng, T.; Ding, H.; Liu, Y. A brain–computer interface-based vehicle destination selection system using P300 and SSVEP signals. IEEE Trans. Intell. Transp. Syst. 2014, 16, 274–283.

- [17] Akram, F.; Han, S.M.; Kim, T.-S. An efficient word typing P300-BCI system using a modified T9 interface and random forest classifier. Comput. Biol. Med. 2015, 56, 30–36.

- [18] Lin, Z.; Zhang, C.; Zeng, Y.; Tong, L.; Yan, B. A novel P300 BCI speller based on the Triple RSVP paradigm. Sci. Rep. 2018, 8, 3350.

- [19] Li, S.; Jin, J.; Daly, I.; Liu, C.; Cichocki, A. Feature selection method based on Menger curvature and LDA theory for a P300 brain–computer interface. J. Neural Eng. 2022, 18, 066050.

- [20] Bi, L.; Fan, X.-A.; Liu, Y. EEG-based brain-controlled mobile robots: A survey. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 161–176.

- [21] Brunner, P.; Joshi, S.; Briskin, S.; Wolpaw, J.R.; Bischof, H.; Schalk, G. Does the ‘P300’ speller depend on eye gaze? J. Neural Eng. 2010, 7, 056013.

- [22] Bi, L.; Lian, J.; Jie, K.; Lai, R.; Liu, Y. A speed and direction-based cursor control system with P300 and SSVEP. Biomed. Signal Process. Control 2014, 14, 126–133.

- [23] Martinez-Cagigal, V.; Gomez-Pilar, J.; Alvarez, D.; Hornero, R. An Asynchronous P300-Based Brain-Computer Interface Web Browser for Severely Disabled People. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 25, 1332–1342.

- [24] Zhu, D.; Bieger, J.; Molina, G.G.; Aarts, R.M. A survey of stimulation methods used in SSVEP-based BCIs. Comput. Intell. Neurosci. 2010, 2010, 1.

- [25] Volosyak, I.; Valbuena, D.; Lüth, T.; Malechka, T.; Gräser, A. BCI Demographics II: How Many (and What Kinds of) People Can Use a High-Frequency SSVEP BCI? IEEE Trans. Neural Syst. Rehabil. Eng. 2011, 19, 232–239.

- [26] Allison, B.Z.; Neuper, C. Could anyone use a BCI? In Brain-Computer Interfaces. Human-Computer Interaction Series; Springer: London, UK, 2010; pp. 35–54.

- [27] Zhang, R.; Li, Y.; Yan, Y.; Zhang, H.; Wu, S.; Yu, T.; Gu, Z. Control of a wheelchair in an indoor environment based on a brain–computer interface and automated navigation. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 128–139.

- [28] Li, M.; Li, W.; Niu, L.; Zhou, H.; Chen, G.; Duan, F. An event-related potential-based adaptive model for telepresence control of humanoid robot motion in an environment cluttered with obstacles. IEEE Trans. Ind. Electron. 2017, 64, 1696–1705.

- [29] De Venuto, D.; Annese, V.F.; Mezzina, G. An embedded system remotely driving mechanical devices by P300 brain activity. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition (DATE), Lausanne, Switzerland, 27–31 March 2017; pp. 1014–1019.

- [30] Mezzina, G.; De Venuto, D. Four-Wheel Vehicle Driving by using a Spatio-Temporal Characterization of the P300 Brain Potential. In Proceedings of the 2020 AEIT International Conference of Electrical and Electronic Technologies for Automotive (AEIT AUTOMOTIVE), Turin, Italy, 18–20 November 2020; pp. 1–6.

- [31] Lian, J.; Bi, L.; Fei, W. A novel event-related potential-based brain–computer interface for continuously controlling dynamic systems. IEEE Access 2019, 7, 38721–38729.

- [32] Zhang, H.; Guan, C.; Wang, C. Asynchronous P300-Based Brain–Computer Interfaces: A Computational Approach with Statistical Models. IEEE Trans. Biomed. Eng. 2008, 55, 1754–1763.

- [33] Pinegger, A.; Faller, J.; Halder, S.; Wriessnegger, S.C.; Müller-Putz, G.R. Control or non-control state: That is the question! An asynchronous visual P300-based BCI approach. J. Neural Eng. 2015, 12, 014001.

- [34] Panicker, R.C.; Sadasivan, P.; Ying, S. An asynchronous P300 BCI with SSVEP-based control state detection. IEEE Trans. Biomed. Eng. 2011, 58, 1781–1788.

- [35] Zhang, L.; Wu, X.; Guo, X.; Liu, J.; Zhou, B. Design and implementation of an asynchronous BCI system with alpha rhythm and SSVEP. IEEE Access 2019, 7, 146123–146143.

- [36] Guan, C.; Thulasidas, M.; Wu, J. High performance P300 speller for brain-computer interface. In Proceedings of the IEEE International Workshop on Biomedical Circuits & Systems, Singapore, 1–3 December 2004.

- [37] Ditthapron, A.; Banluesombatkul, N.; Ketrat, S.; Chuangsuwanich, E.; Wilaiprasitporn, T. Universal joint feature extraction for P300 EEG classification using multi-task autoencoder. IEEE Access 2019, 7, 68415–68428.

- [38] Raymond, J.E.; Shapiro, K.L.; Arnell, K.M. Temporary suppression of visual processing in an RSVP task: An attentional blink? J. Exp. Psychol. Hum. Percept. Perform. 1992, 18, 849.

- [39] Allison, B.Z.; Pineda, J.A. Effects of SOA and flash pattern manipulations on ERPs, performance, and preference: Implications for a BCI system. Int. J. Psychophysiol. 2006, 59, 127–140.

- [40] Sellers, E.W.; Krusienski, D.J.; McFarland, D.J.; Vaughan, T.M.; Wolpaw, J.R. A P300 event-related potential brain–computer interface (BCI): The effects of matrix size and inter stimulus interval on performance. Biol. Psychol. 2006, 73, 242–252.

- [41] Bell, A.J.; Sejnowski, T.J. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995, 7, 1129–1159.

- [42] Chang, C.-C.; Lin, C.-J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 1–27.

- [43] Allison, B.Z.; Wolpaw, E.W.; Wolpaw, J.R. Brain–computer interface systems: Progress and prospects. Expert Rev. Med. Devices 2007, 4, 463–474.

- [44] Zhang, X.; Guo, Y.; Gao, B.; Long, J. Alpha frequency intervention by electrical stimulation to improve performance in mu-based BCI. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1262–1270.

- [45] Yao, L.; Meng, J.; Zhang, D.; Sheng, X.; Zhu, X. Combining motor imagery with selective sensation toward a hybrid-modality BCI. IEEE Trans. Biomed. Eng. 2014, 61, 2304–2312.

- [46] Teng, T.; Bi, L.; Liu, Y. EEG-Based Detection of Driver Emergency Braking Intention for Brain-Controlled Vehicles. IEEE Trans. Intell. Transp. Syst. 2017, 19, 1766–1773.

- [47] Bi, L.; Zhang, J.; Lian, J. EEG-based adaptive driver-vehicle interface using variational autoencoder and PI-TSVM. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2025–2033.

- [48] Bai, X.; Li, M.; Qi, S.; Ng, A.C.M.; Ng, T.; Qian, W. A hybrid P300-SSVEP brain-computer interface speller with a frequency enhanced row and column paradigm. Front. Neurosci. 2023, 17, 1133933.

- [49] Anari, S.; Tataei Sarshar, N.; Mahjoori, N.; Dorosti, S.; Rezaie, A. Review of deep learning approaches for thyroid cancer diagnosis. Math. Probl. Eng. 2022, 2022, 5052435.

- [50] Kasgari, A.B.; Safavi, S.; Nouri, M.; Hou, J.; Sarshar, N.T.; Ranjbarzadeh, R. Point-of-Interest Preference Model Using an Attention Mechanism in a Convolutional Neural Network. Bioengineering 2023, 10, 495.

| Subject | Control State, 1.08 s | Control State, 2.16 s | Control State, 3.24 s | Idle State, 1.08 s | Idle State, 2.16 s | Idle State, 3.24 s |
|---|---|---|---|---|---|---|
| 1 | 84.42 | 90.71 | 95.44 | 91.44 | 96.76 | 99.84 |
| 2 | 83.15 | 89.19 | 92.15 | 86.78 | 93.64 | 98.23 |
| 3 | 77.32 | 88.97 | 92.43 | 91.06 | 97.01 | 96.03 |
| 4 | 73.20 | 81.42 | 84.72 | 81.38 | 88.44 | 90.60 |
| 5 | 72.96 | 77.31 | 82.72 | 84.81 | 78.69 | 83.98 |
| 6 | 73.00 | 73.96 | 77.72 | 85.29 | 85.82 | 87.48 |
| Mean ± std. error | 77.34 ± 1.96 | 83.59 ± 2.62 | 87.53 ± 2.55 | 86.79 ± 1.45 | 90.06 ± 2.67 | 92.69 ± 2.36 |
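The "Mean ± std. error" row can be reproduced from the per-subject values. The short check below computes the mean and the standard error of the mean for the two 2.16 s columns. With the population standard deviation (divisor n) it gives 83.59 ± 2.62 for the control state and 90.06 ± 2.67 for the idle state, matching the reported row; with the sample standard deviation (divisor n − 1) the control-state error would instead be about 2.87. The section does not state which convention was used, so this is an inference from the numbers only, and the `mean_and_se` helper is our own.

```python
import math
from typing import Sequence, Tuple


def mean_and_se(values: Sequence[float], ddof: int = 0) -> Tuple[float, float]:
    """Mean and standard error of the mean.

    ddof=0 uses the population SD (divide by n); ddof=1 uses the sample SD
    (divide by n - 1). In both cases SE = SD / sqrt(n).
    """
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - ddof))
    return mean, sd / math.sqrt(n)


# Per-subject values at 2.16 s, copied from the table above (subjects 1-6).
control_216 = [90.71, 89.19, 88.97, 81.42, 77.31, 73.96]
idle_216 = [96.76, 93.64, 97.01, 88.44, 78.69, 85.82]

for label, column in (("control", control_216), ("idle", idle_216)):
    m, se = mean_and_se(column)
    print(f"{label}: {m:.2f} ± {se:.2f}")
# control: 83.59 ± 2.62
# idle: 90.06 ± 2.67
```

Passing `ddof=1` switches to the sample-SD convention, which is the default in some statistics packages, so it is worth stating explicitly which one a table uses.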

| Subject | Control State, 1.08 s | Control State, 2.16 s | Control State, 3.24 s | Idle State, 1.08 s | Idle State, 2.16 s | Idle State, 3.24 s |
|---|---|---|---|---|---|---|
| 1 | 80.54 | 87.89 | 94.44 | 87.46 | 91.36 | 95.44 |
| 2 | 73.70 | 88.49 | 94.02 | 85.38 | 86.45 | 90.76 |
| 3 | 64.14 | 74.03 | 83.67 | 80.59 | 85.40 | 88.57 |
| 4 | 63.96 | 76.29 | 79.47 | 81.62 | 82.36 | 83.75 |
| 5 | 58.73 | 68.25 | 72.70 | 80.58 | 81.10 | 82.97 |
| 6 | 50.85 | 59.03 | 69.90 | 75.35 | 77.60 | 79.80 |
| Mean ± std. error | 65.32 ± 3.94 | 75.66 ± 4.25 | 82.37 ± 3.87 | 81.83 ± 1.58 | 84.05 ± 1.78 | 86.88 ± 2.15 |

| Literature | Controlled Object | Dynamic System | Continuous Control | Asynchronous | Active or Passive | Notes |
|---|---|---|---|---|---|---|
| Fan et al. [16] | Simulated Vehicle | No | No | No | Active | - |
| De Venuto et al. [29] | Remotely driven mechanical devices | Yes | Yes | No | Active | With ultrasonic sensors |
| Mezzina et al. [30] | Acrylic prototype car | Yes | Yes | No | Active | With peripheral sensors |
| Teng et al. [46] | Simulated Vehicle | Yes | Yes | Yes | Passive | - |
| Bi et al. [47] | Simulated Vehicle | No | No | No | Active | - |
| Bai et al. [48] | Speller | No | No | No | Active | - |
| Our current work | Simulated Vehicle | Yes | Yes | Yes | Active | - |

| Literature | Type of EEG Signals | Offline Classification Accuracy | Detection Time (s) | Online Performance | Notes |
|---|---|---|---|---|---|
| Fan et al. [16] | P300 | - | 26 | 99% | - |
| De Venuto et al. [29] | P300 | - | 1.03 | 69.54% for direction selection | Online driving performance was not available. |
| Mezzina et al. [30] | P300 | - | 2.8 | 84.28 ± 0.87% | Speed of 10 cm/s; online driving performance was not available. |
| Teng et al. [46] | Emergency-related EEG signals | 94% | 0.42 | - | - |
| Bi et al. [47] | P300 | 84.72% | 2.16 | - | - |
| Bai et al. [48] | P300 and SSVEP | 96.86% | 39.3 | 94.29% | Speller |
| Our current work | P300 | 83.59 ± 2.62% (control state); 90.06 ± 2.67% (idle state) | 2.16 | See Section 4.3 | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lian, J.; Guo, Y.; Qiao, X.; Wang, C.; Bi, L. A Novel Asynchronous Brain Signals-Based Driver–Vehicle Interface for Brain-Controlled Vehicles. Bioengineering 2023, 10, 1105. https://doi.org/10.3390/bioengineering10091105