Abstract

A recently introduced model-based deep learning (MoDL) technique successfully incorporates convolutional neural network (CNN)-based regularizers into physics-based parallel imaging reconstruction using a small number of network parameters. Wave-controlled aliasing in parallel imaging (CAIPI) is an emerging parallel imaging method that accelerates imaging acquisition by employing sinusoidal gradients in the phase- and slice/partition-encoding directions during the readout to take better advantage of 3D coil sensitivity profiles. We propose wave-encoded MoDL (wave-MoDL) combining the wave-encoding strategy with unrolled network constraints for highly accelerated 3D imaging while enforcing data consistency. We extend wave-MoDL to reconstruct multicontrast data with CAIPI sampling patterns to leverage similarity between multiple images to improve the reconstruction quality. We further exploit this to enable rapid quantitative imaging using an interleaved look-locker acquisition sequence with T2 preparation pulse (3D-QALAS). Wave-MoDL enables a 40 s MPRAGE acquisition at 1 mm resolution at 16-fold acceleration. For quantitative imaging, wave-MoDL permits a 1:50 min acquisition for T1, T2, and proton density mapping at 1 mm resolution at 12-fold acceleration, from which contrast-weighted images can be synthesized as well. In conclusion, wave-MoDL allows rapid MR acquisition and high-fidelity image reconstruction and may facilitate clinical and neuroscientific applications by incorporating unrolled neural networks into wave-CAIPI reconstruction.

1. Introduction

MRI has been widely used to provide structural and physiological images; however, its efficiency has been limited by an inherent tradeoff between scan time, resolution, and signal-to-noise ratio (SNR) [1]. MRI repeatedly excites and collects the signal while encoding it so that the acquired data can be visualized as images, which often leads to long scan times. To mitigate the encoding burden of MRI scans, parallel imaging (PI) techniques have been developed to accelerate various sequences through the use of multiple receiver coils for image encoding. Sensitivity encoding (SENSE) [2] and generalized auto-calibrating partially parallel acquisition (GRAPPA) [3] have been widely used to reconstruct data in the image domain and k-space, respectively, and the recent ESPIRiT technique [4] has bridged the gap between these two domains. A number of techniques have been proposed to improve the conditioning of PI acquisition to enable higher accelerations. Controlled aliasing in parallel imaging (CAIPI) [5] modifies the appearance of aliasing artifacts during the acquisition to improve the subsequent PI reconstruction for multislice imaging. Application of interslice shifts to three-dimensional (3D) imaging forms the basis of 2D-CAIPI [6], wherein the phase ($k_y$) and partition ($k_z$) encoding positions are modified to shift the spatial aliasing pattern to reduce aliasing and better exploit the variations in coil sensitivities.

Wave-CAIPI is a more recent controlled aliasing method that can further reduce noise amplification and aliasing artifacts in highly accelerated acquisitions [7,8]. It employs extra sinusoidal gradient modulations in the phase- and the partition-encoding directions during the readout to better harness coil sensitivity variations in all three dimensions and achieve higher accelerations. Wave-encoding also incorporates 2D-CAIPI interslice shifts to improve the PI conditioning [6] and has found applications in highly accelerated gradient-echo (GE), MPRAGE, fast spin-echo, and echo-planar imaging acquisitions [8,9,10,11,12].

Compressed sensing (CS) and low-rank reconstruction using annihilation filters further improved the MR reconstruction [13,14,15,16,17,18]. In the last decade, combined PI and CS techniques have resulted in substantial improvements in acquisition speed and image quality. Although the PI–CS combination can achieve state-of-the-art performance [17,19,20,21,22], designing effective regularization schemes and tuning hyperparameters are nontrivial, and it often requires a relatively long reconstruction time.

Recently, image reconstruction with deep learning approaches has been explored [23,24,25] to overcome the hurdles faced by existing reconstruction techniques such as long reconstruction time, residual artifacts at high acceleration factor, and oversmoothness. A few studies have shown deep learning methods outperforming conventional regularization- and/or optimization-based techniques in various applications. PI reconstruction has been implemented with multilayer perceptron [23] and the variational network incorporating the unrolled network during conjugate gradient updates [24]. Some of the studies trained neural networks in k-space to exploit the features in the spatial frequency domain [25,26,27,28], as many PI techniques have been implemented in k-space. In recent works, the wave-encoding strategy was successfully combined with the variational network [29] and scan-specific image reconstruction [30,31] to provide high-quality images at high acceleration.

The recently developed model-based deep learning (MoDL) technique improves image reconstruction by leveraging an unrolled convolutional neural network (CNN) and the PI forward model to help denoise and unalias undersampled data [32]. MoDL consists of unrolled CNN blocks and data consistency blocks and updates the image by iteratively passing the data through both. Sharing the network parameters across iterations keeps the number of learned parameters decoupled from the number of iterations, thus providing good convergence without increasing the number of trainable parameters. This smaller number of trainable parameters also translates to significantly reduced training data demands, which is particularly attractive for data-scarce medical-imaging applications. MoDL has been further applied to multishot diffusion-weighted echo-planar imaging [33]. That work successfully replaced the multishot sensitivity-encoded diffusion data recovery algorithm using structured low-rank matrix completion (MUSSELS) [18] with the hybrid MoDL-MUSSELS approach, which employs CNNs in both the k-space and image domains. MoDL-MUSSELS yielded reconstructions comparable to state-of-the-art methods while offering several orders of magnitude reduction in run time.

Quantitative MRI estimates tissue parameters quantitatively, enabling the detection of, e.g., microstructural processes related to tissue remodeling in aging and neurological diseases [34]. Advanced image reconstruction could benefit high-resolution quantitative imaging as well, which often requires lengthy acquisitions because a multidimensional signal space needs to be sampled to enable parameter quantification. The 3D-QuAntification using an interleaved Look-Locker Acquisition Sequence with T2 preparation pulse (3D-QALAS) acquires high-resolution images with five different contrasts and enables simultaneous T1, T2, and proton density (PD) parameter mapping [35,36,37]. However, encoding limitations stemming from multicontrast sampling at high resolution substantially lengthen 3D-QALAS acquisitions, e.g., 11 min for 1 mm isotropic resolution at R = 2 [38]. The combination of PI with CS has recently enabled a 6 min scan at R = 3.8 [38]. Unfortunately, pushing the acceleration further is hampered by the g-factor penalty and intrinsic SNR limitations.

In this study, we propose to combine wave-encoding and MoDL synergistically and demonstrate a rapid, 16-fold accelerated MPRAGE acquisition with high image quality. We further expand our earlier work presented as an abstract [39] by using wave-MoDL to enable highly accelerated, high-resolution multiecho and multicontrast imaging with joint reconstruction. We first demonstrate this in multiecho MPRAGE (MEMPRAGE) [40] imaging as employed in the Human Connectome Project (HCP) at 0.8 mm isotropic resolution and jointly reconstruct four echoes at R = 9. Moreover, we make use of controlled aliasing by shifting the sampling patterns across the echoes, thereby increasing the collective k-space coverage. Finally, we demonstrate the ability to perform joint multicontrast reconstruction with wave-MoDL on the 3D-QALAS sequence. We pushed the acceleration to R = 12 to provide a 1:50 min comprehensive quantitative exam by jointly reconstructing multicontrast 3D-QALAS images with wave-MoDL, thereby minimizing the g-factor loss using wave-encoding and boosting SNR with MoDL. From this rapid 1:50 min 3D-QALAS scan, wave-MoDL allows for the estimation of high-quality T1, T2, and PD parameter maps, which can be used for synthesizing standard contrast-weighted images that lend themselves to clinical reads or subsequent analysis using image analysis software (e.g., FreeSurfer) [41,42].

2. Theory

2.1. Wave-CAIPI

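Wave-encoding employs additional sinusoidal gradients during the readout to take better advantage of 3D coil sensitivity profiles [7,8,10,43]. Wave-encoding modulates the phase during the readout and produces a corkscrew trajectory in k-space. This spreads the aliased signal along the readout direction, so that the coil sensitivity variation along that direction also contributes to the image reconstruction. The wave-encoded signal, $s$, can be described using the following equation:

$$s(t) = \iiint m(x, y, z)\, e^{-i 2\pi \left(k_x(t)\, x + k_y y + k_z z\right)}\, e^{-i \gamma \int_0^t \left(g_y(\tau)\, y + g_z(\tau)\, z\right) d\tau}\, dx\, dy\, dz \tag{1}$$

where $m$ is the magnetization, $\gamma$ is the gyromagnetic ratio, and $g$ is the time-varying sinusoidal wave gradient, with components $g_y$ and $g_z$. Voxel spreading by wave-encoding is a function of readout time and the position in the y- and z-dimensions ($k_x$-$y$-$z$ hybrid domain). The reconstruction of standard wave-CAIPI is described as follows:

$$\hat{x} = \arg\min_{x} \left\| M F_{y,z} P_{y,z} F_x C x - b \right\|_2^2 \tag{2}$$

where $x$ is the reconstructed image, $M$ is the subsampling mask, $F$ is the Fourier transform operator (applied along the readout as $F_x$ and along the phase and partition directions as $F_{y,z}$), $P_{y,z}$ is the wave point spread function in the $k_x$-$y$-$z$ hybrid domain (corresponding to $e^{-i \gamma \int_0^t (g_y(\tau) y + g_z(\tau) z) d\tau}$ in Equation (1)), $C$ is the coil sensitivity map, and $b$ is the subsampled k-space data.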
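As a rough illustration of how the operators in this section compose, the following NumPy sketch builds a wave point spread function from sinusoidal gradients and then applies coil sensitivities, the readout Fourier transform, the PSF, the phase/partition Fourier transform, and the sampling mask in sequence. The function names (`wave_psf`, `wave_forward`, `wave_adjoint`), the array layout, the choice of a sine gradient on y and a cosine gradient on z, and the omission of k-space centering are assumptions made for illustration; they are not taken from the authors' implementation.

```python
import numpy as np

GAMMA = 2 * np.pi * 42.577e6  # proton gyromagnetic ratio in rad/s/T


def wave_psf(nx, ny, nz, fov_y, fov_z, g_max, cycles, t_read):
    """Wave PSF in the kx-y-z hybrid domain (assumed sine on y, cosine on z).

    g_max is in T/m, the fields of view are in m, and t_read (readout
    duration) is in s.
    """
    t = np.linspace(0.0, t_read, nx, endpoint=False)
    omega = 2 * np.pi * cycles / t_read
    # Time integrals of g_max*sin(omega*t) and g_max*cos(omega*t) from 0 to t.
    int_gy = g_max * (1.0 - np.cos(omega * t)) / omega
    int_gz = g_max * np.sin(omega * t) / omega
    y = (np.arange(ny) - ny // 2) * fov_y / ny
    z = (np.arange(nz) - nz // 2) * fov_z / nz
    phase = GAMMA * (int_gy[:, None, None] * y[None, :, None]
                     + int_gz[:, None, None] * z[None, None, :])
    return np.exp(-1j * phase)  # shape (nx, ny, nz), unit magnitude


def wave_forward(x, coils, psf, mask):
    """Apply mask * F_yz * PSF * F_x * coils to an image x of shape (nx, ny, nz)."""
    coil_imgs = coils * x[None]                              # (nc, nx, ny, nz)
    hybrid = np.fft.fft(coil_imgs, axis=1, norm="ortho")     # readout FFT
    hybrid = psf[None] * hybrid                              # wave modulation
    kspace = np.fft.fft2(hybrid, axes=(2, 3), norm="ortho")  # ky-kz FFT
    return mask[None] * kspace


def wave_adjoint(b, coils, psf, mask):
    """Adjoint of wave_forward (orthonormal FFTs make ifft the adjoint of fft)."""
    hybrid = np.fft.ifft2(mask[None] * b, axes=(2, 3), norm="ortho")
    hybrid = np.conj(psf)[None] * hybrid
    coil_imgs = np.fft.ifft(hybrid, axis=1, norm="ortho")
    return np.sum(np.conj(coils) * coil_imgs, axis=0)
```

A call such as `wave_psf(256, 192, 192, 0.192, 0.192, 8.8e-3, 11, 5e-3)` would use the gradient amplitude and cycle count quoted later for the MPRAGE data; the matrix size, field of view, and the 5 ms readout (taken here as the inverse of a 200 Hz/pixel bandwidth) are placeholder values.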

2.2. 3D-QALAS

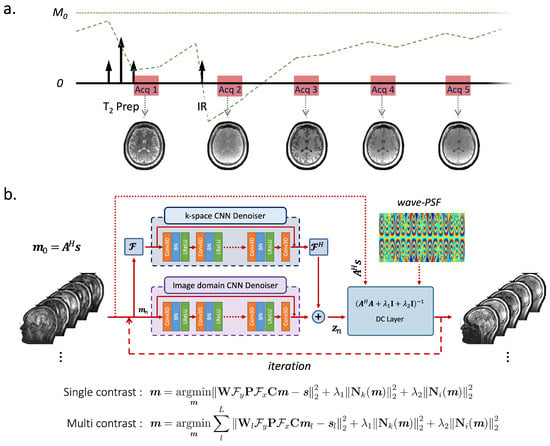

Figure 1a shows the sequence diagram of 3D-QALAS that enables high-resolution and simultaneous T1, T2, and PD parameter mapping. The 3D-QALAS is based on a multiacquisition 3D gradient echo sequence, with five acquisitions equally spaced in time, interleaved with a T2-preparation pulse and an inversion pulse. The first readout succeeds a T2-preparation module, and the remaining four readouts follow an inversion pulse that captures T1 dynamics. The estimated parameter maps will provide the ability to synthesize standard contrast-weighted images, thus allowing the sequence to be used in a more traditional way for clinical reads. We used a standalone version of SyMRI (SyntheticMR, Linköping, Sweden) to generate quantitative parameter maps and synthetic images.

Figure 1.

(a) 3D-QALAS sequence diagram. Five turbo-flash readout trains are played repeatedly until all k-space is acquired. The first readout succeeds a T2-preparation module, and the remaining four readouts follow an inversion pulse that captures T1 dynamics. (b) Wave-MoDL diagram for multicontrast joint reconstruction. $A$ represents the forward model of wave-encoding. Convolutional regularizers are applied in both image- and k-space, which are combined with data consistency (DC) layers in an unrolled network structure. The model is trained in a supervised manner where the loss function measures the fidelity with respect to high-quality reconstructions at a mild acceleration of R = 2.

3. Methods

3.1. Wave-MoDL

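We propose wave-MoDL, which incorporates the wave-encoding strategy into MoDL image reconstruction to improve the PI conditioning and reconstruct high-resolution images with high image quality. For a single image contrast, wave-MoDL reconstruction can be described as follows:

$$\hat{x} = \arg\min_{x} \left\| M F_{y,z} P_{y,z} F_x C x - b \right\|_2^2 + \lambda_1 \left\| \mathcal{N}_k(x) \right\|_2^2 + \lambda_2 \left\| \mathcal{N}_i(x) \right\|_2^2 \tag{3}$$

where $\mathcal{N}_k$ and $\mathcal{N}_i$ represent residual CNNs in the k- and image-space, respectively, and $\lambda_1$ and $\lambda_2$ are the corresponding regularization weights. Figure 1b shows the proposed network architecture of wave-MoDL reconstruction for single/multicontrast images. The unrolled CNN block captures image features to help the image reconstruction. We use unrolled CNNs to constrain the reconstruction in both the image- and k-space, as it was shown previously that applying constraints in both domains improves the performance of the entire network [26,33]. The data consistency (DC) block enforces consistency with the measured wave-encoded signals, for which the wave point spread function (wave-PSF) is required as an input so that the known forward model can be used. In the DC block, the resulting quadratic subproblem is solved using conjugate gradient (CG) optimization. The residual CNN, $\mathcal{N}$, can be expressed through a CNN, $\mathcal{D}$, and a skip connection as $\mathcal{N} = \mathcal{I} - \mathcal{D}$. We used the alternating recursive minimization-based solution described in [32,33], by which the network is unrolled. By substituting $\mathcal{N}_k = \mathcal{I} - \mathcal{D}_k$ and $\mathcal{N}_i = \mathcal{I} - \mathcal{D}_i$, where $\mathcal{D}_k$ and $\mathcal{D}_i$ represent CNNs in the k- and image-space, respectively, an alternating minimization-based solution can be described as follows:

$$\begin{aligned} z_n^{k} &= \mathcal{D}_k(x_n), \\ z_n^{i} &= \mathcal{D}_i(x_n), \\ x_{n+1} &= \left(A^H A + (\lambda_1 + \lambda_2) I\right)^{-1} \left(A^H b + \lambda_1 z_n^{k} + \lambda_2 z_n^{i}\right) \end{aligned} \tag{4}$$

where $A = M F_{y,z} P_{y,z} F_x C$ is the wave-encoding forward model, $n$ is the iteration number, and $H$ is the Hermitian transpose.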
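The alternating scheme described in this section can be sketched in a few lines: each outer iteration evaluates the two networks and then solves the quadratic data-consistency subproblem with conjugate gradient. This is a minimal sketch, assuming the update $(A^H A + (\lambda_1 + \lambda_2) I)\, x = A^H b + \lambda_1 z^k + \lambda_2 z^i$; `denoise_k` and `denoise_i` are hypothetical callables standing in for the trained k-space and image-space CNNs, and `A`/`AH` are user-supplied forward and adjoint operators. None of these names come from the authors' code.

```python
import numpy as np


def conjugate_gradient(normal_op, rhs, x0, n_iter=10, tol=1e-20):
    """Solve normal_op(x) = rhs for a Hermitian positive-definite operator."""
    x = x0.copy()
    r = rhs - normal_op(x)
    p = r.copy()
    rs_old = np.vdot(r, r).real
    for _ in range(n_iter):
        if rs_old < tol:          # residual already negligible
            break
        Ap = normal_op(p)
        alpha = rs_old / np.vdot(p, Ap).real
        x = x + alpha * p
        r = r - alpha * Ap
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x


def unrolled_recon(A, AH, b, denoise_k, denoise_i, lam1, lam2,
                   n_outer=10, n_cg=10):
    """Alternate CNN evaluations with CG-based data consistency."""
    AHb = AH(b)
    x = AHb.copy()                # start from the adjoint reconstruction

    def normal_op(v):
        return AH(A(v)) + (lam1 + lam2) * v

    for _ in range(n_outer):
        z_k = denoise_k(x)        # stand-in for the k-space CNN output
        z_i = denoise_i(x)        # stand-in for the image-space CNN output
        rhs = AHb + lam1 * z_k + lam2 * z_i
        x = conjugate_gradient(normal_op, rhs, x, n_iter=n_cg)
    return x
```

The defaults of 10 outer iterations and 10 CG steps mirror the settings reported in the Experiments section. Because the same `denoise_k` and `denoise_i` are reused in every outer iteration, the number of learned parameters does not grow with `n_outer`.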

3.2. Wave-MoDL for Multicontrast Image Reconstruction

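We further extended single-contrast wave-MoDL to reconstruct multicontrast images, $x_1, \ldots, x_L$, from Equations (2) and (3), as follows:

$$\{\hat{x}_l\}_{l=1}^{L} = \arg\min_{x_1, \ldots, x_L} \sum_{l=1}^{L} \left\| M_l F_{y,z} P_{y,z} F_x C x_l - b_l \right\|_2^2 + \lambda_1 \left\| \mathcal{N}_k(\mathbf{x}) \right\|_2^2 + \lambda_2 \left\| \mathcal{N}_i(\mathbf{x}) \right\|_2^2 \tag{5}$$

where $L$ is the number of image contrasts, $M_l$ is the subsampling mask for the $l$-th contrast, $x_l$ is the $l$-th contrast image, and $b_l$ is the $l$-th subsampled k-space data. We concatenate $x_1, \ldots, x_L$ to create the variable $\mathbf{x}$ comprising all contrasts, and provide this as the input channels of the residual CNN, $\mathcal{N}$. CAIPI sampling patterns were applied across the contrasts to improve the reconstruction by increasing the collective k-space coverage [44].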
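To make the idea of increased collective k-space coverage concrete, the sketch below generates a regular undersampling mask per contrast and offsets it by a different shift for each contrast. The helper names (`shifted_mask`, `masks_across_contrasts`) and the particular shift ordering are hypothetical; the exact patterns used in this work are shown in the figures and are not reproduced here.

```python
import numpy as np


def shifted_mask(ny, nz, ry, rz, shift_y=0, shift_z=0):
    """Regular ry x rz undersampling mask in the ky-kz plane with an offset."""
    mask = np.zeros((ny, nz), dtype=bool)
    mask[shift_y % ry::ry, shift_z % rz::rz] = True
    return mask


def masks_across_contrasts(ny, nz, ry, rz, n_contrasts, shifted=True):
    """One mask per contrast; shifted=True offsets each contrast differently."""
    masks = []
    for l in range(n_contrasts):
        sy = l % ry if shifted else 0
        sz = (l // ry) % rz if shifted else 0
        masks.append(shifted_mask(ny, nz, ry, rz, sy, sz))
    return np.stack(masks)


def collective_coverage(masks):
    """Fraction of ky-kz positions sampled by at least one contrast."""
    return np.any(masks, axis=0).mean()
```

With five contrasts at R = 4 × 3, a fixed pattern covers 1/12 of the ky-kz plane collectively, whereas five distinct shifts cover 5/12, which is the complementary information a joint reconstruction can draw on.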

3.3. Experiments

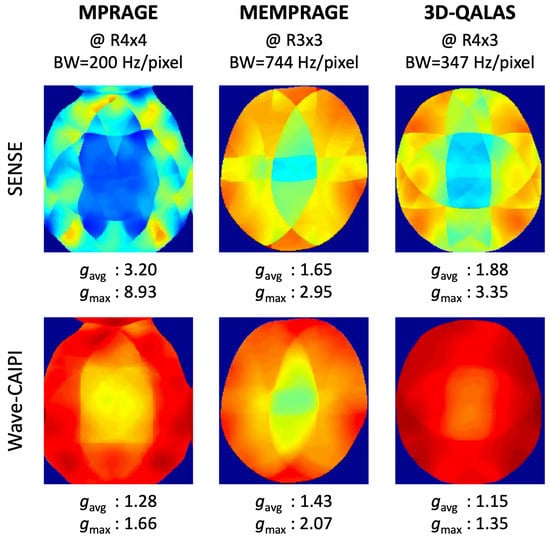

We trained, validated, and tested wave-MoDL using three different databases acquired on a 3T Siemens Prisma system equipped with a 32-channel head receive array. Table 1 shows the acquisition parameters of the databases and the networks. Figure 2 shows the g-factor analyses of SENSE and wave-CAIPI, which are linear reconstructions with neither network nor regularization, at the target acceleration for each database. We separated the 3D data into slice groups and trained on sets of aliasing slices to address the GPU memory constraint. The number of slices in an input 3D batch is equal to the acceleration factor in the slice-encoding direction. For example, at a slice-direction acceleration factor of 3, a batch contains three slices that alias onto each other and need to be unaliased. Multiple contrast images were concatenated in the input channel dimension of the network. The wave-MoDL network updates the results over 10 outer iterations and runs 10 conjugate gradient steps per iteration in the data consistency layers. The unrolled CNN has five hidden layers, each with a filter depth of 24 and a leaky ReLU activation, and all network parameters were zero-initialized. Coil sensitivity maps were calculated from external 3D low-resolution gradient-echo-based reference scans using ESPIRiT [4]. Example code can be found at https://github.com/jaejin-cho/wave-modl (accessed on 4 April 2022).
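The slice grouping can be illustrated with a short helper. This is a minimal sketch, assuming regular undersampling in the slice-encoding direction so that slices separated by `nz / rz` fold onto one another; the function name and the 192-slice example are hypothetical.

```python
def aliasing_slice_groups(nz, rz):
    """Return lists of slice indices that alias together for rz-fold
    undersampling along the slice-encoding direction."""
    if nz % rz != 0:
        raise ValueError("nz must be divisible by rz for this simple sketch")
    step = nz // rz
    return [[z + k * step for k in range(rz)] for z in range(step)]


groups = aliasing_slice_groups(192, 3)
first_group = groups[0]   # slices 0, 64 and 128 fold onto each other
```

Each group forms one training batch of `rz` slices, so the memory needed per batch scales with the slice-direction acceleration factor rather than with the full volume.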

Table 1.

Parameters of database and network parameters.

Figure 2.

g-factor analyses of SENSE and wave-CAIPI at the target acceleration.
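For readers unfamiliar with g-factor maps, the sketch below evaluates the standard SENSE g-factor for a single set of aliased voxels from their coil sensitivities. It assumes an identity noise covariance and Cartesian SENSE only; the wave-CAIPI g-factor would require the wave-encoded system matrix, which is not shown. The function name is hypothetical.

```python
import numpy as np


def sense_g_factor(S):
    """g-factor for one group of aliased voxels.

    S has shape (n_coils, R): column j holds the coil sensitivities at the
    j-th voxel that folds onto the same aliased location.
    """
    gram = S.conj().T @ S                 # S^H S, shape (R, R)
    gram_inv = np.linalg.inv(gram)
    return np.sqrt(np.real(np.diag(gram_inv)) * np.real(np.diag(gram)))
```

The g-factor equals 1 when the sensitivity vectors of the aliased voxels are orthogonal and grows as they become more similar, which is why spreading aliasing across all three dimensions can lower it.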

3.3.1. MPRAGE Database

We acquired fully sampled T1-MPRAGE data on 10 healthy subjects at 1 mm isotropic resolution to generate the database. We used a split of 8/1/1 subjects for training/validating/testing the wave-MoDL network. The network had access to 384 slice groups during the training, which should be a sufficient database size considering the number of network parameters, as detailed in [32]. We retrospectively subsampled the data at an acceleration factor of R = 4 × 4, where wave-encoding was applied in both the phase- and partition-encoding directions with 8.8 mT/m of Gmax and 11 cycles [10]. The receiver bandwidth was 200 Hz/pixel. From the g-factor analysis in Figure 2, we expect a 2.5-fold average g-factor gain and a 5.4-fold maximum g-factor gain from incorporating the wave-encoding strategy with respect to SENSE image reconstruction at R = 4 × 4.

3.3.2. MEMPRAGE Database

k-space data from four-echo MEMPRAGE scans were collected at the Massachusetts General Hospital HCP-Aging site [45]. The database consists of 30 subjects at 0.8 mm isotropic resolution and a reduction factor of 2. The network had access to 1892 slice groups (22 subjects) during the training. To create a database comprising high-quality reference images, we reconstructed images using GRAPPA on each of the four echoes separately [3,4]. We retrospectively subsampled the data to R = 3 × 3 and incorporated CAIPI sampling patterns to use complementary information across the multiple echoes for the reconstruction. Wave-encoding was applied in both the phase- and partition-encoding directions with 9.626 mT/m of Gmax and four cycles at a receiver bandwidth of 744 Hz/pixel. Incorporating wave-encoding reduces the average g-factor by 13% and the maximum g-factor by 43% with respect to SENSE at R = 3 × 3, as shown in Figure 2.

3.3.3. 3D-QALAS Database

We scanned 10 healthy subjects using the 3D-QALAS sequence (Figure 1a) at 1 mm isotropic resolution. To avoid prohibitively long acquisition times, data were acquired at R = 2 to limit the scan time to 11 min. High-SNR reference images were reconstructed using GRAPPA [3] for the five different contrasts separately. To train/validate/test the network, 8/1/1 subjects were used, respectively. The network had access to 512 slice groups during the training. The data were retrospectively subsampled to R = 4 × 3, corresponding to a 1:50 min quantitative exam, where 5-cycle cosine and sine wave-encodings were applied in the phase- and partition-encoding directions with 16.5 mT/m of Gmax at 347 Hz/pixel bandwidth. To take into account the signal intensity differences between the contrasts, the contrast images were weighted by global scaling factors [3.26, 2.36, 1.57, 1.12, 1], calculated from the L2 signal norm ratio of each contrast image in the training database. This allowed each contrast to contribute to the loss function in similar amounts. We applied CAIPI sampling patterns across the multiple contrasts to use the complementary k-space information from the other contrasts in the reconstructions using MoDL and wave-MoDL. A fixed sampling pattern was applied to the five different contrasts for SENSE and wave-CAIPI, since these algorithms reconstruct each image independently. Employing wave gradients reduces the average g-factor by 20% and improves the maximum g-factor by 2.5-fold with respect to SENSE, as shown in Figure 2.
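One plausible way to obtain global scaling factors of the kind described above is to divide the largest per-contrast L2 norm by each contrast's own norm, so that the strongest contrast receives a weight of 1. This is an assumption about how the ratio is formed; the function name and array layout are hypothetical.

```python
import numpy as np


def contrast_scaling(images):
    """Per-contrast weights from L2 norms.

    images has shape (n_contrasts, ...); the weight of each contrast is the
    largest contrast norm divided by that contrast's norm.
    """
    images = np.asarray(images)
    flat = images.reshape(images.shape[0], -1)
    norms = np.sqrt(np.sum(np.abs(flat) ** 2, axis=1))
    return norms.max() / norms
```

Multiplying each contrast by its weight equalizes the L2 norms, so a mean squared error loss is not dominated by the brightest contrast.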

4. Results

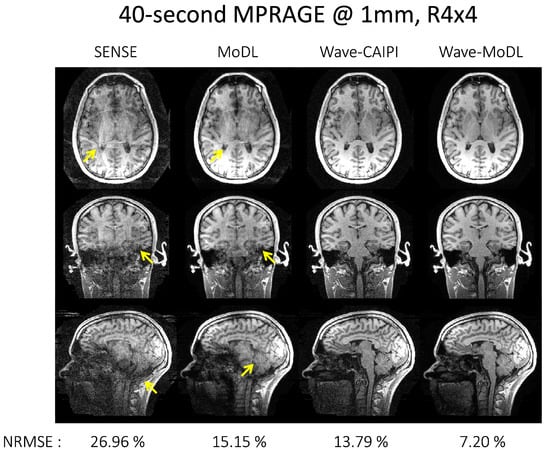

4.1. MPRAGE at R = 4 × 4

Figure 3 shows the reconstruction results at R = 4 × 4 on the MPRAGE data. SENSE suffered from residual folding artifacts and noise amplification. MoDL significantly mitigated the noise amplification and reduced NRMSE by 1.78-fold with respect to SENSE, but still suffered from folding artifacts (as shown by the arrows). Wave-CAIPI reduced NRMSE by 1.96-fold with respect to SENSE. Wave-MoDL further reduced the noise amplification and decreased NRMSE to 7.20%, which is a 1.92-fold improvement with respect to wave-CAIPI and a 2.10-fold improvement over standard MoDL. Wave-MoDL thus provided high-quality image reconstruction at R = 4 × 4, allowing the acquisition of whole-brain structural data in 40 s at 1 mm isotropic resolution.

Figure 3.

40 s 1 mm MPRAGE image reconstruction at R = 4 × 4. The arrows point to the folding artifacts and noise amplification.
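The NRMSE values in this section can be related to one another with a few lines of arithmetic. The sketch below assumes the common definition of NRMSE as the L2 norm of the error divided by the L2 norm of the reference (the exact normalization used in this work is not stated), and back-calculates the approximate NRMSE of the other methods from the reported 7.20% and fold improvements. These implied values are rough estimates, not reported results.

```python
import numpy as np


def nrmse(recon, reference):
    """Normalized root-mean-square error, assumed as ||recon - ref|| / ||ref||."""
    recon = np.asarray(recon)
    reference = np.asarray(reference)
    return np.linalg.norm((recon - reference).ravel()) / np.linalg.norm(reference.ravel())


# Implied NRMSE (%) from the reported fold improvements at R = 4 x 4.
wave_modl = 7.20
wave_caipi = wave_modl * 1.92           # wave-MoDL is 1.92-fold better
modl = wave_modl * 2.10                 # wave-MoDL is 2.10-fold better
sense_via_modl = modl * 1.78            # MoDL is 1.78-fold better than SENSE
sense_via_wave = wave_caipi * 1.96      # wave-CAIPI is 1.96-fold better than SENSE
```

The two routes to the SENSE value agree to within a few tenths of a percentage point, which suggests the reported ratios are mutually consistent up to rounding.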

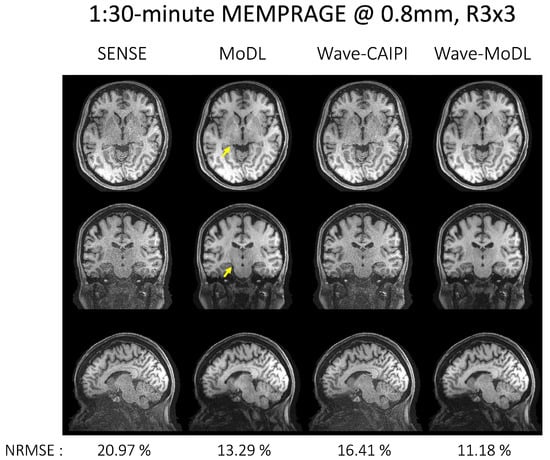

4.2. MEMPRAGE at R = 3 × 3

Figure 4 shows the reconstruction results of MEMPRAGE at 0.8 mm isotropic resolution and R = 3 × 3. The images shown are the root mean squared (RMS) combination of the four echoes. CAIPI sampling patterns across the echoes were applied to MoDL and wave-MoDL for better use of the multiecho information, which in turn improves the reconstruction. In contrast, fixed sampling patterns were applied in the SENSE and wave-CAIPI reconstructions, since these algorithms reconstruct each echo image independently. Figure S1 shows the sampling patterns and each echo image before the combination. SENSE suffered from high noise amplification. Although MoDL mitigated the noise amplification, some folding artifacts remained (as pointed out by the arrows). Because wave-encoding was limited by the high receiver bandwidth [12], wave-CAIPI also suffered from some noise amplification and resulted in an even higher NRMSE than Cartesian MoDL. Wave-MoDL shows improved reconstructions and reduced NRMSE to 11.18%. The image reconstruction at R = 3 × 2 on the same database is shown in Figures S2 and S3.

Figure 4.

The proposed method on the MEMPRAGE database at R = 3 × 3-fold and 0.8 mm isotropic voxel resolution. Echo images were combined by calculating the root mean squared image. NRMSEs were calculated for the entire testing database.
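The root mean squared echo combination mentioned above amounts to a one-line operation. A minimal sketch, assuming the echoes are stacked along the first array axis (the function name is hypothetical):

```python
import numpy as np


def rms_combine(echoes, axis=0):
    """Root-mean-squared combination of echo images along the given axis."""
    echoes = np.asarray(echoes)
    return np.sqrt(np.mean(np.abs(echoes) ** 2, axis=axis))
```

Taking the magnitude before squaring makes the combination insensitive to phase differences between echoes.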

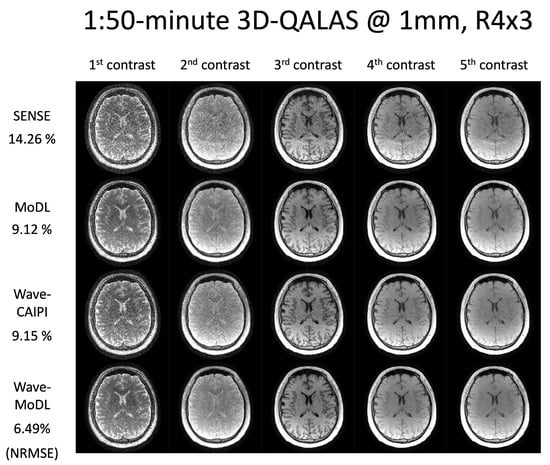

4.3. 3D-QALAS at R = 4 × 3

Figure 5 shows the multicontrast images of the five 3D-QALAS readouts, reconstructed using SENSE, MoDL, wave-CAIPI, and wave-MoDL at R = 4 × 3-fold acceleration. SENSE suffered from aliasing artifacts and noise amplification, while MoDL mitigated noise amplification and reduced the NRMSE significantly. Wave-CAIPI also improved the reconstruction and reduced the NRMSE to 9.15%. Wave-MoDL further reduced NRMSE to 6.49%, which is a 1.4-fold improvement with respect to both standard Cartesian MoDL and wave-CAIPI.

Figure 5.

Multicontrast image reconstruction using SENSE, MoDL, wave-CAIPI, and wave-MoDL. The results are 1:50 min scans at R = 4 × 3 and 1 mm isotropic resolution.
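The quoted 1:50 min scan time follows from the 11 min reference acquisition at R = 2 under the simplifying assumption that scan time scales inversely with the acceleration factor (fixed overheads such as preparation or calibration are ignored). The helper names below are hypothetical.

```python
def scaled_scan_time(ref_seconds, ref_r, target_r):
    """Scan time at target_r, assuming time is inversely proportional to R."""
    return ref_seconds * ref_r / target_r


def as_min_sec(seconds):
    """Format a duration in seconds as m:ss."""
    minutes, secs = divmod(int(round(seconds)), 60)
    return f"{minutes}:{secs:02d}"


qalas_r12 = scaled_scan_time(11 * 60, 2, 12)   # 110 s
label = as_min_sec(qalas_r12)                  # "1:50"
```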

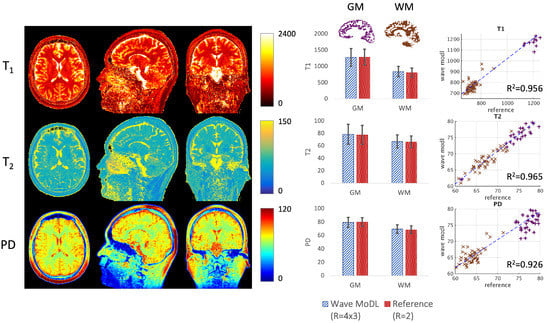

Figure 6 shows the T1, T2, and PD quantification results. To evaluate the accuracy, we calculated the mean and standard deviation in white matter (WM, brown) and gray matter (GM, purple) segmented by FreeSurfer [41,42]. In the last column, we selected 50 brain regions, each including voxels in WM and GM, and plotted the quantified values from the wave-MoDL results against those from the reference R = 2 acquisition. The plots demonstrate that the estimated T1, T2, and PD values are well aligned with the reference QALAS acquisition. In GM, the estimated T1, T2, and PD were 1214 ± 477, 82 ± 50, and 76 ± 10, respectively, whereas the reference T1, T2, and PD were 1190 ± 474, 83 ± 61, and 76 ± 9, respectively. In WM, the estimated T1, T2, and PD were 839 ± 162, 67 ± 10, and 69 ± 6, respectively, whereas the reference T1, T2, and PD were 804 ± 162, 66 ± 9, and 68 ± 6, respectively. The coefficients of determination ($R^2$) were 0.956, 0.965, and 0.926 for the T1, T2, and PD maps, respectively. As shown in Figure 5, wave-MoDL mitigated the noise amplification in the parameter maps as well.

Figure 6.

T1, T2, and PD maps were calculated using the wave-MoDL results. The last column shows the correlation between the reference (R = 2) and the quantitative values of wave-MoDL (R = 4 × 3) in the randomly selected boxes in gray matter (purple) and white matter (brown).
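An agreement score between regional values from the accelerated and reference maps can be computed as below. This sketch assumes the score is the squared Pearson correlation between the two sets of regional means; the text does not specify which definition was used, and the function name is hypothetical.

```python
import numpy as np


def r_squared(reference, estimate):
    """Squared Pearson correlation between reference and estimated values."""
    reference = np.asarray(reference, dtype=float).ravel()
    estimate = np.asarray(estimate, dtype=float).ravel()
    r = np.corrcoef(reference, estimate)[0, 1]
    return r ** 2
```

A value close to 1 indicates that the accelerated estimates follow the reference values linearly across regions; it does not by itself rule out a constant bias, which is why the mean values in WM and GM are reported alongside.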

On the QALAS database, using an NVIDIA Quadro RTX 5000 GPU, the whole-brain image reconstructions took 7:35, 8:11, 14:35, and 15:26 min for SENSE, MoDL, wave-CAIPI, and wave-MoDL, respectively. Most of the image reconstruction time came from the DC layers, whereas the unrolled networks added 36 and 51 s for the Cartesian and wave-encoded reconstructions, respectively.

5. Discussion

We introduced the wave-MoDL acquisition/reconstruction strategy, which synergistically combines wave-encoding with unrolled deep learning reconstruction, and showed markedly improved image quality at high acceleration rates. In vivo experiments show its ability to provide anatomical images in 40 s using a 16-fold accelerated MPRAGE scan, high-fidelity submillimeter images in 1:30 min using MEMPRAGE, and quantitative images using a 1:50 min 3D-QALAS acquisition. Although the current slice-group implementation cannot use information from adjacent slices because of limited GPU memory, it significantly reduces the memory footprint and facilitates training with high channel count data.

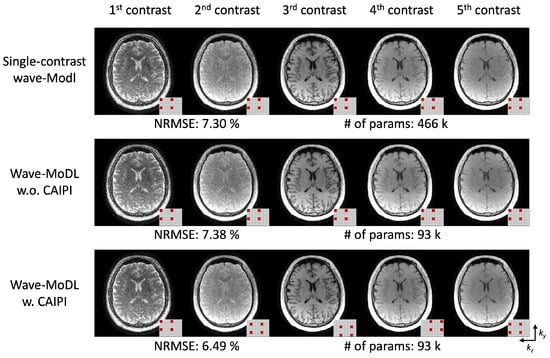

We employed CAIPI sampling patterns across the multiple echoes/contrasts to improve the reconstruction by leveraging the similarity of the multiple images [44]. To evaluate the efficiency of multicontrast information use, we reconstructed the images with and without CAIPI sampling patterns on the QALAS data, and trained five independent wave-MoDL networks to reconstruct each image separately, as shown in Figure 7. This figure demonstrates that the use of CAIPI sampling patterns helps reconstruct the images using shared information and reduces NRMSE from 7.38% to 6.49%. Wave-MoDL for multicontrast reconstruction without the CAIPI sampling patterns has comparable NRMSE with respect to independently trained networks for each echo while reducing the number of network parameters by ~5-fold. Wave-MoDL with CAIPI sampling patterns across contrasts improved the reconstruction while using a small number of network parameters, as the network can obtain complementary information from each echo image.

Figure 7.

(top) Five individual single-contrast wave-MoDL reconstructions for each contrast and (middle, bottom) joint-wave-MoDL with and without CAIPI sampling patterns across contrasts. The small pattern in the bottom right corner of each image shows the sampling in the phase- and partition-encoding directions.

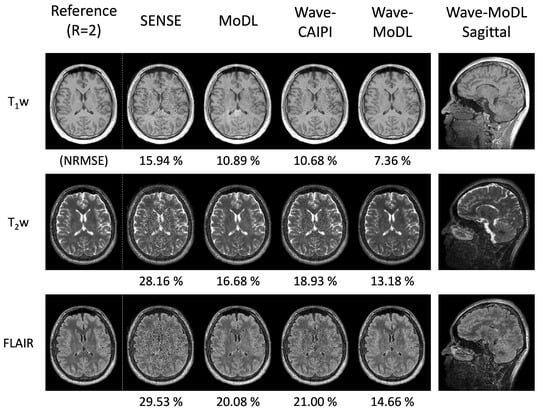

The 3D-QALAS sequence can estimate T1, T2, and proton density parameter maps; thus, additional contrast-weighted images can be synthesized from the quantitative parameter maps. Figure 8 shows the synthesized T1w, T2w, and FLAIR images using the quantified T1, T2, and PD maps obtained using the SyMRI software tool. The results demonstrate that wave-MoDL can mitigate the noise amplification and provide synthetic images that match the reference R = 2 data with higher fidelity than SENSE, MoDL, and wave-CAIPI. The synthesized PDw, double inversion recovery (DIR), and phase-sensitive inversion recovery (PSIR) images are shown in Figure S4.

Figure 8.

The synthesized T1w, T2w, and FLAIR images at R = 4 × 3-fold acceleration.

The proposed wave-MoDL reconstruction method uses unrolled network constraints and successfully improved image quality at high acceleration rates. In Figure S5, on the MEMPRAGE database, we explored other image reconstruction constraints: the low-rank property over multiple echoes using the 3D wave-LORAKS strategy [11], and 2D U-net denoisers whose inputs are four-echo wave-CAIPI images. Using a mean squared error loss, we trained two U-net denoisers: a typical U-net and a small U-net with a number of network parameters similar to that of wave-MoDL. Wave-LORAKS improved both NRMSE and SSIM relative to the wave-CAIPI result, but is still noisier than wave-MoDL. The first U-net denoiser shows the best NRMSE on root mean squared images, but uses 378 times more network parameters than wave-MoDL. The second U-net denoiser comprises 15% more network parameters than wave-MoDL and shows comparable NRMSE. However, the SSIMs of the U-net denoisers are worse than the results using the other constraints. Wave-MoDL presents the best SSIM and a better NRMSE improvement per network parameter.

Because the networks were trained using databases that included only healthy subjects, the trained networks' ability to generalize could be improved by incorporating data from pathological cases. Scan-specific models might help mitigate this concern about generalization to unseen pathologies [27,46]. Recent work on wave-spark achieved a 1.2-fold NRMSE reduction with respect to wave-CAIPI on R = 15 MPRAGE data [31], whereas our wave-MoDL obtained a 1.9-fold NRMSE reduction with respect to wave-CAIPI for R = 16 MPRAGE. This suggests that database-trained models may provide higher performance gains than scan-specific approaches, albeit at the potential cost of reduced ability to generalize to unseen pathologies [47].

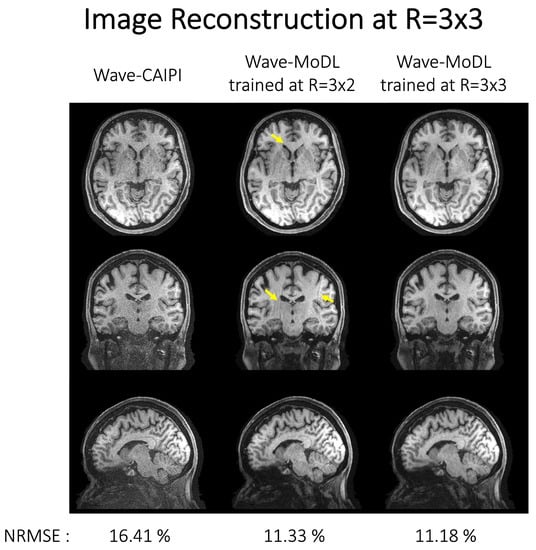

Because we train the network at specific reduction factors and with specific CAIPI sampling patterns, benefits such as the NRMSE reduction can diminish at different accelerations and/or CAIPI sampling patterns. In Figure 9, we reconstructed HCP images at R = 3 × 3 using wave-CAIPI, wave-MoDL trained with data at a different acceleration factor of R = 3 × 2, and wave-MoDL trained with data at R = 3 × 3. Even though the wave-MoDL networks were trained at a different reduction factor (R = 3 × 2) and with different sampling patterns, they could denoise the image and reduce NRMSE with respect to wave-CAIPI. However, they also generated amplified folding artifacts, as pointed out by the arrows, which are not present in the standard wave-CAIPI reconstructions or in the wave-MoDL results trained with R = 3 × 3 data. The networks trained at the same reduction factor (R = 3 × 3) and sampling pattern show the best performance. Fine-tuning the network with data from a few subjects might be required to use a network trained at a different reduction factor or sampling pattern.

Figure 9.

Image reconstruction on the HCP database subsampled at R = 3 × 3 using (left) wave-CAIPI, (middle) wave-MoDL trained at R = 3 × 2, and (right) wave-MoDL trained at R = 3 × 3. NRMSEs were calculated for the entire testing database. The network did not generalize well and would need to be fine-tuned for a different reduction factor or sampling pattern.

6. Conclusions

We showed that our proposed wave-MoDL method enables highly accelerated MR scans that improve image fidelity and acquisition speed. Its application was demonstrated in MPRAGE, MEMPRAGE, and 3D-QALAS sequences. Joint-wave-MoDL was introduced to improve the reconstruction by employing the information from other echoes/contrasts using CAIPI sampling patterns. Extension of joint image reconstruction using wave-MoDL to 3D-QALAS enabled a high acceleration rate of R = 12 with a 32-channel coil array. This permitted a 1:50 min 3D-QALAS acquisition at 1 mm isotropic resolution and simultaneous T1, T2, and PD quantification as well as the synthesis of contrast-weighted images with high fidelity.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bioengineering9120736/s1, Figure S1: Each echo image of wave-MoDL at R = 3 × 3-fold acceleration. Figure S2: The proposed method on the MEMPRAGE database at R = 3 × 2-fold and 0.8 mm isotropic voxel resolution. Echo images were combined with root mean squared. NRMSEs were calculated for the entire testing database. Figure S3: Each echo image of wave-MoDL at R = 3 × 2-fold acceleration. Figure S4: The synthesized double inversion recovery (DIR) and phase-sensitive inversion recovery (PSIR) images at R = 4 × 3-fold acceleration. Figure S5: Wave-CAIPI, wave-LORAKS, U-net denoisers, and wave-MoDL on the MEMPRAGE database at R = 3 × 3-fold and 0.8 mm isotropic voxel resolution. Echo images were combined with root mean squared. NRMSEs and SSIMs were calculated for the entire testing database.

Author Contributions

Conceptualization, J.C., B.G. and B.B.; methodology, J.C., B.G., T.H.K., I.C. and B.B.; software, J.C.; validation, J.C.; formal analysis, J.C. and B.G.; investigation, J.C., T.H.K., Q.T. and B.B.; resources, J.C. and R.F.; data curation, J.C. and R.F.; writing—original draft preparation, J.C.; writing—review and editing, B.G., T.H.K., Q.T., R.F., I.C. and B.B.; visualization, J.C.; supervision, B.B.; project administration, B.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by research grants NIH R01 EB028797, R03 EB031175, P41 EB030006, U01 AG052564, U01 AG052564-S1, U01 EB025162, and U01 EB026996, and the NVidia Corporation for computing support.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Massachusetts General Hospital (protocol code: 2018P002270; date of approval: 9 May 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The MPRAGE and 3D-QALAS data presented in this study are available on request from the corresponding author.

Acknowledgments

We are grateful for access to data collected as part of the HCP-Aging study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nishimura, D.G. Principles of Magnetic Resonance Imaging; Stanford University: Stanford, CA, USA, 1996.

- Pruessmann, K.P.; Weiger, M.; Scheidegger, M.B.; Boesiger, P. SENSE: Sensitivity encoding for fast MRI. Magn. Reson. Med. 1999, 42, 952–962.

- Griswold, M.A.; Jakob, P.M.; Heidemann, R.M.; Nittka, M.; Jellus, V.; Wang, J.; Kiefer, B.; Haase, A. Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magn. Reson. Med. 2002, 47, 1202–1210.

- Uecker, M.; Lai, P.; Murphy, M.J.; Virtue, P.; Elad, M.; Pauly, J.M.; Vasanawala, S.S.; Lustig, M. ESPIRiT—An eigenvalue approach to autocalibrating parallel MRI: Where SENSE meets GRAPPA. Magn. Reson. Med. 2014, 71, 990–1001.

- Breuer, F.A.; Blaimer, M.; Heidemann, R.M.; Mueller, M.F.; Griswold, M.A.; Jakob, P.M. Controlled aliasing in parallel imaging results in higher acceleration (CAIPIRINHA) for multi-slice imaging. Magn. Reson. Med. 2005, 53, 684–691.

- Breuer, F.A.; Blaimer, M.; Mueller, M.F.; Seiberlich, N.; Heidemann, R.M.; Griswold, M.A.; Jakob, P.M. Controlled aliasing in volumetric parallel imaging (2D CAIPIRINHA). Magn. Reson. Med. 2006, 55, 549–556.

- Bilgic, B.; Gagoski, B.A.; Cauley, S.F.; Fan, A.P.; Polimeni, J.R.; Grant, P.E.; Wald, L.L.; Setsompop, K. Wave-CAIPI for highly accelerated 3D imaging. Magn. Reson. Med. 2015, 73, 2152–2162.

- Gagoski, B.A.; Bilgic, B.; Eichner, C.; Bhat, H.; Grant, P.E.; Wald, L.L.; Setsompop, K. RARE/turbo spin echo imaging with simultaneous multislice Wave-CAIPI. Magn. Reson. Med. 2015, 73, 929–938.

- Chen, F.; Taviani, V.; Tamir, J.I.; Cheng, J.Y.; Zhang, T.; Song, Q.; Hargreaves, B.A.; Pauly, J.M.; Vasanawala, S.S. Self-calibrating wave-encoded variable-density single-shot fast spin echo imaging. J. Magn. Reson. Imaging 2018, 47, 954–966.

- Polak, D.; Setsompop, K.; Cauley, S.F.; Gagoski, B.A.; Bhat, H.; Maier, F.; Bachert, P.; Wald, L.L.; Bilgic, B. Wave-CAIPI for highly accelerated MP-RAGE imaging. Magn. Reson. Med. 2018, 79, 401–406.

- Kim, T.H.; Bilgic, B.; Polak, D.; Setsompop, K.; Haldar, J.P. Wave-LORAKS: Combining wave encoding with structured low-rank matrix modeling for more highly accelerated 3D imaging. Magn. Reson. Med. 2019, 81, 1620–1633.

- Cho, J.; Liao, C.; Tian, Q.; Zhang, Z.; Xu, J.; Lo, W.C.; Poser, B.A.; Stenger, V.A.; Stockmann, J.; Setsompop, K. Highly accelerated EPI with wave encoding and multi-shot simultaneous multislice imaging. Magn. Reson. Med. 2022, 88, 1180–1197.

- Lustig, M.; Donoho, D.; Pauly, J.M. Sparse MRI: The application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 2007, 58, 1182–1195.

- Lustig, M.; Donoho, D.L.; Santos, J.M.; Pauly, J.M. Compressed sensing MRI. IEEE Signal Process. Mag. 2008, 25, 72–82.

- Haldar, J.P. Low-rank modeling of local k-space neighborhoods (LORAKS) for constrained MRI. IEEE Trans. Med. Imaging 2013, 33, 668–681.

- Shin, P.J.; Larson, P.E.; Ohliger, M.A.; Elad, M.; Pauly, J.M.; Vigneron, D.B.; Lustig, M. Calibrationless parallel imaging reconstruction based on structured low-rank matrix completion. Magn. Reson. Med. 2014, 72, 959–970.

- Jin, K.H.; Lee, D.; Ye, J.C. A general framework for compressed sensing and parallel MRI using annihilating filter based low-rank Hankel matrix. IEEE Trans. Comput. Imaging 2016, 2, 480–495.

- Mani, M.; Jacob, M.; Kelley, D.; Magnotta, V. Multi-shot sensitivity-encoded diffusion data recovery using structured low-rank matrix completion (MUSSELS). Magn. Reson. Med. 2017, 78, 494–507.

- Lustig, M.; Pauly, J.M. SPIRiT: Iterative self-consistent parallel imaging reconstruction from arbitrary k-space. Magn. Reson. Med. 2010, 64, 457–471.

- Murphy, M.; Alley, M.; Demmel, J.; Keutzer, K.; Vasanawala, S.; Lustig, M. Fast ℓ1-SPIRiT compressed sensing parallel imaging MRI: Scalable parallel implementation and clinically feasible runtime. IEEE Trans. Med. Imaging 2012, 31, 1250–1262.

- Ongie, G.; Jacob, M. Off-the-grid recovery of piecewise constant images from few Fourier samples. SIAM J. Imaging Sci. 2016, 9, 1004–1041.

- Haldar, J.P.; Zhuo, J. P-LORAKS: Low-rank modeling of local k-space neighborhoods with parallel imaging data. Magn. Reson. Med. 2016, 75, 1499–1514.

- Kwon, K.; Kim, D.; Park, H. A parallel MR imaging method using multilayer perceptron. Med. Phys. 2017, 44, 6209–6224.

- Hammernik, K.; Klatzer, T.; Kobler, E.; Recht, M.P.; Sodickson, D.K.; Pock, T.; Knoll, F. Learning a variational network for reconstruction of accelerated MRI data. Magn. Reson. Med. 2018, 79, 3055–3071.

- Han, Y.; Sunwoo, L.; Ye, J.C. k-Space Deep Learning for Accelerated MRI. IEEE Trans. Med. Imaging 2020, 39, 377–386.

- Eo, T.; Jun, Y.; Kim, T.; Jang, J.; Lee, H.J.; Hwang, D. KIKI-net: Cross-domain convolutional neural networks for reconstructing undersampled magnetic resonance images. Magn. Reson. Med. 2018, 80, 2188–2201.

- Akçakaya, M.; Moeller, S.; Weingärtner, S.; Uğurbil, K. Scan-specific robust artificial-neural-networks for k-space interpolation (RAKI) reconstruction: Database-free deep learning for fast imaging. Magn. Reson. Med. 2019, 81, 439–453.

- Kim, T.H.; Garg, P.; Haldar, J.P. LORAKI: Autocalibrated Recurrent Neural Networks for Autoregressive MRI Reconstruction in k-Space. arXiv 2019, arXiv:1904.09390.

- Polak, D.; Cauley, S.; Bilgic, B.; Gong, E.; Bachert, P.; Adalsteinsson, E.; Setsompop, K. Joint multi-contrast variational network reconstruction (jVN) with application to rapid 2D and 3D imaging. Magn. Reson. Med. 2020, 84, 1456–1469.

- Beker, O.; Liao, C.; Cho, J.; Zhang, Z.; Setsompop, K.; Bilgic, B. Scan-specific, Parameter-free Artifact Reduction in K-space (SPARK). arXiv 2019, arXiv:1911.07219.

- Arefeen, Y.; Beker, O.; Cho, J.; Yu, H.; Adalsteinsson, E.; Bilgic, B. Scan-specific artifact reduction in k-space (SPARK) neural networks synergize with physics-based reconstruction to accelerate MRI. Magn. Reson. Med. 2022, 87, 764–780.

- Aggarwal, H.K.; Mani, M.P.; Jacob, M. MoDL: Model Based Deep Learning Architecture for Inverse Problems. IEEE Trans. Med. Imaging 2018, 38, 394–405.

- Aggarwal, H.K.; Mani, M.P.; Jacob, M. MoDL-MUSSELS: Model-Based Deep Learning for Multi-Shot Sensitivity Encoded Diffusion MRI. IEEE Trans. Med. Imaging 2020, 39, 1268–1277.

- Seiler, A.; Nöth, U.; Hok, P.; Reiländer, A.; Maiworm, M.; Baudrexel, S.; Meuth, S.; Rosenow, F.; Steinmetz, H.; Wagner, M.; et al. Multiparametric quantitative MRI in neurological diseases. Front. Neurol. 2021, 12, 640239.

- Kvernby, S.; Warntjes, M.J.B.; Haraldsson, H.; Carlhäll, C.J.; Engvall, J.; Ebbers, T. Simultaneous three-dimensional myocardial T1 and T2 mapping in one breath hold with 3D-QALAS. J. Cardiovasc. Magn. Reson. 2014, 16, 102.

- Kvernby, S.; Warntjes, M.; Engvall, J.; Carlhäll, C.J.; Ebbers, T. Clinical feasibility of 3D-QALAS – Single breath-hold 3D myocardial T1- and T2-mapping. Magn. Reson. Imaging 2017, 38, 13–20.

- Fujita, S.; Hagiwara, A.; Hori, M.; Warntjes, M.; Kamagata, K.; Fukunaga, I.; Andica, C.; Maekawa, T.; Irie, R. Three-dimensional high-resolution simultaneous quantitative mapping of the whole brain with 3D-QALAS: An accuracy and repeatability study. Magn. Reson. Imaging 2019, 63, 235–243.

- Fujita, S.; Hagiwara, A.; Takei, N.; Hwang, K.P.; Fukunaga, I.; Kato, S.; Andica, C.; Kamagata, K.; Yokoyama, K.; Hattori, N.; et al. Accelerated Isotropic Multiparametric Imaging by High Spatial Resolution 3D-QALAS With Compressed Sensing: A Phantom, Volunteer, and Patient Study. Investig. Radiol. 2021, 56, 292–300.

- Cho, J.; Tian, Q.; Frost, R.; Chatnuntawech, I.; Bilgic, B. Wave-encoded model-based deep learning with joint reconstruction and segmentation. In Proceedings of the 29th Scientific Meeting of ISMRM, Online Conference, 15–20 May 2021; p. 1982.

- Van der Kouwe, A.J.; Benner, T.; Salat, D.H.; Fischl, B. Brain morphometry with multiecho MPRAGE. Neuroimage 2008, 40, 559–569.

- Dale, A.M.; Fischl, B.; Sereno, M.I. Cortical Surface-Based Analysis: I. Segmentation and Surface Reconstruction. Neuroimage 1999, 9, 179–194.

- Desikan, R.S.; Ségonne, F.; Fischl, B.; Quinn, B.T.; Dickerson, B.C.; Blacker, D.; Buckner, R.L.; Dale, A.M.; Maguire, R.P.; Hyman, B.T. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 2006, 31, 968–980.

- Polak, D.; Cauley, S.; Huang, S.Y.; Longo, M.G.; Conklin, J.; Bilgic, B.; Ohringer, N.; Raithel, E.; Bachert, P.; Wald, L.L.; et al. Highly-accelerated volumetric brain examination using optimized wave-CAIPI encoding. J. Magn. Reson. Imaging 2019, 50, 961–974.

- Bilgic, B.; Kim, T.H.; Liao, C.; Manhard, M.K.; Wald, L.L.; Haldar, J.P.; Setsompop, K. Improving parallel imaging by jointly reconstructing multi-contrast data. Magn. Reson. Med. 2018, 80, 619–632.

- Frost, R.; Tisdall, M.D.; Hoffmann, M.; Fischl, B.; Salat, D.; van der Kouwe, A.J. Scan-specific assessment of vNav motion artifact mitigation in the HCP Aging study using reverse motion correction. In Proceedings of the 28th Annual Meeting of the International Society of Magnetic Resonance in Medicine, Online Conference, 8–14 August 2020.

- Yaman, B.; Hosseini, S.A.H.; Moeller, S.; Ellermann, J.; Uğurbil, K.; Akçakaya, M. Self-supervised learning of physics-guided reconstruction neural networks without fully sampled reference data. Magn. Reson. Med. 2020, 84, 3172–3191.

- Muckley, M.J.; Riemenschneider, B.; Radmanesh, A.; Kim, S.; Jeong, G.; Ko, J.; Jun, Y.; Shin, H.; Hwang, D.; Mostapha, M. Results of the 2020 fastMRI challenge for machine learning MR image reconstruction. IEEE Trans. Med. Imaging 2021, 40, 2306–2317.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).