Skin Cancer Image Classification Using Artificial Intelligence Strategies: A Systematic Review

Abstract

:1. Introduction

2. Methods

2.1. Information Sources

2.2. Eligibility Criteria and Screening

3. Results

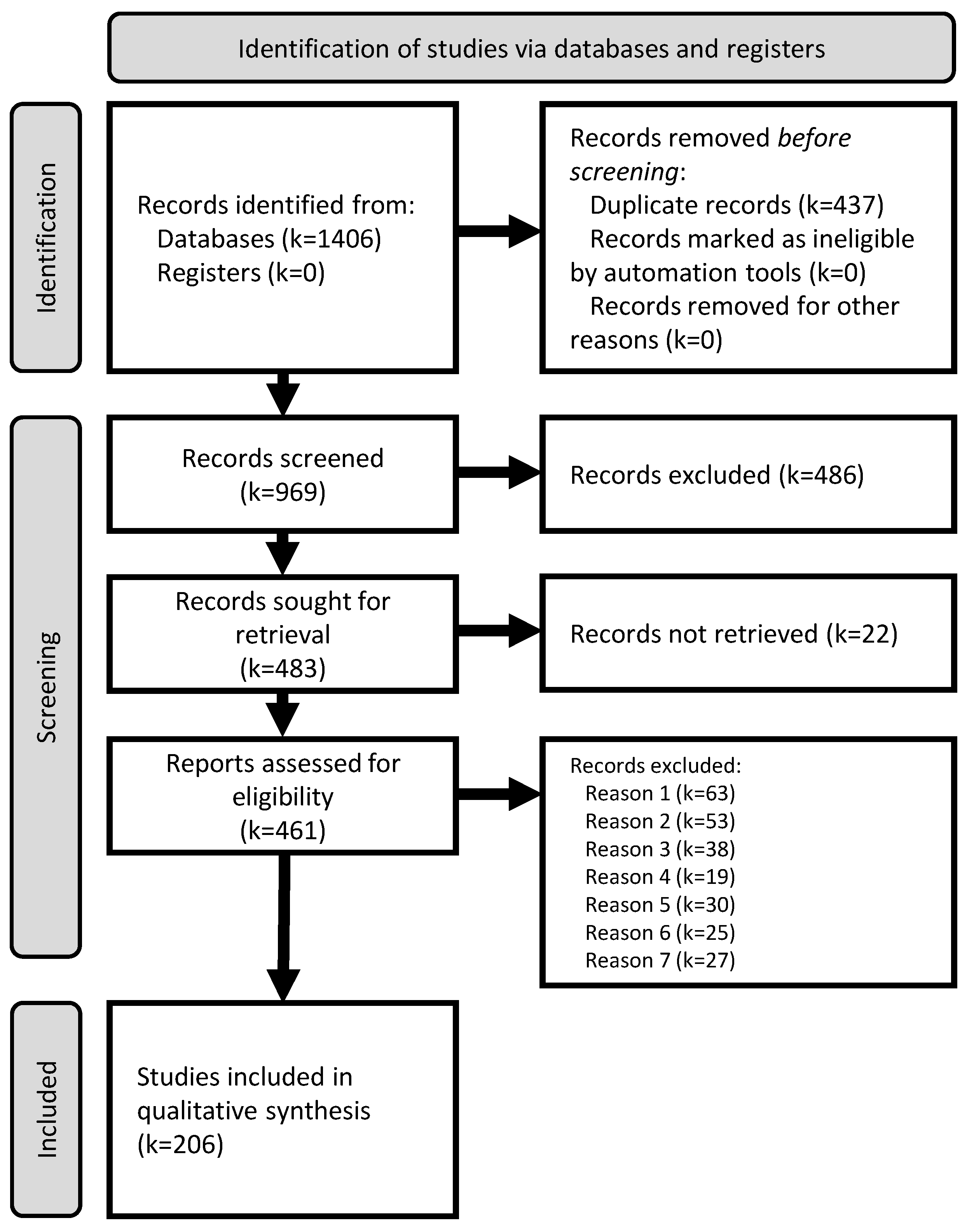

3.1. Study Selection

3.2. Qualitative Synthesis

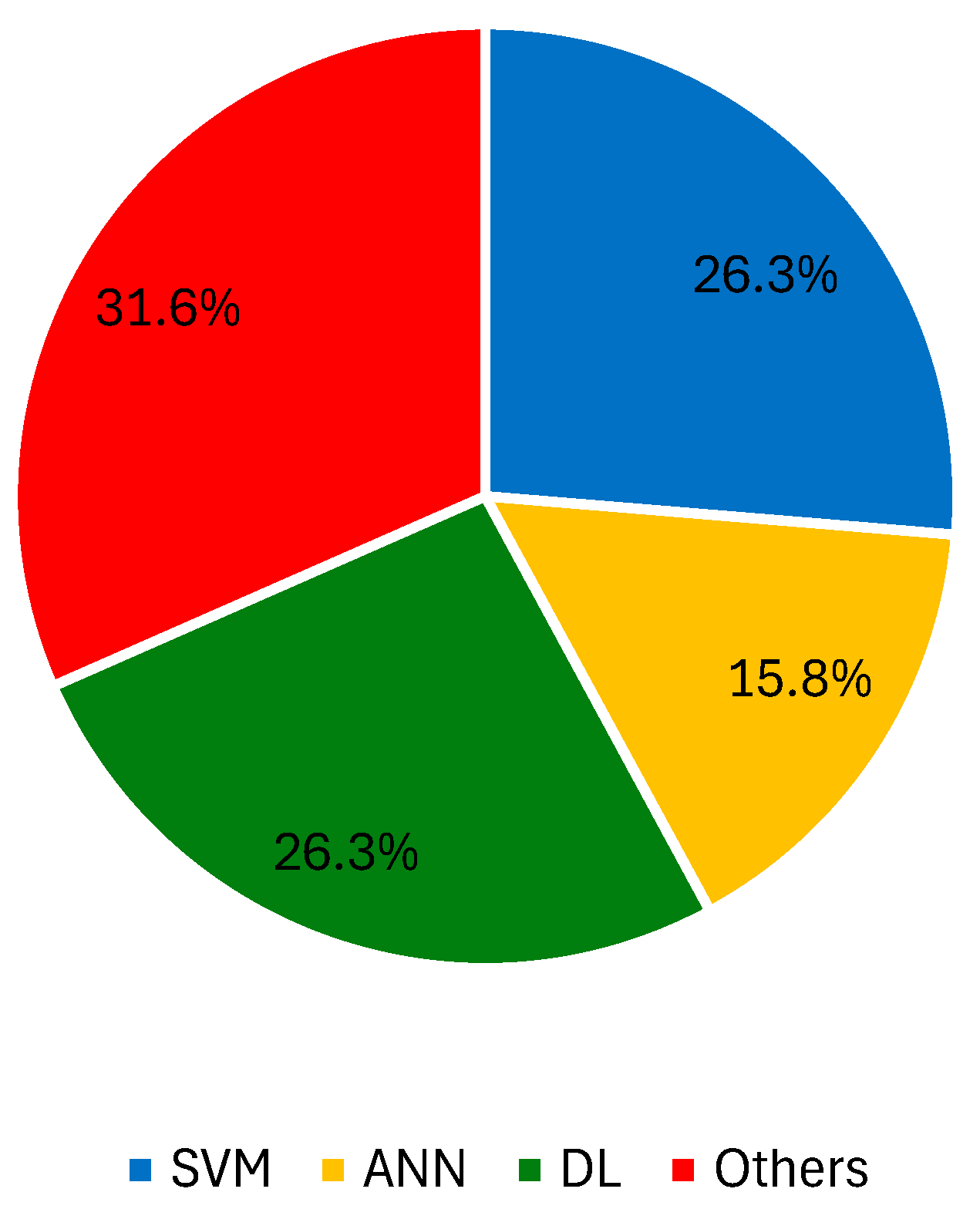

3.2.1. Digital Dermoscopy Imaging

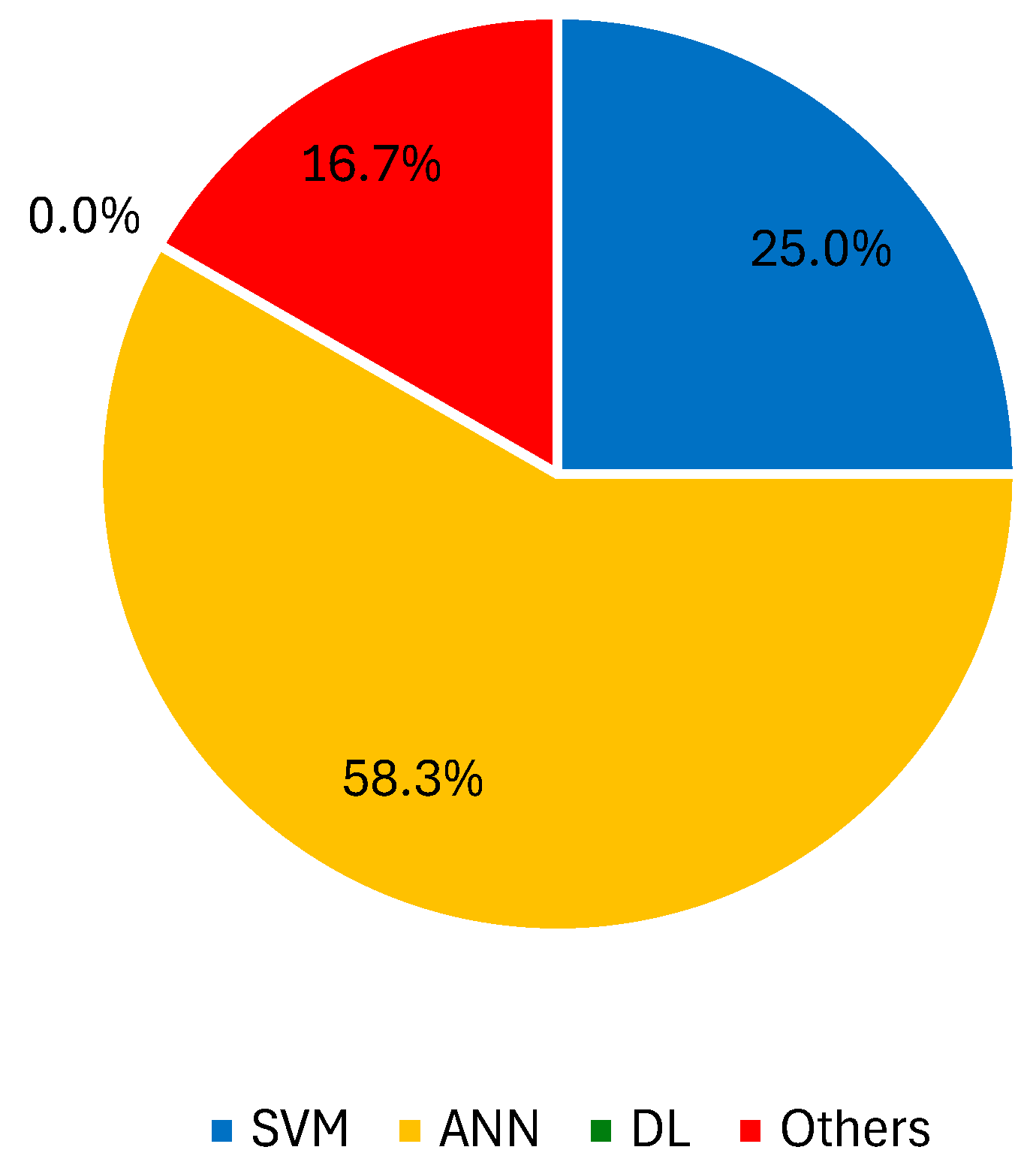

3.2.2. Microscopy Imaging Techniques

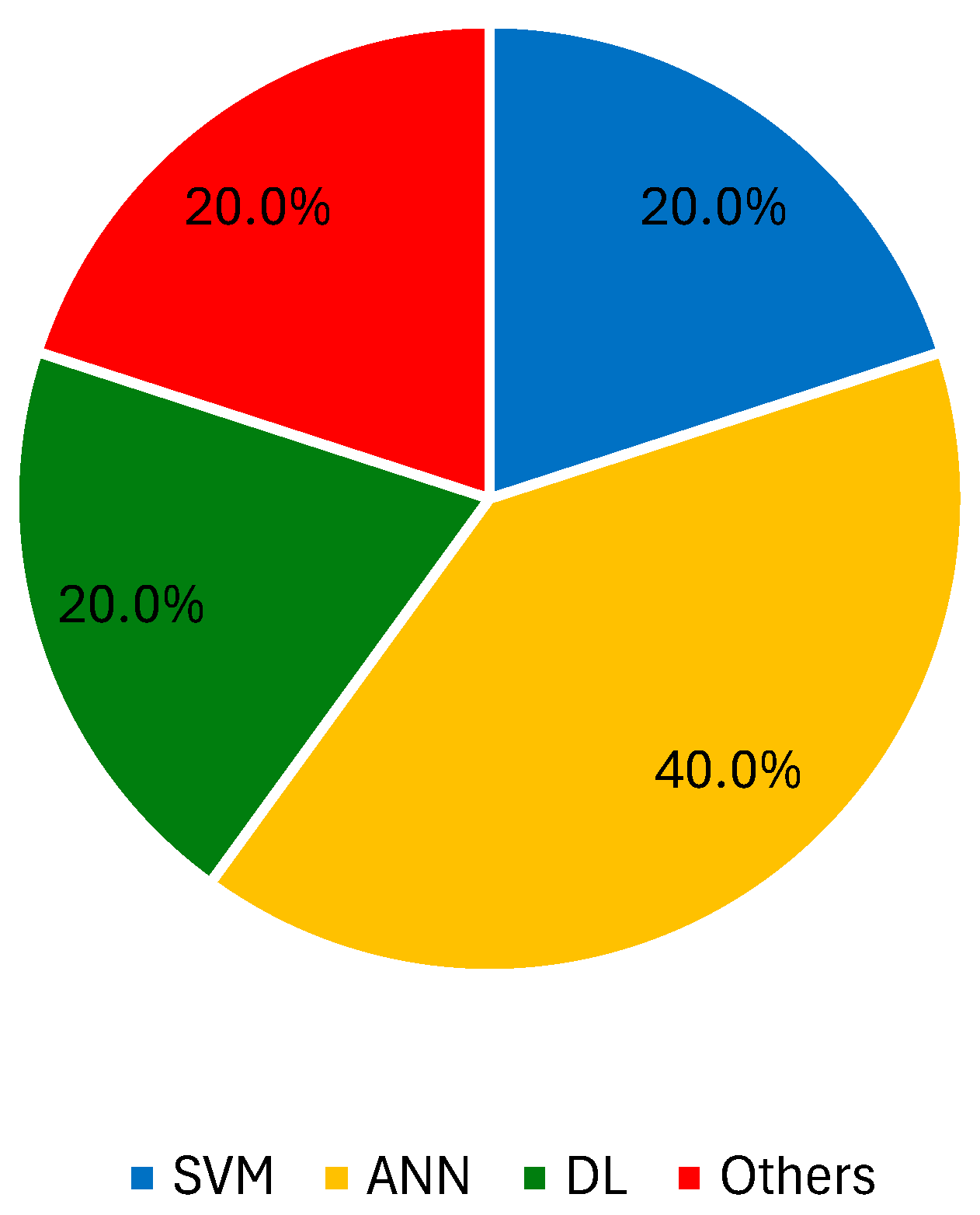

3.2.3. Spectroscopy Imaging Techniques

3.2.4. Macroscopy Imaging

3.2.5. Other Imaging Modalities

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Squamous cell carcinoma | SCC |

| Basal cell carcinoma | BCC |

| Artificial intelligence | AI |

| Infrared thermal | IRT |

| Machine learning | ML |

| Preferred reporting items for systematic reviews and meta-analyses | PRISMA |

| International database of prospectively registered systematic reviews in health and social care, welfare, public health, education, crime, justice, and international development, where there is a health-related outcome | PROSPERO |

| Academic citation indexing and search service, which is combined with web linking and provided by Thomson Reuters | ISI |

| Support vector machine | SVM |

| Local binary patterns | LBP |

| Accuracy | ACC |

| Sensitivity | SN |

| Specificity | SP |

| Artificial neural network | ANN |

| Bidimensional intrinsic mode function | BIMF |

| Generative adversarial network | GAN |

| Convolutional neural network | CNN |

| Deep learning | DL |

| Random forest | RF |

| k-nearest neighbor | kNN |

| Computer aided diagnostic | CAD |

| Genetic algorithm | GA |

| Gradient-weighted class activation mapping | Grad-CAM |

References

- Hunter, J.; Savin, J.; Dahl, M. Skin Tumours. In Clinical Dermatology, 3rd ed.; Blackwell Science: Hoboken, NJ, USA, 2002; pp. 253–282. ISBN 0632059168. [Google Scholar]

- Crowley, L.V. Neoplastic Disease. In An Introduction to Human Disease: Pathology and Pathophysiology Correlations, 9th ed.; Jones and Bartlett Learning: Burlington, MA, USA, 2013; pp. 192–209. [Google Scholar]

- The Global Cancer Observatory. 2022. Available online: https://gco.iarc.fr/ (accessed on 14 August 2024).

- Glazer, A.M.; Rigel, D.S.; Winkelmann, R.R.; Farberg, A.S. Clinical Diagnosis of Skin Cancer. Dermatol. Clin. 2017, 35, 409–416. [Google Scholar] [CrossRef] [PubMed]

- Massone, C.; Di Stefani, A.; Soyer, H.P. Dermoscopy for skin cancer detection. Curr. Opin. Oncol. 2005, 17, 147–153. [Google Scholar] [CrossRef] [PubMed]

- Apalla, Z.; Lallas, A.; Sotiriou, E.; Lazaridou, E.; Ioannides, D. Managing Skin Cancer; Springer: Berlin/Heidelberg, Germany, 2010; Volume 7, ISBN 978-3-540-79346-5. [Google Scholar]

- MacFarlane, D.F. Biopsy Techniques and Interpretation. In Skin Cancer Management: A Practical Approach; Springer: Berlin/Heidelberg, Germany, 2010; Chapter 10; pp. 1–8. ISBN 9780387884943. [Google Scholar]

- Heibel, H.D.; Hooey, L.; Cockerell, C.J. A Review of Noninvasive Techniques for Skin Cancer Detection in Dermatology. Am. J. Clin. Dermatol. 2020, 21, 513–524. [Google Scholar] [CrossRef] [PubMed]

- Narayanamurthy, V.; Padmapriya, P.; Noorasafrin, A.; Pooja, B.; Hema, K.; Firus Khan, A.Y.; Nithyakalyani, K.; Samsuri, F. Skin cancer detection using non-invasive techniques. RSC Adv. 2018, 8, 28095–28130. [Google Scholar] [CrossRef]

- Kulkarni, S.; Seneviratne, N.; Baig, M.S.; Khan, A.H.A. Artificial Intelligence in Medicine: Where Are We Now? Acad. Radiol. 2020, 27, 62–70. [Google Scholar] [CrossRef]

- Ngiam, K.Y.; Khor, I.W. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 2019, 20, e262–e273. [Google Scholar] [CrossRef]

- Cormier, J.; Voss, R.; Woods, T.; Cromwell, K.; Nelson, K. Improving outcomes in patients with melanoma: Strategies to ensure an early diagnosis. Patient Relat. Outcome Meas. 2015, 6, 229–242. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Tan, T.Y.; Zhang, L.; Jiang, M. An intelligent decision support system for skin cancer detection from dermoscopic images. In Proceedings of the 2016 12th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 2194–2199. [Google Scholar] [CrossRef]

- Jaworek-Korjakowska, J. Computer-Aided Diagnosis of Micro-Malignant Melanoma Lesions Applying Support Vector Machines. Biomed Res. Int. 2016, 2016, 4381972. [Google Scholar] [CrossRef]

- Poovizhi, S.; Ganesh Babu, T.R. An Efficient Skin Cancer Diagnostic System Using Bendlet Transform and Support Vector Machine. An. Acad. Bras. Cienc. 2020, 92, e20190554. [Google Scholar] [CrossRef]

- Sethy, P.K.; Behera, S.K.; Kannan, N. Categorization of Common Pigmented Skin Lesions (CPSL) using Multi-Deep Features and Support Vector Machine. J. Digit. Imaging 2022, 35, 1207–1216. [Google Scholar] [CrossRef] [PubMed]

- Yang, S.; Shu, C.; Hu, H.; Ma, G.; Yang, M. Dermoscopic Image Classification of Pigmented Nevus under Deep Learning and the Correlation with Pathological Features. Comput. Math. Methods Med. 2022, 2022, 9726181. [Google Scholar] [CrossRef]

- Wei, L.; Pan, S.X.; Nanehkaran, Y.A.; Rajinikanth, V. An Optimized Method for Skin Cancer Diagnosis Using Modified Thermal Exchange Optimization Algorithm. Comput. Math. Methods Med. 2021, 2021, 5527698. [Google Scholar] [CrossRef]

- Codella, N.C.F.; Nguyen, Q.B.; Pankanti, S.; Gutman, D.A.; Helba, B.; Halpern, A.C.; Smith, J.R. Deep learning ensembles for melanoma recognition in dermoscopy images. IBM J. Res. Dev. 2017, 61, 5:1–5:15. [Google Scholar] [CrossRef]

- Sabbaghi Mahmouei, S.; Aldeen, M.; Stoecker, W.V.; Garnavi, R. Biologically Inspired QuadTree Color Detection in Dermoscopy Images of Melanoma. IEEE J. Biomed. Health Inform. 2019, 23, 570–577. [Google Scholar] [CrossRef]

- Alamri, A.; Alsaeed, D. On the development of a skin cancer computer aided diagnosis system using support vector machine. Biosci. Biotechnol. Res. Commun. 2019, 12, 297–308. [Google Scholar] [CrossRef]

- Vasconcelos, M.J.M.; Rosado, L.; Ferreira, M. A new color assessment methodology using cluster-based features for skin lesion analysis. In Proceedings of the 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 25–29 May 2015; pp. 373–378. [Google Scholar] [CrossRef]

- Almansour, E.; Jaffar, M.A. Classification of Dermoscopic Skin Cancer Images Using Color and Hybrid Texture Features. IJCSNS Int. J. Comput. Sci. Netw. Secur. 2016, 16, 135–139. [Google Scholar]

- Khan, M.A.; Akram, T.; Sharif, M.; Saba, T.; Javed, K.; Lali, I.U.; Tanik, U.J.; Rehman, A. Construction of saliency map and hybrid set of features for efficient segmentation and classification of skin lesion. Microsc. Res. Tech. 2019, 82, 741–763. [Google Scholar] [CrossRef] [PubMed]

- Khan, M.A.; Akram, T.; Sharif, M.; Shahzad, A.; Aurangzeb, K.; Alhussein, M.; Haider, S.I.; Altamrah, A. An implementation of normal distribution based segmentation and entropy controlled features selection for skin lesion detection and classification. BMC Cancer 2018, 18, 638. [Google Scholar] [CrossRef]

- Rehman, A.; Khan, M.A.; Mehmood, Z.; Saba, T.; Sardaraz, M.; Rashid, M. Microscopic melanoma detection and classification: A framework of pixel-based fusion and multilevel features reduction. Microsc. Res. Tech. 2020, 83, 410–423. [Google Scholar] [CrossRef]

- Masood, A.; Al-Jumaily, A. SA-SVM based automated diagnostic system for skin cancer. In Proceedings of the Sixth International Conference on Graphic and Image Processing (ICGIP 2014), Beijing, China, 24–26 October 2014; SPIE: Sydney, Australia, 2015; Volume 9443. [Google Scholar] [CrossRef]

- Masood, A.; Al-Jumaily, A.; Anam, K. Self-supervised learning model for skin cancer diagnosis. In Proceedings of the 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, France, 22–24 April 2015. [Google Scholar] [CrossRef]

- Celebi, M.E.; Kingravi, H.A.; Uddin, B.; Iyatomi, H.; Aslandogan, Y.A.; Stoecker, W.V.; Moss, R.H. A methodological approach to the classification of dermoscopy images. Comput. Med. Imaging Graph. 2007, 31, 362–373. [Google Scholar] [CrossRef] [PubMed]

- Tajeddin, N.Z.; Asl, B.M. Melanoma recognition in dermoscopy images using lesion’s peripheral region information. Comput. Methods Programs Biomed. 2018, 163, 143–153. [Google Scholar] [CrossRef]

- Kalwa, U.; Legner, C.; Kong, T.; Pandey, S. Skin cancer diagnostics with an all-inclusive smartphone application. Symmetry 2019, 11, 790. [Google Scholar] [CrossRef]

- Wahba, M.A.; Ashour, A.S.; Napoleon, S.A.; Abd Elnaby, M.M.; Guo, Y. Combined empirical mode decomposition and texture features for skin lesion classification using quadratic support vector machine. Health Inf. Sci. Syst. 2017, 5, 10. [Google Scholar] [CrossRef]

- Chatterjee, S.; Dey, D.; Munshi, S. Integration of morphological preprocessing and fractal based feature extraction with recursive feature elimination for skin lesion types classification. Comput. Methods Programs Biomed. 2019, 178, 201–218. [Google Scholar] [CrossRef]

- Gilmore, S.J. Automated decision support in melanocytic lesion management. PLoS ONE 2018, 13, e0203459. [Google Scholar] [CrossRef] [PubMed]

- Mahbod, A.; Schaefer, G.; Ellinger, I.; Ecker, R.; Pitiot, A.; Wang, C. Fusing fine-tuned deep features for skin lesion classification. Comput. Med. Imaging Graph. 2019, 71, 19–29. [Google Scholar] [CrossRef] [PubMed]

- Joseph, S.; Panicker, J.R. Skin lesion analysis system for melanoma detection with an effective hair segmentation method. In Proceedings of the 2016 International Conference on Information Science (ICIS), Kochi, India, 12–13 August 2016; pp. 91–96. [Google Scholar] [CrossRef]

- Suganya, R. An automated computer aided diagnosis of skin lesions detection and classification for dermoscopy images. In Proceedings of the 2016 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 8–9 April 2016; pp. 1–5. [Google Scholar] [CrossRef]

- Rahman, M.M.; Bhattacharya, P. An integrated and interactive decision support system for automated melanoma recognition of dermoscopic images. Comput. Med. Imaging Graph. 2010, 34, 479–486. [Google Scholar] [CrossRef]

- Faal, M.; Miran Baygi, M.H.; Kabir, E. Improving the diagnostic accuracy of dysplastic and melanoma lesions using the decision template combination method. Ski. Res. Technol. 2013, 19, 113–122. [Google Scholar] [CrossRef]

- Schaefer, G.; Krawczyk, B.; Celebi, M.E.; Iyatomi, H.; Hassanien, A.E. Melanoma Classification Based on Ensemble Classification of Dermoscopy Image Features. In Advanced Machine Learning Technologies and Applications, Proceedings of the AMLTA 2014, Cairo, Egypt, 28–30 November 2014; Communications in Computer and Information Science; Hassanien, A.E., Tolba, M.F., Taher Azar, A., Eds.; Springer: Cham, Switzerland, 2014; pp. 291–298. ISBN 9783319134604. [Google Scholar]

- Abbas, Q.; Sadaf, M.; Akram, A. Prediction of Dermoscopy Patterns for Recognition of both Melanocytic and Non-Melanocytic Skin Lesions. Computers 2016, 5, 13. [Google Scholar] [CrossRef]

- Xie, F.; Fan, H.; Li, Y.; Jiang, Z.; Meng, R.; Bovik, A. Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans. Med. Imaging 2017, 36, 849–858. [Google Scholar] [CrossRef] [PubMed]

- Oliveira, R.B.; Pereira, A.S.; Tavares, J.M.R.S. Skin lesion computational diagnosis of dermoscopic images: Ensemble models based on input feature manipulation. Comput. Methods Programs Biomed. 2017, 149, 43–53. [Google Scholar] [CrossRef] [PubMed]

- Castillejos-fernández, H.; López-ortega, O. An Intelligent System for the Diagnosis of Skin Cancer on Digital Images taken with Dermoscopy. Acta Polytech. Hung. 2017, 14, 169–185. [Google Scholar]

- Aswin, R.B.; Jaleel, J.A.; Salim, S. Hybrid genetic algorithm—Artificial neural network classifier for skin cancer detection. In Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10–11 July 2014; pp. 1304–1309. [Google Scholar] [CrossRef]

- Huang, H.; Hsu, B.W.; Lee, C.; Tseng, V.S. Development of a light-weight deep learning model for cloud applications and remote diagnosis of skin cancers. J. Dermatol. 2021, 48, 310–316. [Google Scholar] [CrossRef]

- Iqbal, I.; Younus, M.; Walayat, K.; Kakar, M.U.; Ma, J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput. Med. Imaging Graph. 2021, 88, 101843. [Google Scholar] [CrossRef]

- Mahmoud, N.M.; Soliman, A.M. Early automated detection system for skin cancer diagnosis using artificial intelligent techniques. Sci. Rep. 2024, 14, 9749. [Google Scholar] [CrossRef]

- Messadi, M.; Bessaid, A.; Taleb-Ahmed, A. New characterization methodology for skin tumors classification. J. Mech. Med. Biol. 2010, 10, 467–477. [Google Scholar] [CrossRef]

- Grochowski, M.; Mikołajczyk, A.; Kwasigroch, A. Diagnosis of malignant melanoma by neural network ensemble-based system utilising hand-crafted skin lesion features. Metrol. Meas. Syst. 2019, 26, 65–80. [Google Scholar] [CrossRef]

- Samsudin, S.S.; Arof, H.; Harun, S.W.; Abdul Wahab, A.W.; Idris, M.Y.I. Skin lesion classification using multi-resolution empirical mode decomposition and local binary pattern. PLoS ONE 2022, 17, e0274896. [Google Scholar] [CrossRef]

- Abbas, Q.; Celebi, M.E. DermoDeep-A classification of melanoma-nevus skin lesions using multi-feature fusion of visual features and deep neural network. Multimed. Tools Appl. 2019, 78, 23559–23580. [Google Scholar] [CrossRef]

- Divya, D.; Ganeshbabu, T.R. Fitness adaptive deer hunting-based region growing and recurrent neural network for melanoma skin cancer detection. Int. J. Imaging Syst. Technol. 2020, 30, 731–752. [Google Scholar] [CrossRef]

- Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32. [Google Scholar] [CrossRef]

- Gessert, N.; Sentker, T.; Madesta, F.; Schmitz, R.; Kniep, H.; Baltruschat, I.; Werner, R.; Schlaefer, A. Skin Lesion Classification Using CNNs With Patch-Based Attention and Diagnosis-Guided Loss Weighting. IEEE Trans. Biomed. Eng. 2020, 67, 495–503. [Google Scholar] [CrossRef] [PubMed]

- Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput. Methods Programs Biomed. 2020, 193, 105475. [Google Scholar] [CrossRef]

- Wu, J.; Hu, W.; Wen, Y.; Tu, W.; Liu, X. Skin Lesion Classification Using Densely Connected Convolutional Networks with Attention Residual Learning. Sensors 2020, 20, 7080. [Google Scholar] [CrossRef]

- Hekler, A.; Kather, J.N.; Krieghoff-Henning, E.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Upmeier zu Belzen, J.; French, L.; Schlager, J.G.; Ghoreschi, K.; et al. Effects of Label Noise on Deep Learning-Based Skin Cancer Classification. Front. Med. 2020, 7, 177. [Google Scholar] [CrossRef]

- Qian, S.; Ren, K.; Zhang, W.; Ning, H. Skin lesion classification using CNNs with grouping of multi-scale attention and class-specific loss weighting. Comput. Methods Programs Biomed. 2022, 226, 107166. [Google Scholar] [CrossRef] [PubMed]

- Cao, X.; Pan, J.-S.; Wang, Z.; Sun, Z.; ul Haq, A.; Deng, W.; Yang, S. Application of generated mask method based on Mask R-CNN in classification and detection of melanoma. Comput. Methods Programs Biomed. 2021, 207, 106174. [Google Scholar] [CrossRef]

- Angeline, J.; Siva Kailash, A.; Karthikeyan, J.; Karthika, R.; Saravanan, V. Automated Prediction of Malignant Melanoma using Two-Stage Convolutional Neural Network. Arch. Dermatol. Res. 2024, 316, 275. [Google Scholar] [CrossRef]

- Almufareh, M.F.; Tariq, N.; Humayun, M.; Khan, F.A. Melanoma identification and classification model based on fine-tuned convolutional neural network. Digit. Health 2024, 10, 20552076241253756. [Google Scholar] [CrossRef]

- Moura, N.; Veras, R.; Aires, K.; Machado, V.; Silva, R.; Araújo, F.; Claro, M. ABCD rule and pre-trained CNNs for melanoma diagnosis. Multimed. Tools Appl. 2019, 78, 6869–6888. [Google Scholar] [CrossRef]

- Sayed, G.I.; Soliman, M.M.; Hassanien, A.E. A novel melanoma prediction model for imbalanced data using optimized SqueezeNet by bald eagle search optimization. Comput. Biol. Med. 2021, 136, 104712. [Google Scholar] [CrossRef]

- Ruga, T.; Musacchio, G.; Maurmo, D. An Ensemble Architecture for Melanoma Classification. Stud. Health Technol. Inform. 2024, 314, 183–184. [Google Scholar] [PubMed]

- Tschandl, P.; Rosendahl, C.; Akay, B.N.; Argenziano, G.; Blum, A.; Braun, R.P.; Cabo, H.; Gourhant, J.Y.; Kreusch, J.; Lallas, A.; et al. Expert-Level Diagnosis of Nonpigmented Skin Cancer by Combined Convolutional Neural Networks. JAMA Dermatol. 2019, 155, 58–65. [Google Scholar] [CrossRef]

- Aladhadh, S.; Alsanea, M.; Aloraini, M.; Khan, T.; Habib, S.; Islam, M. An Effective Skin Cancer Classification Mechanism via Medical Vision Transformer. Sensors 2022, 22, 4008. [Google Scholar] [CrossRef]

- Abdelhafeez, A.; Mohamed, H.K.; Maher, A.; Khalil, N.A. A novel approach toward skin cancer classification through fused deep features and neutrosophic environment. Front. Public Health 2023, 11, 1123581. [Google Scholar] [CrossRef]

- Pérez, E.; Ventura, S. Progressive growing of Generative Adversarial Networks for improving data augmentation and skin cancer diagnosis. Artif. Intell. Med. 2023, 141, 102556. [Google Scholar] [CrossRef]

- Maurya, A.; Stanley, R.J.; Aradhyula, H.Y.; Lama, N.; Nambisan, A.K.; Patel, G.; Saeed, D.; Swinfard, S.; Smith, C.; Jagannathan, S.; et al. Basal Cell Carcinoma Diagnosis with Fusion of Deep Learning and Telangiectasia Features. J. Imaging Inform. Med. 2024, 37, 1137–1150. [Google Scholar] [CrossRef]

- Dahou, A.; Aseeri, A.O.; Mabrouk, A.; Ibrahim, R.A.; Al-Betar, M.A.; Elaziz, M.A. Optimal Skin Cancer Detection Model Using Transfer Learning and Dynamic-Opposite Hunger Games Search. Diagnostics 2023, 13, 1579. [Google Scholar] [CrossRef] [PubMed]

- Tahir, M.; Naeem, A.; Malik, H.; Tanveer, J.; Naqvi, R.A.; Lee, S.-W. DSCC_Net: Multi-Classification Deep Learning Models for Diagnosing of Skin Cancer Using Dermoscopic Images. Cancers 2023, 15, 2179. [Google Scholar] [CrossRef]

- Qasim Gilani, S.; Syed, T.; Umair, M.; Marques, O. Skin Cancer Classification Using Deep Spiking Neural Network. J. Digit. Imaging 2023, 36, 1137–1147. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Su, J.; Xu, Q.; Zhong, Y. A Collaborative Learning Model for Skin Lesion Segmentation and Classification. Diagnostics 2023, 13, 912. [Google Scholar] [CrossRef]

- Raghavendra, P.V.S.P.; Charitha, C.; Begum, K.G.; Prasath, V.B.S. Deep Learning–Based Skin Lesion Multi-class Classification with Global Average Pooling Improvement. J. Digit. Imaging 2023, 36, 2227–2248. [Google Scholar] [CrossRef] [PubMed]

- Anand, V.; Gupta, S.; Altameem, A.; Nayak, S.R.; Poonia, R.C.; Saudagar, A.K.J. An Enhanced Transfer Learning Based Classification for Diagnosis of Skin Cancer. Diagnostics 2022, 12, 1628. [Google Scholar] [CrossRef] [PubMed]

- Alam, T.M.; Shaukat, K.; Khan, W.A.; Hameed, I.A.; Almuqren, L.A.; Raza, M.A.; Aslam, M.; Luo, S. An Efficient Deep Learning-Based Skin Cancer Classifier for an Imbalanced Dataset. Diagnostics 2022, 12, 2115. [Google Scholar] [CrossRef]

- Montaha, S.; Azam, S.; Rafid, A.K.M.R.H.; Islam, S.; Ghosh, P.; Jonkman, M. A shallow deep learning approach to classify skin cancer using down-scaling method to minimize time and space complexity. PLoS ONE 2022, 17, e0269826. [Google Scholar] [CrossRef]

- Li, H.; Li, W.; Chang, J.; Zhou, L.; Luo, J.; Guo, Y. Dermoscopy lesion classification based on GANs and a fuzzy rank-based ensemble of CNN models. Phys. Med. Biol. 2022, 67, 185005. [Google Scholar] [CrossRef]

- Albahar, M.A. Skin Lesion Classification Using Convolutional Neural Network with Novel Regularizer. IEEE Access 2019, 7, 38306–38313. [Google Scholar] [CrossRef]

- Kaur, R.; GholamHosseini, H.; Sinha, R.; Lindén, M. Melanoma Classification Using a Novel Deep Convolutional Neural Network with Dermoscopic Images. Sensors 2022, 22, 1134. [Google Scholar] [CrossRef]

- Afza, F.; Sharif, M.; Mittal, M.; Khan, M.A.; Jude Hemanth, D. A hierarchical three-step superpixels and deep learning framework for skin lesion classification. Methods 2022, 202, 88–102. [Google Scholar] [CrossRef]

- Fraiwan, M.; Faouri, E. On the Automatic Detection and Classification of Skin Cancer Using Deep Transfer Learning. Sensors 2022, 22, 4963. [Google Scholar] [CrossRef] [PubMed]

- Ghazal, T.M.; Hussain, S.; Khan, M.F.; Khan, M.A.; Said, R.A.T.; Ahmad, M. Detection of Benign and Malignant Tumors in Skin Empowered with Transfer Learning. Comput. Intell. Neurosci. 2022, 2022, 4826892. [Google Scholar] [CrossRef] [PubMed]

- Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Albuquerque, V.H.C. de Multi-Class Skin Lesion Detection and Classification via Teledermatology. IEEE J. Biomed. Health Inform. 2021, 25, 4267–4275. [Google Scholar] [CrossRef] [PubMed]

- Vaiyapuri, T.; Balaji, P.; S, S.; Alaskar, H.; Sbai, Z. Computational Intelligence-Based Melanoma Detection and Classification Using Dermoscopic Images. Comput. Intell. Neurosci. 2022, 2022, 2370190. [Google Scholar] [CrossRef] [PubMed]

- Wang, Y.; Wang, Y.; Cai, J.; Lee, T.K.; Miao, C.; Wang, Z.J. SSD-KD: A self-supervised diverse knowledge distillation method for lightweight skin lesion classification using dermoscopic images. Med. Image Anal. 2023, 84, 102693. [Google Scholar] [CrossRef]

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of skin lesions using transfer learning and augmentation with Alex-net. PLoS ONE 2019, 14, e0217293. [Google Scholar] [CrossRef]

- Lu, X.; Firoozeh Abolhasani Zadeh, Y.A. Deep Learning-Based Classification for Melanoma Detection Using XceptionNet. J. Healthc. Eng. 2022, 2022, 2196096. [Google Scholar] [CrossRef]

- Shan, P.; Fu, C.; Dai, L.; Jia, T.; Tie, M.; Liu, J. Automatic skin lesion classification using a new densely connected convolutional network with an SF module. Med. Biol. Eng. Comput. 2022, 60, 2173–2188. [Google Scholar] [CrossRef]

- Singh, S.K.; Abolghasemi, V.; Anisi, M.H. Skin Cancer Diagnosis Based on Neutrosophic Features with a Deep Neural Network. Sensors 2022, 22, 6261. [Google Scholar] [CrossRef]

- Singh, R.K.; Gorantla, R.; Allada, S.G.R.; Narra, P. SkiNet: A deep learning framework for skin lesion diagnosis with uncertainty estimation and explainability. PLoS ONE 2022, 17, e0276836. [Google Scholar] [CrossRef]

- Yao, P.; Shen, S.; Xu, M.; Liu, P.; Zhang, F.; Xing, J.; Shao, P.; Kaffenberger, B.; Xu, R.X. Single Model Deep Learning on Imbalanced Small Datasets for Skin Lesion Classification. IEEE Trans. Med. Imaging 2022, 41, 1242–1254. [Google Scholar] [CrossRef] [PubMed]

- Xia, M.; Kheterpal, M.K.; Wong, S.C.; Park, C.; Ratliff, W.; Carin, L.; Henao, R. Lesion identification and malignancy prediction from clinical dermatological images. Sci. Rep. 2022, 12, 15836. [Google Scholar] [CrossRef] [PubMed]

- Ali, M.U.; Khalid, M.; Alshanbari, H.; Zafar, A.; Lee, S.W. Enhancing Skin Lesion Detection: A Multistage Multiclass Convolutional Neural Network-Based Framework. Bioengineering 2023, 10, 1430. [Google Scholar] [CrossRef] [PubMed]

- Lai, W.; Kuang, M.; Wang, X.; Ghafariasl, P.; Sabzalian, M.H.; Lee, S. Skin cancer diagnosis (SCD) using Artificial Neural Network (ANN) and Improved Gray Wolf Optimization (IGWO). Sci. Rep. 2023, 13, 19377. [Google Scholar] [CrossRef] [PubMed]

- Nugroho, E.S.; Ardiyanto, I.; Nugroho, H.A. Boosting the performance of pretrained CNN architecture on dermoscopic pigmented skin lesion classification. Ski. Res. Technol. 2023, 29, e13505. [Google Scholar] [CrossRef]

- Abd Elaziz, M.; Dahou, A.; Mabrouk, A.; El-Sappagh, S.; Aseeri, A.O. An Efficient Artificial Rabbits Optimization Based on Mutation Strategy For Skin Cancer Prediction. Comput. Biol. Med. 2023, 163, 107154. [Google Scholar] [CrossRef]

- Pacheco, A.G.C.; Krohling, R.A. The impact of patient clinical information on automated skin cancer detection. Comput. Biol. Med. 2020, 116, 103545. [Google Scholar] [CrossRef]

- Pacheco, A.G.C.; Krohling, R.A. An Attention-Based Mechanism to Combine Images and Metadata in Deep Learning Models Applied to Skin Cancer Classification. IEEE J. Biomed. Health Inform. 2021, 25, 3554–3563. [Google Scholar] [CrossRef]

- Ningrum, D.N.A.; Yuan, S.-P.; Kung, W.-M.; Wu, C.-C.; Tzeng, I.-S.; Huang, C.-Y.; Li, J.Y.-C.; Wang, Y.-C. Deep Learning Classifier with Patient’s Metadata of Dermoscopic Images in Malignant Melanoma Detection. J. Multidiscip. Healthc. 2021, 14, 877–885. [Google Scholar] [CrossRef]

- Tognetti, L.; Bonechi, S.; Andreini, P.; Bianchini, M.; Scarselli, F.; Cevenini, G.; Moscarella, E.; Farnetani, F.; Longo, C.; Lallas, A.; et al. A new deep learning approach integrated with clinical data for the dermoscopic differentiation of early melanomas from atypical nevi. J. Dermatol. Sci. 2021, 101, 115–122. [Google Scholar] [CrossRef]

- Sun, Q.; Huang, C.; Chen, M.; Xu, H.; Yang, Y. Skin Lesion Classification Using Additional Patient Information. BioMed Res. Int. 2021, 2021, 6673852. [Google Scholar] [CrossRef] [PubMed]

- Xing, X.; Song, P.; Zhang, K.; Yang, F.; Dong, Y. ZooME: Efficient Melanoma Detection Using Zoom-in Attention and Metadata Embedding Deep Neural Network. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Online, 1–5 November 2021; pp. 4041–4044. [Google Scholar] [CrossRef]

- Kanchana, K.; Kavitha, S.; Anoop, K.J.; Chinthamani, B. Enhancing Skin Cancer Classification using Efficient Net B0-B7 through Convolutional Neural Networks and Transfer Learning with Patient-Specific Data. Asian Pac. J. Cancer Prev. 2024, 25, 1795–1802. [Google Scholar] [CrossRef]

- Xin, C.; Liu, Z.; Zhao, K.; Miao, L.; Ma, Y.; Zhu, X.; Zhou, Q.; Wang, S.; Li, L.; Yang, F.; et al. An improved transformer network for skin cancer classification. Comput. Biol. Med. 2022, 149, 105939. [Google Scholar] [CrossRef]

- Reis, H.C.; Turk, V.; Khoshelham, K.; Kaya, S. InSiNet: A deep convolutional approach to skin cancer detection and segmentation. Med. Biol. Eng. Comput. 2022, 60, 643–662. [Google Scholar] [CrossRef] [PubMed]

- Ravi, V. Attention Cost-Sensitive Deep Learning-Based Approach for Skin Cancer Detection and Classification. Cancers 2022, 14, 5872. [Google Scholar] [CrossRef]

- Zhang, Y.; Xie, F.; Song, X.; Zhou, H.; Yang, Y.; Zhang, H.; Liu, J. A rotation meanout network with invariance for dermoscopy image classification and retrieval. Comput. Biol. Med. 2022, 151, 106272. [Google Scholar] [CrossRef]

- Khan, M.A.; Akram, T.; Sharif, M.; Javed, K.; Rashid, M.; Bukhari, S.A.C. An integrated framework of skin lesion detection and recognition through saliency method and optimal deep neural network features selection. Neural Comput. Appl. 2019, 32, 15929–15948. [Google Scholar] [CrossRef]

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region Extraction and Classification of Skin Cancer: A Heterogeneous framework of Deep CNN Features Fusion and Reduction. J. Med. Syst. 2019, 43, 289. [Google Scholar] [CrossRef] [PubMed]

- Bakheet, S.; Alsubai, S.; El-Nagar, A.; Alqahtani, A. A Multi-Feature Fusion Framework for Automatic Skin Cancer Diagnostics. Diagnostics 2023, 13, 1474. [Google Scholar] [CrossRef]

- Maurya, R.; Mahapatra, S.; Dutta, M.K.; Singh, V.P.; Karnati, M.; Sahu, G.; Pandey, N.N. Skin cancer detection through attention guided dual autoencoder approach with extreme learning machine. Sci. Rep. 2024, 14, 17785. [Google Scholar] [CrossRef]

- Naseem, S.; Anwar, M.; Faheem, M.; Fayyaz, M.; Malik, M.S.A. Bayesian-Edge system for classification and segmentation of skin lesions in Internet of Medical Things. Ski. Res. Technol. 2024, 30, e13878. [Google Scholar] [CrossRef] [PubMed]

- Attallah, O. Skin-CAD: Explainable deep learning classification of skin cancer from dermoscopic images by feature selection of dual high-level CNNs features and transfer learning. Comput. Biol. Med. 2024, 178, 108798. [Google Scholar] [CrossRef] [PubMed]

- Ali, R.; Manikandan, A.; Lei, R.; Xu, J. A novel SpaSA based hyper-parameter optimized FCEDN with adaptive CNN classification for skin cancer detection. Sci. Rep. 2024, 14, 9336. [Google Scholar] [CrossRef]

- Zhang, D.; Li, A.; Wu, W.; Yu, L.; Kang, X.; Huo, X. CR-Conformer: A fusion network for clinical skin lesion classification. Med. Biol. Eng. Comput. 2023, 62, 85–94. [Google Scholar] [CrossRef] [PubMed]

- Akram, A.; Rashid, J.; Jaffar, M.A.; Faheem, M.; ul Amin, R. Segmentation and classification of skin lesions using hybrid deep learning method in the Internet of Medical Things. Ski. Res. Technol. 2023, 29, e13524. [Google Scholar] [CrossRef]

- Sarkar, R.; Chatterjee, C.C.; Hazra, A. Diagnosis of melanoma from dermoscopic images using a deep depthwise separable residual convolutional network. IET Image Process. 2019, 13, 2130–2142. [Google Scholar] [CrossRef]

- Serte, S.; Demirel, H. Gabor wavelet-based deep learning for skin lesion classification. Comput. Biol. Med. 2019, 113, 103423. [Google Scholar] [CrossRef]

- Xie, Y.; Zhang, J.; Xia, Y.; Shen, C. A Mutual Bootstrapping Model for Automated Skin Lesion Segmentation and Classification. IEEE Trans. Med. Imaging 2020, 39, 2482–2493. [Google Scholar] [CrossRef]

- Al-masni, M.A.; Kim, D.-H.; Kim, T.-S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Comput. Methods Programs Biomed. 2020, 190, 105351. [Google Scholar] [CrossRef]

- Abdar, M.; Samami, M.; Dehghani Mahmoodabad, S.; Doan, T.; Mazoure, B.; Hashemifesharaki, R.; Liu, L.; Khosravi, A.; Acharya, U.R.; Makarenkov, V.; et al. Uncertainty quantification in skin cancer classification using three-way decision-based Bayesian deep learning. Comput. Biol. Med. 2021, 135, 104418. [Google Scholar] [CrossRef]

- Khan, M.A.; Sharif, M.; Akram, T.; Bukhari, S.A.C.; Nayak, R.S. Developed Newton-Raphson based deep features selection framework for skin lesion recognition. Pattern Recognit. Lett. 2020, 129, 293–303. [Google Scholar] [CrossRef]

- Khan, M.A.; Sharif, M.; Akram, T.; Damaševičius, R.; Maskeliūnas, R. Skin lesion segmentation and multiclass classification using deep learning features and improved moth flame optimization. Diagnostics 2021, 11, 811. [Google Scholar] [CrossRef] [PubMed]

- Maqsood, S.; Damaševičius, R. Multiclass skin lesion localization and classification using deep learning based features fusion and selection framework for smart healthcare. Neural Netw. 2023, 160, 238–258. [Google Scholar] [CrossRef] [PubMed]

- Zhang, L.; Gao, H.J.; Zhang, J.; Badami, B. Optimization of the Convolutional Neural Networks for Automatic Detection of Skin Cancer. Open Med. 2020, 15, 27–37. [Google Scholar] [CrossRef]

- Zunair, H.; Ben Hamza, A. Melanoma detection using adversarial training and deep transfer learning. Phys. Med. Biol. 2020, 65, 135005. [Google Scholar] [CrossRef]

- Alenezi, F.; Armghan, A.; Polat, K. A Novel Multi-Task Learning Network Based on Melanoma Segmentation and Classification with Skin Lesion Images. Diagnostics 2023, 13, 262. [Google Scholar] [CrossRef] [PubMed]

- Mahum, R.; Aladhadh, S. Skin Lesion Detection Using Hand-Crafted and DL-Based Features Fusion and LSTM. Diagnostics 2022, 12, 2974. [Google Scholar] [CrossRef]

- Shinde, R.K.; Alam, M.S.; Hossain, M.B.; Md Imtiaz, S.; Kim, J.; Padwal, A.A.; Kim, N. Squeeze-MNet: Precise Skin Cancer Detection Model for Low Computing IoT Devices Using Transfer Learning. Cancers 2022, 15, 12. [Google Scholar] [CrossRef]

- Jain, S.; Singhania, U.; Tripathy, B.; Nasr, E.A.; Aboudaif, M.K.; Kamrani, A.K. Deep Learning-Based Transfer Learning for Classification of Skin Cancer. Sensors 2021, 21, 8142. [Google Scholar] [CrossRef]

- Musthafa, M.M.; T R, M.; Kumar V, V.; Guluwadi, S. Enhanced skin cancer diagnosis using optimized CNN architecture and checkpoints for automated dermatological lesion classification. BMC Med. Imaging 2024, 24, 201. [Google Scholar] [CrossRef]

- Rasel, M.A.; Abdul Kareem, S.; Kwan, Z.; Yong, S.S.; Obaidellah, U. Bluish veil detection and lesion classification using custom deep learnable layers with explainable artificial intelligence (XAI). Comput. Biol. Med. 2024, 178, 108758. [Google Scholar] [CrossRef] [PubMed]

- Arjun, K.P.; Kumar, K.S.; Dhanaraj, R.K.; Ravi, V.; Kumar, T.G. Optimizing time prediction and error classification in early melanoma detection using a hybrid RCNN-LSTM model. Microsc. Res. Tech. 2024, 87, 1789–1809. [Google Scholar] [CrossRef] [PubMed]

- Saleh, N.; Hassan, M.A.; Salaheldin, A.M. Skin cancer classification based on an optimized convolutional neural network and multicriteria decision-making. Sci. Rep. 2024, 14, 17323. [Google Scholar] [CrossRef]

- Hu, Z.; Mei, W.; Chen, H.; Hou, W. Multi-scale feature fusion and class weight loss for skin lesion classification. Comput. Biol. Med. 2024, 176, 108594. [Google Scholar] [CrossRef]

- Kumar, A.; Kumar, M.; Bhardwaj, V.P.; Kumar, S.; Selvarajan, S. A novel skin cancer detection model using modified finch deep CNN classifier model. Sci. Rep. 2024, 14, 11235. [Google Scholar] [CrossRef] [PubMed]

- Alsaade, F.W.; Aldhyani, T.H.H.; Al-Adhaileh, M.H. Developing a Recognition System for Diagnosing Melanoma Skin Lesions Using Artificial Intelligence Algorithms. Comput. Math. Methods Med. 2021, 2021, 9998379. [Google Scholar] [CrossRef]

- Khan, S.; Khan, A. SkinViT: A transformer based method for Melanoma and Nonmelanoma classification. PLoS ONE 2023, 18, e0295151. [Google Scholar] [CrossRef]

- Maron, R.C.; Haggenmüller, S.; von Kalle, C.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Hauschild, A.; French, L.E.; Schlaak, M.; Ghoreschi, K.; et al. Robustness of convolutional neural networks in recognition of pigmented skin lesions. Eur. J. Cancer 2021, 145, 81–91. [Google Scholar] [CrossRef]

- Jojoa Acosta, M.F.; Caballero Tovar, L.Y.; Garcia-Zapirain, M.B.; Percybrooks, W.S. Melanoma diagnosis using deep learning techniques on dermatoscopic images. BMC Med. Imaging 2021, 21, 6. [Google Scholar] [CrossRef]

- Rezk, E.; Eltorki, M.; El-Dakhakhni, W. Interpretable Skin Cancer Classification based on Incremental Domain Knowledge Learning. J. Healthc. Inform. Res. 2023, 7, 59–83. [Google Scholar] [CrossRef]

- Winkler, J.K.; Blum, A.; Kommoss, K.; Enk, A.; Toberer, F.; Rosenberger, A.; Haenssle, H.A. Assessment of Diagnostic Performance of Dermatologists Cooperating With a Convolutional Neural Network in a Prospective Clinical Study. JAMA Dermatol. 2023, 159, 621. [Google Scholar] [CrossRef] [PubMed]

- Adepu, A.K.; Sahayam, S.; Jayaraman, U.; Arramraju, R. Melanoma classification from dermatoscopy images using knowledge distillation for highly imbalanced data. Comput. Biol. Med. 2023, 154, 106571. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z.; Xiong, R.; Jiang, T. CI-Net: Clinical-Inspired Network for Automated Skin Lesion Recognition. IEEE Trans. Med. Imaging 2023, 42, 619–632. [Google Scholar] [CrossRef] [PubMed]

- Bandy, A.D.; Spyridis, Y.; Villarini, B.; Argyriou, V. Intraclass Clustering-Based CNN Approach for Detection of Malignant Melanoma. Sensors 2023, 23, 926. [Google Scholar] [CrossRef]

- Ferris, L.K.; Harkes, J.A.; Gilbert, B.; Winger, D.G.; Golubets, K.; Akilov, O.; Satyanarayanan, M. Computer-aided classification of melanocytic lesions using dermoscopic images. J. Am. Acad. Dermatol. 2015, 73, 769–776. [Google Scholar] [CrossRef]

- Rastgoo, M.; Morel, O.; Marzani, F.; Garcia, R. Ensemble approach for differentiation of malignant melanoma. In Proceedings of the Twelfth International Conference on Quality Control by Artificial Vision 2015, Le Creusot, France, 30 April 2015; Volume 9534. [Google Scholar] [CrossRef]

- Grzesiak-Kopeć, K.; Ogorzałek, M.; Nowak, L. Computational Classification of Melanocytic Skin Lesions. In Artificial Intelligence and Soft Computing, Proceeding of the 15th International Conference, ICAISC 2016, Zakopane, Poland, 12–16 June 2016; Springer International Publishing: Cham, Switzerland; Volume 9693, pp. 169–178. ISBN 978-3-642-13207-0.

- Kharazmi, P.; Lui, H.; Wang, Z.J.; Lee, T.K. Automatic detection of basal cell carcinoma using vascular-extracted features from dermoscopy images. In Proceedings of the 2016 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Vancouver, BC, Canada, 15–18 May 2016; pp. 1–4. [Google Scholar] [CrossRef]

- Kharazmi, P.; AlJasser, M.I.; Lui, H.; Wang, Z.J.; Lee, T.K. Automated Detection and Segmentation of Vascular Structures of Skin Lesions Seen in Dermoscopy, With an Application to Basal Cell Carcinoma Classification. IEEE J. Biomed. Health Inform. 2017, 21, 1675–1684. [Google Scholar] [CrossRef]

- Benyahia, S.; Meftah, B.; Lézoray, O. Multi-features extraction based on deep learning for skin lesion classification. Tissue Cell 2022, 74, 101701. [Google Scholar] [CrossRef]

- Abdullah, A.; Siddique, A.; Shaukat, K.; Jan, T. An Intelligent Mechanism to Detect Multi-Factor Skin Cancer. Diagnostics 2024, 14, 1359. [Google Scholar] [CrossRef]

- Vasconcelos, M.J.M.; Rosado, L.; Ferreira, M. Principal Axes-Based Asymmetry Assessment Methodology for Skin Lesion Image Analysis. In Advances in Visual Computing, Proceedings of the ISVC 2014, Las Vegas, NV, USA, 8–10 December 2014; Springer International Publishing: Cham, Switzerland; pp. 21–31.

- Murugan, A.; Nair, S.A.H.; Kumar, K.P.S. Detection of Skin Cancer Using SVM, Random Forest and kNN Classifiers. J. Med. Syst. 2019, 43, 269. [Google Scholar] [CrossRef]

- Narasimhan, K.; Elamaran, V. Wavelet-based energy features for diagnosis of melanoma from dermoscopic images. Int. J. Biomed. Eng. Technol. 2016, 20, 243. [Google Scholar] [CrossRef]

- Janney, J.B.; Roslin, S.E. Classification of melanoma from Dermoscopic data using machine learning techniques. Multimed. Tools Appl. 2018, 79, 3713–3728. [Google Scholar] [CrossRef]

- Rastgoo, M.; Garcia, R.; Morel, O.; Marzani, F. Automatic differentiation of melanoma from dysplastic nevi. Comput. Med. Imaging Graph. 2015, 43, 44–52. [Google Scholar] [CrossRef]

- Barata, C.; Ruela, M.; Francisco, M.; Mendonca, T.; Marques, J.S. Two Systems for the Detection of Melanomas in Dermoscopy Images Using Texture and Color Features. IEEE Syst. J. 2014, 8, 965–979. [Google Scholar] [CrossRef]

- Giavina-Bianchi, M.; de Sousa, R.M.; de Almeida Paciello, V.Z.; Vitor, W.G.; Okita, A.L.; Prôa, R.; Severino, G.L.d.S.; Schinaid, A.A.; Espírito Santo, R.; Machado, B.S. Implementation of artificial intelligence algorithms for melanoma screening in a primary care setting. PLoS ONE 2021, 16, e0257006. [Google Scholar] [CrossRef] [PubMed]

- Pennisi, A.; Bloisi, D.D.; Nardi, D.; Giampetruzzi, A.R.; Mondino, C.; Facchiano, A. Skin lesion image segmentation using Delaunay Triangulation for melanoma detection. Comput. Med. Imaging Graph. 2016, 52, 89–103. [Google Scholar] [CrossRef]

- Arshad, M.; Khan, M.A.; Tariq, U.; Armghan, A.; Alenezi, F.; Younus Javed, M.; Aslam, S.M.; Kadry, S. A Computer-Aided Diagnosis System Using Deep Learning for Multiclass Skin Lesion Classification. Comput. Intell. Neurosci. 2021, 2021, 9619079. [Google Scholar] [CrossRef] [PubMed]

- Xu, H.; Lu, C.; Berendt, R.; Jha, N.; Mandal, M. Automated analysis and classification of melanocytic tumor on skin whole slide images. Comput. Med. Imaging Graph. 2018, 66, 124–134. [Google Scholar] [CrossRef] [PubMed]

- Ianni, J.D.; Soans, R.E.; Sankarapandian, S.; Chamarthi, R.V.; Ayyagari, D.; Olsen, T.G.; Bonham, M.J.; Stavish, C.C.; Motaparthi, K.; Cockerell, C.J.; et al. Tailored for Real-World: A Whole Slide Image Classification System Validated on Uncurated Multi-Site Data Emulating the Prospective Pathology Workload. Sci. Rep. 2020, 10, 3217. [Google Scholar] [CrossRef]

- Khan, S.; Khan, M.A.; Noor, A.; Fareed, K. SASAN: Ground truth for the effective segmentation and classification of skin cancer using biopsy images. Diagnosis 2024, 11, 283–294. [Google Scholar] [CrossRef]

- Ganster, H.; Pinz, P.; Rohrer, R.; Wildling, E.; Binder, M.; Kittler, H. Automated melanoma recognition. IEEE Trans. Med. Imaging 2001, 20, 233–239. [Google Scholar] [CrossRef]

- Odeh, S.M.; de Toro, F.; Rojas, I.; Saéz-Lara, M.J. Evaluating Fluorescence Illumination Techniques for Skin Lesion Diagnosis. Appl. Artif. Intell. 2012, 26, 696–713. [Google Scholar] [CrossRef]

- Odeh, S.M.; Baareh, A.K.M. A comparison of classification methods as diagnostic system: A case study on skin lesions. Comput. Methods Programs Biomed. 2016, 137, 311–319. [Google Scholar] [CrossRef] [PubMed]

- Gerger, A.; Wiltgen, M.; Langsenlehner, U.; Richtig, E.; Horn, M.; Weger, W.; Ahlgrimm-Siess, V.; Hofmann-Wellenhof, R.; Samonigg, H.; Smolle, J. Diagnostic image analysis of malignant melanoma in in vivo confocal laser-scanning microscopy: A preliminary study. Ski. Res. Technol. 2008, 14, 359–363. [Google Scholar] [CrossRef] [PubMed]

- Lorber, A.; Wiltgen, M.; Hofmann-Wellenhof, R.; Koller, S.; Weger, W.; Ahlgrimm-Siess, V.; Smolle, J.; Gerger, A. Correlation of image analysis features and visual morphology in melanocytic skin tumours using in vivo confocal laser scanning microscopy. Ski. Res. Technol. 2009, 15, 237–241. [Google Scholar] [CrossRef]

- Koller, S.; Wiltgen, M.; Ahlgrimm-Siess, V.; Weger, W.; Hofmann-Wellenhof, R.; Richtig, E.; Smolle, J.; Gerger, A. In vivo reflectance confocal microscopy: Automated diagnostic image analysis of melanocytic skin tumours. J. Eur. Acad. Dermatol. Venereol. 2011, 25, 554–558. [Google Scholar] [CrossRef]

- Yuan, X.; Yang, Z.; Zouridakis, G.; Mullani, N. SVM-based Texture Classification and Application to Early Melanoma Detection. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 4775–4778. [Google Scholar] [CrossRef]

- Masood, A.; Al-Jumaily, A. Semi-advised learning model for skin cancer diagnosis based on histopathalogical images. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 631–634. [Google Scholar] [CrossRef]

- Ruiz, D.; Berenguer, V.; Soriano, A.; Sánchez, B. A decision support system for the diagnosis of melanoma: A comparative approach. Expert Syst. Appl. 2011, 38, 15217–15223. [Google Scholar] [CrossRef]

- Noroozi, N.; Zakerolhosseini, A. Differential diagnosis of squamous cell carcinoma in situ using skin histopathological images. Comput. Biol. Med. 2016, 70, 23–39. [Google Scholar] [CrossRef] [PubMed]

- Noroozi, N.; Zakerolhosseini, A. Computer assisted diagnosis of basal cell carcinoma using Z-transform features. J. Vis. Commun. Image Represent. 2016, 40, 128–148. [Google Scholar] [CrossRef]

- Höhn, J.; Krieghoff-Henning, E.; Jutzi, T.B.; von Kalle, C.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Hobelsberger, S.; Hauschild, A.; Schlager, J.G.; et al. Combining CNN-based histologic whole slide image analysis and patient data to improve skin cancer classification. Eur. J. Cancer 2021, 149, 94–101. [Google Scholar] [CrossRef]

- Chen, M.; Feng, X.; Fox, M.C.; Reichenberg, J.S.; Lopes, F.C.P.S.; Sebastian, K.R.; Markey, M.K.; Tunnell, J.W. Deep learning on reflectance confocal microscopy improves Raman spectral diagnosis of basal cell carcinoma. J. Biomed. Opt. 2022, 27, 065004. [Google Scholar] [CrossRef]

- La Salvia, M.; Torti, E.; Leon, R.; Fabelo, H.; Ortega, S.; Balea-Fernandez, F.; Martinez-Vega, B.; Castaño, I.; Almeida, P.; Carretero, G.; et al. Neural Networks-Based On-Site Dermatologic Diagnosis through Hyperspectral Epidermal Images. Sensors 2022, 22, 7139. [Google Scholar] [CrossRef]

- Liu, L.; Qi, M.; Li, Y.; Liu, Y.; Liu, X.; Zhang, Z.; Qu, J. Staging of Skin Cancer Based on Hyperspectral Microscopic Imaging and Machine Learning. Biosensors 2022, 12, 790. [Google Scholar] [CrossRef]

- Truong, B.C.Q.; Tuan, H.D.; Wallace, V.P.; Fitzgerald, A.J.; Nguyen, H.T. The Potential of the Double Debye Parameters to Discriminate between Basal Cell Carcinoma and Normal Skin. IEEE Trans. Terahertz Sci. Technol. 2015, 5, 990–998. [Google Scholar] [CrossRef]

- Mohr, P.; Birgersson, U.; Berking, C.; Henderson, C.; Trefzer, U.; Kemeny, L.; Sunderkötter, C.; Dirschka, T.; Motley, R.; Frohm-Nilsson, M.; et al. Electrical impedance spectroscopy as a potential adjunct diagnostic tool for cutaneous melanoma. Ski. Res. Technol. 2013, 19, 75–83. [Google Scholar] [CrossRef] [PubMed]

- Kupriyanov, V.; Blondel, W.; Daul, C.; Amouroux, M.; Kistenev, Y. Implementation of data fusion to increase the efficiency of classification of precancerous skin states using in vivo bimodal spectroscopic technique. J. Biophotonics 2023, 16, e202300035. [Google Scholar] [CrossRef] [PubMed]

- Zhao, J.; Lui, H.; Kalia, S.; Lee, T.K.; Zeng, H. Improving skin cancer detection by Raman spectroscopy using convolutional neural networks and data augmentation. Front. Oncol. 2024, 14, 1320220. [Google Scholar] [CrossRef]

- Zhang, W.; Patterson, N.H.; Verbeeck, N.; Moore, J.L.; Ly, A.; Caprioli, R.M.; De Moor, B.; Norris, J.L.; Claesen, M. Multimodal MALDI imaging mass spectrometry for improved diagnosis of melanoma. PLoS ONE 2024, 19, e0304709. [Google Scholar] [CrossRef] [PubMed]

- Chen, X.; Shen, J.; Liu, C.; Shi, X.; Feng, W.; Sun, H.; Zhang, W.; Zhang, S.; Jiao, Y.; Chen, J.; et al. Applications of Data Characteristic AI-Assisted Raman Spectroscopy in Pathological Classification. Anal. Chem. 2024, 96, 6158–6169. [Google Scholar] [CrossRef]

- Petracchi, B.; Torti, E.; Marenzi, E.; Leporati, F. Acceleration of Hyperspectral Skin Cancer Image Classification through Parallel Machine-Learning Methods. Sensors 2024, 24, 1399. [Google Scholar] [CrossRef]

- Li, L.; Zhang, Q.; Ding, Y.; Jiang, H.; Thiers, B.H.; Wang, J.Z. Automatic diagnosis of melanoma using machine learning methods on a spectroscopic system. BMC Med. Imaging 2014, 14, 36. [Google Scholar] [CrossRef]

- Maciel, V.H.; Correr, W.R.; Kurachi, C.; Bagnato, V.S.; da Silva Souza, C. Fluorescence spectroscopy as a tool to in vivo discrimination of distinctive skin disorders. Photodiagnosis Photodyn. Ther. 2017, 19, 45–50. [Google Scholar] [CrossRef]

- Tomatis, S.; Bono, A.; Bartoli, C.; Carrara, M.; Lualdi, M.; Tragni, G.; Marchesini, R. Automated melanoma detection: Multispectral imaging and neural network approach for classification. Med. Phys. 2003, 30, 212–221. [Google Scholar] [CrossRef]

- Tomatis, S.; Carrara, M.; Bono, A.; Bartoli, C.; Lualdi, M.; Tragni, G.; Colombo, A.; Marchesini, R. Automated melanoma detection with a novel multispectral imaging system: Results of a prospective study. Phys. Med. Biol. 2005, 50, 1675–1687. [Google Scholar] [CrossRef] [PubMed]

- Åberg, P.; Birgersson, U.; Elsner, P.; Mohr, P.; Ollmar, S. Electrical impedance spectroscopy and the diagnostic accuracy for malignant melanoma. Exp. Dermatol. 2011, 20, 648–652. [Google Scholar] [CrossRef] [PubMed]

- Giotis, I.; Molders, N.; Land, S.; Biehl, M.; Jonkman, M.F.; Petkov, N. MED-NODE: A computer-assisted melanoma diagnosis system using non-dermoscopic images. Expert Syst. Appl. 2015, 42, 6578–6585. [Google Scholar] [CrossRef]

- Eslava, J.; Druzgalski, C. Differential Feature Space in Mean Shift Clustering for Automated Melanoma Assessment. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering, Toronto, Canada, 7–12 June 2015; Jaffray, D.A., Ed.; Springer International Publishing: Cham, Switzerland, 2015; Volume 51, pp. 1401–1404, ISBN 978-3-319-19386-1. [Google Scholar]

- Karami, N.; Esteki, A. Automated Diagnosis of Melanoma Based on Nonlinear Complexity Features. In Proceedings of the 5th Kuala Lumpur International Conference on Biomedical Engineering 2011, Kuala Lumpur, Malaysia, 20–23 June 2011; Osman, N.A.A., Abas, W.A.B.W., Wahab, A.K.A., Ting, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 270–274. [Google Scholar]

- Tabatabaie, K.; Esteki, A. Independent Component Analysis as an Effective Tool for Automated Diagnosis of Melanoma. In Proceedings of the 2008 Cairo International Biomedical Engineering Conference, Cairo, Egypt, 18–20 December 2008; pp. 1–4. [Google Scholar] [CrossRef]

- Gautam, D.; Ahmed, M.; Meena, Y.K.; Ul Haq, A. Machine learning–based diagnosis of melanoma using macro images. Int. J. Numer. Method. Biomed. Eng. 2018, 34, e2953. [Google Scholar] [CrossRef] [PubMed]

- Przystalski, K. Decision Support System for Skin Cancer Diagnosis. In Proceedings of the The Ninth International Symposium on Operations Research and Its Applications (ISORA’ 10), Chengdu, China, 19–23 August 2010; pp. 406–413. [Google Scholar]

- Amelard, R.; Glaister, J.; Wong, A.; Clausi, D.A. High-Level Intuitive Features (HLIFs) for Intuitive Skin Lesion Description. IEEE Trans. Biomed. Eng. 2015, 62, 820–831. [Google Scholar] [CrossRef]

- Liu, Z.; Sun, J.; Smith, M.; Smith, L.; Warr, R. Incorporating clinical metadata with digital image features for automated identification of cutaneous melanoma. Br. J. Dermatol. 2013, 169, 1034–1040. [Google Scholar] [CrossRef]

- Sanchez, I.; Agaian, S. Computer aided diagnosis of lesions extracted from large skin surfaces. In Proceedings of the 2012 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Seoul, Republic of Korea, 14–17 October 2012; pp. 2879–2884. [Google Scholar] [CrossRef]

- Oliveira, R.B.; Marranghello, N.; Pereira, A.S.; Tavares, J.M.R.S. A computational approach for detecting pigmented skin lesions in macroscopic images. Expert Syst. Appl. 2016, 61, 53–63. [Google Scholar] [CrossRef]

- Jafari, M.H.; Samavi, S.; Karimi, N.; Soroushmehr, S.M.R.; Ward, K.; Najarian, K. Automatic detection of melanoma using broad extraction of features from digital images. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1357–1360. [Google Scholar] [CrossRef]

- Spyridonos, P.; Gaitanis, G.; Likas, A.; Bassukas, I.D. Automatic discrimination of actinic keratoses from clinical photographs. Comput. Biol. Med. 2017, 88, 50–59. [Google Scholar] [CrossRef]

- Abbes, W.; Sellami, D.; Control, A.; Departmement, E.E. High-Level features for automatic skin lesions neural network based classification. In Proceedings of the 2016 International Image Processing, Applications and Systems (IPAS), Hammamet, Tunisia, 5–7 November 2016; pp. 1–7. [Google Scholar]

- Jafari, M.H.; Samavi, S.; Soroushmehr, S.M.R.; Mohaghegh, H.; Karimi, N.; Najarian, K. Set of descriptors for skin cancer diagnosis using non-dermoscopic color images. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 2638–2642. [Google Scholar] [CrossRef]

- Cavalcanti, P.G.; Scharcanski, J. Automated prescreening of pigmented skin lesions using standard cameras. Comput. Med. Imaging Graph. 2011, 35, 481–491. [Google Scholar] [CrossRef] [PubMed]

- Choudhury, D.; Naug, A.; Ghosh, S. Texture and color feature based WLS framework aided skin cancer classification using MSVM and ELM. In Proceedings of the 2015 Annual IEEE India Conference (INDICON), New Delhi, India, 17–20 December 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Takruri, M.; Rashad, M.W.; Attia, H. Multi-classifier decision fusion for enhancing melanoma recognition accuracy. In Proceedings of the 2016 5th International Conference on Electronic Devices, Systems and Applications (ICEDSA), Ras Al Khaimah, United Arab Emirates, 6–8 December 2016; pp. 1–5. [Google Scholar] [CrossRef]

- Magalhaes, C.; Vardasca, R.; Rebelo, M.; Valenca-Filipe, R.; Ribeiro, M.; Mendes, J. Distinguishing melanocytic nevi from melanomas using static and dynamic infrared thermal imaging. J. Eur. Acad. Dermatol. Venereol. 2019, 33, 1700–1705. [Google Scholar] [CrossRef] [PubMed]

- Magalhaes, C.; Tavares, J.M.R.S.; Mendes, J.; Vardasca, R. Comparison of machine learning strategies for infrared thermography of skin cancer. Biomed. Signal Process. Control 2021, 69, 102872. [Google Scholar] [CrossRef]

- Kia, S.; Setayeshi, S.; Shamsaei, M.; Kia, M. Computer-aided diagnosis (CAD) of the skin disease based on an intelligent classification of sonogram using neural network. Neural Comput. Appl. 2013, 22, 1049–1062. [Google Scholar] [CrossRef]

- Ding, Y.; John, N.W.; Smith, L.; Sun, J.; Smith, M. Combination of 3D skin surface texture features and 2D ABCD features for improved melanoma diagnosis. Med. Biol. Eng. Comput. 2015, 53, 961–974. [Google Scholar] [CrossRef]

- Faita, F.; Oranges, T.; Di Lascio, N.; Ciompi, F.; Vitali, S.; Aringhieri, G.; Janowska, A.; Romanelli, M.; Dini, V. Ultra-high-frequency ultrasound and machine learning approaches for the differential diagnosis of melanocytic lesions. Exp. Dermatol. 2022, 31, 94–98. [Google Scholar] [CrossRef]

| Imaging Modality | Reference of Records |

|---|---|

| Dermoscopy | [14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164] |

| Microscopy | [165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182] |

| Spectroscopy | [183,184,185,186,187,188,189,190,191,192,193,194] |

| Macroscopy | [195,196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211] |

| Other imaging modalities | [212,213,214,215,216] |

| Main Findings | Example of Records |

|---|---|

| CNNs are a current tendency | [100,101,102,107,108,109,110,111,112,120] |

| Ensembles are sometimes preferred for better ACC | [39,40,41,42,43,44,45,175,176,194,195] |

| Different learners can be tested to achieve best performance | [23,39,151,156,158,160,161,170,176,190,200] |

| Freely available databases are of extreme importance for comparison of works | [25,26,27,30,31,32,34,36,37,51,53,54,55,81,89,111,112,120,121,122,125,150,159,162,192,193] |

| Some authors still prefer the use of licensed software | [22,25,26,28,38,54,111,125,128,165,183,191,196,200,208,215] |

| Optimization of feature extraction stage is key | [23,39,45,168,174,195,201,204,209,211] |

| Some studies lack reports of performance metrics | [27,39,40,46,100,112,174,184,200,210] |

| Good balance between SN and SP is necessary | [50,55,121,159,194,212,214] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vardasca, R.; Mendes, J.G.; Magalhaes, C. Skin Cancer Image Classification Using Artificial Intelligence Strategies: A Systematic Review. J. Imaging 2024, 10, 265. https://doi.org/10.3390/jimaging10110265

Vardasca R, Mendes JG, Magalhaes C. Skin Cancer Image Classification Using Artificial Intelligence Strategies: A Systematic Review. Journal of Imaging. 2024; 10(11):265. https://doi.org/10.3390/jimaging10110265

Chicago/Turabian StyleVardasca, Ricardo, Joaquim Gabriel Mendes, and Carolina Magalhaes. 2024. "Skin Cancer Image Classification Using Artificial Intelligence Strategies: A Systematic Review" Journal of Imaging 10, no. 11: 265. https://doi.org/10.3390/jimaging10110265

APA StyleVardasca, R., Mendes, J. G., & Magalhaes, C. (2024). Skin Cancer Image Classification Using Artificial Intelligence Strategies: A Systematic Review. Journal of Imaging, 10(11), 265. https://doi.org/10.3390/jimaging10110265