1. Introduction

The global impact of the COVID-19 pandemic has underscored the importance of early and accurate detection in safeguarding public health, mitigating economic repercussions, and ensuring the long-term well-being of communities worldwide [1,2,3]. Early detection allows diseases to be diagnosed at a stage when they are more likely to respond to treatment, reduces morbidity and mortality, prevents complications, and lowers healthcare costs. Timely detection not only informs effective patient care but also helps improve long-term survival rates, which motivates the search for new diagnostic methods. It is therefore important to set up systems capable of facilitating the early detection of disease to support public healthcare management.

Over the years, medical imaging has emerged as an indispensable tool for the early detection, monitoring, and post-treatment follow-up of diseases. From the inception of computer-aided diagnostic systems in the early 1980s to the contemporary era of advanced artificial intelligence (AI) applications, the trajectory of medical image analysis has evolved significantly. While early approaches focused on sequential processing and mathematical modeling, the advent of AI, inspired by the human brain’s learning mechanisms, has ushered in a new era of sophisticated diagnostic systems [4,5].

Machine learning, a cornerstone of this transformative paradigm, empowers software applications to enhance predictive accuracy without explicit programming. At the forefront of global health concerns, the ongoing COVID-19 pandemic, caused by the severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2), demands innovative solutions for effective patient screening. Despite the widespread adoption of the reverse transcription polymerase chain reaction test, its limited positivity rate and inability to differentiate SARS-CoV-2 from other respiratory infections underscore the urgent need for alternative screening methods [6,7,8].

Artificial intelligence models for rapid disease detection are systems that employ machine learning, image analysis, or natural language processing techniques to identify individuals infected with SARS-CoV-2, the virus responsible for COVID-19. These models can rely on various types of data, such as radiological images, antigen tests, reported symptoms, or genomic data, offering speed, accuracy, user-friendliness, and cost reduction. They play a crucial role in screening suspected cases, guiding patients to appropriate care, monitoring disease progression, and controlling virus spread.

However, detecting and accurately distinguishing between different strains of SARS-CoV-2 poses a formidable challenge in the landscape of disease detection. The evolving nature of the virus, coupled with its propensity for genetic mutations, introduces complexities that demand innovative solutions. One crucial hurdle lies in the scarcity of sufficient, reliable, and representative data for training and validating models, especially for emerging virus variants. These variants may necessitate adaptations or updates to existing models, highlighting the need for continuous vigilance and adjustment. Additionally, the variability in model performance across diverse contexts, populations, environments, and usage protocols further accentuates the intricacy of the task. The ethical, legal, and social dimensions of deploying these models also contribute to the multifaceted challenges, encompassing issues of privacy, data protection, responsibility, transparency, explainability, security, reliability, and trust. The gravity of these challenges necessitates a comprehensive and principled approach to model development and usage, emphasizing the urgency of addressing these intricacies for the advancement of disease detection strategies.

To tackle these challenges, adherence to principles and best practices for the development and use of artificial intelligence models for rapid disease detection is crucial. Guidelines proposed by organizations like the World Health Organization (WHO) [9], the Organisation for Economic Co-operation and Development (OECD), and the European Commission provide valuable insights.

Furthermore, fostering collaboration and data, knowledge, and experience sharing among stakeholders involved in the fight against COVID-19, including researchers, healthcare professionals, policymakers, industry professionals, and citizens, is essential. This collaborative approach can significantly contribute to overcoming the challenges posed by the rapidly evolving viral threat and enhancing the effectiveness of AI models in disease detection.

The key contributions of this paper are the proposal and validation of a model that combines the strengths of existing architectures with transfer learning. Experimental results demonstrate higher predictive accuracy than the benchmark models. By advancing the state of the art in COVID-19 detection, this research contributes to global efforts to improve patient care pathways and long-term survival rates. The Xception architecture and transfer learning are introduced in Section 2 before the proposed deep learning model is described.

The remainder of this paper is organized as follows. Section 2, “Materials and Methods”, presents the methodology used for our innovative deep learning model for automated COVID-19 detection, and the dataset and experimental setup are also outlined. Section 3, “Results”, presents the detailed findings from the validation experiments. In Section 4, “Discussion”, the implications, challenges, and limitations of the results are comprehensively discussed, along with a comparison with related works. Section 5, “Conclusions”, concludes the paper with a synthesis of the key insights and future directions for the proposed model.

2. Materials and Methods

2.1. Xception Model

Xception, short for “Extreme Inception”, is a state-of-the-art deep learning architecture proposed by François Chollet in 2017 [10]. It represents a significant advancement in convolutional neural network (CNN) design, particularly tailored for image classification tasks [11]. At its core, Xception embodies a fundamental departure from conventional CNN architectures, introducing a novel approach to convolution operations.

Traditional CNNs rely on standard convolutional layers to extract features from input images. These layers apply a set of learnable filters across the entire input volume, producing feature maps that capture spatial patterns. However, this approach often leads to an excessive number of parameters, resulting in computational inefficiency and increased risk of overfitting.

In contrast, Xception introduces depth-wise separable convolutions, a concept borrowed from the Inception family of architectures [12]. Depth-wise separable convolutions decompose the standard convolution operation into two distinct stages: depth-wise convolution and point-wise convolution.
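To make the saving concrete, the weight counts of the two formulations can be compared directly. The following is a minimal sketch: the function names and the layer size (3 × 3 kernel, 128 input channels, 256 output channels) are illustrative assumptions rather than values taken from this work, and bias terms are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k, c_in, c_out):
    """Weights in a depth-wise separable convolution (bias ignored)."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes the channels
    return depthwise + pointwise


# Hypothetical layer size, chosen only for illustration.
k, c_in, c_out = 3, 128, 256
standard = standard_conv_params(k, c_in, c_out)
separable = separable_conv_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 2))
# prints: 294912 33920 8.69
```

In general, the ratio of separable to standard weights is 1/c_out + 1/k², so the saving grows with the kernel size and the number of output channels.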

Let $F$ represent the input feature map, $K$ denote the kernel (or filter), and $S$ signify the stride length. The depth-wise convolution operation is defined as:

$$Y_{i,j,k} = \sum_{m,n} F_{(i \cdot S + m),\,(j \cdot S + n),\,k} \cdot K_{m,n,k} \qquad (1)$$

where $Y_{i,j,k}$ represents the output feature map and $K_{m,n,k}$ denotes the corresponding element of the kernel. By performing convolutions independently across each channel of the input feature map, depth-wise convolutions significantly reduce computational complexity while preserving spatial information.

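Following the depth-wise convolution, point-wise convolutions are applied to integrate information across channels. This operation is expressed as:

$$Z_{i,j} = \sum_{k} Y_{i,j,k} \cdot P_{k} \qquad (2)$$

where $Y_{i,j,k}$ is the depth-wise output at position $(i, j)$ in channel $k$, $Z_{i,j}$ is the resulting value of one output channel, and $P_{k}$ represents the $k$-th element of the point-wise kernel. By incorporating both depth-wise and point-wise convolutions, Xception achieves a remarkable balance between computational efficiency and expressive power.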
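The two stages can be illustrated with a small pure-Python sketch. It is written for readability rather than speed and assumes no padding; the function names, the nested-list layout `[row][column][channel]`, and the point-wise kernel `P[k][l]` with one column per output channel `l` are assumptions made for this illustration.

```python
def depthwise_conv(F, K, S=1):
    """Filter each channel k of F with its own kernel slice K[.][.][k].

    Y[i][j][k] = sum over m, n of F[i*S + m][j*S + n][k] * K[m][n][k]
    """
    height, width, channels = len(F), len(F[0]), len(F[0][0])
    kh, kw = len(K), len(K[0])
    out_h = (height - kh) // S + 1
    out_w = (width - kw) // S + 1
    Y = [[[0.0] * channels for _ in range(out_w)] for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            for k in range(channels):
                Y[i][j][k] = sum(
                    F[i * S + m][j * S + n][k] * K[m][n][k]
                    for m in range(kh) for n in range(kw))
    return Y


def pointwise_conv(Y, P):
    """Mix channels at every position: Z[i][j][l] = sum_k Y[i][j][k] * P[k][l]."""
    c_out = len(P[0])
    return [[[sum(y[k] * P[k][l] for k in range(len(y))) for l in range(c_out)]
             for y in row] for row in Y]


# Toy input: 3 x 3 image with 2 channels (values 0..8 and a constant 1.0).
F = [[[float(3 * i + j), 1.0] for j in range(3)] for i in range(3)]
K = [[[1.0, 1.0] for _ in range(2)] for _ in range(2)]  # 2 x 2 kernel per channel
P = [[1.0], [0.5]]                                      # 2 channels -> 1 channel

Y = depthwise_conv(F, K)
Z = pointwise_conv(Y, P)
print(Y[0][0], Z[1][1])  # prints: [8.0, 4.0] [26.0]
```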

In addition to its architectural innovations, Xception employs other techniques, such as batch normalization and ReLU activations, to enhance model stability and convergence speed [13]. These elements collectively contribute to Xception’s exceptional performance in image classification tasks, making it a preferred choice for various applications, including medical image analysis.

In the subsequent sections, we delve deeper into the integration of Xception within our proposed deep learning paradigm, elucidating its role in revolutionizing automated disease detection from medical imaging data.

2.2. Transfer Learning

Transfer learning is a powerful concept in machine learning that leverages knowledge gained from one task to improve performance on a different but related task [14]. In the context of deep learning and neural networks, transfer learning involves using pre-trained models on large datasets and fine-tuning them for specific tasks. This approach is particularly beneficial when the target task has limited labeled data.

Transfer learning can be conceptualized as follows: let $S$ denote the source domain, $T$ denote the target domain, $P_S$ denote the probability distribution of the source domain, $P_T$ denote the probability distribution of the target domain, $X$ denote the input space, and $Y$ denote the output space. The model’s objective is to learn a mapping $f: X \rightarrow Y$ that performs well on $T$ based on the knowledge acquired from $S$.

Transfer learning encompasses various strategies, such as feature extraction and fine-tuning. Feature extraction involves using the pre-trained model’s early layers as generic feature extractors and appending task-specific layers for the target task. Fine-tuning, on the other hand, refines the entire model on the target task by adjusting the weights of all layers while retaining the knowledge gained from the source task.
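The difference between the two strategies comes down to which weights the optimizer is allowed to update. The toy sketch below uses a two-weight model, `y_hat = head_w * (base_w * x)`, where `base_w` stands in for the pre-trained layers and `head_w` for the task-specific layer. The model, the names, and the numbers are hypothetical and serve only to illustrate the idea.

```python
def sgd_step(base_w, head_w, x, y, lr=0.01, freeze_base=True):
    """One gradient step on the squared error of y_hat = head_w * (base_w * x).

    freeze_base=True  -> feature extraction: only head_w is updated.
    freeze_base=False -> fine-tuning: base_w and head_w are both updated.
    """
    feature = base_w * x                  # output of the "pre-trained" part
    error = head_w * feature - y          # prediction error
    grad_head = 2 * error * feature       # d(error^2) / d(head_w)
    grad_base = 2 * error * head_w * x    # d(error^2) / d(base_w)
    new_head = head_w - lr * grad_head
    new_base = base_w if freeze_base else base_w - lr * grad_base
    return new_base, new_head


# Hypothetical pre-trained weight 2.0, fresh head 1.0, one target sample.
frozen = sgd_step(2.0, 1.0, x=1.0, y=3.0, freeze_base=True)
tuned = sgd_step(2.0, 1.0, x=1.0, y=3.0, freeze_base=False)
print(frozen)  # base weight is unchanged, head weight moves
print(tuned)   # both weights move
```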

2.3. Transfer Learning Framework: Feature Extraction, Encoding, Decoding, and Feature Generation

In the transfer learning phase, we introduce various layers, including a feature extraction module that enables us to address the expressiveness issue resulting from different locations and times of image capture. This module precedes encoding and decision making for effective preprocessing. The methodology encompasses a feature-encoding module, a decoding module, and a feature-generation segment. This approach is both sequential and parallel, enhancing the overall efficiency of the process.

In our proposed model for COVID-19 detection, transfer learning plays a pivotal role in overcoming the challenges associated with the evolving nature of the SARS-CoV-2 virus. By leveraging pre-existing knowledge from a large dataset, the model can effectively learn relevant features for distinguishing between different strains, enhancing its accuracy and robustness in the face of emerging variants. The subsequent sections delve into the specifics of our novel model and the experimental validation conducted to demonstrate its superior predictive accuracy compared to established models.

To address this problem, we introduce a deep learning approach for automated COVID-19 detection from X-ray thorax images. The proposed model integrates the Xception architecture for pattern recognition, with the aim of improving diagnostic accuracy in healthcare.

2.4. Model Architecture

The architecture of our proposed model is illustrated in Figure 1. It combines feature extraction, transfer learning, and classification, and is organized into distinct blocks, each contributing to the overall efficacy. The model is trained on existing data and then used to predict outcomes for new individuals.

Our model is subdivided into three blocks. The first contains the base Xception model. The second contains a “GlobalAveragePooling2D” layer followed by four “BatchNormalization” layers, which bring the data to a common scale and thereby make the neural network easier to train. Normalization, therefore, consists of formatting the input data to facilitate the machine learning process. These layers are separated by a dropout layer, with a rate of 0.5 to reduce the risk of overfitting, and a fully connected (dense) layer with 256 units. The third and final block consists of a dense layer with a “softmax” activation function.
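The three blocks can be sketched in Keras as follows. This is one possible reading of the description, not the authors' exact implementation: the ordering of the BatchNormalization, Dropout, and Dense layers, the ReLU activation of the 256-unit dense layers, the 299 × 299 input size, the three output classes (COVID-19, normal, pneumonia), and the `build_model` function with its parameters are all assumptions.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def build_model(input_shape=(299, 299, 3), num_classes=3,
                weights="imagenet", freeze_base=True):
    """Assemble an Xception backbone with a small classification head."""
    # Block 1: Xception without its original ImageNet classifier.
    base = tf.keras.applications.Xception(
        include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = not freeze_base   # frozen backbone = feature extraction

    inputs = layers.Input(shape=input_shape)
    x = base(inputs)

    # Block 2: pooling, then normalization / dropout / dense stages.
    x = layers.GlobalAveragePooling2D()(x)
    for _ in range(3):
        x = layers.BatchNormalization()(x)
        x = layers.Dropout(0.5)(x)                    # rate given in the text
        x = layers.Dense(256, activation="relu")(x)   # activation is assumed
    x = layers.BatchNormalization()(x)                # fourth normalization layer

    # Block 3: softmax classifier over the classes.
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return models.Model(inputs, outputs)
```

Calling `build_model(freeze_base=False)` would instead leave the backbone trainable, which corresponds to fine-tuning the whole network.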

2.5. Layer Model

Considering the combination of two functions $f$ and $g$ to produce a new function $f * g$, we define the convolution model in Equation (3):

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau \qquad (3)$$

To this, we add its decomposition into two distinct stages: a depth-wise convolution, which applies a spatial filter of size $k \times k$ to each input channel, and a point-wise convolution, which then applies a $1 \times 1$ filter across all output channels of the depth-wise stage, allowing the number of channels to be reduced or increased. Together, these two stages form the depth-wise separable convolution. An Xception block is necessary in our approach, as it constitutes the basic unit of the Xception architecture. To this end, we consider a succession of depth-wise separable convolution layers, followed by a batch normalization layer and an activation function, as illustrated by the layered model shown in Figure 2.

To add transfer learning, let us consider any task denoted by $T$ as an image classification function defined by Equation (4), as follows:

$$T: X \rightarrow Y \qquad (4)$$

where $X$ is the input space and $Y$ is the output space.

To predict the images, we then define $f$, a function that associates a numerical value with each element of $X$ or $Y$. To have a function that approximates the function to be learned, using adjustable parameters, we denote $M$ as an image classification model such that $M(x)$ defines the model prediction for image $x$.

Considering that the source and target tasks share certain common features, which can be captured by the model, we then define two subsets as follows:

The features are given by and the parameters by .

Here, $M_S$ is the source model associated with the source task $T_S$, and $M_T$ is the target model used by the target task $T_T$.

Figure 2 illustrates our proposed layer model, showcasing the intricate composition that underlies the model’s ability to navigate the complexities of X-ray thorax images. The layer model emphasizes the interplay between feature extraction, transfer learning, and classification layers, providing a comprehensive insight into the neural architecture’s depth and sophistication.

2.6. Integration of Xception Model: Rationale and Advantages

The decision to incorporate the Xception network into our model stems from several advantages. Xception builds on the interpretation of Inception modules in convolutional neural networks as an intermediate step between conventional convolution and the depth-wise separable convolution operation, and it replaces those modules with depth-wise separable convolutions. This characteristic gives our model the adaptability and expressive capacity needed to capture the nuances of COVID-19 patterns in X-ray thorax images.

Our proposed Xception-Enhanced Transfer Learning Model represents a pioneering stride toward revolutionizing the diagnostic landscape in healthcare. By harnessing the strengths of Xception and seamlessly integrating them into our deep learning paradigm, we anticipate a paradigm shift in the accuracy and efficiency of automated COVID-19 detection. The subsequent sections delve into the experimental validation, results, and discussions, providing a comprehensive narrative of the model’s performance and its potential impact on the broader healthcare domain.

Furthermore, while numerous image analysis methods, such as the YOLO model, demonstrate high performance in computer vision, our study opts for the Xception model because of its strong performance in the medical domain. Xception is built on depth-wise separable convolutions, which reduce the number of parameters and operations per layer.

Xception transforms the original Inception-V3 block by expanding it and replacing various convolution operations (1 × 1, 5 × 5, 3 × 3) with a single 3 × 3 convolution followed by a 1 × 1 convolution. This modification aims to regulate computational complexity. Additionally, unlike Inception, which applies ReLU non-linearities after its convolution operations, the depth-wise separable convolutions in Xception are generally implemented without an intermediate non-linearity between the depth-wise and point-wise steps.

The choice of Xception for our methodology is grounded in its prowess in medical applications and its innovative architectural modifications, contributing to improved computational efficiency without compromising performance.

2.7. Tools and Technologies Used

The experiments were run on an HP ProBook computer with a 64-bit Windows 10 operating system, an Intel(R) Core i7-9700F CPU @ 3.00 GHz, and 16 GB of RAM. The software toolkit used in the experiment included TensorFlow 1.5.3, Keras 2.15.0, Scikit-learn 1.2.2, Scikit-image 0.19.3, Python 3.11.6, and Flask 3.0.2.

2.8. Dataset

To evaluate our model, we utilized X-ray images from COVID-19 patients sourced from multiple datasets, including hospital data related to the COVID-19 outbreak [

15,

16] and Kaggle data [

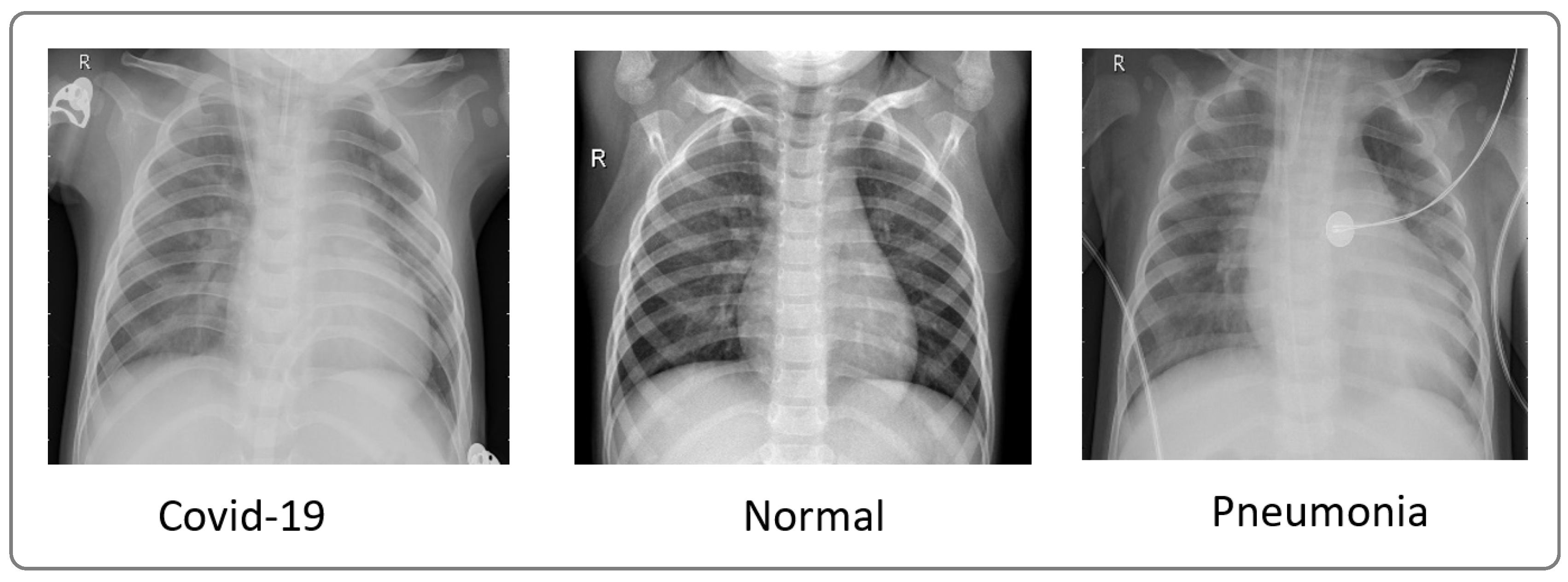

16]. We considered two datasets. The first one comprised 4050 images, with 3000 images for training and 1050 images for testing. The second one comprised 6378 images, with 4878 images for training and 1500 images for testing. These datasets included images from confirmed COVID-19 patients, normal individuals, and pneumonia patients.

Figure 3 showcases a sample of X-ray images from these datasets.

Table 1 displays the class distribution of the 3000 training images of the first dataset, which are split into 2400 images for training and 600 images for validation.

Table 2 presents the class distribution of the 4878 training images of the second dataset, which are split into 3902 images for training and 976 images for validation.
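The validation counts reported above match an 80/20 hold-out split of each training set. That ratio is inferred from the numbers and is not stated explicitly in the text. The short check below reproduces the counts; `split_counts` and `val_fraction` are hypothetical names used only for this illustration.

```python
def split_counts(n_images, val_fraction=0.2):
    """Return (n_train, n_val) for a hold-out split of n_images."""
    n_val = round(n_images * val_fraction)
    return n_images - n_val, n_val


print(split_counts(3000))  # prints: (2400, 600)
print(split_counts(4878))  # prints: (3902, 976)

# The training and test subsets also add up to the stated dataset sizes.
print(3000 + 1050, 4878 + 1500)  # prints: 4050 6378
```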

5. Conclusions

In this study, we introduced the Xception-Enhanced Transfer Learning Model as a novel approach for precise COVID-19 detection from X-ray images. Leveraging the power of transfer learning, our model has demonstrated exceptional performance across various metrics, surpassing established benchmarks and setting a new standard in diagnostic accuracy.

Our model’s success is evidenced by its outstanding performance compared to other models, as summarized in Table 5. With a training accuracy of 96% and a validation accuracy of 97%, our model consistently outperforms ResNet50, VGG-16, and even the baseline Xception model. Furthermore, our model exhibits impressive recall and precision rates of 97% and 98.8%, respectively, highlighting its robustness in correctly identifying COVID-19 cases while minimizing false positives.
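For reference, precision and recall are obtained from the counts of true positives, false positives, and false negatives for a given class. The sketch below uses made-up counts purely to show the arithmetic; they are not results from this study, and the function names are illustrative.

```python
def precision(tp, fp):
    """Share of predicted positives that are truly positive."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of actual positives that the model detects."""
    return tp / (tp + fn)


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


# Hypothetical confusion counts for one class (not taken from the paper).
tp, fp, fn = 90, 10, 30
p = precision(tp, fp)   # 90 / 100
r = recall(tp, fn)      # 90 / 120
print(p, r, round(f1_score(p, r), 3))  # prints: 0.9 0.75 0.818
```

A high precision means few false positives, while a high recall means few missed cases, which is why both are reported alongside accuracy.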

By harnessing transfer learning, our approach not only achieves superior accuracy but also addresses key challenges in model development. The utilization of pre-trained models, such as Xception, significantly reduces the need for extensive labeled data, making our model both resource-efficient and scalable for deployment in real-world settings. Moreover, the integration of transfer learning enhances our model’s adaptability to diverse datasets and medical imaging tasks, paving the way for future advancements in diagnostic methodologies.

Our findings underscore the transformative potential of advanced machine learning techniques in combating global health crises. The Xception-Enhanced Transfer Learning Model represents a paradigm shift in COVID-19 detection, offering enhanced diagnostic capabilities that can significantly impact patient care pathways. Beyond its immediate implications for COVID-19 diagnosis, our model lays the foundation for the development of innovative diagnostic tools in healthcare, promising improved outcomes and better management of infectious diseases.