Image-Based 3D Reconstruction in Laparoscopy: A Review Focusing on the Quantitative Evaluation by Applying the Reconstruction Error

Abstract

1. Introduction

1.1. Image-Based 3D Reconstruction Techniques Used in Laparoscopy

1.2. Related Work

1.3. Research of Image-Based 3D Reconstruction in Laparoscopy since 2015

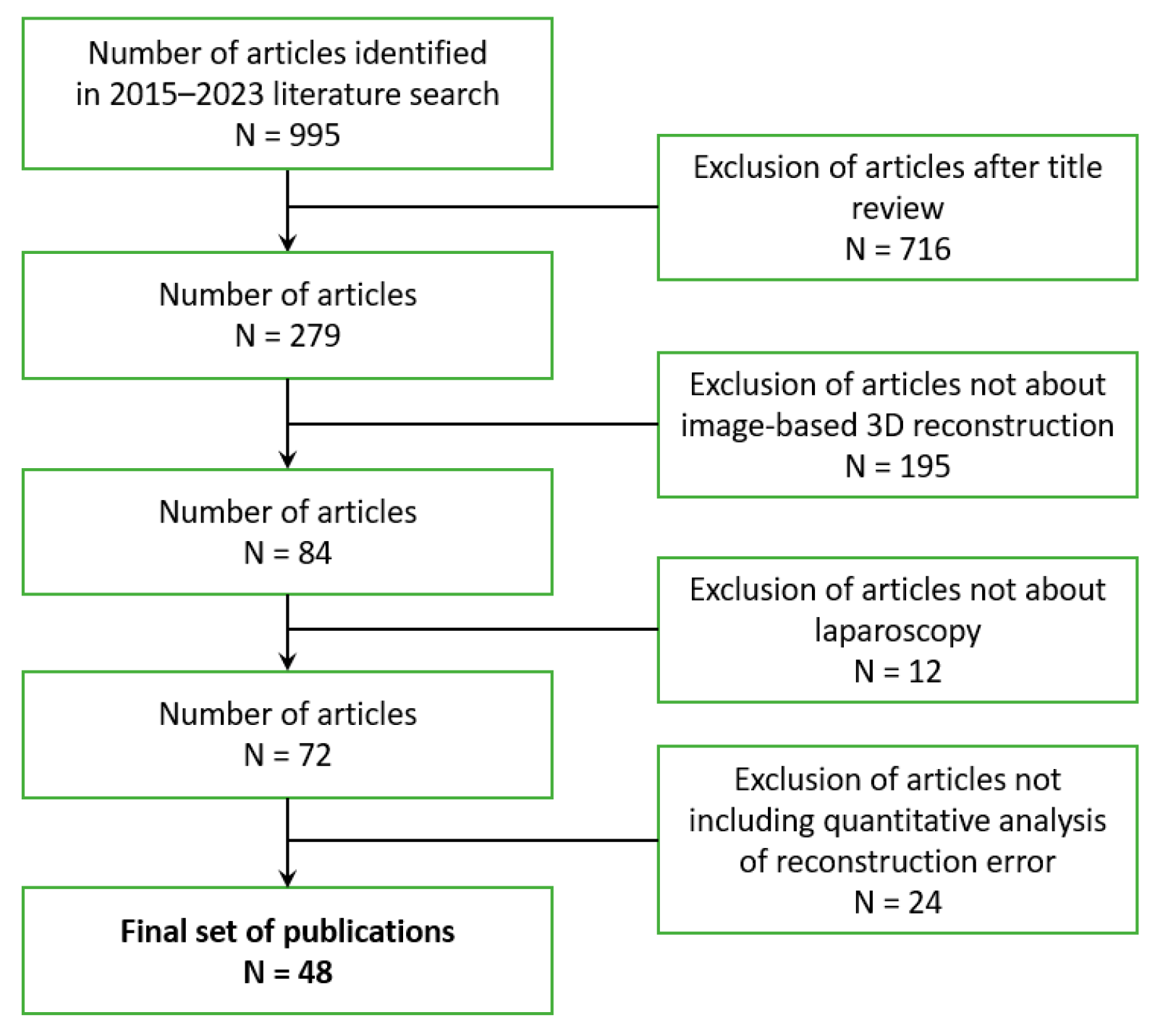

2. Methods

2.1. Search Strategy

2.2. Metrics for Quantitative Evaluation of 3D Reconstructions

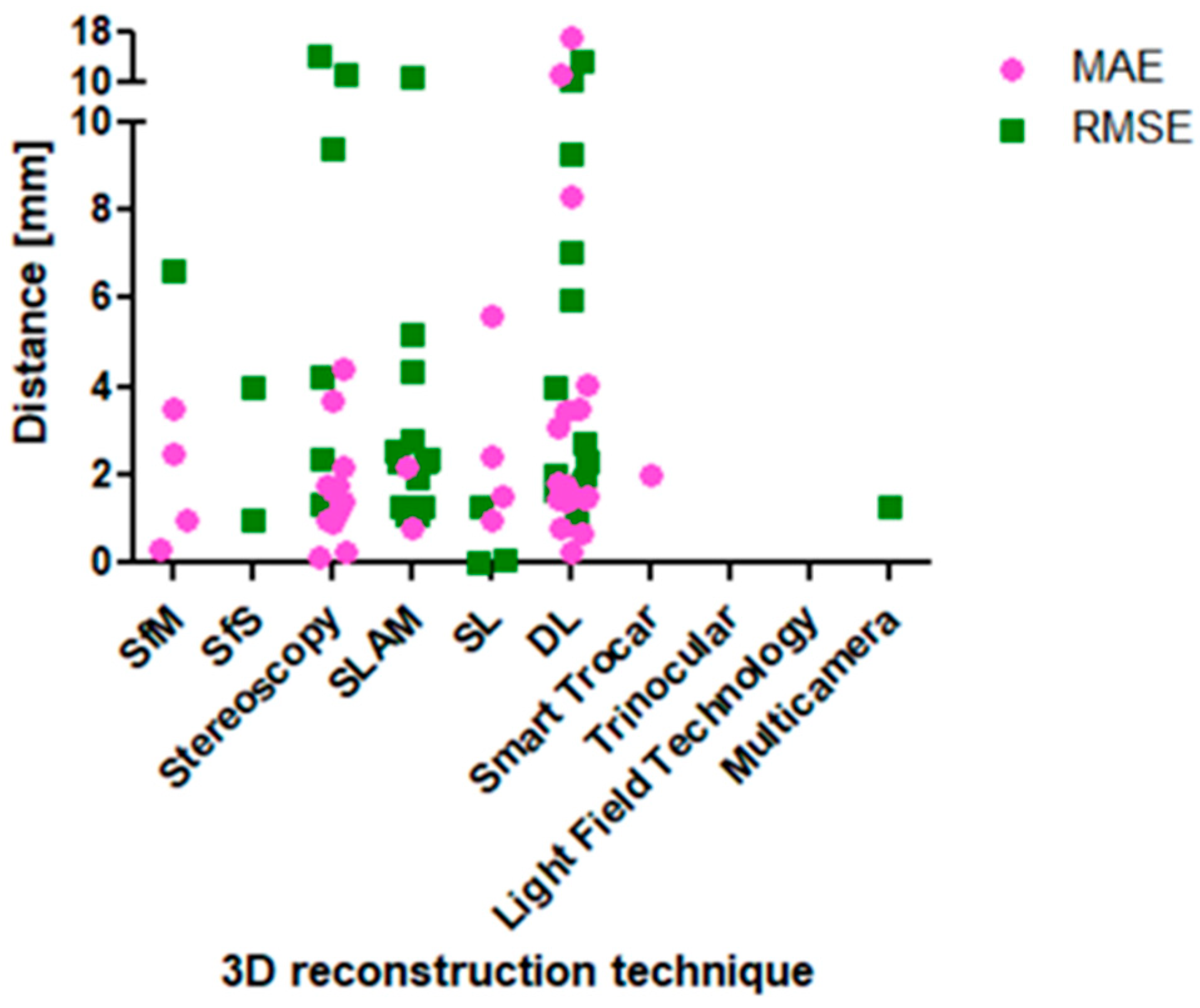

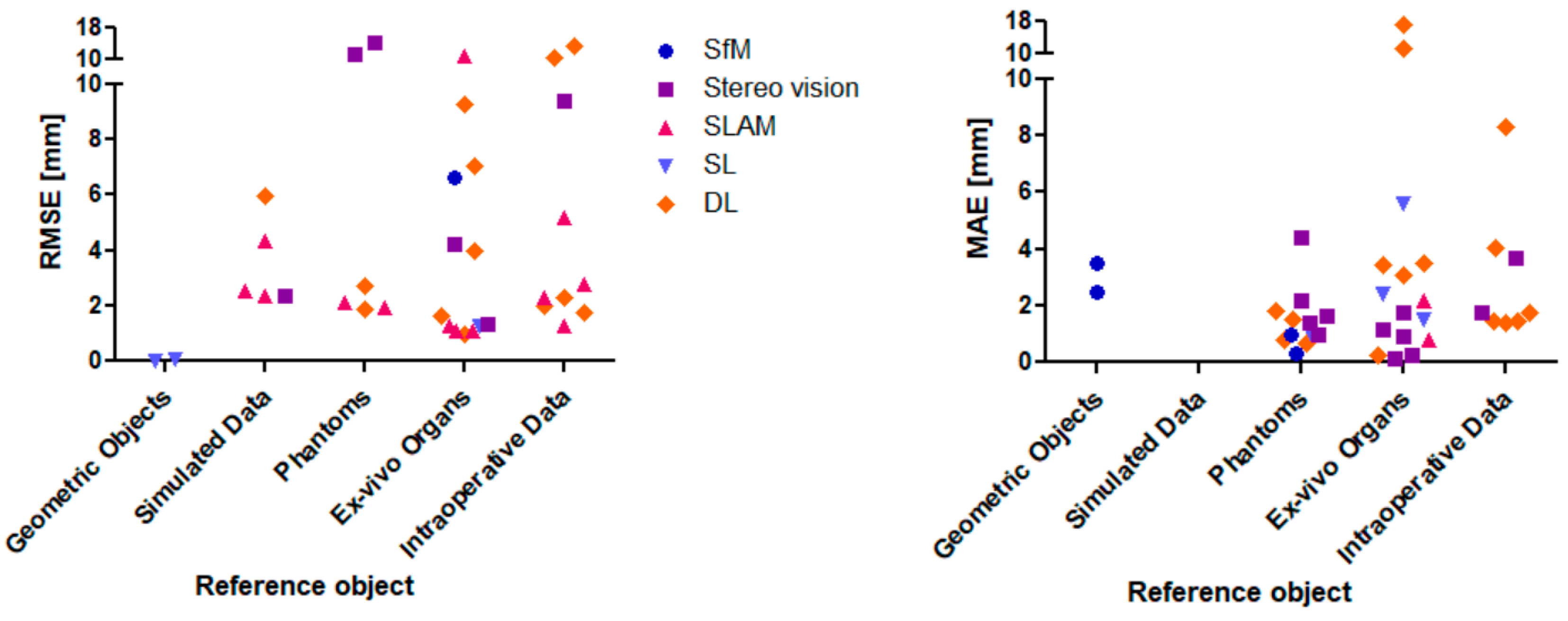

3. Results

4. Discussion

- Reference object (geometric objects vs. simulated data vs. ex/in vivo data).

- Method of ground truth acquisition (CT data vs. laser scanner vs. manual labelling).

- Method of camera localization (known from external sensor vs. image-based estimation).

- Number of frames (single shot vs. multiple frames/multi view).

- Image resolution (the higher the resolution, the more 3D points can be reconstructed).

- Amount of training data (relevant for DL approaches).

- Implemented algorithms (e.g., SIFT vs. SURF, ORB-SLAM vs. VISLAM).

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jaffray, B. Minimally Invasive Surgery. Arch. Dis. Child. 2005, 90, 537–542. [Google Scholar] [CrossRef] [PubMed]

- Sugarbaker, P.H. Laparoscopy in the Diagnosis and Treatment of Peritoneal Metastases. Ann. Laparosc. Endosc. Surg. 2019, 4, 42. [Google Scholar] [CrossRef]

- Agarwal, S.K.; Chapron, C.; Giudice, L.C.; Laufer, M.R.; Leyland, N.; Missmer, S.A.; Singh, S.S.; Taylor, H.S. Clinical Diagnosis of Endometriosis: A Call to Action. Am. J. Obstet. Gynecol. 2019, 220, 354.e1–354.e12. [Google Scholar] [CrossRef] [PubMed]

- Teatini, A.; Wang, C.; Palomar, R.; Cheikh, F.A.; Beghdadi, A.; Edwin, B.; Elle, O.J. Validation of Stereo Vision Based Liver Surface Reconstruction for Image Guided Surgery. In Proceedings of the 2018 Colour and Visual Computing Symposium (CVCS), Gjovik, Norway, 19–20 September 2018; IEEE: New York City, NY, USA, 2018; pp. 1–6. [Google Scholar]

- Saeidi, H.; Opfermann, J.D.; Kam, M.; Wei, S.; Leonard, S.; Hsieh, M.H.; Kang, J.U.; Krieger, A. Autonomous Robotic Laparoscopic Surgery for Intestinal Anastomosis. Sci. Robot. 2022, 7, eabj2908. [Google Scholar] [CrossRef] [PubMed]

- Armstrong, K.; Spalazzi, J.; Lazzaretti, S.; Cook, M.; Trivedi, A. Clinical Utility of Senhance AI Inguinal Hernia. Available online: https://www.asensus.com/documents/clinical-utility-senhance-ai-inguinal-hernia (accessed on 17 July 2023).

- Göbel, B.; Möller, K. Quantitative Evaluation of Camera-Based 3D Reconstruction in Laparoscopy: A Review. In Proceedings of the 12th IFAC Symposium on Biological and Medical Systems, Villingen-Schwenningen, Germany, 11–13 September 2024. forthcoming. [Google Scholar]

- Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S.; et al. Optical Techniques for 3D Surface Reconstruction in Computer-Assisted Laparoscopic Surgery. Med. Image Anal. 2013, 17, 974–996. [Google Scholar] [CrossRef] [PubMed]

- Marcinczak, J.M.; Painer, S.; Grigat, R.-R. Sparse Reconstruction of Liver Cirrhosis from Monocular Mini-Laparoscopic Sequences. In Proceedings of the Medical Imaging 2015: Image-Guided Procedures, Robotic Interventions, and Modeling, Orlando, FL, USA, 21–26 February 2015; SPIE: Bellingham, WA, USA, 2015; pp. 470–475. [Google Scholar]

- Kumar, A.; Wang, Y.-Y.; Liu, K.-C.; Hung, W.-C.; Huang, S.-W.; Lie, W.-N.; Huang, C.-C. Surface Reconstruction from Endoscopic Image Sequence. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics-Taiwan, Taipei, Taiwan, 6–8 June 2015; IEEE: New York City, NY, USA, 2015; pp. 404–405. [Google Scholar]

- Conen, N.; Luhmann, T.; Maas, H.-G. Development and Evaluation of a Miniature Trinocular Camera System for Surgical Measurement Applications. PFG–J. Photogramm. Remote Sens. Geoinf. Sci. 2017, 85, 127–138. [Google Scholar] [CrossRef]

- Su, Y.-H.; Huang, K.; Hannaford, B. Multicamera 3D Reconstruction of Dynamic Surgical Cavities: Camera Grouping and Pair Sequencing. In Proceedings of the 2019 International Symposium on Medical Robotics (ISMR), Atlanta, GA, USA, 3–5 April 2019; IEEE: New York City, NY, USA, 2019; pp. 1–7. [Google Scholar]

- Zhou, H.; Jayender, J. EMDQ-SLAM: Real-Time High-Resolution Reconstruction of Soft Tissue Surface from Stereo Laparoscopy Videos. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part IV 24. Springer Nature: Cham, Switzerland, 2021; Volume 12904, pp. 331–340. [Google Scholar]

- Lin, J.; Clancy, N.T.; Qi, J.; Hu, Y.; Tatla, T.; Stoyanov, D.; Maier-Hein, L.; Elson, D.S. Dual-Modality Endoscopic Probe for Tissue Surface Shape Reconstruction and Hyperspectral Imaging Enabled by Deep Neural Networks. Med. Image Anal. 2018, 48, 162–176. [Google Scholar] [CrossRef] [PubMed]

- Allan, M.; Mcleod, J.; Wang, C.; Rosenthal, J.C.; Hu, Z.; Gard, N.; Eisert, P.; Fu, K.X.; Zeffiro, T.; Xia, W.; et al. Stereo Correspondence and Reconstruction of Endoscopic Data Challenge 2021. arXiv 2021, arXiv:2101.01133. [Google Scholar]

- Wei, R.; Li, B.; Mo, H.; Lu, B.; Long, Y.; Yang, B.; Dou, Q.; Liu, Y.; Sun, D. Stereo Dense Scene Reconstruction and Accurate Localization for Learning-Based Navigation of Laparoscope in Minimally Invasive Surgery. IEEE Trans. Biomed. Eng. 2022, 70, 488–500. [Google Scholar] [CrossRef]

- Chong, N. 3D Reconstruction of Laparoscope Images with Contrastive Learning Methods. IEEE Access 2022, 10, 4456–4470. [Google Scholar] [CrossRef]

- Huang, B.; Zheng, J.-Q.; Nguyen, A.; Xu, C.; Gkouzionis, I.; Vyas, K.; Tuch, D.; Giannarou, S.; Elson, D.S. Self-Supervised Depth Estimation in Laparoscopic Image Using 3D Geometric Consistency. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Singapore, 18–22 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 13–22. [Google Scholar]

- Zhang, G.; Huang, Z.; Lin, J.; Li, Z.; Cao, E.; Pang, Y. A 3D Reconstruction Based on an Unsupervised Domain Adaptive for Binocular Endoscopy. Front. Physiol. 2022, 13, 1734. [Google Scholar] [CrossRef] [PubMed]

- Luo, H.; Wang, C.; Duan, X.; Liu, H.; Wang, P.; Hu, Q.; Jia, F. Unsupervised Learning of Depth Estimation from Imperfect Rectified Stereo Laparoscopic Images. Comput. Biol. Med. 2022, 140, 105109. [Google Scholar] [CrossRef] [PubMed]

- Huang, B.; Zheng, J.; Nguyen, A.; Tuch, D.; Vyas, K.; Giannarou, S.; Elson, D.S. Self-Supervised Generative Adversarial Network for Depth Estimation in Laparoscopic Images. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part IV 24. Springer Nature: Cham, Switzerland, 2021; pp. 227–237. [Google Scholar]

- Luo, H.; Yin, D.; Zhang, S.; Xiao, D.; He, B.; Meng, F.; Zhang, Y.; Cai, W.; He, S.; Zhang, W.; et al. Augmented Reality Navigation for Liver Resection with a Stereoscopic Laparoscope. Comput. Methods Programs Biomed. 2020, 187, 105099. [Google Scholar] [CrossRef] [PubMed]

- Luo, H.; Hu, Q.; Jia, F. Details Preserved Unsupervised Depth Estimation by Fusing Traditional Stereo Knowledge from Laparoscopic Images. Healthc. Technol. Lett. 2019, 6, 154–158. [Google Scholar] [CrossRef] [PubMed]

- Antal, B. Automatic 3D Point Set Reconstruction from Stereo Laparoscopic Images Using Deep Neural Networks. arXiv 2016, arXiv:1608.00203. [Google Scholar]

- Penne, J.; Höller, K.; Stürmer, M.; Schrauder, T.; Schneider, A.; Engelbrecht, R.; Feußner, H.; Schmauss, B.; Hornegger, J. Time-of-Flight 3-D Endoscopy. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, London, UK, 20–24 September 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 467–474. [Google Scholar]

- Sui, C.; Wang, Z.; Liu, Y. A 3D Laparoscopic Imaging System Based on Stereo-Photogrammetry with Random Patterns. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York City, NY, USA, 2018; pp. 1276–1282. [Google Scholar]

- Garbey, M.; Nguyen, T.B.; Huang, A.Y.; Fikfak, V.; Dunkin, B.J. A Method for Going from 2D Laparoscope to 3D Acquisition of Surface Landmarks by a Novel Computer Vision Approach. Int. J. CARS 2018, 13, 267–280. [Google Scholar] [CrossRef] [PubMed]

- Kwan, E.; Qin, Y.; Hua, H. Development of a Light Field Laparoscope for Depth Reconstruction. In Proceedings of the Imaging and Applied Optics 2017 (3D, AIO, COSI, IS, MATH, pcAOP), San Francisco, CA, USA, 26–29 June 2017; Optica Publishing Group: Washington, DC, USA, 2017; paper DW1F.2. [Google Scholar]

- Collins, T.; Bartoli, A. Towards Live Monocular 3D Laparoscopy Using Shading and Specularity Information. In Proceedings of the International Conference on Information Processing in Computer-Assisted Interventions, Pisa, Italy, 27 June 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 11–21. [Google Scholar]

- Devernay, F.; Mourgues, F.; Coste-Maniere, E. Towards Endoscopic Augmented Reality for Robotically Assisted Minimally Invasive Cardiac Surgery. In Proceedings of the International Workshop on Medical Imaging and Augmented Reality, Hong Kong, China, 10–12 June 2001; pp. 16–20. [Google Scholar]

- Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.; Bailey, T. Simultaneous Localisation and Mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110. [Google Scholar] [CrossRef]

- Mountney, P.; Stoyanov, D.; Davison, A.; Yang, G.-Z. Simultaneous Stereoscope Localization and Soft-Tissue Mapping for Minimal Invasive Surgery. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Copenhagen, Denmark, 1–6 October 2006; Springer: Berlin/Heidelberg, Germany, 2006; Volume 9, pp. 347–354. [Google Scholar]

- Robinson, A.; Alboul, L.; Rodrigues, M. Methods for Indexing Stripes in Uncoded Structured Light Scanning Systems. J. WSCG 2004, 12, 371–378. [Google Scholar]

- Bardozzo, F.; Collins, T.; Forgione, A.; Hostettler, A.; Tagliaferri, R. StaSiS-Net: A Stacked and Siamese Disparity Estimation Network for Depth Reconstruction in Modern 3D Laparoscopy. Med. Image Anal. 2022, 77, 102380. [Google Scholar] [CrossRef]

- Cao, Z.; Huang, W.; Liao, X.; Deng, X.; Wang, Q. Self-Supervised Dense Depth Prediction in Monocular Endoscope Video for 3D Liver Surface Reconstruction. J. Phys. Conf. Ser. 2021, 1883, 012050. [Google Scholar] [CrossRef]

- Maekawa, R.; Shishido, H.; Kameda, Y.; Kitahara, I. Dense 3D Organ Modeling from a Laparoscopic Video. In Proceedings of the International Forum on Medical Imaging in Asia, Taipei, Taiwan, 24–27 January 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11792, pp. 115–120. [Google Scholar]

- Su, Y.-H.; Huang, K.; Hannaford, B. Multicamera 3D Viewpoint Adjustment for Robotic Surgery via Deep Reinforcement Learning. J. Med. Robot. Res. 2021, 6, 2140003. [Google Scholar] [CrossRef]

- Xu, K.; Chen, Z.; Jia, F. Unsupervised Binocular Depth Prediction Network for Laparoscopic Surgery. Comput. Assist. Surg. 2019, 24 (Suppl. S1), 30–35. [Google Scholar] [CrossRef]

- Lin, B.; Goldgof, D.; Gitlin, R.; You, Y.; Sun, Y.; Qian, X. Video Based 3D Reconstruction, Laparoscope Localization, and Deformation Recovery for Abdominal Minimally Invasive Surgery: A Survey. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 158–178. [Google Scholar] [CrossRef] [PubMed]

- Bergen, T.; Wittenberg, T. Stitching and Surface Reconstruction from Endoscopic Image Sequences: A Review of Applications and Methods. IEEE J. Biomed. Health Inform. 2014, 20, 304–321. [Google Scholar] [CrossRef]

- Maier-Hein, L.; Groch, A.; Bartoli, A.; Bodenstedt, S.; Boissonnat, G.; Haase, S.; Heim, E.; Hornegger, J.; Jannin, P.; Kenngott, H.; et al. Comparative Validation of Single-Shot Optical Techniques for Laparoscopic 3D Surface Reconstruction. IEEE Trans. Med. Imaging 2014, 33, 1913–1930. [Google Scholar] [CrossRef] [PubMed]

- Schneider, C.; Allam, M.; Stoyanov, D.; Hawkes, D.J.; Gurusamy, K.; Davidson, B.R. Performance of Image Guided Navigation in Laparoscopic Liver Surgery—A Systematic Review. Surg. Oncol. 2021, 38, 101637. [Google Scholar] [CrossRef] [PubMed]

- Groch, A.; Seitel, A.; Hempel, S.; Speidel, S.; Engelbrecht, R.; Penne, J.; Holler, K.; Rohl, S.; Yung, K.; Bodenstedt, S.; et al. 3D Surface Reconstruction for Laparoscopic Computer-Assisted Interventions: Comparison of State-of-the-Art Methods. In Proceedings of the Medical Imaging 2011: Visualization, Image-Guided Procedures, and Modeling, Lake Buena Vista, FL, USA, 13–15 February 2011; SPIE: Bellingham, WA, USA, 2011; Volume 7964, pp. 351–359. [Google Scholar]

- Conen, N.; Luhmann, T. Overview of Photogrammetric Measurement Techniques in Minimally Invasive Surgery Using Endoscopes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 33–40. [Google Scholar] [CrossRef]

- Parchami, M.; Cadeddu, J.; Mariottini, G.-L. Endoscopic Stereo Reconstruction: A Comparative Study. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; IEEE: New York City, NY, USA, 2014; pp. 2440–2443. [Google Scholar]

- Cheema, M.N.; Nazir, A.; Sheng, B.; Li, P.; Qin, J.; Kim, J.; Feng, D.D. Image-Aligned Dynamic Liver Reconstruction Using Intra-Operative Field of Views for Minimal Invasive Surgery. IEEE Trans. Biomed. Eng. 2019, 66, 2163–2173. [Google Scholar] [CrossRef]

- Puig, L.; Daniilidis, K. Monocular 3D Tracking of Deformable Surfaces. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: New York City, NY, USA, 2016; pp. 580–586. [Google Scholar]

- Bourdel, N.; Collins, T.; Pizarro, D.; Debize, C.; Grémeau, A.; Bartoli, A.; Canis, M. Use of Augmented Reality in Laparoscopic Gynecology to Visualize Myomas. Fertil. Steril. 2017, 107, 737–739. [Google Scholar] [CrossRef]

- Francois, T.; Debize, C.; Calvet, L.; Collins, T.; Pizarro, D.; Bartoli, A. Uteraug: Augmented Reality in Laparoscopic Surgery of the Uterus. Demonstration presented at the ISMAR Conference, Nantes, France, 9–13 October 2017. [Google Scholar]

- Modrzejewski, R.; Collins, T.; Bartoli, A.; Hostettler, A.; Soler, L.; Marescaux, J. Automatic Verification of Laparoscopic 3D Reconstructions with Stereo Cross-Validation. In Proceedings of Surgetica, Strasbourg, France, 20–22 November 2017. [Google Scholar]

- Wang, R.; Price, T.; Zhao, Q.; Frahm, J.M.; Rosenman, J.; Pizer, S. Improving 3D Surface Reconstruction from Endoscopic Video via Fusion and Refined Reflectance Modeling. In Proceedings of the Medical Imaging 2017: Image Processing, Orlando, FL, USA, 12–14 February 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10133, pp. 80–86. [Google Scholar]

- Oh, J.; Kim, K. Accurate 3D Reconstruction for Less Overlapped Laparoscopic Sequential Images. In Proceedings of the 2017 2nd International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, France, 30 August–1 September 2017; IEEE: New York City, NY, USA, 2017; pp. 1–4. [Google Scholar]

- Su, Y.-H.; Huang, I.; Huang, K.; Hannaford, B. Comparison of 3D Surgical Tool Segmentation Procedures with Robot Kinematics Prior. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York City, NY, USA, 2018; pp. 4411–4418. [Google Scholar]

- Modrzejewski, R.; Collins, T.; Hostettler, A.; Marescaux, J.; Bartoli, A. Light Modelling and Calibration in Laparoscopy. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 859–866. [Google Scholar] [CrossRef] [PubMed]

- Allan, M.; Kapoor, A.; Mewes, P.; Mountney, P. Non Rigid Registration of 3D Images to Laparoscopic Video for Image Guided Surgery. In Proceedings of the Computer-Assisted and Robotic Endoscopy: Second International Workshop, CARE 2015, Held in Conjunction with MICCAI 2015, Munich, Germany, 5 October 2015; Revised Selected Papers 2. Springer International Publishing: Cham, Switzerland, 2016; Volume 9515, pp. 109–116. [Google Scholar]

- Lin, B. Visual SLAM and Surface Reconstruction for Abdominal Minimally Invasive Surgery; University of South Florida: Tampa, FL, USA, 2015. [Google Scholar]

- Reichard, D.; Bodenstedt, S.; Suwelack, S.; Mayer, B.; Preukschas, A.; Wagner, M.; Kenngott, H.; Müller-Stich, B.; Dillmann, R.; Speidel, S. Intraoperative On-the-Fly Organ-Mosaicking for Laparoscopic Surgery. J. Med. Imaging 2015, 2, 045001. [Google Scholar] [CrossRef] [PubMed]

- Penza, V.; Ortiz, J.; Mattos, L.S.; Forgione, A.; De Momi, E. Dense Soft Tissue 3D Reconstruction Refined with Super-Pixel Segmentation for Robotic Abdominal Surgery. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 197–206. [Google Scholar] [CrossRef] [PubMed]

- Kim, D.T.; Cheng, C.-H.; Liu, D.-G.; Liu, J.; Huang, W.S.W. Designing a New Endoscope for Panoramic-View with Focus-Area 3D-Vision in Minimally Invasive Surgery. J. Med. Biol. Eng. 2020, 40, 204–219. [Google Scholar] [CrossRef]

- Teatini, A.; Brunet, J.-N.; Nikolaev, S.; Edwin, B.; Cotin, S.; Elle, O.J. Use of Stereo-Laparoscopic Liver Surface Reconstruction to Compensate for Pneumoperitoneum Deformation through Biomechanical Modeling. In Proceedings of the VPH2020-Virtual Physiological Human, Paris, France, 26–28 August 2020. [Google Scholar]

- Shibata, M.; Hayashi, Y.; Oda, M.; Misawa, K.; Mori, K. Quantitative Evaluation of Organ Surface Reconstruction from Stereo Laparoscopic Images. IEICE Tech. Rep. 2018, 117, 117–122. [Google Scholar]

- Zhang, L.; Ye, M.; Giataganas, P.; Hughes, M.; Yang, G.-Z. Autonomous Scanning for Endomicroscopic Mosaicing and 3D Fusion. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: New York City, NY, USA, 2017; pp. 3587–3593. [Google Scholar]

- Luo, X.; McLeod, A.J.; Jayarathne, U.L.; Pautler, S.E.; Schlachta, C.M.; Peters, T.M. Towards Disparity Joint Upsampling for Robust Stereoscopic Endoscopic Scene Reconstruction in Robotic Prostatectomy. In Proceedings of the Medical Imaging 2016: Image-Guided Procedures, Robotic Interventions, and Modeling, San Diego, CA, USA, 28 February–1 March 2016; SPIE: Bellingham, WA, USA, 2016; Volume 9786, pp. 232–241. [Google Scholar]

- Thompson, S.; Totz, J.; Song, Y.; Johnsen, S.; Stoyanov, D.; Gurusamy, K.; Schneider, C.; Davidson, B.; Hawkes, D.; Clarkson, M.J. Accuracy Validation of an Image Guided Laparoscopy System for Liver Resection. In Proceedings of the Medical Imaging 2015: Image-Guided Procedures, Robotic Interventions, and Modeling, Orlando, FL, USA, 21–26 February 2015; SPIE: Bellingham, WA, USA, 2015; Volume 941509, pp. 52–63. [Google Scholar]

- Wittenberg, T.; Eigl, B.; Bergen, T.; Nowack, S.; Lemke, N.; Erpenbeck, D. Panorama-Endoscopy of the Abdomen: From 2D to 3D. In Proceedings of the Computer und Roboterassistierte Chirurgie (CURAC 2017), Hannover, Germany, 5–7 October 2017. Tech. Rep. [Google Scholar]

- Reichard, D.; Häntsch, D.; Bodenstedt, S.; Suwelack, S.; Wagner, M.; Kenngott, H.G.; Müller, B.P.; Maier-Hein, L.; Dillmann, R.; Speidel, S. Projective Biomechanical Depth Matching for Soft Tissue Registration in Laparoscopic Surgery. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1101–1110. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.; Cheikh, F.A.; Kaaniche, M.; Elle, O.J. Liver Surface Reconstruction for Image Guided Surgery. In Proceedings of the Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling, Houston, TX, USA, 12–15 February 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10576, pp. 576–583. [Google Scholar]

- Speers, A.D.; Ma, B.; Jarnagin, W.R.; Himidan, S.; Simpson, A.L.; Wildes, R.P. Fast and Accurate Vision-Based Stereo Reconstruction and Motion Estimation for Image-Guided Liver Surgery. Healthc. Technol. Lett. 2018, 5, 208–214. [Google Scholar] [CrossRef] [PubMed]

- Kolagunda, A.; Sorensen, S.; Mehralivand, S.; Saponaro, P.; Treible, W.; Turkbey, B.; Pinto, P.; Choyke, P.; Kambhamettu, C. A Mixed Reality Guidance System for Robot Assisted Laparoscopic Radical Prostatectomy. In Proceedings of the OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis, Granada, Spain, 16–20 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 164–174. [Google Scholar]

- Zhou, H.; Jagadeesan, J. Real-Time Dense Reconstruction of Tissue Surface from Stereo Optical Video. IEEE Trans. Med. Imaging 2020, 39, 400–412. [Google Scholar] [CrossRef] [PubMed]

- Xia, W.; Chen, E.C.S.; Pautler, S.; Peters, T.M. A Robust Edge-Preserving Stereo Matching Method for Laparoscopic Images. IEEE Trans. Med. Imaging 2022, 41, 1651–1664. [Google Scholar] [CrossRef]

- Chen, L. On-the-Fly Dense 3D Surface Reconstruction for Geometry-Aware Augmented Reality. Ph.D. Thesis, Bournemouth University, Poole, UK, 2019. [Google Scholar]

- Zhang, X.; Ji, X.; Wang, J.; Fan, Y.; Tao, C. Renal Surface Reconstruction and Segmentation for Image-Guided Surgical Navigation of Laparoscopic Partial Nephrectomy. Biomed. Eng. Lett. 2023, 13, 165–174. [Google Scholar] [CrossRef]

- Su, Y.-H.; Lindgren, K.; Huang, K.; Hannaford, B. A Comparison of Surgical Cavity 3D Reconstruction Methods. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; IEEE: New York City, NY, USA, 2020; pp. 329–336. [Google Scholar]

- Chen, L.; Tang, W.; John, N.W.; Wan, T.R.; Zhang, J.J. Augmented Reality for Depth Cues in Monocular Minimally Invasive Surgery. arXiv 2017, arXiv:1703.01243. [Google Scholar]

- Mahmoud, N.; Hostettler, A.; Collins, T.; Soler, L.; Doignon, C.; Montiel, J.M.M. SLAM Based Quasi Dense Reconstruction for Minimally Invasive Surgery Scenes. arXiv 2017, arXiv:1705.09107. [Google Scholar]

- Wei, G.; Feng, G.; Li, H.; Chen, T.; Shi, W.; Jiang, Z. A Novel SLAM Method for Laparoscopic Scene Reconstruction with Feature Patch Tracking. In Proceedings of the 2020 International Conference on Virtual Reality and Visualization (ICVRV), Recife, Brazil, 13–14 November 2020; IEEE: New York City, NY, USA, 2020; pp. 287–291. [Google Scholar]

- Mahmoud, N.; Collins, T.; Hostettler, A.; Soler, L.; Doignon, C.; Montiel, J.M.M. Live Tracking and Dense Reconstruction for Handheld Monocular Endoscopy. IEEE Trans. Med. Imaging 2019, 38, 79–89. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.; Liao, X.; Sun, Y.; Wang, Q. Improved ORB-SLAM Based 3D Dense Reconstruction for Monocular Endoscopic Image. In Proceedings of the 2020 International Conference on Virtual Reality and Visualization (ICVRV), Recife, Brazil, 13–14 November 2020; IEEE: New York City, NY, USA, 2020; pp. 101–106. [Google Scholar]

- Yu, X.; Zhao, J.; Wu, H.; Wang, A. A Novel Evaluation Method for SLAM-Based 3D Reconstruction of Lumen Panoramas. Sensors 2023, 23, 7188. [Google Scholar] [CrossRef] [PubMed]

- Wei, G.; Shi, W.; Feng, G.; Ao, Y.; Miao, Y.; He, W.; Chen, T.; Wang, Y.; Ji, B.; Jiang, Z. An Automatic and Robust Visual SLAM Method for Intra-Abdominal Environment Reconstruction. J. Adv. Comput. Intell. Intell. Inform. 2023, 27, 1216–1229. [Google Scholar] [CrossRef]

- Lin, J.; Clancy, N.T.; Hu, Y.; Qi, J.; Tatla, T.; Stoyanov, D.; Maier-Hein, L.; Elson, D.S. Endoscopic Depth Measurement and Super-Spectral-Resolution Imaging. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, 11–13 September 2017. [Google Scholar]

- Le, H.N.D.; Opfermann, J.D.; Kam, M.; Raghunathan, S.; Saeidi, H.; Leonard, S.; Kang, J.U.; Krieger, A. Semi-Autonomous Laparoscopic Robotic Electro-Surgery with a Novel 3D Endoscope. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; IEEE: New York City, NY, USA, 2018; pp. 6637–6644. [Google Scholar]

- Edgcumbe, P.; Pratt, P.; Yang, G.-Z.; Nguan, C.; Rohling, R. Pico Lantern: Surface Reconstruction and Augmented Reality in Laparoscopic Surgery Using a Pick-up Laser Projector. Med. Image Anal. 2015, 25, 95–102. [Google Scholar] [CrossRef] [PubMed]

- Geurten, J.; Xia, W.; Jayarathne, U.L.; Peters, T.M.; Chen, E.C.S. Endoscopic Laser Surface Scanner for Minimally Invasive Abdominal Surgeries. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018; Proceedings, Part IV 11. Springer International Publishing: Cham, Switzerland, 2018; pp. 143–150. [Google Scholar]

- Fusaglia, M.; Hess, H.; Schwalbe, M.; Peterhans, M.; Tinguely, P.; Weber, S.; Lu, H. A Clinically Applicable Laser-Based Image-Guided System for Laparoscopic Liver Procedures. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1499–1513. [Google Scholar] [CrossRef] [PubMed]

- Sui, C.; He, K.; Lyu, C.; Wang, Z.; Liu, Y.-H. 3D Surface Reconstruction Using a Two-Step Stereo Matching Method Assisted with Five Projected Patterns. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: New York City, NY, USA, 2019; pp. 6080–6086. [Google Scholar]

- Clancy, N.T.; Lin, J.; Arya, S.; Hanna, G.B.; Elson, D.S. Dual Multispectral and 3D Structured Light Laparoscope. In Proceedings of the Multimodal Biomedical Imaging X, San Francisco, CA, USA, 7 February 2015; SPIE: Bellingham, WA, USA, 2015; Volume 9316, pp. 60–64. [Google Scholar]

- Sugawara, M.; Kiyomitsu, K.; Namae, T.; Nakaguchi, T.; Tsumura, N. An Optical Projection System with Mirrors for Laparoscopy. Artif. Life Robot. 2017, 22, 51–57. [Google Scholar] [CrossRef]

- Sui, C.; Wu, J.; Wang, Z.; Ma, G.; Liu, Y.-H. A Real-Time 3D Laparoscopic Imaging System: Design, Method, and Validation. IEEE Trans. Biomed. Eng. 2020, 67, 2683–2695. [Google Scholar] [CrossRef]

- Mao, F.; Huang, T.; Ma, L.; Zhang, X.; Liao, H. A Monocular Variable Magnifications 3D Laparoscope System Using Double Liquid Lenses. IEEE J. Transl. Eng. Health Med. 2023, 12, 32–42. [Google Scholar] [CrossRef]

- Barbed, O.L.; Montiel, J.M.M.; Fua, P.; Murillo, A.C. Tracking Adaptation to Improve SuperPoint for 3D Reconstruction in Endoscopy. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 583–593. [Google Scholar]

- Cruciani, L.; Chen, Z.; Fontana, M.; Musi, G.; De Cobelli, O.; De Momi, E. 3D Reconstruction and Segmentation in Laparoscopic Robotic Surgery. In Proceedings of the 5th Italian Conference on Robotics and Intelligent Machines (IRIM), Rome, Italy, 20–22 October 2023. [Google Scholar]

- Nguyen, K.T.; Tozzi, F.; Rashidian, N.; Willaert, W.; Vankerschaver, J.; De Neve, W. Towards Abdominal 3-D Scene Rendering from Laparoscopy Surgical Videos Using NeRFs. In International Workshop on Machine Learning in Medical Imaging; Springer Nature: Cham, Switzerland, 2023; pp. 83–93. [Google Scholar]

- Palmatier, R.W.; Houston, M.B.; Hulland, J. Review Articles: Purpose, Process, and Structure. J. Acad. Mark. Sci. 2018, 46, 1–5. [Google Scholar] [CrossRef]

- Carnwell, R.; Daly, W. Strategies for the Construction of a Critical Review of the Literature. Nurse Educ. Pract. 2001, 1, 57–63. [Google Scholar] [CrossRef] [PubMed]

- Imperial College London. Hamlyn Centre Laparoscopic/Endoscopic Video Datasets. Available online: http://hamlyn.doc.ic.ac.uk/vision/ (accessed on 30 January 2024).

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Lin, Z.; Lei, C.; Yang, L. Modern Image-Guided Surgery: A Narrative Review of Medical Image Processing and Visualization. Sensors 2023, 23, 9872. [Google Scholar] [CrossRef] [PubMed]

- Duval, C.; Jones, J. Assessment of the Amplitude of Oscillations Associated with High-Frequency Components of Physiological Tremor: Impact of Loading and Signal Differentiation. Exp. Brain Res. 2005, 163, 261–266. [Google Scholar] [CrossRef]

| Image-Based 3D Reconstruction Technique | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| SfM [9,47,48,49,50,51,52,53,54,55] | 1 | 1 | 5 | 1 | 1 | 1 | 10 | |||
| SfS [10] | 1 | 1 | ||||||||
| Stereo vision [4,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75] | 4 | 2 | 3 | 6 | 3 | 1 | 1 | 20 | ||
| SLAM [13,54,73,76,77,78,79,80,81,82] | 2 | 2 | 1 | 2 | 1 | 2 | 10 | |||
| SL [5,26,83,84,85,86,87,88,89,90,91] | 2 | 1 | 2 | 3 | 1 | 1 | 1 | 11 | ||
| DL [14,15,16,17,18,19,20,21,22,23,24,35,36,37,38,39,92,93,94,95] | 1 | 1 | 2 | 1 | 5 | 6 | 4 | 20 | ||
| Smart Trocar [27] | 1 | 1 | ||||||||
| Trinocular [11] | 1 | 1 | ||||||||
| Light Field Technology [28] | 1 | 1 | ||||||||
| [12] | 1 | 1 | ||||||||
| ToF | 0 | |||||||||
| Total | 8 | 5 | 15 | 13 | 6 | 8 | 6 | 8 | 7 | 76 |

| Metrics | Definition | Target Value |
|---|---|---|
| MAE | | 0.0 mm |
| MRE | | 0.0 mm |
| STD | | 0.0 mm |
| RMSE | | 0.0 mm |
| RMSE log | | 0.0 mm |
| SqRel | | 0.0 mm |
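For orientation, the six metrics are commonly defined in the depth-estimation literature as sketched below. The notation is an assumption made here, not taken from the review: $d_i$ is the reconstructed value (depth or point coordinate) at sample $i$, $d_i^{*}$ is the corresponding ground-truth value, and $N$ is the number of compared samples. Individual studies may deviate in detail, for example in the logarithm base or in using $N-1$ instead of $N$ for STD.

```latex
\begin{align}
\mathrm{MAE} &= \frac{1}{N}\sum_{i=1}^{N} \left| d_i - d_i^{*} \right| \\
\mathrm{MRE} &= \frac{1}{N}\sum_{i=1}^{N} \frac{\left| d_i - d_i^{*} \right|}{d_i^{*}} \\
\mathrm{STD} &= \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( \left| d_i - d_i^{*} \right| - \mathrm{MAE} \right)^{2}} \\
\mathrm{RMSE} &= \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( d_i - d_i^{*} \right)^{2}} \\
\mathrm{RMSE}_{\log} &= \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( \log d_i - \log d_i^{*} \right)^{2}} \\
\mathrm{SqRel} &= \frac{1}{N}\sum_{i=1}^{N} \frac{\left( d_i - d_i^{*} \right)^{2}}{d_i^{*}}
\end{align}
```

All six are error measures, so a perfect reconstruction ($d_i = d_i^{*}$ for every $i$) yields the target value of zero in each case.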

| 3D Reconstruction Technique | Reference Object | Ground Truth | MAE ± STD [mm] | RMSE ± STD [mm] | Author |
|---|---|---|---|---|---|
| Structure-from-Motion (SfM) | 15 seq. of 3 cirrhotic liver phantoms | Optical tracking system | 0.3–1.0 | - | [9] |
| | Ex vivo bovine liver | PhotoScan (SL projecting system + mechanical arm for laparoscope guidance) | 0.15 ± 0.05 | - | [55] |
| | (1) Paper (2) T-shirt (3) Stereo laparoscopic video | RGB-D sensor | 2.5–3.5 | - | [48] |
| | Dataset of moving camera of static surgical scene w/known camera pose | Space Spider (white light 3D scanner) | - | 6.628 | [54] |
| Shape-from-Shading (SfS) | Laparoscopic (mono) surgery video data | Two consecutive frames after ICP | - | 1.0–4.0 | [10] |
| Stereo vision | Liver phantom model | Intraoperative CT data | 4.4 ± 0.8 | - | [4] |
| | Publicly available phantom dataset | CT data | - | 5.45 Pixel | [56] |
| | Ex vivo porcine liver and Hamlyn centre dataset | Included in dataset | 1.14; 1.77–3.7 | - | [57] |
| | Ex vivo porcine liver | CT data | - | 4.21 ± 0.63 | [58] |
| | Hamlyn centre dataset | Included in dataset (made by Library for efficient large-scale Stereo Matching (LIBELAS)) | 1.75 | - | [59] |
| | Phantom model | Eight points on phantom for distance measurement | 1.0 | - | [60] |
| | In vivo liver (with and without pneumoperitoneum, but with a tube disconnected to stop breathing) | CT data (intraoperative) | - | 9.35 ± 2.94 | [61] |
| | Phantom surgical cavity | Space Spider 3D Scanner | 11.2547; 13.9759 | | [75] |
| | Phantom model | 3D scanner | 1.4 ± 1.07 | - | [62] |
| | Ex vivo tissue | External tracking system | 0.89 ± 0.7 | 1.31 ± 0.98 | [63] |
| | Phantom heart | CT data | 2.16 ± 0.65 | - | [68] |
| | In vitro porcine heart images | CT data | 0.23 ± 0.33 | - | [74] |
| | Liver phantom | CT data | 1.65 ± 1.41 | - | [69] |
| | Simulated MIS scene | Known scene dimensions | - | 2.37 | [73] |
| Simultaneous Localization and Mapping (SLAM) | Dataset of moving camera of static surgical scene w/unknown camera pose | Space Spider (white light 3D scanner) | - | 10.78 | [54] |
| | Ex vivo porcine livers | Electromagnetic trackers | 0.8–2.2 ± 0.4–0.7 | 1.1–1.3 * ± 0.6–0.7 * | [13] |
| | Simulated MIS scene | Known scene dimensions | - | 4.32 | [76] |
| | Porcine in vivo data | CT data | - | 2.8 | [77] |
| | Synthetic abdominal cavity box (silicone) | Known scene dimensions | - | 1.94; 2.13; | [81] |
| | Hamlyn centre dataset | Included in dataset | - | 1.3; 2.3; 5.2 | [82] |
| | Simulated MIS scene | Known scene dimensions | - | 2.54 | [73] |
| | In vivo porcine abdominal cavity | CT data | - | 1.1 | [79] |
| Structured Light (SL) | Cylinder surface w/diameter of 22.5 cm | Known object dimensions | - | 0.07 | [26] |
| | Porcine cadaver kidney | Measurement of cut in kidney | 2.44 ± 0.34 | - | [84] |
| | Ex vivo kidney | Certus optical tracker stylus | 5.6 ± 4.9; 1.5 ± 0.6 | - | [85] |
| | Ex vivo porcine liver and kidney | CT data | - | 1.28 | [86] |
| | Patient-specific phantoms built by rapid prototyping | Known object dimensions | 1.0 ± 0.4 | - | [87] |
| | (1) Plate (2) Cylinder | Known object dimensions | - | 0.0078 | [88] |
| Deep Learning (DL) | KITTI dataset | Included in dataset | - | 5.953 | [73] |
| | Silicone heart phantom | MCAx25 handheld scanner | 0.68 ± 0.13 | - | [14] |
| | SCARED dataset test set 1, test set 2 | Included in dataset (SL + da Vinci Xi kinematics) | 3.44; 3.47 | 7.01 * (Rosenthal) | [15] |
| | SCARED dataset test set 1, test set 2 | Included in dataset | - | 1.0 | [16] |
| | Hamlyn centre dataset and additional monocular laparoscopic image sequences | Included in dataset and CT data | 0.26 | 1.98 | [17] |
| | SCARED dataset test set 1, test set 2 | Included in dataset | - | 9.27 | [18] |
| | Hamlyn centre dataset | Included in dataset | 1.45 ± 0.4 | 1.62 ± 0.42 | [19] |
| | SCARED dataset test set 1, test set 2 | Included in dataset | 3.05 | 3.961 * ± 1.237 * | [20] |
| | SCARED dataset test set 1, test set 2 | Included in dataset | 11.23; 17.42 | - | [21] |
| | Real colonoscopy videos | Points being reprojected onto images | 1.75 ± 0.07 | - | [93] |

| In vivo porcine hearts | Pre-operative CT data | 1.41 ± 0.42 1.43 ± 0.47 | 1.77 ± 0.51 2.27 ± 0.39 | [22] | |

| Hamlyn centre dataset and three points on heart phantom model | Artec Eva scanner | 4.047 0.78 ± 0.22 | - | [92] | |

| SCARED dataset test set 1, test set 2 | Included in dataset | 2.64 ± 1.64 Pixel | 5.47 ± 1.46 Pixel | [94] | |

| Heart phantom model and Da Vinci dataset | Rigid confidence measurement | 1.49 ± 0.41 1.84 ± 0.4 8.3 ± 3.1 | 1.9 ± 0.38 2.69 ± 0.58 10.5 ± 3.7 | [23] | |

| Laparoscopic cardiac dataset | Included in dataset | - | 13.18 | [24] | |

| Smart Trocar® | 3D-printed plastic sphere model. Surface is divided into 1 cm2 squares | Known object dimensions | 2.0 | - | [27] |

| Trinocular | Cartilage of pig knee joint | Vialux zSnapper (3D fringe projection system) | ±1.1 | - | [11] |

| Multicamera (10 cameras) | Phantom surgical scene | Space Spider | - | 1.29 | [12] |
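
The two numeric columns above summarize each study's reconstruction error. As a minimal sketch, assuming these columns are the mean absolute error (MAE) and root mean square error (RMSE) of point-to-point distances between a reconstruction and its ground truth, the two values could be computed as follows. The function name `reconstruction_errors`, the sample points, and the assumption that both point sets are already registered with known one-to-one correspondences are illustrative only and are not taken from any of the cited studies.

```python
import math


def reconstruction_errors(reconstructed, ground_truth):
    """Return (MAE, RMSE) of point-to-point Euclidean distances.

    Both inputs are sequences of 3D points in the same units (e.g., mm).
    Assumption: point i of `reconstructed` corresponds to point i of
    `ground_truth`, i.e., registration and matching were done beforehand.
    """
    if len(reconstructed) != len(ground_truth) or not reconstructed:
        raise ValueError("point sets must be non-empty and of equal length")

    # Euclidean distance between each corresponding pair of points
    distances = [math.dist(p, q) for p, q in zip(reconstructed, ground_truth)]

    mae = sum(distances) / len(distances)
    rmse = math.sqrt(sum(d * d for d in distances) / len(distances))
    return mae, rmse


# Hypothetical example data in mm (not from the reviewed publications)
reconstructed = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (3.0, 4.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.0)] * 4

mae, rmse = reconstruction_errors(reconstructed, ground_truth)
print(f"MAE = {mae:.2f} mm, RMSE = {rmse:.2f} mm")
```

Because RMSE squares each distance before averaging, a single large outlier raises it more than it raises MAE, which is one reason the two columns are not directly interchangeable when comparing rows. Entries reported in pixels would additionally need a different distance definition than the 3D distance assumed here.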

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Göbel, B.; Reiterer, A.; Möller, K. Image-Based 3D Reconstruction in Laparoscopy: A Review Focusing on the Quantitative Evaluation by Applying the Reconstruction Error. J. Imaging 2024, 10, 180. https://doi.org/10.3390/jimaging10080180
