Convolutional Neural Network–Machine Learning Model: Hybrid Model for Meningioma Tumour and Healthy Brain Classification

Abstract

1. Introduction

- Selection of an adequate brain MRI dataset containing meningioma tumours and healthy brains.

- Development of an original CNN.

- Selection of two pre-trained models (i.e., EfficientNetV2B0 and DenseNet169) based on their top-5 accuracy on the ImageNet dataset.

- Features extracted by the three CNN models were used to train random forest (RF), k-nearest neighbours (KNN), and support vector machine (SVM) classifiers.

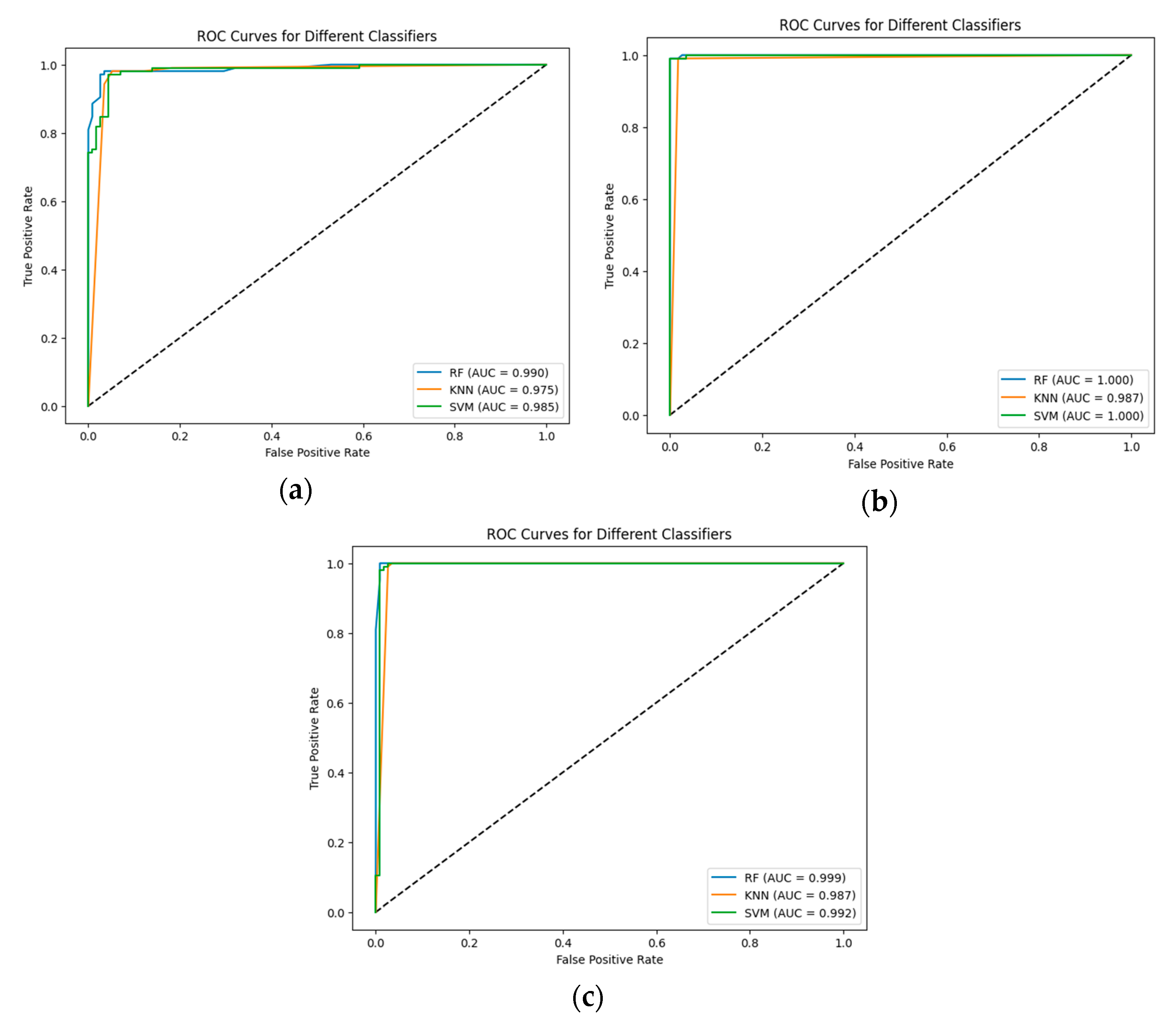

- Classification accuracy and ROC-AUC were measured for the base CNN and for the transfer learning models.

- A bagging ensemble with 5-fold cross-validation was used to reduce overfitting and to aggregate the classifiers' results.

- Finally, the hybrid model that best differentiates between the studied classes was identified.
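The bagging and 5-fold cross-validation steps listed above can be illustrated with a small plain-Python sketch. It is not the authors' implementation: a brute-force KNN (k = 4, mirroring the KNN setting reported later) stands in for the base learner, the number of bootstrap estimators (10) and all function names are hypothetical, and the toy two-cluster data merely stand in for CNN feature vectors. In practice a library such as scikit-learn (`BaggingClassifier`, `KFold`) would normally be used.

```python
import random
from collections import Counter


def k_fold_indices(n_samples, k=5, seed=0):
    """Shuffle the sample indices and deal them into k nearly equal folds."""
    rng = random.Random(seed)
    indices = list(range(n_samples))
    rng.shuffle(indices)
    return [indices[i::k] for i in range(k)]


def knn_predict(train_X, train_y, x, k=4):
    """Brute-force k-nearest-neighbour majority vote (squared Euclidean distance)."""
    neighbours = sorted(
        (sum((a - b) ** 2 for a, b in zip(row, x)), label)
        for row, label in zip(train_X, train_y)
    )[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def bagging_predict(X, y, x, n_estimators=10, k=4, seed=0):
    """Fit n_estimators KNN models on bootstrap resamples and majority-vote them."""
    rng = random.Random(seed)
    n = len(X)
    votes = Counter()
    for _ in range(n_estimators):
        boot = [rng.randrange(n) for _ in range(n)]  # draw n indices with replacement
        votes[knn_predict([X[i] for i in boot], [y[i] for i in boot], x, k)] += 1
    return votes.most_common(1)[0][0]


def cross_validated_accuracy(X, y, k_folds=5, **bag_kwargs):
    """Mean held-out accuracy of the bagged classifier over k_folds folds."""
    scores = []
    for fold in k_fold_indices(len(X), k_folds):
        held_out = set(fold)
        train = [i for i in range(len(X)) if i not in held_out]
        train_X = [X[i] for i in train]
        train_y = [y[i] for i in train]
        correct = sum(
            bagging_predict(train_X, train_y, X[i], **bag_kwargs) == y[i] for i in fold
        )
        scores.append(correct / len(fold))
    return sum(scores) / len(scores)


# Toy "deep features": two well-separated clusters standing in for CNN embeddings.
X = [[0.1 * i, 0.0] for i in range(10)] + [[5.0 + 0.1 * i, 5.0] for i in range(10)]
y = [0] * 10 + [1] * 10
print(cross_validated_accuracy(X, y))
```

Each fold is held out once while the bagged ensemble is trained on the remaining four folds, so every sample is scored exactly once by a model that never saw it.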

2. Related Work

3. Proposed Method

3.1. Brain MRI Kaggle Dataset

3.2. Data Augmentation and Pre-Processing

3.3. State-of-the-Art CNN and ML Models

3.4. Training of CNNs and ML Models

3.5. Deep Feature Extraction and Classification

3.6. Feature Evaluation

4. Results and Discussions

5. Comparison with the State-of-the-Art Models

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Rehman, A.; Khan, M.A.; Saba, T.; Mehmood, Z.; Tariq, U.; Ayesha, N. Microscopic brain tumour detection and classification using 3D CNN and feature selection architecture. Microsc. Res. Tech. 2021, 84, 133–149.

2. Sharif, M.I.; Li, J.P.; Khan, M.A.; Saleem, M.A. Active deep neural network features selection for segmentation and recognition of brain tumours using MRI images. Pattern Recogn. Lett. 2020, 129, 181–189.

3. Mehrotra, R.; Ansari, M.A.; Agrawal, R.; Anand, R.S. A Transfer Learning approach for AI-based classification of brain tumours. Mach. Learn. Appl. 2020, 2, 10–19.

4. Mahmud, M.I.; Mamun, M.; Abdelgawad, A. A Deep Analysis of Brain Tumour Detection from MR Images Using Deep Learning Networks. Algorithms 2023, 16, 176.

5. Ahmmed, S.; Podder, P.; Mondal, M.R.H.; Rahman, S.M.A.; Kannan, S.; Hasan, M.J.; Rohan, A.; Prosvirin, A.E. Enhancing Brain Tumour Classification with Transfer Learning across Multiple Classes: An In-Depth Analysis. BioMedInformatics 2023, 3, 1124–1144.

6. Albalawi, E.; Thakur, A.; Dorai, D.R.; Bhatia Khan, S.; Mahesh, T.R.; Almusharraf, A.; Aurangzeb, K. Enhancing brain tumor classification in MRI scans with a multi-layer customized convolutional neural network approach. Front. Comput. Neurosci. 2024, 18, 1418546.

7. Abdusalomov, A.B.; Mukhiddinov, M.; Whangbo, T.K. Brain tumour detection based on deep learning approaches and magnetic resonance imaging. Cancers 2023, 15, 4172.

8. Saeedi, S.; Rezayi, S.; Keshavarz, H.; Niakan-Kalhori, S.R. MRI-based brain tumour detection using convolutional deep learning methods and chosen machine learning techniques. BMC Med. Inform. Decis. Mak. 2023, 23, 16.

9. Celik, M.; Inik, O. Development of hybrid models based on deep learning and optimized machine learning algorithms for brain tumour Multi-Classification. Expert Syst. Appl. 2024, 238, 122159.

10. Sarkar, A.; Maniruzzaman, M.; Alahe, M.A.; Ahmad, M. An Effective and Novel Approach for Brain Tumour Classification Using AlexNet CNN Feature Extractor and Multiple Eminent Machine Learning Classifiers in MRIs. J. Sens. 2023, 2023, 1224619.

11. Mahmoud, A.; Awad, N.A.; Alsubaie, N.; Ansarullah, S.I.; Alqahtani, M.S.; Abbas, M.; Usman, M.; Soufiene, B.O.; Saber, A. Advanced Deep Learning Approaches for Accurate Brain Tumour Classification in Medical Imaging. Symmetry 2023, 15, 571.

12. Bansal, S.; Jadon, R.S.; Gupta, S.K. A Robust Hybrid Convolutional Network for Tumour Classification Using Brain MRI Image Datasets. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 576.

13. Woźniak, M.; Siłka, J.; Wieczorek, M. Deep neural network correlation learning mechanism for CT brain tumour detection. Neural Comput. Appl. 2021, 35, 14611–14626.

14. Zebari, N.A.; Alkurdi, A.A.H.; Marqas, R.B.; Salih, M.S. Enhancing Brain Tumour Classification with Data Augmentation and DenseNet121. Acad. J. Nawroz Univ. 2023, 12, 323–334.

15. Haque, R.; Hassan, M.M.; Bairagi, A.K.; Shariful Islam, S.M. NeuroNet19: An explainable deep neural network model for the classification of brain tumors using magnetic resonance imaging data. Sci. Rep. 2024, 14, 1524.

16. Thakur, M.; Kuresan, H.; Dhanalakshmi, S.; Lai, K.W.; Wu, X. Soft Attention Based DenseNet Model for Parkinson’s Disease Classification Using SPECT Images. Front. Aging Neurosci. 2022, 14, 908143.

17. Hassan, M.M.; Haque, R.; Shariful Islam, S.M.; Meshref, H.; Alroobaea, R.; Masud, M.; Bairagi, A.K. NeuroWave-Net: Enhancing epileptic seizure detection from EEG brain signals via advanced convolutional and long short-term memory networks. AIMS Bioeng. 2024, 11, 85–109.

18. Wu, X.; Zhang, Y.T.; Lai, K.W.; Yang, M.Z.; Yang, G.L.; Wang, H.H. A Novel Centralized Federated Deep Fuzzy Neural Network with Multi-objectives Neural Architecture Search for Epistatic Detection. IEEE Trans. Fuzzy Syst. 2024.

19. Casapu, C.I.; Moldovanu, S. Classification of Microorganism Using Convolutional Neural Network and H2O AutoML. Syst. Theory Control. Comput. J. 2024, 4, 15–21.

20. Wu, X.; Wei, Y.; Jiang, T.; Wang, Y.; Jiang, S. A micro-aggregation algorithm based on density partition method for anonymizing biomedical data. Curr. Bioinform. 2019, 7, 667–675.

21. Bhuvaji, S.; Kadam, A.; Bhumkar, P.; Dedge, S.; Kanchan, S. Brain tumour classification (MRI). Kaggle 2020.

22. Shahid, H.; Khalid, A.; Liu, X.; Irfan, M.; Ta, D. A deep learning approach for the photoacoustic tomography recovery from undersampled measurements. Front. Neurosci. 2021, 15, 598693.

23. Li, Q.; Yu, Z.; Wang, Y.; Zheng, H. TumorGAN: A Multi-Modal Data Augmentation Framework for Brain Tumor Segmentation. Sensors 2020, 20, 4203.

24. Boob, D.; Dey, S.S.; Lan, G.H. Complexity of training ReLU neural network. Discret. Optim. 2022, 44, 100620.

25. Tan, M.; Le, Q.V. EfficientNetV2: Smaller Models and Faster Training. arXiv 2021, arXiv:2104.00298.

26. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

27. Tăbăcaru, G.; Moldovanu, S.; Barbu, M. Texture Analysis of Breast US Images Using Morphological Transforms, Hausdorff Dimension and Bagging Ensemble Method. In Proceedings of the 2024 32nd Mediterranean Conference on Control and Automation (MED), Chania, Greece, 11–14 June 2024.

28. Moldovanu, S.; Miron, M.; Rusu, C.-G.; Biswas, K.C.; Moraru, L. Refining skin lesions classification performance using geometric features of superpixels. Sci. Rep. 2023, 13, 11463.

29. Tăbăcaru, G.; Moldovanu, S.; Răducan, E.; Barbu, M. A Robust Machine Learning Model for Diabetic Retinopathy Classification. J. Imaging 2023, 10, 8.

30. Uyar, K.; Taşdemir, S.; Ülker, E.; Öztürk, M.; Kasap, H. Multi-class brain normality and abnormality diagnosis using modified Faster R-CNN. Int. J. Med. Inform. 2021, 155, 104576.

31. Shanjida, S.; Islam, S.; Mohiuddin, M. MRI-Image based Brain Tumour Detection and Classification using CNN-KNN. In Proceedings of the 2022 IEEE IAS Global Conference on Emerging Technologies (GlobConET), Arad, Romania, 20–22 May 2022; pp. 900–905.

32. Deepak, S.; Ameer, P.M. Automated categorization of brain tumour from MRI using CNN features and SVM. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 8357–8369.

33. Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

34. Siar, M.; Teshnehlab, M. Brain Tumour Detection Using Deep Neural Network and Machine Learning Algorithm. In Proceedings of the 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 24–25 October 2019; IEEE: Piscataway, NJ, USA; pp. 363–368.

35. AlSaeed, D.; Omar, S.F. Brain MRI analysis for Alzheimer’s disease diagnosis using CNN-based feature extraction and machine learning. Sensors 2022, 22, 2911.

36. Bohra, M.; Gupta, S. Pre-trained CNN Models and Machine Learning Techniques for Brain Tumour Analysis. In Proceedings of the 2nd International Conference on Emerging Frontiers in Electrical and Electronic Technologies (ICEFEET), Patna, India, 24–25 June 2022; pp. 1–6.

37. Khushi, H.M.T.; Masood, T.; Jaffar, A.; Akram, S.; Bhatti, S.M. Performance Analysis of state-of-the-art CNN Architectures for Brain Tumour Detection. Int. J. Imaging Syst. Technol. 2024, 34, e22949.

| References | Dataset | Models | Accuracy (%) | Limitations |
|---|---|---|---|---|
| [4], 2023 | Brain MRI dataset, 3264 images. | Proposed CNN; ResNet-50; VGG16; InceptionV3 | 93.3; 81.1; 71.6; 80 | No alternative evaluation with ML classifiers. |
| [5], 2023 | Brain MRI dataset, 2213 images. | ResNet-50 and InceptionV3 | 99.8 | Only pre-trained CNNs are used. |
| [6], 2024 | SARTAJ and Br35H brain MRI datasets, 7023 images. | Proposed CNNs | 99 | Pre-trained CNNs are not used. |
| [8], 2023 | Brain MRI dataset, 3264 images. | Proposed CNN–KNN | 86 | Pre-trained CNNs are not used. |
| [9], 2024 | Brain MRI dataset, 7023 images. | Darknet19-SVM; Darknet53-SVM; DenseNet201-SVM; EfficientNetB0-SVM; ResNet101-SVM; Xception-SVM; Proposed-KNN | 95.58; 96.87; 97.81; 97.93; 95.87; 96.23; 97.15 | No CNN with an original architecture is used. |
| [10], 2023 | Brain MRI dataset, 7023 images. | AlexNet–BayesNet; AlexNet–Sequential Minimal Optimization (SMO); AlexNet–Naïve Bayes (NB) | 88.75; 98.15; 86.2 | Only a pre-trained CNN is used. |
| [11], 2023 | Brain MRI dataset, 2950 images. | VGG-19 | 98.95 | No ML classifiers are used. |
| [12], 2024 | Brain MRI dataset, 7023 images. | Proposed CNN–SVM | 98 | Pre-trained CNNs are not used. |
| [13], 2021 | Brain MRI dataset, 3064 images. | Proposed CNN | 96 | Neither pre-trained CNNs nor ML classifiers are used. |
| [14], 2023 | Brain MRI dataset, 252 images. | DenseNet121 | 94.83 | No ML classifiers are used. |

| CNNs | Total Parameters (size) | Trainable Parameters (size) | Non-Trainable Parameters (size) |
|---|---|---|---|
| Proposed CNN | 6.67 MB | 6.67 MB | 0 |
| EfficientNetV2B0 | 23 MB | 22.76 MB | 236.75 KB |
| DenseNet169 | 48.77 MB | 48.16 MB | 618.75 KB |

| Batch Size | Image Size (pixels) | Epochs | Convolutional Layers |
|---|---|---|---|
| 20 | 80 × 80 | 10 | 1 |
| 32 | 152 × 152 | 15 | 2 |
| 64 | 224 × 224 | 20 | 3 |
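If the four columns above are read as candidate values varied independently (an assumption; the table does not state whether every combination was trained), the search space can be enumerated as in the sketch below. The constant and key names are hypothetical, chosen only for illustration.

```python
from itertools import product

# Candidate values copied from the table; treating them as a full grid is an assumption.
BATCH_SIZES = [20, 32, 64]
IMAGE_SIZES = [(80, 80), (152, 152), (224, 224)]
EPOCHS = [10, 15, 20]
CONV_LAYERS = [1, 2, 3]


def hyperparameter_grid():
    """Yield every combination of the candidate values as a configuration dict."""
    for batch, size, epochs, layers in product(BATCH_SIZES, IMAGE_SIZES, EPOCHS, CONV_LAYERS):
        yield {"batch_size": batch, "image_size": size, "epochs": epochs, "conv_layers": layers}


grid = list(hyperparameter_grid())
# Configurations matching the reported architecture (80 x 80 input, three conv blocks).
matching = [cfg for cfg in grid if cfg["image_size"] == (80, 80) and cfg["conv_layers"] == 3]
print(len(grid), len(matching))
```

Under this reading the full grid has 3^4 = 81 training runs, nine of which share the 80 × 80 input and three convolutional layers used by the proposed CNN.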

| Layer Name (Type) | Activation Maps (Output Shape) | Parameters |
|---|---|---|
| rescaling_1 (Rescaling) | (None, 80, 80, 3) | 0 |
| conv2d_3 (Conv2D) | (None, 80, 80, 32) | 896 |
| max_pooling2d_3 (MaxPooling2D) | (None, 40, 40, 32) | 0 |
| dropout_3 (Dropout) | (None, 40, 40, 32) | 0 |
| conv2d_4 (Conv2D) | (None, 40, 40, 64) | 18,496 |
| max_pooling2d_4 (MaxPooling2D) | (None, 20, 20, 64) | 0 |
| dropout_4 (Dropout) | (None, 20, 20, 64) | 0 |
| conv2d_5 (Conv2D) | (None, 20, 20, 128) | 73,856 |
| max_pooling2d_5 (MaxPooling2D) | (None, 10, 10, 128) | 0 |
| dropout_5 (Dropout) | (None, 10, 10, 128) | 0 |
| flatten_1 (Flatten) | (None, 12,800) | 0 |
| dense_2 (Dense) | (None, 128) | 1,638,528 |
| dense_3 (Dense) | (None, 128) | 16,512 |
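The parameter counts in the layer table follow from the standard Conv2D and Dense formulas. The sketch below recomputes them, assuming 3 × 3 kernels with 'same' padding and 2 × 2 max pooling (inferred from the listed shapes and counts, e.g. 896 = (3·3·3 + 1)·32, not stated explicitly), and assuming the MB figures in the parameter-size table are float32 memory estimates in binary megabytes. Under these assumptions the total of 1,748,288 parameters corresponds to the 6.67 MB reported for the proposed CNN.

```python
def conv2d_params(kernel, in_channels, filters):
    """Each filter has kernel*kernel*in_channels weights plus one bias."""
    return (kernel * kernel * in_channels + 1) * filters


def dense_params(in_units, out_units):
    """A fully connected layer has in_units*out_units weights plus out_units biases."""
    return (in_units + 1) * out_units


height = width = 80  # input after the Rescaling layer: 80 x 80 x 3
channels = 3
layer_params = []
for filters in (32, 64, 128):
    # Assumed 3x3 kernels with 'same' padding: the convolution keeps height and width.
    layer_params.append(conv2d_params(3, channels, filters))
    channels = filters
    # 2x2 max pooling halves height and width; dropout adds no parameters.
    height, width = height // 2, width // 2

flattened = height * width * channels  # units produced by the Flatten layer
layer_params.append(dense_params(flattened, 128))  # dense_2
layer_params.append(dense_params(128, 128))  # dense_3

total = sum(layer_params)
size_mib = total * 4 / 1024**2  # float32 weights, 4 bytes each, in binary megabytes
print(layer_params)
print(flattened, total, round(size_mib, 2))
```

The dense_2 layer alone holds about 94% of the weights, which is typical when a large flattened feature map feeds a fully connected layer.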

| ML Classifiers | Hyperparameters |
|---|---|
| RF | number of trees = 50; tree depth = 100 |
| KNN | n_neighbors = 4; neighbour search = KDTree |
| SVM | C = 0.1; gamma = 10 |
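The effect of gamma = 10 on the SVM can be seen through the radial basis function (RBF) kernel. This sketch assumes the SVM uses an RBF kernel (the kernel type is not given in the table; RBF is, for example, the default in scikit-learn's `SVC`); the comparison value gamma = 0.1 is hypothetical and included only for contrast.

```python
import math


def rbf_kernel(x, z, gamma):
    """Gaussian (RBF) kernel: K(x, z) = exp(-gamma * ||x - z||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


# Similarity as a function of distance for gamma = 10 (table value)
# and a hypothetical gamma = 0.1 for comparison.
for distance in (0.0, 0.1, 0.3, 0.5, 1.0):
    print(
        distance,
        round(rbf_kernel([0.0], [distance], gamma=10), 4),
        round(rbf_kernel([0.0], [distance], gamma=0.1), 4),
    )
```

With gamma = 10 the kernel falls below 0.1 once two feature vectors are 0.5 apart, so each support vector influences only a small neighbourhood; a small C such as 0.1 counteracts this by tolerating more margin violations. Both effects depend on the scale of the extracted features.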

| Datasets | CNNs | MLs | ACC | F1-Score | MCC | Confusion Matrix [[TP FP] [FN TN]] |
|---|---|---|---|---|---|---|
| Validation dataset | Proposed CNN | SVM | 0.955 | 0.968 | 0.891 | [[167 4] [7 65]] |
| | | RF | 0.950 | 0.965 | 0.880 | [[167 4] [8 64]] |
| | | KNN | 0.946 | 0.962 | 0.871 | [[166 5] [8 64]] |
| | DenseNet169 | SVM | 0.979 | 0.985 | 0.952 | [[167 4] [1 71]] |
| | | RF | 0.971 | 0.979 | 0.933 | [[165 6] [1 71]] |
| | | KNN | 0.979 | 0.985 | 0.952 | [[167 4] [1 71]] |
| | EfficientNetV2B0 | SVM | 0.995 | 0.996 | 0.991 | [[115 0] [1 104]] |
| | | RF | 0.983 | 0.988 | 0.961 | [[168 3] [1 71]] |
| | | KNN | 0.983 | 0.988 | 0.961 | [[168 3] [1 71]] |
| Test dataset | Proposed CNN | SVM | 0.950 | 0.951 | 0.902 | [[106 9] [2 103]] |
| | | RF | 0.972 | 0.974 | 0.946 | [[111 4] [2 103]] |
| | | KNN | 0.945 | 0.946 | 0.893 | [[105 10] [2 103]] |
| | DenseNet169 | SVM | 0.986 | 0.987 | 0.973 | [[112 3] [0 105]] |
| | | RF | 0.981 | 0.982 | 0.964 | [[111 4] [0 105]] |
| | | KNN | 0.981 | 0.982 | 0.964 | [[111 4] [0 105]] |
| | EfficientNetV2B0 | SVM | 0.995 | 0.997 | 0.990 | [[171 0] [1 71]] |
| | | RF | 0.986 | 0.987 | 0.973 | [[113 2] [1 104]] |
| | | KNN | 0.986 | 0.987 | 0.973 | [[113 2] [1 104]] |
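The ACC, F1-score and MCC columns can be recomputed from each confusion matrix with the standard formulas. The sketch below uses the [[TP FP] [FN TN]] layout given in the table header; the function name is hypothetical. Applied to the validation row for the proposed CNN with SVM, it yields 0.955, 0.968 and 0.891 after rounding to three decimals, matching the reported values.

```python
import math


def metrics_from_confusion(cm):
    """ACC, F1 and MCC from a 2x2 matrix laid out as [[TP, FP], [FN, TN]]."""
    (tp, fp), (fn, tn) = cm
    acc = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # MCC is 0 when a margin is empty
    return acc, f1, mcc


# Validation row: proposed CNN features classified by SVM.
acc, f1, mcc = metrics_from_confusion([[167, 4], [7, 65]])
print(f"ACC={acc:.3f} F1={f1:.3f} MCC={mcc:.3f}")
```

MCC uses all four cells, so it is less flattering than accuracy when the two classes are imbalanced, as they are in the validation split (171 vs. 72 samples in this row).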

| State-of-the-Art Methods | CNN-ML Models | ACC |
|---|---|---|
| Siar et al. [34], 2019 | Proposed CNN-DT | 0.942 |
| AlSaeed and Omar [35], 2022 | ResNet50-SVM; ResNet50-RF | 0.92; 0.857 |
| Bohra and Gupta [36], 2022 | VGG16-logistic regression classifier | 0.977 |
| Sarkar et al. [10], 2023 | AlexNet-BayesNet; AlexNet-Sequential Minimal Optimization (SMO); AlexNet-Naïve Bayes | 0.887; 0.981; 0.862 |
| Bansal et al. [12], 2024 | Proposed CNN-SVM | 0.99 |
| Celik and Inik [9], 2024 | Darknet19-SVM; Darknet53-SVM; DenseNet201-SVM; EfficientNetB0-SVM; ResNet101-SVM; Xception-SVM; Proposed-KNN | 0.955; 0.968; 0.978; 0.979; 0.958; 0.962; 0.971 |
| Khushi et al. [37], 2024 | AlexNet-Stochastic Gradient Descent (SGD) | 0.987 |
| Proposed hybrid CNN-ML | Proposed CNN-RF; DenseNet169-SVM; EfficientNetV2B0-SVM | 0.972; 0.986; 0.995 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moldovanu, S.; Tăbăcaru, G.; Barbu, M. Convolutional Neural Network–Machine Learning Model: Hybrid Model for Meningioma Tumour and Healthy Brain Classification. J. Imaging 2024, 10, 235. https://doi.org/10.3390/jimaging10090235
