Optimizing Digital Image Quality for Improved Skin Cancer Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Materials

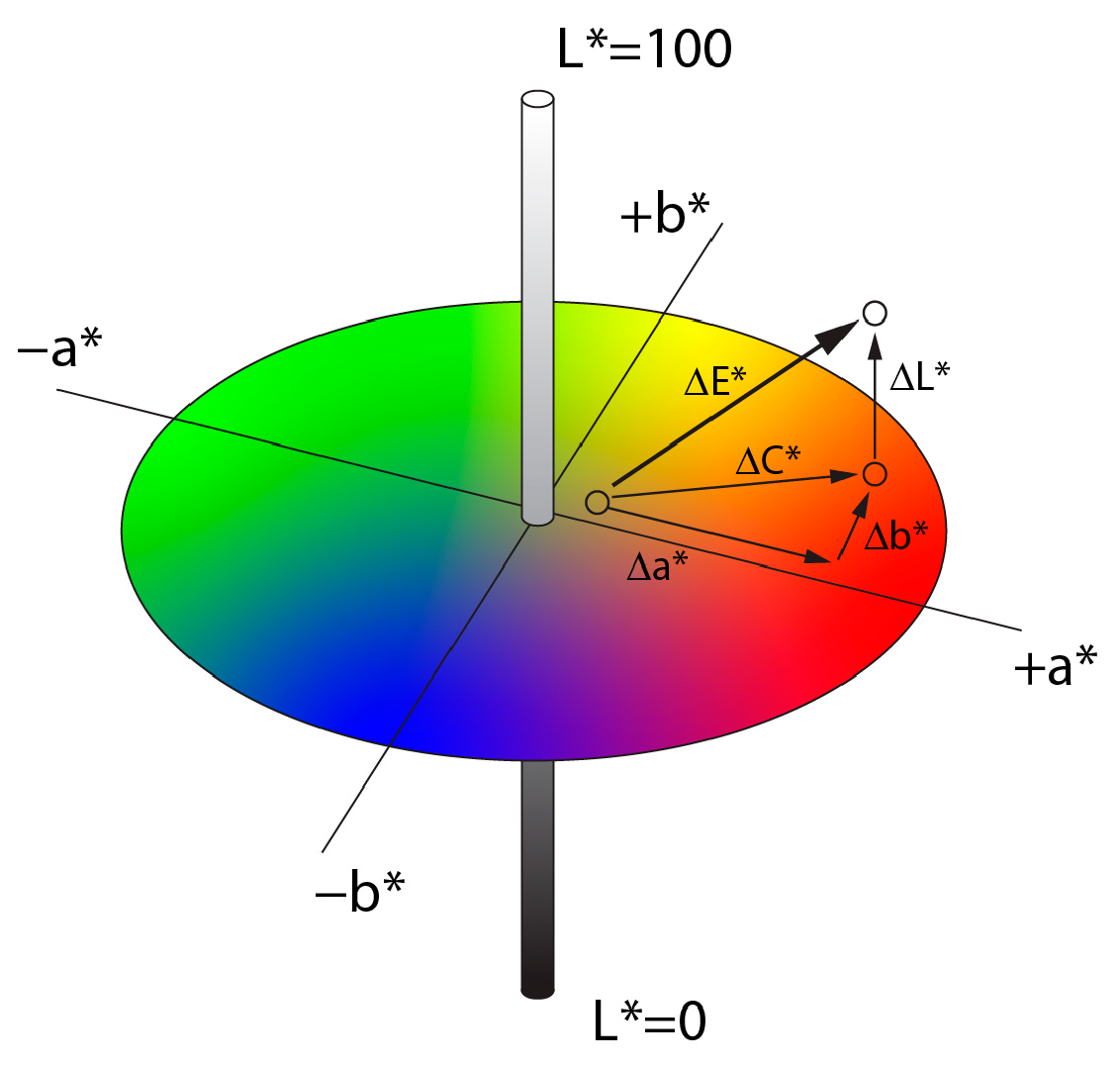

2.2. Quantifying Color Differences and Reproduction Accuracy

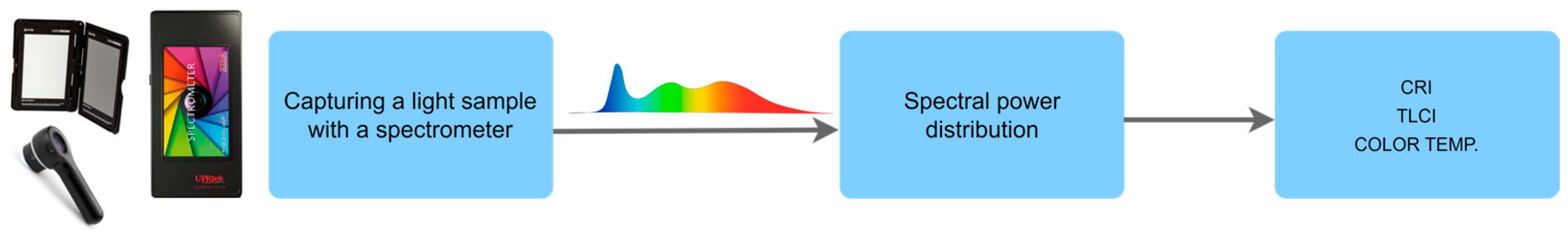
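As a rough illustration of the kind of metrics this section names, the sketch below computes the CIE76 color difference ΔE*ab and a chroma difference ΔC*ab between a reference and a measured CIELAB value, then summarizes them as average, minimum, and maximum. It is a minimal sketch under stated assumptions: ΔC*ab is taken here as the difference in chroma C* = sqrt(a*² + b*²), which may not match the exact definition used in the article; the function names and the Lab values are hypothetical; and CIEDE2000 (ΔE00) is deliberately not implemented.

```python
import math
from statistics import mean


def delta_e_ab(lab_ref, lab_sample):
    """CIE76 color difference: Euclidean distance in CIELAB."""
    d_l = lab_sample[0] - lab_ref[0]
    d_a = lab_sample[1] - lab_ref[1]
    d_b = lab_sample[2] - lab_ref[2]
    return math.sqrt(d_l ** 2 + d_a ** 2 + d_b ** 2)


def chroma(lab):
    """Chroma C*ab = sqrt(a*^2 + b*^2)."""
    return math.hypot(lab[1], lab[2])


def delta_c_ab(lab_ref, lab_sample):
    """Chroma difference (assumed definition): C*_sample - C*_ref."""
    return chroma(lab_sample) - chroma(lab_ref)


def summarize(values):
    """Average, minimum, and maximum of a list of deviations."""
    return {"avg": mean(values), "min": min(values), "max": max(values)}


# Hypothetical (reference, measured) L*a*b* pairs; NOT data from the article.
patches = [
    ((50.0, 0.0, 0.0), (50.0, 3.0, 4.0)),
    ((60.0, 10.0, 0.0), (62.0, 10.0, 0.0)),
]

delta_e_values = [delta_e_ab(ref, meas) for ref, meas in patches]
delta_c_values = [abs(delta_c_ab(ref, meas)) for ref, meas in patches]

print(summarize(delta_e_values))
print(summarize(delta_c_values))
```

In this toy input the second patch differs only in lightness, so its chroma difference is zero while its ΔE*ab is not. This shows why the two metrics are usually reported side by side.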

2.3. Evaluation of Light Source Quality and Color Fidelity

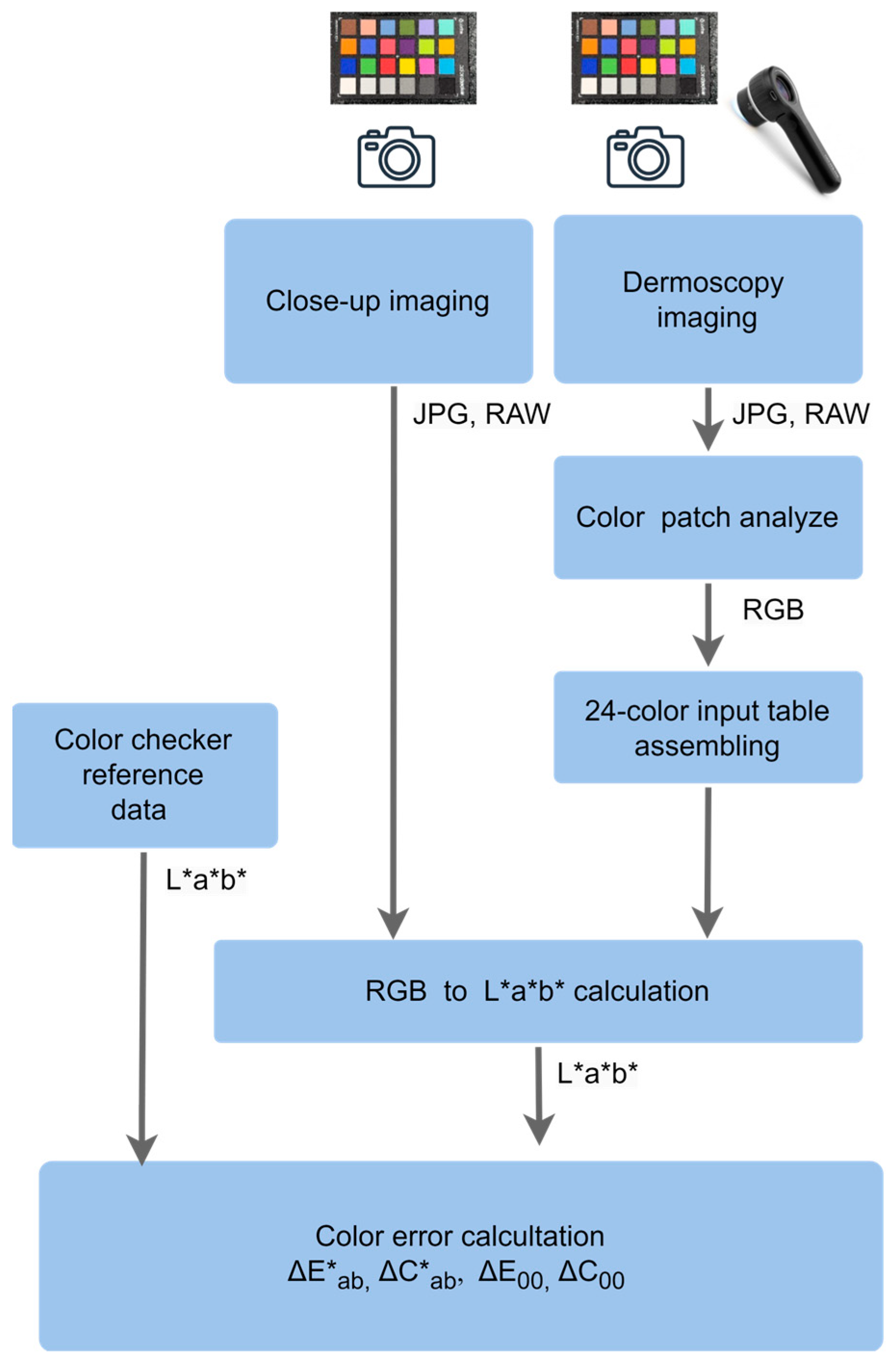

2.4. Assessment of Color Deviations in Close-Up and Dermoscopic Imaging

3. Results

3.1. Color Deviations in Close-Up and Dermoscopic Images Using a Professional Dermatology Device

3.2. Color Deviations in Close-Up Imaging Across All Devices

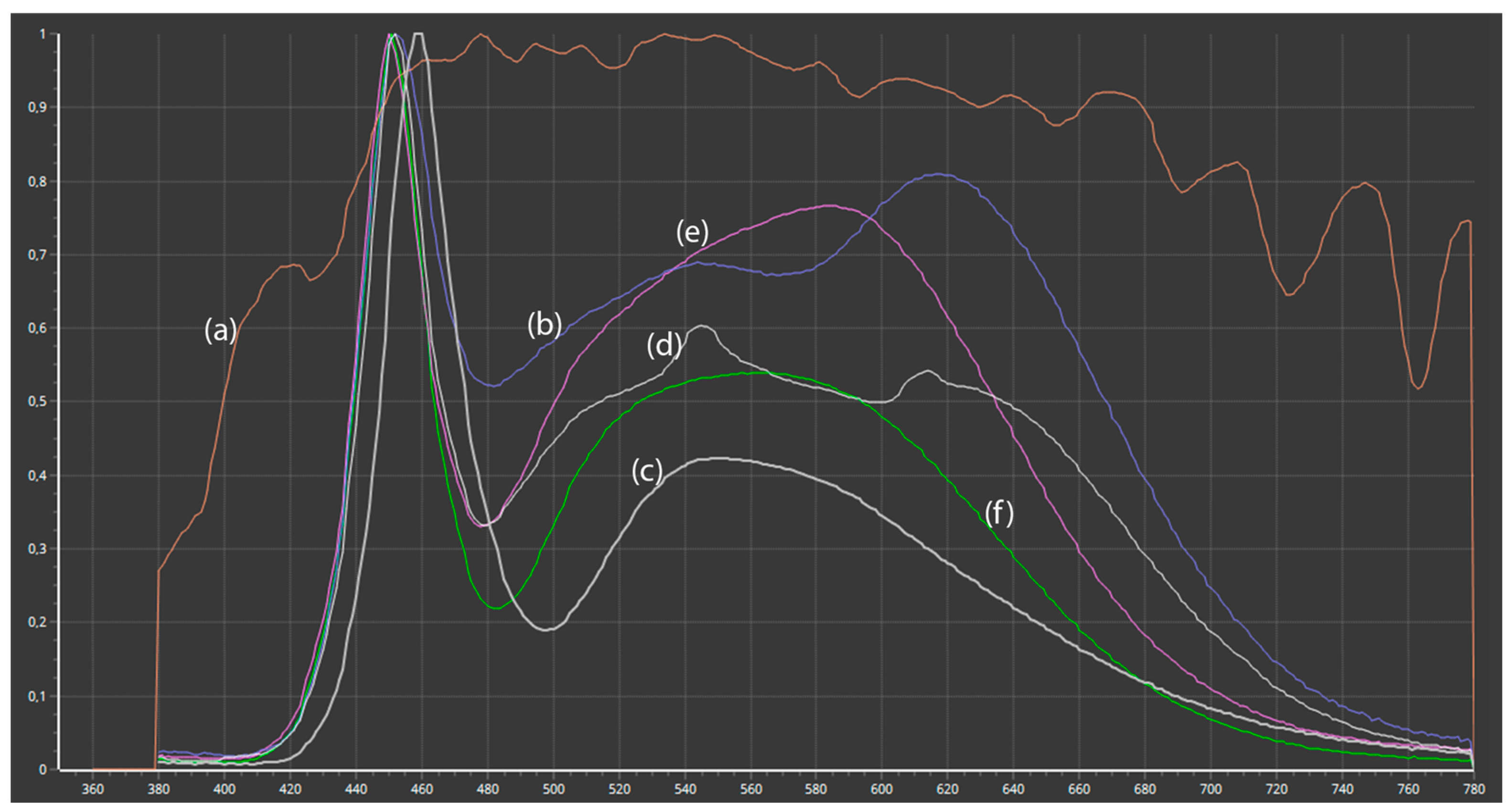

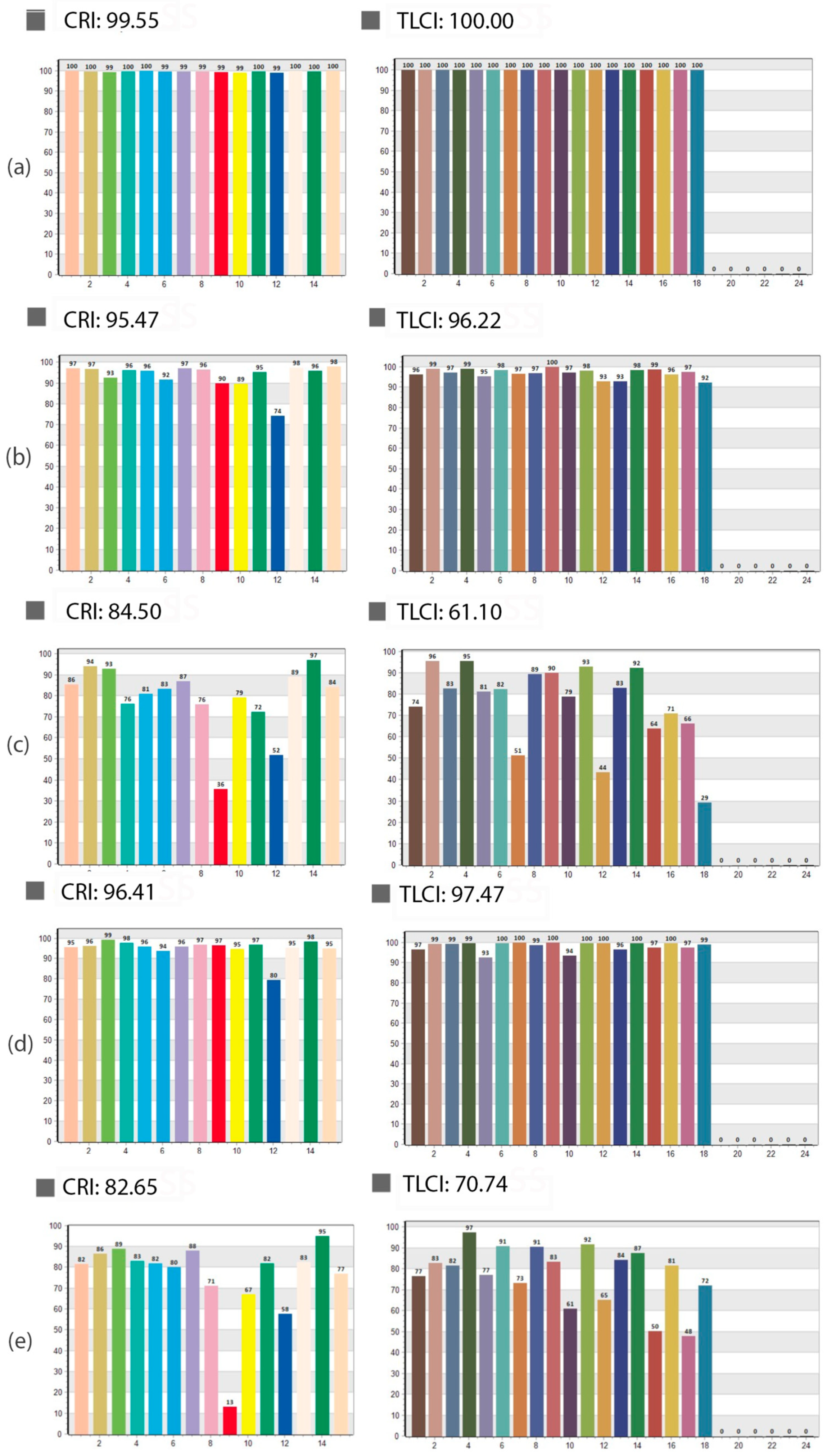

3.3. Influence of Spectral Light Characteristics on Color Accuracy

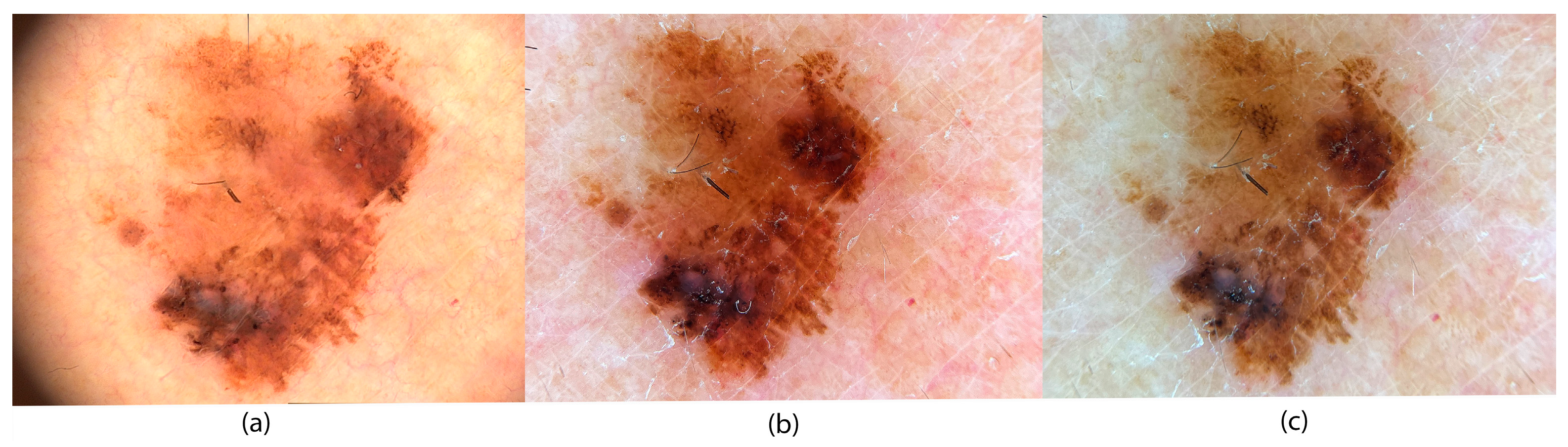

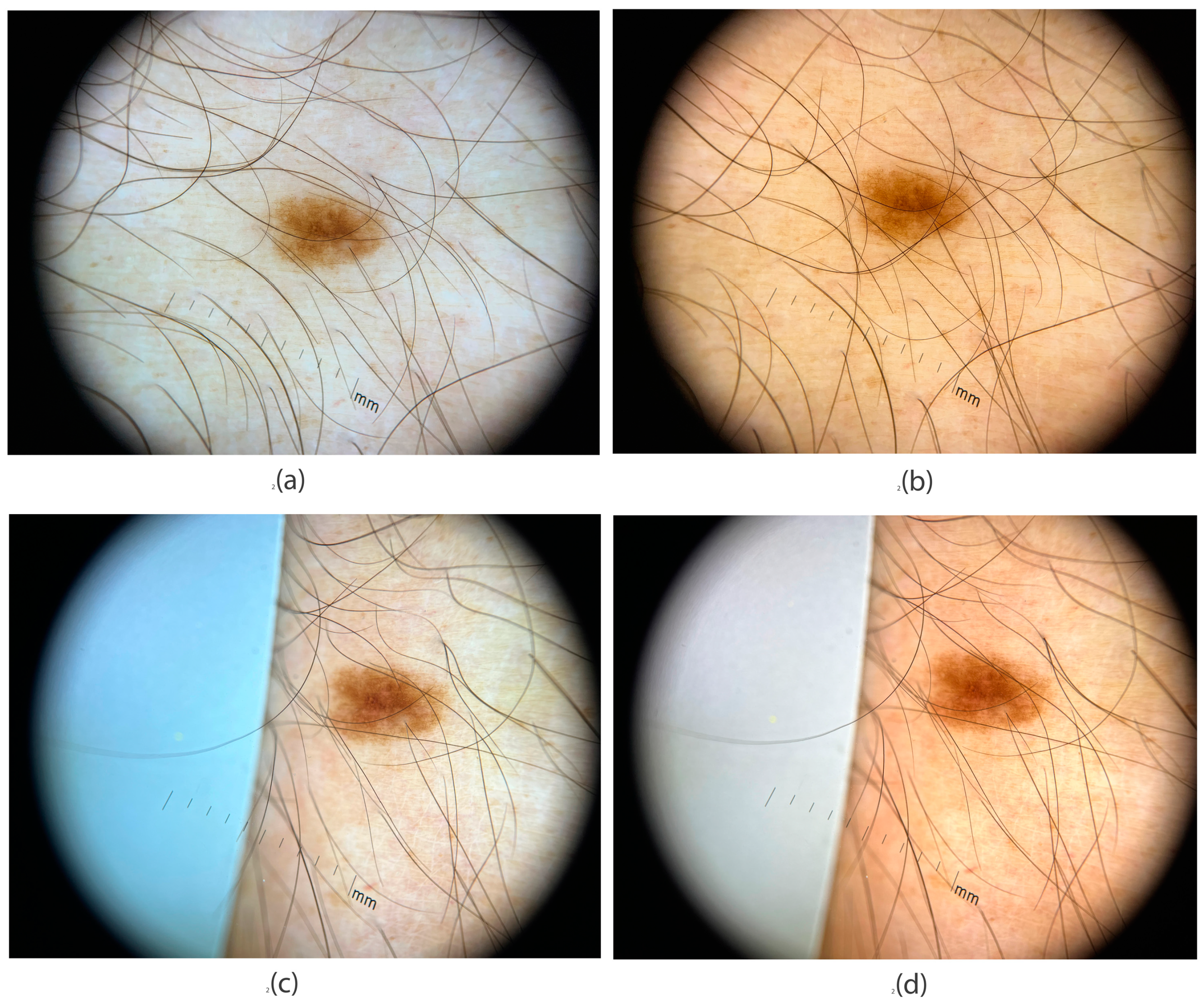

3.4. Application of Image Evaluation to Melanoma Diagnosis

- Set white balance to a correlated color temperature (CCT) of 7080 K

- Set ISO to 48

- Set shutter speed to 1/800 s
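The three settings listed above could be kept as a small structured preset so that they are logged or applied consistently between sessions. The following is only a hypothetical sketch: the class and field names are assumptions made for illustration and do not correspond to any camera vendor's API.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class CapturePreset:
    """Hypothetical container for manual camera settings (names are illustrative)."""

    white_balance_kelvin: int  # correlated color temperature in kelvin
    iso: int                   # sensor sensitivity
    shutter_speed_s: Fraction  # exposure time in seconds

    def exposure_time_ms(self) -> float:
        """Exposure time converted to milliseconds."""
        return float(self.shutter_speed_s * 1000)


# Values taken from the list above.
PRESET = CapturePreset(
    white_balance_kelvin=7080,
    iso=48,
    shutter_speed_s=Fraction(1, 800),
)

print(PRESET.exposure_time_ms())  # 1.25
```

Storing the shutter speed as a `Fraction` keeps the familiar 1/800 notation exact and avoids rounding when converting to milliseconds.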

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Statistic | Close-up ΔE* | Close-up ΔC* | Close-up ΔE00 | Close-up ΔC00 | Dermoscopy ΔE* | Dermoscopy ΔC* | Dermoscopy ΔE00 | Dermoscopy ΔC00 |
|---|---|---|---|---|---|---|---|---|
| Avg | 15.8 | 9.2 | 10.9 | 4.6 | 20.7 | 9.6 | 16.2 | 4.4 |
| Min | 2.1 | 1.1 | 3 | 0.8 | 0.5 | 0.2 | 0.5 | 0.2 |
| Max | 30.9 | 29.1 | 16 | 10.2 | 47.1 | 29.1 | 40.1 | 11.4 |

| Camera Model | Statistic | Studio light (CCT 5500 K) ΔE* | Studio light ΔC* | Studio light ΔE00 | Studio light ΔC00 | Dermoscope LED light ΔE* | Dermoscope LED ΔC* | Dermoscope LED ΔE00 | Dermoscope LED ΔC00 |
|---|---|---|---|---|---|---|---|---|---|
| Canon EOS R7 | Avg | 11.5 | 9.7 | 6.3 | 4.7 | 16.2 | 12.2 | 8.7 | 5.1 |
| Canon EOS R7 | Min | 3.6 | 0.5 | 2.4 | 0.5 | 0.8 | 0.4 | 1 | 0.4 |
| Canon EOS R7 | Max | 24.7 | 24.6 | 12.9 | 9.5 | 34.4 | 33.3 | 17.1 | 14.3 |
| iPhone 13 | Avg | 18 | 11.2 | 11.8 | 4.7 | 26.5 | 21.8 | 12.3 | 7.5 |
| iPhone 13 | Min | 2.6 | 0.7 | 1.9 | 0.8 | 3.8 | 2.2 | 3.4 | 1.3 |
| iPhone 13 | Max | 35.2 | 34.2 | 22.7 | 10.1 | 55.1 | 55 | 26.4 | 18 |
| Canon EOS 5DIII | Avg | 10.9 | 8.3 | 6.2 | 4 | 19.6 | 16.7 | 9.6 | 7 |
| Canon EOS 5DIII | Min | 1.1 | 1.1 | 0.8 | 0.8 | 1.9 | 1.9 | 2.2 | 2.2 |
| Canon EOS 5DIII | Max | 18.8 | 18.6 | 9.8 | 8.7 | 40.3 | 39.5 | 15.2 | 13.5 |
| Galaxy S24 | Avg | 17.4 | 15.3 | 9.6 | 7.5 | 16.8 | 9.7 | 12.1 | 5.3 |
| Galaxy S24 | Min | 5.7 | 1.6 | 4.2 | 0.7 | 4.5 | 0.2 | 3.1 | 0.1 |
| Galaxy S24 | Max | 43.6 | 43.2 | 19.8 | 18.4 | 37.8 | 32 | 23.2 | 19.7 |
| Sony A7III | Avg | 12.3 | 8.9 | 7.3 | 3.7 | 24 | 19.6 | 11.5 | 7.3 |
| Sony A7III | Min | 1.7 | 0.3 | 1.2 | 0.5 | 3.8 | 2.2 | 3.4 | 1.3 |
| Sony A7III | Max | 32 | 31.2 | 15.3 | 9.6 | 55.7 | 55 | 34 | 32.6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dugonik, B.; Golob, M.; Marhl, M.; Dugonik, A. Optimizing Digital Image Quality for Improved Skin Cancer Detection. J. Imaging 2025, 11, 107. https://doi.org/10.3390/jimaging11040107