A Comprehensive Review on Document Image Binarization

Abstract

1. Introduction

- Comprehensive Overview: Emphasizes the critical role of document binarization in digital document analysis.

- Challenge Identification: Highlights key issues, including camera-captured documents, complex layouts, and historical preservation.

- Methodological Evolution: Traces the progression from traditional thresholding to modern deep learning techniques, evaluating current approaches in terms of effectiveness, robustness, and applicability.

- Benchmark Datasets: Introduces and evaluates datasets for performance assessment and machine learning training.

- Evaluation Metrics: Reviews metrics used in prior studies, advocating a standardized and rigorous evaluation of binarization methods.

- Future Directions: Explores emerging applications and research opportunities, particularly those leveraging deep learning.

2. Document Binarization Importance

3. Overview of Binarization Challenges

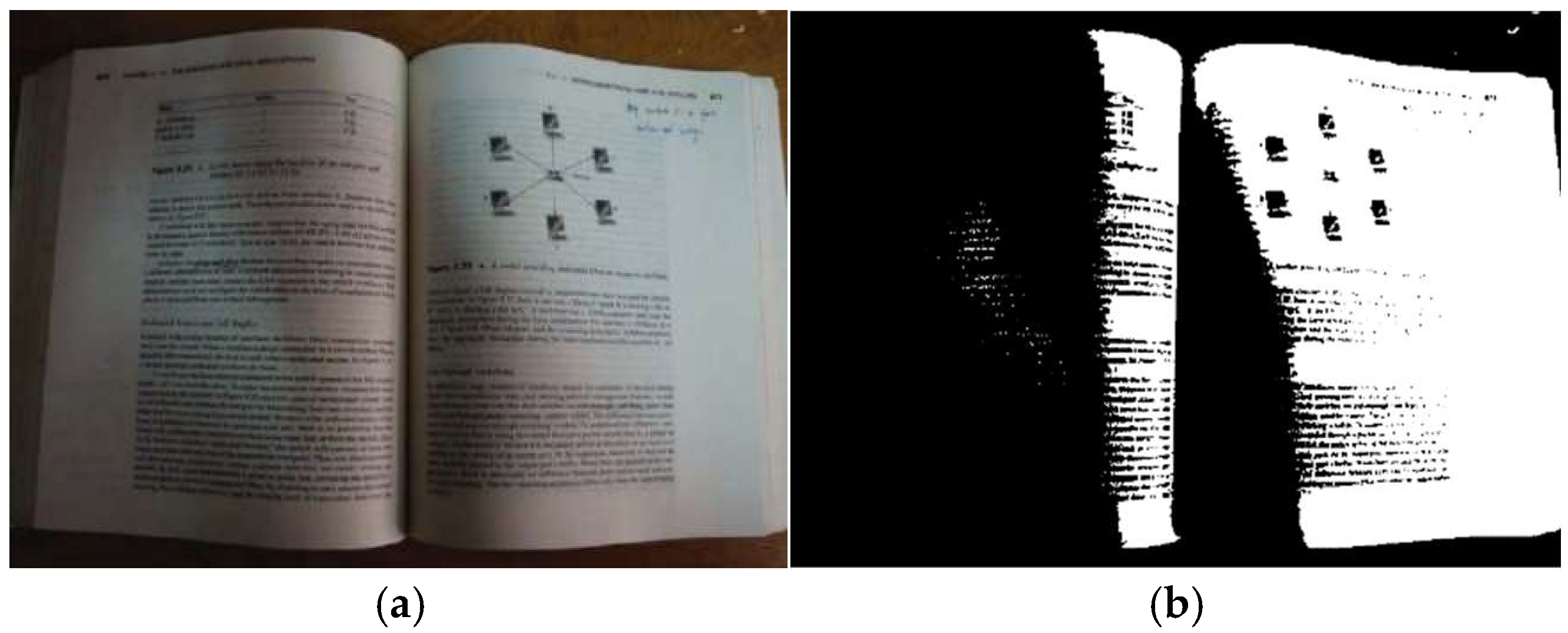

3.1. Documents Captured by Camera

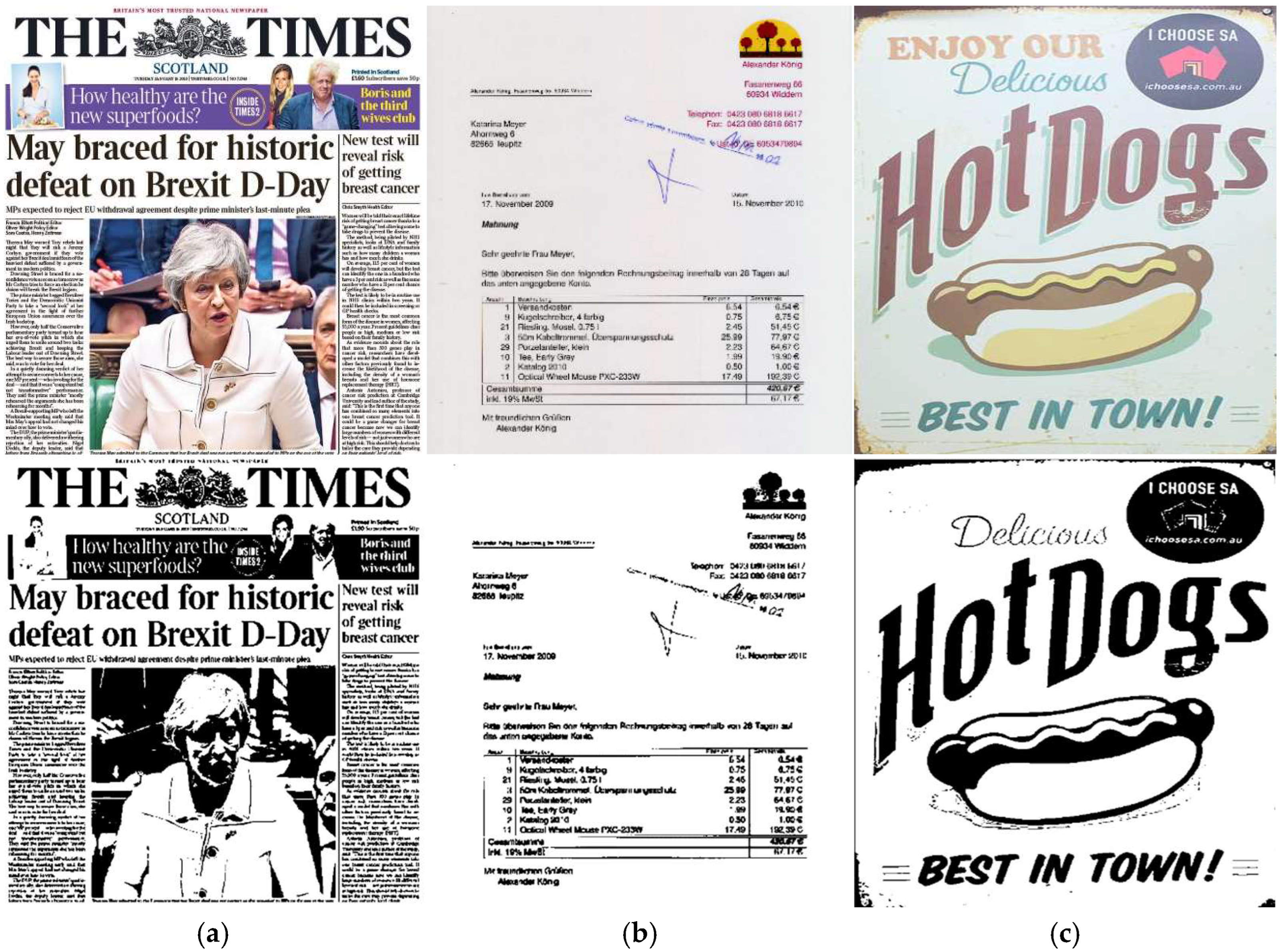

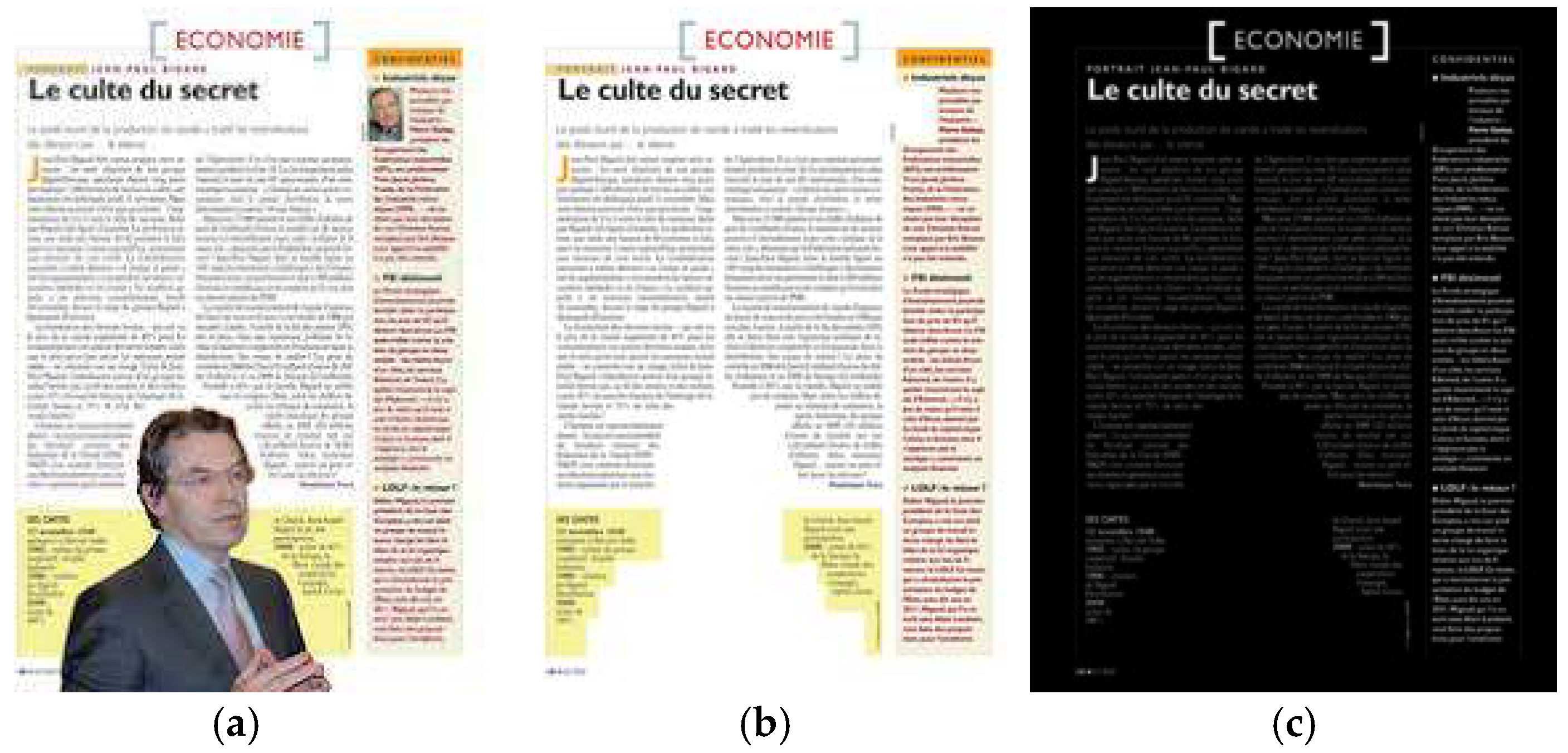

3.2. Documents with Complex Structures

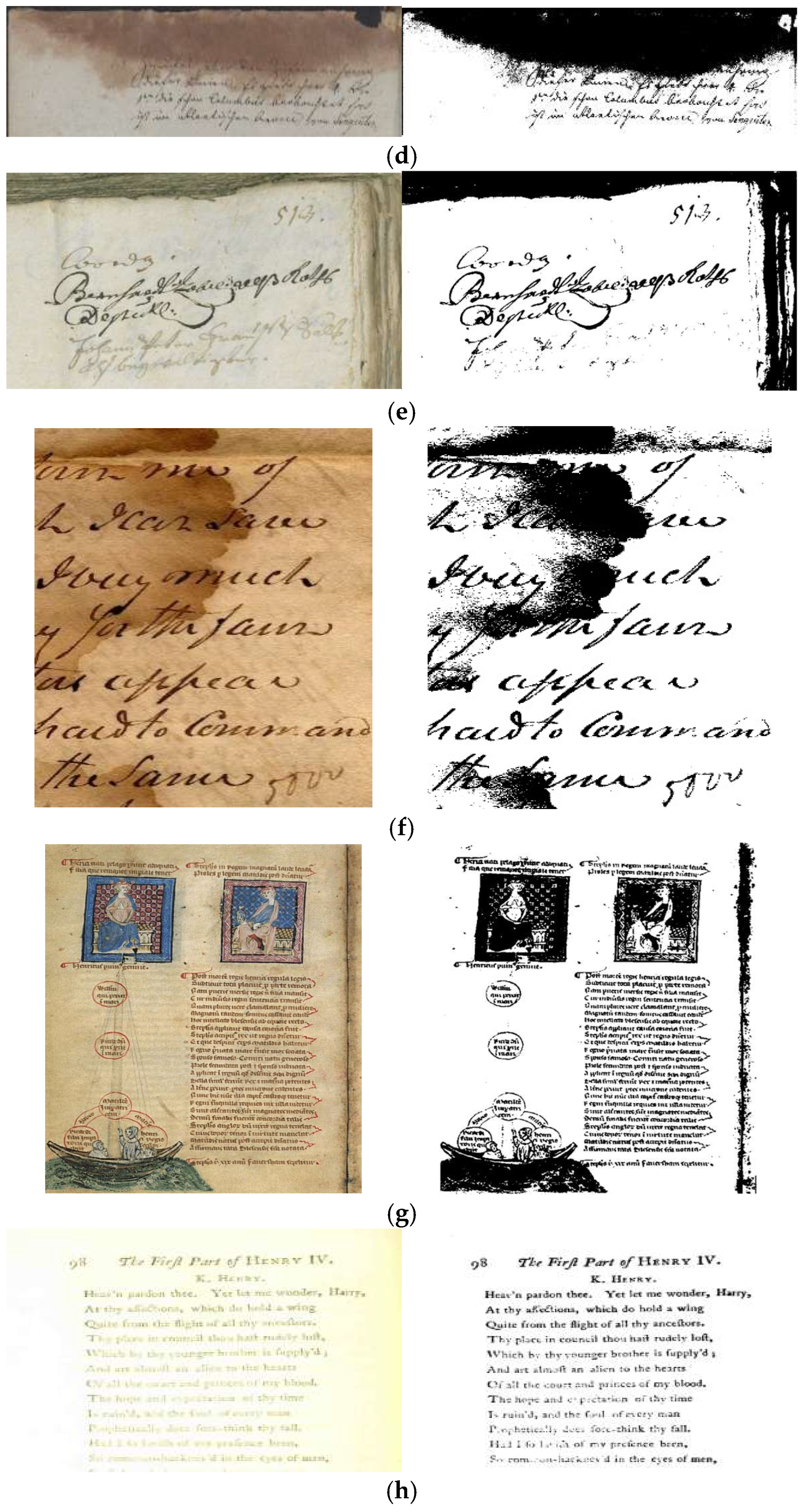

3.3. Degraded and Historical Documents

- Ink leakage: Ink leakage occurs when ink from one side of the paper bleeds onto the opposite side, leading to overlapping text and uneven background intensities, which complicates binarization [45,46]. This can result in illegible text and dark areas, impeding the application of consistent binarization.

- Fold lines: Keeping a document folded for a long time leaves creases that damage or degrade the text they cross, posing challenges during binarization [44,47,48]. These marks obscure text and create distortions, leaving text regions missing or illegible.

- Deteriorated documents: Deterioration caused by environmental factors such as improper storage and handling, coupled with inherent material instability [51,52,53], leads to wear and tear. The resulting color and contrast variations hinder binarization algorithms from accurately distinguishing text regions.

- Faded text: Faded text is a common challenge in historical documents. As text fades over time, it becomes lighter, and the density of characters may vary, making it difficult for binarization methods to accurately extract text [54].

- Stains and smudges: Smudges and stains on historical documents significantly impede binarization [55,56]. They can obscure text, introduce blurring and distortion, and produce variations in color and contrast. Furthermore, exposure to liquids or moisture can make ink bleed, resulting in smudged text.

- Complex layouts and color differences: Historical documents often contain colorful graphics and decorations, which pose significant challenges [57,58,59]. Complex document layouts, characterized by overlapping text, multiple columns, and varying font attributes, greatly complicate the binarization process. Moreover, color differences within a document hinder the algorithm’s ability to distinguish between text and background.

4. Document Binarization Methods

4.1. Threshold-Based Methods

- In global thresholding, a single threshold value is applied to the entire image to separate the foreground and background pixels. This approach works well for clean images with uniform backgrounds and foregrounds. However, it may not be suitable for degraded images in which illumination and intensity values vary across the foreground and background [67,68].

- In local thresholding, the image is divided into sub-images, and a separate threshold is calculated for each sub-image from its own pixels, allowing the threshold to adapt to changes in pixel values across the image. This approach is more robust to variations in illumination and intensity values [67,69].
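The contrast between the two strategies can be illustrated with a minimal sketch. It assumes a grayscale image stored as a NumPy array with dark text on a lighter background. The function names, the use of the mean as the threshold, the tile size, and the `offset` parameter are illustrative assumptions, not rules taken from the cited methods.

```python
import numpy as np


def global_threshold(gray, t=None):
    """Binarize with one threshold for the whole image.

    Assumption: if no threshold is given, the image mean is used.
    Returns a boolean mask where True marks foreground (dark) pixels.
    """
    gray = np.asarray(gray, dtype=np.float64)
    if t is None:
        t = gray.mean()
    return gray < t


def local_threshold(gray, tile=32, offset=10.0):
    """Binarize tile by tile, with one threshold per sub-image.

    Assumption: each tile's threshold is its own mean minus `offset`.
    The offset keeps flat background tiles from being split at their mean.
    """
    gray = np.asarray(gray, dtype=np.float64)
    out = np.zeros(gray.shape, dtype=bool)
    height, width = gray.shape
    for r in range(0, height, tile):
        for c in range(0, width, tile):
            block = gray[r:r + tile, c:c + tile]
            out[r:r + tile, c:c + tile] = block < (block.mean() - offset)
    return out
```

On a page whose left half is brightly lit and whose right half is in shadow, the single global threshold tends to miss text on the bright side and to mark the shaded background as text, whereas the per-tile thresholds follow the local intensity level.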

4.2. Edge-Based Methods

4.3. Texture-Based Methods

4.4. Clustering-Based Methods

4.5. Machine Learning-Based Methods

5. Benchmark Datasets

5.1. DIBCO Datasets

5.2. Bickley Diary Dataset

5.3. LS-HDIB

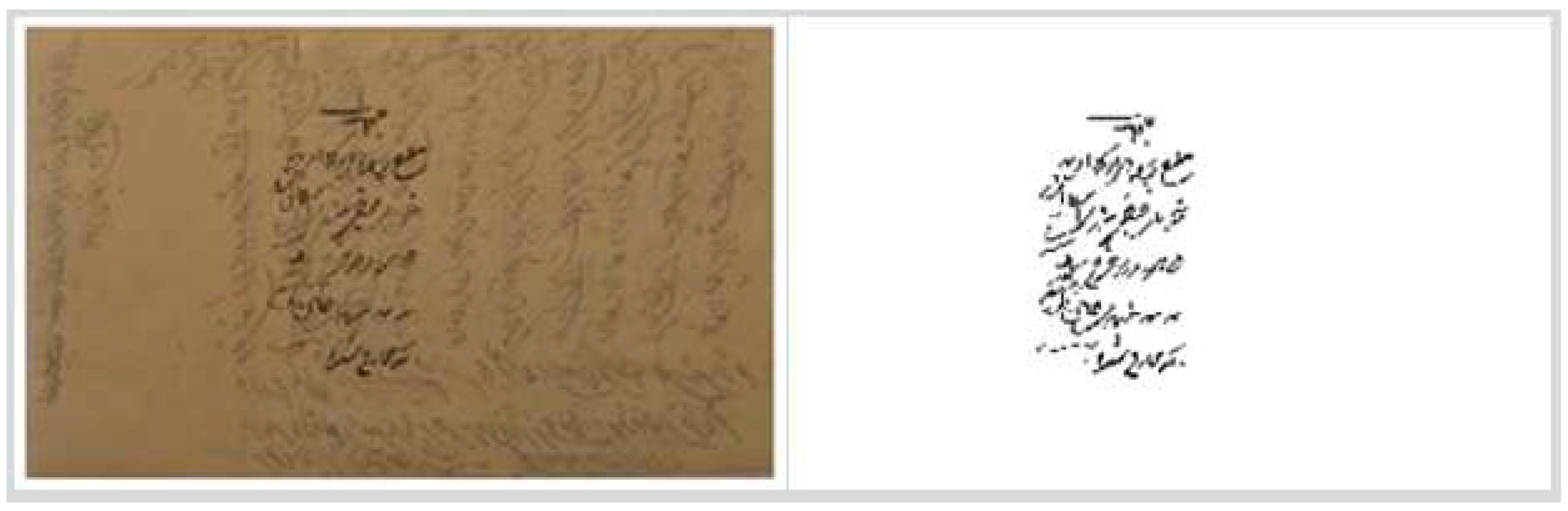

5.4. PHIBD 2012

5.5. LRDE DBD

6. Evaluation and Results

6.1. Evaluation Protocols

- True Positives (TPs): The count of pixels correctly identified as the foreground (black) in the binarized image, matching the ground truth.

- True Negatives (TNs): The count of pixels correctly identified as the background (white) in the binarized image, matching the ground truth.

- False Positives (FPs): The count of pixels incorrectly identified as the foreground (black) in the binarized image, while they are the background (white) in the ground truth.

- False Negatives (FNs): The count of pixels that are incorrectly identified as being in the background (white) in the binarized image, while being in the foreground (black) in the ground truth.

- Accuracy: The accuracy metric assesses the overall correctness of a binarization process by computing the proportion of correctly classified pixels in the binarized image when compared to the ground truth [95,126,127]. It reflects the binarization method’s capability to accurately identify both foreground and background pixels. Accuracy is calculated using the following equation: $\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$

- F-measure: The F-measure is a metric that offers a comprehensive evaluation of the accuracy and robustness of a binarization method. It combines precision and recall by calculating their harmonic mean, providing a balanced assessment of the method’s effectiveness [105,128,129]. The F-measure is computed using the following formula: $FM = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$, where $\text{Precision} = \frac{TP}{TP + FP}$ and $\text{Recall} = \frac{TP}{TP + FN}$.

- Pseudo-F-measure (pFM): The pseudo-F-measure (pFM) is a variant of the F-measure used to evaluate the performance of binarization algorithms on document images. Here, the pFM refers to the Fβ measure (weighted F-measure) commonly used in binary classification, which helps balance precision and recall for foreground detection in document binarization [65,103,130]. Unlike the traditional F-measure, the pFM considers the geometric information of the characters in the image. The pFM is calculated as follows:

- Peak Signal-to-Noise Ratio (PSNR): The peak signal-to-noise ratio (PSNR) metric is commonly used in image processing to measure the quality of a reconstructed image by comparing it to the original image [18,131,132]. The PSNR measures the ratio between the maximum possible power of a signal and the power of the distortion that affects the quality of its representation. It is calculated as follows: $\text{PSNR} = 10 \log_{10}\left(\frac{C^{2}}{\text{MSE}}\right)$, where MSE is the mean squared error between the two images and $C$ is the difference between the foreground and background pixel values.

- Geometric-mean pixel accuracy: The geometric-mean pixel accuracy is a widely used metric in image segmentation to evaluate the accuracy of pixel-level classifications [133]. It provides a comprehensive assessment of segmentation performance by considering both the true positive rate and the true negative rate. The calculation of the geometric-mean pixel accuracy is as follows: $\text{G-Accuracy} = \sqrt{\text{TPR} \times \text{TNR}}$, where $\text{TPR} = \frac{TP}{TP + FN}$ and $\text{TNR} = \frac{TN}{TN + FP}$.

- Distance Reciprocal Distortion (DRD): The Distance Reciprocal Distortion (DRD) measure evaluates the quality of binary image segmentation by measuring the distance between the segmented image and the ground truth, penalizing both false positives and false negatives [65,131]. It is calculated as follows:
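As a minimal sketch of how the count-based metrics above could be computed, the following assumes the binarized image and the ground truth are arrays of the same shape in which foreground (text) pixels are truthy. The function names are illustrative; the PSNR form assumes binary images with values in {0, 1}, so the peak difference `c` defaults to 1 and the mean squared error equals the fraction of misclassified pixels. The pFM and DRD are not covered here, and degenerate inputs (for example, an image with no foreground pixels) are not handled.

```python
import math

import numpy as np


def confusion_counts(pred, gt):
    """Return (TP, TN, FP, FN) with foreground encoded as True."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))      # foreground in both
    tn = int(np.sum(~pred & ~gt))    # background in both
    fp = int(np.sum(pred & ~gt))     # predicted foreground, truly background
    fn = int(np.sum(~pred & gt))     # predicted background, truly foreground
    return tp, tn, fp, fn


def accuracy(tp, tn, fp, fn):
    """Fraction of pixels classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def f_measure(tp, tn, fp, fn):
    """Harmonic mean of precision and recall (tn is unused)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def g_accuracy(tp, tn, fp, fn):
    """Geometric mean of the true positive and true negative rates."""
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return math.sqrt(tpr * tnr)


def psnr(tp, tn, fp, fn, c=1.0):
    """PSNR in dB for binary images; infinite when the images are identical."""
    mse = (fp + fn) / (tp + tn + fp + fn)
    if mse == 0:
        return float("inf")
    return 10 * math.log10(c ** 2 / mse)
```

A typical use is to call `confusion_counts(pred, gt)` once per image and pass the resulting tuple to each metric, for example `f_measure(*confusion_counts(pred, gt))`.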

6.2. Evaluation Results

7. Conclusions and Discussion

- There is a critical need for larger, more varied, and comprehensive datasets that cover various languages, scenarios, and challenges for training machine learning-based binarization models.

- While earlier methods combined diverse approaches, recent trends favor machine learning. We propose exploring hybrid methods by integrating machine learning with traditional techniques like enhancement, edge detection, and filtering, alongside other binarization types, to leverage their combined strengths for improved accuracy and robustness.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Bataineh, B.; Abdullah, S.N.H.S.; Omar, K. A novel statistical feature extraction method for textual images: Optical font recognition. Expert Syst. Appl. 2012, 39, 5470–5477. [Google Scholar] [CrossRef]

- Bataineh, B.; Abdullah, S.N.H.S.; Omar, K.; Faidzul, M. Adaptive thresholding methods for documents image binarization. In Proceedings of the Pattern Recognition: Third Mexican Conference, MCPR 2011, Cancun, Mexico, 29 June–2 July 2011; Proceedings 3. Springer: Berlin/Heidelberg, Germany, 2011; pp. 230–239. [Google Scholar]

- Sauvola, J.; Pietikäinen, M. Adaptive document image binarization. Pattern Recognit. 2000, 33, 225–236. [Google Scholar] [CrossRef]

- Alqudah, M.K.; Bin Nasrudin, M.F.; Bataineh, B.; Alqudah, M.; Alkhatatneh, A. Investigation of binarization techniques for unevenly illuminated document images acquired via handheld cameras. In Proceedings of the 2015 International Conference on Computer, Communications, and Control Technology (I4CT), Kuching, Malaysia, 21–23 April 2015; pp. 524–529. [Google Scholar] [CrossRef]

- Bataineh, B. An iterative thinning algorithm for binary images based on sequential and parallel approaches. Pattern Recognit. Image Anal. 2018, 28, 34–43. [Google Scholar] [CrossRef]

- He, S.; Schomaker, L. CT-Net: Cascade T-shape deep fusion networks for document binarization. Pattern Recognit. 2021, 118, 108010. [Google Scholar] [CrossRef]

- Liu, S.C.; Zhang, F.Y.; Chen, M.X.; Xie, Y.F.; He, P.; Shao, J. Document binarization using recurrent attention generative model. In Proceedings of the 30th the British Machine Vision Conference, Cardiff, UK, 9–12 September 2019; p. 95. [Google Scholar]

- Bataineh, B.; Abdullah, S.N.H.S.; Omar, K. An adaptive local binarization method for document images based on a novel thresholding method and dynamic windows. Pattern Recognit. Lett. 2011, 32, 1805–1813. [Google Scholar] [CrossRef]

- Zhou, Y.; Zuo, S.; Yang, Z.; He, J.; Shi, J.; Zhang, R. A review of document image enhancement based on document degradation problem. Appl. Sci. 2023, 13, 7855. [Google Scholar] [CrossRef]

- Alghamdi, A.; Alluhaybi, D.; Almehmadi, D.; Alameer, K.; Siddeq, S.B.; Alsubait, T. Arabic Handwritten Manuscripts Text Recognition: A Systematic Review. Int. J. Comput. Sci. Netw. Secur. 2022, 22, 319. [Google Scholar]

- Alshehri, S.A. Journal of Umm Al-Qura University for Engineering and Architecture. J. Umm Al-Qura Univ. Eng. Archit. 2020, 11, 18–21. [Google Scholar]

- Anvari, Z.; Athitsos, V. A Survey on Deep learning based Document Image Enhancement. arXiv 2021, arXiv:2112.02719. [Google Scholar]

- Nikolaidou, K.; Seuret, M.; Mokayed, H.; Liwicki, M. A survey of historical document image datasets. Int. J. Doc. Anal. Recognit. 2022, 25, 305–338. [Google Scholar] [CrossRef]

- Vlasceanu, G.V.; Ghenadie, C.; Nitu, R.; Boiangiu, C.A. A voting method for image binarization of text-based documents. In Proceedings of the 2022 21st RoEduNet Conference: Networking in Education and Research (RoEduNet), Sovata, Romania, 15–16 September 2022. [Google Scholar] [CrossRef]

- Yang, Z.; Zuo, S.; Zhou, Y.; He, J.; Shi, J. A Review of Document Binarization: Main Techniques, New Challenges, and Trends. Electronics 2024, 13, 1394. [Google Scholar] [CrossRef]

- Nina, O.; Morse, B.; Barrett, W. A recursive Otsu thresholding method for scanned document binarization. In Proceedings of the 2011 IEEE Workshop on Applications of Computer Vision (WACV), Kona, HI, USA, 5–7 January 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 307–314. [Google Scholar]

- Savakis, A.E. Adaptive document image thresholding using foreground and background clustering. In Proceedings of the 1998 International Conference on Image Processing ICIP98 (Cat. No. 98CB36269), Chicago, IL, USA, 4–7 October 1998; IEEE: Piscataway, NJ, USA, 1998; pp. 785–789. [Google Scholar]

- Bera, S.K.; Ghosh, S.; Bhowmik, S.; Sarkar, R.; Nasipuri, M. A non-parametric binarization method based on ensemble of clustering algorithms. Multimed. Tools Appl. 2021, 80, 7653–7673. [Google Scholar] [CrossRef]

- Jacobs, B.A.; Celik, T. Unsupervised document image binarization using a system of nonlinear partial differential equations. Appl. Math. Comput. 2022, 418, 126806. [Google Scholar] [CrossRef]

- Mandal, M.; Kumar, L.K.; Saran, M.S. MotionRec: A unified deep framework for moving object recognition. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2734–2743. [Google Scholar]

- Almeida, M.; Lins, R.D.; Bernardino, R.; Jesus, D.; Lima, B. A new binarization algorithm for historical documents. J. Imaging 2018, 4, 27. [Google Scholar] [CrossRef]

- Yuningsih, T. Detection of blood vessels in optic disc with maximum principal curvature and wolf thresholding algorithms for vessel segmentation and Prewitt edge detection and circular Hough transform for optic disc detection. Iran. J. Sci. Technol. Trans. Electr. Eng. 2021, 45, 435–446. [Google Scholar]

- Vardhan Rao, M.A.; Mukherjee, D.; Savitha, S. Implementation of Morphological Gradient Algorithm for Edge Detection. In Proceedings of the Congress on Intelligent Systems: Proceedings of CIS 2021, Bengaluru, India, 4–5 September 2021; Springer: Berlin/Heidelberg, Germany, 2022; Volume 1, pp. 773–789. [Google Scholar]

- Dey, A.; Das, N.; Nasipuri, M. Variational Augmentation for Enhancing Historical Document Image Binarization. arXiv 2022, arXiv:2211.06581. [Google Scholar]

- Bataineh, B.; Abdullah, S.; Omar, K. Generating an arabic calligraphy text blocks for global texture analysis. Int. J. Adv. Sci. Eng. Inf. Technol. 2011, 1, 150–155. [Google Scholar] [CrossRef]

- Bataineh, B.; Abdullah, S.N.H.S.; Omar, K. A statistical global feature extraction method for optical font recognition. In Proceedings of the Asian Conference on Intelligent Information and Database Systems, Phuket, Thailand, 7–10 April 2021; Springer: Berlin/Heidelberg, Germany, 2011; pp. 257–267. [Google Scholar]

- Venkatachalam, K.; Prabu, P.; Almutairi, A.; Abouhawwash, M. Secure biometric authentication with de-duplication on distributed cloud storage. PeerJ Comput. Sci. 2021, 7, e569. [Google Scholar]

- Mukhtar, M.; Malhotra, D. SiSbDp—The Technique to Identify Forgery in Legal Handwritten Documents. In Proceedings of the 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 20–22 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1103–1108. [Google Scholar]

- Othman, P.S.; Ihsan, R.R.; Marqas, R.B.; Almufti, S.M. Image processing techniques for identifying impostor documents through digital forensic examination. Image Process. Tech. 2020, 62, 1781–1794. [Google Scholar]

- Fadl, S.; Hosny, K.M.; Hammad, M. Automatic fake document identification and localization using DE-Net and color-based features of foreign inks. J. Vis. Commun. Image Represent. 2023, 92, 103801. [Google Scholar] [CrossRef]

- Paliwal, S.S.; Vishwanath, D.; Rahul, R.; Sharma, M.; Vig, L. Tablenet: Deep learning model for end-to-end table detection and tabular data extraction from scanned document images. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 128–133. [Google Scholar]

- Ma, C.; Zhang, W.E.; Guo, M.; Wang, H.; Sheng, Q.Z. Multi-document summarization via deep learning techniques: A survey. ACM Comput. Surv. 2022, 55, 1–37. [Google Scholar] [CrossRef]

- Xu, Y.; Li, M.; Cui, L.; Huang, S.; Wei, F.; Zhou, M. Layoutlm: Pre-training of text and layout for document image understanding. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, San Diego, CA, USA, 23–27 August 2020; pp. 1192–1200. [Google Scholar]

- Jiang, S.; Hu, J.; Magee, C.L.; Luo, J. Deep learning for technical document classification. IEEE Trans. Eng. Manag. 2022, 71, 1163–1179. [Google Scholar] [CrossRef]

- Feng, H.; Zhou, W.; Deng, J.; Tian, Q.; Li, H. DocScanner: Robust document image rectification with progressive learning. arXiv 2021, arXiv:2110.14968. [Google Scholar]

- Shemiakina, J.; Limonova, E.; Skoryukina, N.; Arlazarov, V.V.; Nikolaev, D.P. A method of image quality assessment for text recognition on camera-captured and projectively distorted documents. Mathematics 2021, 9, 2155. [Google Scholar] [CrossRef]

- Souibgui, M.A.; Kessentini, Y.; Fornés, A. A conditional gan based approach for distorted camera captured documents recovery. In Proceedings of the Pattern Recognition and Artificial Intelligence: 4th Mediterranean Conference, MedPRAI 2020, Hammamet, Tunisia, 20–22 December 2020; Proceedings 4. Springer: Berlin/Heidelberg, Germany, 2021; pp. 215–228. [Google Scholar]

- Mahajan, S.; Rani, R. Text Detection and Localization in Scene Images: A Broad Review; Springer: Dordrecht, The Netherlands, 2021; Volume 54, ISBN 0123456789. [Google Scholar]

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Bataineh, B.M.A.; Shambour, M.K.Y. A robust algorithm for emoji detection in smartphone screenshot images. J. ICT Res. Appl. 2019, 13, 192–212. [Google Scholar] [CrossRef]

- Bhowmik, S.; Kundu, S.; Sarkar, R. BINYAS: A complex document layout analysis system. Multimed. Tools Appl. 2021, 80, 8471–8504. [Google Scholar] [CrossRef]

- Faizullah, S.; Ayub, M.S.; Hussain, S.; Khan, M.A. A Survey of OCR in Arabic Language: Applications, Techniques, and Challenges. Appl. Sci. 2023, 13, 4584. [Google Scholar] [CrossRef]

- Rani, U.; Kaur, A.; Josan, G. A new binarization method for degraded document images. Int. J. Inf. Technol. 2023, 15, 1035–1053. [Google Scholar] [CrossRef]

- Bipin Nair, B.J.; Nair, A.S. Ancient Horoscopic Palm Leaf Binarization Using A Deep Binarization Model—RESNET. In Proceedings of the 2021 5th International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 8–10 April 2021; pp. 1524–1529. [Google Scholar]

- Bataineh, B.; Abdullah, S.N.H.S.; Omar, K. Adaptive binarization method for degraded document images based on surface contrast variation. Pattern Anal. Appl. 2017, 20, 639–652. [Google Scholar] [CrossRef]

- Habib, S.; Shukla, M.K.; Kapoor, R. A Comparative Study on Recognition of Degraded Urdu and Devanagari Printed Documents. In Proceedings of International Conference on Machine Intelligence and Data Science Applications; Prateek, M., Singh, T.P., Choudhury, T., Pandey, H.M., Gia Nhu, N., Eds.; Springer: Singapore, 2021; pp. 357–368. [Google Scholar]

- Rahiche, A.; Bakhta, A.; Cheriet, M. Blind Source Separation Based Framework for Multispectral Document Images Binarization. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1476–1481. [Google Scholar]

- Rani, N.S.; Bipin Nair, B.J.; Karthik, S.K.; Srinidhi, A. Binarization of Degraded Photographed Document Images- A Variational Denoising Auto Encoder. In Proceedings of the 2021 Third International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 2–4 September 2021; pp. 119–124. [Google Scholar]

- Saddami, K.; Munadi, K.; Muchallil, S.; Arnia, F. Improved Thresholding Method for Enhancing Jawi Binarization Performance. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 1108–1113. [Google Scholar]

- Singh, B.M.; Sharma, R.; Ghosh, D.; Mittal, A. Adaptive binarization of severely degraded and non-uniformly illuminated documents. Int. J. Doc. Anal. Recognit. 2014, 17, 393–412. [Google Scholar] [CrossRef]

- Costin-Anton, B.; Andrei-Iulian, D.; Dan-Cristian, C. Binarization for digitization projects using hybrid foreground-reconstruction. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing, Cluj-Napoca, Romania, 27–29 August 2009; pp. 141–144. [Google Scholar]

- Karthika, M.; James, A. A Novel Approach for Document Image Binarization Using Bit-plane Slicing. Procedia Technol. 2015, 19, 758–765. [Google Scholar] [CrossRef]

- Latrache, H.; Meziani, F.; Bouchakour, L.; Ghribi, K.; Yahiaoui, M. A New Binarization Method For Degraded Printed Document Images. In Proceedings of the 2021 International Conference on Information Systems and Advanced Technologies (ICISAT), Annaba, Algeria, 27–28 December 2021; pp. 1–4. [Google Scholar]

- Sakila, A.; Vijayarani, S. A hybrid approach for document image binarization. In Proceedings of the 2017 International Conference on Inventive Computing and Informatics (ICICI), Coimbatore, India, 23–24 November 2017; pp. 645–650. [Google Scholar]

- Mustafa, W.A.; Yazid, H.; Jaafar, M. An improved sauvola approach on document images binarization. J. Telecommun. Electron. Comput. Eng. 2018, 10, 43–50. [Google Scholar]

- Mustafa, W.A.; Abdul Kader, M.M.M. Binarization of Document Images: A Comprehensive Review. J. Phys. Conf. Ser. 2018, 1019, 012023. [Google Scholar] [CrossRef]

- Dey, S.; Nicolaou, A.; Llados, J.; Pal, U. Local Binary Pattern for Word Spotting in Handwritten Historical Document. In Structural, Syntactic, and Statistical Pattern Recognition; Robles-Kelly, A., Loog, M., Biggio, B., Escolano, F., Wilson, R., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 574–583. [Google Scholar]

- Mysore, S.; Gupta, M.K.; Belhe, S. Complex and degraded color document image binarization. In Proceedings of the 2016 3rd International Conference on Signal Processing and Integrated Networks (SPIN), Noida, India, 11–12 February 2016; pp. 157–162. [Google Scholar]

- Tsai, C.-M.; Lee, H.-J. Binarization of color document images via luminance and saturation color features. IEEE Trans. Image Process. 2002, 11, 434–451. [Google Scholar] [CrossRef]

- Okoye, K.; Tawil, A.R.H.; Naeem, U.; Islam, S.; Lamine, E. Semantic-Based Model Analysis Towards Enhancing Information Values of Process Mining: Case Study of Learning Process Domain; Springer: Cham, Switzerland, 2018; Volume 614, ISBN 9783319606170. [Google Scholar]

- Song, Z.; Ali, S.; Bouguila, N. Bayesian Learning of Infinite Asymmetric Gaussian Mixture Models for Background Subtraction; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; Volume 11662, ISBN 9783030272012. [Google Scholar]

- Yang, Z.; Liu, B.; Xiong, Y.; Wu, G. GDB: Gated Convolutions-based Document Binarization. Pattern Recognit. 2024, 146, 109989. [Google Scholar] [CrossRef]

- Yuan, C.; Agaian, S.S. A comprehensive review of Binary Neural Network. Artif. Intell. Rev. 2023, 56, 12949–13013. [Google Scholar] [CrossRef]

- Tensmeyer, C.; Martinez, T. Historical Document Image Binarization: A Review. SN Comput. Sci. 2020, 1, 173. [Google Scholar] [CrossRef]

- Hsia, C.H.; Lin, T.Y.; Chiang, J.S. An adaptive binarization method for cost-efficient document image system in wavelet domain. J. Imaging Sci. Technol. 2020, 64, 1–14. [Google Scholar] [CrossRef]

- Michalak, H.; Okarma, K. Robust combined binarization method of non-uniformly illuminated document images for alphanumerical character recognition. Sensors 2020, 20, 2914. [Google Scholar] [CrossRef]

- Bonny, M.Z.; Uddin, M.S. A Hybrid-Binarization Approach for Degraded Document Enhancement. J. Comput. Commun. 2020, 08, 12. [Google Scholar] [CrossRef]

- Bardozzo, F.; De La Osa, B.; Horanská, Ľ.; Fumanal-Idocin, J.; delli Priscoli, M.; Troiano, L.; Tagliaferri, R.; Fernandez, J.; Bustince, H. Sugeno integral generalization applied to improve adaptive image binarization. Inf. Fusion 2021, 68, 37–45. [Google Scholar] [CrossRef]

- Han, Z.; Su, B.; Li, Y.; Ma, Y.; Wang, W.; Chen, G. An enhanced image binarization method incorporating with Monte-Carlo simulation. J. Cent. South Univ. 2019, 26, 1661–1671. [Google Scholar] [CrossRef]

- Niblack, W. An Introduction to Digital Image Processing; Prentice-Hall: Englewood Cliffs, NJ, USA, 1986; ISBN 0134806743. [Google Scholar]

- Bradley, D.; Roth, G. Adaptive thresholding using the integral image. J. Graph. Tools 2007, 12, 13–21. [Google Scholar] [CrossRef]

- McDanel, B.; Teerapittayanon, S.; Kung, H.T. Embedded binarized neural networks. arXiv 2017, arXiv:1709.02260. [Google Scholar]

- Mustafa, W.A.; Yazid, H.; Alkhayyat, A.; Jamlos, M.A.; Rahim, H.A. Effect of direct statistical contrast enhancement technique on document image binarization. Comput. Mater. Contin. 2022, 70, 3549–3564. [Google Scholar] [CrossRef]

- Jindal, H.; Kumar, M.; Tomar, A.; Malik, A. Degraded Document Image Binarization using Novel Background Estimation Technique. In Proceedings of the 2021 6th International Conference for Convergence in Technology (I2CT 2021), Pune, India, 2–4 April 2021; pp. 1–8. [Google Scholar] [CrossRef]

- Kaur, A.; Rani, U.; Josan, G.S. Modified Sauvola binarization for degraded document images. Eng. Appl. Artif. Intell. 2020, 92, 103672. [Google Scholar] [CrossRef]

- Guo, J.; He, C.; Wang, Y. Fourth order indirect diffusion coupled with shock filter and source for text binarization. Signal Process. 2020, 171, 107478. [Google Scholar] [CrossRef]

- Wu, F.; Zhu, C.; Xu, J.; Bhatt, M.W.; Sharma, A. Research on image text recognition based on canny edge detection algorithm and k-means algorithm. Int. J. Syst. Assur. Eng. Manag. 2022, 13, 72–80. [Google Scholar] [CrossRef]

- Xiong, W.; Zhou, L.; Yue, L.; Li, L.; Wang, S. An enhanced binarization framework for degraded historical document images. EURASIP J. Image Video Process. 2021, 2021, 13. [Google Scholar] [CrossRef]

- Yang, Y.; Yan, H. An adaptive logical method for binarization of degraded document images. Pattern Recognit. 2000, 33, 787–807. [Google Scholar] [CrossRef]

- Sehad, A.; Chibani, Y.; Hedjam, R.; Cheriet, M. Gabor filter-based texture for ancient degraded document image binarization. Pattern Anal. Appl. 2019, 22, 1–22. [Google Scholar] [CrossRef]

- Bernardino, R.; Lins, R.D.; Barboza, R. Texture-based Document Binarization. In Proceedings of the ACM Symposium on Document Engineering 2024, San Jose, CA, USA, 20–23 August 2024; Association for Computing Machinery: New York, NY, USA, 2024. [Google Scholar]

- Sehad, A.; Chibani, Y.; Hedjam, R.; Cheriet, M. LBP-based degraded document image binarization. In Proceedings of the 2015 International Conference on Image Processing Theory, Tools and Applications (IPTA), Orléans, France, 10–13 November 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 213–217. [Google Scholar]

- Armanfard, N.; Valizadeh, M.; Komeili, M.; Kabir, E. Document image binarization by using texture-edge descriptor. In Proceedings of the 2009 14th International CSI Computer Conference, Tehran, Iran, 20–21 October 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 134–139. [Google Scholar]

- Susan, S.; Rachna Devi, K.M. Text area segmentation from document images by novel adaptive thresholding and template matching using texture cues. Pattern Anal. Appl. 2020, 23, 869–881. [Google Scholar] [CrossRef]

- Lins, R.D.; Bernardino, R.; da Silva Barboza, R.; De Oliveira, R.C. Using Paper Texture for Choosing a Suitable Algorithm for Scanned Document Image Binarization. J. Imaging 2022, 8, 272. [Google Scholar] [CrossRef]

- Zhang, X.; He, C.; Guo, J. Selective diffusion involving reaction for binarization of bleed-through document images. Appl. Math. Model. 2020, 81, 844–854. [Google Scholar] [CrossRef]

- Ju, R.-Y.; Lin, Y.-S.; Jin, Y.; Chen, C.-C.; Chien, C.-T.; Chiang, J.-S. Three-stage binarization of color document images based on discrete wavelet transform and generative adversarial networks. arXiv 2022, arXiv:2211.16098. [Google Scholar] [CrossRef]

- Bataineh, B.; Alzahrani, A.A. Fully Automated Density-Based Clustering Method. Comput. Mater. Contin. 2023, 76, 1833–1851. [Google Scholar] [CrossRef]

- Bataineh, B. Fast Component Density Clustering in Spatial Databases: A Novel Algorithm. Information 2022, 13, 477. [Google Scholar] [CrossRef]

- Elgbbas, E.M.; Khalil, M.I.; Abbas, H. Binarization of Colored Document Images using Spectral Clustering. In Proceedings of the 2018 13th International Conference on Computer Engineering and Systems (ICCES 2018), Cairo, Egypt, 18–19 December 2018; pp. 411–416. [Google Scholar] [CrossRef]

- Valdivia, S.; Soto, R.; Crawford, B.; Caselli, N.; Paredes, F.; Castro, C.; Olivares, R. Clustering-based binarization methods applied to the crow search algorithm for 0/1 combinatorial problems. Mathematics 2020, 8, 1070. [Google Scholar] [CrossRef]

- Jana, P.; Ghosh, S.; Bera, S.K.; Sarkar, R. Handwritten document image binarization: An adaptive K-means based approach. In Proceedings of the 2017 IEEE Calcutta Conference (CALCON), Kolkata, India, 2–3 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 226–230. [Google Scholar]

- Wakahara, T.; Kita, K. Binarization of color character strings in scene images using k-means clustering and support vector machines. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 274–278. [Google Scholar]

- Jana, P.; Ghosh, S.; Sarkar, R.; Nasipuri, M. A fuzzy C-means based approach towards efficient document image binarization. In Proceedings of the 2017 Ninth International Conference on Advances in Pattern Recognition (ICAPR), Bangalore, India, 27–30 December 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 1–6. [Google Scholar]

- Mustafa, W.A.; Aziz, H.; Khairunizam, W.; Zunaidi, I.; Razlan, Z.M.; Shahriman, A.B. Document Images Binarization Using Hybrid Combination of Fuzzy C-Means and Deghost Method. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 557. [Google Scholar] [CrossRef]

- Kv, A.R.; Kedar, M.; Pai, V.S.; Ev, S. Ancient Epic Manuscript Binarization and Classification Using False Color Spectralization and VGG-16 Model. Procedia Comput. Sci. 2023, 218, 631–643. [Google Scholar] [CrossRef]

- Pastor-Pellicer, J.; España-Boquera, S.; Zamora-Martínez, F.; Afzal, M.Z.; Castro-Bleda, M.J. Insights on the use of convolutional neural networks for document image binarization. In Proceedings of the Advances in Computational Intelligence: 13th International Work-Conference on Artificial Neural Networks, IWANN 2015, Palma de Mallorca, Spain, 10–12 June 2015; Proceedings, Part II 13. Springer: Berlin/Heidelberg, Germany, 2015; pp. 115–126. [Google Scholar]

- Ghoshal, R.; Banerjee, A. SVM and MLP based segmentation and recognition of text from scene images through an effective binarization scheme. In The Computational Intelligence in Pattern Recognition: Proceedings of CIPR 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 237–246. [Google Scholar]

- Zhao, P.; Wang, W.; Zhang, G.; Lu, Y. Alleviating pseudo-touching in attention U-Net-based binarization approach for the historical Tibetan document images. Neural Comput. Appl. 2023, 35, 13791–13802.

- Akbari, Y.; Al-Maadeed, S.; Adam, K. Binarization of Degraded Document Images Using Convolutional Neural Networks and Wavelet-Based Multichannel Images. IEEE Access 2020, 8, 153517–153534.

- De, R.; Chakraborty, A.; Sarkar, R. Document Image Binarization Using Dual Discriminator Generative Adversarial Networks. IEEE Signal Process. Lett. 2020, 27, 1090–1094.

- Castellanos, F.J.; Gallego, A.J.; Calvo-Zaragoza, J. Unsupervised neural domain adaptation for document image binarization. Pattern Recognit. 2021, 119, 108099.

- Suh, S.; Kim, J.; Lukowicz, P.; Lee, Y.O. Two-stage generative adversarial networks for binarization of color document images. Pattern Recognit. 2022, 130, 108810.

- Khamekhem Jemni, S.; Souibgui, M.A.; Kessentini, Y.; Fornés, A. Enhance to read better: A Multi-Task Adversarial Network for Handwritten Document Image Enhancement. Pattern Recognit. 2022, 123, 108370.

- Dang, Q.V.; Lee, G.S. Document Image Binarization with Stroke Boundary Feature Guided Network. IEEE Access 2021, 9, 36924–36936.

- Souibgui, M.A.; Biswas, S.; Jemni, S.K.; Kessentini, Y.; Fornes, A.; Llados, J.; Pal, U. DocEnTr: An End-to-End Document Image Enhancement Transformer. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR 2022), Montréal, QC, Canada, 21–25 August 2022; pp. 1699–1705.

- Lihota, K.; Gayer, A.; Arlazarov, V. Threshold U-Net: Speed up document binarization with adaptive thresholds. In Proceedings of the Sixteenth International Conference on Machine Vision, Yerevan, Armenia, 15–18 November 2023; Volume 13072, p. 3.

- Zhang, L.; Wang, K.; Wan, Y. An Efficient Transformer–CNN Network for Document Image Binarization. Electronics 2024, 13, 2243.

- Du, Z.; He, C. Nonlinear diffusion system for simultaneous restoration and binarization of degraded document images. Comput. Math. Appl. 2024, 153, 237–248.

- Kang, S.; Iwana, B.K.; Uchida, S. Complex image processing with less data—Document image binarization by integrating multiple pre-trained U-Net modules. Pattern Recognit. 2021, 109, 107577.

- Basu, A.; Mondal, R.; Bhowmik, S.; Sarkar, R. U-Net versus Pix2Pix: A comparative study on degraded document image binarization. J. Electron. Imaging 2020, 29, 1–25.

- Antonacopoulos, A.; Clausner, C.; Papadopoulos, C.; Pletschacher, S. ICDAR2015 competition on recognition of documents with complex layouts-RDCL2015. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Nancy, France, 23–26 August 2015; IEEE: Piscataway, NJ, USA, 2015; pp. 1151–1155.

- Gatos, B.; Ntirogiannis, K.; Pratikakis, I. DIBCO 2009: Document image binarization contest. Int. J. Doc. Anal. Recognit. 2011, 14, 35–44.

- Sulaiman, A.; Omar, K.; Nasrudin, M.F. Degraded historical document binarization: A review on issues, challenges, techniques, and future directions. J. Imaging 2019, 5, 48.

- Mustafa, W.A.; Khairunizam, W.; Zunaidi, I.; Razlan, Z.M.; Shahriman, A.B. A Comprehensive Review on Document Image (DIBCO) Database. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 557.

- Khitas, M.; Ziet, L.; Bouguezel, S. Improved degraded document image binarization using median filter for background estimation. Elektron. Elektrotech. 2018, 24, 82–87.

- Sadekar, K.; Tiwari, A.; Singh, P.; Raman, S. LS-HDIB: A Large Scale Handwritten Document Image Binarization Dataset. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montréal, QC, Canada, 21–25 August 2022; pp. 1678–1684.

- Saddami, K.; Munadi, K.; Away, Y.; Arnia, F. Effective and fast binarization method for combined degradation on ancient documents. Heliyon 2019, 5, e02613.

- Ayatollahi, S.M.; Nafchi, H.Z. Persian heritage image binarization competition (PHIBC 2012). In Proceedings of the 2013 First Iranian Conference on Pattern Recognition and Image Analysis (PRIA), Tehran, Iran, 6–8 March 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–4.

- Suh, S.; Kim, J.; Lukowicz, P.; Lee, Y.O. Two-stage generative adversarial networks for document image binarization with color noise and background removal. arXiv 2020, arXiv:2010.10103.

- Guo, Y.; Ji, C.; Zheng, X.; Wang, Q.; Luo, X. Multi-scale multi-attention network for moiré document image binarization. Signal Process. Image Commun. 2021, 90, 116046.

- Milyaev, S.; Barinova, O.; Novikova, T.; Kohli, P.; Lempitsky, V. Fast and accurate scene text understanding with image binarization and off-the-shelf OCR. Int. J. Doc. Anal. Recognit. 2015, 18, 169–182.

- Mishra, A.; Alahari, K.; Jawahar, C.V. An MRF model for binarization of natural scene text. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; IEEE: Piscataway, NJ, USA, 2011; pp. 11–16.

- Gatos, B.; Ntirogiannis, K.; Pratikakis, I. ICDAR 2009 document image binarization contest (DIBCO 2009). In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 1375–1382.

- Pratikakis, I.; Gatos, B.; Ntirogiannis, K. ICDAR 2013 document image binarization contest (DIBCO 2013). In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1471–1476.

- Michalak, H.; Okarma, K. Optimization of Degraded Document Image Binarization Method Based on Background Estimation. Comput. Sci. Res. Notes 2020, 3001, 89–98.

- Mustafa, W.A.; Khairunizam, W.; Ibrahim, Z.; Shahriman, A.B.; Razlan, Z.M. Improved Feng Binarization Based on Max-Mean Technique on Document Image. In Proceedings of the 2018 International Conference on Computational Approach in Smart Systems Design and Applications (ICASSDA 2018), Kuching, Malaysia, 15–17 August 2018; pp. 1–6.

- Tran, M.T.; Vo, Q.N.; Lee, G.S. Binarization of music score with complex background by deep convolutional neural networks. Multimed. Tools Appl. 2021, 80, 11031–11047.

- Dueire Lins, R.; Bernardino, R.; Jesus, D.M. A quality and time assessment of binarization algorithms. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1444–1450.

- Guo, J.; He, C.; Zhang, X. Nonlinear edge-preserving diffusion with adaptive source for document images binarization. Appl. Math. Comput. 2019, 351, 8–22.

- Michalak, H.; Okarma, K. Improvement of image binarization methods using image preprocessing with local entropy filtering for alphanumerical character recognition purposes. Entropy 2019, 21, 562.

- Chutani, G.; Patnaik, T.; Dwivedi, V. Degraded Document Image Binarization. Adv. Comput. Sci. Inf. Technol. 2015, 2, 469–472.

- Abd Elfattah, M.; Hassanien, A.E.; Abuelenin, S. A hybrid swarm optimization approach for document binarization. Stud. Inform. Control 2019, 28, 65–76.

- Mousa, U.W.A.; El Munim, H.E.A.; Khalil, M.I. A Multistage Binarization Technique for the Degraded Document Images. In Proceedings of the 2018 13th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 18–19 December 2018; pp. 332–337.

- Quattrini, F.; Pippi, V.; Cascianelli, S.; Cucchiara, R. Binarizing Documents by Leveraging both Space and Frequency. In International Conference on Document Analysis and Recognition; Springer Nature: Cham, Switzerland, 2024; pp. 3–22.

- He, S.; Schomaker, L. DeepOtsu: Document enhancement and binarization using iterative deep learning. Pattern Recognit. 2019, 91, 379–390.

- Huang, X.; Li, L.; Liu, R.; Xu, C.; Ye, M. Binarization of degraded document images with global-local U-Nets. Optik 2020, 203, 164025.

- Detsikas, N.; Mitianoudis, N.; Papamarkos, N. A Dilated MultiRes Visual Attention U-Net for historical document image binarization. Signal Process. Image Commun. 2024, 122, 117102.

| Author(s) (Year) | Method Used | Advantages | Disadvantages |
|---|---|---|---|
| Thresholding-based methods (5 methods) | | | |
| Bonny and Uddin (2020) [67] | Integration of Otsu, Sauvola, and Nick | Combines strengths of different methods | Slow and complex |
| Kaur et al. (2020) [75] | Dynamic Sauvola with adaptive window size | Better binarization via stroke width adjustment | Complex window size estimation |
| Bardozzo et al. (2021) [68] | Global techniques for uniform background | Fast and effective for clean documents | Fails with non-uniform illumination |
| Jindal et al. (2021) [74] | Background estimation + Otsu + CCL | Improved text segmentation | High computational cost |
| Mustafa et al. (2022) [73] | Classifies regions via local statistics | Good under contrast and brightness variations | Requires preprocessing and tuning |
| Edge-based methods (3 methods) | | | |
| Guo et al. (2020) [76] | Zero-crossing + time-dependent diffusion | Separates text using local features | Slow and less effective on degraded images |
| Xiong et al. (2021) [78] | Laplacian energy + SWT + background estimation | Edge detection using structured morphology | Computationally heavy; needs preprocessing |
| Wu et al. (2022) [77] | Canny edge detection | Captures sharp boundaries well | Fails on blurred, low-contrast, or noisy images |
| Texture-based methods (7 methods) | | | |
| Yang and Yan (2000) [79] | Run-length histograms for texture analysis | Works well on varied backgrounds | Struggles with complex documents |
| Hsia et al. (2020) [65] | Wavelet transforms + local thresholds + LMS | Effective for complex backgrounds | Extensive preprocessing and postprocessing |
| Susan et al. (2020) [84] | Sliding window texture matching + Otsu | Finds text regions well in complex layouts | Fixed template may not suit all document layouts |
| Zhang et al. (2020) [86] | Reaction-diffusion model + Perona-Malik + tensor diffusion | Excellent for bleed-through artifacts | Computationally expensive; not suitable for all document types |
| Ju et al. (2022) [87] | GAN + foreground extraction + integration | Advanced for degraded, ancient manuscripts | Complex and time-consuming |
| Lins et al. (2022) [85] | Uses texture as a feature | Well suited to historical documents | Limited generalizability |
| Bernardino et al. (2024) [81] | Gray-level co-occurrence matrices | Effective for complex backgrounds | Performance drops with low contrast or noise |
| Clustering-based methods (2 methods) | | | |
| Bera et al. (2021) [18] | Hybrid of clustering methods | Effective in complex backgrounds | Complex and computationally expensive |
| Kv et al. (2023) [96] | VGG-16 integrated with clustering | Suitable for modern document images | High computational cost and training data requirements |
| Machine-learning methods (20 methods) | | | |
| Ghoshal and Banerjee (2020) [98] | SVMs for features and classification | Effective binary classification | Sensitive to features and tuning |
| Basu et al. (2020) [111] | U-Net and Pix2Pix | Good for degraded documents | Struggles with noise |
| Akbari et al. (2020) [100] | U-Net, SegNet, and DeepLabv3 | Robust and handles text extraction well | Requires large amounts of training data |
| De et al. (2020) [101] | DD-GAN with focal loss | Good for degraded documents | GANs are hard to train |
| Liu et al. (2020) [7] | Recurrent attention GAN with spatial RNNs | Handles degradation well | Needs large datasets and has high computational requirements |
| Zhao et al. (2021) [99] | U-Net | Good for complex documents | High computational cost |
| He et al. (2021) [6] | T-shaped neural network | Enhances image quality | Requires large datasets |
| Kang et al. (2021) [110] | U-Net with pre-trained modular cascade | Better generalization; less training data | Module selection is critical |
| Castellanos et al. (2021) [102] | NN with data augmentation | Works on diverse data; unsupervised | Sensitive to data variability |
| Dang and Lee (2021) [105] | Multi-task learning with stroke boundaries + adversarial loss | Embeds expert knowledge | Risk of overfitting to strokes |
| Dey et al. (2022) [24] | Two-stage CNN + variational inference | Adaptable to degradation | Complex; may not handle extreme degradation |
| Suh et al. (2022) [103] | Two-stage GAN | Robust to variations | Needs careful training |
| Khamekhem Jemni et al. (2022) [104] | End-to-end GAN | Good on degraded documents | Resource-heavy training |
| Souibgui et al. (2022) [106] | Vision encoder–decoder | Good on degraded documents | Requires large datasets and a GPU |
| Ju et al. (2022) [87] | GAN with wavelet | Good on degraded documents | High computational load |
| Yang et al. (2023) [62] | Gated convolutions | Precise edge mapping | Slow on large documents |
| Lihota et al. (2024) [107] | Threshold U-Net | Memory-efficient; fast | Sensitive to resolution |
| Zhang et al. (2024) [108] | U-Net + MobileViT | Lightweight; works in real time | Sensitive to large documents |
| Yang et al. (2024) [62] | Gated convolutions | Works in diverse styles | Needs high-quality data |
| Du and He (2024) [109] | Nonlinear diffusion | Efficient and accurate | Struggles with noise |
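
Several thresholding entries in the table above build on two classical ingredients: Otsu's global threshold and Sauvola's local threshold. The following is a minimal pure-Python sketch of both, included only to make the distinction concrete. It assumes 8-bit grayscale input, and the window radius, `k = 0.5`, and `R = 128` are common default choices rather than values taken from any of the surveyed papers; the function names are illustrative.

```python
import math

def otsu_threshold(pixels):
    """Global threshold t (0-255) maximizing between-class variance.

    `pixels` is a flat list of 8-bit gray values; values <= t form one class.
    """
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w0, sum0 = 0, 0
    for t in range(256):
        w0 += hist[t]            # number of pixels with value <= t
        sum0 += t * hist[t]      # intensity sum of that class
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

def sauvola_binarize(img, radius=1, k=0.5, R=128.0):
    """Local thresholding with T = m * (1 + k * (s / R - 1)).

    `img` is a 2D list of gray values; m and s are the mean and standard
    deviation inside a (2*radius+1)^2 window clipped at the image border.
    Returns a 2D list where 1 marks text (dark) pixels and 0 background.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - radius), min(h, y + radius + 1))
                      for i in range(max(0, x - radius), min(w, x + radius + 1))]
            m = sum(window) / len(window)
            s = math.sqrt(sum((v - m) ** 2 for v in window) / len(window))
            t = m * (1 + k * (s / R - 1))
            out[y][x] = 1 if img[y][x] <= t else 0
    return out
```

In a flat, text-free window the standard deviation is zero, so the Sauvola threshold falls to `m * (1 - k)` and bright background is not marked as text. A single global Otsu threshold cannot adapt in this way under uneven illumination.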

| Version | Description |
|---|---|
| DIBCO 2009 (https://users.iit.demokritos.gr/~bgat/DIBCO2009/, accessed on 1 January 2025) | 5 degraded handwritten documents and 5 degraded printed documents |
| DIBCO 2010 (https://users.iit.demokritos.gr/~bgat/H-DIBCO2010/, accessed on 1 January 2025) | 10 handwritten document images |
| DIBCO 2011 (http://utopia.duth.gr/~ipratika/DIBCO2011/, accessed on 1 January 2025) | 8 printed and 8 handwritten images |
| DIBCO 2012 (http://utopia.duth.gr/~ipratika/HDIBCO2012/resources.html, accessed on 1 January 2025) | 8 handwritten images and 8 printed images |
| DIBCO 2013 (http://utopia.duth.gr/~ipratika/DIBCO2013/benchmark, accessed on 1 January 2025) | 8 handwritten images and 8 printed images |
| DIBCO 2014 (http://users.iit.demokritos.gr/~bgat/HDIBCO2014/benchmark, accessed on 1 January 2025) | 10 handwritten images without any printed images |
| DIBCO 2016 (https://vc.ee.duth.gr/h-dibco2016/, accessed on 1 January 2025) | 10 handwritten images with different sizes and resolutions |
| DIBCO 2017 (https://vc.ee.duth.gr/dibco2017/benchmark/, accessed on 1 January 2025) | 10 handwritten images and 10 printed images |
| DIBCO 2018 (http://vc.ee.duth.gr/h-dibco2018/benchmark/, accessed on 1 January 2025) | 10 handwritten documents with representative degradations |
| DIBCO 2019 (https://vc.ee.duth.gr/dibco2019/, accessed on 1 January 2025) | 10 historical printed and 10 historical handwritten document images |

| Method | Category | Metric | DIBCO 2009 | DIBCO 2010 | DIBCO 2011 | DIBCO 2012 | DIBCO 2013 | DIBCO 2014 | DIBCO 2016 | DIBCO 2017 | DIBCO 2018 | DIBCO 2019 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Niblack [70] | Thresh | FM (%) | - | - | 70.4 | - | 71.4 | 86.0 | 72.6 | 51.2 | 41.2 | 51.5 |
| | | PSNR (dB) | - | - | 12.4 | - | 13.5 | 16.5 | 13.3 | 7.7 | 6.8 | 10.5 |
| Otsu [39] | Thresh | FM (%) | 78.6 | 85.4 | 82.1 | 75.1 | 80.0 | 91.6 | 86.6 | 77.7 | 51.5 | 47.8 |
| | | PSNR (dB) | 15.3 | 17.5 | 15.7 | 15.0 | 16.6 | 18.7 | 17.8 | 13.9 | 9.7 | 9.1 |
| Sauvola [3] | Thresh | FM (%) | 85.4 | 75.2 | 82.1 | 81.6 | 82.7 | 84.7 | 84.6 | 77.1 | 67.8 | 51.7 |
| | | PSNR (dB) | 16.4 | 15.9 | 15.7 | 16.9 | 17.0 | 17.8 | 17.1 | 14.3 | 13.8 | 13.7 |
| Bataineh [8] | Thresh | FM (%) | 85.1 | 80.4 | 83.5 | 81.9 | 82.4 | 84.2 | 83.7 | 78.7 | 61.3 | - |
| | | PSNR (dB) | 16.2 | 16.6 | 16 | 16.9 | 17.0 | 17.0 | 16.7 | 14.5 | 12.9 | - |
| He [136] | ML | FM (%) | 94.9 | 93.9 | 95.3 | 92.9 | 95.7 | 97.7 | 91.1 | 92.7 | 92.2 | - |
| | | PSNR (dB) | 20.5 | 21.2 | 21.5 | 22.9 | 23.0 | 23.9 | 19.2 | 19.2 | 20.1 | - |
| Kang [110] | ML | FM (%) | 96.7 | - | 95.5 | 95.2 | 95.9 | 97.1 | 93.1 | 91.6 | 89.7 | - |
| | | PSNR (dB) | 20.9 | - | 19.9 | 21.4 | 23.0 | 22.4 | 19.2 | 15.9 | 19.4 | - |
| Yang [62] | ML | FM (%) | 93.6 | 95.5 | 94.6 | 95.4 | 95.9 | 97.6 | 89.9 | 91.3 | 90.8 | 73.9 |
| | | PSNR (dB) | 20.4 | 22.3 | 20.7 | 22.3 | 22.9 | 23.7 | 18.9 | 18.3 | 19.7 | 14.8 |
| Suh [103] | ML | FM (%) | 93.3 | 93.9 | 93.4 | 94.5 | 94.8 | 96.2 | 91.1 | 91.0 | 91.9 | 70.6 |
| | | PSNR (dB) | 19.7 | 21.2 | 20.0 | 21.8 | 21.8 | 21.8 | 19.3 | 18.4 | 20.0 | 14.7 |
| Souibgui [106] | ML | FM (%) | - | - | 94.2 | 95.1 | - | - | - | 92.5 | 90.6 | - |
| | | PSNR (dB) | - | - | 20.6 | 22.0 | - | - | - | 19.1 | 19.5 | - |
| Bera [18] | Cluster | FM (%) | - | - | - | - | - | - | 90.4 | 83.4 | 76.8 | 72.9 |
| | | PSNR (dB) | - | - | - | - | - | - | 18.9 | 15.5 | 15.3 | 14.5 |
| Dang [105] | Edge | FM (%) | - | - | - | - | 96.0 | - | - | 92.1 | 91.3 | - |
| | | PSNR (dB) | - | - | 22.1 | - | 23.1 | - | - | 18.7 | 19.8 | - |
| Huang [137] | ML | FM (%) | - | - | - | - | - | - | 89.7 | 90.7 | 91.8 | - |
| | | PSNR (dB) | - | - | - | - | - | - | 18.9 | 17.9 | 19.8 | - |
| De [101] | ML | FM (%) | - | - | - | - | 92.1 | 96.9 | 87.6 | 91.0 | 88.3 | - |
| | | PSNR (dB) | - | - | - | - | 20.7 | 22.7 | 18.1 | 18.3 | 19.1 | - |
| Basu [111] | ML | FM (%) | - | - | - | - | 93.6 | 95.4 | 89.6 | 92.3 | 89.9 | 60.1 |
| | | PSNR (dB) | - | - | - | - | 21.3 | 22.4 | 19.0 | 18.9 | 19.4 | 12.4 |
| Hsia [65] | Texture | FM (%) | 82.7 | 79.7 | 85.6 | 81.1 | 87.7 | 86.9 | 85.7 | 84.2 | - | - |
| | | PSNR (dB) | 15.9 | 15.4 | 17.8 | 17.0 | 18.1 | 17.8 | 18.5 | 15.2 | - | - |
| Detsikas [138] | ML | FM (%) | 95.7 | - | 94.68 | - | - | 97.55 | 90.8 | 92.2 | 85.9 | - |
| | | PSNR (dB) | 21.42 | - | 21 | - | - | 23.62 | 19.2 | 18.7 | 18.2 | - |
| Ju [87] | ML | FM (%) | - | - | 94.22 | - | 94.6 | 96.6 | 91.8 | 91.3 | 92.9 | - |
| | | PSNR (dB) | - | - | 20.54 | - | 22.0 | 22.23 | 19.7 | 18.6 | 20.4 | - |
| Quattrini [135] | ML | FM (%) | - | 96.4 | 96.03 | 97 | 96.7 | 98.19 | 91.3 | 93.8 | 93. | - |
| | | PSNR (dB) | - | 23.4 | 22.26 | 24.3 | 24.2 | 25.18 | 19.7 | 19.6 | 20.9 | - |
| Lihota [107] | ML | FM (%) | - | - | - | - | - | - | - | 88.6 | - | - |
| | | PSNR (dB) | - | - | - | - | - | - | - | 17.5 | - | - |
| Zhang [108] | ML | FM (%) | - | - | - | 96.4 | - | - | - | 93.2 | 90.59 | 65.92 |
| | | PSNR (dB) | - | - | - | 23.3 | - | - | - | 19.3 | 19.52 | 15.25 |
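
The FM and PSNR values in the table above can be related to pixel counts with a short sketch. It assumes the definitions commonly used in the DIBCO contests: FM is the harmonic mean of precision and recall over text pixels, and PSNR is `10 * log10(C^2 / MSE)` with `C` the difference between foreground and background values (1 for 0/1 images). The function names and the toy data are illustrative and do not come from any of the cited works.

```python
import math

def f_measure(pred, truth):
    """F-measure in percent over text pixels (1 = text, 0 = background).

    `pred` and `truth` are flat lists of 0/1 values of equal length.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)
    if tp == 0:
        return 0.0  # no correctly detected text pixel
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 100.0 * 2 * precision * recall / (precision + recall)

def psnr(pred, truth):
    """PSNR in dB for 0/1 images, i.e. with peak difference C = 1."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(truth)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(1.0 / mse)

# Toy example: 10 pixels, one missed text pixel and one false alarm.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(f_measure(pred, truth))  # 75.0
print(round(psnr(pred, truth), 2))  # 6.99
```

Because PSNR depends only on the fraction of mismatched pixels while FM is computed over text pixels only, the two metrics can rank methods differently on pages with little text.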

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bataineh, B.; Tounsi, M.; Zamzami, N.; Janbi, J.; Abu-ain, W.A.K.; AbuAin, T.; Elnazer, S. A Comprehensive Review on Document Image Binarization. J. Imaging 2025, 11, 133. https://doi.org/10.3390/jimaging11050133
