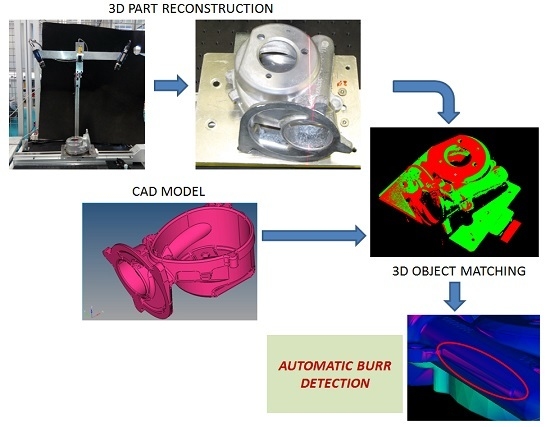

Robust 3D Object Model Reconstruction and Matching for Complex Automated Deburring Operations

Abstract

Share and Cite

Tellaeche, A.; Arana, R. Robust 3D Object Model Reconstruction and Matching for Complex Automated Deburring Operations. J. Imaging 2016, 2, 8. https://doi.org/10.3390/jimaging2010008

Tellaeche A, Arana R. Robust 3D Object Model Reconstruction and Matching for Complex Automated Deburring Operations. Journal of Imaging. 2016; 2(1):8. https://doi.org/10.3390/jimaging2010008

Chicago/Turabian Style: Tellaeche, Alberto, and Ramón Arana. 2016. "Robust 3D Object Model Reconstruction and Matching for Complex Automated Deburring Operations." Journal of Imaging 2, no. 1: 8. https://doi.org/10.3390/jimaging2010008

APA Style: Tellaeche, A., & Arana, R. (2016). Robust 3D Object Model Reconstruction and Matching for Complex Automated Deburring Operations. Journal of Imaging, 2(1), 8. https://doi.org/10.3390/jimaging2010008