Subpixel Localization of Isolated Edges and Streaks in Digital Images

Abstract

1. Introduction

2. Coordinate Frames and Conventions

3. Computation of Zernike Moments in Digital Images

3.1. Zernike Polynomials

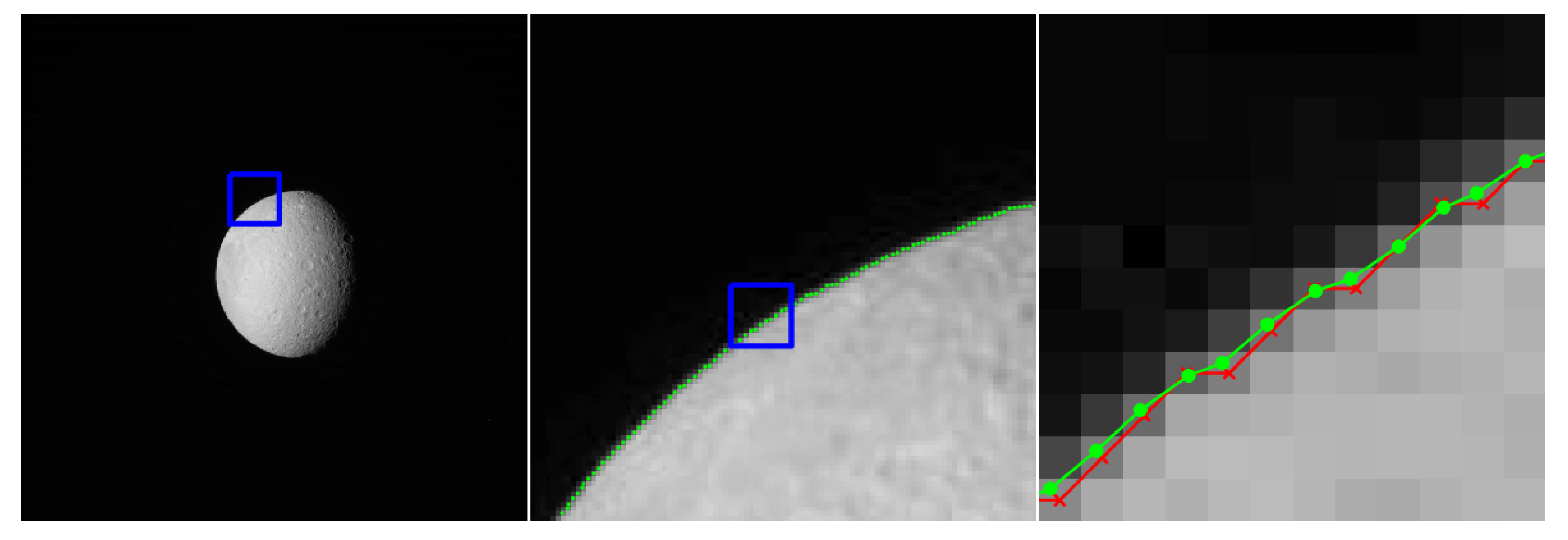

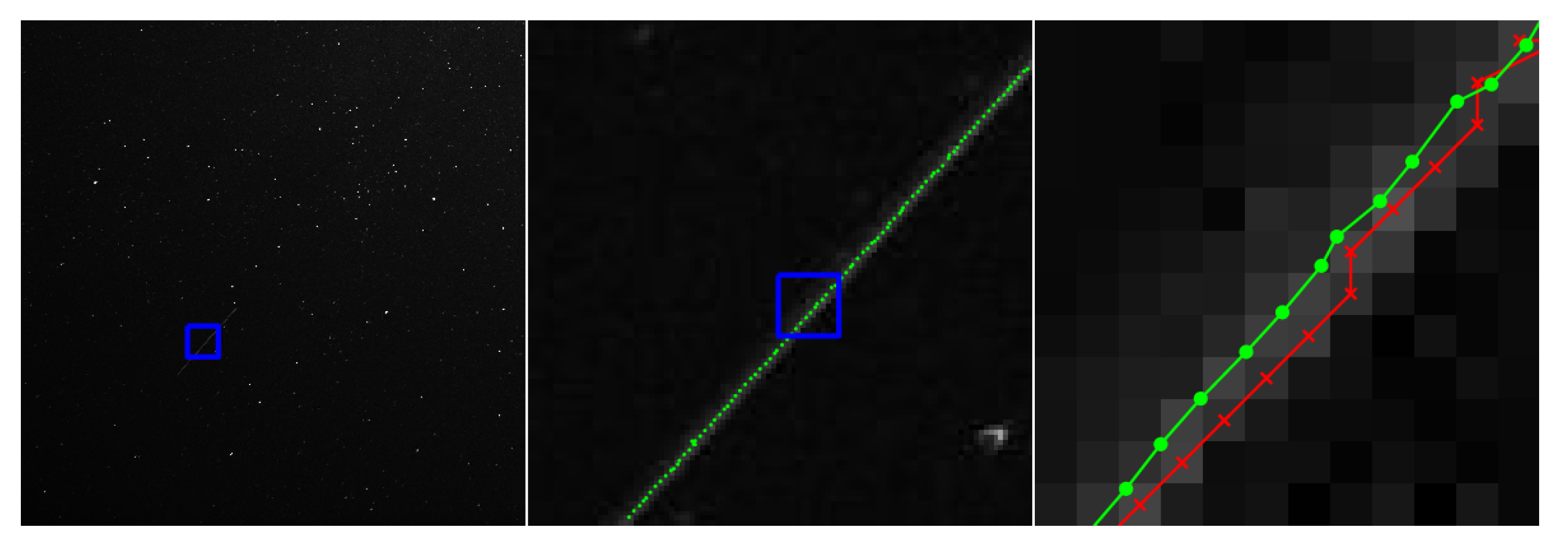

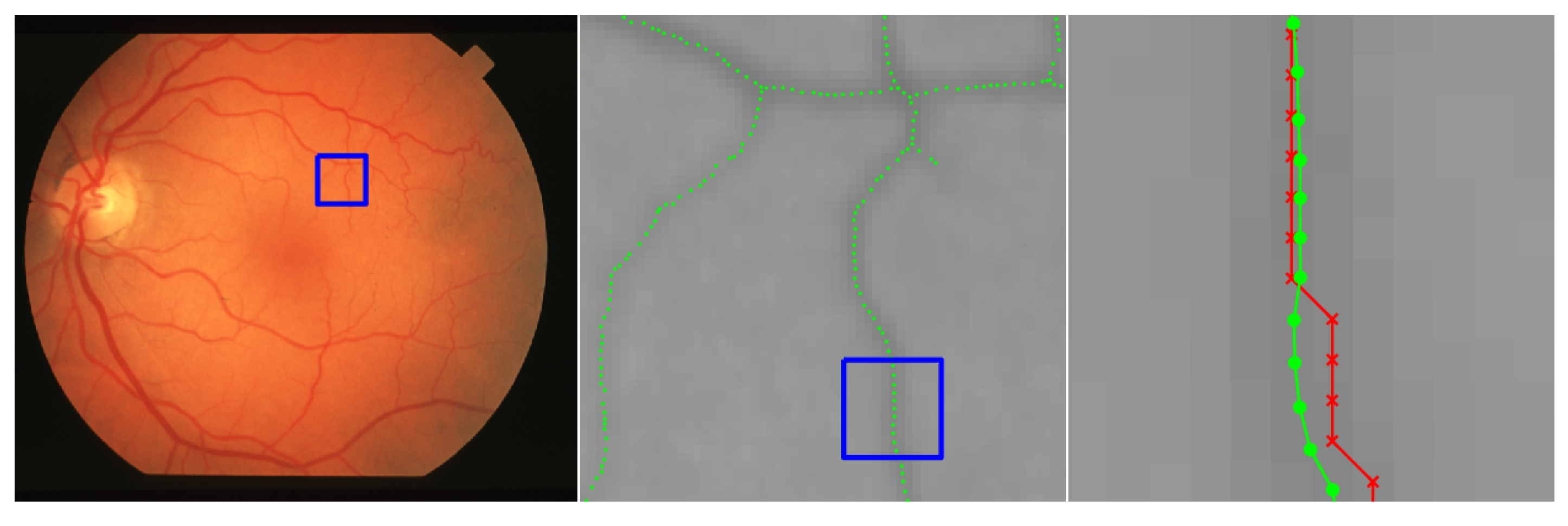

3.2. Zernike Moments for a Continuous 2D Signal

3.3. Rotational Properties of Zernike Moments

3.4. Zernike Moments for a Digital Image

4. Moment-Based Edge and Streak Localization

4.1. Computing Edge or Streak Orientation

4.2. Computing ℓ for Edges

4.3. Computing ℓ for Streaks

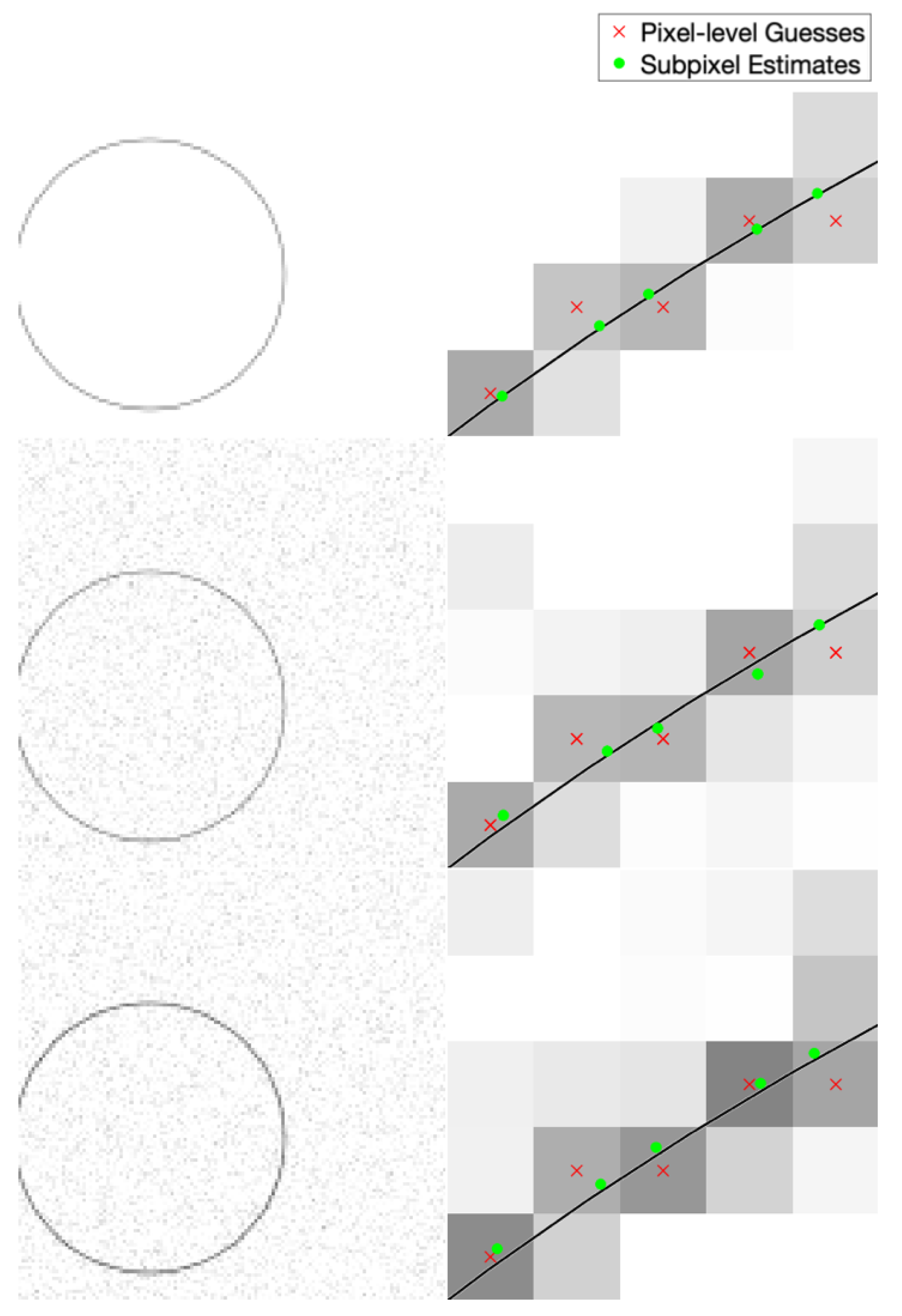

5. Numerical Validation on Synthetic Images

5.1. Synthetic Images with Edges

5.1.1. Ideal Edge Localization Performance

5.1.2. Digital Image Edge Localization Performance

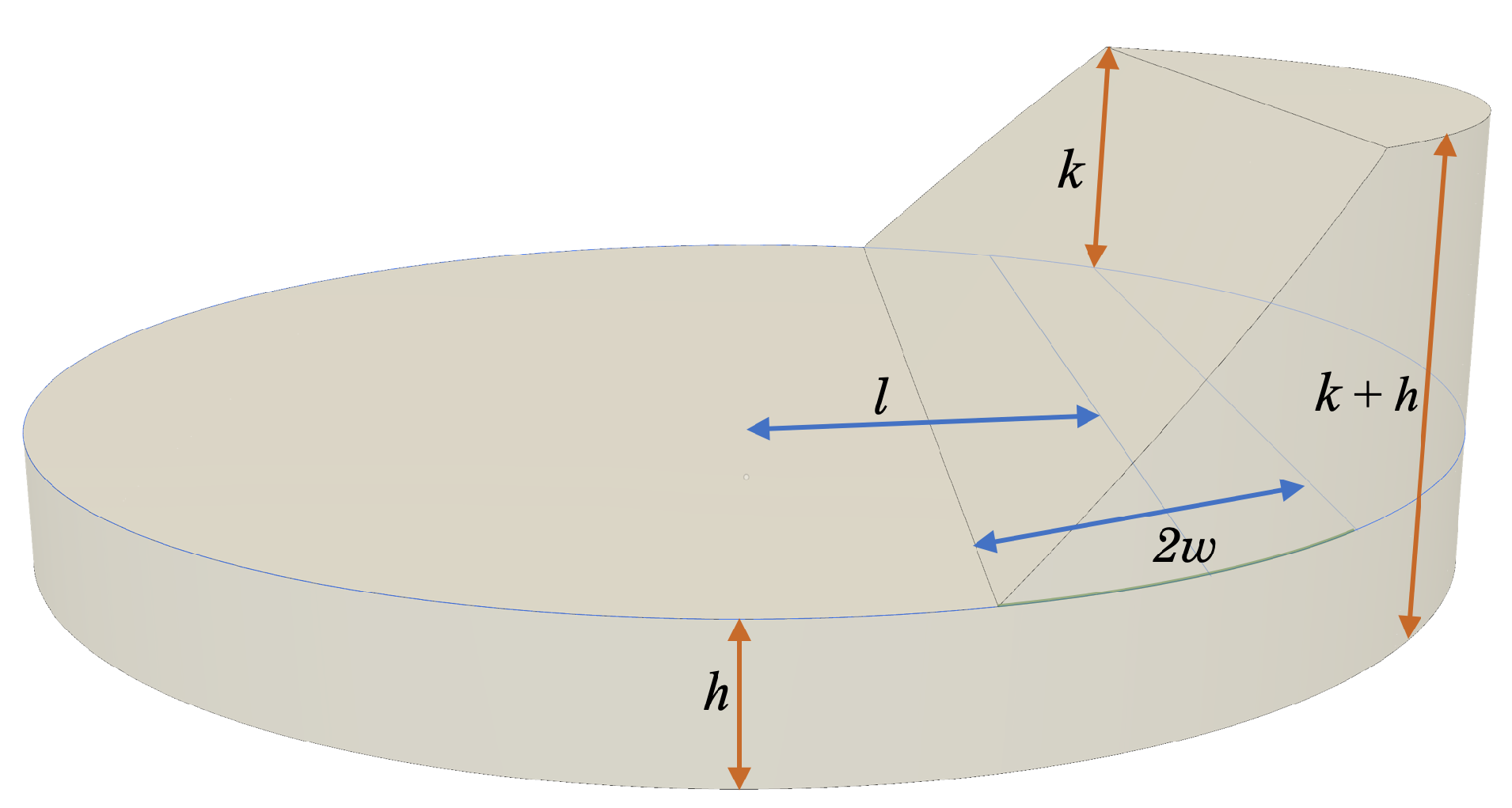

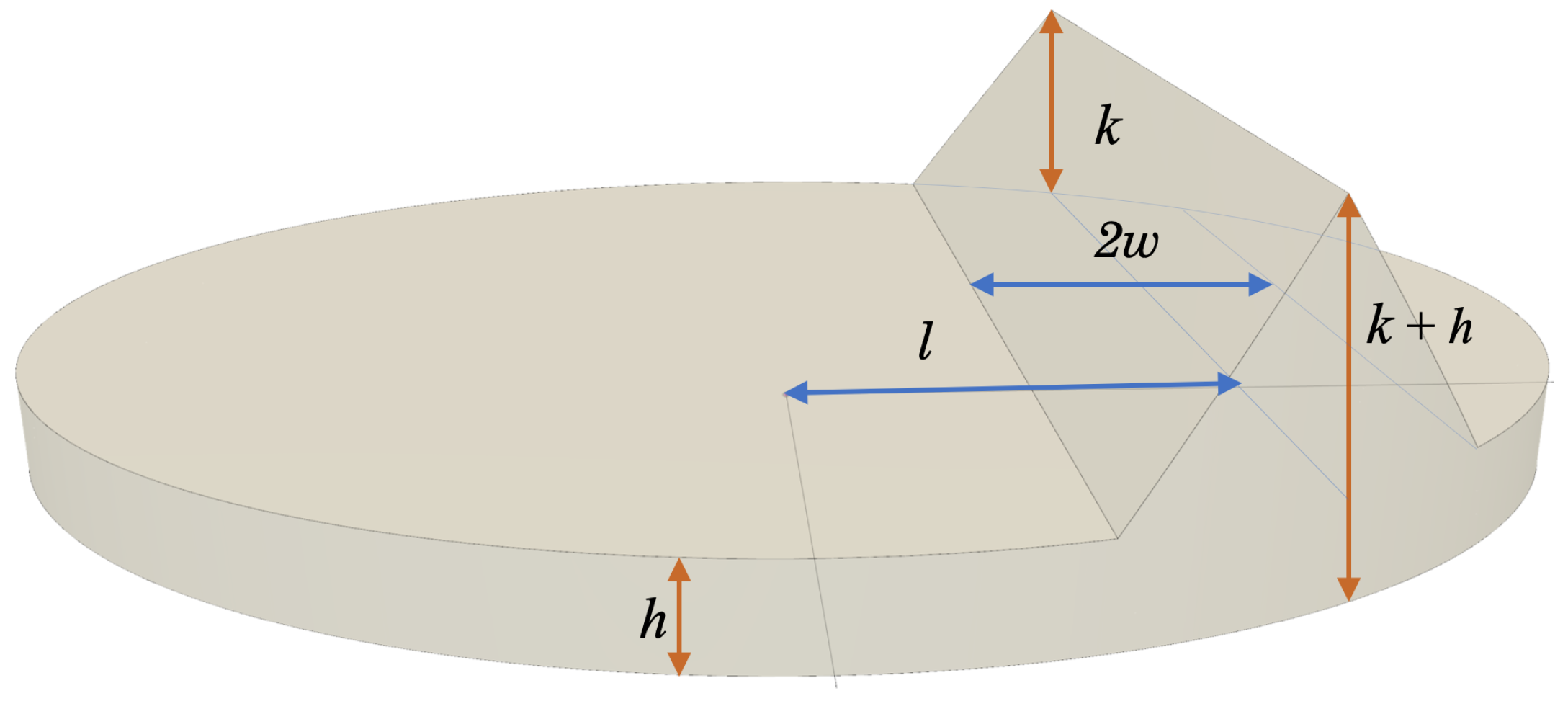

5.2. Synthetic Images with Streaks

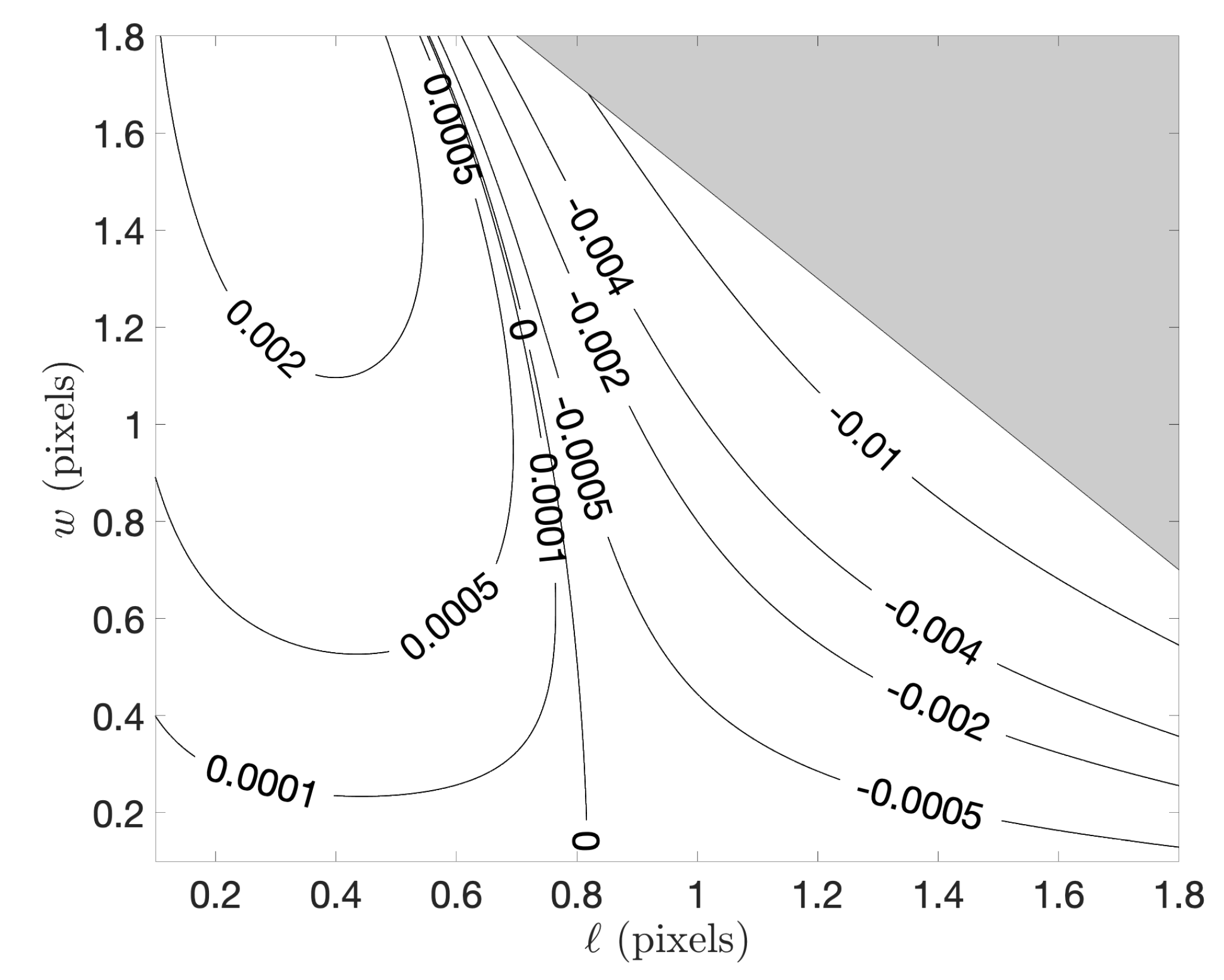

5.2.1. Ideal Streak Localization Performance

5.2.2. Digital Image Streak Localization Performance

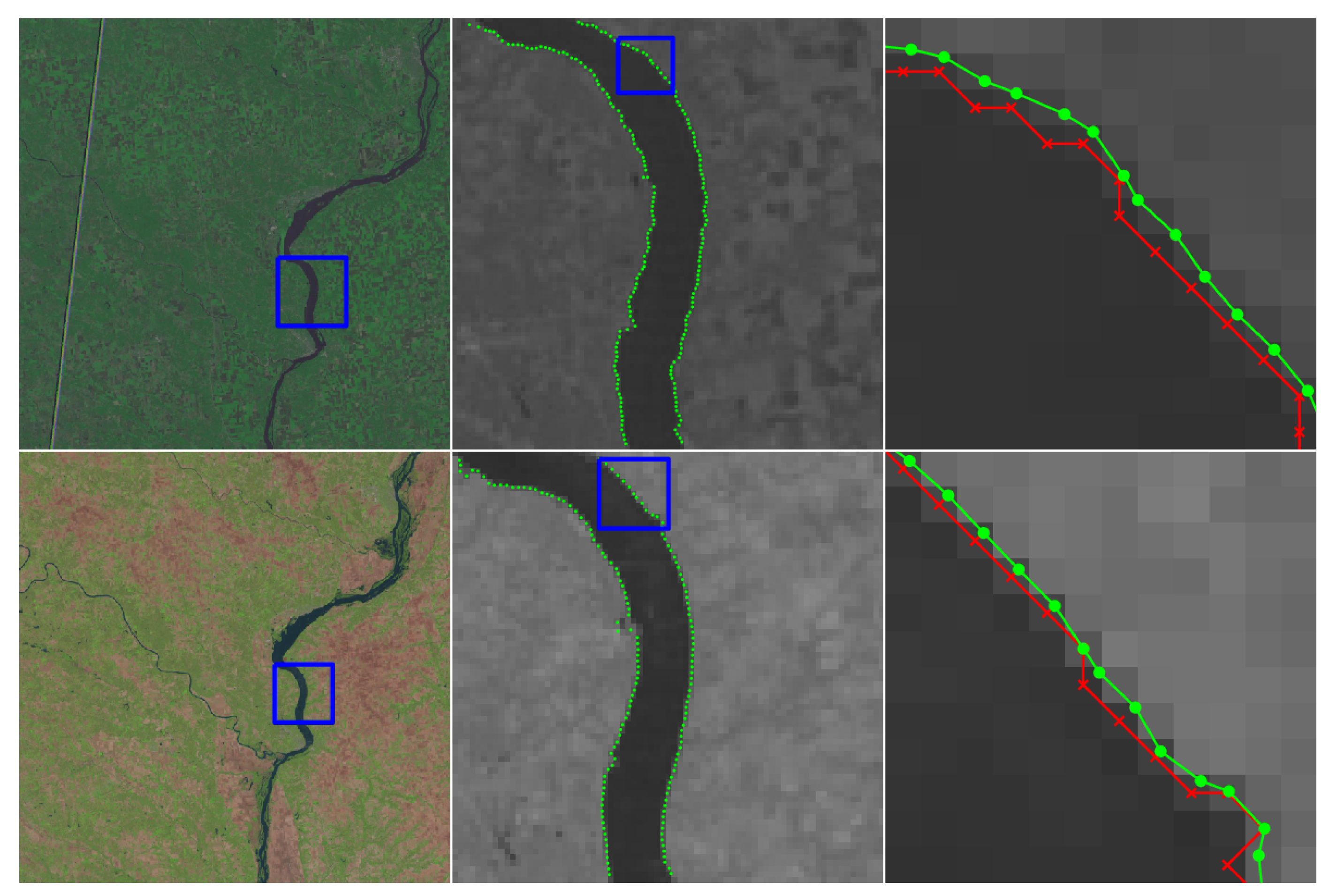

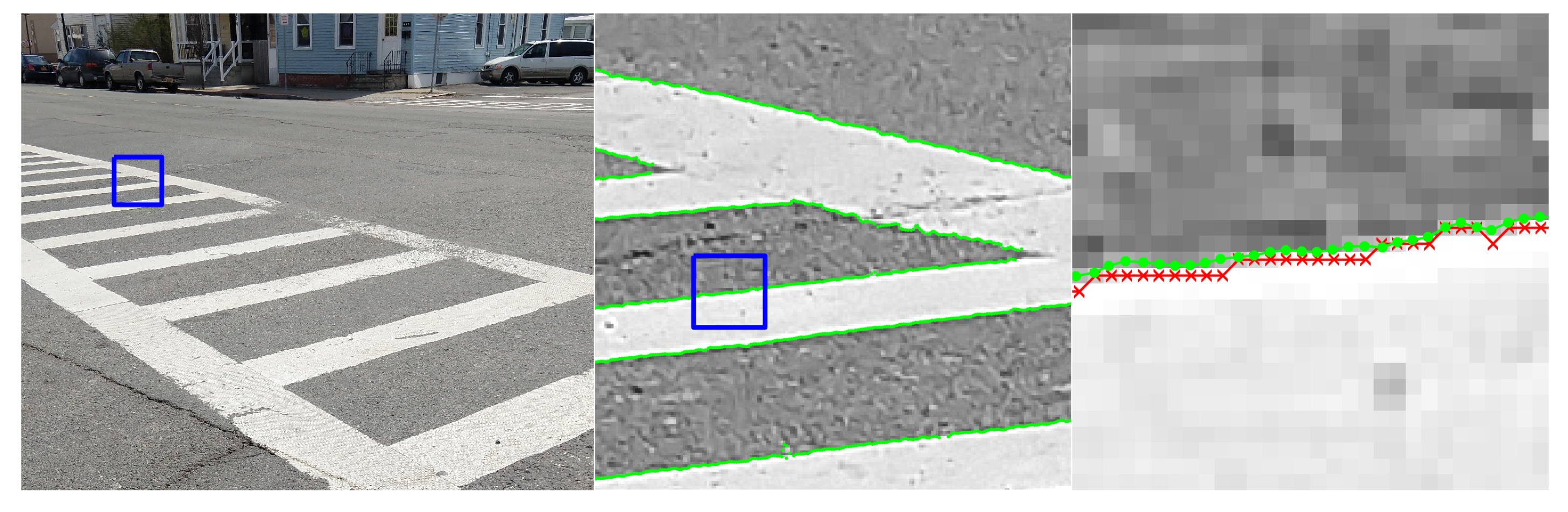

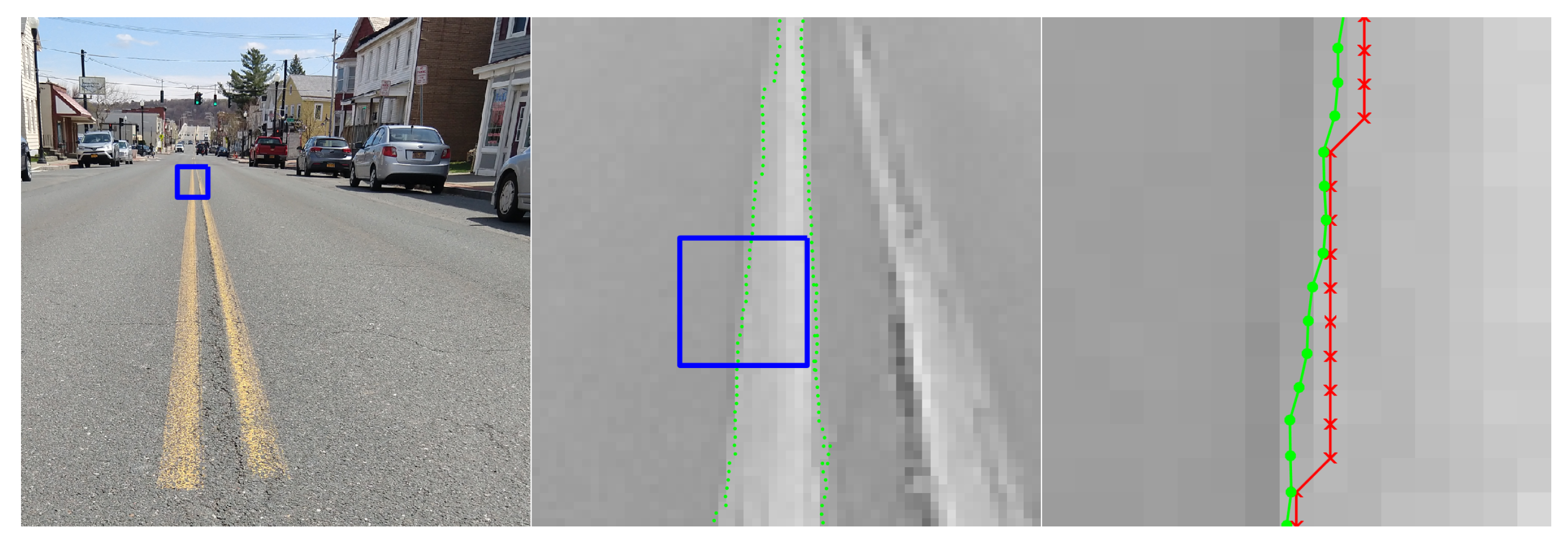

6. Validation on Real Data

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Renshaw, D.T.; Christian, J.A. Subpixel Localization of Isolated Edges and Streaks in Digital Images. J. Imaging 2020, 6, 33. https://doi.org/10.3390/jimaging6050033
