Identification of QR Code Perspective Distortion Based on Edge Directions and Edge Projections Analysis

Abstract

1. Introduction

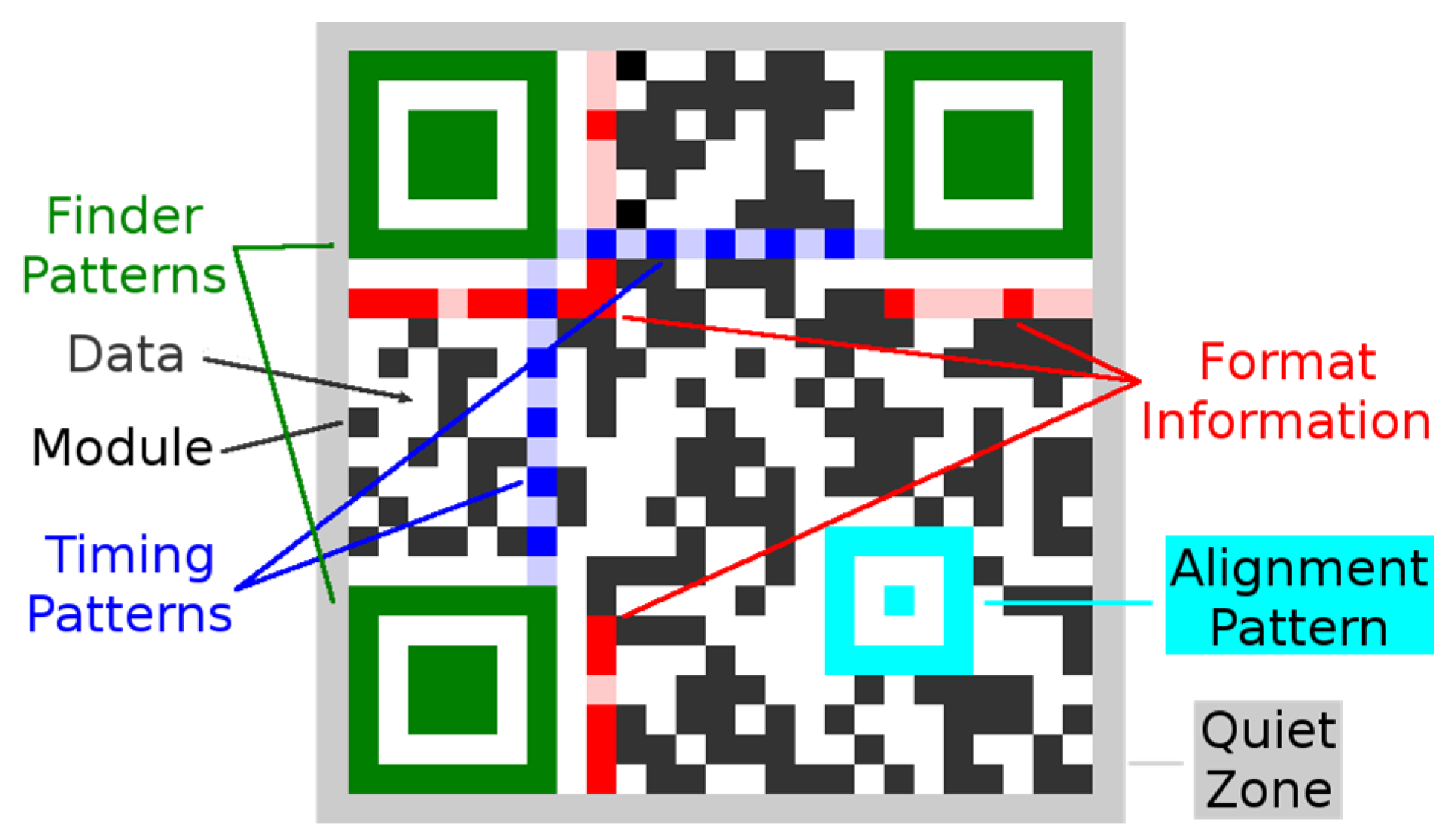

- Module: the smallest building element of a QR Code, represented by a small dark or light square. One module represents one bit: usually a dark module for “1” and a light module for “0”. Modules are organized into rows and columns and form a square matrix.

- Finder patterns: also called position detection patterns, these are located in three corners of a QR Code. Each finder pattern is formed by an inner dark square (3 × 3 modules) surrounded by a dark frame (7 × 7 modules). Finder patterns are separated from the rest of the QR Code by a light area one module wide. Readers use finder patterns to determine the position and orientation of a QR Code.

- Timing patterns: these are placed inside a QR Code and interconnect the finder patterns. Timing patterns are formed by a sequence of alternating dark and light modules. They are used to determine the size of a module, the number of rows and columns, and possible distortion of the code.

- Alignment patterns: there may be zero or more alignment patterns, depending on the version of the QR Code (version 1 has none). They allow the scanning device to determine possible perspective distortion of the QR Code image.

- Format information: this contains additional information, such as the error correction level used (4 options) and the mask pattern number (8 options), which is required for decoding a QR Code.

- Quiet zone: a white area at least four modules wide located around a QR Code (in practice, it is often narrower than the four modules required by the standard). The quiet zone should not contain any patterns or structures that could confuse readers.

- Data: data are encoded inside a QR Code and protected by Reed–Solomon error correction, which allows damaged data to be restored. A QR Code can therefore be partially damaged and still be read out completely. QR Codes provide four user-selectable levels of error correction: L (Low), M (Medium), Q (Quartile), and H (High), which allow up to approximately 7%, 15%, 25%, and 30% of damaged code words, respectively, to be restored [3]. Increasing the level of error correction reduces the available data capacity of a QR Code.
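The finder pattern geometry described in the list above (a 3 × 3 dark core inside a 7 × 7 dark frame, separated by a light ring) can be made concrete with a small sketch. The function names `finder_pattern` and `run_lengths` are illustrative only and are not taken from the paper. The sketch builds the module matrix and shows that a scan line through the centre yields dark and light runs in the ratio 1:1:3:1:1.

```python
def finder_pattern():
    """Return a 7x7 matrix of modules (1 = dark, 0 = light)."""
    size = 7
    grid = [[0] * size for _ in range(size)]
    for r in range(size):
        for c in range(size):
            # Distance of the module from the nearest border of the pattern.
            ring = min(r, c, size - 1 - r, size - 1 - c)
            # ring 0: dark frame, ring 1: light gap, ring >= 2: dark 3x3 core
            grid[r][c] = 0 if ring == 1 else 1
    return grid


def run_lengths(row):
    """Collapse a row of modules into lengths of consecutive equal values."""
    runs = []
    count = 1
    for prev, cur in zip(row, row[1:]):
        if cur == prev:
            count += 1
        else:
            runs.append(count)
            count = 1
    runs.append(count)
    return runs


if __name__ == "__main__":
    pattern = finder_pattern()
    for row in pattern:
        print("".join("#" if m else "." for m in row))
    # Scan line through the middle of the pattern.
    print(run_lengths(pattern[3]))
```

Because the pattern is symmetric, the same ratio appears on the vertical scan line through the centre.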

2. Related Work

2.1. Finder Pattern-Based Localization Methods

2.2. Local Features with Region Growing-Based Localization Methods

2.3. Connected Components-Based Localization Methods

2.4. Deep Learning Based Localization Methods

3. The Finder Pattern Localization Methods

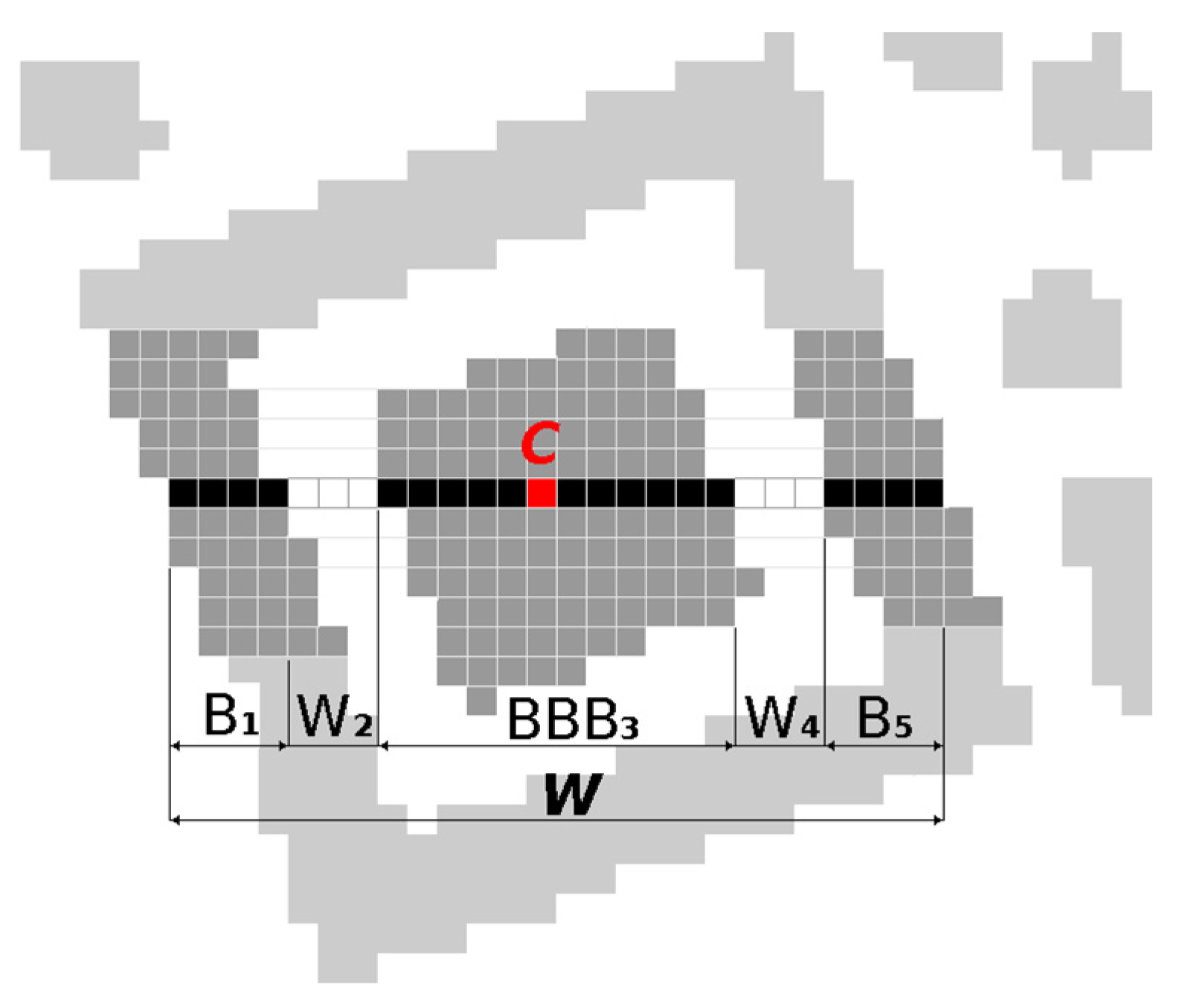

3.1. Finder Pattern Localization Based on 1:1:3:1:1 Search

- Area(RA) < Area(RB) < Area(RC) and Area(RB)/Area(RA) < 3 and Area(RC)/Area(RA) < 4

- 0.7 < Width(RB)/Height(RB) < 1.3 and 0.7 < Width(RC)/Height(RC) < 1.3

- Distance(Centroid(RA), Centroid(RB)) < 3 and Distance(Centroid(RA), Centroid(RC)) < 3

- Area (M00)

- Centroid (M10/M00, M01/M00), where M00, M10, M01 are raw image moments

- Bounding box (Top, Left, Right, Bottom)
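The region features and the three conditions on the nested regions RA, RB, and RC listed above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `Region` class, `region_from_pixels`, and `is_finder_candidate` are hypothetical names, and the sketch assumes that RA is the innermost region, RB the light ring, and RC the outer frame, with distances measured in pixels.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Region:
    m00: float  # raw moment M00 (area in pixels)
    m10: float  # raw moment M10 (sum of x coordinates)
    m01: float  # raw moment M01 (sum of y coordinates)
    top: int
    left: int
    right: int
    bottom: int

    @property
    def area(self):
        return self.m00

    @property
    def centroid(self):
        return (self.m10 / self.m00, self.m01 / self.m00)

    @property
    def width(self):
        return self.right - self.left + 1

    @property
    def height(self):
        return self.bottom - self.top + 1


def region_from_pixels(pixels):
    """Build a Region from an iterable of (x, y) pixel coordinates."""
    pixels = list(pixels)
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return Region(m00=len(pixels), m10=sum(xs), m01=sum(ys),
                  top=min(ys), left=min(xs), right=max(xs), bottom=max(ys))


def centroid_distance(a, b):
    (ax, ay), (bx, by) = a.centroid, b.centroid
    return hypot(ax - bx, ay - by)


def is_finder_candidate(ra, rb, rc):
    """Apply the area, aspect ratio, and centroid conditions to RA, RB, RC."""
    areas_ok = (ra.area < rb.area < rc.area
                and rb.area / ra.area < 3
                and rc.area / ra.area < 4)
    shape_ok = (0.7 < rb.width / rb.height < 1.3
                and 0.7 < rc.width / rc.height < 1.3)
    centred = (centroid_distance(ra, rb) < 3
               and centroid_distance(ra, rc) < 3)
    return areas_ok and shape_ok and centred
```

For an ideal finder pattern the three areas are in the proportion 9 : 16 : 24 (core, light ring, frame), so Area(RB)/Area(RA) ≈ 1.78 and Area(RC)/Area(RA) ≈ 2.67, which is consistent with the limits of 3 and 4 used above.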

3.2. Finder Pattern Localization Based on the Overlap of the Centroids of Continuous Regions

- raw moments M00 ← M00 + 1, M10 ← M10 + x, M01 ← M01 + y, which we will use later to calculate the centroid of the area (Cx = M10/M00, Cy = M01/M00);

- bounding box defined by top, left, bottom and right boundary.
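The incremental updates above can be illustrated with a short sketch that accumulates the raw moments and the bounding box while each continuous region is being collected. As an assumption for illustration, a simple stack-based flood fill with 4-connectivity stands in for the region labelling step; the function name `label_regions` and the dictionary keys are hypothetical.

```python
def label_regions(image):
    """Collect continuous regions of equal value in a binary image.

    `image` is a list of rows containing 0/1 values. For every region the
    raw moments and the bounding box are updated pixel by pixel.
    """
    height, width = len(image), len(image[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y0 in range(height):
        for x0 in range(width):
            if seen[y0][x0]:
                continue
            value = image[y0][x0]
            reg = {"value": value, "m00": 0, "m10": 0, "m01": 0,
                   "top": y0, "left": x0, "bottom": y0, "right": x0}
            stack = [(x0, y0)]
            seen[y0][x0] = True
            while stack:
                x, y = stack.pop()
                # Raw moments: M00 <- M00 + 1, M10 <- M10 + x, M01 <- M01 + y
                reg["m00"] += 1
                reg["m10"] += x
                reg["m01"] += y
                # Bounding box grows to include the current pixel.
                reg["top"] = min(reg["top"], y)
                reg["bottom"] = max(reg["bottom"], y)
                reg["left"] = min(reg["left"], x)
                reg["right"] = max(reg["right"], x)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and image[ny][nx] == value):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            regions.append(reg)
    return regions


def centroid(reg):
    """Centroid from the raw moments: (Cx, Cy) = (M10/M00, M01/M00)."""
    return (reg["m10"] / reg["m00"], reg["m01"] / reg["m00"])
```

Since only sums and extremes are stored, the descriptors are available as soon as a region is complete, without a second pass over its pixels.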

- Area (M00) must be at least 9 (minimum inner square size is 3 × 3);

- The width-to-height aspect ratio of the region must be between 0.7 and 1.3 (here we assume that the QR Code is approximately square; for a strongly stretched QR Code this tolerance may be increased).

- Area(RC) > Area(RA) and Area(RC)/Area(RA) ≤ 4

- Bounding box of region RA must lie entirely within the bounding box of region RC

- Distance(Centroid(RC), Centroid(RA)) < 3
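The conditions listed above for the centroid overlap test can be combined into a single predicate, sketched below. The names `Region`, `plausible_region`, and `is_finder_pair` are hypothetical. The sketch assumes that the minimum area and aspect ratio filter is applied to both regions, that bounding box containment is non-strict, and that the centroid distance is measured in pixels.

```python
from math import hypot
from typing import NamedTuple


class Region(NamedTuple):
    area: int    # M00
    cx: float    # M10 / M00
    cy: float    # M01 / M00
    top: int
    left: int
    bottom: int
    right: int


def plausible_region(r, min_area=9, lo=0.7, hi=1.3):
    """Area of at least 9 pixels and a roughly square bounding box."""
    width = r.right - r.left + 1
    height = r.bottom - r.top + 1
    return r.area >= min_area and lo <= width / height <= hi


def bbox_inside(inner, outer):
    """True if the bounding box of `inner` lies within that of `outer`."""
    return (outer.top <= inner.top and outer.left <= inner.left
            and inner.bottom <= outer.bottom and inner.right <= outer.right)


def is_finder_pair(ra, rc, max_area_ratio=4.0, max_centroid_dist=3.0):
    """Check whether inner region RA and outer region RC form a finder pattern."""
    if not (plausible_region(ra) and plausible_region(rc)):
        return False
    if not (rc.area > ra.area and rc.area / ra.area <= max_area_ratio):
        return False
    if not bbox_inside(ra, rc):
        return False
    return hypot(rc.cx - ra.cx, rc.cy - ra.cy) < max_centroid_dist
```

Ordering the checks from cheapest to most specific lets most unrelated region pairs be rejected before the centroid distance is computed.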

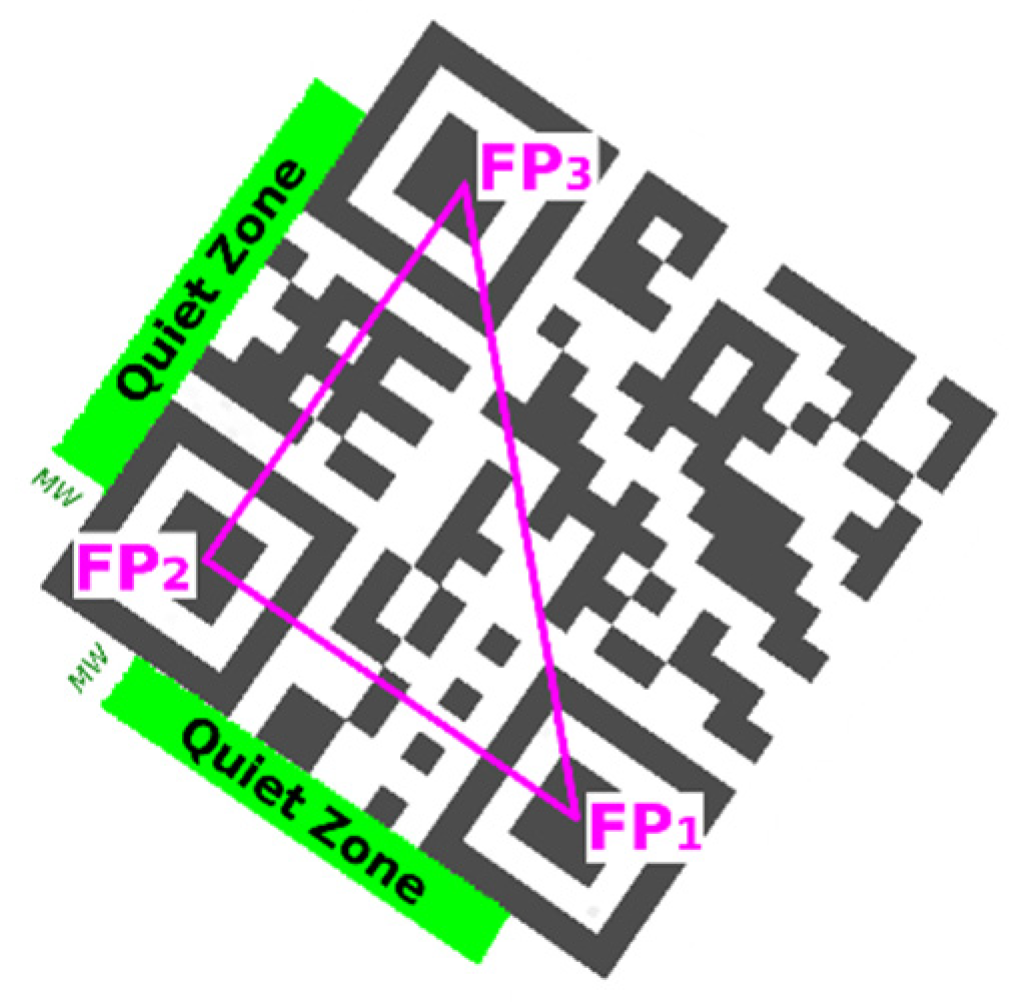

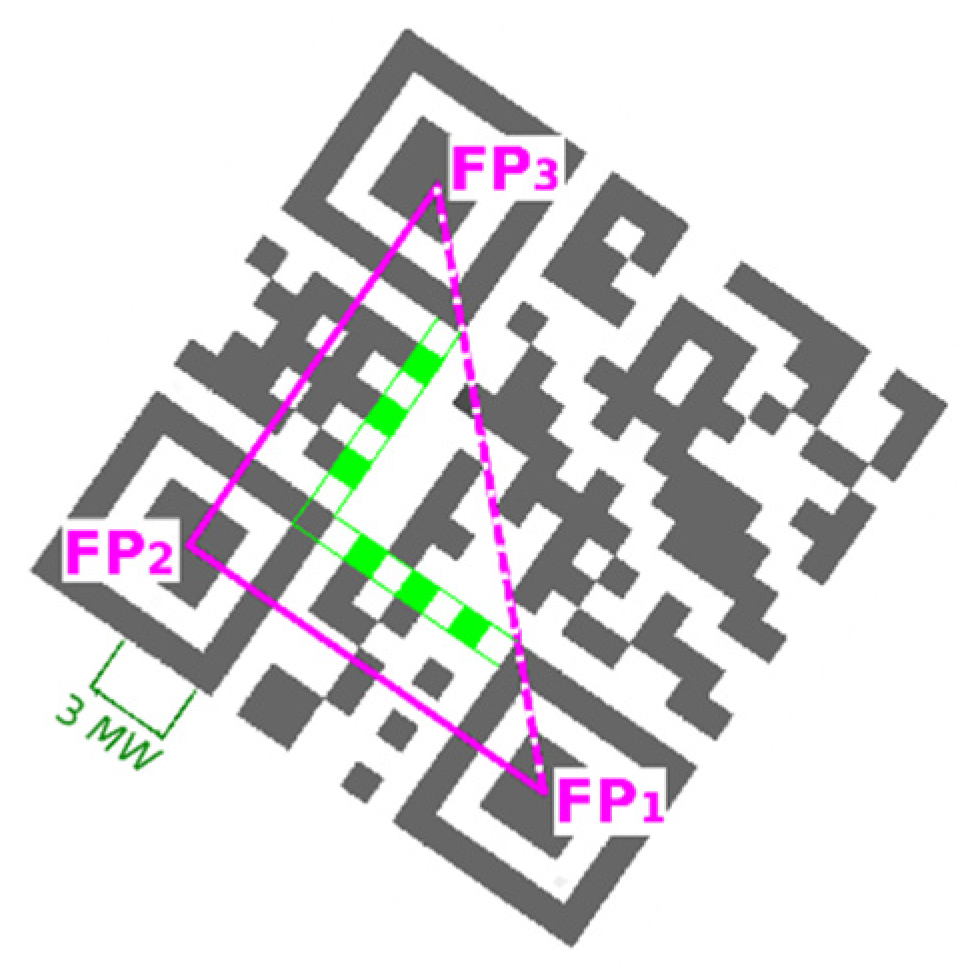

3.3. Grouping of Finder Patterns

- the size of each side must lie within a predefined interval (given by the smallest and the largest expected QR Code);

- the difference in the sizes of the two legs must be within a tolerance of ±14 (a smaller tolerance is sufficient for a non-distorted, non-stretched QR Code);

- the difference between the sizes of the real and the theoretical hypotenuse must be within a tolerance of ±12 (a smaller tolerance is sufficient for a non-distorted, non-stretched QR Code).
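The three grouping conditions above can be sketched as a test on the triangle formed by three finder pattern centroids. This is an illustration under stated assumptions: the function names are hypothetical, the two shortest sides are taken as the legs, the theoretical hypotenuse is computed from the legs by the Pythagorean theorem, and the tolerances ±14 and ±12 are interpreted as absolute values in the same units as the coordinates, since the unit is not given in the list.

```python
from itertools import combinations
from math import dist, hypot


def is_finder_triple(p1, p2, p3, min_side, max_side,
                     leg_tol=14.0, hyp_tol=12.0):
    """Check whether three centroids form a right isosceles triangle."""
    # The two shortest sides are treated as legs, the longest as hypotenuse.
    leg_a, leg_b, hyp = sorted([dist(p1, p2), dist(p2, p3), dist(p1, p3)])
    if not all(min_side <= s <= max_side for s in (leg_a, leg_b, hyp)):
        return False
    if abs(leg_a - leg_b) > leg_tol:
        return False
    # Theoretical hypotenuse of a right triangle with the measured legs.
    return abs(hyp - hypot(leg_a, leg_b)) <= hyp_tol


def find_finder_triples(centroids, min_side, max_side):
    """Return all triples of centroids that pass the grouping test."""
    return [triple for triple in combinations(centroids, 3)
            if is_finder_triple(*triple, min_side, max_side)]


if __name__ == "__main__":
    points = [(0, 0), (100, 0), (0, 100), (300, 300)]
    print(find_finder_triples(points, min_side=20, max_side=500))
```

Testing every triple is cubic in the number of candidates, which is acceptable when only a handful of finder patterns are found per image.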

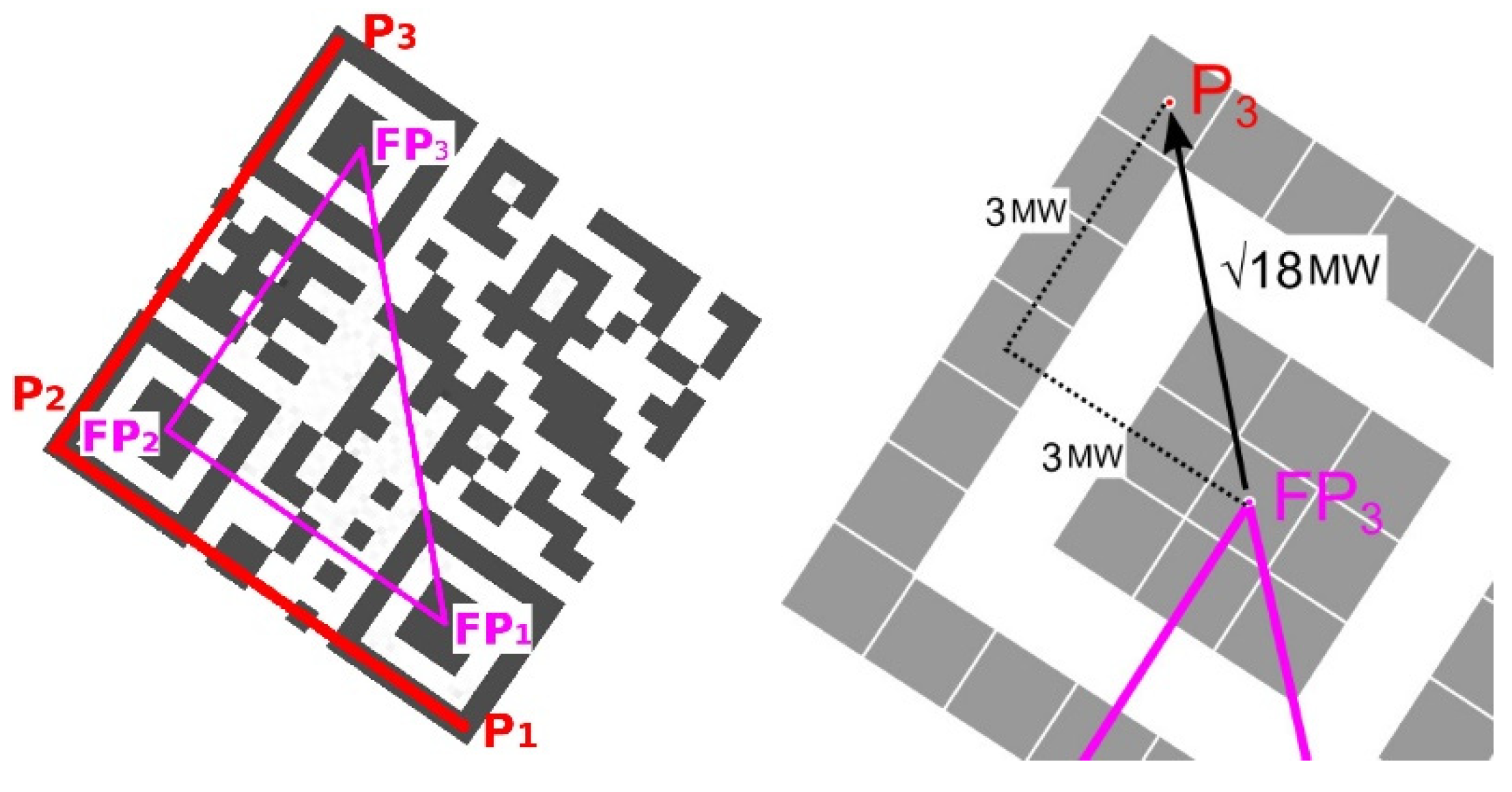

3.4. QR Code Bounding Box

4. The Proposed Method

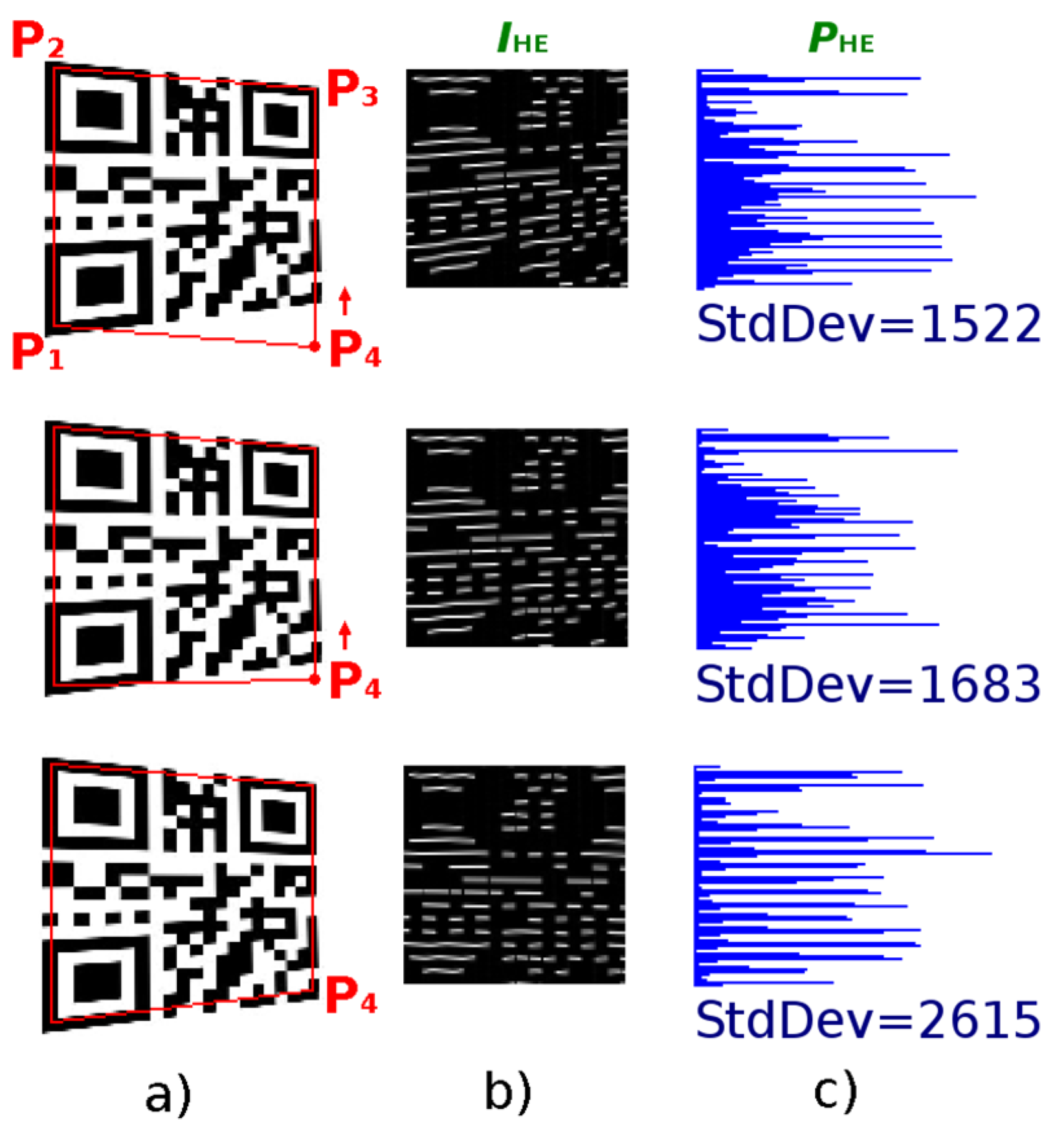

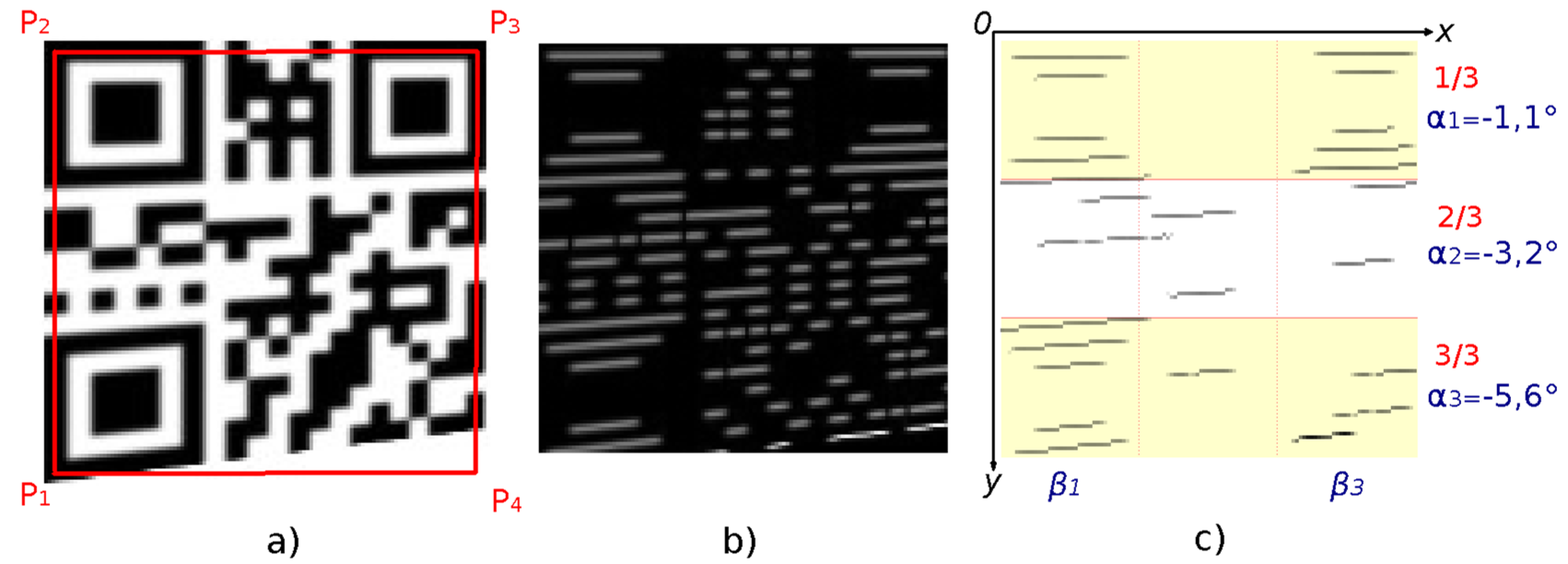

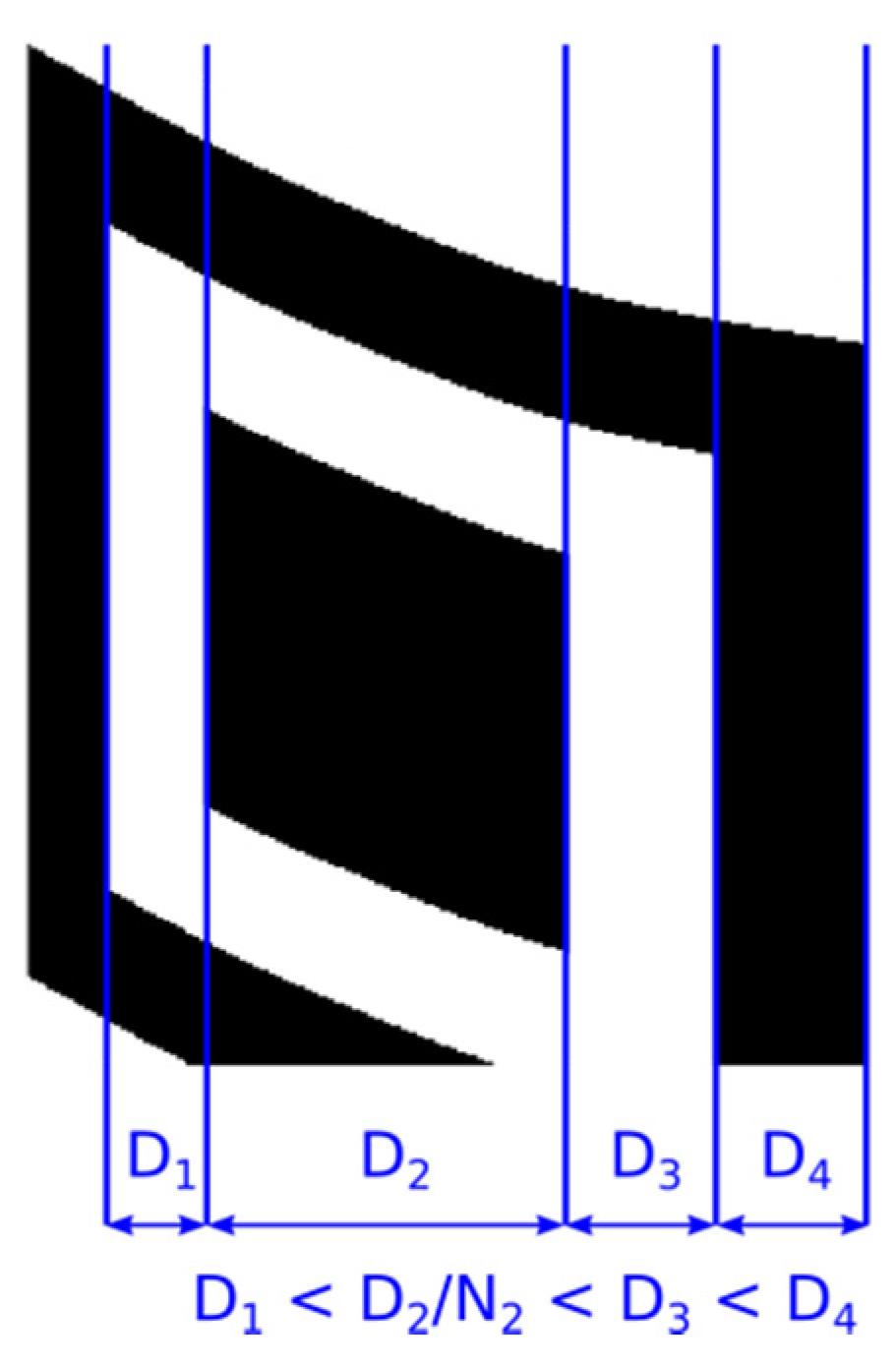

4.1. Alternative A—Evaluation of the Edge Projections

Identification of QR Code Distortion by Edge Orientation Analysis

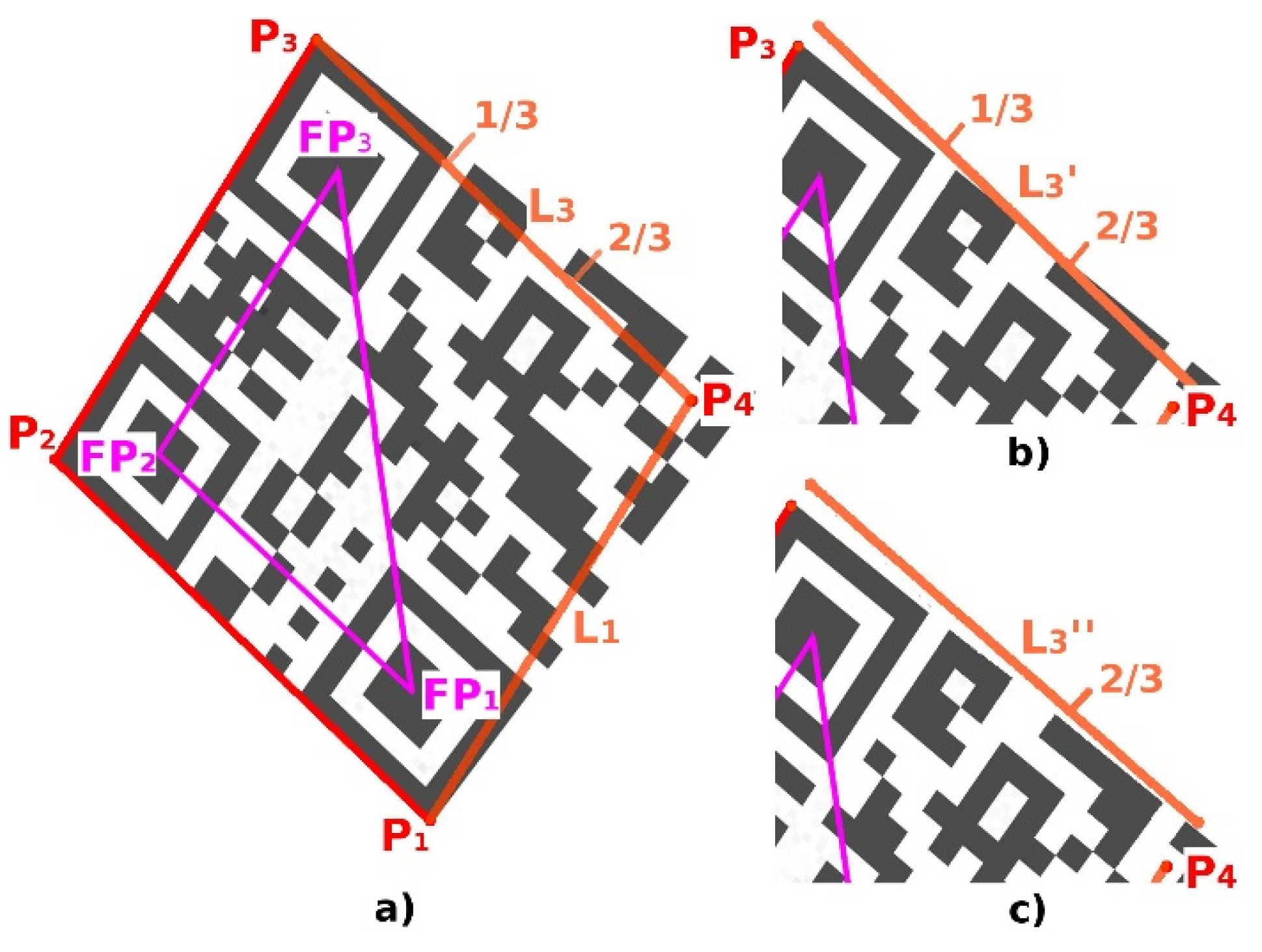

4.2. Alternative B—Evaluation the Overlap of the Boundary Line

4.3. Decoding the QR Code

5. Results

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Frankovsky, P.; Pastor, M.; Dominik, L.; Kicko, M.; Trebuna, P.; Hroncova, D.; Kelemen, M. Wheeled Mobile Robot in Structured Environment. In Proceedings of the 12th International Conference ELEKTRO, Mikulov, Czech Republic, 21–23 May 2018.

2. Božek, P.; Nikitin, Y.; Bezák, P.; Fedorko, G.; Fabian, M. Increasing the production system productivity using inertial navigation. Manuf. Technol. 2015, 15, 274–278.

3. Denso Wave Incorporated: What Is a QR Code? Available online: http://www.qrcode.com/en/about/ (accessed on 6 September 2018).

4. Denso Wave Incorporated: History of QR Code. Available online: http://www.qrcode.com/en/history/ (accessed on 6 September 2018).

5. Lin, J.-A.; Fuh, C.-S. 2D Barcode Image Decoding. Math. Probl. Eng. 2013, 1–10.

6. Li, S.; Shang, J.; Duan, Z.; Huang, J. Fast detection method of quick response code based on run-length coding. IET Image Process. 2018, 12, 546–551.

7. Belussi, L.F.F.; Hirata, N.S.T. Fast component-based QR Code detection in arbitrarily acquired images. J. Math. Imaging Vis. 2013.

8. Bodnár, P.; Nyúl, L.G. Improved QR Code Localization Using Boosted Cascade of Weak Classifiers. Acta Cybern. 2015, 22, 21–33.

9. Tribak, H.; Zaz, Y. QR Code Recognition based on Principal Components Analysis Method. Int. J. Adv. Comput. Sci. Appl. 2017, 8.

10. Tribak, H.; Zaz, Y. QR Code Patterns Localization based on Hu Invariant Moments. Int. J. Adv. Comput. Sci. Appl. 2017.

11. Ciążyński, K.; Fabijańska, A. Detection of QR-Codes in Digital Images Based on Histogram Similarity. Image Process. Commun. 2015, 20, 41–48.

12. Szentandrási, I.; Herout, A.; Dubská, M. Fast Detection and Recognition of QR Codes in High-Resolution Images. In Proceedings of the 28th Spring Conference on Computer Graphics; ACM: New York, NY, USA, 2012.

13. Gaur, P.; Tiwari, S. Recognition of 2D Barcode Images Using Edge Detection and Morphological Operation. Int. J. Comput. Sci. Mob. Comput. IJCSMC 2014, 3, 1277–1282.

14. Kong, S. QR Code Image Correction based on Corner Detection and Convex Hull Algorithm. J. Multimed. 2013, 8, 662–668.

15. Sun, A.; Sun, Y.; Liu, C. The QR-Code Reorganization in Illegible Snapshots Taken by Mobile Phones. In Proceedings of the 2007 International Conference on Computational Science and its Applications (ICCSA 2007), Kuala Lumpur, Malaysia, 26–29 August 2007; pp. 532–538.

16. Hansen, D.K.; Nasrollahi, K.; Rasmussen, C.B.; Moeslund, T.B. Real-Time Barcode Detection and Classification using Deep Learning. IJCCI 2017, 1, 321–327.

17. Zharkov, A.; Zagaynov, I. Universal Barcode Detector via Semantic Segmentation. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 837–843.

18. Chou, T.-H.; Ho, C.-S.; Kuo, Y.-F. QR Code Detection Using Convolutional Neural Networks. In Proceedings of the 2015 International Conference on Advanced Robotics and Intelligent Systems (ARIS), Taipei, Taiwan, 29–31 May 2015; pp. 1–5.

19. Kurniawan, W.C.; Okumura, H.; Muladi; Handayani, A.N. An Improvement on QR Code Limit Angle Detection using Convolution Neural Network. In Proceedings of the 2019 International Conference on Electrical, Electronics and Information Engineering (ICEEIE), Denpasar, Bali, Indonesia, 3 October 2019; pp. 234–238.

20. Lopez-Rincon, O.; Starostenko, O.; Alarcon-Aquino, V.; Galan-Hernandez, J.C. Binary Large Object-Based Approach for QR Code Detection in Uncontrolled Environments. J. Electr. Comput. Eng. 2017, 2, 1–15.

21. Niblack, W. An Introduction to Digital Image Processing; Prentice Hall: Englewood Cliffs, NJ, USA, 1986.

22. Sauvola, J.; Pietikäinen, M. Adaptive document image binarization. Pattern Recognit. 2000, 33, 225–236.

23. Bradley, D.; Roth, G. Adaptive thresholding using the integral image. J. Graph. Tools 2007, 12, 13–21.

24. Sulaiman, A.; Omar, K.; Nasrudin, M.F. Degraded Historical Document Binarization: A Review on Issues, Challenges, Techniques, and Future Directions. J. Imaging 2019, 5, 48.

25. Calvo-Zaragoza, J.; Gallego, A.-J. A selectional auto-encoder approach for document image binarization. Pattern Recognit. 2019, 86, 37–47.

26. Rosenfeld, A.; Pfaltz, J. Sequential Operations in Digital Picture Processing. J. ACM 1966, 13, 471–494.

27. Bailey, D.G.; Klaiber, M.J. Zig-Zag Based Single-Pass Connected Components Analysis. J. Imaging 2019, 5, 45.

28. Bresenham, J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30.

29. Heckbert, P. Fundamentals of Texture Mapping and Image Warping. Available online: http://www2.eecs.berkeley.edu/Pubs/TechRpts/1989/5504.html (accessed on 6 September 2018).

30. Google: ZXing (“Zebra Crossing”) Barcode Scanning Library for Java, Android. Available online: https://github.com/zxing (accessed on 22 September 2018).

31. Beer, D. Quirc-QR Decoder Library. Available online: https://github.com/dlbeer/quirc (accessed on 22 September 2018).

32. Leadtools: QR Code SDK Technology. Available online: http://demo.leadtools.com/JavaScript/Barcode/index.html (accessed on 22 September 2018).

33. Inlite Research Inc.: Barcode Reader SDK. Available online: https://online-barcode-reader.inliteresearch.com (accessed on 22 September 2018).

34. Terriberry, T.B. ZBar Barcode Reader. Available online: http://zbar.sourceforge.net (accessed on 22 September 2018).

35. Dynamsoft: Barcode Reader SDK. Available online: https://www.dynamsoft.com/Products/Dynamic-Barcode-Reader.aspx (accessed on 22 September 2018).

36. Lay, K.; Wang, L.; Wang, C. Rectification of QR-Code Images Using the Parametric Cylindrical Surface Model. In Proceedings of the International Symposium on Next-Generation Electronics (ISNE), Taipei, Taiwan, 4–6 May 2015; pp. 1–5.

37. Karrach, L.; Pivarčiová, E.; Nikitin, Y.R. Comparing the impact of different cameras and image resolution to recognize the data matrix codes. J. Electr. Eng. 2018, 69, 286–292.

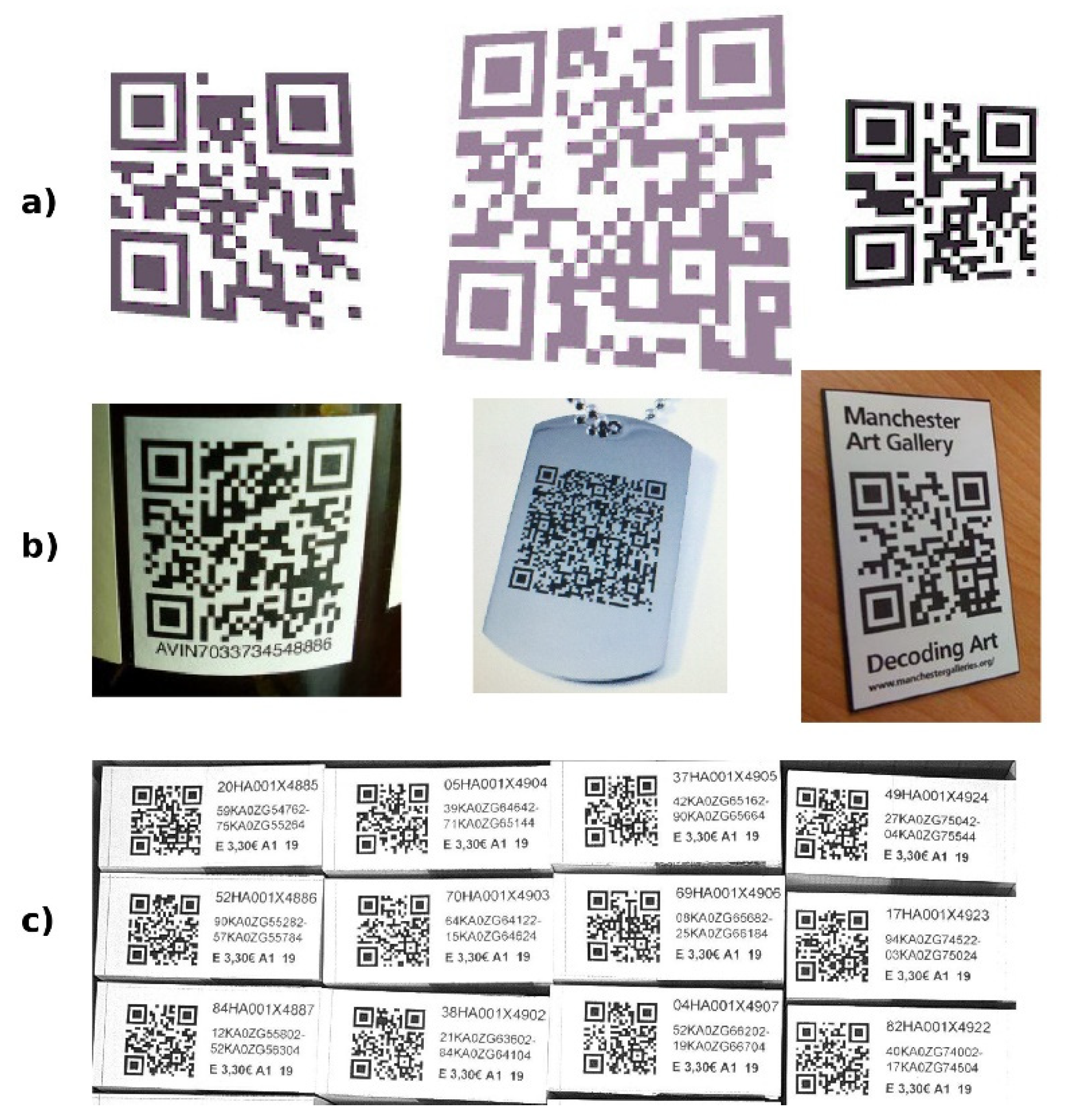

| Software | Samples (a) | Samples (b) | Samples (c) |
|---|---|---|---|
| Google ZXing (open-source) [30] | 1 | 70 | 30 |
| Quirc (open-source) [31] | 12 | 66 | 39 |
| LEADTOOLS QR Code SDK [32] | 7 | 69 | 100 |
| Inlite Barcode Reader SDK [33] | 18 | 79 | 141 |
| ZBar (open-source) [34] | 20 | 78 | 167 |
| Dynamsoft Barcode Reader SDK [35] | 20 | 84 | 178 |
| Ours: Alternative 1.A, dynamic grid | 20 | 85 | 180 |
| Ours: Alternative 2.A, dynamic grid | 20 | 85 | 179 |

| Software | 1024 × 768, 1 code | 1024 × 768, 10 codes | 1296 × 960, 1 code | 1296 × 960, 10 codes | 2592 × 1920, 1 code | 2592 × 1920, 10 codes | 2592 × 1920, 50 codes |
|---|---|---|---|---|---|---|---|
| Quirc (open-source) | 10 ms | 34 ms | 15 ms | 37 ms | 66 ms | 130 ms | 430 ms |
| ZBar (open-source) | 48 ms | 96 ms | 76 ms | 124 ms | 338 ms | 396 ms | 1781 ms |
| Ours: Alternative 1.A | 17 ms | 77 ms | 23 ms | 83 ms | 74 ms | 135 ms | 426 ms |
| Ours: Alternative 2.A | 17 ms | 79 ms | 22 ms | 83 ms | 69 ms | 129 ms | 414 ms |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Karrach, L.; Pivarčiová, E.; Božek, P. Identification of QR Code Perspective Distortion Based on Edge Directions and Edge Projections Analysis. J. Imaging 2020, 6, 67. https://doi.org/10.3390/jimaging6070067
