Noncontact Sensing of Contagion

Abstract

1. Introduction

2. Epidemiology

3. Clinical Features of COVID-19

4. Diagnosis of COVID-19

5. Symptoms of COVID-19 Detectable by Noncontact Sensors

5.1. Fever

5.2. Cough

5.3. Fatigue

5.4. Ocular Signs

5.5. Respiratory Rate and Heart Rate

6. Technology Used for Measuring Vital Signs

6.1. Thermal Imaging Technology

6.1.1. Body Temperature Measured with Thermal Camera

6.1.2. HR and BR Measured with Thermal Camera

6.1.3. Image Processing Techniques Associated with Thermal Imaging

- Although these systems may be used for initial temperature assessment to triage individuals in high-throughput areas (for example, airports, businesses, and sporting events), they have not been shown to be effective when used to take the temperature of multiple people at the same time. Stable solutions for “mass fever screening” remain difficult to find.

- These systems measure surface skin temperature, which is usually lower than a temperature measured orally. Thermal imaging systems must be adjusted properly to correct for this difference in measurements.

- These systems work effectively only when all of the following are true:
    - The systems are used in the right environment or location.
    - The systems are set up and operated correctly.
    - The person being assessed is prepared according to instructions.
    - The person handling the thermal imaging system is properly trained.

- Room temperature should be 68–76 °F (20–24 °C) and relative humidity 10–50 percent.

- Several factors can affect the temperature measurement:
    - Reflective backgrounds (glass, mirrors, and metallic surfaces), which can reflect infrared radiation.
    - Air movement in the room, direct sunlight, and radiant heat (portable heaters, electrical sources).
    - Strong lighting (incandescent, halogen, and quartz tungsten halogen light bulbs).

- Some systems require the use of a calibrated blackbody (a tool for checking the calibration of an infrared temperature sensor) during evaluation to make sure measurements are accurate.
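The operating window and the blackbody check described above can be expressed as two small helpers. The following is a minimal Python sketch, assuming the Celsius bounds given in the text (20–24 °C, 10–50 % relative humidity) and assuming that a single in-frame blackbody at a known setpoint is used to estimate a constant offset error of the camera. The function names are hypothetical and do not correspond to any vendor's software.

```python
# Minimal sketch: operating-window check and blackbody offset correction.
# All names are hypothetical; the constant-offset error model is an assumption.

# Recommended window from the text. Celsius bounds are used because
# 76 degF is about 24.4 degC and the text rounds this to 24 degC.
ROOM_TEMP_RANGE_C = (20.0, 24.0)
HUMIDITY_RANGE_PCT = (10.0, 50.0)


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def environment_ok(room_temp_c: float, humidity_pct: float) -> bool:
    """True when room temperature and relative humidity are inside the window."""
    t_lo, t_hi = ROOM_TEMP_RANGE_C
    h_lo, h_hi = HUMIDITY_RANGE_PCT
    return t_lo <= room_temp_c <= t_hi and h_lo <= humidity_pct <= h_hi


def blackbody_corrected(skin_reading_c: float,
                        blackbody_reading_c: float,
                        blackbody_setpoint_c: float) -> float:
    """Correct a skin reading using the camera's error on a reference blackbody.

    Assumption: the camera error is the same constant offset everywhere in
    the frame, so the offset observed on the blackbody is added to the skin reading.
    """
    offset_c = blackbody_setpoint_c - blackbody_reading_c
    return skin_reading_c + offset_c


if __name__ == "__main__":
    print(environment_ok(22.0, 35.0))                         # True
    print(environment_ok(fahrenheit_to_celsius(80.0), 35.0))  # False (about 26.7 degC)
    print(round(blackbody_corrected(34.6, 34.8, 35.0), 2))    # 34.8
```

A single blackbody only corrects an offset; if the camera's error also scales with temperature, a two-point check with blackbodies at two setpoints would be needed to estimate a gain term as well.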

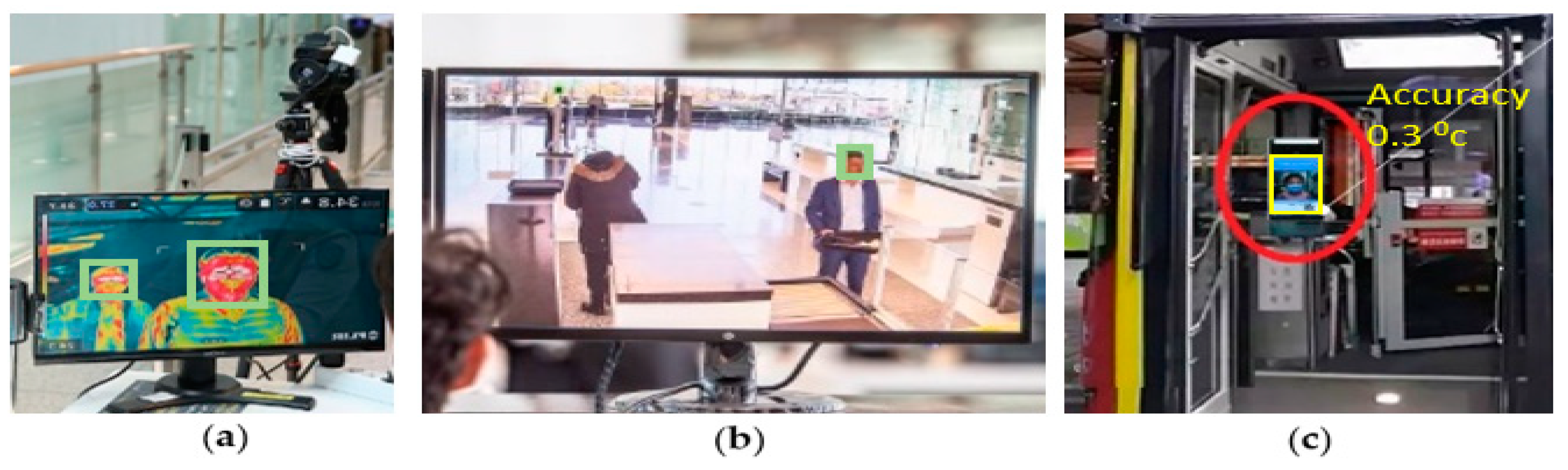
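The surface-to-oral adjustment and the requirement that every precondition hold can be combined into one screening step. This is a minimal Python sketch, assuming a simple additive offset from skin to oral temperature. The offset (2.5 °C) and threshold (38.0 °C) in the usage lines are hypothetical values chosen for illustration, not calibrated or clinical figures, and the class and function names are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScreeningSetup:
    """The four preconditions listed above, recorded as booleans."""
    suitable_location: bool
    set_up_correctly: bool
    subject_prepared: bool
    operator_trained: bool

    def is_valid(self) -> bool:
        # The text requires all conditions to hold at the same time.
        return all((self.suitable_location, self.set_up_correctly,
                    self.subject_prepared, self.operator_trained))


def screen(skin_temp_c: float, setup: ScreeningSetup,
           skin_to_oral_offset_c: float, threshold_c: float) -> str:
    """Classify one reading as 'invalid', 'elevated' or 'normal'.

    skin_to_oral_offset_c and threshold_c are hypothetical, site-calibrated
    parameters: the text only states that skin reads lower than oral
    temperature and that the system must be adjusted for the difference.
    """
    if not setup.is_valid():
        return "invalid"
    estimated_oral_c = skin_temp_c + skin_to_oral_offset_c
    return "elevated" if estimated_oral_c >= threshold_c else "normal"


if __name__ == "__main__":
    ready = ScreeningSetup(True, True, True, True)
    untrained = ScreeningSetup(True, True, True, False)
    # Illustrative numbers only, not clinical guidance.
    print(screen(35.8, ready, 2.5, 38.0))      # elevated
    print(screen(34.9, ready, 2.5, 38.0))      # normal
    print(screen(35.8, untrained, 2.5, 38.0))  # invalid
```

Returning "invalid" rather than "normal" when a precondition fails reflects the point above that readings are only meaningful when every condition is met; a failed setup should not silently clear a person.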

6.1.4. Thermal Imaging in COVID-19

6.2. Video Camera Imaging Technology

6.2.1. Vital Signs Measured with a Webcam

6.2.2. Vital Signs Measured with Digital Cameras

6.2.3. Vital Signs Measured with Other Sensors

6.3. Combinations of Different Technologies

7. Hypoxemia Detection Using Video Cameras

7.1. SpO2 Measured with Monochrome Camera

7.2. SpO2 Measured with RGB Camera

8. Acoustic Detection of Respiratory Infection

9. Video Based Cough Detection

10. Discussion

11. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, Y.-C.; Chen, C.-S.; Chan, Y.-J. The outbreak of COVID-19: An overview. J. Chin. Med. Assoc. 2020, 83, 217–220.

- Sohrabi, C.; Alsafi, Z.; O’Neill, N.; Khan, M.; Kerwan, A.; Al-Jabir, A.; Iosifidis, C.; Agha, R. World Health Organization declares global emergency: A review of the 2019 novel coronavirus (COVID-19). Int. J. Surg. 2020, 76, 71–76.

- 2019-nCoV Situation Report 51. World Health Organization. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/situationreports/.2020 (accessed on 3 April 2020).

- 2019-nCoV Situation Report 71. World Health Organization. Available online: https://www.who.int/emergencies/diseases/novel-coronavirus-2019/situationreports/.2020 (accessed on 3 April 2020).

- Zhou, P.; Yang, X.-L.; Wang, X.-G.; Hu, B.; Zhang, L.; Zhang, W.; Si, H.-R.; Zhu, Y.; Li, B.; Huang, C.-L. A pneumonia outbreak associated with a new coronavirus of probable bat origin. Nature 2020, 579, 270–273.

- Gorbalenya, A.E. The species Severe acute respiratory syndrome-related coronavirus: Classifying 2019-nCoV and naming it SARS-CoV-2. Nat. Microbiol. 2020, 5, 536–544.

- Adhikari, S.P.; Meng, S.; Wu, Y.-J.; Mao, Y.-P.; Ye, R.-X.; Wang, Q.-Z.; Sun, C.; Sylvia, S.; Rozelle, S.; Raat, H. Epidemiology, causes, clinical manifestation and diagnosis, prevention and control of coronavirus disease (COVID-19) during the early outbreak period: A scoping review. Infect. Dis. Poverty 2020, 9, 1–12.

- Liu, C.-C.; Chang, R.-E.; Chang, W.-C. Limitations of forehead infrared body temperature detection for fever screening for severe acute respiratory syndrome. Infect. Control Hosp. Epidemiol. 2004, 25, 1109–1111.

- Qiu, H.; Wu, J.; Hong, L.; Luo, Y.; Song, Q.; Chen, D. Clinical and epidemiological features of 36 children with coronavirus disease 2019 (COVID-19) in Zhejiang, China: An observational cohort study. Lancet Infect. Dis. 2020, 20, 689–696.

- Kelvin, A.A.; Halperin, S. COVID-19 in children: The link in the transmission chain. Lancet Infect. Dis. 2020, 20, 633–634.

- Zhou, F.; Yu, T.; Du, R.; Fan, G.; Liu, Y.; Liu, Z.; Xiang, J.; Wang, Y.; Song, B.; Gu, X. Clinical course and risk factors for mortality of adult inpatients with COVID-19 in Wuhan, China: A retrospective cohort study. Lancet 2020, 395, 1054–1062.

- Mehra, M.R.; Ruschitzka, F. COVID-19 Illness and Heart Failure: A Missing Link? JACC Heart Fail. 2020, 8, 512–514.

- Sun, Q.; Qiu, H.; Huang, M.; Yang, Y. Lower mortality of COVID-19 by early recognition and intervention: Experience from Jiangsu Province. Ann. Intensive Care 2020, 10, 1–4.

- Turner, A.J.; Hiscox, J.A.; Hooper, N.M. ACE2: From vasopeptidase to SARS virus receptor. Trends Pharmacol. Sci. 2004, 25, 291–294.

- Keidar, S.; Kaplan, M.; Gamliel-Lazarovich, A. ACE2 of the heart: From angiotensin I to angiotensin (1–7). Cardiovasc. Res. 2007, 73, 463–469.

- Sun, P.; Lu, X.; Xu, C.; Sun, W.; Pan, B. Understanding of COVID-19 based on current evidence. J. Med. Virol. 2020, 92, 548–551.

- Huang, C.; Wang, Y.; Li, X.; Ren, L.; Zhao, J.; Hu, Y.; Zhang, L.; Fan, G.; Xu, J.; Gu, X. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet 2020, 395, 497–506.

- Zheng, Y.-Y.; Ma, Y.-T.; Zhang, J.-Y.; Xie, X. COVID-19 and the cardiovascular system. Nat. Rev. Cardiol. 2020, 17, 259–260.

- COVID-19 Testing in Australia—Information for Health Professionals. Therapeutic Goods Administration, Department of Health, Australian Government. Available online: https://www.tga.gov.au/node/904153 (accessed on 2 April 2020).

- Ai, T.; Yang, Z.; Hou, H.; Zhan, C.; Chen, C.; Lv, W.; Tao, Q.; Sun, Z.; Xia, L. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases. Radiology 2020, 296, E32–E40.

- Cascella, M.; Rajnik, M.; Cuomo, A.; Dulebohn, S.C.; Di Napoli, R. Features, evaluation and treatment coronavirus (COVID-19). In StatPearls [Internet]; National Center for Biotechnology Information, U.S. National Library of Medicine, StatPearls Publishing: Treasure Island, FL, USA, 2020.

- Body Temperature Norms. MedlinePlus (Internet). Bethesda (MD): National Library of Medicine (US). Available online: https://medlineplus.gov/ency/article/001982.htm (accessed on 31 March 2020).

- Updated WHO Advice for International Traffic in Relation to the Outbreak of the Novel Coronavirus 2019-nCoV. World Health Organization. Available online: https://www.who.int/ith/2020-24-01-outbreak-of-Pneumonia-caused-by-new-coronavirus/en/ (accessed on 2 April 2020).

- Bwire, G.M.; Paulo, L.S. Coronavirus disease-2019: Is fever an adequate screening for the returning travelers? Trop. Med. Health 2020, 48, 1–3.

- Du, Z.; Xu, X.; Wu, Y.; Wang, L.; Cowling, B.J.; Meyers, L.A. The serial interval of COVID-19 from publicly reported confirmed cases. medRxiv 2020, 10, 19-20025452.

- Bai, Y.; Yao, L.; Wei, T.; Tian, F.; Jin, D.-Y.; Chen, L.; Wang, M. Presumed asymptomatic carrier transmission of COVID-19. JAMA 2020, 323, 1406–1407.

- Li, K.; Wang, S.; Du, C.; Huang, Y.; Feng, X.; Zhou, F. Accurate fatigue detection based on multiple facial morphological features. J. Sens. 2019, 2019, 1–10.

- Wu, P.; Duan, F.; Luo, C.; Liu, Q.; Qu, X.; Liang, L.; Wu, K. Characteristics of Ocular Findings of Patients With Coronavirus Disease 2019 (COVID-19) in Hubei Province, China. JAMA Ophthalmol. 2020, 138, 575–578.

- Cretikos, M.A.; Bellomo, R.; Hillman, K.; Chen, J.; Finfer, S.; Flabouris, A. Respiratory rate: The neglected vital sign. Med. J. Aust. 2008, 188, 657–659.

- Wu, Z.; McGoogan, J.M. Characteristics of and important lessons from the coronavirus disease 2019 (COVID-19) outbreak in China: Summary of a report of 72 314 cases from the Chinese Center for Disease Control and Prevention. JAMA 2020, 323, 1239–1242.

- Karjalainen, J.; Viitasalo, M. Fever and cardiac rhythm. Arch. Intern. Med. 1986, 146, 1169–1171.

- Khanam, F.-T.-Z.; Al-Naji, A.; Chahl, J. Remote Monitoring of Vital Signs in Diverse Non-Clinical and Clinical Scenarios Using Computer Vision Systems: A Review. Appl. Sci. 2019, 9, 4474.

- Chen, X.; Cheng, J.; Song, R.; Liu, Y.; Ward, R.; Wang, Z.J. Video-Based Heart Rate Measurement: Recent Advances and Future Prospects. IEEE Trans. Instrum. Meas. 2018, 68, 3600–3615.

- Al-Naji, A.; Gibson, K.; Lee, S.-H.; Chahl, J. Monitoring of cardiorespiratory signal: Principles of remote measurements and review of methods. IEEE Access 2017, 5, 15776–15790.

- Jasti, N.; Bista, S.; Bhargav, H.; Sinha, S.; Gupta, S.; Chaturvedi, S.; Gangadhar, B. Medical Applications of Infrared Thermography: A Narrative Review. J. Stem Cells 2019, 14, 35–53.

- Gade, R.; Moeslund, T.B. Thermal cameras and applications: A survey. Mach. Vis. Appl. 2014, 25, 245–262.

- Lloyd, C. How Does Thermal Imaging Work? Available online: https://www.howtogeek.com/294076/how-does-thermal-imaging-work/ (accessed on 10 December 2020).

- Bouzida, N.; Bendada, A.; Maldague, X.P. Visualization of body thermoregulation by infrared imaging. J. Therm. Biol. 2009, 34, 120–126.

- Thermal Imaging for Detecting Elevated Body Temperature. FLIR Systems. Available online: https://www.flir.com.au/discover/public-safety/thermal-imaging-for-detecting-elevated-body-temperature (accessed on 22 May 2020).

- Ng, E.Y.; Kaw, G.; Chang, W. Analysis of IR thermal imager for mass blind fever screening. Microvasc. Res. 2004, 68, 104–109.

- Bilodeau, G.-A.; Torabi, A.; Lévesque, M.; Ouellet, C.; Langlois, J.P.; Lema, P.; Carmant, L. Body temperature estimation of a moving subject from thermographic images. Mach. Vis. Appl. 2012, 23, 299–311.

- Aubakir, B.; Nurimbetov, B.; Tursynbek, I.; Varol, H.A. Vital Sign Monitoring Utilizing Eulerian Video Magnification and Thermography. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 3527–3530.

- Sharma, A.; Yadav, A.R. Image Processing Based Body Temperature Estimation Using Thermal Video Sequence. In Proceedings of the 2017 International Conference on Computing Methodologies and Communication (ICCMC), Erode, India, 18–19 July 2017; pp. 846–852.

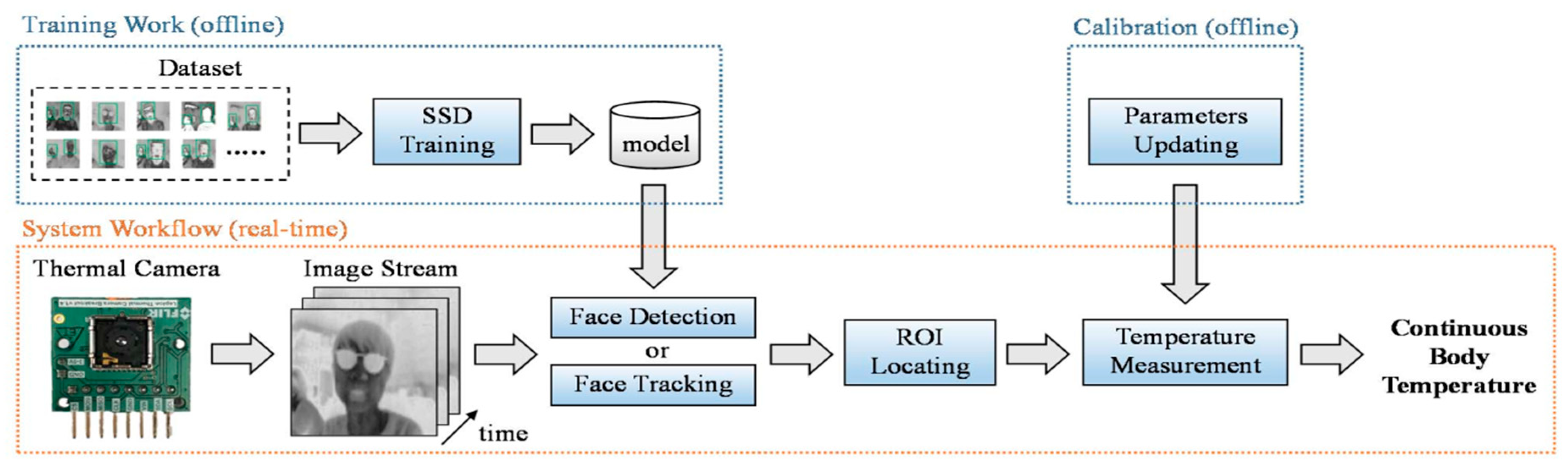

- Lin, J.-W.; Lu, M.-H.; Lin, Y.-H. A Thermal Camera Based Continuous Body Temperature Measurement System. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, South Korea, 27–28 October 2019; pp. 1681–1687.

- Sumriddetchkajorn, S.; Chaitavon, K. Field test studies of our infrared-based human temperature screening system embedded with a parallel measurement approach. Infrared Phys. Technol. 2009, 52, 119–123.

- Silawan, N.; Kusukame, K.; Kek, K.J.; Kuan, W.S. A Novel Environment-Invariant Core Body Temperature Estimation for High Sensitivity and Specificity Fever Screening. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; pp. 1612–1615.

- Thomas, J.B.; Pahler, L.; Handy, R.; Thiese, M.S.; Schaefer, C. Pilot study predicting core body temperatures in hot work environments using thermal imagery. J. Chem. Health Saf. 2019, 26, 75–83.

- Yang, M.; Liu, Q.; Turner, T.; Wu, Y. Vital Sign Estimation from Passive Thermal Video. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Murthy, R.; Pavlidis, I. Noncontact measurement of breathing function. IEEE Eng. Med. Biol. Mag. 2006, 25, 57–67.

- Murthy, R.; Pavlidis, I.; Tsiamyrtzis, P. Touchless Monitoring of Breathing Function. In Proceedings of the 26th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Francisco, CA, USA, 1–5 September 2004; pp. 1196–1199.

- Fei, J.; Pavlidis, I. Analysis of Breathing Air Flow Patterns in Thermal Imaging. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 946–952.

- Fei, J.; Zhu, Z.; Pavlidis, I. Imaging Breathing Rate in the CO2 Absorption Band. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 17–18 January 2006; pp. 700–705.

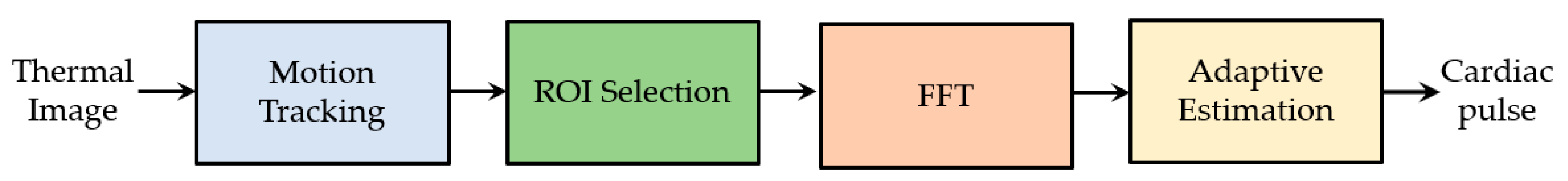

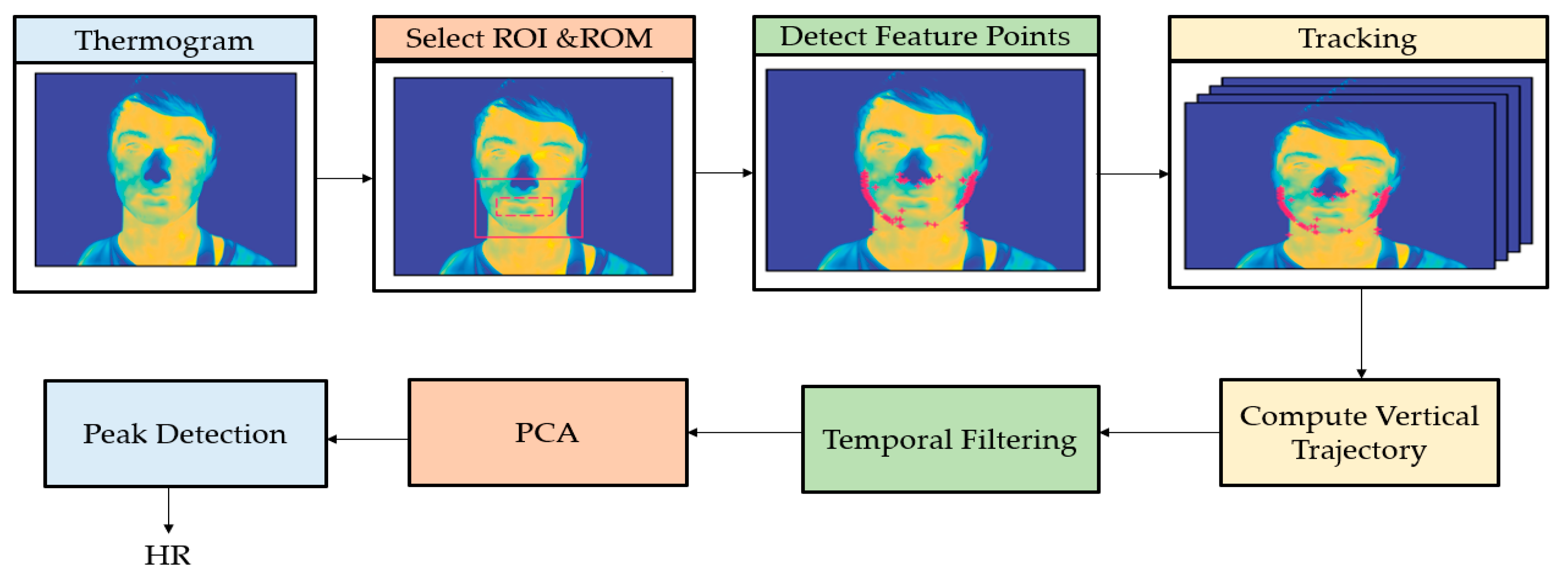

- Sun, N.; Garbey, M.; Merla, A.; Pavlidis, I. Imaging the Cardiovascular Pulse. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 416–421.

- Garbey, M.; Sun, N.; Merla, A.; Pavlidis, I. Contact-free measurement of cardiac pulse based on the analysis of thermal imagery. IEEE Trans. Biomed. Eng. 2007, 54, 1418–1426.

- Chekmenev, S.Y.; Rara, H.; Farag, A.A. Non-Contact, Wavelet-Based Measurement of Vital Signs Using Thermal Imaging. In Proceedings of the First International Conference on Graphics, Vision, and Image Processing (GVIP), Cairo, Egypt, 17–22 June 2007; pp. 107–112.

- Fei, J.; Pavlidis, I. Thermistor at a distance: Unobtrusive measurement of breathing. IEEE Trans. Biomed. Eng. 2009, 57, 988–998.

- Shakhih, M.F.M.; Wahab, A.A.; Salim, M.I.M. Assessment of inspiration and expiration time using infrared thermal imaging modality. Infrared Phys. Technol. 2019, 99, 129–139.

- Pereira, C.B.; Yu, X.; Czaplik, M.; Rossaint, R.; Blazek, V.; Leonhardt, S. Remote monitoring of breathing dynamics using infrared thermography. Biomed. Opt. Express 2015, 6, 4378–4394.

- Barbosa Pereira, C.; Czaplik, M.; Blazek, V.; Leonhardt, S.; Teichmann, D. Monitoring of cardiorespiratory signals using thermal imaging: A pilot study on healthy human subjects. Sensors 2018, 18, 1541.

- Pereira, C.B.; Yu, X.; Blazek, V.; Venema, B.; Leonhardt, S. Multisensor Data Fusion for Enhanced Respiratory Rate Estimation in Thermal Videos. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1381–1384.

- Abbas, A.K.; Heimann, K.; Jergus, K.; Orlikowsky, T.; Leonhardt, S. Neonatal non-contact respiratory monitoring based on real-time infrared thermography. Biomed. Eng. Online 2011, 10, 93.

- Pereira, C.B.; Heimann, K.; Venema, B.; Blazek, V.; Czaplik, M.; Leonhardt, S. Estimation of Respiratory Rate from Thermal Videos of Preterm Infants. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017; pp. 3818–3821.

- Pereira, C.B.; Yu, X.; Goos, T.; Reiss, I.; Orlikowsky, T.; Heimann, K.; Venema, B.; Blazek, V.; Leonhardt, S.; Teichmann, D. Noncontact monitoring of respiratory rate in newborn infants using thermal imaging. IEEE Trans. Biomed. Eng. 2018, 66, 1105–1114.

- Berg, A. Detection and Tracking in Thermal Infrared Imagery; Linköping University Electronic Press: Linköping, Sweden, 2016; p. 66. Available online: https://spie.org/Publications/Proceedings/Volume/11399?SSO=1 (accessed on 5 February 2021).

- Haghmohammadi, H.F.; Necsulescu, D.; Vahidi, M. Remote Measurement of Body Temperature for an Indoor Moving Crowd. In Proceedings of the 2018 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 24–26 May 2018; pp. 1–6.

- Alkali, A.H. Evaluation of thresholding based noncontact respiration rate monitoring using thermal imaging. Evaluation 2017, 6, 50–58.

- Kopaczka, M.; Nestler, J.; Merhof, D. Face detection in thermal infrared images: A comparison of algorithm- and machine-learning-based approaches. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Antwerp, Belgium, 18–21 September 2017; pp. 518–529.

- Palmero, C.; Clapés, A.; Bahnsen, C.; Møgelmose, A.; Moeslund, T.B.; Escalera, S. Multi-modal RGB–depth–thermal human body segmentation. Int. J. Comput. Vis. 2016, 118, 217–239.

- Kezebou, L.; Oludare, V.; Panetta, K.; Agaian, S. TR-GAN: Thermal to RGB Face Synthesis with Generative Adversarial Network for Cross-Modal Face Recognition. In Proceedings of the Mobile Multimedia/Image Processing, Security, and Applications 2020; p. 113990. Available online: https://spie.org/Publications/Proceedings/Volume/11399 (accessed on 5 February 2021).

- Chen, L.; Hu, M.; Liu, N.; Zhai, G.; Yang, S.X. Collaborative use of RGB and thermal imaging for remote breathing rate measurement under realistic conditions. Infrared Phys. Technol. 2020, 111, 103504.

- Iranmanesh, S.M.; Dabouei, A.; Kazemi, H.; Nasrabadi, N.M. Deep Cross Polarimetric Thermal-To-Visible Face Recognition. In Proceedings of the 2018 International Conference on Biometrics (ICB), Gold Coast, QLD, Australia, 20–23 February 2018; pp. 166–173.

- Zhang, T.; Wiliem, A.; Yang, S.; Lovell, B. TV-GAN: Generative Adversarial Network Based Thermal to Visible Face Recognition. In Proceedings of the 2018 International Conference on Biometrics (ICB), Gold Coast, QLD, Australia, 20–23 February 2018; pp. 174–181.

- Hu, S.; Short, N.J.; Riggan, B.S.; Gordon, C.; Gurton, K.P.; Thielke, M.; Gurram, P.; Chan, A.L. A Polarimetric Thermal Database for Face Recognition Research. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 119–126.

- Setjo, C.H.; Achmad, B. Thermal Image Human Detection Using Haar-Cascade Classifier. In Proceedings of the 2017 7th International Annual Engineering Seminar (InAES), Yogyakarta, Indonesia, 1–2 August 2017; pp. 1–6.

- Ivašić-Kos, M.; Krišto, M.; Pobar, M. Human Detection in Thermal Imaging Using YOLO. In Proceedings of the 2019 5th International Conference on Computer and Technology Applications, Istanbul, Turkey, 16–17 April 2019; pp. 20–24.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Akula, A.; Ghosh, R.; Kumar, S.; Sardana, H. WignerMSER: Pseudo-Wigner Distribution Enriched MSER Feature Detector for Object Recognition in Thermal Infrared Images. IEEE Sens. J. 2019, 19, 4221–4228.

- Miethig, B.; Liu, A.; Habibi, S.; Mohrenschildt, M.v. Leveraging Thermal Imaging for Autonomous Driving. In Proceedings of the 2019 IEEE Transportation Electrification Conference and Expo (ITEC), Detroit, MI, USA, 19–21 June 2019; pp. 1–5.

- Agrawal, K.; Subramanian, A. Enhancing Object Detection in Adverse Conditions using Thermal Imaging. arXiv 2019, arXiv:1909.13551.

- Malpani, S.; Asha, C.; Narasimhadhan, A. Thermal Vision Human Classification and Localization Using Bag of Visual Word. In Proceedings of the 2016 IEEE Region 10 Conference (TENCON), Singapore, 22–25 November 2016; pp. 3135–3139.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In European Conference on Computer Vision, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Piniarski, K.; Pawłowski, P. Segmentation of Pedestrians in Thermal Imaging. In Proceedings of the 2018 Baltic URSI Symposium (URSI), Poznan, Poland, 14–17 May 2018; pp. 210–211.

- Sun, Y.; Zuo, W.; Liu, M. RTFNet: RGB-thermal fusion network for semantic segmentation of urban scenes. IEEE Robot. Autom. Lett. 2019, 4, 2576–2583.

- Shivakumar, S.S.; Rodrigues, N.; Zhou, A.; Miller, I.D.; Kumar, V.; Taylor, C.J. PST900: RGB-Thermal Calibration, Dataset and Segmentation Network. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 9441–9447.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Wang, T.; Zhang, T.; Lovell, B.C. EBIT: Weakly-supervised image translation with edge and boundary enhancement. Pattern Recognit. Lett. 2020, 138, 534–539.

- Panetta, K.; Wan, Q.; Agaian, S.; Rajeev, S.; Kamath, S.; Rajendran, R.; Rao, S.; Kaszowska, A.; Taylor, H.; Samani, A. A comprehensive database for benchmarking imaging systems. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 509–520.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. arXiv 2014, arXiv:1406.2661.

- Kniaz, V.V.; Knyaz, V.A.; Hladuvka, J.; Kropatsch, W.G.; Mizginov, V. ThermalGAN: Multimodal Color-to-Thermal Image Translation for Person Re-Identification in Multispectral Dataset. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 1–19.

- Zhang, H.; Patel, V.M.; Riggan, B.S.; Hu, S. Generative Adversarial Network-Based Synthesis of Visible Faces from Polarimetric Thermal Faces. In Proceedings of the 2017 IEEE International Joint Conference on Biometrics (IJCB), Denver, CO, USA, 1–4 October 2017; pp. 100–107.

- Thermal Imaging Systems (Infrared Thermographic Systems/Thermal Imaging Cameras). Available online: https://www.fda.gov/medical-devices/general-hospital-devices-and-supplies/thermal-imaging-systems-infrared-thermographic-systems-thermal-imaging-cameras (accessed on 16 December 2020).

- Enforcement Policy for Telethermographic Systems During the Coronavirus Disease 2019 (COVID-19) Public Health Emergency. Available online: https://www.fda.gov/regulatory-information/search-fda-guidance-documents/enforcement-policy-telethermographic-systems-during-coronavirus-disease-2019-covid-19-public-health (accessed on 16 December 2020).

- Ring, F.J.; Ng, E.; Diakides, M.; Bronzino, J.; Peterson, D. Infrared thermal imaging standards for human fever detection. In Medical Infrared Imaging: Principles and Practices; CRC Press: Boca Raton, FL, USA, 2012; pp. 21–22.

- Ghassemi, P.; Pfefer, T.J.; Casamento, J.P.; Simpson, R.; Wang, Q. Best practices for standardized performance testing of infrared thermographs intended for fever screening. PLoS ONE 2018, 13, e0203302.

- Howell, K.J.; Mercer, J.B.; Smith, R.E. Infrared thermography for mass fever screening: Repeating the mistakes of the past? J. Med. Virol. 2020, 30, 5–6.

- Temperature Screening White Paper. Available online: https://www.een.com/thermal-cameras-for-elevated-body-temperature-whitepaper/ (accessed on 16 December 2020).

- Kumar, A.; Sharma, K.; Singh, H.; Naugriya, S.G.; Gill, S.S.; Buyya, R. A drone-based networked system and methods for combating coronavirus disease (COVID-19) pandemic. Future Gener. Comput. Syst. 2020, 115, 1–19.

- Jeffrey Dastin, K.H. Exclusive: Amazon Deploys Thermal Cameras at Warehouses to Scan for Fevers Faster. Available online: https://www.reuters.com/article/us-health-coronavirus-amazon-com-cameras/exclusive-amazon-deploys-thermal-cameras-at-warehouses-to-scan-for-fevers-faster-idUSKBN2200HT (accessed on 16 December 2020).

- Martín, H. Airports Are Testing Thermal Cameras and Other Technology to Screen Travelers for COVID-19. Available online: https://www.latimes.com/business/story/2020-05-13/airports-test-technology-screen-covid-19 (accessed on 16 December 2020).

- Incheon International Airport Chooses FLIR Thermal Imaging Cameras in Response to the COVID-19 Pandemic. Available online: https://www.flir.com/discover/incheon-international-airport-chooses-flir-thermal-imaging-cameras-in-response-to-the-covid-19-pandemic/ (accessed on 16 December 2020).

- Evans, S. Coronavirus: Canberra Airport Temperature Screening all Passengers and Staff. Available online: https://www.canberratimes.com.au/story/6744912/canberra-airport-ramps-up-covid-19-safety-measures/ (accessed on 16 December 2020).

- Chandran, R. Asian Cities Virus-Proof Public Transit with Smart Shelters, Thermal Scanners. Available online: https://www.weforum.org/agenda/2020/08/asian-cities-virus-proof-public-transit-with-smart-shelters-thermal-scanners/ (accessed on 16 December 2020).

- Temperature Screening for COVID-19 with Thermal Cameras or Scanners Is Increasing More and More in Hospitals, Airports, Public Transport. Available online: https://www.soraxteknoloji.com/en/2020/08/16/temperature-screening-for-covid-19-with-thermal-cameras-or-scanners-is-increasing-more-and-more-in-hospitals-airports-public-transport/ (accessed on 16 December 2020).

- ASU to Fight COVID-19 Spread with Thermal Imaging Tech. Available online: https://www.govtech.com/products/ASU-to-Fight-COVID-19-Spread-with-Thermal-Imaging-Tech.html (accessed on 16 December 2020).

- Gostic, K.M.; Gomez, A.C.; Mummah, R.O.; Kucharski, A.J.; Lloyd-Smith, J.O. Estimated effectiveness of traveller screening to prevent international spread of 2019 novel coronavirus (2019-nCoV). medRxiv 2020, 1–19. Available online: https://www.medrxiv.org/content/10.1101/2020.01.28.20019224v2 (accessed on 5 February 2021).

- Bitar, D.; Goubar, A.; Desenclos, J.-C. International travels and fever screening during epidemics: A literature review on the effectiveness and potential use of non-contact infrared thermometers. Eurosurveillance 2009, 14, 19115.

- Priest, P.C.; Duncan, A.R.; Jennings, L.C.; Baker, M.G. Thermal image scanning for influenza border screening: Results of an airport screening study. PLoS ONE 2011, 6, e14490.

- Tay, M.; Low, Y.; Zhao, X.; Cook, A.; Lee, V. Comparison of Infrared Thermal Detection Systems for mass fever screening in a tropical healthcare setting. Public Health 2015, 129, 1471–1478.

- Perera, A.G.; Khanam, F.-T.-Z.; Al-Naji, A.; Chahl, J. Detection and Localisation of Life Signs from the Air Using Image Registration and Spatio-Temporal Filtering. Remote Sens. 2020, 12, 577.

- Al-Naji, A.; Chahl, J. Remote respiratory monitoring system based on developing motion magnification technique. Biomed. Signal. Process. Control 2016, 29, 1–10.

- Buzzelli, M.; Albé, A.; Ciocca, G. A vision-based system for monitoring elderly people at home. Appl. Sci. 2020, 10, 374.

- Thi, T.H.; Wang, L.; Ye, N.; Zhang, J.; Maurer-Stroh, S.; Cheng, L. Recognizing flu-like symptoms from videos. BMC Bioinform. 2014, 15, 300.

- Kvapilova, L.; Boza, V.; Dubec, P.; Majernik, M.; Bogar, J.; Jamison, J.; Goldsack, J.C.; Kimmel, D.J.; Karlin, D.R. Continuous Sound Collection Using Smartphones and Machine Learning to Measure Cough. Digit. Biomark. 2019, 3, 166–175.

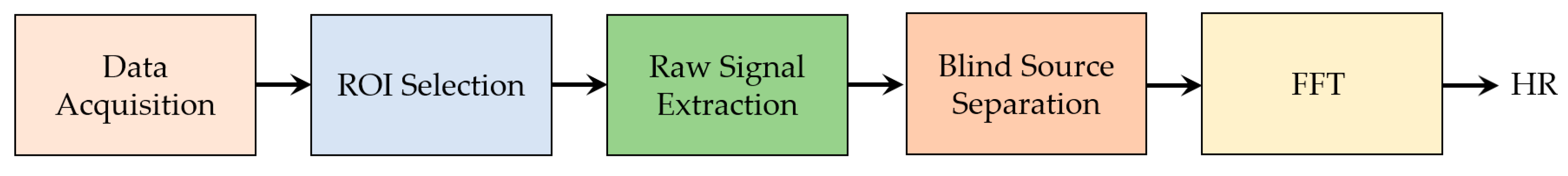

- Poh, M.-Z.; McDuff, D.J.; Picard, R.W. Non-contact, automated cardiac pulse measurements using video imaging and blind source separation. Opt. Express 2010, 18, 10762–10774. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. Cvpr. (1) 2001, 1, 3. [Google Scholar]

- Pursche, T.; Krajewski, J.; Moeller, R. Video-Based Heart Rate Measurement from Human Faces. In Proceedings of the 2012 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 12–15 January 2012; pp. 544–545. [Google Scholar]

- Feng, L.; Po, L.-M.; Xu, X.; Li, Y. Motion Artifacts Suppression for Remote Imaging Photoplethysmography. In Proceedings of the 2014 19th International Conference on Digital Signal Processing, Hong Kong, China, 20–23 August 2014; pp. 18–23. [Google Scholar]

- Lewandowska, M.; Rumiński, J.; Kocejko, T.; Nowak, J. Measuring Pulse Rate with a Webcam—A Non-Contact Method for Evaluating Cardiac Activity. In Proceedings of the 2011 federated conference on computer science and information systems (FedCSIS), Szczecin, Poland, 18–21 September 2011; pp. 405–410. [Google Scholar]

- Bousefsaf, F.; Maaoui, C.; Pruski, A. Continuous wavelet filtering on webcam photoplethysmographic signals to remotely assess the instantaneous heart rate. Biomed. Signal. Process. Control 2013, 8, 568–574. [Google Scholar] [CrossRef]

- Wu, B.-F.; Huang, P.-W.; Tsou, T.-Y.; Lin, T.-M.; Chung, M.-L. Camera-Based Heart Rate Measurement Using Continuous Wavelet transform. In Proceedings of the 2017 International Conference on System Science and Engineering (ICSSE), Ho Chi Minh City, Vietnam, 21–23 July 2017; pp. 7–11. [Google Scholar]

- Wu, B.-F.; Huang, P.-W.; Lin, C.-H.; Chung, M.-L.; Tsou, T.-Y.; Wu, Y.-L. Motion resistant image-photoplethysmography based on spectral peak tracking algorithm. IEEE Access 2018, 6, 21621–21634.

- Feng, L.; Po, L.-M.; Xu, X.; Li, Y.; Ma, R. Motion-resistant remote imaging photoplethysmography based on the optical properties of skin. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 879–891.

- Cheng, J.; Chen, X.; Xu, L.; Wang, Z.J. Illumination variation-resistant video-based heart rate measurement using joint blind source separation and ensemble empirical mode decomposition. IEEE J. Biomed. Health Inform. 2017, 21, 1422–1433.

- Xu, L.; Cheng, J.; Chen, X. Illumination variation interference suppression in remote PPG using PLS and MEMD. Electron. Lett. 2017, 53, 216–218.

- Chen, D.-Y.; Wang, J.-J.; Lin, K.-Y.; Chang, H.-H.; Wu, H.-K.; Chen, Y.-S.; Lee, S.-Y. Image sensor-based heart rate evaluation from face reflectance using Hilbert–Huang transform. IEEE Sens. J. 2015, 15, 618–627.

- Lin, K.-Y.; Chen, D.-Y.; Tsai, W.-J. Face-based heart rate signal decomposition and evaluation using multiple linear regression. IEEE Sens. J. 2016, 16, 1351–1360.

- Lee, D.; Kim, J.; Kwon, S.; Park, K. Heart Rate Estimation from Facial Photoplethysmography during Dynamic Illuminance Changes. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2758–2761.

- Tarassenko, L.; Villarroel, M.; Guazzi, A.; Jorge, J.; Clifton, D.; Pugh, C. Non-contact video-based vital sign monitoring using ambient light and auto-regressive models. Physiol. Meas. 2014, 35, 807.

- Al-Naji, A.; Chahl, J.; Lee, S.-H. Cardiopulmonary signal acquisition from different regions using video imaging analysis. Comput. Methods Biomech. Biomed. Eng. Imaging Vis. 2018, 1–15.

- Aarts, L.A.; Jeanne, V.; Cleary, J.P.; Lieber, C.; Nelson, J.S.; Oetomo, S.B.; Verkruysse, W. Non-contact heart rate monitoring utilizing camera photoplethysmography in the neonatal intensive care unit—A pilot study. Early Hum. Dev. 2013, 89, 943–948.

- Cobos-Torres, J.-C.; Abderrahim, M.; Martínez-Orgado, J. Non-Contact, Simple Neonatal Monitoring by Photoplethysmography. Sensors 2018, 18, 4362.

- Gibson, K.; Al-Naji, A.; Fleet, J.; Steen, M.; Esterman, A.; Chahl, J.; Huynh, J.; Morris, S. Non-contact heart and respiratory rate monitoring of preterm infants based on a computer vision system: A method comparison study. Pediatr. Res. 2019, 86, 738–741.

- De Haan, G.; Jeanne, V. Robust pulse rate from chrominance-based rPPG. IEEE Trans. Biomed. Eng. 2013, 60, 2878–2886.

- De Haan, G.; Van Leest, A. Improved motion robustness of remote-PPG by using the blood volume pulse signature. Physiol. Meas. 2014, 35, 1913.

- Wang, W.; Stuijk, S.; De Haan, G. A novel algorithm for remote photoplethysmography: Spatial subspace rotation. IEEE Trans. Biomed. Eng. 2016, 63, 1974–1984.

- Wang, W.; den Brinker, A.C.; Stuijk, S.; de Haan, G. Algorithmic principles of remote PPG. IEEE Trans. Biomed. Eng. 2017, 64, 1479–1491.

- Wang, W.; den Brinker, A.C.; Stuijk, S.; de Haan, G. Robust heart rate from fitness videos. Physiol. Meas. 2017, 38, 1023.

- Yu, S.; Hu, S.; Azorin-Peris, V.; Chambers, J.A.; Zhu, Y.; Greenwald, S.E. Motion-compensated noncontact imaging photoplethysmography to monitor cardiorespiratory status during exercise. J. Biomed. Opt. 2011, 16, 077010.

- Kwon, S.; Kim, H.; Park, K.S. Validation of Heart Rate Extraction Using Video Imaging on a Built-In Camera System of a Smartphone. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–2 September 2012; pp. 2174–2177.

- Bernacchia, N.; Scalise, L.; Casacanditella, L.; Ercoli, I.; Marchionni, P.; Tomasini, E.P. Non Contact Measurement of Heart and Respiration Rates Based on Kinect™. In Proceedings of the 2014 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Lisbon, Portugal, 11–12 June 2014; pp. 1–5.

- Smilkstein, T.; Buenrostro, M.; Kenyon, A.; Lienemann, M.; Larson, G. Heart Rate Monitoring Using Kinect and Color Amplification. In Proceedings of the 2014 IEEE Healthcare Innovation Conference (HIC), Seattle, WA, USA, 8–10 October 2014; pp. 60–62.

- Gambi, E.; Agostinelli, A.; Belli, A.; Burattini, L.; Cippitelli, E.; Fioretti, S.; Pierleoni, P.; Ricciuti, M.; Sbrollini, A.; Spinsante, S. Heart rate detection using Microsoft Kinect: Validation and comparison to wearable devices. Sensors 2017, 17, 1776.

- Al-Naji, A.; Perera, A.G.; Chahl, J. Remote Measurement of Cardiopulmonary Signal Using an Unmanned Aerial Vehicle. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Zawiercie, Poland, 26–29 September 2018; p. 012001.

- Al-Naji, A.; Chahl, J. Remote Optical Cardiopulmonary Signal Extraction With Noise Artifact Removal, Multiple Subject Detection & Long-Distance. IEEE Access 2018, 6, 11573–11595.

- Gupta, O.; McDuff, D.; Raskar, R. Real-Time Physiological Measurement and Visualization Using a Synchronized Multi-Camera System. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 46–53.

- Hu, M.-H.; Zhai, G.-T.; Li, D.; Fan, Y.-Z.; Chen, X.-H.; Yang, X.-K. Synergetic use of thermal and visible imaging techniques for contactless and unobtrusive breathing measurement. J. Biomed. Opt. 2017, 22, 036006.

- Hu, M.; Zhai, G.; Li, D.; Fan, Y.; Duan, H.; Zhu, W.; Yang, X. Combination of near-infrared and thermal imaging techniques for the remote and simultaneous measurements of breathing and heart rates under sleep situation. PLoS ONE 2018, 13, e0190466.

- Bennett, S.; El Harake, T.N.; Goubran, R.; Knoefel, F. Adaptive Eulerian Video Processing of Thermal Video: An Experimental Analysis. IEEE Trans. Instrum. Meas. 2017, 66, 2516–2524.

- Wieringa, F.P.; Mastik, F.; van der Steen, A.F. Contactless multiple wavelength photoplethysmographic imaging: A first step toward “SpO2 camera” technology. Ann. Biomed. Eng. 2005, 33, 1034–1041.

- Humphreys, K.; Ward, T.; Markham, C. Noncontact simultaneous dual wavelength photoplethysmography: A further step toward noncontact pulse oximetry. Rev. Sci. Instrum. 2007, 78, 044304.

- Humphreys, K.; Ward, T.; Markham, C. A CMOS Camera-Based Pulse Oximetry Imaging System. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference, Shanghai, China, 1–4 September 2005; pp. 3494–3497.

- Kong, L.; Zhao, Y.; Dong, L.; Jian, Y.; Jin, X.; Li, B.; Feng, Y.; Liu, M.; Liu, X.; Wu, H. Non-contact detection of oxygen saturation based on visible light imaging device using ambient light. Opt. Express 2013, 21, 17464–17471.

- Shao, D.; Liu, C.; Tsow, F.; Yang, Y.; Du, Z.; Iriya, R.; Yu, H.; Tao, N. Noncontact monitoring of blood oxygen saturation using camera and dual-wavelength imaging system. IEEE Trans. Biomed. Eng. 2015, 63, 1091–1098.

- Verkruysse, W.; Bartula, M.; Bresch, E.; Rocque, M.; Meftah, M.; Kirenko, I. Calibration of contactless pulse oximetry. Anesth. Analg. 2017, 124, 136.

- Moço, A.; Verkruysse, W. Pulse oximetry based on photoplethysmography imaging with red and green light. J. Clin. Monit. Comput. 2020, 2020, 1–11.

- Van Gastel, M.; Stuijk, S.; De Haan, G. New principle for measuring arterial blood oxygenation, enabling motion-robust remote monitoring. Sci. Rep. 2016, 6, 1–16.

- Bal, U. Non-contact estimation of heart rate and oxygen saturation using ambient light. Biomed. Opt. Express 2015, 6, 86–97.

- Guazzi, A.R.; Villarroel, M.; Jorge, J.; Daly, J.; Frise, M.C.; Robbins, P.A.; Tarassenko, L. Non-contact measurement of oxygen saturation with an RGB camera. Biomed. Opt. Express 2015, 6, 3320–3338.

- Rosa, A.F.; Betini, R.C. Noncontact SpO2 measurement using Eulerian video magnification (EVM). IEEE Trans. Instrum. Meas. 2019, 69, 2120–2130.

- Matos, S.; Birring, S.S.; Pavord, I.D.; Evans, H. Detection of cough signals in continuous audio recordings using hidden Markov models. IEEE Trans. Biomed. Eng. 2006, 53, 1078–1083.

- Coyle, M.A.; Keenan, D.B.; Henderson, L.S.; Watkins, M.L.; Haumann, B.K.; Mayleben, D.W.; Wilson, M.G. Evaluation of an ambulatory system for the quantification of cough frequency in patients with chronic obstructive pulmonary disease. Cough 2005, 1, 3.

- Drugman, T.; Urbain, J.; Dutoit, T. Assessment of Audio Features for Automatic Cough Detection. In Proceedings of the 2011 19th European Signal Processing Conference, Barcelona, Spain, 29 August–2 September 2011; pp. 1289–1293.

- Amoh, J.; Odame, K. Deep neural networks for identifying cough sounds. IEEE Trans. Biomed. Circuits Syst. 2016, 10, 1003–1011.

- Hoyos-Barcelo, C.; Monge-Alvarez, J.; Shakir, M.Z.; Alcaraz-Calero, J.-M.; Casaseca-de-La-Higuera, P. Efficient k-NN implementation for real-time detection of cough events in smartphones. IEEE J. Biomed. Health Inform. 2017, 22, 1662–1671.

- Ahmed, M.Y.; Rahman, M.M.; Kuang, J. DeepLung: Smartphone Convolutional Neural Network-based Inference of Lung Anomalies for Pulmonary Patients. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019; pp. 2335–2339.

- Casaseca-de-la-Higuera, P.; Lesso, P.; McKinstry, B.; Pinnock, H.; Rabinovich, R.; McCloughan, L.; Monge-Álvarez, J. Effect of Downsampling and Compressive Sensing on Audio-Based Continuous Cough Monitoring. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 6231–6235.

- Larson, E.C.; Lee, T.; Liu, S.; Rosenfeld, M.; Patel, S.N. Accurate and Privacy Preserving Cough Sensing Using a Low-Cost Microphone. In Proceedings of the 13th International Conference on Ubiquitous Computing, Beijing, China, 17–21 September 2011; pp. 375–384.

- Monge-Álvarez, J.; Hoyos-Barceló, C.; San-José-Revuelta, L.M.; Casaseca-de-la-Higuera, P. A machine hearing system for robust cough detection based on a high-level representation of band-specific audio features. IEEE Trans. Biomed. Eng. 2018, 66, 2319–2330.

- Barata, F.; Kipfer, K.; Weber, M.; Tinschert, P.; Fleisch, E.; Kowatsch, T. Towards Device-Agnostic Mobile Cough Detection with Convolutional Neural Networks. In Proceedings of the 2019 IEEE International Conference on Healthcare Informatics (ICHI), Xi’an, China, 10–13 June 2019; pp. 1–11.

- Smith, J. Ambulatory methods for recording cough. Pulm. Pharmacol. Ther. 2007, 20, 313–318.

- You, M.; Liu, Z.; Chen, C.; Liu, J.; Xu, X.-H.; Qiu, Z.-M. Cough detection by ensembling multiple frequency subband features. Biomed. Signal Process. Control 2017, 33, 132–140.

- Sterling, M.; Rhee, H.; Bocko, M. Automated cough assessment on a mobile platform. J. Med. Eng. 2014, 2014, 1–9.

- Krajnik, M.; Damps-Konstanska, I.; Gorska, L.; Jassem, E. A portable automatic cough analyser in the ambulatory assessment of cough. Biomed. Eng. Online 2010, 9, 17.

- You, M.; Wang, H.; Liu, Z.; Chen, C.; Liu, J.; Xu, X.-H.; Qiu, Z.-M. Novel feature extraction method for cough detection using NMF. IET Signal Process. 2017, 11, 515–520.

- Wang, H.-H.; Liu, J.-M.; You, M.; Li, G.-Z. Audio Signals Encoding for Cough Classification Using Convolutional Neural Networks: A Comparative Study. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; pp. 442–445.

- Sharan, R.V.; Abeyratne, U.R.; Swarnkar, V.R.; Porter, P. Automatic croup diagnosis using cough sound recognition. IEEE Trans. Biomed. Eng. 2018, 66, 485–495.

- Salamon, J.; Bello, J.P. Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Process. Lett. 2017, 24, 279–283.

- Tschannen, M.; Kramer, T.; Marti, G.; Heinzmann, M.; Wiatowski, T. Heart Sound Classification Using Deep Structured Features. In Proceedings of the 2016 Computing in Cardiology Conference (CinC), Vancouver, BC, Canada, 11–14 September 2016; pp. 565–568.

- Amiriparian, S.; Gerczuk, M.; Ottl, S.; Cummins, N.; Freitag, M.; Pugachevskiy, S.; Baird, A.; Schuller, B.W. Snore Sound Classification Using Image-Based Deep Spectrum Features. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 3512–3516.

- Kahl, S.; Wilhelm-Stein, T.; Hussein, H.; Klinck, H.; Kowerko, D.; Ritter, M.; Eibl, M. Large-Scale Bird Sound Classification using Convolutional Neural Networks. In Proceedings of the CLEF 2017 (Working Notes), Dublin, Ireland, 11–14 September 2017.

- Sak, H.; Senior, A.; Beaufays, F. Long short-term memory based recurrent neural network architectures for large vocabulary speech recognition. arXiv 2014, arXiv:1402.1128.

- Murata, A.; Taniguchi, Y.; Hashimoto, Y.; Kaneko, Y.; Takasaki, Y.; Kudoh, S. Discrimination of productive and non-productive cough by sound analysis. Intern. Med. 1998, 37, 732–735.

- Shahroudy, A.; Liu, J.; Ng, T.-T.; Wang, G. NTU RGB+D: A Large Scale Dataset for 3D Human Activity Analysis. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- Rahmani, H.; Mahmood, A.; Huynh, D.; Mian, A. Histogram of oriented principal components for cross-view action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2430–2443.

- Smith, J.A.; Earis, J.E.; Woodcock, A.A. Establishing a gold standard for manual cough counting: Video versus digital audio recordings. Cough 2006, 2, 6.

- Protalinski, E. How Draganfly Brought a ‘Pandemic Drone’ to the U.S. Available online: https://venturebeat.com/2020/04/30/draganfly-pandemic-drone-united-states-pilots/ (accessed on 8 May 2020).

- McNabb, M. This “Pandemic Drone” Can See Who Has a Temperature—Or Coughs. Available online: https://dronelife.com/2020/03/31/this-pandemic-drone-can-see-who-has-a-temperature-or-coughs/ (accessed on 8 May 2020).

| Ref | Sensor Used | ROI | Used Technique | Temperature Measured |
|---|---|---|---|---|
| Bilodeau et al. [41] | 7.5–13 μm, LWIR (FLIR ThermoVision A40M) | Face | Particle filter, Kalman filter | - |
| Aubakir et al. [42] | 8–14 μm, LWIR (FLIR Lepton 2.5) | Forehead | Viola–Jones method | 34.95 °C to 37.00 °C |
| Sharma et al. [43] | 7.5–13 μm, LWIR (FLIR X63900); NIR (CP-PLUS CP-USC-TAL2) | Face | Viola–Jones method | 29.45 °C to 32.82 °C |
| Lin et al. [44] | 8–14 μm, LWIR (FLIR Lepton 2.5); 8–14 μm, LWIR (Keysight U5855A) | Forehead | Deep learning | 27 °C to 37.5 °C |
| Sumriddetchkajorn et al. [45] | 7.5–13 μm, LWIR (FLIR ThermoVision A40M) | Face | Image filtering, particle analysis | 35 °C to 40 °C |
| Silawan et al. [46] | 8–14 μm, LWIR (Optris PI450) | Forehead, mouth, cheek | Multiple data comparison | 36.0 °C to 39.5 °C |
| Thomas et al. [47] | 7.5–14 μm, LWIR (Fluke TiS65) | Face | Linear regression | 34 °C to 41 °C |
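Thomas et al. [47] in the table above list linear regression as the technique that maps thermal-camera readings to temperature. The sketch below shows a minimal ordinary least-squares calibration of that general kind; it is not their published model. It assumes paired readings of surface skin temperature (for example, from a forehead ROI) and a reference thermometer are available, and the function names and sample values are hypothetical.

```python
from statistics import fmean


def fit_linear_calibration(skin_c, reference_c):
    """Ordinary least-squares fit of reference ~= slope * skin + intercept.

    skin_c and reference_c are paired readings in degrees Celsius
    (hypothetical inputs; no values come from the cited studies).
    """
    if len(skin_c) != len(reference_c) or len(skin_c) < 2:
        raise ValueError("need at least two paired readings")
    mean_x, mean_y = fmean(skin_c), fmean(reference_c)
    # Sum of squared deviations of the skin readings.
    sxx = sum((x - mean_x) ** 2 for x in skin_c)
    if sxx == 0:
        raise ValueError("skin readings must not all be identical")
    # Cross-deviation between skin and reference readings.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(skin_c, reference_c))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def apply_calibration(skin_temp_c, slope, intercept):
    """Map a new skin reading onto the reference temperature scale."""
    return slope * skin_temp_c + intercept


# Hypothetical paired readings: forehead skin vs. reference thermometer.
skin = [34.0, 35.0, 36.0]
reference = [36.0, 36.5, 37.0]
slope, intercept = fit_linear_calibration(skin, reference)
print(slope, intercept)
print(apply_calibration(35.5, slope, intercept))
```

Because the fit is learned from paired data, the same two functions work whatever ROI or camera produces the skin reading; only the training pairs change.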

| Ref | Sensor Used | Vital Signs | ROI | Used Technique | Result |
|---|---|---|---|---|---|
| Murthy et al. [50] | 3–5 μm, MWIR (hardware unspecified) | BR | Nose | Advanced statistical algorithm | Accuracy = 98.5% |
| Fei et al. [51,52] | 3–5 μm, MWIR (FLIR model unspecified); 3–5 μm, MWIR (Indigo Systems model unspecified) | BR | Nose | Optical bandpass filter | - |
| Sun et al. [53] | MWIR (Indigo Systems model unspecified) | HR | Forehead, neck and wrist | FFT | PCC = 0.994 |
| Garbey et al. [54] | MWIR (Indigo Systems model unspecified) | HR | Forehead, neck and wrist | FFT | CAND = 88.52% |
| Chekmenev et al. [55] | LWIR (FLIR model unspecified) | HR and BR | Face and neck | CWT | - |
| Fei et al. [56] | 3–5 μm, MWIR (FLIR SC6000) | BR | Nose | CWT | CAND = 98.27% |
| Shakhih et al. [57] | 7–14 μm, LWIR (Infrared Cameras Inc. 7640 P-series) | TI and TE | Nose | Mean pixel intensity | PCC = 0.796 (TI), 0.961 (TE) |
| Pereira et al. [58] | 7.5–14 μm, LWIR (VarioCAM R HD head 820S/30) | BR | Nose | Particle filter framework and temporal filtering | MAE = 0.33, 0.55 and 0.96 breaths/min |
| Pereira et al. [59] | 2–5.5 μm, MWIR (InfraTec 9300) | HR and BR | Head and nose | Particle filter framework, temporal filtering and PCA | RMSE = 3 bpm (HR), 0.7 breaths/min (BR) |
| Pereira et al. [60] | 7.5–14 μm, LWIR (VarioCAM R HD head 820S/30) | BR | Nose, mouth, shoulders | Particle filter framework and signal fusion | RMSE = 0.24, 0.89 breaths/min |
| Abbas et al. [61] | 1–14 μm, LWIR (VarioCAM HR head) | BR | Nose | CWT | - |
| Pereira et al. [62] | 7.5–14 μm, LWIR (VarioCAM R HD head 820S/30) | BR | Nose | Particle filter framework and temporal filtering | Relative error = 3.42% |
| Pereira et al. [63] | 7.5–14 μm, LWIR (VarioCAM R HD head 820S/30) | BR | - | Black-box | RMSE = 4.15 ± 1.44 breaths/min |
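Several entries above (for example, Sun et al. [53] and Garbey et al. [54]) estimate a rate by locating the dominant frequency of a signal taken from an ROI, and Shakhih et al. [57] work from the mean pixel intensity of the nose region. A minimal sketch of that idea follows, assuming the ROI has already been located and its mean intensity sampled at a fixed frame rate. A naive discrete Fourier transform evaluated on a fine frequency grid stands in for the FFT, and the default 0.1–0.7 Hz breathing band (6–42 breaths/min) is an assumed search range, not a value taken from the cited studies; the function name is hypothetical.

```python
import math


def dominant_rate_per_min(signal, fs_hz, f_lo_hz=0.1, f_hi_hz=0.7, step_hz=0.005):
    """Return the frequency with the largest spectral power in [f_lo_hz, f_hi_hz],
    expressed in cycles per minute (breaths/min for a breathing signal).

    The DFT is evaluated directly on a regular frequency grid, which costs
    O(N * grid points); it is meant for illustration, not real-time use.
    """
    n = len(signal)
    if n < 2 or fs_hz <= 0 or not 0 <= f_lo_hz < f_hi_hz:
        raise ValueError("invalid signal or frequency band")
    mean = sum(signal) / n
    centred = [s - mean for s in signal]  # remove the DC level of the ROI intensity
    n_steps = int(round((f_hi_hz - f_lo_hz) / step_hz))
    best_f, best_power = f_lo_hz, -1.0
    for i in range(n_steps + 1):
        f = f_lo_hz + i * step_hz
        w = 2.0 * math.pi * f / fs_hz  # angular frequency in radians per sample
        re = sum(v * math.cos(w * k) for k, v in enumerate(centred))
        im = sum(v * math.sin(w * k) for k, v in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f * 60.0


# Synthetic nostril-ROI trace: 15 breaths/min (0.25 Hz) sampled at 10 frames/s
# for 60 s, with a small linear drift standing in for gradual warming.
fs = 10.0
trace = [30.0 + 0.2 * math.sin(2 * math.pi * 0.25 * k / fs) + 0.0002 * k for k in range(600)]
print(round(dominant_rate_per_min(trace, fs), 1))
```

For a heart-rate signal the same function could be called with a cardiac band such as 0.7–4 Hz (42–240 bpm); those bounds are likewise an assumption. In practice an FFT library would replace the direct DFT loop.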

| Ref | Sensor Used | Vital Signs | ROI | Used Technique | Results |
|---|---|---|---|---|---|
| Poh et al. [116] | Webcam | HR | Face | ICA | PCC = 0.95, RMSE = 4.63 bpm |
| Pursche et al. [118] | Webcam | HR | Forehead, nose and mouth | ICA | - |
| Feng et al. [119] | Webcam | HR | Forehead | ICA | PCC = 0.99 |
| Lewandowska et al. [120] | Webcam | HR | Face and forehead | PCA | - |
| Bousefsaf et al. [121] | Webcam | HR | Face | CWT | - |
| Wu et al. [122] | Webcam | HR | Face | CWT | SNR = −3.01 dB |
| Wu et al. [123] | Webcam | HR | Cheeks | MRSPT | RMSE = 6.44 bpm |
| Feng et al. [124] | Webcam | HR | Cheeks | GRD | PCC = 1 |
| Cheng et al. [125] | Webcam | HR | Face | JBSS + EEMD | PCC = 0.91 |
| Xu et al. [126] | Webcam | HR | Face | PLS + MEMD | PCC = 0.81 |
| Chen et al. [127] | Digital camera | HR | Brow area | EEMD | PCC = 0.91 |
| Lin et al. [128] | Digital camera | HR | Brow area | EEMD + MLR | PCC = 0.96 |
| Lee et al. [129] | Digital camera | HR | Cheek | MOCF | RMSE = 1.8 bpm |
| Tarassenko et al. [130] | Digital camera | HR, RR, SpO2 | Forehead and cheek | AR modelling and pole cancellation | MAE = 3 bpm |
| Al-Naji et al. [131] | Digital camera | HR and RR | Face, palm, wrist, arm, neck, leg, forehead, head and chest | EEMD + ICA | PCC = 0.96, RMSE = 3.52 |
| Aarts et al. [132] | Digital camera | HR | Face and cheek | JFTD | - |
| Cobos-Torres et al. [133] | Digital camera | HR | Abdominal area | Stack FIFO | PCC = 0.94 |
| Gibson et al. [134] | Digital camera | HR and RR | Face and chest | EVM | Mean bias = 4.5 bpm |
| De Haan et al. [135] | CCD | HR | Face | CHROM | PCC = 1, RMSE = 0.5 |
| De Haan et al. [136] | CCD | HR | Face | PBV | PCC = 0.99, RMSE = 0.64 |
| Wang et al. [137] | CCD | HR | Face and forehead | 2SR | PCC = 0.94 |
| Wang et al. [138] | CCD | HR | Face | POS | SNR = 5.16 dB |
| Wang et al. [139] | CCD | HR | Face | Sub-band decomposition | SNR = 4.77 dB |
| Yu et al. [140] | CMOS | HR and RR | Palm and face | SCICA | PCC = 0.9 |
| Kwon et al. [141] | Smartphone | HR | Face | ICA | MAE = 1.47 bpm |
| Bernacchia et al. [142] | Microsoft Kinect | HR and RR | Neck, thorax and abdominal area | ICA | PCC = 0.91 |
| Smilkstein et al. [143] | Microsoft Kinect | HR | Face | EVM | - |
| Gambi et al. [144] | Microsoft Kinect | HR | Forehead, cheeks, neck | EVM | RMSE = 2.2 bpm |
| Al-Naji et al. [145] | UAV | HR and RR | Face | CEEMD + ICA | PCC = 0.99, RMSE = 0.7 bpm |
| Al-Naji et al. [146] | Digital camera, UAV | HR and RR | Face and forehead | CEEMDAN + CCA | PCC = 0.99, RMSE = 0.89 bpm |
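Among the methods in the table above, CHROM (De Haan and Jeanne [135]) combines colour channels so that intensity changes common to all channels cancel while the pulse remains. The sketch below is a simplified version, assuming spatially averaged R, G and B traces from a skin ROI are already available. The two projection formulas follow the published CHROM formulation, but the band-pass filtering and windowed overlap-add of the original are omitted, and the function name is hypothetical.

```python
from statistics import fmean, pstdev


def chrom_pulse(red, green, blue):
    """Simplified CHROM projection of spatially averaged skin-ROI colour traces.

    Returns S = X - alpha * Y, with X = 3Rn - 2Gn and Y = 1.5Rn + Gn - 1.5Bn,
    where Rn, Gn, Bn are the channels divided by their temporal means and
    alpha = std(X) / std(Y). No band-pass filtering is applied here.
    """
    if not (len(red) == len(green) == len(blue)) or len(red) < 2:
        raise ValueError("need three equal-length traces of at least two samples")
    # Normalise each channel by its temporal mean so that skin tone and the
    # overall illumination level drop out.
    mr, mg, mb = fmean(red), fmean(green), fmean(blue)
    rn = [v / mr for v in red]
    gn = [v / mg for v in green]
    bn = [v / mb for v in blue]
    # The coefficients of X and of Y each sum to 1, so a change shared by all
    # normalised channels appears identically in both chrominance signals.
    xs = [3.0 * r - 2.0 * g for r, g in zip(rn, gn)]
    ys = [1.5 * r + g - 1.5 * b for r, g, b in zip(rn, gn, bn)]
    sd_y = pstdev(ys)
    alpha = pstdev(xs) / sd_y if sd_y > 0 else 0.0
    # Subtracting the scaled Y cancels that shared component.
    return [x - alpha * y for x, y in zip(xs, ys)]


# Hypothetical traces: a green-channel pulse on otherwise constant channels.
modulation = [0.0, 0.01, -0.01, 0.02, -0.02, 0.0]
pulse = chrom_pulse([100.0] * 6, [80.0 * (1 + m) for m in modulation], [60.0] * 6)
print([round(v, 4) for v in pulse])
```

With a purely common intensity change (all three channels scaled by the same factor) the output is zero up to rounding, which is the property that makes the chrominance projection more robust to illumination changes than a single green channel.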

| Ref | Sensor Used | Vital Signs | ROI | Used Technique | Result |
|---|---|---|---|---|---|
| Gupta et al. [147] | RGB, monochrome and thermal camera | HR and HRV | Cheeks and forehead | ICA | Error = 4.62% |
| Hu et al. [148] | RGB and thermal camera | BR | Nose and mouth | Viola–Jones algorithm together with the screening technique | LCC = 0.971 |
| Hu et al. [149] | RGB infrared and thermal camera | HR and BR | Mouth and nose regions | Moving average filter | LCC = 0.831 (BR), LCC = 0.933 (HR) |
| Bennett et al. [150] | Thermal and digital camera | HR and blood perfusion | Face and arm | EVM | - |
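The tables report agreement with reference devices using several metrics: PCC (and LCC, assumed here to denote the same Pearson linear correlation coefficient), RMSE, MAE and mean bias (for example, Gibson et al. [134]). A minimal sketch of how these are computed from paired estimates and reference values is shown below; the function name and the example readings are hypothetical.

```python
import math


def agreement_metrics(estimated, reference):
    """Compare paired estimates with reference readings in the same units (e.g. bpm).

    Returns MAE, RMSE, mean bias (estimate minus reference) and the Pearson
    correlation coefficient (PCC).
    """
    n = len(estimated)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired values")
    errors = [e - r for e, r in zip(estimated, reference)]
    mae = sum(abs(d) for d in errors) / n
    rmse = math.sqrt(sum(d * d for d in errors) / n)
    bias = sum(errors) / n
    mean_e, mean_r = sum(estimated) / n, sum(reference) / n
    cov = sum((e - mean_e) * (r - mean_r) for e, r in zip(estimated, reference))
    var_e = sum((e - mean_e) ** 2 for e in estimated)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    # PCC is undefined when either series is constant.
    pcc = cov / math.sqrt(var_e * var_r) if var_e > 0 and var_r > 0 else float("nan")
    return {"mae": mae, "rmse": rmse, "bias": bias, "pcc": pcc}


# Hypothetical heart-rate readings (bpm): camera estimate vs. contact reference.
print(agreement_metrics([60, 72, 80, 95], [62, 70, 81, 93]))
```

RMSE weights large errors more heavily than MAE, so the two can diverge on the same data, and a high PCC does not rule out a constant offset, which is what the mean bias captures.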

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Khanam, F.-T.-Z.; Chahl, L.A.; Chahl, J.S.; Al-Naji, A.; Perera, A.G.; Wang, D.; Lee, Y.H.; Ogunwa, T.T.; Teague, S.; Nguyen, T.X.B.; et al. Noncontact Sensing of Contagion. J. Imaging 2021, 7, 28. https://doi.org/10.3390/jimaging7020028



APA style: Khanam, F.-T.-Z., Chahl, L. A., Chahl, J. S., Al-Naji, A., Perera, A. G., Wang, D., Lee, Y. H., Ogunwa, T. T., Teague, S., Nguyen, T. X. B., McIntyre, T. D., Pegoli, S. P., Tao, Y., McGuire, J. L., Huynh, J., & Chahl, J. (2021). Noncontact Sensing of Contagion. Journal of Imaging, 7(2), 28. https://doi.org/10.3390/jimaging7020028