1. Introduction

New techniques for the dissemination of cultural heritage through digital media is one of the lines of research providing benefits to society since the research results can be available to the public by means online virtual museum displays. In fact, many important international museums [

1], are using these technologies in collaboration with the multinational Google, which has developed its own high-resolution digital capture system for paintings. These works are exhibited on the Arts and Culture Project website [

2], which contains many artworks that can be displayed with a high level of detail. They are digital reproductions with a gigapixel resolution, that is, a resolution greater than 1000 megapixels, which is 50 times greater than the image resolution provided by a conventional digital camera.

Gigapixel images allow documenting and analysing the paintings accurately, which is very useful for curators and art scholars. Furthermore, the virtual visualizations that can be generated from this type of image make the artwork accessible to anyone connected to the Internet. The viewers will be able to dive into the work, in such a way, that they would appreciate many details, which would be negligible to the eye in a real visit (

Figure 1).

Apart from Google, there are very few companies specialized in capturing gigapixel images of artworks due to the technical complexity and the specialized equipment. There are some examples, such as the French state organization

Centre de Recherche et de Restauration des Musées de France CR2MF [

3], the Italian company

Haltadefinizione [

4], or the Spanish Madpixel [

5]. Some researchers have used gigapixel photography for documenting rupestrian paintings [

6] and even combined with multispectral imaging techniques [

7]. Other research works have used gigapixel images for documenting large Baroque illusionistic paintings [

8,

9].

Digital photographic capture with gigapixel resolution is not an easy task and serious difficulties may arise due to physical problems such as the diffraction of light, which represents a barrier that limits the sharpness that can be obtained with an optical device and a digital sensor. For this reason, the progression in the resolution of digital sensors has already reached the limit established by the diffraction of light and by the optical resolution provided by the lenses [

10] that cannot match the resolution of the current top digital sensors. Going beyond this would not take advantage of the effective resolution of the sensor, unless the size of the optical-sensor assembly were increased, which is not practical for the future development of conventional cameras. In fact, there have been few attempts to develop prototype cameras providing gigapixel images on the fly. One of the first was the large format camera developed within the

Gigapxl Project by Graham Flint in 2000 [

11]. In this bulky camera, the image was exposed on a 450 × 225 mm negative film, which was later digitized to form a 4 gigapixel (Gp) image. Another more recent proposal is the AWARE 2 gigapixel camera, started by D. Brady and his team in 2012. This prototype included 98 micro-cameras with a combined resolution of 1 Gp and it is still being developed and improved. The AWARE 10 and 40 models reach almost 3 Gp. Nevertheless, such special prototypes are far from becoming an accessible option due to their high complexity and cost.

A reasonable solution to overcome the problem of diffraction and achieve gigapixel images using conventional cameras is multi-shot panoramic capture, which consists in obtaining a set of photographs from the same point of view and with a sufficient overlap between adjacent pictures so that, by means of image stitching software, they can be joined to compose a higher resolution image [

12].

In order to achieve a perfect stitch between pictures and to avoid parallax errors, it is mandatory to use a panoramic head (

Figure 2) to fix the position of the optical centre or no-parallax point of the lens, while rotating the camera to obtain the different shots that will compose the final image. Moreover, a telephoto lens should be used so as to maximize the final resolution.

The capture system developed by Google, called Art Camera [

13], seem to be based on this same principle, and consists of a camera integrated in a panoramic head that progressively sweeps the painting from a fixed point. The partial images are subsequently processed by the multinational itself. This type of camera is not for sale and there are only a very limited number of units available exclusively to the company.

The capture systems used by other commercial companies such as Haltadefinizione, are not fully described, but some of them are based on using high-end digital reflex cameras and high-cost automated panoramic heads by Clauss [

14]. Madpixel has his own automatic panoramic head called MadpixelROB [

15]. The aforementioned research works have also used the panoramic head methodology.

Using a panoramic head and a telephoto lens is a very effective method for documenting relatively small paintings with gigapixel resolution, but when it comes to capturing artworks of moderate size, there arise some drawbacks that limit image sharpness due to narrow depth of field provided by long focal length lenses. This problem will be discussed and fixed by following the techniques that will be revealed below, since one of the main aims of this article is to show a new and very accurate gigapixel capture methodology that uses relatively affordable equipment. Moreover, the digital image processing to generate the gigapixel image and the dissemination of the results on the Internet will be explained and carried out by means of opensource software.

2. Materials and Methods

2.1. Capture Techniques

The photographic equipment that was used in this research work consisted of a 32.5 Mp digital camera Canon EOS 90D, equipped with a fixed telephoto lens Canon EF 200 mm f2.8 L II USM, a Manfrotto tripod 28B and a Manfrotto panoramic head 303 SPH.

Long focal length lenses should be used to maximize resolution and the camera must be quite close to the artwork, so that, in these circumstances, depth of field provided by the lens is very short. Furthermore, this is aggravated by the need to use an intermediate diaphragm aperture to avoid losing global sharpness by the effect of light diffraction [

16]. This problem causes a loss of sharpness in certain zones of the off-centred shots of the paintings, when using the panoramic head capture methodology. This is due to the angular deviation between the camera sensor and the painting.

When the digital sensor remains parallel to the painting, all the points are perfectly focused, so the distance between them and the sensor remain the same. However, as the camera is rotated towards the picture margins, the angle between the sensor and the painting increases dramatically (

Figure 3). This causes only those points that are at the same distance from the camera sensor as the focused point to be perfectly sharp, while those remaining at different distances, will be gradually blurred due to narrow depth of field (

Figure 4).

To solve this problem, a new capture technique has been put into practice, which consists in taking the shots while moving the camera parallel to the painting (

Figure 5). Doing so, the sharpness of the pictures will be always optimal and never limited by depth of field. However, while this method would solve the problem of sharpness, it also generates some difficulties that must be overcome. On the one hand, as the viewpoint would vary continuously, the light reflected by the work will change between the shots, causing slight differences in exposure and problems with specular reflections. Those issues can be solved by using a strategically placed controlled light source, which moves along with the camera.

Another problem arises when it comes to joining the photo mosaic, obtained in that way, so most of the current software for stitching images would be unable to join the mosaic when the viewpoint is not static. Fortunately, this problem can be solved with the help of some stitching algorithms, initially developed by the German professor Helmut Dersch [

17], which were implemented in Hugin [

18], an opensource stitching software with general public license that allows joining pictures taken from different viewpoints, as long as they represent a planar surface. Optionally, the commercial software PTGUI [

19] would also do an excellent job when stitching these kinds of photomosaics.

Another way to construct the gigapixel image is by means SfM (structure from motion) photogrammetry software, since the construction algorithm is conceived to generate a textured mesh from pictures taken from different viewpoints. The problem is that it would be needed a greater number of shots since a minimum overlap of 50% between adjacent pictures is advisable, while a 30% overlap would be fine when using stitching software. However, an advantage of using SfM photogrammetry software would be the 3D reconstruction of the painting and even the frame, which is not a plane surface, while stitching software would provide an accurate 2D rectified orthophoto of the canvas but not of the volumetric frame.

Taking into account the previous considerations, the following gigapixel capture techniques are proposed, which will be suitable for paintings or planar surfaces:

The single-viewpoint capture technique is a known and common methodology, which consist in using a panoramic head to rotate the camera around the no-parallax point, which would be fixed and centred in front of the canvas, thus obtaining a set of overlapping pictures (

Figure 6). This technique requires limiting the obliquity of the shots in relation to the canvas in order to preserve the maximum image sharpness.

The parallel-multi-viewpoint capture technique is a new method, which consist in taking a mosaic of overlapped pictures as the camera is moved in parallel to the canvas describing several rows or columns. The camera digital sensor must be parallel to the artwork, or, in other words, the lens optical axis should be orthogonal to the canvas (

Figure 7).

The tilted-multi-viewpoint capture technique is a variation of the previous one, in which the camera can be tilted if required. This option may seem awkward but can be useful in the case of capturing large paintings with a tripod that is not tall or short enough to allow the parallel movement of the camera in front of the whole painting. In that case, the camera could be tilted when taking the upper or lower rows to cover the entire canvas. (

Figure 8).

The resolution of the final gigapixel image, achieved with those methods, will depend on several factors, such as the camera sensor resolution, the lens focal length, and the distance between the canvas and the camera, as will be discussed later.

2.2. Illumination Set

When capturing paintings there are several approaches to illuminate the artwork properly. The simplest way is to use the museum’s own illumination when the capture process is carried out in situ. Nevertheless, for a uniform and colour-accurate result it would be advisable to use dedicated and controlled light sources. In this research, there were used two Mettle 28 inch soft boxes lights, providing a colour temperature of 5500 K and a CRI (Colour Rendering Index) greater than 90.

The soft boxes must be placed strategically to avoid specular reflections on the canvas and to guarantee lighting uniformity, so the lighting scheme proposed in

Figure 9 is advisable. The lights must be placed outside the family of angles zone, as otherwise specular reflections may occur.

When using the multi-viewpoint capture technique, it is mandatory to move the lighting system along with the camera, so a special mount was designed to attach the soft boxes to the tripod (

Figure 10). Additionally, a sliding system for the tripod’s feet, which consisted in a two-wheeled aluminium bar, was also constructed to facilitate the movement of the set.

2.3. Camera Settings and Colour Accuracy

It is advisable to use an intermediate diaphragm aperture value to avoid the diffraction phenomenon while maintaining good depth of field. Setting the camera to f8 would be advisable as it is usually the golden number for maximizing image sharpness. The camera speed will depend on the lighting conditions. Slow speeds will not be a problem since a tripod is used.

The camera ISO value must be at the lower setting to avoid noisy pictures. An ISO 100 value is advisable. RAW Image file format should be used instead of JPG in other to maximize the dynamic range and to properly adjust the white balance and to apply an accurate colour profile during the digital developing process.

When using telephoto lenses, it is advisable to shoot the camera remotely, even when a tripod is used. For this reason, it is recommended using a remote shooter or, even better, a mobile device or notepad with a compatible application for shooting the camera. In this research, a notebook with the free software EOS Utility by Canon, was used to control the camera via Wi-Fi.

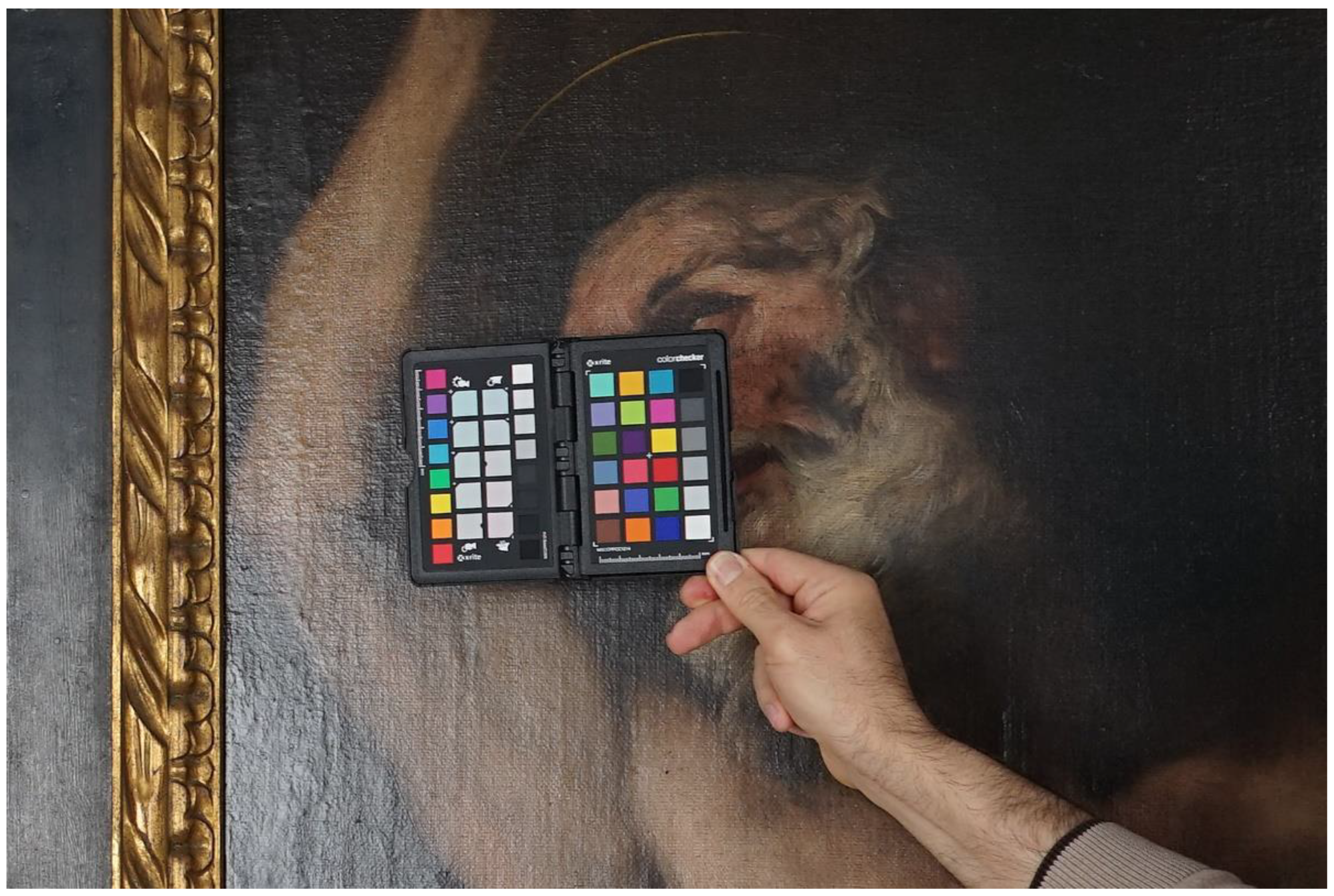

In order to capture the colours of the artwork accurately, a Xrite ColorChecker chart was used to create a colour profile from a picture of the chart, which was illuminated by the scene lights (

Figure 11). The colour profile was generated by the ColorChecker Camera Calibration software by Xrite and it can be used with RAW developers, such as the opensource RawTherapee [

20], as well as with other commercial software, such as Adobe Camera Raw or Adobe Lightroom [

21]. The picture of the chart can also be used as a reference to adjust the proper white balance of all the shots at once, by using the middle grey patch of the chart to neutralize any colour predominance.

2.4. Generating the Gigapixel Image

In order to join the set of pictures obtained with any of the aforementioned techniques, a stitching software such as Hugin or PTGUI can be used. As mentioned before, Hugin is a powerful opensource stitching software that can even calculate the camera translation between shots, when the multi-viewpoint technique is used. The only drawback of Hugin is that is slow when compared to the commercial alternative PTGUI, which is incredibly fast.

The workflow with the stitching software is very simple. Initially, the set of pictures are analysed to automatically detect homologous points in the overlapping zones of the shots. Then, the optimizer algorithm uses all these points to calculate the spatial position of every picture, which is defined by the pitch, yaw, and roll angles. In addition to this, the camera translations, and the parameters to correct the radial distortion, caused by the lens, are also computed. The final step allows rendering the set of pictures into a whole image at gigapixel resolution. The maximum final resolution will depend on the number of shots and their original resolution. That is estimated by the software so as to avoid incremental interpolations.

When using the multi-viewpoint technique, it is possible to assemble the gigapixel image with an automated SfM photogrammetry software, such as Agisoft Metashape. As mentioned before, using this kind of software would require a greater overlapping between adjacent shots and it is much more time consuming. This option would be interesting only when documenting volumetric artworks, such as altar pieces. The workflow for this kind of software begins with the determination of the camera positions from the analysis of the homologous points and the sparse point cloud. Then, the dense cloud of the model can be computed, and can be later triangulated to form a polygonal mesh. This mesh can be texturized by projecting the original pictures onto the mesh. Finally, an orthophoto of the 3D textured model can be generated at gigapixel resolution.

It should be mentioned that SfM photogrammetric techniques would not provide an absolutely accurate three-dimensional restitution of those subtle surface details, but since our main objective is obtaining an orthophoto of the painting, it would provide excellent results. In order to render fine surface properties of the painting, reflectance transformation imaging (RTI) would be very useful, as well as other much more expensive 3D scanning techniques [

22].

2.5. Canvas Measurement

Knowing the real dimension of the paintings is important to provide an accurate scale of the gigapixel image. Normally the dimensions of the artwork are specified on the wall labels, but they are approximated. A Topcon Image IS robotic total station was used for taking the measurement of the canvases without touching the artwork. This total station has a reflector-less measurement capability between 1.5 and 250 m, providing a short-range precision of ±5 mm (MSE), and an angular precision of 0.3 mgon. The instrument was placed in front of the painting, and, with the help of the telescopic sight, the corners of the canvas were identified. The coordinates of these points were obtained using TopSurv, a software that comes with the total station.

4. Discussion

The new proposed multi-viewpoint gigapixel capture techniques, compared to the conventional single-viewpoint capture technique, improve the image quality of the resulting gigapixel image. The parallel multi-viewpoint gigapixel capture technique is, in general, most recommended, so there is no loss of sharpness produced by narrow depth of field provided by telephoto lenses. The novel multi-viewpoint capture techniques imply using different shooting methods and moving the light system along with the camera to avoid changing specular reflections during the capture process.

Nevertheless, the single-viewpoint capture technique can be also a proper method when the depth of field provides an acceptable sharpness in the whole picture. The acceptable sharpness would depend on the focal lens, the diaphragm aperture value, the focus distance, and the camera sensor size. It can be calculated by using a free DOF calculator such as DOFMASTER [

23].

From the experience acquired in this research, there are some practical considerations that can be very useful for future researchers, which will be discussed here.

When documenting artworks with gigapixel resolution, the pixel density is an important aspect to bear in mind when planning the photographic session. A pixel density range between 600 ppi and about 1000 ppi was established to guarantee an excellent level of detail of the paintings. The maximum reachable pixel density value is always imposed by the photographic equipment, so it depends on the lens focal length, the camera distance, and the camera sensor resolution and size.

In order to maximize the pixel density, it would be advisable to use long focal lenses and to place the camera as close to the painting as possible (the minimum focusing distance of the lens can be the most limiting factor).

The pixel density of the final gigapixel image can be calculated easily by Equation (1), which can be deduced geometrically:

where,

Pixel Density of the Image (ppc or ppi)

Sensor Width Resolution (px)

Lens Focal Length (mm)

Unit Conversion Factor [use 10 for obtaining the pixel density in pixels per centimetre (ppc), and 25.4 for pixels per inch (ppi)]

Camera Distance (mm)

Sensor Width (mm)

Obtaining a high pixel density is not necessarily a synonym of image quality, so a picture with a great pixel density can be totally blurred. For this reason, it is crucial to choose a lens providing excellent optical quality and to use it properly.

When using the proposed parallel multi-viewpoint technique, there is no problem with depth of field, so the camera sensor must remain parallel to the canvas always. The goal, in this case, is focusing the camera on the canvas as accurately as possible, maintaining the camera steady when shooting, and minimizing the loss of sharpness caused by the lens diffraction.

In order to do so, it is necessary to use a tripod to maintain the camera totally stabilized. A measuring tape can be placed on the floor, parallel to the canvas, to work as a guide for the tripod legs so as to maintain the same distance from the camera to the canvas.

Additionally, it is advisable to focus and shoot the camera remotely to avoid small movements that would produce slightly blurred images. This can be done by using a computer or a mobile device with the software provided by the camera. The previous aspects are critical, especially when using long focal lenses in which any small movement would be extremely amplified on the camera sensor.

Moreover, setting the ISO setting to its minimum is mandatory to improve the image quality, as well as setting the proper exposure values to capture the entire dynamic range of the scene. In this sense, it is advisable to set the aperture to medium values, such as f8. Intermediate apertures would maximize the image sharpness, while avoiding diffraction problems. Once the aperture is set, the shutter speed can be determined accordingly to the diaphragm aperture to obtain the proper exposure. Visualizing the live histogram at this point can be very helpful to avoid clipping areas.

The image mosaic can be planned before starting the capture to know both the horizontal and the vertical camera displacements, as well as the total amount of shots that will be necessary to cover the entire painting. As mentioned before, a minimum overlap of about 30% between adjacent shots is advisable for a successful stitching. It is also recommended to shoot the camera in portrait mode to reduce the vertical camera movements, which are less convenient than the horizontal ones.

The camera vertical displacement can be determined by Equation (2):

where,

Camera Vertical Displacement (mm)

Camera Distance (mm)

Sensor Width (mm)

Image Overlap Percentage (%) (a minimum of 30% is advisable)

Lens Focal Length (mm)

The camera horizontal displacement can be easily calculated as a fraction of the vertical displacement, so the sensor aspect ratio is known:

where,

Camera Horizontal Displacement (mm)

Camera Vertical Displacement (mm)

Sensor Aspect Ratio (normally 3:2 for professional cameras)

The total amount of shots composing the Photographic Mosaic (Rows and Columns) can be predicted as follows:

where,

Number of Rows of the Photographic Mosaic

Number of Columns of the Photographic Mosaic

Painting Height (mm)

Painting Width (mm)

Camera Horizontal Displacement (mm)

Camera Vertical Displacement (mm)

A prediction of the resolution of the final gigapixel image in Gigapixels can be obtained from the next equation:

where,

Total Resolution of the Gigapixel Image (Gp)

Painting Height (cm)

Painting Width (cm)

Pixel Density of the Image (ppc)

As explained before, the resulting mosaic could be assembled with free stitching software, such as Hugin or with commercial one, such as PTGUI, which is very straightforward and fast. The resulting gigapixel image can be very heavy and difficult to handle unless a powerful computer is used. Fortunately, the dissemination of such heavy gigapixel images through the Internet can be carried out by decomposing the image in a multiresolution mosaic, which is also called pyramidal image. The process consists in applying an algorithm that generates different tiles from the gigapixel image, with different sizes and levels of resolution. Then, these image tiles can be loaded progressively in real time by using a specific html5 viewer. In this way, when the user demands more definition by zooming up, the viewer loads only the specific tiles for the visualization area.

When visualizing the resulting multiresolution mosaic through the Internet, there is no need to use powerful equipment, since even a conventional smartphone can manage this kind of pyramidal image. This technique is used in very well-known applications such as Google Maps or Google Earth.

It is important to mention that, due to the different kinds of display that the spectators can use for the visualization of the images online, it is advisable to generate the multiresolution mosaic using the standard colour profile sRGB, which is suitable for most of the devices. Nevertheless, the accuracy of the colour reproduction will depend on the gamut of the display and its proper calibration.

Zoomify [

23] is one of the software packages that can provide multiresolution mosaics and has its own viewers, including a free version. There are another powerful opensource viewers, such as OpenSeadragon [

24], which is the one used for this project.

As a result, the gigapixel images that have been shown in this article can be visualized online by using the QR codes on

Figure 20 or by following the links at the caption.

The rest of the museum imaging process can be solved using a proper web page design and this may depend on the needs of the researchers. In this case, Elementor [

25], a simple and free web design plugin based on WordPress [

26], was used to develop the entire webpage that allow the results to be disseminated:

www.gpix.upv.es (accessed on 19 August 2021).