1. Introduction

The process of converting a color image into its black-and-white (or monochromatic) version is called binarization or thresholding. The binary version of document images are, in general, more readable by humans, and save storage space [

1,

2] and communication bandwidth in networks, as the size of binary images is often orders of magnitudes smaller than the original gray or color images; they also use less toner for printing. Thresholding is a key preprocessing step for document transcription via OCR, which allows document classification and indexing.

No single binarization algorithm is good for all kinds of document images, as is demonstrated by the recent Quality-Time Binarization Competitions [

3,

4,

5,

6,

7]. The quality of the resulting image depends on a wide variety of factors, from the digitalization device and its setup to the intrinsic features of the document, from the paper color and texture to the way the document was handwritten or printed. The time elapsed in binarization also depends on the document features and varies widely between algorithms. A fundamental question arises here: if the document features are deterministic for the quality output of the binary image and there is also a large time-performance variation, and there is a growing number of binarization algorithms, how does one choose an algorithm that provides the best quality-time trade-off? Most users tend to binarize a document image with one of the classical algorithms, such as Otsu [

8] or Sauvola [

9]. Often, the quality of the result is not satisfactory, forcing the user to enhance the image through filtering (salt-and-pepper, etc.) or to hand-correct the image.

The case of the binarization of photographed documents is even more complex than scanned ones, as the document image has uneven resolution and illumination. The ACM DocEng Quality-Time Binarization Competitions for Photographed Documents [

5,

6,

7] have shown that in addition to the physical document characteristics, the camera features and its setup (whether the in-built strobe flash is on or off) influence which binarization algorithm performs the best in quality and time performance. The recent paper [

10] presents a new methodology to choose the “best” binarization algorithm in quality and time performance for documents photographed with portable digital cameras embedded in cell phones. It assesses 61 binarization algorithms to point out which binarization algorithm quality-time performs the best for OCR preprocessing or image visualization/printing/network transmission for each of the tested devices and setup. It also chooses the “overall winner”, and the binarization algorithms that would be the “first-choice” in the case of a general embedded application, for instance.

The binarization of scanned documents is also a challenging task. The quality of the resulting image varies not only with the set resolution of the scanner (today, the “standard” is either 200 or 300 dpi), but it also depends heavily on the features of each document, such as paper color and texture, how the document was handwritten or printed, the existence of physical noises [

11], etc. Thus, it is important to have some criteria to point out which binarization algorithm, among the best algorithms today, provides the best quality-time trade-off for scanned documents.

Traditionally, binarization algorithms convert the color image into gray-scale before performing binarization. Reference [

12] shows that the performance of binarization algorithms may differ if the algorithm is fed with the color image, its gray-scale converted image or one of its R, G, or B channels. Several authors [

13,

14] show that texture analysis plays an important role in document image processing. Two of the authors of this paper showed that the analysis of paper texture allows one to determine the age of documents for forensic purposes [

15], avoiding document forgeries. This paper shows that by extracting a sample of the paper (background) texture of a scanned document, one can have a good indication of one of the 315 binarization schemes tested [

12] that provides a suitable quality monochromatic image, with a reasonable processing time to be integrated into a document processing pipeline.

2. Materials and Methods

This work made use of the International Association for Pattern Recognition (IAPR) document image binarization (DIB) platform (

https://dib.cin.ufpe.br, last accessed on 24 August 2022), which focuses on document binarization. It encompasses several datasets of document images of historical, bureaucratic, and ordinary documents, which were handwritten, machine-typed, offset, laser- and ink-jet printed, and both scanned and photographed; several documents had corresponding ground-truth images. In additon to being a document repository, the DIB platform encompasses a synthetic document image generator, which allows the user to create over 5.5 million documents with different features. As already mentioned, Ref. [

12] shows that binarization algorithms, in general, yield different quality images whenever fed with the color, gray-scale-converted, and R, G, and B channels. Here, 63 classical and recently published binarization algorithms are fed with the five versions of the input image, totaling 315 different binarization schemes. The full list of the algorithms used is presented in

Table 1 and

Table 2, along with a short description and the approach followed in each of them.

Ref. [

63] presents a machine learning approach for choosing among five binarization algorithms to binarize parts of a document image. Another interesting approach to enhance document image binarization is proposed in [

64] and consists of analyzing the features of the original document to compose the result of the binarization of several algorithms to generate a better monochromatic image. Such a scheme was tested with 25 binarization algorithms, and it performed more than 3% better than the first rank in the H-DIBCO 2012 contest in terms of F-measure. The time-processing cost of such a scheme is prohibitive if one considers processing document batches, however.

One of the aims raised by the researchers in the DIB platform team is to develop an “image matcher” in such a way that given a real-world document, it looks for the synthetic document that better matches its features, as sketched in

Figure 1. For each of the 5.5 million synthetic documents in the DIB platform, one would have the algorithms that would yield the best quality-time performance for document readability or OCR transcription. Thus, the match of the “real-world” document and the synthetic one would point out which binarization algorithm would yield the “best” quality-time performance for the real-world document. It is fundamental that the Image Matcher is a very lightweight process not to overload the binarization processing time. If one or a small set of document features provide enough information to make such a good choice, it is more likely that it will be for the image-matcher to be fast enough to be part of a document processing pipeline.

In this paper, the image texture is taken as a key for selecting the real-world image that more closely resembles another real-world document for which one has a ground-truth monochromatic image of reference. Such images were carefully chosen from the set of historical documents in the DIB platform such as to match a large number of historical documents of interest from the late 19th century to today. To extract a sample of the texture, one manually selects a window of 120 × 60 pixels from the document to be binarized, as shown in

Figure 2. Only one window from each image was cropped in such a way that there was no presence of text from the front or any back-to-front interference. A vector of features is built, taking into account each RGB channel of the sample, the image average filtered (R + G + B)/3, and its gray-scale equivalent. Seven statistical measures are taken and placed in a vector: mean, standard deviation, mode, minimum value, maximum value, median, and kurtosis. This results in a vector containing 28 features, which describes the overall color and texture characteristics.

In this study, 40 real-world images are used, and the Euclidean distance between the texture vectors is used to find the 20 pairs of most similar documents. The texture with the smallest distance is chosen, and its source document image is used to determine the best binarization algorithm.

Figure 3 illustrates how such a process is applied to a sample image and the chosen texture.

3. Binarization Algorithm Selection Based on the Paper Texture

In a real-world document, one expects to find three overlapping color distributions. This includes one that corresponds to the plain paper background, which becomes the paper texture, which should yield white pixels in the monochromatic image. The second distribution tends to be a much narrower Gaussian that corresponds to the printing or writing, which is mapped onto black pixels in the binary image. The third distribution, the back-to-front interference [

11,

65] overlaps the other two distributions, bringing one of the most important causes of binarization errors.

Figure 4 presents a saple image with the corresponding color distributions.

Deciding which binarization algorithm to use in a document tends to be a “wild guess”, a user-experience-based guess, or an a posteriori decision, which means one uses several binarization algorithms and chooses the image that “looks best” as a result. Binarization time is seldom considered. One must agree that the larger the number of binarization algorithms one has, the harder it is to guess the ones that will perform well for a given document. Ideally, the Image Matcher under development in the DIB-platform would estimate all the image parameters (texture type, kind of writing or printing, the color of ink, intensity of the back-to-front interference, etc.) to pinpoint which of the over 5.5 million synthetic images best matches the features of the “real world” document to be binarized. If that synthetic image is known, one would know which of the 315 binarization schemes assessed here would offer the best quality-time balance for that synthetic image.

This paper assumes that by comparing the paper texture between two real-world documents, one of which knows which binarization algorithm presents the best quality-time trade-off, one can use that algorithm on the other document, yielding acceptable quality results. Cohen’s Kappa [

66,

67] (denoted by

k) is used here as a quality measure:

which compares the observed accuracy with an expected accuracy, assessing the classifier performance.

is the number of correctly mapped pixels (accuracy) and

is

where

and

are the number of pixels mapped as foreground and background on the binary image, respectively, and

and

are the number of foreground and background pixels on the GT image, and

N is the total number of pixels. The ranking for the pixels is defined by sorting the measured kappa in ascending order.

The peak signal-noise ratio (PSNR), distance reciprocal distortion (DRD) and F-Measure (FM) have been used for a long time to assess binarization results [

68,

69], becoming the chosen measures for nearly all studies in this area. Thus, they are also provided, even though the ranking process only takes Cohen’s Kappa into account. The PSNR for a

image is defined as the peak signal power to average noise power, which, for 8-bit images, is

The DRD [

70] correlates the human visual perception with the quality of the generated binary image. It is computed by

where NUBN (GT) is the number of non-uniform

binary blocks in the ground-truth (GT) image,

S is the flipped pixels and

is the distortion of the pixel at position

in relation to the binary image (B), which is calculated by using a 5 × 5 normalized weight matrix

as defined in [

70].

equals to the weighted sum of the pixels in the 5 × 5 block of the GT that differ from the centered kth flipped pixel at

in the binarization result image

B. The smaller the DRD, the better.

The F-Measure is computed as

where

,

and

,

,

denote the true positive, false positive and false negative values, respectively.

Once the matching image (the most similar) is found, the best quality-time algorithm is used to binarize the original image. Algorithms with the same kappa are in the same ranking position. Several algorithms have a similar processing time. Among the top-10 in terms of quality, the fastest is chosen as the best quality-time binarization algorithm. This paper conjectures that considering two documents that were similarly printed (handwritten, offset printed, etc.) and have similar textures, if the best quality-time algorithm is known for one image, that same algorithm could be applied to the other image, yielding high-quality results. No doubt that if a larger number of document features besides the document texture, such as the strength of the back-to-front interference, the ink color and kind of pen, the printing method, etc. were used, the chances of selecting the best quality binarization scheme would be larger, but could imply in a prohibitive time overhead. It is also important to stress that the number of documents with back-to-front interference is small in most document files, and the ones with strong interference is even smaller. In the case of the bequest [

71] of Joaquim Nabuco (1849/1910, Brazilian statesman and writer and the first Brazilian ambassador to the U.S.A.), for instance, the number of letters is approximately 6500, totaling about 22,000 pages. Only 180 documents were written on both sides in translucent paper, of which less than 10% of them exhibit strong back-to-front interference. Even in those documents, the paper texture plays an important role in the parameters of the binarization algorithms. Thus, in this paper, one assumes that the paper texture is the key information for choosing a suitable binarization scheme that has a large probability of being part of an automatic document processing pipeline. Evidence that such a hypothesis is valid is shown in the next section.

4. Results

In order to evaluate the automatic algorithm selection based on the texture, 26 handwritten and 14 typewritten documents were carefully selected from the DIB platform such that they are representative of a large number of real-world historical documents. Such documents belong to the Nabuco bequest [

71] and were scanned in 200 dpi.

Table 3 presents the full size of each document used in this study. All of them have a ground-truth binary image. The Euclidean distance between the feature vector of their paper textures was used to find the pairs of most similar documents. Five versions of the original and matched image were used in the final ranking.

The other parts stand for:

- 1.

Original Image: the image one wants to binarize.

- 2.

Matched Image: the image which one already has the algorithm that yields the best quality-time trade-off amongst all the 315 binarization schemes.

- 3.

Textures samples: sample of the paper background of the original image (left) used to select the texture matched image (right), whose sample is presented below each document

- 4.

Results Table: the best 10 algorithms for the original image

- 5.

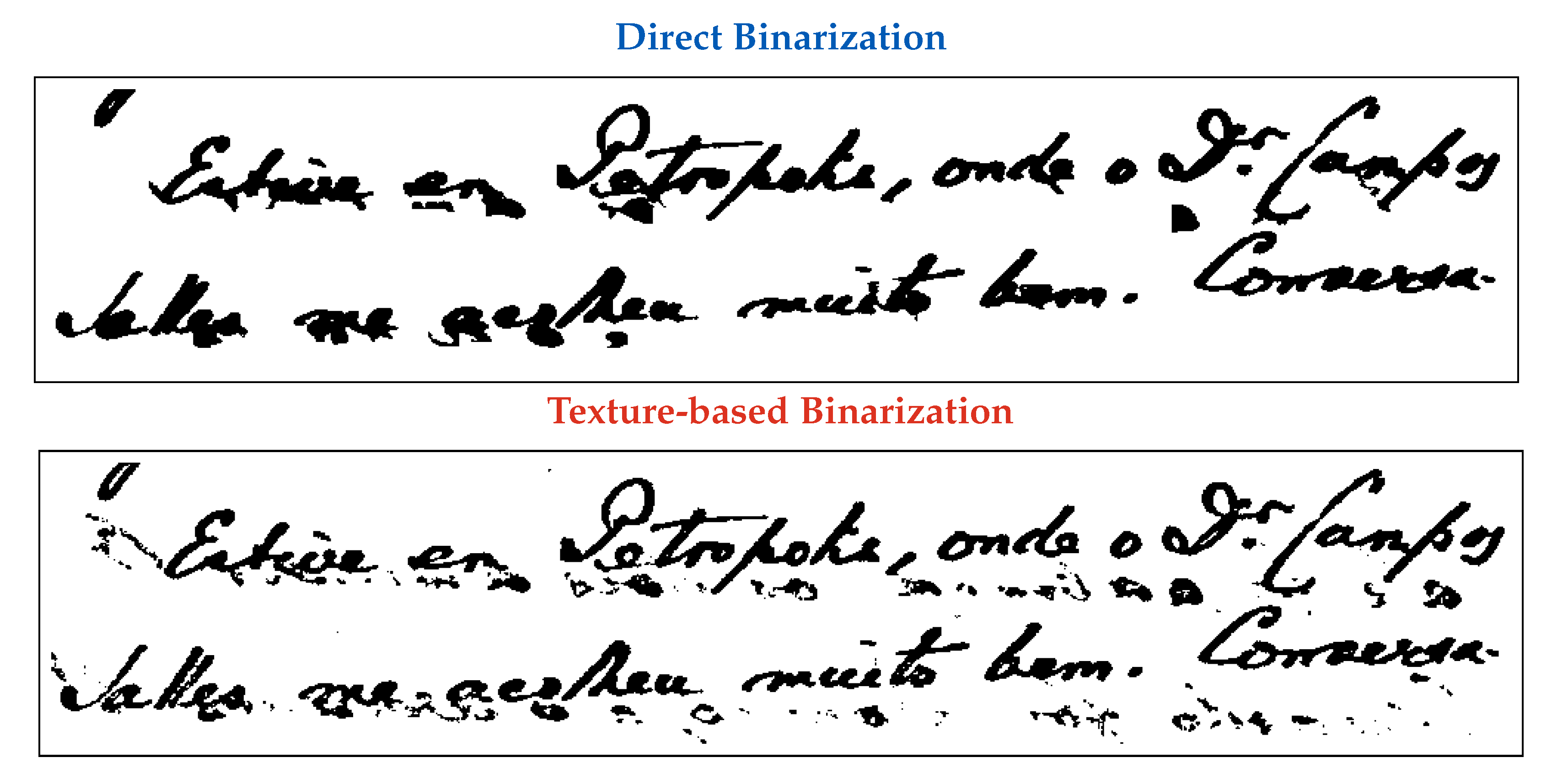

Direct Binarization: the best quality-time algorithm and corresponding binary image according to the ranking of all 315 binarization schemes. The choice is made by directly looking at the results of all algorithms.

- 6.

Texture-based Binarization: the best quality-time algorithm of the matched image and the corresponding monochromatic version of the original image binarized with the chosen algorithm.

The algorithm choice was appropriate for all the presented images, as can be noted by visually inspecting the binary images, their quality ranking, and the kappa, PSNR, DRD, and F-Measure values. For

Table 4,

Table 6,

Table 7, and

Table 9, the selected algorithm was at rank 5 or more and did not yield a significantly worse image in those cases. The difference in kappa was smaller than 10%, except in the case of the image shown in

Table 8, in which the kappa reached 12%. It is interesting to observe that for the image shown in

Table 8, an image with strong back-to-front interference, although the value of kappa has the highest percent difference of all the tested images, the monochromatic image produced by using the texture binarization scheme proposed here is visually more pleasant and readable for humans than the scheme that yields the best kappa, as may be observed in the zoomed image shown in

Figure 5. One may see that the texture-based choice of the binarization scheme leaves some noise in areas that correspond to the back-to-front interference, most of which could be removed with a salt-and-pepper filter. As previously remarked here, images with strong back-to-front interference tend to be rare in any historical document file.

In the case of the document image HW 04, presented in

Table 7, although the difference in kappa is 7.3% , visually inspecting the resulting binary image, it is really close in quality to the actual best in terms of quality, which implies the choice based on texture does indicate a good option of binarization algorithm even with a relatively lower rank, although the Howe algorithm [

32] used to binarize the matched image HW 09, has a much higher processing time than the da Silva–Lins–Rocha algorithm [

2] (dSLR-C), the top quality algorithm using direct binarization.

It is also relevant to say that there is a small degree of subjectivity in the whole process as the ground-truth images of historic documents are hand-processed. If one looks at

Table 10, one may also find some differences in the produced images that illustrate such subjectivity. The result of the direct binarization using Li–Tam algorithm [

41] yields an image with a high kappa of 0.94 with much thicker strokes than the one chosen by the texture-based method, the Su–Lu algorithm [

57], both of which were fed with the gray-scale image obtained by using the conventional luminance equation. Although the kappa of the Su–Lu binarized image is 0.89, the resulting image is as readable as the Li–Tam one, a phenomenon which is somehow similar to the one presented in

Figure 5. The main idea of the proposed methodology is not to find exactly the same best quality-time algorithm as directly binarizing, but one algorithm that yields satisfactory results.

5. Conclusions

Document binarization is a key step in many document processing pipelines; thus it is important to be performed quickly and with high quality. Depending on the intrinsic features of the scanned document image, the quality-time performance of the binarization algorithms known today varies widely. The search for a document feature that is possible to be extracted automatically with a low time complexity that may provide an indication of which binarization algorithm provides the best quality-time trade-off is thus of strategic importance. This paper takes the document texture as such a feature.

The results presented have shown that the document texture information may be satisfactorily used as a way to choose which binarization algorithm to apply to scanned historical documents, and how the input image should be if the original color image, its gray-scale conversion or one of its RGB channels is to be successfully scanned. The choice of the algorithms is based on the use of real images that “resemble” the paper background of the document to be binarized. A sample of the texture of the document is collected and compared with the remaining 39 different paper textures used for handwritten or machine typed documents, each of which points to an algorithm that provides the best quality-time trade-off for the synthetic document. The use of that algorithm in the real-world document to be binarized was assessed here and yielded results that may be considered of good quality and quickly produced, both for image readability by humans or automatic OCR transcription.

This paper presents evidences that by matching the textures of scanned documents, one can find suitable binarization algorithms for a given new image. The methodology presented may be enhanced further by including new textures and binarization schemes. The inclusion of new textures may narrow the euclidean distance between the image to be binarized document and the existing textures in the dataset. The choice of the most suitable binarization scheme for the document with the new texture may be done by the visual inspection of the result of the top-ranked binarization algorithms of the document image with the closest Euclidean distance of the images already in the reference dataset.

A number of issues remain open for further work, however. The first one is automating the process of texture sampling and matching in such a way as not to be a high overload on the binarization process as a whole. This may also involve the collection of texture samples in different parts of the document to avoid collecting parts either printed with back-to-front interference or other physical noises, such as stains or holes. The second point is trying to minimize the number of features in the vector-of-features to be matched with the vector-of-features of the synthetic textures. The third point is attempting to find a better matching strategy than simply calculating the Euclidean distance between the vectors, as done here, perhaps by using some kind of clustering.