A Comparative Review on Applications of Different Sensors for Sign Language Recognition

Abstract

1. Introduction

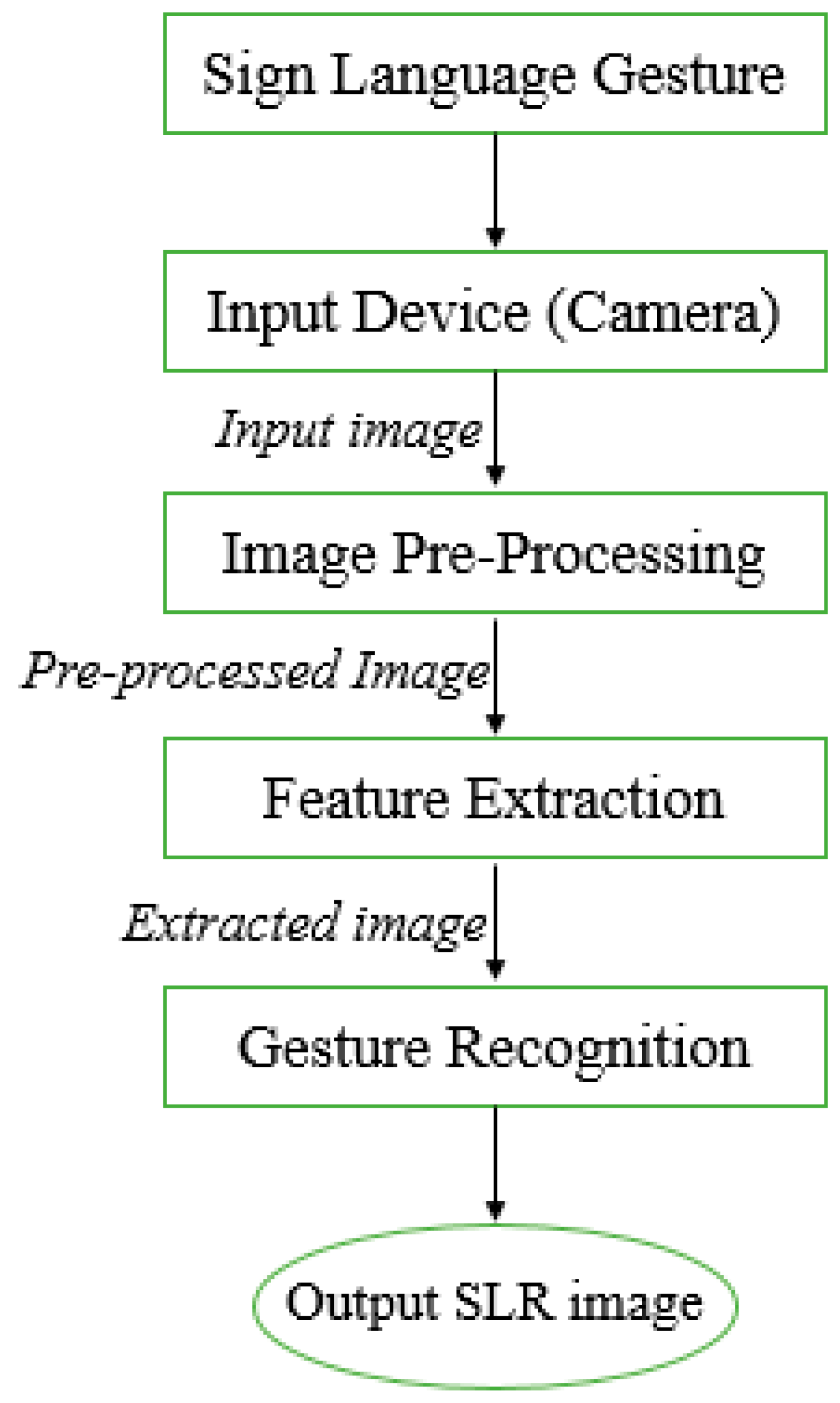

- Vision-sensor based SLR system

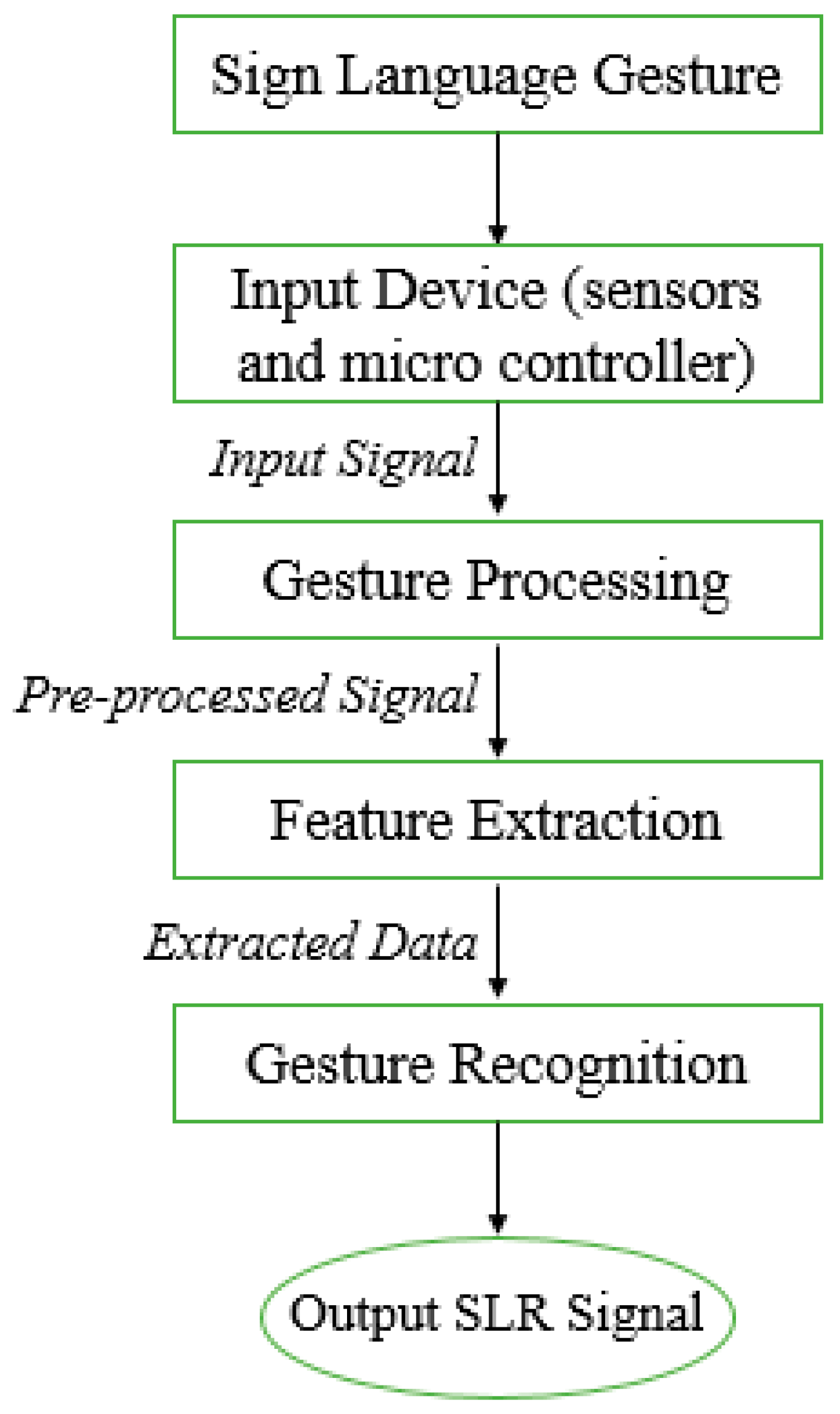

- Sensor-based SLR system

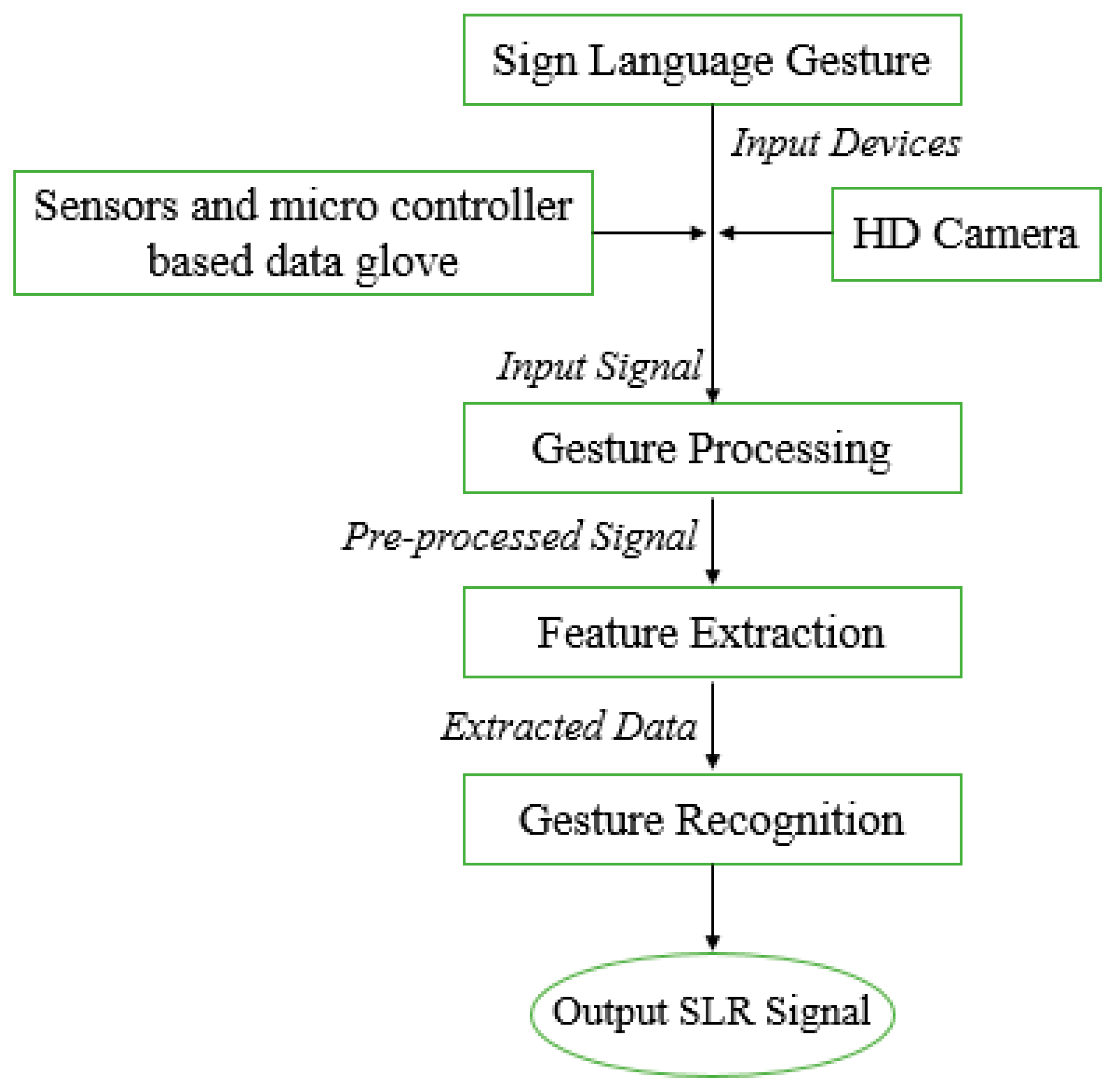

- Hybrid SLR system

2. Literature-Based Sign Language Recognition Models

2.1. Sensor-Based Models

2.2. Vision-Based Models

2.3. Non-Commercial Models for Data Glove

2.4. Commercial Data Glove Based Models

2.5. Hybrid Recognition Models

2.6. Framework-Based Recognition Models

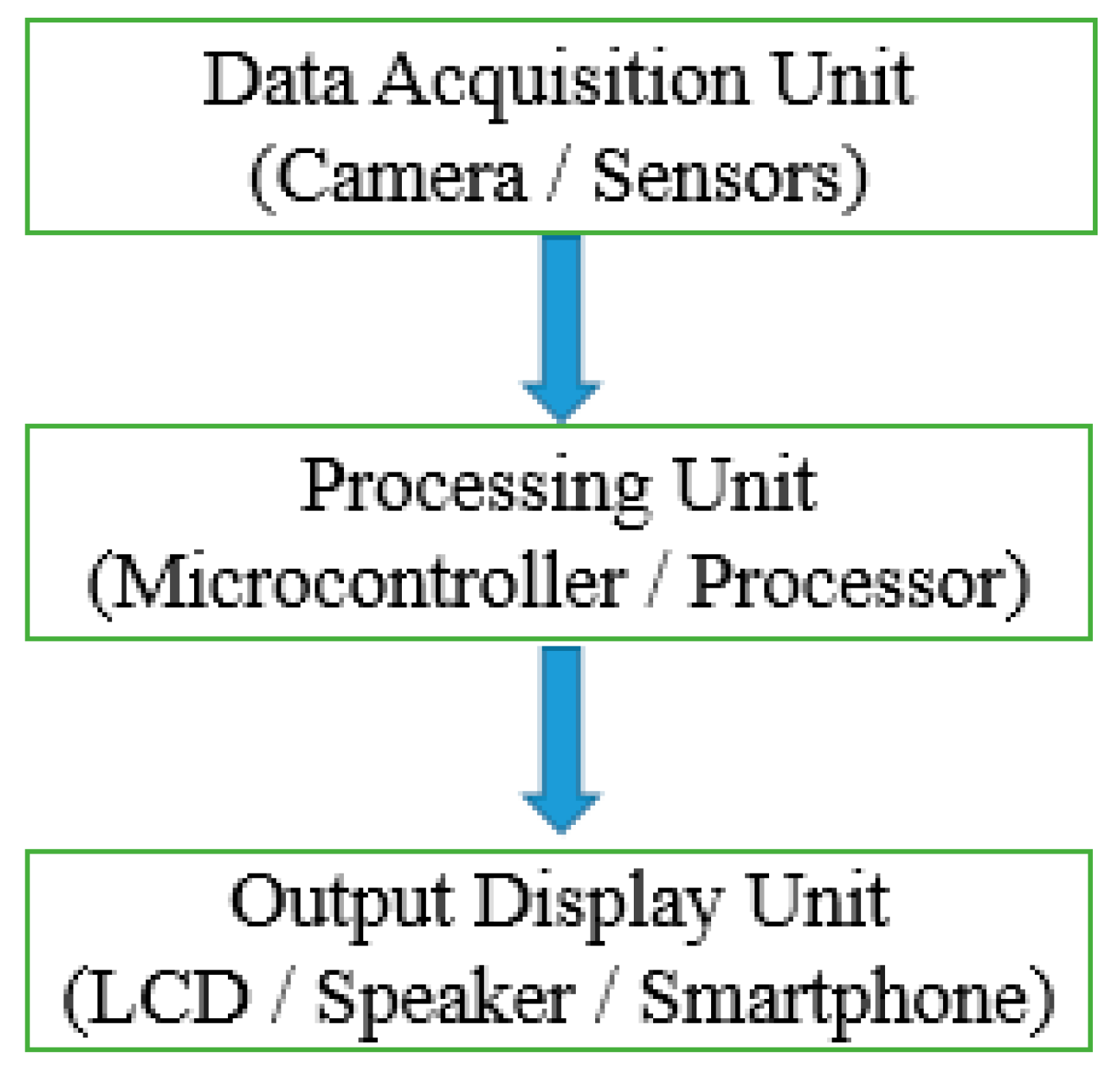

3. Components and Methods

3.1. Data Acquisition Unit

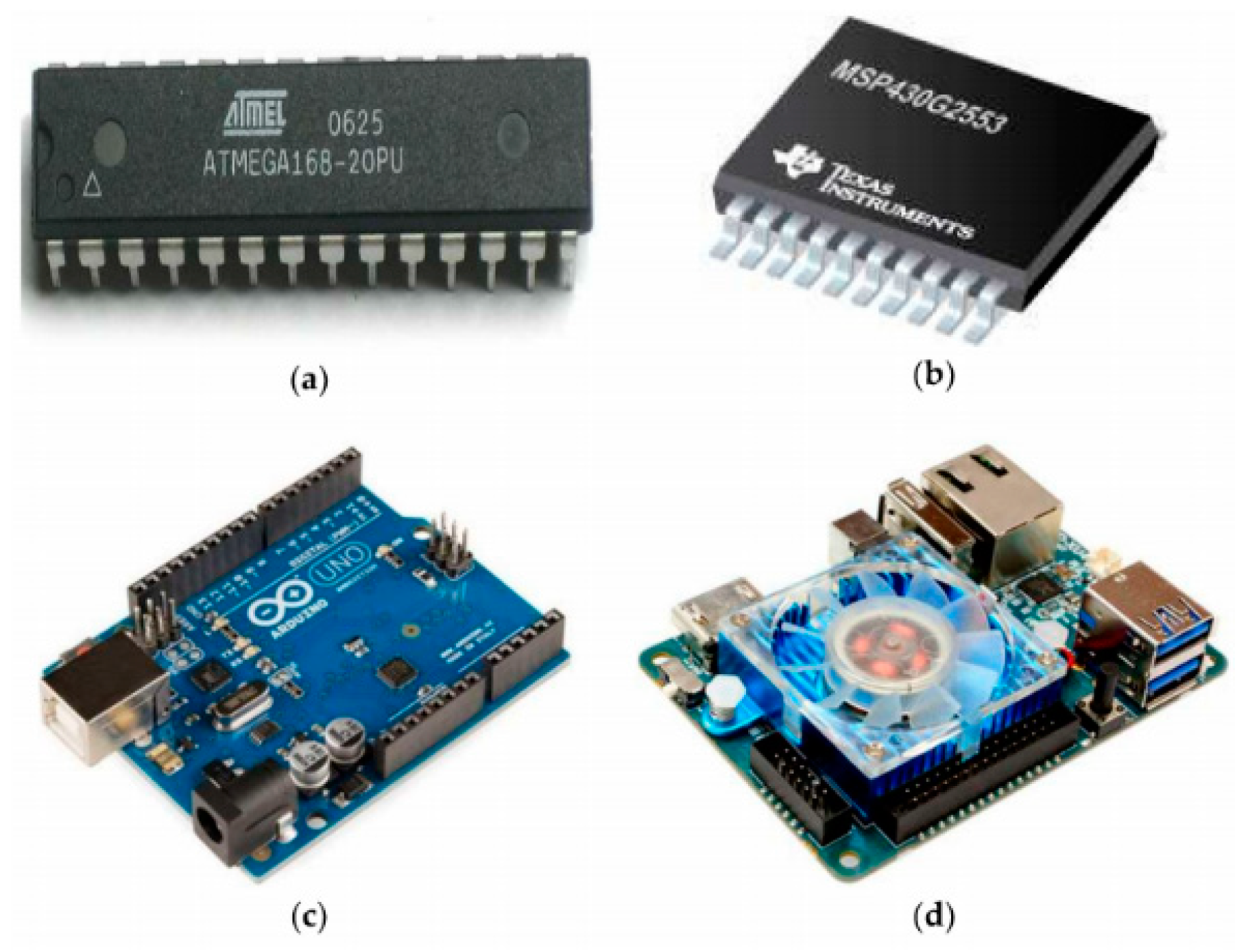

3.2. Processing Unit

3.3. Output Unit

3.4. Gesture Classification Method

3.5. Training Datasets

4. Machine Learning

- Training Data;

- Testing Data;
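
The two bullets above name the usual partition of a labelled gesture dataset. As a minimal sketch only: the function name `split_dataset`, the 80/20 ratio, the fixed seed, and the toy five-value sensor readings below are illustrative assumptions, not values taken from the reviewed articles.

```python
import random


def split_dataset(samples, test_ratio=0.2, seed=0):
    """Shuffle labelled samples and return (training, testing) lists."""
    if not 0.0 < test_ratio < 1.0:
        raise ValueError("test_ratio must be strictly between 0 and 1")
    shuffled = list(samples)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical data: five sensor readings per sample, labelled "A" or "B".
samples = [([i, i + 1, i + 2, i + 3, i + 4], "A" if i % 2 == 0 else "B")
           for i in range(10)]
train, test = split_dataset(samples)
print(len(train), len(test))
```

Holding the testing data out of training is what lets a reported accuracy say something about unseen signs rather than memorised ones.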

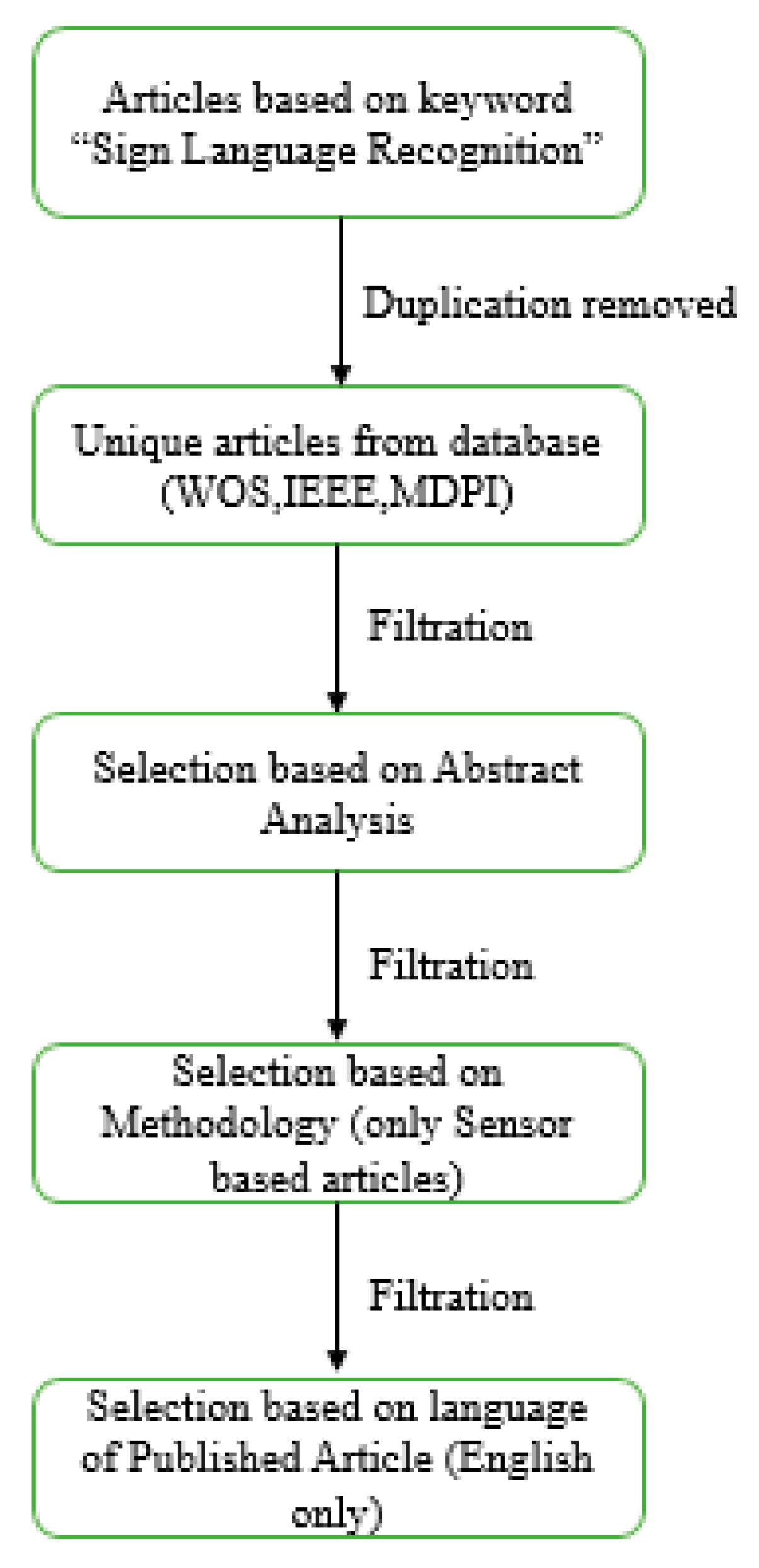

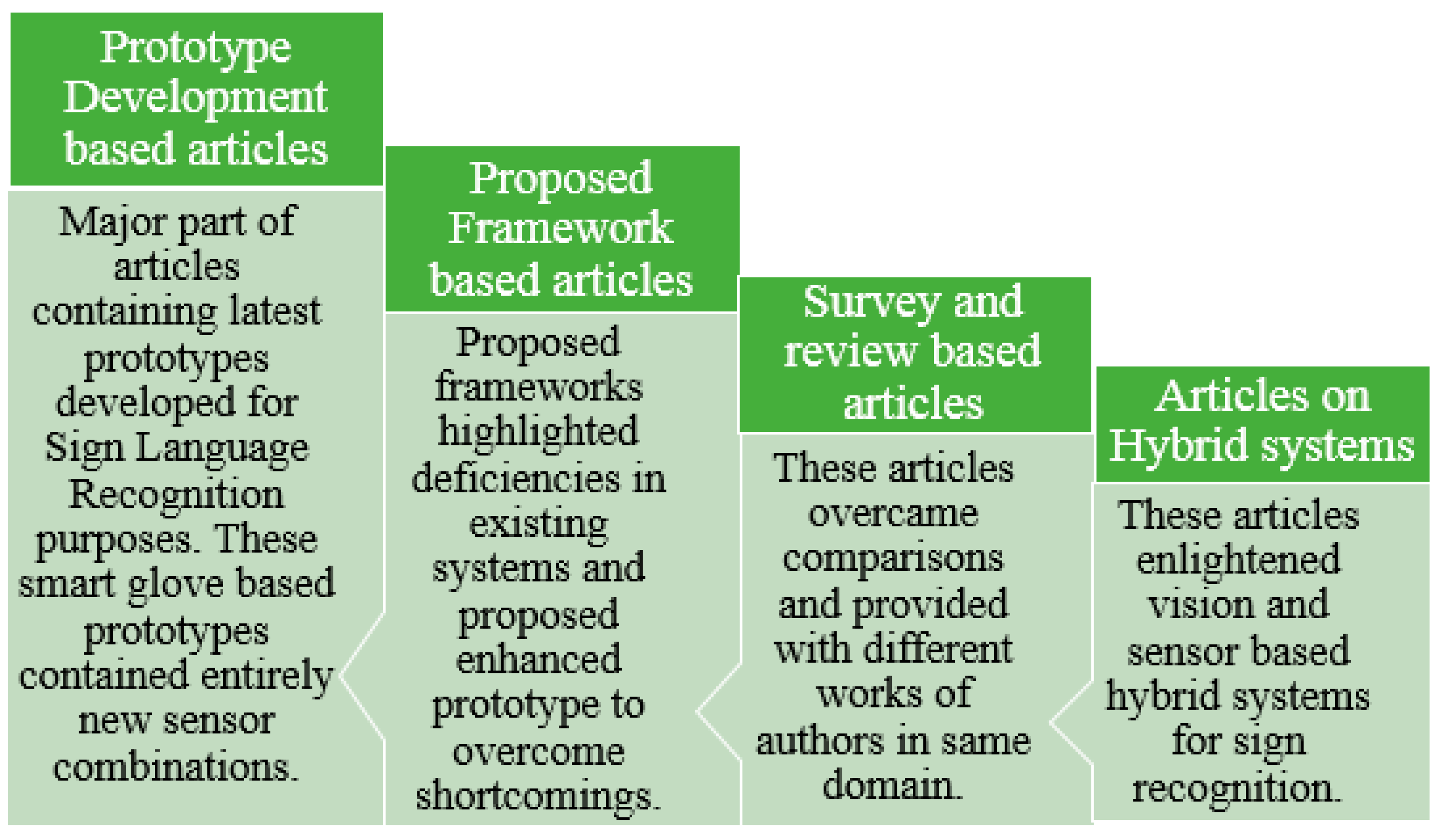

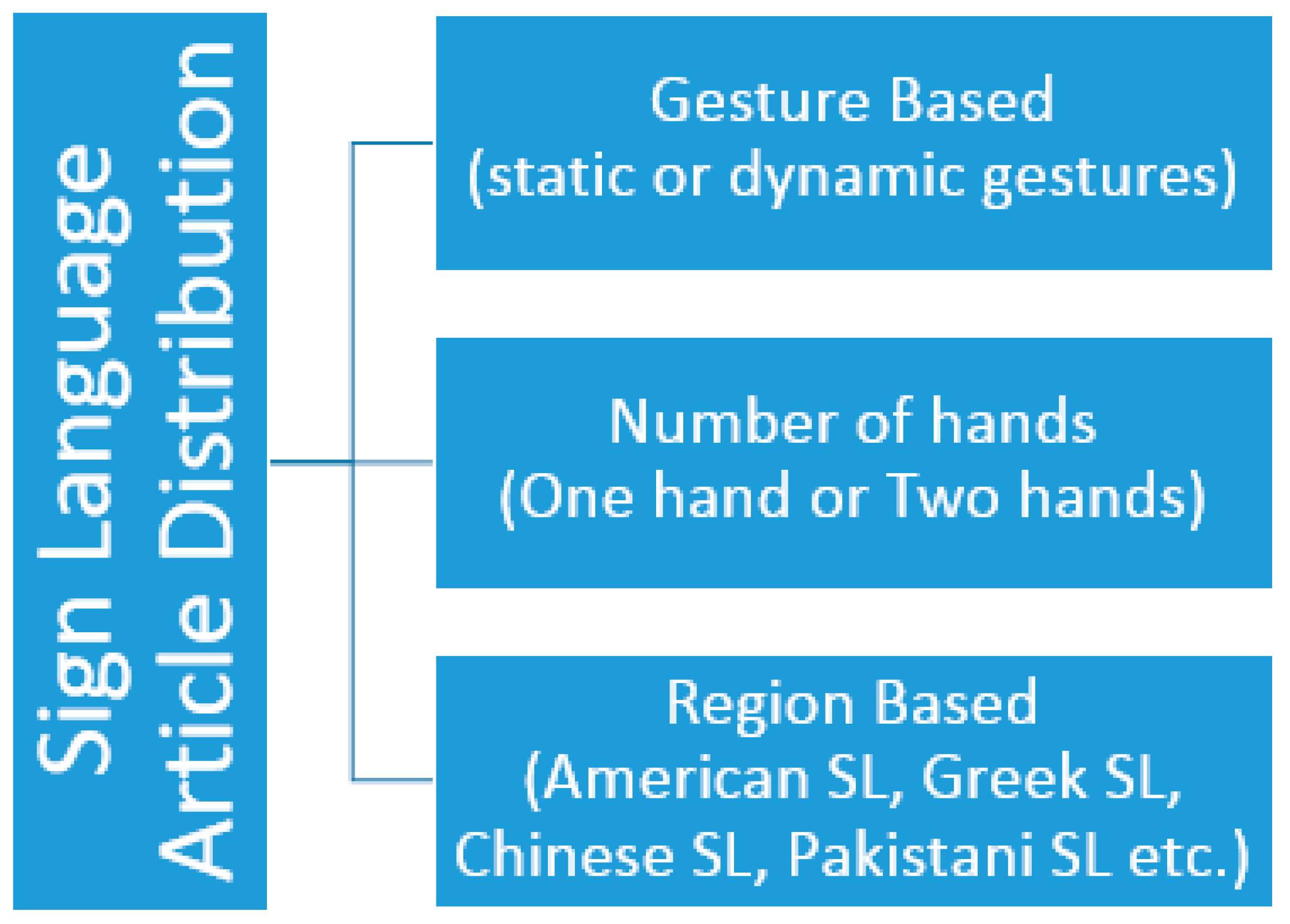

5. Article Filtration and Distribution Analysis

6. Analysis

7. Motivations

7.1. Technological Advancement

7.2. Daily Usage

7.3. Benefits

7.4. Limitations

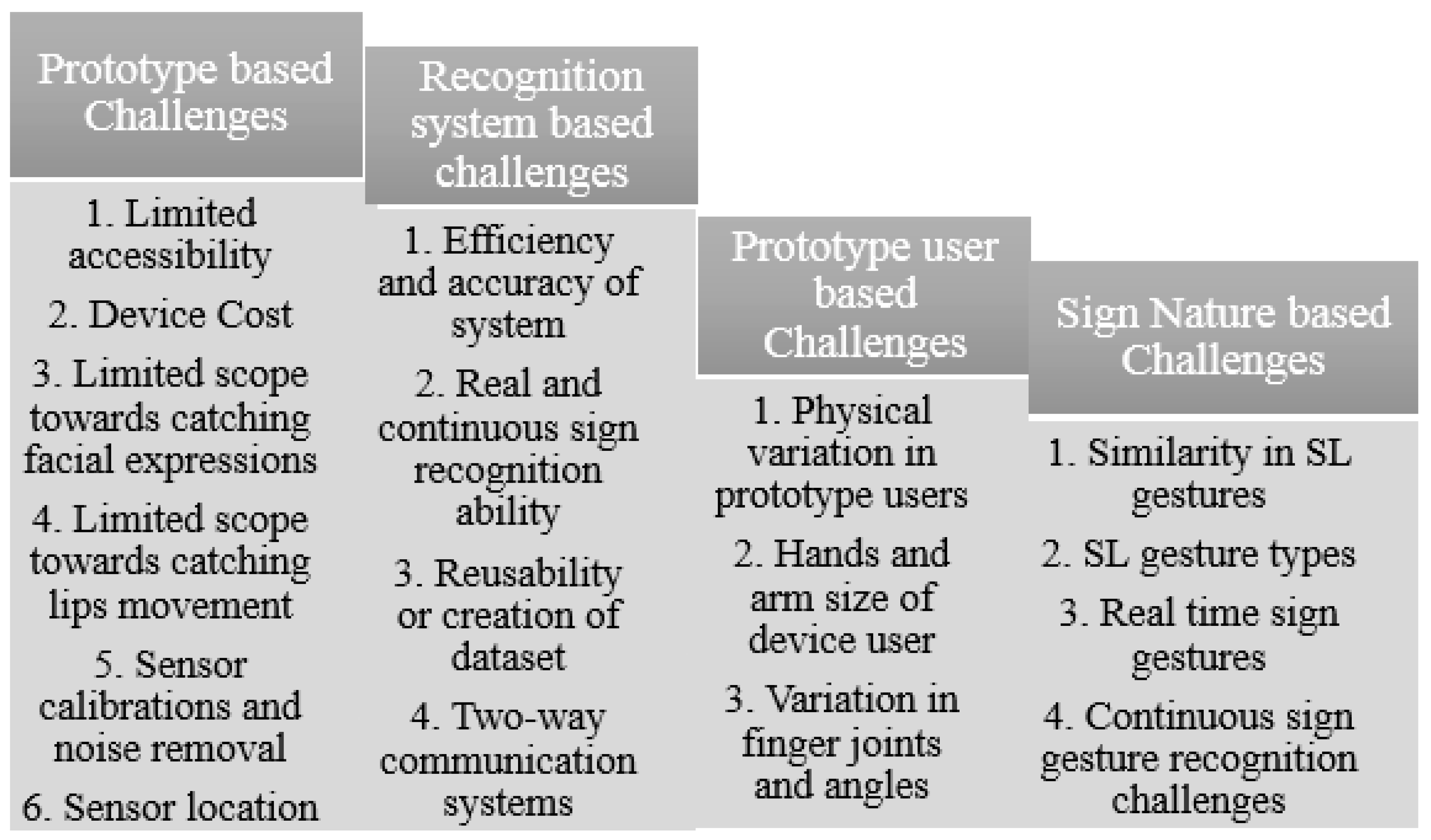

8. Challenges

8.1. Sign Nature

8.2. User Interactions

8.3. Device Infrastructure

8.4. Accuracy

9. Recommendations

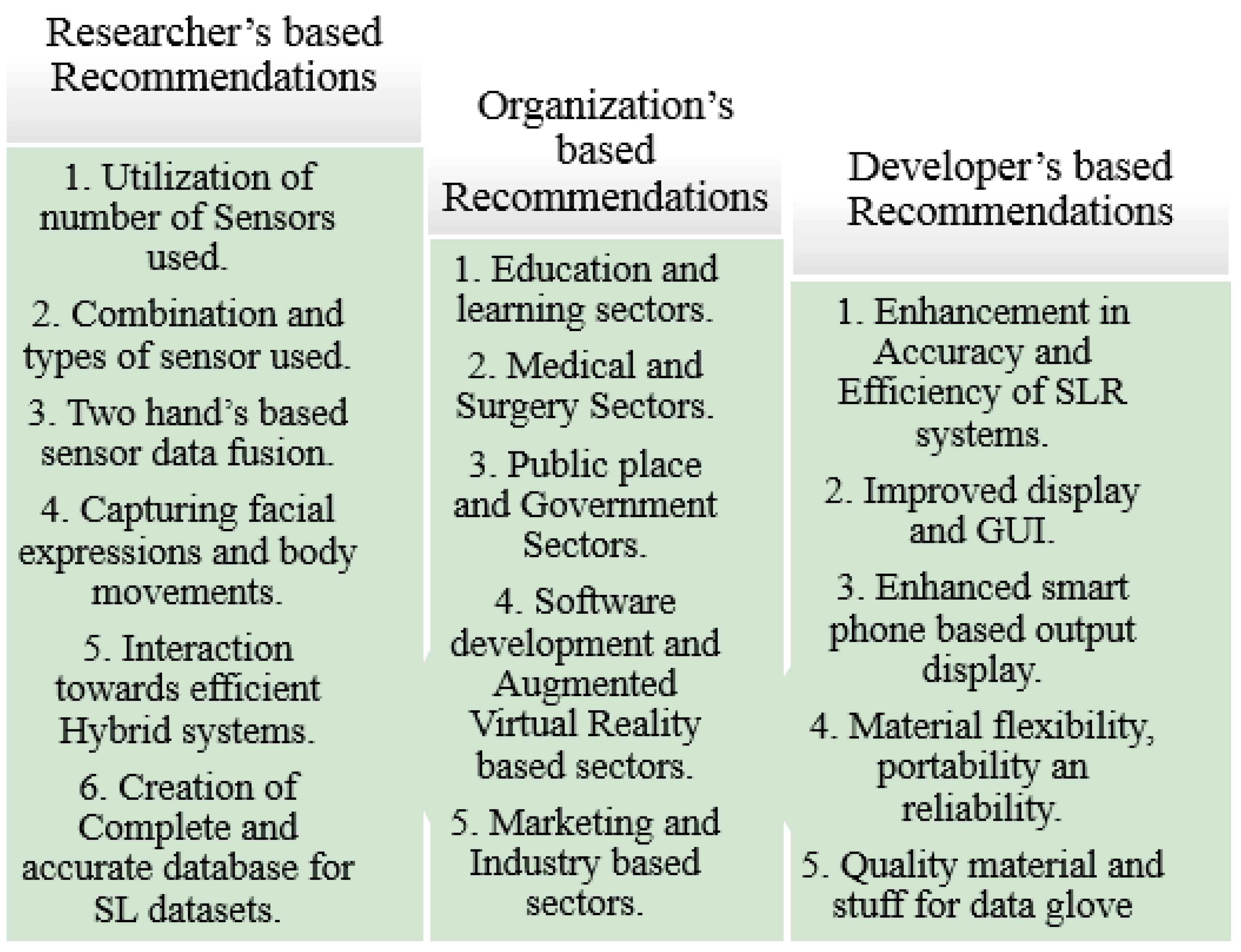

9.1. Developers

9.2. Organization

9.3. Researchers

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Bhatnagar, V.; Magon, R.; Srivastava, R.; Thakur, M. A Cost Effective Sign Language to voice emulation system. In Proceedings of the 2015 Eighth International Conference on Contemporary Computing (IC3), Noida, India, 20–22 August 2015. [Google Scholar]

- Masieh, M.A. Smart Communication System for Deaf-Dumb People. In Proceedings of the International Conference on Embedded Systems, Cyber-physical Systems, and Applications (ESCS), Athens, Greece, 2017; Available online: https://www.proquest.com/openview/2747505eab9eb43cb1717f9654ca7d16/1?pq-origsite=gscholar&cbl=1976354 (accessed on 21 December 2021).

- Kashyap, A.S. Digital Text and Speech Synthesizer Using Smart Glove for Deaf and Dumb. Int. J. Adv. Res. Electron. Commun. Eng. (IJARECE) 2017, 6, 4. [Google Scholar]

- Amin, M.S.; Amin, M.T.; Latif, M.Y.; Jathol, A.A.; Ahmed, N.; Tarar, M.I.N. Alphabetical Gesture Recognition of American Sign Language using E-Voice Smart Glove. In Proceedings of the 2020 IEEE 23rd International Multitopic Conference (INMIC), Bahawalpur, Pakistan, 5–7 November 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Lokhande, P.; Prajapati, R.; Pansare, S. Data Gloves for Sign Language Recognition System. Int. J. Comput. Appl. 2015, 975, 8887. [Google Scholar]

- Iwasako, K.; Soga, M.; Taki, H. Development of finger motion skill learning support system based on data glove. Procedia Comput. Sci. Appl. 2014, 35, 1307–1314. [Google Scholar] [CrossRef]

- Aditya, T.S. Meri Awaaz—Smart Glove Learning Assistant for Mute Students and teachers. Int. J. Innov. Res. Comput. Commun. Eng. 2017, 5, 6. [Google Scholar]

- Padmanabhan, M. Hand gesture recognition and voice conversion system for dumb people. Int. J. Sci. Eng. Res. 2014, 5, 427. [Google Scholar]

- Kalyani, B.S. Hand Talk gloves for Gesture Recognizing. Int. J. Eng. Sci. Manag. Res. 2015, 2, 5. [Google Scholar]

- Patel, H.S. Smart Hand Gloves for Disable People. Int. Res. J. Eng. Technol. (IRJET) 2018, 5, 1423–1426. [Google Scholar]

- Pławiak, P.; Sósnicki, T.; Niedzwiecki, M.; Tabor, Z.; Rzecki, K. Hand body language gesture recognition based on signals from specialized glove and machine learning algorithms. IEEE Trans. 2016, 12, 1104–1113. [Google Scholar]

- Ahmed, S.M. Electronic speaking glove for speechless patients, a tongue to a dumb. In Proceedings of the 2010 IEEE Conference on Sustainable Utilization and Development in Engineering and Technology, Petaling Jaya, Malaysia, 20–21 November 2010. [Google Scholar]

- Bedregal, B.; Dimuro, G. Interval fuzzy rule-based hand gesture recognition. In Proceedings of the 12th GAMM-IMACS International Symposium on Scientific Computing, Computer Arithmetic and Validated Numerics, Duisburg, Germany, 26–29 September 2006. [Google Scholar]

- Tanyawiwat, N.; Thiemjarus, S. Design of an assistive communication glove using combined sensory channels. In Proceedings of the 2012 Ninth International Conference on Wearable and Implantable Body Sensor Networks (BSN), London, UK, 9–12 May 2012. [Google Scholar]

- Arif, A.; Rizvi, S.; Jawaid, I.; Waleed, M.; Shakeel, M. Techno-Talk: An American Sign Language (ASL) Translator. In Proceedings of the 2016 International Conference on Control, Decision and Information Technologies (CoDIT), Saint Julian, Malta, 6–8 April 2016. [Google Scholar]

- Chouhan, T.; Panse, A.; Voona, A.; Sameer, S. Smart glove with gesture recognition ability for the hearing and speech impaired. In Proceedings of the 2014 IEEE Global Humanitarian Technology Conference-South Asia Satellite (GHTC-SAS), Trivandrum, India, 26–27 September 2014. [Google Scholar]

- Vijayalakshmi, P.; Aarthi, M. Sign language to speech conversion. In Proceedings of the 2016 International Conference on Recent Trends in Information Technology, Chennai, India, 8–9 April 2016. [Google Scholar]

- Praveen, N.; Karanth, N.; Megha, M. Sign language interpreter using a smart glove. In Proceedings of the 2014 International Conference on Advances in Electronics, Computers and Communications (ICAECC), Bangalore, India, 10–11 October 2014. [Google Scholar]

- Phi, L.; Nguyen, H.; Bui, T.; Vu, T. A glove-based gesture recognition system for Vietnamese sign Languages. In Proceedings of the 2015 15th International Conference on Control, Automation and Systems (ICCAS), Busan, Korea, 13–16 October 2015. [Google Scholar]

- Preetham, C.; Ramakrishnan, G.; Kumar, S.; Tamse, A.; Krishnapura, N. Hand talk-implementation of a gesture recognizing glove. In Proceedings of the 2013 Texas Instruments India Educators’ Conference (TIIEC), Bangalore, India, 4–6 April 2013. [Google Scholar]

- Mehta, A.; Solanki, K.; Rathod, T. Automatic Translate Real-Time Voice to Sign Language Conversion for Deaf and Dumb People. Int. J. Eng. Res. Technol. (IJERT) 2021, 9, 174–177. [Google Scholar]

- Sharma, D.; Verma, D.; Khetarpal, P. LabVIEW based Sign Language Trainer cum portable display unit for the speech impaired. In Proceedings of the 2015 Annual IEEE India Conference (INDICON), New Delhi, India, 17–20 December 2015. [Google Scholar]

- Lavanya, M.S. Hand Gesture Recognition and Voice Conversion System Using Sign Language Transcription System. IJECT 2014, 5, 4. [Google Scholar]

- Vutinuntakasame, S.; Jaijongrak, V.; Thiemjarus, S. An assistive body sensor network glove for speech-and hearing-impaired disabilities. In Proceedings of the 2011 International Conference on Body Sensor Networks (BSN), Dallas, TX, USA, 23–25 May 2011. [Google Scholar]

- Borghetti, M.; Sardini, E.; Serpelloni, M. Sensorized glove for measuring hand finger flexion for rehabilitation purposes. IEEE Trans. 2013, 62, 3308–3314. [Google Scholar] [CrossRef]

- Adnan, N.; Wan, K.; Shahriman, A.; Zaaba, S.; Basah, S.; Razlan, Z.; Hazry, D.; Ayob, M.; Rudzuan, M.; Aziz, A. Measurement of the flexible bending force of the index and middle fingers for virtual interaction. Procedia Eng. 2012, 41, 388–394. [Google Scholar] [CrossRef] [Green Version]

- Alvi, A.; Azhar, M.; Usman, M.; Mumtaz, S.; Rafiq, S.; Rehman, R.; Ahmed, I. Pakistan sign language recognition using statistical template matching. Int. J. Inf. Technol. 2004, 1, 1–12. [Google Scholar]

- Shukor, A.; Miskon, M.; Jamaluddin, M.; bin Ali, F.; Asyraf, M.; bin Bahar, M. A new data glove approach for Malaysian sign language detection. Procedia Comput. Sci. Appl. 2015, 76, 60–67. [Google Scholar] [CrossRef] [Green Version]

- Elmahgiubi, M.; Ennajar, M.; Drawil, N.; Elbuni, M. Sign language translator and gesture recognition. In Proceedings of the 2015 Global Summit on Computer & Information Technology (GSCIT), Sousse, Tunisia, 11–13 June 2015. [Google Scholar]

- Sekar, H.; Rajashekar, R.; Srinivasan, G.; Suresh, P.; Vijayaraghavan, V. Low-cost intelligent static gesture recognition system. In Proceedings of the 2016 Annual IEEE Systems Conference, Orlando, FL, USA, 18–21 April 2016. [Google Scholar]

- Mehdi, S.; Khan, Y. Sign language recognition using sensor gloves. In Proceedings of the 9th International Conference on Neural Information Processing, Singapore, 8–22 November 2002. [Google Scholar]

- Ibrahim, N.; Selim, M.; Zayed, H. An Automatic Arabic Sign Language Recognition System (ArSLRS). J. King Saud Univ. Comput. Inf. Sci. 2018, 30, 470–477. [Google Scholar] [CrossRef]

- López-Noriega, J.; Emiliano, J.; Fernández-Valladares, M.I.; Uc-cetina, V. Glove-based sign language recognition solution to assist communication for deaf users. In Proceedings of the 2014 11th International Conference on Electrical Engineering, Computing Science and Automatic Control (CCE), Ciudad del Carmen, Mexico, 29 September–3 October 2014. [Google Scholar]

- Aly, S.; Aly, W. DeepArSLR: A Novel Signer-Independent Deep Learning Framework for Isolated Arabic Sign Language Gestures Recognition. IEEE Access 2020, 8, 83199–83212. [Google Scholar] [CrossRef]

- Chaithanya Kumar, M.; Leo, F.P.P.; Verma, K.D.; Kasireddy, S.; Scholl, P.M.; Kempfle, J.; Van Laerhoven, K. Real-Time and Embedded Detection of Hand Gestures with an IMU-Based Glove. Informatics 2018, 5, 28. [Google Scholar]

- Deriche, M.; Aliyu, S.O.; Mohandes, M. An Intelligent Arabic Sign Language Recognition System Using a Pair of LMCs with GMM Based Classification. IEEE Sens. J. 2019, 19, 8067–8078. [Google Scholar] [CrossRef]

- Farman Shah, M.S. Sign Language Recognition Using Multiple Kernel Learning: A Case Study of Pakistan Sign Language. IEEE Access 2021, 9, 67548–67558. [Google Scholar] [CrossRef]

- Jiang, S.; Lv, B.; Guo, W.; Zhang, C.; Wang, H.; Sheng, X.; Shull, P.B. Feasibility of Wrist-Worn, Real-Time Hand, and Surface Gesture Recognition via sEMG and IMU Sensing. IEEE Trans. Ind. Inform. 2018, 14, 3376–3385. [Google Scholar] [CrossRef]

- Kim, S.; Kim, J.; Ahn, S.; Kim, Y. Finger language recognition based on ensemble artificial neural network learning using armband EMG sensors. Technol Health Care 2018, 26, 249–258. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Lee, B.G.; Lee, S.M. Smart Wearable Hand Device for Sign Language Interpretation System with Sensors Fusion. IEEE Sens. J. 2018, 18, 1224–1232. [Google Scholar] [CrossRef]

- Li, L.; Jiang, S.; Shull, P.B.; Gu, G. SkinGest: Artificial skin for gesture recognition via filmy stretchable strain sensors. Adv. Robot. 2018, 32, 1112–1121. [Google Scholar] [CrossRef]

- Mittal, A.; Kumar, P.; Roy, P.P.; Balasubramanian, R.; Chaudhuri, B.B. A Modified LSTM Model for Continuous Sign Language Recognition Using Leap Motion. IEEE Sens. J. 2019, 19, 7056–7063. [Google Scholar] [CrossRef]

- Pan, W.; Zhang, X.; Ye, Z. Attention-Based Sign Language Recognition Network Utilizing Keyframe Sampling and Skeletal Features. IEEE Access 2020, 8, 215592–215602. [Google Scholar] [CrossRef]

- Sincan, O.M.; Keles, H.Y. AUTSL: A Large Scale Multi-Modal Turkish Sign Language Dataset and Baseline Methods. IEEE Access 2020, 8, 181340–181355. [Google Scholar] [CrossRef]

- Zhang, S.; Meng, W.; Li, H.; Cui, X. Multimodal Spatiotemporal Networks for Sign Language Recognition. IEEE Access 2019, 7, 180270–180280. [Google Scholar] [CrossRef]

- Zhao, T.; Liu, J.; Wang, Y.; Liu, H.; Chen, Y. Towards Low-Cost Sign Language Gesture Recognition Leveraging Wearables. IEEE Trans. Mob. Comput. 2021, 20, 1685–1701. [Google Scholar] [CrossRef]

- Sharma, V.; Kumar, V.; Masaguppi, S.; Suma, M.; Ambika, D. Virtual Talk for Deaf, Mute, Blind and Normal Humans. In Proceedings of the 2013 Texas Instruments India Educators’ Conference (TIIEC), Bangalore, India, 4–6 April 2013. [Google Scholar]

- Abdulla, D.; Abdulla, S.; Manaf, R.; Jarndal, A. Design and implementation of a sign-to-speech/text system for deaf and dumb people. In Proceedings of the 2016 5th International Conference on Electronic Devices, Systems and Applications, Ras AL Khaimah, United Arab Emirates, 6–8 December 2016. [Google Scholar]

- Kadam, K.; Ganu, R.; Bhosekar, A.; Joshi, S. American sign language interpreter. In Proceedings of the 2012 IEEE Fourth International Conference on Technology for Education (T4E), Hyderabad, India, 18–20 July 2012. [Google Scholar]

- Ibarguren, A.; Maurtua, I.; Sierra, B. Layered architecture for real-time sign recognition. Int. J. Comput. 2009, 53, 1169–1183. [Google Scholar] [CrossRef]

- Munib, Q.; Habeeb, M.; Takruri, B.; Al-Malik, H. American sign language (ASL) recognition based on Hough transform and neural networks. Exp. Syst. Appl. 2007, 32, 24–37. [Google Scholar] [CrossRef]

- Geetha, M.; Manjusha, U. A vision-based recognition of indian sign language alphabets and numerals using B-spline approximation. Int. J. Comput. Sci. Eng. 2012, 4, 406. [Google Scholar]

- Elons, A.; Abull-Ela, M.; Tolba, M. A proposed PCNN features quality optimization technique for pose-invariant 3D Arabic sign language recognition. Softw. Comput. Appl. 2013, 13, 1646–1660. [Google Scholar] [CrossRef]

- Mohandes, S.M. Arabic sign language recognition an image-based approach. In Proceedings of the 21st International Conference on Advanced Information Networking and Applications Workshops, Niagara Falls, ON, Canada, 21–23 May 2007. [Google Scholar]

- Erol, A.; Bebis, G.; Nicolescu, M.; Boyle, R.; Twombly, X. Vision-based hand pose estimation: A review. Comput. Vis. Imag. 2007, 108, 52–73. [Google Scholar] [CrossRef]

- Ahmed, A.; Aly, S. Appearance-based arabic sign language recognition using hidden markov models. In Proceedings of the 2014 International Conference on Engineering and Technology (ICET), Cairo, Egypt, 19–20 April 2014. [Google Scholar]

- Thalange, A.; Dixit, S.C. OHST and wavelet features based Static ASL numbers recognition. Procedia Comput. Sci. 2016, 92, 455–460. [Google Scholar] [CrossRef] [Green Version]

- Kau, L.; Su, W.; Yu, P.; Wei, S. A real-time portable sign language translation system. In Proceedings of the 2015 IEEE 58th International Midwest Symposium on Circuits and Systems (MWSCAS), Fort Collins, CO, USA, 2–5 August 2015. [Google Scholar]

- Kong, W.; Ranganath, S.T. Towards subject independent continuous sign language recognition: A segment and merge approach. Pattern Recogn. 2014, 47, 1294–1308. [Google Scholar] [CrossRef]

- Al-Qurishi, M.; Khalid, T.; Souissi, R. Deep Learning for Sign Language Recognition: Current Techniques, Benchmarks, and Open Issues. IEEE Access 2021, 9, 126917–126951. [Google Scholar] [CrossRef]

- Aly, W.; Aly, S.; Almotairi, S. User-Independent American Sign Language Alphabet Recognition Based on Depth Image and PCANet Features. IEEE Access 2019, 7, 123138–123150. [Google Scholar] [CrossRef]

- Breland, D.S.; Skriubakken, S.B.; Dayal, A.; Jha, A.; Yalavarthy, P.K.; Cenkeramaddi, L.R. Deep Learning-Based Sign Language Digits Recognition From Thermal Images with Edge Computing System. IEEE Sens. J. 2021, 21, 10445–10453. [Google Scholar] [CrossRef]

- Casam Njagi, N.; Wario, R.D. Sign Language Gesture Recognition through Computer Vision. In Proceedings of the 2018 IST-Africa Week Conference, Botswana, Africa, 9–11 May 2018; Cunningham, P., Cunningham, M., Eds.; IIMC International Information Management Corporation: Botswana, Africa, 2018; p. 8. [Google Scholar]

- Huang, J.; Zhou, W.; Li, H.; Li, W. Attention-Based 3D-CNNs for Large-Vocabulary Sign Language Recognition. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 2822–2832. [Google Scholar] [CrossRef]

- Huang, S.; Ye, Z. Boundary-Adaptive Encoder with Attention Method for Chinese Sign Language Recognition. IEEE Access 2021, 9, 70948–70960. [Google Scholar] [CrossRef]

- Huang, S.; Mao, C.; Tao, J.; Ye, Z. A Novel Chinese Sign Language Recognition Method Based on Keyframe-Centered Clips. IEEE Signal Proc. Lett. 2018, 25, 442–446. [Google Scholar] [CrossRef]

- Joy, J.; Balakrishnan, K.; Sreeraj, M. SignQuiz: A Quiz Based Tool for Learning Fingerspelled Signs in Indian Sign Language Using ASLR. IEEE Access. 2019, 7, 28363–28371. [Google Scholar] [CrossRef]

- Kumar, D.A.; Sastry, A.S.; Kishore, P.V.; Kumar, E.K.; Kumar, M.T. S3DRGF: Spatial 3-D Relational Geometric Features for 3-D Sign Language Representation and Recognition. IEEE Signal Proc. Lett. 2019, 26, 169–173. [Google Scholar] [CrossRef]

- Kumar, E.K.; Kishore, P.V.; Sastry, A.S.; Kumar, M.T.; Kumar, D.A. Training CNNs for 3-D Sign Language Recognition with Color Texture Coded Joint Angular Displacement Maps. IEEE Signal Proc. Lett. 2018, 25, 645–649. [Google Scholar] [CrossRef]

- Kumar, E.K.; Kishore, P.; Kumar, M.T.; Kumar, D.A.; Sastry, A. Three-Dimensional Sign Language Recognition with Angular Velocity Maps and Connived Feature ResNet. IEEE Signal Proc. Lett. 2018, 25, 1860–1864. [Google Scholar] [CrossRef]

- Liao, Y.; Xiong, P.; Min, W.; Min, W.; Lu, J. Dynamic Sign Language Recognition Based on Video Sequence with BLSTM-3D Residual Networks. IEEE Access 2019, 7, 38044–38054. [Google Scholar] [CrossRef]

- Muneer, A.-H.; Ghulam, M.; Wadood, A.; Mansour, A.; Mohammed, B.; Tareq, A.; Mathkour, H.; Amine, M.M. Deep Learning-Based Approach for Sign Language Gesture Recognition with Efficient Hand Gesture Representation. IEEE Access 2020, 8, 192527–192542. [Google Scholar]

- Oliveira, T.; Escudeiro, N.; Escudeiro, P.; Emanuel, R. The VirtualSign Channel for the Communication between Deaf and Hearing Users. IEEE Revista Iberoamericana de Tecnologias del Aprendizaje 2019, 14, 188–195. [Google Scholar] [CrossRef]

- Kishore, P.; Kumar, D.A.; Sastry, A.C.; Kumar, E.K. Motionlets Matching with Adaptive Kernels for 3-D Indian Sign Language Recognition. IEEE Sens. J. 2018, 18, 8. [Google Scholar] [CrossRef]

- Papastratis, I.; Dimitropoulos, K.; Konstantinidis, D.; Daras, P. Continuous Sign Language Recognition Through Cross-Modal Alignment of Video and Text Embeddings in a Joint-Latent Space. IEEE Access 2020, 8, 91170–91180. [Google Scholar] [CrossRef]

- Kanwal, K.; Abdullah, S.; Ahmed, Y.; Saher, Y.; Jafri, A. Assistive Glove for Pakistani Sign Language Translation. In Proceedings of the 2014 IEEE 17th International Multi-Topic Conference (INMIC), Karachi, Pakistan, 8–10 December 2014. [Google Scholar]

- Sriram, N.; Nithiyanandham, M. A hand gesture recognition based communication system for silent speakers. In Proceedings of the 2013 International Conference on Human Computer Interactions (ICHCI), Warsaw, Poland, 14–17 August 2013. [Google Scholar]

- Fu, Y.; Ho, C. Static finger language recognition for handicapped aphasiacs. In Proceedings of the Second International Conference on Innovative Computing, Information and Control, Kumamoto, Japan, 5–7 September 2007. [Google Scholar]

- Kumar, P.; Gauba, H.; Roy, P.; Dogra, D. A multimodal framework for sensor based sign language recognition. Neurocomputing 2017, 259, 21–38. [Google Scholar] [CrossRef]

- El Hayek, H.; Nacouzi, J.; Kassem, A.; Hamad, M.; El-Murr, S. Sign to letter translator system using a hand glove. In Proceedings of the 2014 Third International Conference on e-Technologies and Networks for Development (ICeND), Beirut, Lebanon, 29 April–1 May 2014. [Google Scholar]

- Matételki, P.; Pataki, M.; Turbucz, S.; Kovács, L. An assistive interpreter tool using glove-based hand gesture recognition. In Proceedings of the 2014 IEEE Canada International Humanitarian Technology Conference-(IHTC), Montreal, QC, Canada, 1–4 June 2014. [Google Scholar]

- McGuire, R.; Hernandez-Rebollar, J.; Starner, T.; Henderson, V.; Brashear, H.; Ross, D. Towards a one-way American sign language translator. In Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2004. [Google Scholar]

- Gałka, J.; Masior, M.M.; Zaborski, M.; Barczewska, K. Inertial motion sensing glove for sign language gesture acquisition and recognition. IEEE J. Sens. 2016, 16, 6310–6316. [Google Scholar] [CrossRef]

- Oz, C.; Leu, M. American Sign Language word recognition with a sensory glove using artificial neural networks. Artif. Intell. Based Comput. Appl. 2011, 24, 1204–1213. [Google Scholar] [CrossRef]

- Bavunoglu, H.; Bavunoglu, E. System of Converting Hand and Finger Movements into Text and Audio. Google Patents 15,034,875, 29 September 2016. [Google Scholar]

- Barranco, J.Á.Á. System and Method of Sign Language Interpretation. Spanish Patent 201,130,193, 14 February 2011. [Google Scholar]

- Sagawa, H.; Takeuchi, M. A method for recognizing a sequence of sign language words represented in a Japanese sign language sentence. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition, Grenoble, France, 28–30 March 2000. [Google Scholar]

- Ibarguren, A.; Maurtua, I.; Sierra, B. Layered architecture for real time sign recognition: Hand gesture and movement. Artif. Intelligence Based Eng. Appl. 2010, 23, 1216–1228. [Google Scholar] [CrossRef]

- Bajpai, D.; Porov, U.; Srivastav, G.; Sachan, N. Two Way Wireless Data Communication and American Sign Language Translator Glove for Images Text and Speech Display on Mobile Phone. In Proceedings of the 2015 Fifth International Conference on Communication Systems and Network Technologies (CSNT), Gwalior, India, 4–6 April 2015. [Google Scholar]

- Lei, L.; Dashun, Q. Design of data-glove and Chinese sign language recognition system based on ARM9. In Proceedings of the 2015 12th IEEE International Conference on Electronic Measurement & Instruments (ICEMI), Qingdao, China, 16–18 July 2015. [Google Scholar]

- Cocchia, A. Smart and digital city: A Systematic Literature Review. In Smart City; Springer: Berlin/Heidelberg, Germany, 2014. [Google Scholar]

- Vijay, P.; Suhas, N.; Chandrashekhar, C.; Dhananjay, D. Recent developments in sign language recognition: A review. Int. J. Adv. Comput. Eng. Commun. Technol. 2012, 1, 21–26. [Google Scholar]

- Dipietro, L.; Sabatini, A.; Dario, P. A survey of glove-based systems and their applications. IEEE Trans. Syst. Man Cybern. 2008, 38, 461–482. [Google Scholar] [CrossRef]

- Khan, S.; Gupta, G.; Bailey, D.; Demidenko, S.; Messom, C. Sign language analysis and recognition: A preliminary investigation. In Proceedings of the 24th International Conference Image and Vision Computing New Zealand, Wellington, New Zealand, 23–25 November 2009. [Google Scholar]

- Verma, S.K. HANDTALK: Interpreter for the Differently Abled: A Review. IJIRCT 2016, 1, 4. [Google Scholar]

- Shriwas, N.V. A Preview Paper on Hand Talk Glove. Int. J. Res. Appl. Sci. Eng. Technol. 2015. Available online: https://www.ijraset.com/fileserve.php?FID=2179 (accessed on 21 December 2021).

- Al-Ahdal, M.; Nooritawati, M. Review in sign language recognition systems. In Proceedings of the 2012 IEEE Symposium on Computers & Informatics (ISCI), Penang, Malaysia, 18–20 March 2012. [Google Scholar]

- Anderson, R.; Wiryana, F.; Ariesta, M.; Kusuma, G. Sign Language Recognition Application Systems for Deaf-Mute People: A Review Based on Input-Process-Output. Procedia Comput. Sci. 2017, 116, 441–448. [Google Scholar]

- Pradhan, G.; Prabhakaran, B.; Li, C. Hand-gesture computing for the hearing and speech impaired. IEEE MultiMedia 2008, 15, 20–27. [Google Scholar] [CrossRef] [Green Version]

- Bui, T.; Nguyen, L. Recognizing postures in Vietnamese sign language with MEMS accelerometers. IEEE J. Sens. 2007, 7, 707–712. [Google Scholar] [CrossRef]

- Sadek, M.; Mikhael, M.; Mansour, H. A new approach for designing a smart glove for Arabic Sign Language Recognition system based on the statistical analysis of the Sign Language. In Proceedings of the 2017 34th National Radio Science Conference (NRSC), Alexandria, Egypt, 13–16 March 2017. [Google Scholar]

- Khambaty, Y.; Quintana, R.; Shadaram, M.; Nehal, S.; Virk, M.; Ahmed, W.; Ahmedani, G. Cost effective portable system for sign language gesture recognition. In Proceedings of the 2008 IEEE International Conference on System of Systems Engineering, Monterey, CA, USA, 2–4 June 2008. [Google Scholar]

- Ahmed, S.; Islam, R.; Zishan, M.; Hasan, M.; Islam, M. Electronic speaking system for speech impaired people: Speak up. In Proceedings of the 2015 International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Dhaka, Bangladesh, 21–23 May 2015. [Google Scholar]

- Aguiar, S.; Erazo, A.; Romero, S.; Garcés, E.; Atiencia, V.; Figueroa, J. Development of a smart glove as a communication tool for people with hearing impairment and speech disorders. In Proceedings of the 2016 IEEE Ecuador Technical Chapters Meeting (ETCM), Guayaquil, Ecuador, 12–14 October 2016. [Google Scholar]

- Ani, A.; Rosli, A.; Baharudin, R.; Abbas, M.; Abdullah, M. Preliminary study of recognizing alphabet letter via hand gesture. In Proceedings of the 2014 International Conference on Computational Science and Technology (ICCST), Kota Kinabalu, Malaysia, 27–28 August 2014. [Google Scholar]

- Kamal, S.M.; Chen, Y.; Li, S.; Shi, X.; Zheng, J. Technical Approaches to Chinese Sign Language Processing: A Review. IEEE Access 2019, 7, 96926–96935. [Google Scholar] [CrossRef]

- Kudrinko, K.; Flavin, E.; Zhu, X.; Li, Q. Wearable Sensor-Based Sign Language Recognition: A Comprehensive Review. IEEE Rev. Biomed. Eng. 2021, 14, 82–97. [Google Scholar] [CrossRef] [PubMed]

| Entity | Attributes |
|---|---|
| Facial expression | Happy, angry, sad, excited, and wondering face with or without movements of the lips and head. |
| Orientation of hands | Up, down, inward, and flipped palm orientation. |
| Hand movement type | Forward, backward, left, and right hand movement. |
| Configuration of hands | Finger movement and bending with or without palm bending. |
| Hand articulation points | Finger, wrist, elbow, and shoulder joints. |
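
The entities in the table above can be read as the fields of one recorded sign. The sketch below is one possible encoding, not a structure taken from any reviewed system: the class names, the 0.0 to 1.0 bend scale, and the one-hot layout of `feature_vector` are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class PalmOrientation(Enum):
    UP = "up"
    DOWN = "down"
    INWARD = "inward"
    FLIPPED = "flipped"


class HandMovement(Enum):
    FORWARD = "forward"
    BACKWARD = "backward"
    LEFT = "left"
    RIGHT = "right"


@dataclass(frozen=True)
class SignObservation:
    """One observed sign, described by the entities listed in the table."""
    facial_expression: str
    palm_orientation: PalmOrientation
    hand_movement: HandMovement
    finger_bend: tuple  # assumed scale: 0.0 (straight) to 1.0 (fully bent)
    palm_bent: bool = False

    def feature_vector(self):
        """Flatten the hand-related attributes into numbers for a classifier."""
        orientation = [float(self.palm_orientation is o) for o in PalmOrientation]
        movement = [float(self.hand_movement is m) for m in HandMovement]
        return list(self.finger_bend) + orientation + movement + [float(self.palm_bent)]


obs = SignObservation("happy", PalmOrientation.UP, HandMovement.FORWARD,
                      (0.0, 1.0, 1.0, 1.0, 1.0))
vector = obs.feature_vector()
print(len(vector))
```

The facial expression is kept as free text here because the table lists it as an open set of moods; a real system would need its own encoding for it.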

| ML Approach | Advantages | Disadvantages |
|---|---|---|
| Supervised machine learning | Data classes are defined with labelled data, which makes it easier to learn and classify with more accurate results. | Requires human labelling of data, which prevents the system from operating fully automatically. Computationally expensive. Requires a dataset for training and testing. |
| Unsupervised machine learning | No labelling of data. No training data are required. Accurate results for new or unseen objects. | Can produce less accurate results because the data are not labelled. Does not provide details. |
| Deep learning | Reduces time-consuming feature-engineering work and extracts information more accurately, including patterns hidden from the human eye. | Requires a large dataset to train and is computationally much more expensive. |

| Sr. No | Algorithm | Algorithmic Variants |
|---|---|---|
| 1 | Decision Tree | Simple Tree, Medium Tree, Complex Tree |
| 2 | Discriminant Analysis | Linear Discriminant, Quadratic Discriminant |
| 3 | Support Vector Machine (SVM) | Linear SVM, Quadratic SVM, Cubic SVM, Fine Gaussian SVM, Medium Gaussian SVM, Coarse Gaussian SVM |
| 4 | K-Nearest Neighbors (KNN) | Fine KNN, Medium KNN, Coarse KNN, Cosine KNN, Cubic KNN, Weighted KNN |
| 5 | Ensemble | Ensemble Boosted Trees, Ensemble Bagged Trees, Ensemble Subspace Discriminant, Ensemble Subspace KNN, Ensemble RUSBoosted Trees |
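
The table does not define how the KNN variants in row 4 differ. A common reading, assumed here rather than taken from the source, is that they vary the neighbour count, the distance metric, and whether votes are weighted by distance. The following pure-Python sketch exposes those three knobs; the function names and the two-feature toy data are hypothetical.

```python
import math
from collections import defaultdict


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0


def knn_predict(train, query, k=1, distance=euclidean, weighted=False):
    """Label the query by a (optionally distance-weighted) vote of its k nearest samples."""
    neighbours = sorted(train, key=lambda s: distance(s[0], query))[:k]
    votes = defaultdict(float)
    for features, label in neighbours:
        d = distance(features, query)
        # Weighted voting lets closer neighbours count for more.
        votes[label] += 1.0 / (d + 1e-9) if weighted else 1.0
    return max(votes, key=votes.get)


# Hypothetical two-feature samples, e.g. normalised bend values.
train = [
    ([0.1, 0.2], "fist"), ([0.2, 0.1], "fist"), ([0.15, 0.15], "fist"),
    ([0.9, 0.8], "open"), ([0.8, 0.9], "open"), ([0.85, 0.85], "open"),
]
query = [0.2, 0.2]

few_neighbours = knn_predict(train, query, k=1)
weighted_vote = knn_predict(train, query, k=5, weighted=True)
print(few_neighbours, weighted_vote)
```

Passing `distance=cosine_distance` compares only the direction of the feature vectors, so two samples with the same ratio between features but different magnitudes look identical to it; whether that is desirable depends on how the sensor values are normalised.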

| Article Reference | Article Publisher | Impact Factor | Citations |
|---|---|---|---|
| [71] | IEEE Access | | 45 |
| [68] | IEEE | | 7 |
| [65] | IEEE | | 3 |
| [43] | IEEE Access | | 2 |
| [67] | IEEE Access | | 15 |
| [34] | IEEE Access | | 18 |
| [74] | IEEE Access | | 21 |
| [60] | IEEE | | |
| [37] | IEEE Access | | 2 |
| [107] | IEEE | | 9 |
| [61] | IEEE Access | | 34 |
| [45] | IEEE Access | | 5 |
| [46] | IEEE | | 8 |
| [66] | IEEE | | 19 |
| [64] | IEEE | | 37 |
| [42] | IEEE | | 44 |
| [75] | IEEE | | 12 |
| [73] | IEEE | | 3 |
| [41] | Advanced Robotics Journal | | 1 |
| [38] | IEEE Transaction | | 65 |
| [39] | National Library of Medicine | | |
| [35] | Journal of Informatics | | 22 |
| [107] | IEEE | | 9 |
| [37] | IEEE Access | | 478 |
| [35] | Informatics | 2.90 | 281 |
| [26] | Procedia Engineering | 3.78 | 112 |
| [58] | International Midwest Symposium on Circuits and Systems | | 83 |
| [65] | IEEE Access | | 60 |
| [56] | International Conference on Engineering and Technology | | 51 |
| [32] | Journal of King Saud University of Computing and Information Sciences | 5.62 | 43 |
| [20] | Texas Instruments India Educators’ Conference | | 56 |
| [28] | Computer Science applications | 2.90 | 33 |
| [21] | IEEE | | 30 |
| [29] | Global Summit on Computer & Information Technology | | 30 |
| [51] | Experimental System Applications | 2.87 | 25 |
| [50] | International Journal of Computing | | 22 |
| [52] | International Journal of Computer Science and Engineering | | 21 |
| [30] | IEEE | | 20 |
| [47] | Texas Instruments India Educators’ Conference | | 20 |
| [38] | IEEE Transaction | | 18 |
| [44] | IEEE Access | | 17 |
| [63] | Conference Proceedings Paul Cunningham and Miriam Cunningham (Eds) | | 17 |
| [12] | IEEE | | 16 |
| [16] | IEEE | | 16 |
| [54] | International Conference on Advanced Information Networking and Applications Workshops | | 16 |
| [3] | International Journal of Advanced Research in Electronics and Communication Engineering | 0.77 | 14 |
| [55] | Computer Vision Images | | 14 |
| [23] | IJECT | | 13 |
| [25] | IEEE | | 13 |
| [31] | International Conference on Neural Information Processing | | 13 |
| [61] | IEEE Access | | 13 |
| [9] | International Journal of Engineering Sciences & Management Research | | 11 |
| [45] | IEEE Access | | 11 |
| [57] | Procedia Computer Sciences | | 11 |
| [13] | International Symposium on Scientific Computing, Computer Arithmetic and Validated Numerics | | 10 |
| [19] | International Conference on Control, Automation and Systems | | 10 |
| [24] | 2011 International Conference on Body Sensor Networks | | 10 |
| [44] | IEEE Access | | 9 |
| [11] | IEEE Transaction | | 9 |
| [1] | International Conference on Contemporary Computing | | 8 |
| [6] | Procedia Computer Science Applications | 0.29 | 8 |
| [8] | International Journal of Scientific & Engineering Research | | 8 |
| [64] | IEEE Transactions | | 8 |
| [4] | International Colloquium on Signal Processing & Its Applications | | 7 |
| [5] | International Journal of Computing Applications | | 7 |
| [18] | International Conference on Advances in Electronics, Computers and Communications | | 7 |
| [40] | IEEE Sensors Journal | | 7 |
| [7] | International Journal of Innovative Research in Computer and Communication Engineering | | 6 |
| [14] | International Conference on Wearable and Implantable Body Sensor Networks | | 6 |
| [15] | International Conference on Control, Decision and Information Technologies | | 6 |
| [27] | International Journal of Information Technology | 0.80 | 6 |
| [33] | International Conference on Electrical Engineering, Computing Science and Automatic Control | | 6 |
| [59] | Pattern Recognition | 3.60 | 6 |
| [60] | IEEE Access | | 6 |
| [12] | IEEE | | 5 |
| [34] | IEEE Access | | 5 |
| [66] | IEEE Signal Processing Letters | | 5 |
| [67] | IEEE Access | | 5 |
| [22] | IEEE | | 4 |
| [43] | IEEE | | 4 |
| [46] | IEEE | | 4 |
| [49] | IEEE | | 4 |
| [36] | IEEE Sensor Journal | | 3 |
| [41] | Advanced Robotics | | 3 |
| [42] | Sensors Journal | | 2 |
| [48] | International Conference on Electronic Devices, Systems and Applications | | 2 |
| [53] | Software Computing Applications | | 1 |
| [62] | IEEE Sensors Journal | | 1 |

| Ref | Classification and Recognition Algorithms | Results (Accuracy/Efficiency/Outcome) |
|---|---|---|
| [71] | 3-dimensional residual ConvNet and bi-directional LSTM networks | 89.8% on DEVISIGN_D dataset and 86.9% on SLR dataset |
| [34] | Convolutional self-organizing map | 89.5% on DeepLabv3+ hand semantic segmentation |
| [37] | Support vector machine (SVM) | 91.93% recognition accuracy |
| [61] | PCA and SVM | 88.7% average accuracy with a leave-one-out strategy |
| [45] | Aligned Random Sampling in Segments | Recognition accuracy of 96.7% on CSL dataset and 63.78% on IsoGD dataset |
| [46] | Gradient Boost Tree with deep NN | Recognition accuracy over 98.00% |
| [42] | LSTM model | 89.5% on isolated sign words and 72.3% on signed sentences |
| [41] | LDA, KNN, and SVM | 98% average accuracy on ASL |
| [38] | Wrist-based gesture recognition system | 92.66% on air gestures and 88.8% on surface gestures |
| [35] | Local fusion algorithm on motion sensor | F1 score of 91%, mean accuracy of 92%, and 93% gyro-to-accelerometer ratio on LSF data |
| [57] | Orientation Histogram and Statistical (COHST) features and wavelet-feature-based neural network | Recognition rate of 98.17% |
| [58] | Wavelet transform and neural network | 94.06% sensitivity of gesture recognition |
| [51] | Hough transform and neural network | 92.3% recognition accuracy on ASL |
| [52] | B-spline approximation and support vector machines (SVM) | 90% for alphabets and 92% for numbers (91% on average) |
| [53] | Hybrid pulse-coupled neural network (PCNN), non-deterministic finite automaton (NFA), and “best-match” | 96% on pose-invariant restrictions |
| [54] | Local binary patterns (LBP), principal component analysis (PCA), and hidden Markov model (HMM) | 93% recognition accuracy |
| [56] | Local binary patterns, principal component analysis, and hidden Markov model | 99.97% signer-independent recognition accuracy |
| [15] | Matching technique | Voice- and display-based output |
| [16] | Template matching | Computer-display-based output |
| [17] | HMM-based model | Text-to-speech-based outcome |
| [18] | Matching technique | Computer-display- and voice-based output |
| [83] | HMM and parallel HMM | 99.75% recognition accuracy |
| [19] | Matching algorithm | 92% |
| [25] | Statistical template matching with LabVIEW interface | 95.4% as confidence intervals |
| [27] | Statistical template matching | 69.1% accuracy with LMS and 85% when excluding ambiguous signs |
| [28] | Template matching | 89% when translating all gestures, 93.33% for numbers, 78.33% for gesture recognition, and 95% overall average accuracy |
| [30] | Selection-elimination embedded intelligent algorithm | System efficiency enhanced from 83.1% to 94.5% |
| [84] | Artificial neural networks (ANN) | 92% and 95% accuracy for global and local feature extraction |
| [31] | ANN | 89% recognition accuracy for sentences and punctuation |
| [32] | Hand segmentation, tracking, feature extraction, and classification for skin blob tracking | 97% recognition rate for signer-independent platform |
| [33] | NN-based cross-validation method | 96.1% |
| [89] | Modified K-Nearest Neighbor (MKNN) | 98.9% |
| [90] | Decision tree and multi-stream hidden Markov model | 72.5% |
| [8] | Motion-sensor-based matching system | Voice- and display-based output |
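
Several rows above report a recognition accuracy for template-matching classifiers. As a minimal sketch of how such a figure could be obtained, the Python snippet below matches each test sample to its nearest stored template and counts the fraction of correct labels. The function names, the Euclidean distance, and the five-value flex-sensor readings are illustrative assumptions and are not taken from any of the reviewed systems.

```python
import math

def nearest_template(sample, templates):
    """Return the label whose stored template is closest (Euclidean) to the sample."""
    return min(templates, key=lambda label: math.dist(sample, templates[label]))

def recognition_accuracy(samples, labels, templates):
    """Fraction of samples whose nearest template carries the true label."""
    correct = sum(
        nearest_template(sample, templates) == label
        for sample, label in zip(samples, labels)
    )
    return correct / len(samples)

# Hypothetical templates: one normalised flex-sensor reading per finger (0..1).
templates = {
    "A": [0.9, 0.9, 0.9, 0.9, 0.2],
    "B": [0.1, 0.1, 0.1, 0.1, 0.8],
    "L": [0.1, 0.9, 0.9, 0.9, 0.1],
}

# Hypothetical test readings with their true labels.
test_samples = [
    [0.85, 0.95, 0.9, 0.8, 0.25],  # signed as "A"
    [0.15, 0.05, 0.2, 0.1, 0.75],  # signed as "B"
    [0.2, 0.8, 0.85, 0.9, 0.15],   # signed as "L"
    [0.6, 0.9, 0.9, 0.9, 0.15],    # signed as "L", but closer to the "A" template
]
test_labels = ["A", "B", "L", "L"]

accuracy = recognition_accuracy(test_samples, test_labels, templates)
print(f"Recognition accuracy: {accuracy:.2%}")
```

The last sample is deliberately ambiguous so that the sketch yields an accuracy below 100%. Excluding such samples from the test set would raise the reported figure, which is one reason accuracies measured under different protocols are hard to compare directly.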

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Amin, M.S.; Rizvi, S.T.H.; Hossain, M.M. A Comparative Review on Applications of Different Sensors for Sign Language Recognition. J. Imaging 2022, 8, 98. https://doi.org/10.3390/jimaging8040098
