LightBot: A Multi-Light Position Robotic Acquisition System for Adaptive Capturing of Cultural Heritage Surfaces

Abstract

1. Introduction

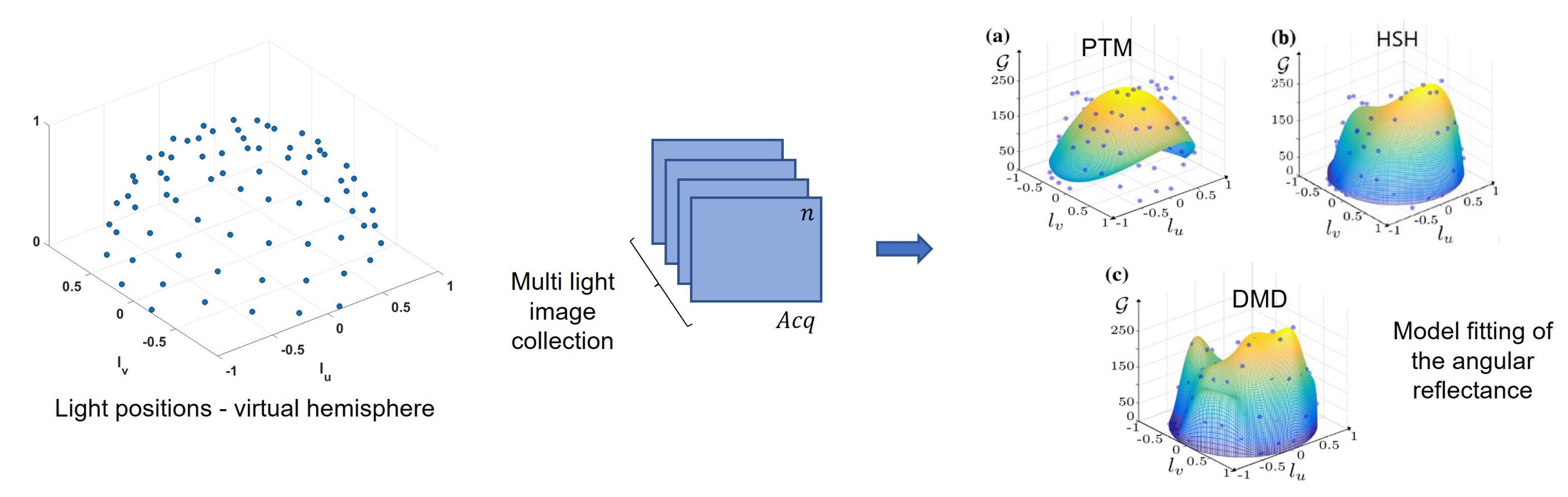

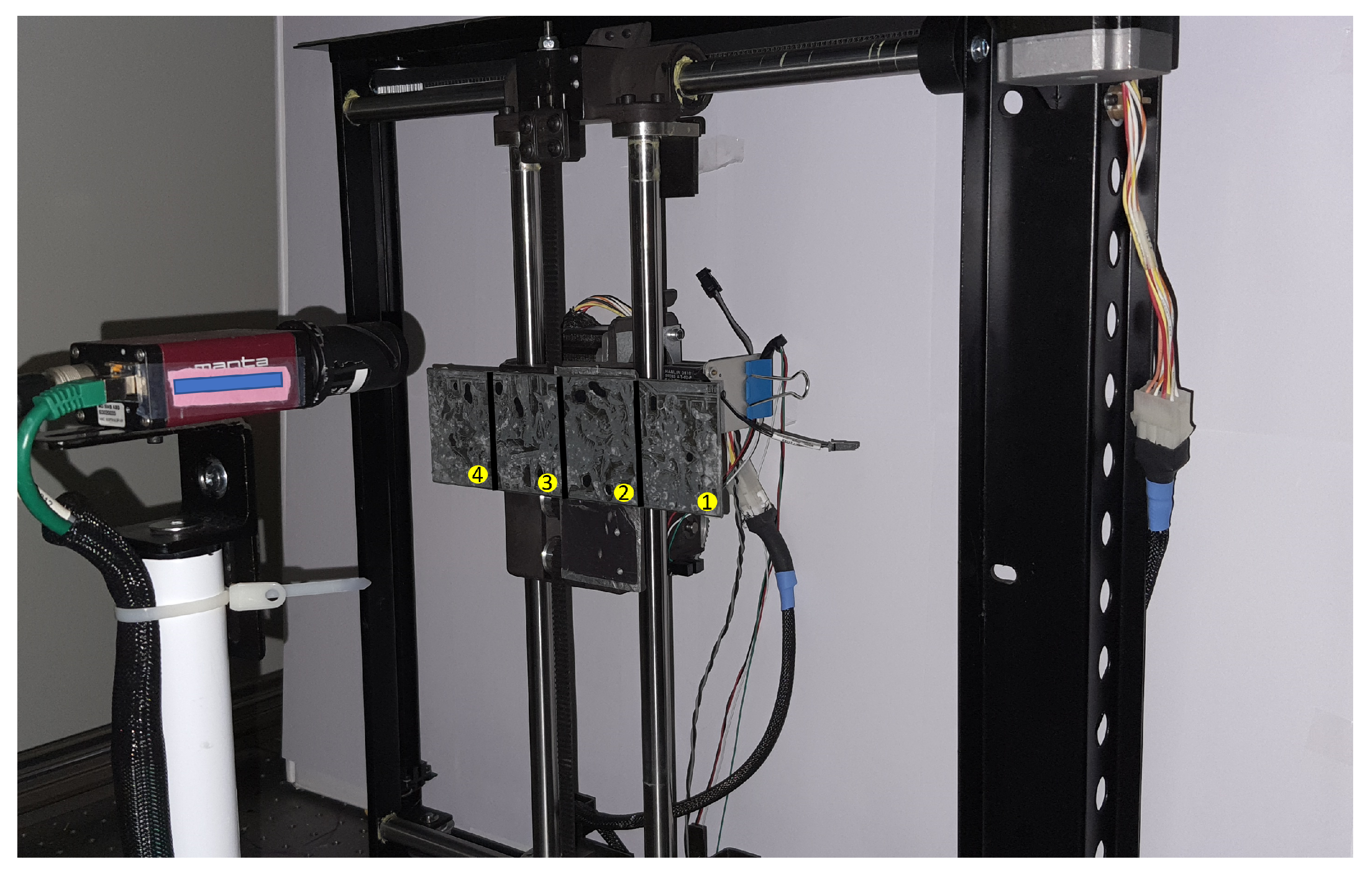

2. Proposed System

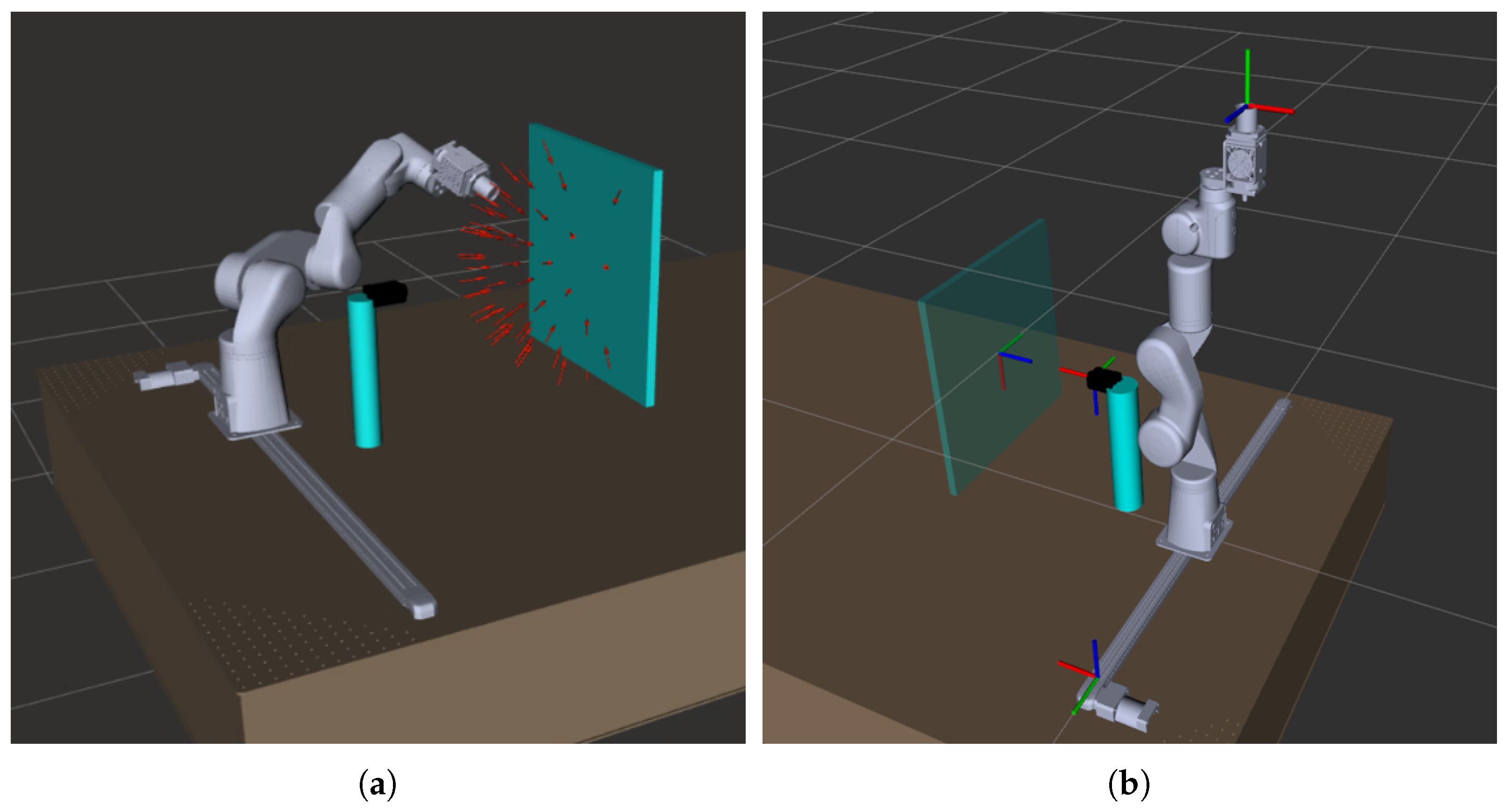

2.1. Engineering Design

2.2. System Architecture

2.3. Motion Planning

2.4. System Calibration

3. Applications

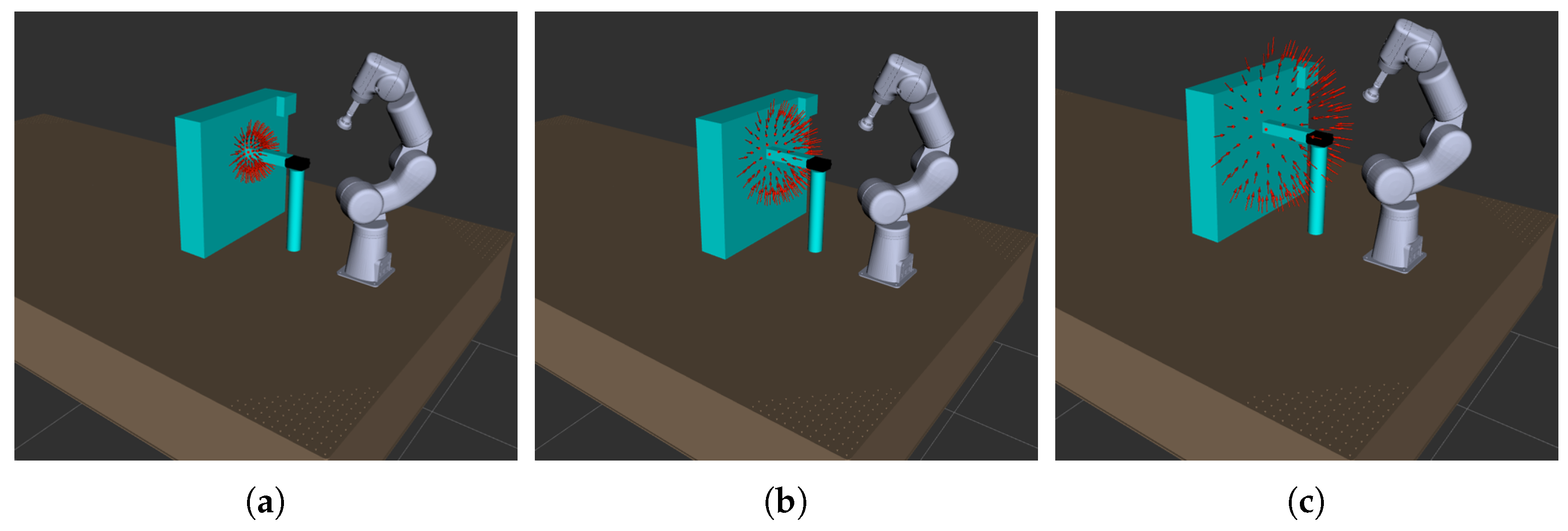

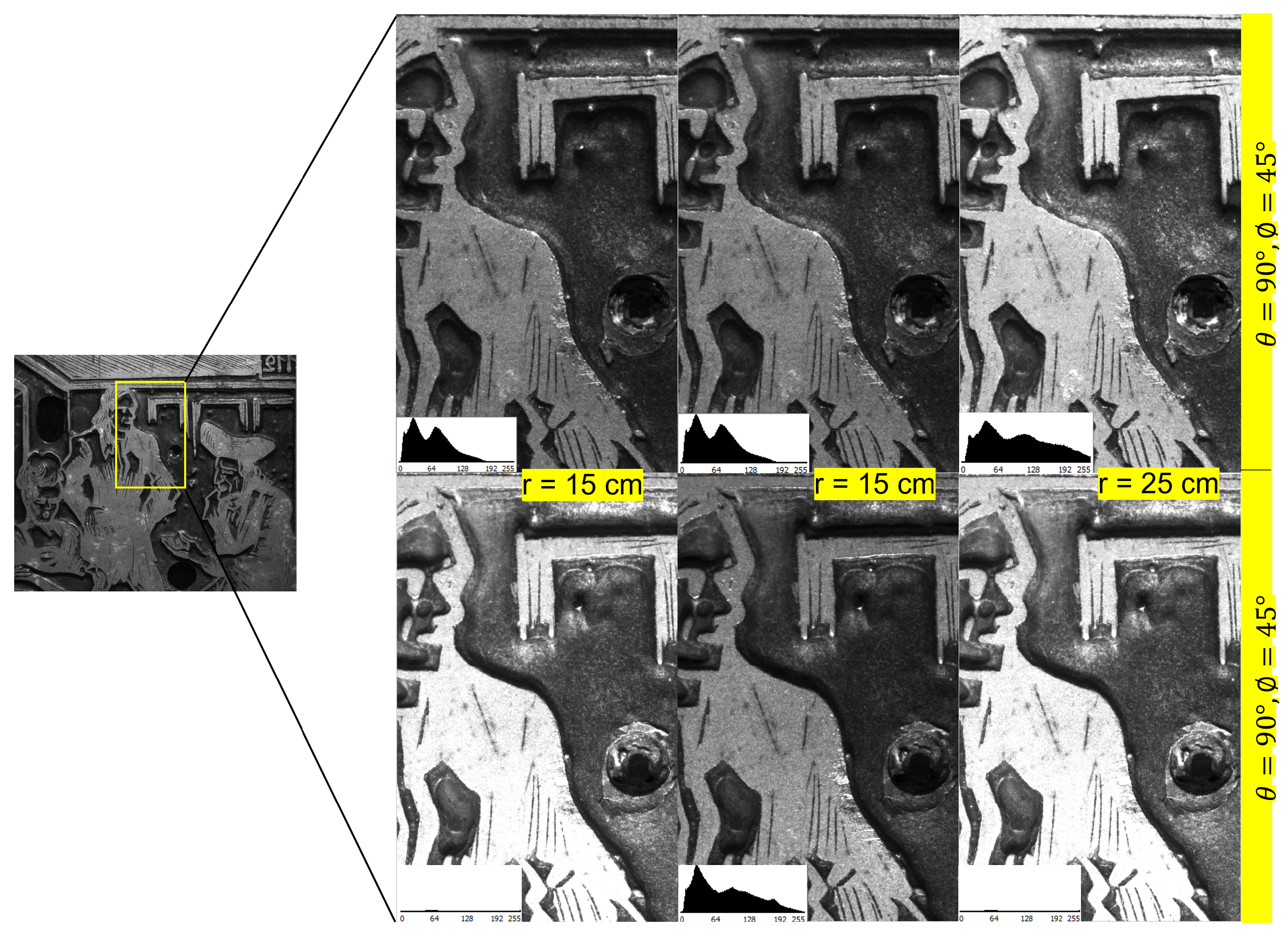

3.1. Surface Adaptive Virtual Dome with Adjustable Radius

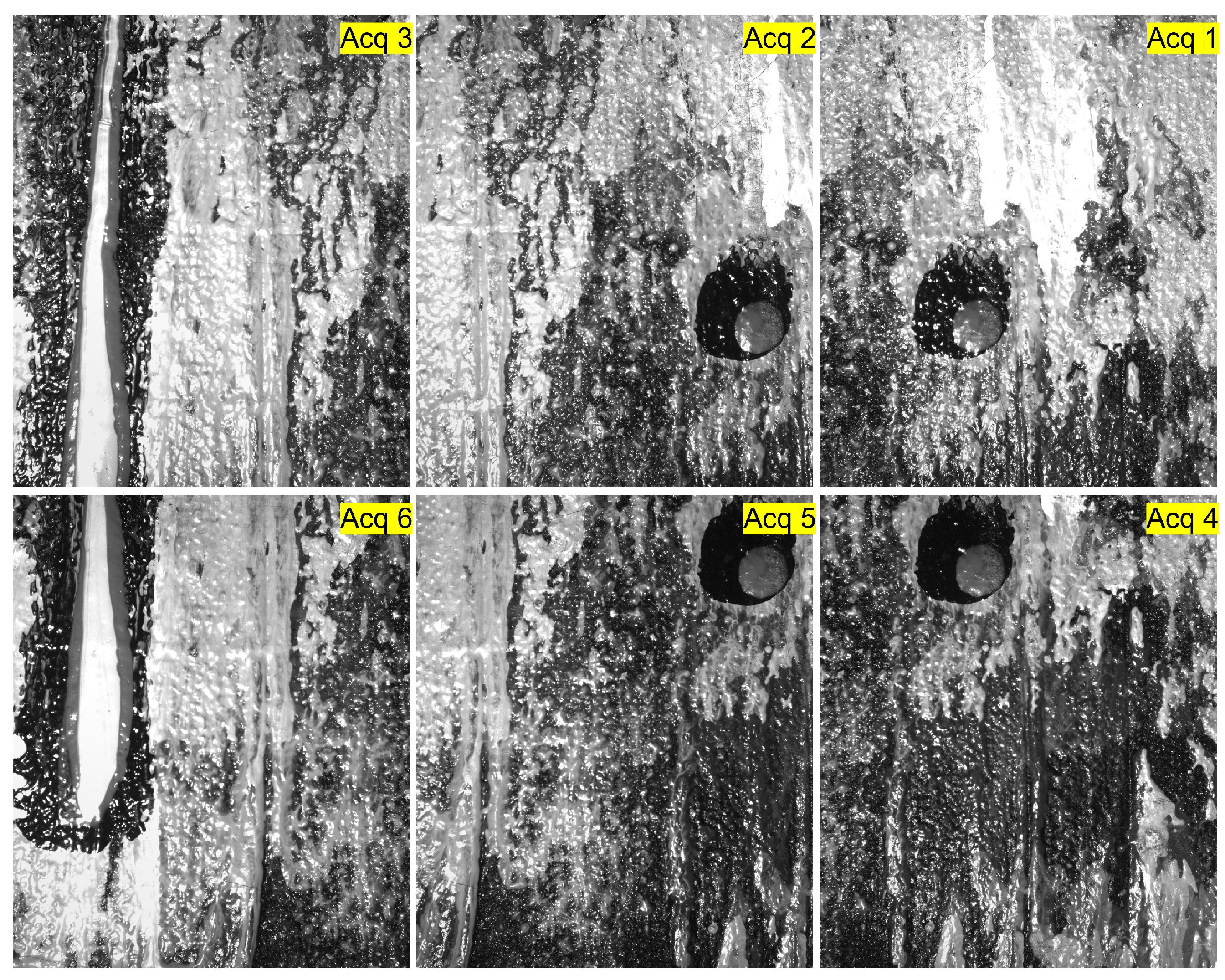

3.2. RTI of Large Surfaces Using Data Stitching Methods

3.3. Batch Acquisition

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RTI | Reflectance Transformation Imaging |
| CH | Cultural Heritage |
| ROS | Robot Operating System |
| BRDF | Bidirectional Reflectance Distribution Function |
| H-RTI | Highlight Reflectance Transformation Imaging |
| LP | Light Positions |
| NBLP | Next Best Light Position |
| HD-RTI | High Dynamic range Reflectance Transformation Imaging |
| ROI | Region Of Interest |
| API | Application Programming Interface |


| Acquisition Time | Number of Interventions | |
|---|---|---|
| Conventional systems | 4 | |
| LightBot | 1 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luxman, R.; Castro, Y.E.; Chatoux, H.; Nurit, M.; Siatou, A.; Le Goïc, G.; Brambilla, L.; Degrigny, C.; Marzani, F.; Mansouri, A. LightBot: A Multi-Light Position Robotic Acquisition System for Adaptive Capturing of Cultural Heritage Surfaces. J. Imaging 2022, 8, 134. https://doi.org/10.3390/jimaging8050134
