Multimodal Registration for Image-Guided EBUS Bronchoscopy

Abstract

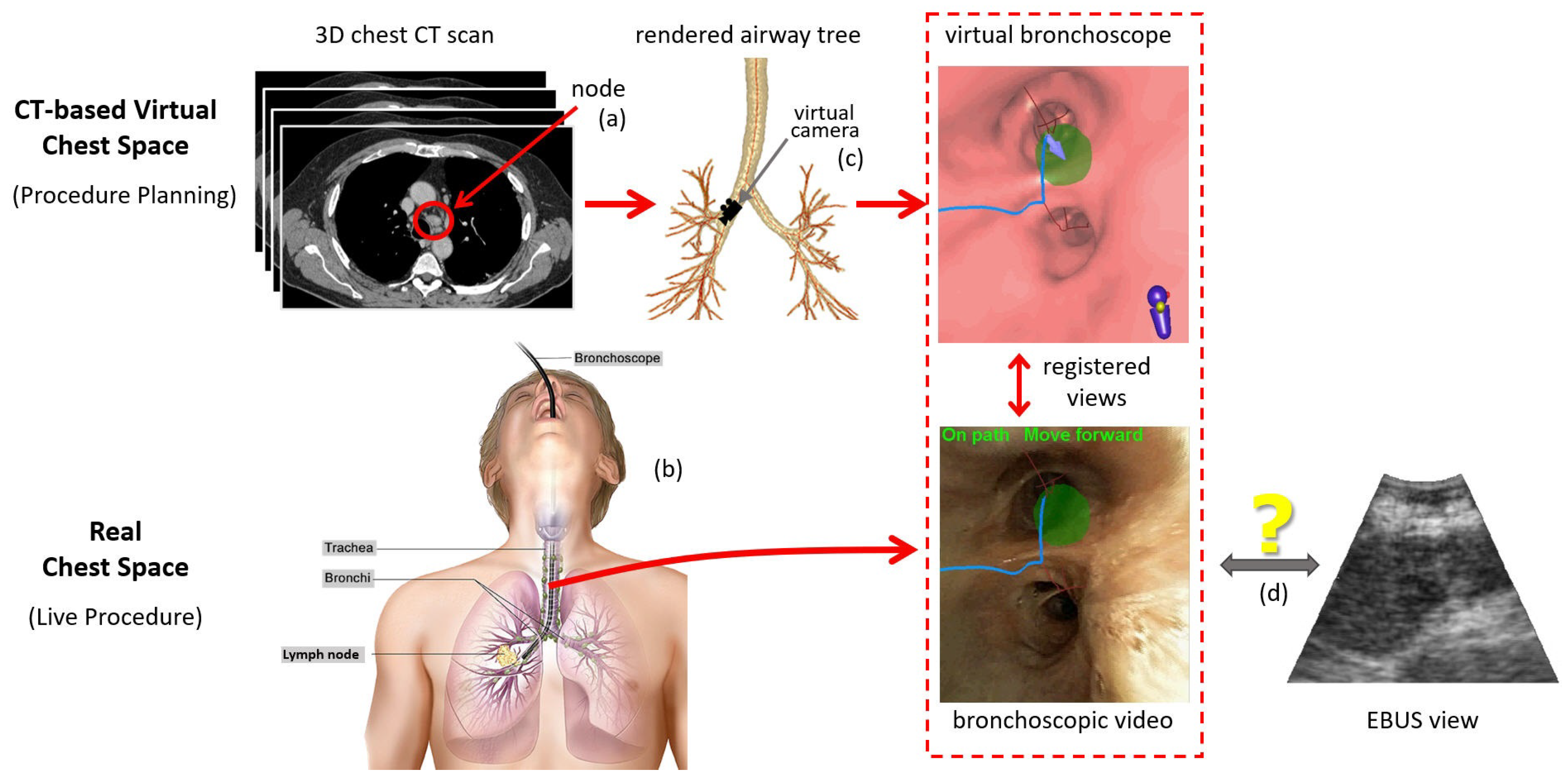

1. Introduction

2. Methods

2.1. Overview

- Bronchoscope tip starts at point with axis .

- Videobronchoscope camera axis = , offset by an angle from .

- EBUS probe axis ⊥ at a distance 6 mm from the tip start ; i.e., mm, where is the origin of .

- 2D EBUS fan-shaped scan plane sweep = 60° with range = 4 cm.
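The scan-plane geometry in the last item can be illustrated with a small membership test. This is only a sketch under an idealized assumption that is not taken from the text: the fan is modeled as a circular sector with its apex at the origin of the scan plane, symmetric about the +y axis. The 60° sweep and 4 cm range are the stated values; the function name `in_scan_fan` and the coordinate convention are hypothetical.

```python
import math

SWEEP_DEG = 60.0  # fan sweep stated in the text
RANGE_MM = 40.0   # 4 cm range stated in the text


def in_scan_fan(x_mm, y_mm, sweep_deg=SWEEP_DEG, range_mm=RANGE_MM):
    """Return True if the 2D point (x, y) lies inside the idealized fan.

    Assumption (not from the source): the fan apex is at the origin and
    the fan is symmetric about the +y axis of the scan plane.
    """
    r = math.hypot(x_mm, y_mm)
    if r == 0.0:
        return True  # the apex itself is treated as inside
    if r > range_mm:
        return False  # beyond the imaging range
    # Angle measured from the +y axis, positive toward +x.
    angle_deg = math.degrees(math.atan2(x_mm, y_mm))
    return abs(angle_deg) <= sweep_deg / 2.0
```

A point 20 mm straight ahead of the apex is inside the fan, whereas a point at 45° off-axis or at 41 mm depth is not.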

2.2. Virtual-to-Real EBUS Registration

Algorithm 1: Multimodal CT-EBUS Registration Algorithm.

1. Compute dot product , where and .

2. If , is part of the correct airway and is kept as the updated vertex.

3. Otherwise, is from the wrong branch. Find the closest airway centerline point to and search for a new surface voxel candidate based on the HU threshold along the direction from to .
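The fallback in step 3 can be sketched as follows. This is a minimal illustration, not the authors' implementation: it covers only the closest-centerline-point lookup and the search along a ray, and it assumes that the search starts at the centerline point and moves toward the rejected vertex. The function names, the voxel-index coordinate convention, the step size, and the threshold value of −600 HU are all hypothetical placeholders.

```python
import numpy as np


def closest_centerline_point(vertex, centerline):
    """Return the centerline point nearest to `vertex` (Euclidean distance)."""
    centerline = np.asarray(centerline, dtype=float)
    dists = np.linalg.norm(centerline - np.asarray(vertex, dtype=float), axis=1)
    return centerline[int(np.argmin(dists))]


def search_surface_voxel(volume_hu, start, target, hu_threshold=-600.0, step=0.5):
    """March from `start` toward `target` in voxel-index coordinates.

    Returns the index of the first voxel whose HU value reaches
    `hu_threshold`, or None if no such voxel is met before `target`
    or before leaving the volume. The threshold is an assumed value.
    """
    start = np.asarray(start, dtype=float)
    target = np.asarray(target, dtype=float)
    length = float(np.linalg.norm(target - start))
    if length == 0.0:
        return None
    direction = (target - start) / length
    n_steps = int(length / step) + 1
    for i in range(n_steps + 1):
        # Clamp the travelled distance so the march ends exactly at target.
        p = start + min(i * step, length) * direction
        idx = tuple(int(v) for v in np.round(p))
        if any(k < 0 or k >= s for k, s in zip(idx, volume_hu.shape)):
            break  # left the CT volume
        if volume_hu[idx] >= hu_threshold:
            return idx  # first voxel at or above the threshold
    return None
```

Starting inside the lumen means the first voxel that crosses the threshold is the wall nearest to the centerline, which is what keeps the candidate on the intended branch in this sketch.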

2.3. Implementation

3. Results

3.1. CT-EBUS Registration Study

1. Position difference , which measures the Euclidean distance between and :

2. Direction error , which gives the angle between and :

3. Needle difference , which indicates the distance between two extended needle tips at and :
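The three metrics above can be computed as in the sketch below, which follows the verbal definitions only. It assumes each pose is given as a 3D position plus a direction vector, and that each needle tip is obtained by extending a fixed length along the pose direction. The function names and the default needle length of 20 mm are hypothetical and not taken from the text.

```python
import numpy as np


def _unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def position_difference(p_a, p_b):
    """Euclidean distance between two positions (same units as the input)."""
    return float(np.linalg.norm(np.asarray(p_a, float) - np.asarray(p_b, float)))


def direction_error_deg(d_a, d_b):
    """Angle in degrees between two direction vectors."""
    cos_angle = float(np.dot(_unit(d_a), _unit(d_b)))
    # Clip guards against round-off slightly outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def needle_difference(p_a, d_a, p_b, d_b, needle_len_mm=20.0):
    """Distance between two needle tips extended along each pose direction.

    `needle_len_mm` is an assumed extension length, not a source value.
    """
    tip_a = np.asarray(p_a, float) + needle_len_mm * _unit(d_a)
    tip_b = np.asarray(p_b, float) + needle_len_mm * _unit(d_b)
    return float(np.linalg.norm(tip_a - tip_b))
```

Because the needle tips are extended along the pose directions, the needle difference combines positional and angular disagreement into a single distance.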

1. Positional parameters , , and , range [−10 mm, 10 mm], step size = 2.5 mm.

2. Angle parameters , , and , range [−100°, 100°], step size = 25°.

3. Iteration parameter T from 5 to 25, step size 5.
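The parameter ranges listed above translate into the following test grids. This is a small sketch for checking the number of settings per parameter; the variable names are hypothetical, and how the individual parameters are combined during the study is not assumed here.

```python
import numpy as np

# Positional offsets: [-10 mm, 10 mm] in 2.5 mm steps -> 9 values.
position_offsets_mm = np.linspace(-10.0, 10.0, 9)

# Angular offsets: [-100 deg, 100 deg] in 25 deg steps -> 9 values.
angle_offsets_deg = np.linspace(-100.0, 100.0, 9)

# Iteration counts T: 5 to 25 in steps of 5 -> 5 values.
iteration_counts = list(range(5, 26, 5))

if __name__ == "__main__":
    print(position_offsets_mm.tolist())
    print(angle_offsets_deg.tolist())
    print(iteration_counts)
```

Using `np.linspace` with an explicit count avoids the floating-point endpoint ambiguity that `np.arange` can show with fractional steps.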

3.2. Image-Guided EBUS Bronchoscopy System

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Metric | Mean ± Std. Dev. | [Low, High] |
|---|---|---|
| Position difference (mm) | 2.2 mm ± 2.3 mm | [0.2 mm, 11.8 mm] |
| Needle difference (mm) | 4.3 mm ± 3.0 mm | [1.1 mm, 11.7 mm] |
| Direction error (°) | 11.8 ± 8.8 | [0.4, 41.3] |

| Positional parameter (mm) | Position difference (mm), Mean ± Std. Dev. | [Low, High] | Needle difference (mm), Mean ± Std. Dev. | [Low, High] | Direction error (°), Mean ± Std. Dev. | [Low, High] |
|---|---|---|---|---|---|---|
| −10.0 | 3.7 ± 3.4 | [1.1, 11.7] | 7.3 ± 5.1 | [2.1, 14.6] | 18.1 ± 11.1 | [4.6, 38.3] |
| −7.5 | 4.3 ± 3.3 | [1.8, 12.2] | 8.4 ± 5.3 | [2.5, 18.7] | 21.2 ± 15.2 | [6, 55.9] |
| −5.0 | 4.5 ± 3.5 | [1.3, 12.2] | 6.4 ± 4 | [1.5, 11.4] | 18.9 ± 12.7 | [5.9, 45.8] |
| −2.5 | 4.6 ± 3.1 | [1, 10.2] | 7.8 ± 4 | [2.1, 14.4] | 22.5 ± 14.5 | [6.2, 46.5] |
| 0.0 | 3.7 ± 3.2 | [1.4, 10.5] | 7.8 ± 3.9 | [2.7, 14.4] | 22.8 ± 12.9 | [8.8, 46.2] |
| 2.5 | 4 ± 2.8 | [0.9, 9.4] | 8.5 ± 6.7 | [1.4, 20.9] | 25.9 ± 19.4 | [5.7, 61.5] |
| 5.0 | 3.8 ± 4.5 | [0.6, 14.5] | 6.4 ± 4.8 | [2, 14.9] | 19.3 ± 12.1 | [5.9, 41.8] |
| 7.5 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] |
| 10.0 | 3.8 ± 3.9 | [1.1, 12] | 5.7 ± 4.1 | [1.6, 11.7] | 16 ± 9.8 | [5.5, 30.3] |

| Angle parameter (°) | Position difference (mm), Mean ± Std. Dev. | [Low, High] | Needle difference (mm), Mean ± Std. Dev. | [Low, High] | Direction error (°), Mean ± Std. Dev. | [Low, High] |
|---|---|---|---|---|---|---|
| −100.0 | 6.6 ± 4.2 | [2.2, 13.4] | 9.0 ± 4.1 | [5.4, 14.5] | 25.0 ± 12.6 | [7.1, 41.2] |
| −75.0 | 4.8 ± 4.0 | [1.2, 11.5] | 8.6 ± 4.8 | [3.3, 16.2] | 24.2 ± 11.5 | [8.0, 40.4] |
| −50.0 | 5.0 ± 4.6 | [1.3, 14.9] | 6.7 ± 3.2 | [2.8, 12.3] | 17.3 ± 8.8 | [5.3, 28.2] |
| −25.0 | 3.6 ± 3.6 | [0.6, 11.2] | 8.2 ± 6.2 | [1.3, 20.1] | 23.3 ± 13.6 | [5.2, 50.8] |
| 0.0 | 4.4 ± 3.6 | [1, 11.8] | 8 ± 2.4 | [5.5, 12] | 26.3 ± 10.3 | [15.9, 47.9] |
| 25.0 | 3.5 ± 2.9 | [1, 9.9] | 7 ± 3.4 | [2.1, 13.4] | 20.1 ± 11 | [7.8, 36] |
| 50.0 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] |
| 75.0 | 3.1 ± 3.2 | [0.9, 10.4] | 6.1 ± 2.8 | [3.0, 11.5] | 16.5 ± 9.5 | [5.6, 29.4] |
| 100.0 | 3.6 ± 3.2 | [1.0, 11.0] | 5.0 ± 4.1 | [1.4, 10.5] | 19.0 ± 11.5 | [8.1, 43.7] |

| T | Position difference (mm), Mean ± Std. Dev. | [Low, High] | Needle difference (mm), Mean ± Std. Dev. | [Low, High] | Direction error (°), Mean ± Std. Dev. | [Low, High] | Time (s) |
|---|---|---|---|---|---|---|---|
| 5 | 3 ± 4.2 | [0.9, 13.2] | 5.9 ± 5.2 | [2, 17.7] | 17.3 ± 9.3 | [6.4, 36] | 1.3 |
| 10 | 2.8 ± 3.8 | [0.9, 11.8] | 5.3 ± 4.2 | [1.3, 11.7] | 13.6 ± 7.3 | [1.3, 21.9] | 2.6 |
| 15 | 2.8 ± 3.8 | [0.3, 11.8] | 4.8 ± 4.5 | [1.1, 11.7] | 10.8 ± 8.8 | [0.4, 22.9] | 3.4 |
| 20 | 3.2 ± 3.9 | [0.3, 11.8] | 4.4 ± 3.9 | [1.1, 11.7] | 9.6 ± 7.5 | [0.4, 20.5] | 5.0 |
| 25 | 2.7 ± 3.8 | [0.3, 11.8] | 4.2 ± 3.7 | [1.1, 11.7] | 9.8 ± 7.6 | [0.4, 20.5] | 8.0 |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zang, X.; Zhao, W.; Toth, J.; Bascom, R.; Higgins, W. Multimodal Registration for Image-Guided EBUS Bronchoscopy. J. Imaging 2022, 8, 189. https://doi.org/10.3390/jimaging8070189
