The Role of Artificial Intelligence in Echocardiography

Abstract

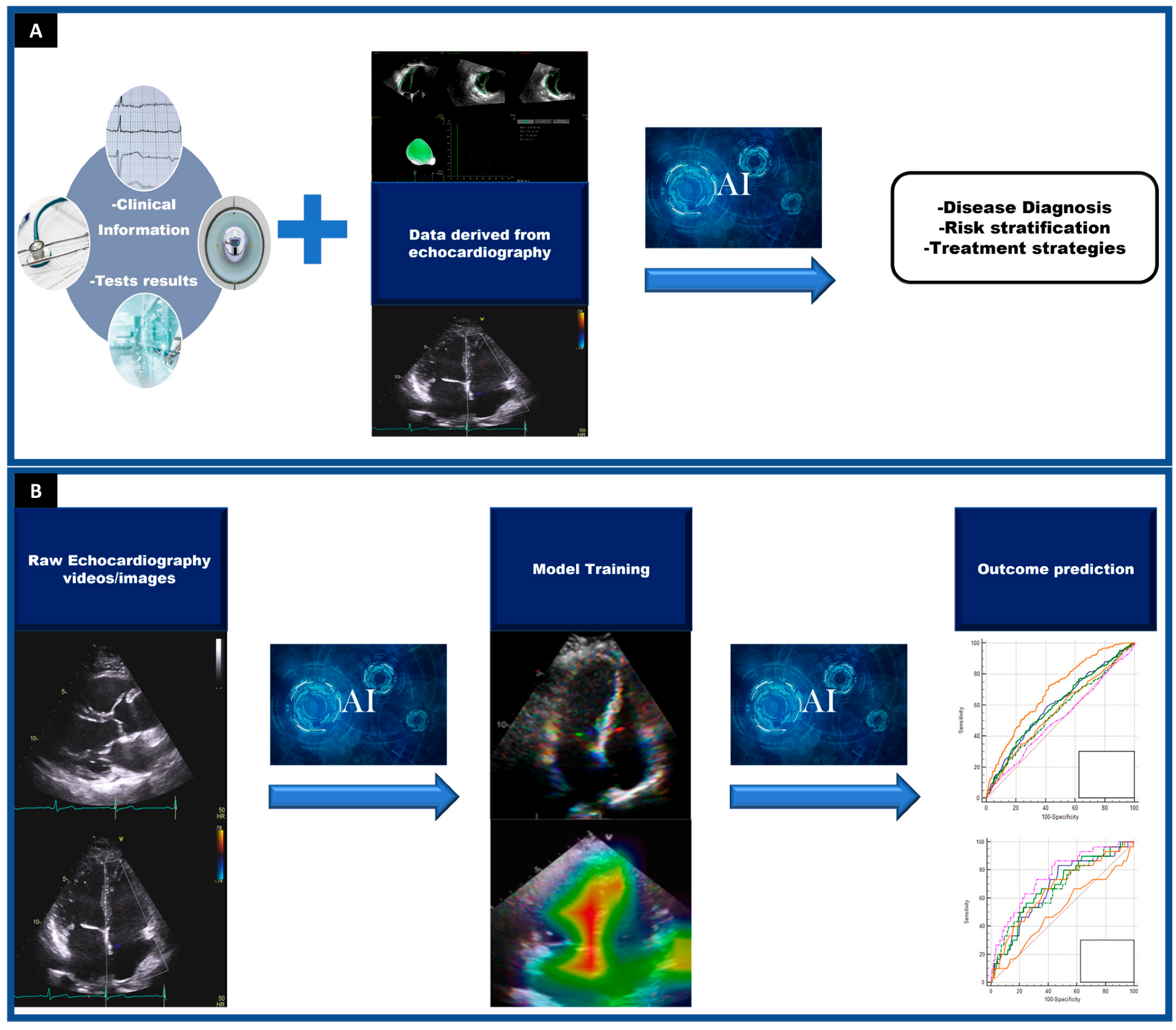

1. Introduction

2. Types of Machine Learning Algorithms

| Type of Machine Learning | Examples (methods or applications) |
|---|---|
| Supervised learning | Logistic regression, random forests |
| Unsupervised learning | Hierarchical clustering, tensor factorization |
| Reinforcement learning | Robotics and control systems |
| Deep learning | Image recognition (echocardiography, chest X-ray, computed tomography) |
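The first two rows of the table differ mainly in whether the training data carry labels. The following is a minimal Python sketch of that distinction on synthetic one-dimensional values (hypothetical numbers, not echocardiographic measurements). Logistic regression, a supervised method, is fitted by batch gradient descent on labeled pairs. Single-linkage agglomerative (hierarchical) clustering, an unsupervised method, groups unlabeled points. The function names, learning rate, epoch count, and data are illustrative assumptions. Real studies typically use established libraries and multivariate features.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(xs, ys, lr=0.5, epochs=2000):
    """Supervised learning: fit p(y=1 | x) = sigmoid(w*x + b) to labeled pairs
    by batch gradient descent on the mean log-loss (hypothetical helper)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Assign class 1 when the predicted probability reaches the threshold."""
    return 1 if sigmoid(w * x + b) >= threshold else 0


def agglomerative_clustering(points, k):
    """Unsupervised learning: single-linkage hierarchical clustering.
    Starts with one cluster per point and repeatedly merges the two closest
    clusters until k remain. No labels are used (hypothetical helper)."""
    clusters = [[p] for p in points]
    while len(clusters) > k:
        best = None  # (distance, i, j) of the closest pair found so far
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # Single linkage: distance between the nearest members.
                d = min(abs(a - c) for a in clusters[i] for c in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i].extend(clusters[j])
        del clusters[j]  # j > i, so index i is unaffected
    return [sorted(c) for c in clusters]


if __name__ == "__main__":
    # Synthetic, hypothetical 1-D feature values (not clinical data).
    xs = [-2.0, -1.5, -1.0, -0.5, 0.8, 1.2, 1.9, 2.4]
    ys = [0, 0, 0, 0, 1, 1, 1, 1]
    w, b = train_logistic_regression(xs, ys)
    print("supervised predictions:", [predict(w, b, x) for x in xs])

    unlabeled = [1.0, 1.2, 0.9, 5.0, 5.3, 9.8, 10.1]
    print("unsupervised clusters:", agglomerative_clustering(unlabeled, k=3))
```

The sketch omits feature scaling and regularization. Its naive merging loop runs in roughly cubic time, which is acceptable only for toy inputs.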

3. Automated Assessment of Myocardial Function and Valvular Disease

3.1. Diastolic Function

3.2. Global Longitudinal Strain

4. The Role of AI in Identifying Disease States

4.1. Valvular Heart Disease

4.2. Coronary Artery Disease

4.3. Etiology Determination of Increased Left Ventricular Wall Thickness

4.4. Cardiomyopathies

4.5. Intracardiac Masses

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Barry, T.; Farina, J.M.; Chao, C.-J.; Ayoub, C.; Jeong, J.; Patel, B.N.; Banerjee, I.; Arsanjani, R. The Role of Artificial Intelligence in Echocardiography. J. Imaging 2023, 9, 50. https://doi.org/10.3390/jimaging9020050