An Effective Hyperspectral Image Classification Network Based on Multi-Head Self-Attention and Spectral-Coordinate Attention

Abstract

1. Introduction

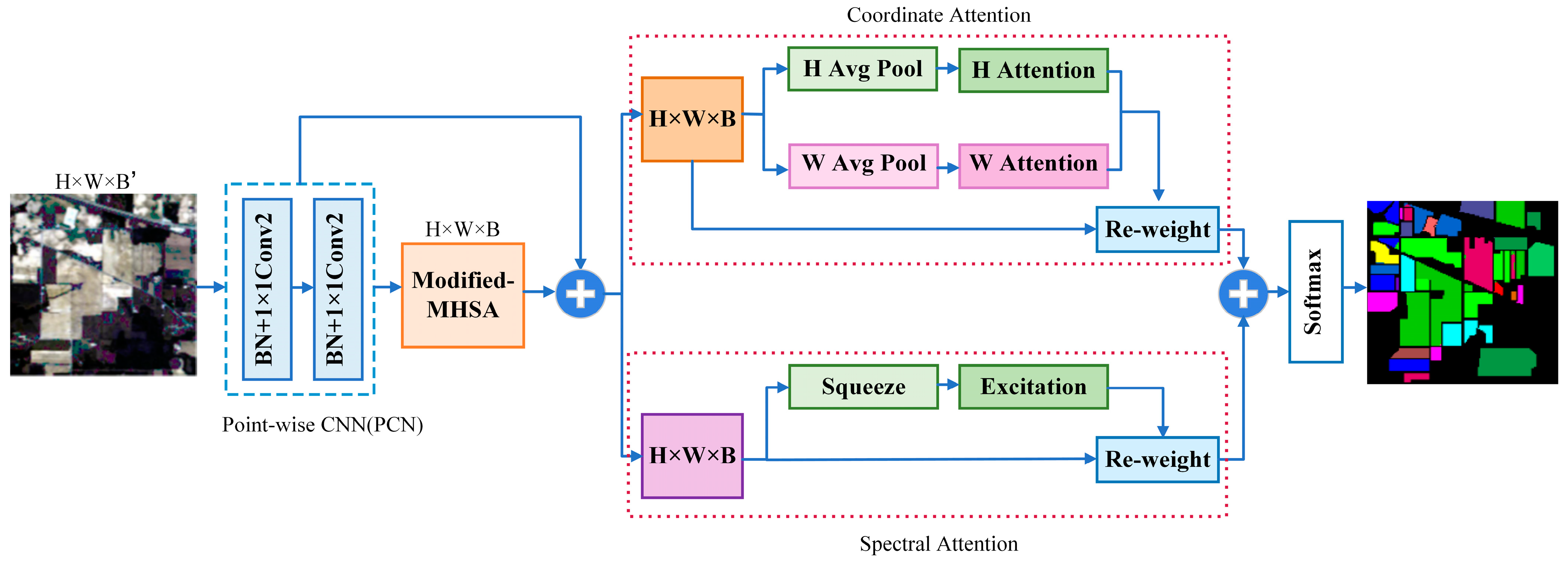

2. Proposed Methods

2.1. Point-Wise Convolution Network (PCN)

2.2. Modified Multi-Head Self-Attention (M-MHSA)

2.3. Spectral-Coordinate Attention Fusion Network (SCA)

2.3.1. Spectral Attention

2.3.2. Coordinate Attention

3. Experiments

3.1. Configuration for Parameters

3.2. HSI Datasets

- (1) Indian Pines dataset: The first dataset, Indian Pines, was acquired by the AVIRIS imaging spectrometer over northwestern Indiana, USA. The scene consists of 145 × 145 pixels with 220 spectral bands and a spatial resolution of 20 m/pixel; after the interference bands are removed, 200 bands remain. The dataset contains 16 ground-object classes and 10,249 reference samples. For training, validation, and testing, 10%, 1%, and 89% of the samples in each class were randomly selected, respectively. Figure 5 shows the false-color image and the ground-truth map, and Table 1 lists the per-class sample counts.

- (2) Pavia University dataset: The second dataset, Pavia University, was acquired over the University of Pavia, Italy, by the ROSIS (Reflective Optics System Imaging Spectrometer) sensor. The scene comprises 610 × 340 pixels with 115 spectral bands and a spatial resolution of 1.3 m/pixel; after the interference bands are removed, 103 bands remain. The dataset contains nine ground-object classes and 42,776 reference samples. For training, validation, and testing, 1%, 1%, and 98% of the samples in each class were randomly selected, respectively. Figure 6 shows the false-color image and the ground-truth map, and Table 2 lists the per-class sample counts.

- (3) Salinas dataset: The third dataset, Salinas, was acquired by the AVIRIS sensor over the Salinas Valley, California, USA. The scene comprises 512 × 217 pixels with 224 spectral bands and a spatial resolution of 3.7 m/pixel; after 20 interference bands are discarded, 204 bands remain. The dataset contains 16 land-cover classes and 54,129 labeled samples. For training, validation, and testing, 1%, 1%, and 98% of the samples in each class were randomly selected, respectively. Figure 7 shows the false-color image and the ground-truth map, and Table 3 lists the per-class sample counts.

Table 1. Number of training, validation, and test samples in each class of the Indian Pines dataset.

| No. | Class | Train. | Val. | Test. |
|---|---|---|---|---|
| 1 | Alfalfa | 5 | 1 | 48 |
| 2 | Corn-notill | 143 | 14 | 1277 |
| 3 | Corn-mintill | 83 | 8 | 743 |
| 4 | Corn | 23 | 2 | 209 |
| 5 | Grass-pasture | 49 | 4 | 444 |
| 6 | Grass-trees | 74 | 7 | 666 |
| 7 | Grass-pasture-mowed | 2 | 1 | 23 |
| 8 | Hay-windrowed | 48 | 4 | 437 |
| 9 | Oats | 2 | 1 | 17 |
| 10 | Soybean-notill | 96 | 9 | 863 |
| 11 | Soybean-mintill | 246 | 24 | 2198 |
| 12 | Soybean-clean | 61 | 6 | 547 |
| 13 | Wheat | 21 | 2 | 189 |
| 14 | Woods | 129 | 12 | 1153 |
| 15 | Buildings-Grass-Trees-Drives | 38 | 3 | 339 |
| 16 | Stone-Steel-Towers | 9 | 1 | 85 |
| | Total | 1029 | 99 | 9238 |

Table 2. Number of training, validation, and test samples in each class of the Pavia University dataset.

| No. | Class | Train. | Val. | Test. |
|---|---|---|---|---|
| 1 | Asphalt | 67 | 67 | 6497 |
| 2 | Meadows | 187 | 187 | 18,275 |
| 3 | Gravel | 21 | 21 | 2057 |
| 4 | Trees | 31 | 31 | 3002 |
| 5 | Painted metal sheets | 14 | 14 | 1317 |
| 6 | Bare Soil | 51 | 51 | 4927 |
| 7 | Bitumen | 14 | 14 | 1302 |
| 8 | Self-Blocking Bricks | 37 | 37 | 3608 |
| 9 | Shadows | 10 | 10 | 927 |
| | Total | 432 | 432 | 41,912 |

Table 3. Number of training, validation, and test samples in each class of the Salinas dataset.

| No. | Class | Train. | Val. | Test. |
|---|---|---|---|---|
| 1 | Brocoli_green_weeds_1 | 21 | 21 | 1967 |
| 2 | Brocoli_green_weeds_2 | 38 | 38 | 3650 |
| 3 | Fallow | 20 | 20 | 1936 |
| 4 | Fallow_rough_plow | 14 | 14 | 1366 |
| 5 | Fallow_smooth | 27 | 27 | 2624 |
| 6 | Stubble | 40 | 40 | 3879 |
| 7 | Celery | 36 | 36 | 3507 |
| 8 | Grapes_untrained | 113 | 113 | 11,045 |
| 9 | Soil_vinyard_develop | 63 | 63 | 6077 |
| 10 | Corn_senesced_green_weeds | 33 | 33 | 3212 |
| 11 | Lettuce_romaine_4 wk | 11 | 11 | 1046 |
| 12 | Lettuce_romaine_5 wk | 20 | 20 | 1887 |
| 13 | Lettuce_romaine_6 wk | 10 | 10 | 896 |
| 14 | Lettuce_romaine_7 wk | 11 | 11 | 1048 |
| 15 | Vinyard_untrained | 73 | 73 | 7122 |
| 16 | Vinyard_vertical_trellis | 19 | 19 | 1769 |
| | Total | 549 | 549 | 53,031 |
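The per-class random split described in Section 3.2 can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names `split_counts` and `stratified_split` are hypothetical, labels are assumed to be a flat sequence of class ids with 0 marking unlabeled pixels, and rounding the training and validation counts up is an assumed rule. Under that rule, the Asphalt row of Table 2 (67 + 67 + 6497 = 6631 samples) is reproduced exactly at 1%/1%.

```python
import random
from collections import defaultdict


def split_counts(n, train_pct, val_pct):
    """Return (train, val, test) sizes for a class with n labeled samples.

    Percentages are integers (e.g. 10 for 10%). Training and validation
    counts are rounded up (an assumed rule); integer arithmetic avoids
    floating-point rounding surprises.
    """
    n_train = min(n, (n * train_pct + 99) // 100)        # ceil(n * train_pct / 100)
    n_val = min(n - n_train, (n * val_pct + 99) // 100)  # ceil, capped so test >= 0
    return n_train, n_val, n - n_train - n_val


def stratified_split(labels, train_pct, val_pct, seed=0):
    """Randomly split labeled pixel indices class by class.

    labels: flat sequence of class ids, where 0 marks unlabeled pixels.
    Returns three lists of indices into `labels`: train, val, test.
    """
    by_class = defaultdict(list)
    for idx, c in enumerate(labels):
        if c != 0:  # background pixels carry no reference label
            by_class[c].append(idx)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for c in sorted(by_class):
        idxs = by_class[c]
        rng.shuffle(idxs)
        n_train, n_val, _ = split_counts(len(idxs), train_pct, val_pct)
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]
    return train, val, test
```

For the Indian Pines setting a call would look like `stratified_split(labels, 10, 1)`, and for Pavia University and Salinas `stratified_split(labels, 1, 1)`; the test set receives whatever remains in each class, so the ratios hold per class rather than only globally.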

3.3. Comparison of Classification Results

3.4. Ablation Study

3.5. Training Sample Ratio

3.6. Running Time

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Class | SVM | FDSSC | SSRN | HybridSN | CGCNN | DBMA | DBDA | MSSCA |
|---|---|---|---|---|---|---|---|---|
| 1 | 18.88 ± 7.76 | 45.20 ± 30.35 | 75.95 ± 29.99 | 97.16 ± 2.27 | 97.04 ± 2.46 | 39.23 ± 19.48 | 73.37 ± 25.28 | 97.54 ± 1.58 |
| 2 | 46.05 ± 6.40 | 78.02 ± 12.10 | 85.75 ± 4.76 | 97.15 ± 0.56 | 98.62 ± 0.59 | 70.87 ± 10.59 | 79.10 ± 8.95 | 99.01 ± 0.67 |
| 3 | 45.88 ± 15.31 | 75.69 ± 14.23 | 83.49 ± 12.87 | 98.25 ± 0.65 | 98.61 ± 1.13 | 67.69 ± 14.74 | 79.24 ± 12.45 | 99.35 ± 0.62 |
| 4 | 30.05 ± 7.38 | 74.05 ± 30.61 | 77.95 ± 27.61 | 97.90 ± 2.75 | 97.91 ± 1.32 | 64.24 ± 20.66 | 82.49 ± 16.99 | 98.86 ± 1.06 |
| 5 | 71.42 ± 21.58 | 96.71 ± 3.60 | 96.07 ± 7.65 | 98.49 ± 0.96 | 98.64 ± 1.31 | 89.66 ± 6.55 | 96.89 ± 3.97 | 99.44 ± 0.78 |
| 6 | 74.53 ± 4.01 | 90.74 ± 12.06 | 94.83 ± 4.15 | 98.92 ± 0.38 | 99.75 ± 0.16 | 85.52 ± 4.97 | 95.36 ± 5.63 | 99.75 ± 0.18 |
| 7 | 25.70 ± 15.00 | 36.11 ± 28.85 | 70.04 ± 29.66 | 100.00 ± 0.00 | 99.20 ± 0.16 | 32.95 ± 26.11 | 30.56 ± 14.81 | 100.00 ± 0.00 |
| 8 | 87.20 ± 3.06 | 97.55 ± 5.29 | 97.82 ± 3.43 | 99.67 ± 0.31 | 99.63 ± 0.48 | 99.03 ± 2.20 | 100.00 ± 0.00 | 99.95 ± 0.10 |
| 9 | 18.28 ± 9.91 | 43.79 ± 29.36 | 79.96 ± 28.87 | 92.38 ± 5.25 | 100.00 ± 0.00 | 12.05 ± 5.46 | 45.58 ± 17.69 | 86.67 ± 15.15 |
| 10 | 50.16 ± 8.78 | 80.41 ± 12.34 | 87.96 ± 7.00 | 98.74 ± 0.81 | 97.29 ± 1.48 | 70.97 ± 13.57 | 84.06 ± 8.54 | 97.89 ± 1.26 |
| 11 | 52.03 ± 4.62 | 80.90 ± 8.31 | 86.75 ± 6.39 | 99.16 ± 0.26 | 99.22 ± 0.46 | 73.04 ± 5.70 | 83.82 ± 9.71 | 99.49 ± 0.49 |
| 12 | 34.82 ± 10.77 | 74.71 ± 27.55 | 85.83 ± 5.92 | 97.47 ± 1.17 | 97.95 ± 0.91 | 63.04 ± 15.42 | 81.08 ± 12.23 | 98.94 ± 0.93 |
| 13 | 76.72 ± 5.64 | 92.72 ± 12.56 | 99.50 ± 1.49 | 98.02 ± 1.64 | 99.45 ± 0.01 | 92.24 ± 7.71 | 93.20 ± 8.57 | 99.78 ± 0.44 |
| 14 | 79.21 ± 5.25 | 91.99 ± 4.60 | 93.93 ± 3.81 | 99.32 ± 0.44 | 99.80 ± 0.13 | 93.12 ± 4.56 | 93.90 ± 4.10 | 99.96 ± 0.04 |
| 15 | 48.80 ± 20.15 | 69.33 ± 35.62 | 93.06 ± 4.79 | 97.64 ± 1.58 | 98.01 ± 0.02 | 67.51 ± 12.90 | 89.00 ± 13.52 | 98.64 ± 1.87 |
| 16 | 98.50 ± 2.57 | 80.07 ± 7.38 | 95.68 ± 3.51 | 91.02 ± 4.07 | 99.04 ± 0.12 | 81.27 ± 12.08 | 83.83 ± 6.76 | 97.10 ± 2.36 |
| OA (%) | 55.98 ± 2.75 | 81.89 ± 6.27 | 88.63 ± 3.98 | 98.44 ± 0.16 | 98.85 ± 0.18 | 73.39 ± 2.88 | 84.13 ± 1.19 | 99.23 ± 0.19 |
| AA (%) | 53.64 ± 3.45 | 76.00 ± 11.45 | 87.79 ± 8.56 | 97.58 ± 0.82 | 98.76 ± 0.18 | 68.91 ± 4.26 | 80.72 ± 4.33 | 98.27 ± 1.03 |
| Kappa (×100) | 48.72 ± 3.32 | 79.19 ± 7.37 | 86.97 ± 4.63 | 98.23 ± 0.18 | 98.69 ± 0.21 | 69.53 ± 3.27 | 81.85 ± 1.39 | 99.12 ± 0.21 |

| Class | SVM | FDSSC | SSRN | HybridSN | CGCNN | DBMA | DBDA | MSSCA |
|---|---|---|---|---|---|---|---|---|
| 1 | 85.83 ± 7.24 | 91.37 ± 5.38 | 91.80 ± 7.91 | 95.13 ± 1.81 | 98.49 ± 0.81 | 91.41 ± 2.88 | 93.76 ± 2.83 | 99.10 ± 0.60 |
| 2 | 73.97 ± 4.12 | 94.37 ± 4.01 | 86.40 ± 4.03 | 99.16 ± 0.49 | 98.92 ± 0.40 | 89.02 ± 5.77 | 96.20 ± 2.11 | 99.96 ± 0.03 |
| 3 | 31.14 ± 8.49 | 59.20 ± 18.76 | 59.59 ± 20.11 | 88.73 ± 4.90 | 87.73 ± 5.44 | 65.63 ± 23.57 | 81.67 ± 9.26 | 95.76 ± 2.94 |
| 4 | 70.16 ± 25.62 | 97.69 ± 1.72 | 98.38 ± 3.37 | 98.18 ± 0.77 | 97.11 ± 1.26 | 94.38 ± 4.47 | 98.22 ± 1.39 | 97.23 ± 1.62 |
| 5 | 97.27 ± 2.47 | 99.40 ± 0.75 | 98.76 ± 1.58 | 98.98 ± 0.93 | 100.00 ± 0.00 | 99.44 ± 0.94 | 98.17 ± 2.50 | 99.95 ± 0.09 |
| 6 | 45.73 ± 22.56 | 86.06 ± 9.10 | 77.77 ± 8.00 | 98.66 ± 0.96 | 99.55 ± 0.69 | 74.11 ± 11.22 | 90.50 ± 8.77 | 99.95 ± 0.07 |
| 7 | 43.20 ± 7.05 | 90.68 ± 7.72 | 65.61 ± 25.35 | 96.64 ± 2.37 | 99.11 ± 0.64 | 66.72 ± 14.16 | 85.39 ± 15.01 | 99.85 ± 0.27 |
| 8 | 64.45 ± 9.43 | 68.03 ± 20.33 | 74.50 ± 14.25 | 90.69 ± 2.72 | 97.77 ± 1.93 | 66.76 ± 16.60 | 79.61 ± 9.58 | 98.13 ± 1.98 |
| 9 | 99.90 ± 0.11 | 96.91 ± 1.91 | 98.28 ± 1.61 | 97.21 ± 1.86 | 99.98 ± 0.04 | 90.27 ± 11.33 | 94.35 ± 3.64 | 99.74 ± 0.16 |
| OA (%) | 69.86 ± 2.21 | 88.46 ± 4.24 | 82.10 ± 3.01 | 97.01 ± 0.69 | 98.21 ± 0.13 | 83.45 ± 3.58 | 92.10 ± 1.12 | 99.26 ± 0.18 |
| AA (%) | 67.96 ± 5.27 | 87.08 ± 4.55 | 83.45 ± 2.55 | 95.93 ± 0.87 | 97.63 ± 0.26 | 81.97 ± 4.60 | 90.88 ± 1.41 | 98.85 ± 0.30 |
| Kappa (×100) | 58.26 ± 3.78 | 84.61 ± 5.83 | 75.88 ± 4.07 | 96.02 ± 0.92 | 97.63 ± 0.18 | 77.85 ± 4.93 | 89.52 ± 1.45 | 99.02 ± 0.24 |

| Class | SVM | FDSSC | SSRN | HybridSN | CGCNN | DBMA | DBDA | MSSCA |
|---|---|---|---|---|---|---|---|---|
| 1 | 92.70 ± 7.26 | 96.81 ± 9.56 | 96.51 ± 6.29 | 99.79 ± 0.24 | 99.97 ± 0.04 | 97.16 ± 8.39 | 95.67 ± 8.61 | 99.98 ± 0.02 |
| 2 | 98.61 ± 1.01 | 99.89 ± 0.29 | 92.61 ± 12.10 | 99.97 ± 0.02 | 99.12 ± 0.82 | 98.48 ± 2.16 | 99.99 ± 0.02 | 100.00 ± 0.00 |
| 3 | 75.17 ± 7.04 | 93.61 ± 4.44 | 92.84 ± 7.88 | 99.96 ± 0.04 | 66.86 ± 3.93 | 95.16 ± 2.74 | 97.65 ± 1.25 | 100.00 ± 0.00 |
| 4 | 96.79 ± 0.99 | 95.65 ± 3.38 | 95.55 ± 3.39 | 98.35 ± 1.05 | 99.79 ± 0.18 | 85.27 ± 4.98 | 90.16 ± 3.58 | 99.88 ± 0.12 |
| 5 | 91.04 ± 5.51 | 95.99 ± 6.43 | 89.26 ± 8.50 | 99.93 ± 0.07 | 95.67 ± 4.80 | 94.43 ± 6.80 | 92.90 ± 6.88 | 98.28 ± 1.81 |
| 6 | 99.87 ± 0.28 | 99.99 ± 1.62 | 99.91 ± 0.15 | 99.93 ± 0.10 | 99.76 ± 0.30 | 99.23 ± 1.14 | 99.88 ± 0.23 | 99.96 ± 0.07 |
| 7 | 94.30 ± 2.30 | 99.27 ± 0.84 | 98.84 ± 2.19 | 100.00 ± 0.00 | 99.91 ± 0.05 | 95.84 ± 5.05 | 99.71 ± 0.21 | 99.98 ± 0.03 |
| 8 | 65.65 ± 3.55 | 84.04 ± 6.33 | 76.61 ± 8.99 | 98.81 ± 0.88 | 91.43 ± 3.59 | 81.52 ± 8.59 | 81.60 ± 9.69 | 99.12 ± 0.81 |
| 9 | 95.03 ± 6.16 | 98.88 ± 0.76 | 98.73 ± 1.33 | 99.96 ± 0.02 | 99.48 ± 0.31 | 98.53 ± 1.52 | 97.80 ± 1.98 | 100.00 ± 0.00 |
| 10 | 80.87 ± 11.45 | 95.96 ± 2.68 | 94.79 ± 3.45 | 98.96 ± 1.06 | 93.76 ± 2.90 | 92.15 ± 5.03 | 94.29 ± 2.99 | 97.03 ± 2.46 |
| 11 | 58.82 ± 27.59 | 100.00 ± 0.00 | 93.23 ± 4.40 | 99.21 ± 1.05 | 97.50 ± 2.17 | 80.78 ± 17.94 | 93.45 ± 4.67 | 99.87 ± 0.18 |
| 12 | 86.41 ± 10.28 | 99.00 ± 1.35 | 94.51 ± 7.94 | 99.81 ± 0.33 | 99.82 ± 0.31 | 97.75 ± 2.10 | 98.56 ± 1.31 | 100.00 ± 0.00 |
| 13 | 81.66 ± 11.84 | 98.24 ± 2.60 | 92.66 ± 7.85 | 98.77 ± 2.28 | 98.28 ± 1.79 | 86.76 ± 16.37 | 99.53 ± 0.24 | 99.93 ± 0.09 |
| 14 | 80.08 ± 14.32 | 94.24 ± 4.79 | 97.01 ± 1.50 | 99.60 ± 0.45 | 98.10 ± 2.05 | 89.69 ± 7.44 | 95.76 ± 1.86 | 98.70 ± 0.83 |
| 15 | 48.14 ± 24.59 | 77.43 ± 9.62 | 69.53 ± 10.99 | 97.88 ± 2.67 | 75.90 ± 12.45 | 75.30 ± 10.42 | 80.91 ± 5.96 | 99.33 ± 0.41 |
| 16 | 88.65 ± 15.52 | 99.66 ± 0.69 | 99.02 ± 1.39 | 100.00 ± 0.00 | 96.12 ± 0.64 | 96.39 ± 5.84 | 99.11 ± 1.73 | 99.55 ± 0.55 |
| OA (%) | 80.50 ± 2.68 | 91.23 ± 1.94 | 86.85 ± 1.98 | 99.27 ± 0.29 | 92.78 ± 1.20 | 88.29 ± 2.03 | 91.41 ± 2.86 | 99.41 ± 0.32 |
| AA (%) | 83.36 ± 5.23 | 94.77 ± 1.43 | 92.60 ± 1.20 | 99.43 ± 0.19 | 94.47 ± 0.56 | 91.53 ± 2.09 | 94.81 ± 0.91 | 99.48 ± 0.25 |
| Kappa (×100) | 78.21 ± 3.06 | 90.23 ± 2.18 | 85.33 ± 2.20 | 99.19 ± 0.32 | 91.94 ± 1.36 | 86.96 ± 2.29 | 90.41 ± 3.22 | 99.34 ± 0.35 |
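The comparison tables report overall accuracy (OA), average accuracy (AA), and the kappa coefficient. As a sketch of how these three metrics are conventionally computed from a confusion matrix (standard definitions, not code taken from this paper), the Python function below assumes integer class labels 0..num_classes-1; the name `classification_metrics` and its signature are hypothetical. It returns fractions, so multiplying by 100 gives the scale used in the tables.

```python
def classification_metrics(y_true, y_pred, num_classes):
    """Return (OA, AA, kappa) as fractions in [0, 1].

    y_true, y_pred: equal-length sequences of integer labels in 0..num_classes-1.
    """
    # Confusion matrix: rows are reference classes, columns are predictions.
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1

    n = sum(sum(row) for row in cm)
    row_tot = [sum(cm[i]) for i in range(num_classes)]
    col_tot = [sum(cm[i][j] for i in range(num_classes)) for j in range(num_classes)]

    # OA: fraction of all test samples classified correctly.
    oa = sum(cm[i][i] for i in range(num_classes)) / n

    # AA: unweighted mean of per-class accuracies (recall), over classes present.
    per_class = [cm[i][i] / row_tot[i] for i in range(num_classes) if row_tot[i] > 0]
    aa = sum(per_class) / len(per_class)

    # Cohen's kappa: agreement beyond the chance level p_e implied by the marginals.
    p_e = sum(r * c for r, c in zip(row_tot, col_tot)) / (n * n)
    kappa = (oa - p_e) / (1 - p_e) if p_e < 1 else 1.0
    return oa, aa, kappa
```

For example, `classification_metrics([0, 0, 1, 1], [0, 1, 1, 1], 2)` gives OA = 0.75, AA = 0.75, and kappa = 0.5. Because AA weights every class equally, small classes such as class 9 in the Indian Pines table pull AA below OA even when OA is high, which is visible in the MSSCA column (OA 99.23 vs. AA 98.27).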

| Dataset | CA | SE | CA + SE | PCN + SE + CA |
|---|---|---|---|---|
| Indian Pines | 98.19 ± 0.17 | 98.18 ± 0.84 | 99.15 ± 0.13 | 99.23 ± 0.19 |
| Pavia University | 98.09 ± 0.40 | 98.17 ± 0.24 | 98.52 ± 0.18 | 99.26 ± 0.18 |
| Salinas | 97.79 ± 0.26 | 98.20 ± 0.73 | 98.60 ± 0.47 | 99.41 ± 0.32 |

| Dataset | Methods | Train Time | Test Time |
|---|---|---|---|
| Indian Pines | SVM | 44.50 | 15.43 |
| | FDSSC | 129.39 | 205.27 |
| | SSRN | 77.23 | 204.72 |
| | HybridSN | 239.82 | 6.89 |
| | CGCNN | 108.23 | 1.72 |
| | DBMA | 107.87 | 59.60 |
| | DBDA | 100.107 | 28.35 |
| | MSSCA | 52.93 | 0.52 |

| Dataset | Methods | Train Time | Test Time |
|---|---|---|---|
| Pavia University | SVM | 16.42 | 53.05 |
| | FDSSC | 81.14 | 171.25 |
| | SSRN | 132.98 | 10.11 |
| | HybridSN | 97.12 | 52.60 |
| | CGCNN | 679.44 | 6.69 |
| | DBMA | 146.28 | 201.41 |
| | DBDA | 58.81 | 115.80 |
| | MSSCA | 99.65 | 8.93 |

| Dataset | Methods | Train Time | Test Time |
|---|---|---|---|
| Salinas | SVM | 9.85 | 3.82 |
| | FDSSC | 129.39 | 205.27 |
| | SSRN | 77.23 | 204.72 |
| | HybridSN | 375.15 | 46.48 |
| | CGCNN | 340.81 | 4.69 |
| | DBMA | 84.12 | 323.03 |
| | DBDA | 62.29 | 161.02 |
| | MSSCA | 69.49 | 3.41 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, M.; Duan, Y.; Song, W.; Mei, H.; He, Q. An Effective Hyperspectral Image Classification Network Based on Multi-Head Self-Attention and Spectral-Coordinate Attention. J. Imaging 2023, 9, 141. https://doi.org/10.3390/jimaging9070141


Chicago/Turabian Style: Zhang, Minghua, Yuxia Duan, Wei Song, Haibin Mei, and Qi He. 2023. "An Effective Hyperspectral Image Classification Network Based on Multi-Head Self-Attention and Spectral-Coordinate Attention." Journal of Imaging 9, no. 7: 141. https://doi.org/10.3390/jimaging9070141
