Levels of Automation for a Computer-Based Procedure for Simulated Nuclear Power Plant Operation: Impacts on Workload and Trust

Abstract

1. Introduction

1.1. Computer-Based Procedures and Automation

1.2. Workload Issues for Automated Systems in NPP Operations

1.3. Measurement of Trust in Automation

1.4. The Present Study: Aims and Hypotheses

2. Method

2.1. Participants

2.2. Experimental Design

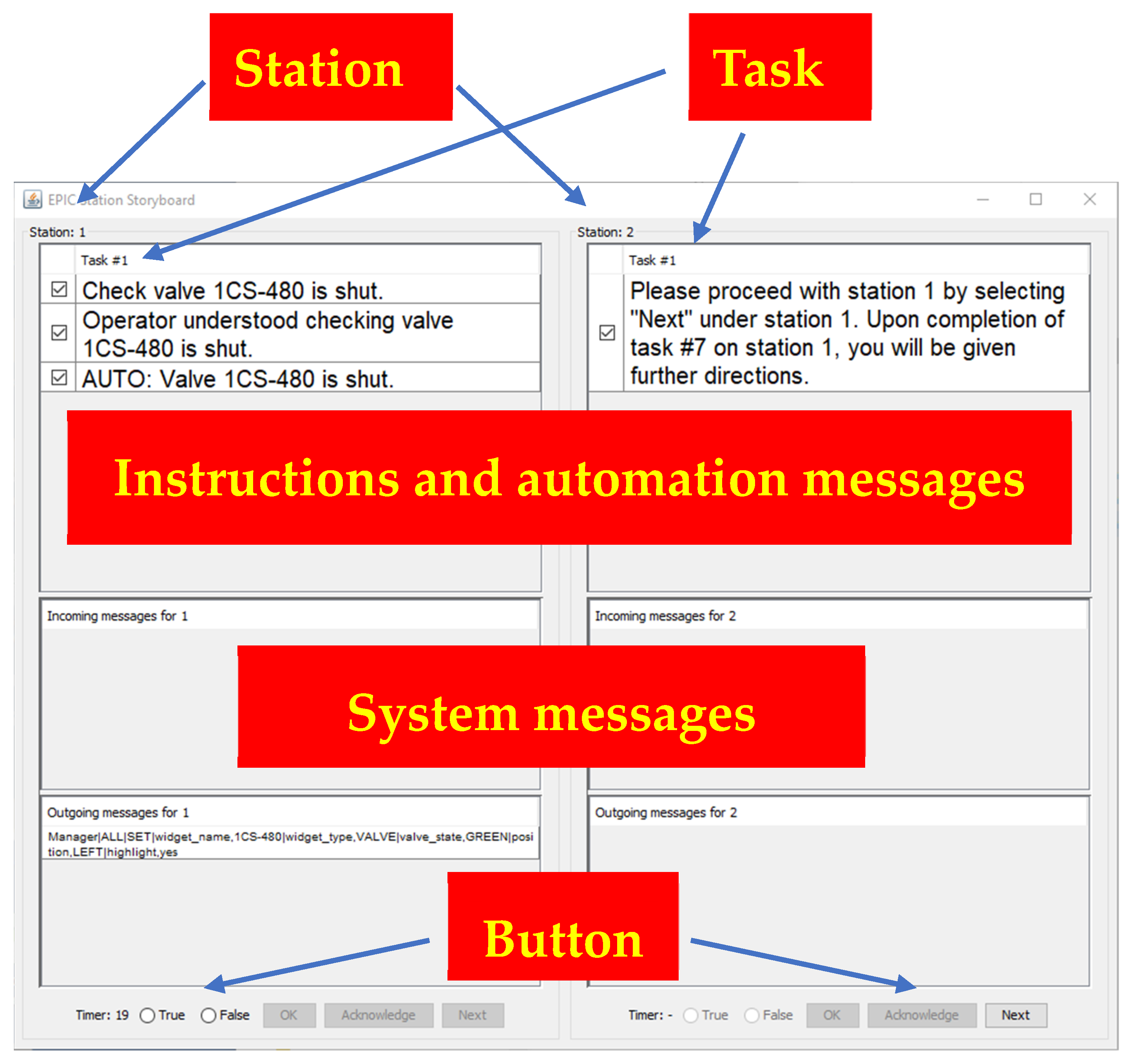

2.3. Apparatus

2.3.1. NPP Simulator

2.3.2. Scenario for the EOP

2.3.3. Secondary Task

2.4. Subjective Measures

2.4.1. Perfect Automation Schema (PAS)

2.4.2. Human Interaction and Trust (HIT)

2.4.3. NASA Task Load Index (NASA-TLX)

2.4.4. Multiple Resource Questionnaire (MRQ)

2.4.5. Instantaneous Self-Assessment (ISA)

2.4.6. Checklist of Trust Between People and Automation (CTPA)

2.5. Physiological Measures

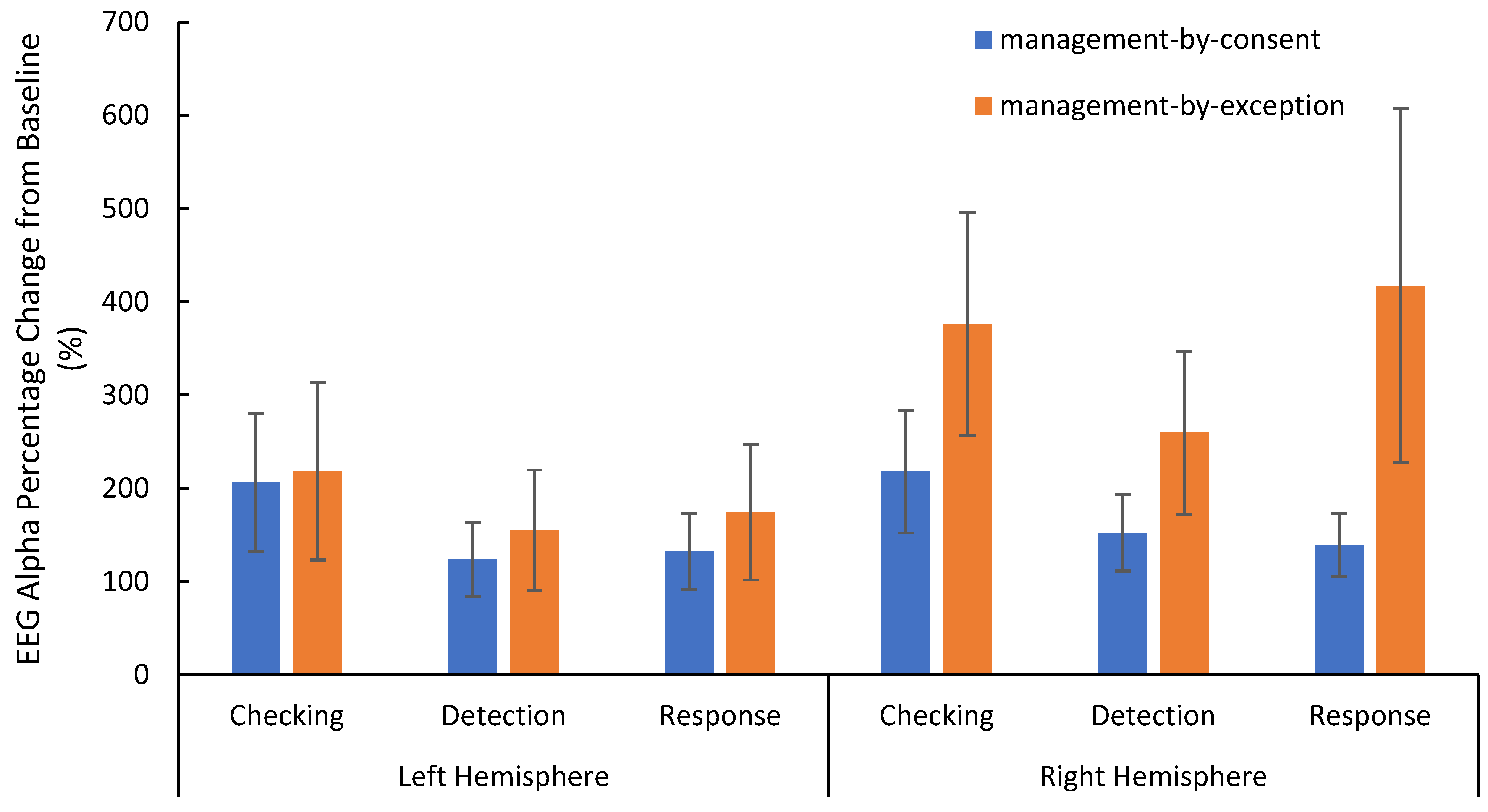

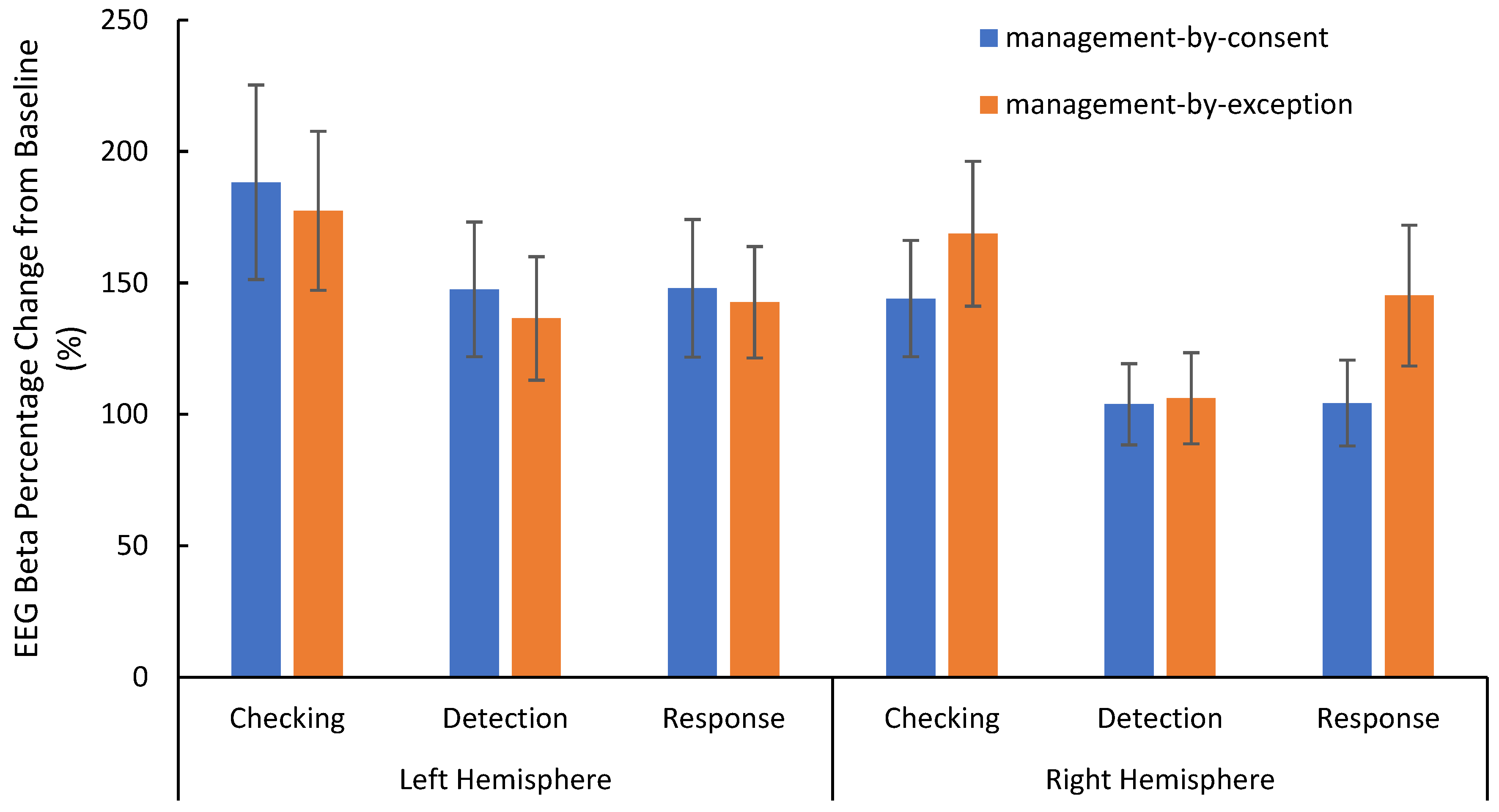

2.5.1. Electroencephalogram (EEG)

2.5.2. Electrocardiogram (ECG)

2.5.3. Transcranial Doppler (TCD)

2.5.4. Functional Near-Infrared Spectroscopy (fNIRS)

2.6. Procedure

2.7. Statistical Methods

3. Results

3.1. Subjective Measures

3.1.1. NASA-TLX

3.1.2. MRQ

3.1.3. ISA

3.1.4. CTPA

3.2. Physiological Metrics

3.2.1. EEG

3.2.2. TCD

3.2.3. fNIRS

3.2.4. ECG

3.3. Secondary Task Accuracy and RT

3.4. Individual Differences in Trust

3.5. Cross-Study Comparison

4. Discussion

4.1. Task Factors and Workload Response During CBP Execution

4.2. The Role of Trust

4.3. Comparison with Conventional NPP Operation

4.4. Limitations

4.5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Items of the CTPA

- The system is deceptive

- The system behaves in an underhanded manner

- I am suspicious of the system’s intent, action, or outputs

- I am wary of the system

- The system’s actions will have a harmful or injurious outcome

- I am confident in the system

- The system provides security

- The system has integrity

- The system is dependable

- The system is reliable

- I can trust the system

- I am familiar with the system

References

1. Hall, A.; Joe, J.C. Utility and industry perceptions of control room modernization over the last 10 years. Nucl. Technol. 2024, 210, 2290–2298.

2. Joe, J.C.; Kovesdi, C.R. Developing a Strategy for Full Nuclear Plant Modernization; (No. INL/EXT-18-51366-Rev000); Idaho National Lab. (INL): Idaho Falls, ID, USA, 2018.

3. Boring, R.L.; Ulrich, T.A.; Lew, R. Levels of digitization, digitalization, and automation for advanced reactors. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2023, 67, 207–213.

4. Kim, Y.; Park, J. Envisioning human-automation interactions for responding emergency situations of NPPs: A viewpoint from human computer interaction. In Proceedings of the Transactions of the Korean Nuclear Society Autumn Meeting, Yeosu, Republic of Korea, 25–26 October 2018.

5. Lee, J.D.; See, K.A. Trust in automation: Designing for appropriate reliance. Hum. Factors 2004, 46, 50–80.

6. Parasuraman, R.; Manzey, D.H. Complacency and bias in human use of automation: An attentional integration. Hum. Factors 2010, 52, 381–410.

7. Porthin, M.; Liinasuo, M.; Kling, T. Effects of digitalization of nuclear power plant control rooms on human reliability analysis—A review. Reliab. Eng. Syst. Saf. 2020, 194, 106415.

8. O’Hara, J.M.; Higgins, J.C.; Stubler, W.F.; Kramer, J. Computer-Based Procedure Systems: Technical Basis and Human Factors Review Guidance; Division of Systems Analysis and Regulatory Effectiveness, Office of Nuclear Regulatory Research, United States Nuclear Regulatory Commission: Washington, DC, USA, 2000.

9. Gao, Q.; Yu, W.; Jiang, X.; Song, F.; Pan, J.; Li, Z. An integrated computer-based procedure for teamwork in digital nuclear power plants. Ergonomics 2015, 58, 1303–1313.

10. Le Blanc, K.L.; Oxstrand, J.H. Computer-Based Procedures for nuclear power plant field workers: Preliminary results from two evaluation studies. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2013, 57, 1722–1726.

11. Oxstrand, J.; Le Blanc, K.L.; Bly, A. The Next Step in Deployment of Computer Based Procedures for Field Workers: Insights and Results from Field Evaluations at Nuclear Power Plants; (No. INL/CON-14-32990); Idaho National Lab (INL): Idaho Falls, ID, USA, 2015.

12. Hall, A.; Boring, R.L.; Ulrich, T.A.; Lew, R.; Velazquez, M.; Xing, J.; Whiting, T.; Makrakis, G.M. A comparison of three types of computer-based procedures: An experiment using the Rancor Microworld Simulator. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2023, 67, 2552–2557.

13. Balfe, N.; Sharples, S.; Wilson, J.R. Impact of automation: Measurement of performance, workload and behaviour in a complex control environment. Appl. Ergon. 2015, 47, 52–64.

14. Endsley, M.R. Automation and situation awareness. In Automation and Human Performance: Theory and Applications; Parasuraman, R., Mouloua, M., Eds.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1996; pp. 163–181.

15. Lin, C.J.; Yenn, T.C.; Jou, Y.T.; Hsieh, T.L.; Yang, C.W. Analyzing the staffing and workload in the main control room of the advanced nuclear power plant from the human information processing perspective. Saf. Sci. 2013, 57, 161–168.

16. Parasuraman, R.; Riley, V. Humans and automation: Use, misuse, disuse, abuse. Hum. Factors 1997, 39, 230–253.

17. Kaber, D.B. Issues in human–automation interaction modeling: Presumptive aspects of frameworks of types and levels of automation. J. Cogn. Eng. Decis. Mak. 2018, 12, 7–24.

18. Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2000, 30, 286–297.

19. Kaber, D.B.; Onal, E.; Endsley, M.R. Design of automation for telerobots and the effect on performance, operator situation awareness, and subjective workload. Hum. Factors Ergon. Manuf. Serv. Ind. 2000, 10, 409–430.

20. O’Hara, J.M.; Fleger, S.A. Human-System Interface Design Review Guidelines; (NUREG-0700, Rev.3); United States Nuclear Regulatory Commission: Washington, DC, USA, 2020.

21. Joe, J.C.; Boring, R.L.; Persensky, J.J. Commercial utility perspectives on nuclear power plant control room modernization. In Proceedings of the 8th International Topical Meeting on Nuclear Power Plant Instrumentation, Control, and Human-Machine Interface Technologies (NPIC&HMIT), San Diego, CA, USA, 22–26 July 2012; pp. 2039–2046.

22. Anokhin, A.; Lourie, V.; Dzhumaev, S.; Golovanev, V.; Kompanietz, N. Upgrade of the Kursk NPP main control room (case study). Int. Control Room Des. Conf. Proc. 2010, 2010, 207–214.

23. Reinerman-Jones, L.; Matthews, G.; Mercado, J.E. Detection tasks in nuclear power plant operation: Vigilance decrement and physiological workload monitoring. Saf. Sci. 2016, 88, 97–107.

24. Wu, X.; Li, Z. Secondary task method for workload measurement in alarm monitoring and identification tasks. In Cross-Cultural Design. Methods, Practice, and Case Studies: 5th International Conference, CCD 2013; Springer Berlin Heidelberg: Berlin, Germany, 2013; pp. 346–354.

25. Matthews, G.; Reinerman-Jones, L. Workload Assessment: How to Diagnose Workload Issues and Enhance Performance; Human Factors and Ergonomics Society: Santa Monica, CA, USA, 2017.

26. O’Hara, J.M.; Higgins, J.C.; Fleger, S.A.; Pieringer, P.A. Human Factors Engineering Program Review Model; (NUREG-0711, Rev.3); United States Nuclear Regulatory Commission: Washington, DC, USA, 2012.

27. Swain, A.D.; Guttmann, H.E. Handbook of Human-Reliability Analysis with Emphasis on Nuclear Power Plant Applications; Final report (No. NUREG/CR--1278); Sandia National Labs: Albuquerque, NM, USA, 1983.

28. Lin, J.; Matthews, G.; Hughes, N.; Dickerson, K. Novices as models of expert operators: Evidence from the NRC Human Performance Test Facility. Hum. Factors Simul. 2022, 30, 1–10.

29. Hughes, N.; D’Agostino, A.; Dickerson, K.; Matthews, M.; Reinerman-Jones, L.; Barber, D.; Mercado, J.; Harris, J.; Lin, J. Human Performance Test Facility (HPTF). Volume 1—Systematic Human Performance Data Collection Using Nuclear Power Plant Simulator: A Methodology; Research Information Letter RIL 2022-11; United States Nuclear Regulatory Commission: Washington, DC, USA, 2023.

30. Hughes, N.; Lin, J.; Matthews, G.; Barber, D.; Dickerson, K. Human Performance Test Facility (HPTF). Volume 3—Supplemental Exploratory Analyses of Sensitivity of Workload Measures; Research Information Letter RIL 2022-11; United States Nuclear Regulatory Commission: Washington, DC, USA, 2023.

31. Hughes, N.; Reinerman-Jones, L.; Lin, J.; Matthews, G.; Barber, D.; Dickerson, K. Human Performance Test Facility (HPTF). Volume 2—Comparing Operator Workload and Performance Between Digitized and Analog Simulated Environments; Research Information Letter RIL 2022-11; United States Nuclear Regulatory Commission: Washington, DC, USA, 2023.

32. Warm, J.S.; Parasuraman, R.; Matthews, G. Vigilance requires hard mental work and is stressful. Hum. Factors 2008, 50, 433–441.

33. Tran, T.Q.; Boring, R.L.; Joe, J.C.; Griffith, C.D. Extracting and converting quantitative data into human error probabilities. In Proceedings of the 2007 IEEE 8th Human Factors and Power Plants and HPRCT 13th Annual Meeting, Monterey, CA, USA, 26–31 August 2007; pp. 164–169.

34. Hwang, S.L.; Yau, Y.J.; Lin, Y.T.; Chen, J.H.; Huang, T.H.; Yenn, T.C.; Hsu, C.C. Predicting work performance in nuclear power plants. Saf. Sci. 2008, 46, 1115–1124.

35. Lin, C.J.; Yenn, T.C.; Yang, C.W. Automation design in advanced control rooms of the modernized nuclear power plants. Saf. Sci. 2010, 48, 63–71.

36. Skjerve, A.B.M.; Skraaning, G., Jr. The quality of human-automation cooperation in human-system interface for nuclear power plants. Int. J. Hum. Comput. Stud. 2004, 61, 649–677.

37. Huang, F.H.; Hwang, S.L.; Yenn, T.C.; Yu, Y.C.; Hsu, C.C.; Huang, H.W. Evaluation and comparison of alarm reset modes in advanced control room of nuclear power plants. Saf. Sci. 2006, 44, 935–946.

38. Huang, F.H.; Lee, Y.L.; Hwang, S.L.; Yenn, T.C.; Yu, Y.C.; Hsu, C.C.; Huang, H.W. Experimental evaluation of human–system interaction on alarm design. Nucl. Eng. Des. 2007, 237, 308–315.

39. Jou, Y.T.; Yenn, T.C.; Lin, C.J.; Yang, C.W.; Chiang, C.C. Evaluation of operators’ mental workload of human–system interface automation in the advanced nuclear power plants. Nucl. Eng. Des. 2009, 239, 2537–2542.

40. Qing, T.; Liu, Z.; Tang, Y.; Hu, H.; Zhang, L.; Chen, S. Effects of automation for emergency operating procedures on human performance in a nuclear power plant. Health Phys. 2021, 121, 261.

41. Janssen, C.P.; Donker, S.F.; Brumby, D.P.; Kun, A.L. History and future of human-automation interaction. Int. J. Hum. Comput. Stud. 2019, 131, 99–107.

42. Bye, A. Future needs of human reliability analysis: The interaction between new technology, crew roles and performance. Saf. Sci. 2023, 158, 105962.

43. Tasset, D.; Charron, S.; Miberg, A.B.; Hollnagel, E. The Impact of Automation on Operator Performance. An Explorative Study; (No. HRP--352/V. 1); Institutt for Energiteknikk, OECD Halden Reactor Project: Halden, Norway, 1999.

44. Kohn, S.C.; de Visser, E.J.; Wiese, E.; Lee, Y.C.; Shaw, T.H. Measurement of trust in automation: A narrative review and reference guide. Front. Psychol. 2021, 12, 604977.

45. Matthews, G.; Panganiban, A.R.; Lin, J.; Long, M.; Schwing, M. Super-machines or sub-humans: What are the unique features of trust in Intelligent Autonomous Systems? In Trust in Human-Robot Interaction: Research and Applications; Nam, C.S., Lyons, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2021; pp. 59–82.

46. Lyons, J.B.; Guznov, S.Y. Individual differences in human–machine trust: A multi-study look at the perfect automation schema. Theor. Issues Ergon. Sci. 2019, 20, 440–458.

47. Merritt, S.M.; Unnerstall, J.L.; Lee, D.; Huber, K. Measuring individual differences in the perfect automation schema. Hum. Factors 2015, 57, 740–753.

48. Jian, J.Y.; Bisantz, A.M.; Drury, C.G. Foundations for an empirically determined scale of trust in automated systems. Int. J. Cogn. Ergon. 2000, 4, 53–71.

49. Sauer, J.; Chavaillaz, A.; Wastell, D. Experience of automation failures in training: Effects on trust, automation bias, complacency and performance. Ergonomics 2016, 59, 767–780.

50. GSE Systems, Inc. GSE’s Generic Nuclear Plant Simulator; GSE Systems, Inc.: Columbia, MD, USA, 2016; Available online: https://www.gses.com/wp-content/uploads/GSE-GPWR-brochure.pdf (accessed on 24 December 2024).

51. Hughes, N.; D’Agostino, A.; Reinerman, L. The NRC’s Human Performance Test Facility: Methodological considerations for developing a research program for systematic data collection using an NPP simulator. In Proceedings of the Enlarged Halden Programme Group (EHPG) Meeting, Lillehammer, Norway, 18–24 September 2017.

52. Reinerman-Jones, L.E.; Guznov, S.; Mercado, J.; D’Agostino, A. Developing methodology for experimentation using a nuclear power plant simulator. In Foundations of Augmented Cognition; Schmorrow, D.D., Fidopiastis, C.M., Eds.; Springer: Heidelberg, Germany, 2013; pp. 181–188.

53. Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; North-Holland: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

54. Boles, D.B.; Adair, L.P. The Multiple Resources Questionnaire (MRQ). Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2001, 45, 1790–1794.

55. Tattersall, A.J.; Foord, P.S. An experimental evaluation of instantaneous self-assessment as a measure of workload. Ergonomics 1996, 39, 740–748.

56. Jasper, H.H. The 10/20 international electrode system. Electroencephalogr. Clin. Neurophysiol. 1958, 10, 370–375.

57. Borghini, G.; Astolfi, L.; Vecchiato, G.; Mattia, D.; Babiloni, F. Measuring neurophysiological signals in aircraft pilots and car drivers for the assessment of mental workload, fatigue and drowsiness. Neurosci. Biobehav. Rev. 2014, 44, 58–75.

58. So, H.H.; Chan, K.L. Development of QRS detection method for real-time ambulatory cardiac monitor. In Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’, Chicago, IL, USA, 30 October–2 November 1997; Volume 1, pp. 289–292.

59. Taylor, G.; Reinerman-Jones, L.E.; Cosenzo, K.; Nicholson, D. Comparison of multiple physiological sensors to classify operator state in adaptive automation systems. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2010, 54, 195–199.

60. Fairclough, S.H.; Mulder, L.J.M. Psychophysiological processes of mental effort investment. In How Motivation Affects Cardiovascular Response: Mechanisms and Applications; Wright, R.A., Gendolla, G.H.E., Eds.; American Psychological Association: Washington, DC, USA, 2011; pp. 61–76.

61. Warm, J.S.; Tripp, L.D.; Matthews, G.; Helton, W.S. Cerebral hemodynamic indices of operator fatigue in vigilance. In Handbook of Operator Fatigue; Matthews, G., Desmond, P.A., Neubauer, C., Hancock, P.A., Eds.; Ashgate Press: Aldershot, UK, 2012; pp. 197–207.

62. Ayaz, H.; Shewokis, P.A.; Curtin, A.; Izzetoglu, M.; Izzetoglu, K.; Onaral, B. Using MazeSuite and functional near infrared spectroscopy to study learning in spatial navigation. J. Vis. Exp. 2011, 56, 3443.

63. Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Erlbaum: Hillsdale, NJ, USA, 1988.

64. Lakens, D. Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t-tests and ANOVAs. Front. Psychol. 2013, 4, 863.

65. Kim, Y.; Jung, W.; Kim, S. Empirical investigation of workloads of operators in advanced control rooms. J. Nucl. Sci. Technol. 2014, 51, 744–751.

66. Langner, R.; Eickhoff, S.B. Sustaining attention to simple tasks: A meta-analytic review of the neural mechanisms of vigilant attention. Psychol. Bull. 2013, 139, 870.

67. Kamzanova, A.T.; Kustubayeva, A.M.; Matthews, G. Use of EEG workload indices for diagnostic monitoring of vigilance decrement. Hum. Factors 2014, 56, 1136–1149.

68. Hoff, K.A.; Bashir, M. Trust in automation: Integrating empirical evidence on factors that influence trust. Hum. Factors 2015, 57, 407–434.

69. Joe, J.C.; Remer, S.J. Developing a Roadmap for Total Nuclear Plant Transformation; (No. INL/EXT-19-54766-Rev000); Idaho National Laboratory: Idaho Falls, ID, USA, 2019.

70. Burkolter, D.; Kluge, A.; Sauer, J.; Ritzmann, S. Comparative study of three training methods for enhancing process control performance: Emphasis shift training, situation awareness training, and drill and practice. Comput. Hum. Behav. 2010, 26, 976–986.

71. Kluge, A.; Nazir, S.; Manca, D. Advanced applications in process control and training needs of field and control room operators. IIE Trans. Occup. Ergon. Hum. Factors 2014, 2, 121–136.

72. Hancock, P.A. Reacting and responding to rare, uncertain and unprecedented events. Ergonomics 2023, 66, 454–478.

| Level | Automation Tasks | Human Tasks |
|---|---|---|
| (1) Manual Operation | No automation | Operators manually perform all tasks |
| (2) Shared Operation | Automatic performance of some tasks | Operators perform some tasks manually |
| (3) Operation by Consent | Automatic performance when directed by operators to do so, under close monitoring and supervision | Operators monitor closely, approve actions, and may intervene to provide supervisory commands that automation follows |
| (4) Operation by Exception | Essentially autonomous operation unless specific situations or circumstances are encountered | Operators must approve of critical decisions and may intervene |
| (5) Autonomous Operation | Fully autonomous operation. System cannot normally be disabled but may be started manually | Operators monitor performance and perform back up if necessary, feasible, and permitted |
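Of the five levels in the table above, the two conditions compared in this study appear to correspond to levels (3) and (4). The gating rule that separates them can be sketched for a single procedure step as follows. This is a hypothetical illustration, not the study's simulator or CBP software: the names `LOA` and `step_executes`, and the reduction of each level to an approve/veto/critical rule, are assumptions made here for clarity.

```python
from enum import Enum


class LOA(Enum):
    """Hypothetical labels for the two levels of automation compared here."""
    CONSENT = 3    # assumed to map to level (3), Operation by Consent
    EXCEPTION = 4  # assumed to map to level (4), Operation by Exception


def step_executes(loa: LOA, *, approved: bool = False, vetoed: bool = False,
                  critical: bool = False) -> bool:
    """Decide whether automation carries out one procedure step.

    approved: the operator has directed or approved the action.
    vetoed:   the operator has intervened to stop the action.
    critical: the step is a critical decision (matters only for EXCEPTION).
    """
    if vetoed:
        # At both levels the operator may intervene and stop the step.
        return False
    if loa is LOA.CONSENT:
        # Automation acts only when the operator directs it to.
        return approved
    # Management-by-exception: proceed autonomously, but critical
    # decisions still require operator approval.
    return approved if critical else True


# A non-critical step with no operator input:
print(step_executes(LOA.CONSENT))    # False: waits for operator consent
print(step_executes(LOA.EXCEPTION))  # True: proceeds without operator input
```

The key design difference is the default: under consent, inaction by the operator stalls the step; under exception, inaction lets it proceed, which shifts the operator's role from approving to monitoring.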

| NASA-TLX scale | Management-by-Consent M | Management-by-Consent SD | Management-by-Exception M | Management-by-Exception SD | t | df |
|---|---|---|---|---|---|---|
| Global Workload | 22.21 | 14.95 | 25.43 | 16.00 | −1.66 | 34 |
| Mental Demand | 37.00 | 28.88 | 38.63 | 32.32 | −0.39 | 34 |
| Physical Demand | 17.29 | 14.52 | 21.89 | 21.01 | −1.33 | 34 |
| Temporal Demand | 19.14 | 21.13 | 28.06 | 25.24 | −3.04 * | 34 |
| Effort | 20.71 | 18.03 | 25.77 | 24.56 | −1.75 | 34 |
| Frustration | 18.49 | 27.23 | 17.97 | 20.39 | 0.13 | 34 |
| Performance | 20.66 | 28.97 | 20.26 | 27.16 | 0.07 | 34 |
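In the table above, each Global Workload value matches the unweighted mean of the six subscale means; for Management-by-Consent, (37.00 + 17.29 + 19.14 + 20.71 + 18.49 + 20.66)/6 ≈ 22.21. That pattern suggests raw (unweighted) TLX scoring rather than the pairwise-weighted version, although this is an inference from the table, not a statement from the Method section. Because a mean is linear, the mean of participants' raw TLX scores equals the mean of the six subscale means whenever every participant rated all six subscales. A minimal sketch of that scoring rule, with the hypothetical helper name `raw_tlx`:

```python
from statistics import mean

# The six NASA-TLX subscales, in the order they appear in the table above.
SUBSCALES = ("Mental Demand", "Physical Demand", "Temporal Demand",
             "Effort", "Frustration", "Performance")


def raw_tlx(ratings: dict) -> float:
    """Raw (unweighted) TLX global workload: the plain mean of the six subscales."""
    missing = [s for s in SUBSCALES if s not in ratings]
    if missing:
        raise ValueError(f"missing subscale ratings: {missing}")
    return mean(ratings[s] for s in SUBSCALES)


# Subscale means copied from the table above.
consent = {"Mental Demand": 37.00, "Physical Demand": 17.29, "Temporal Demand": 19.14,
           "Effort": 20.71, "Frustration": 18.49, "Performance": 20.66}
exception = {"Mental Demand": 38.63, "Physical Demand": 21.89, "Temporal Demand": 28.06,
             "Effort": 25.77, "Frustration": 17.97, "Performance": 20.26}

print(raw_tlx(consent), raw_tlx(exception))
```

Both results agree with the Global Workload row (22.21 and 25.43) to within rounding of the published subscale means.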

| MRQ scale | Management-by-Consent M | Management-by-Consent SD | Management-by-Exception M | Management-by-Exception SD | t | df |
|---|---|---|---|---|---|---|
| Auditory Emotional | 9.30 | 22.41 | 6.91 | 16.89 | 0.71 | 43 |
| Auditory Linguistic | 10.16 | 21.85 | 13.70 | 36.19 | −0.82 | 43 |
| Manual Process | 54.98 | 36.19 | 55.02 | 37.29 | −0.01 | 43 |
| Short-Term Memory | 51.48 | 35.66 | 57.23 | 36.44 | −1.75 | 43 |
| Spatial Attentive | 65.45 | 38.68 | 61.80 | 36.95 | 1.00 | 43 |
| Spatial Concentrative | 50.80 | 35.67 | 50.05 | 36.36 | 0.20 | 43 |
| Spatial Categorical | 44.80 | 35.53 | 40.91 | 36.50 | 0.96 | 43 |
| Spatial Emergent | 57.14 | 37.63 | 56.80 | 39.55 | 0.08 | 43 |
| Spatial Positional | 56.25 | 36.82 | 60.34 | 37.25 | −0.90 | 43 |
| Spatial Quantitative | 49.50 | 38.12 | 48.39 | 39.37 | 0.25 | 43 |
| Visual Lexical | 59.55 | 37.78 | 58.93 | 37.92 | 0.11 | 43 |
| Visual Phonetic | 27.82 | 34.06 | 37.25 | 39.01 | −2.29 | 43 |
| Visual Temporal | 53.55 | 39.66 | 53.45 | 38.31 | 0.02 | 43 |
| Vocal Process | 15.18 | 27.86 | 12.61 | 24.34 | 1.13 | 43 |

| Task type | Management-by-Consent M | Management-by-Consent SD | Management-by-Consent n | Management-by-Exception M | Management-by-Exception SD | Management-by-Exception n | ANOVA effect | F | df | ηp² |
|---|---|---|---|---|---|---|---|---|---|---|
| Checking | 1.41 | 0.64 | 37 | 1.76 | 0.90 | 37 | LOA | 5.81 * | 1, 36 | 0.14 |
| Response | 1.24 | 0.44 | 37 | 1.62 | 0.72 | 37 | Task type | 1.59 | 2, 72 | 0.04 |
| Detection | 1.59 | 0.69 | 37 | 1.51 | 0.80 | 37 | LOA × Task type | 1.23 ** | 2, 72 | 0.18 |

| Task type | Management-by-Consent M | Management-by-Consent SD | Management-by-Exception M | Management-by-Exception SD | ANOVA effect | F | df | ηp² |
|---|---|---|---|---|---|---|---|---|
| Checking | 76.64 | 143.09 | 36.23 | 71.31 | LOA | 4.28 * | 1, 32 | 0.12 |
| Response Implementation | 49.75 | 116.10 | 29.93 | 77.49 | Task type | 1.16 | 2, 64 | 0.04 |
| Detection | 65.70 | 129.10 | 40.17 | 62.94 | LOA × Task type | 0.52 | 2, 64 | 0.02 |
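Each ηp² value in the two ANOVA tables above can be recovered from its F ratio and degrees of freedom, reading each df entry as a (numerator, denominator) pair such as (1, 36). Because F = (SS_effect/df_effect)/(SS_error/df_error), the ratio SS_effect/SS_error equals F·df_effect/df_error, which gives the identity used below. The function name `partial_eta_squared` is illustrative and not taken from the authors' analysis scripts.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio and its two df.

    Since F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = F * df_effect / (F * df_effect + df_error).
    """
    if f < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    effect = f * df_effect
    return effect / (effect + df_error)


# Main effects of LOA reported in the two ANOVA tables above.
print(round(partial_eta_squared(5.81, 1, 36), 2))  # 0.14
print(round(partial_eta_squared(4.28, 1, 32), 2))  # 0.12
```

The same check reproduces the task-type and interaction entries to within rounding of the reported F values, which makes it a quick way to catch transcription errors in F or df.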

| Measure | Management-by-Consent M | Management-by-Consent SD | Management-by-Exception M | Management-by-Exception SD | t | df |
|---|---|---|---|---|---|---|
| Accuracy | 58.86 | 32.91 | 65.14 | 33.81 | −1.21 | 34 |
| Reaction Time | 12.49 | 4.08 | 10.06 | 3.80 | 2.38 * | 29 |

| | HIT | PAS High Expectation | PAS All-or-None Thinking |
|---|---|---|---|
| CTPA Management-by-Consent | 0.374 * | 0.295 | −0.003 |
| CTPA Management-by-Exception | 0.429 ** | 0.261 | 0.011 |

| | Current Study | Hughes et al. (2023), Exp 2 [31] |
|---|---|---|
| Simulator | GSE GPWR (touchscreen) | GSE GPWR (touchscreen) |
| Sample | Novice student | Novice student |
| Crew | Individual | Crew of three |
| Scenario | Derived from EOP ECA-0.0 | Derived from EOP ECA-0.0 |
| Panel | Similar but not identical | Similar but not identical |
| Task | Similar but not identical | Similar but not identical |
| Task step | In natural order | Grouped (by task type) |
| Automation | Two LOAs | None |

| Measure | Hughes et al. (2023; Exp 2) [31] M | Hughes et al. (2023; Exp 2) [31] SD | Current Study: Management-by-Consent M | Current Study: Management-by-Consent SD | t | df |
|---|---|---|---|---|---|---|
| NASA-TLX | | | | | | |
| Global Workload | 30.93 | 16.19 | 22.48 | 15.17 | 2.62 * | 104 |
| Mental Demand | 39.00 | 24.12 | 38.11 | 29.19 | 1.70 | 104 |
| Physical Demand | 20.72 | 17.19 | 17.03 | 14.16 | 1.12 | 104 |
| Temporal Demand | 32.25 | 20.85 | 19.05 | 20.74 | 3.11 ** | 104 |
| Effort | 29.06 | 18.94 | 21.08 | 18.38 | 2.09 * | 104 |
| Frustration | 33.79 | 20.02 | 18.97 | 27.07 | 3.20 ** | 104 |
| Performance | 30.77 | 20.72 | 20.62 | 28.55 | 2.10 * | 104 |
| MRQ | | | | | | |
| Auditory Emotional | 40.40 | 24.90 | 9.30 | 22.41 | 6.89 ** | 98.69 |
| Auditory Linguistic | 69.89 | 19.41 | 10.16 | 21.84 | 15.18 ** | 111 |
| Manual Process | 51.76 | 22.43 | 54.98 | 36.18 | −0.53 | 64.22 |
| Short-Term Memory | 71.62 | 20.10 | 51.48 | 35.65 | 3.42 ** | 60.62 |
| Spatial Attentive | 66.32 | 19.59 | 65.45 | 38.68 | 0.14 | 57.25 |
| Spatial Concentrative | 59.03 | 18.77 | 50.80 | 35.67 | 1.41 | 58.38 |
| Spatial Categorical | 55.15 | 20.41 | 44.80 | 35.53 | 1.76 | 61.30 |
| Spatial Emergent | 66.71 | 19.94 | 57.14 | 37.63 | 1.55 | 58.59 |
| Spatial Positional | 67.20 | 18.94 | 56.25 | 36.82 | 1.82 | 57.71 |
| Spatial Quantitative | 49.77 | 22.32 | 49.50 | 38.12 | 0.04 | 61.99 |
| Visual Lexical | 69.05 | 20.85 | 59.55 | 37.78 | 1.53 | 59.90 |
| Visual Phonetic | 61.84 | 22.14 | 27.82 | 34.06 | 5.88 ** | 66.26 |
| Visual Temporal | 43.28 | 21.83 | 53.55 | 39.66 | −1.57 | 59.81 |
| Vocal Process | 67.42 | 22.21 | 15.18 | 27.86 | 11.03 ** | 111 |
| ISA | | | | | | |
| Checking | 2.41 | 0.58 | 1.41 | 0.64 | 8.29 ** | 106 |
| Detection | 2.01 | 0.93 | 1.62 | 0.71 | 2.32 * | 106 |
| Response | 2.16 | 0.53 | 1.28 | 0.51 | 8.35 ** | 106 |
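Several rows of the cross-study table report non-integer df (for example, 98.69 and 64.22), which is what Welch's unequal-variance t-test produces through the Welch-Satterthwaite approximation. The sketch below computes Welch's t and df from group means, SDs, and sample sizes; it is offered as an illustration of that test under the assumption that it was the one applied, not as the authors' procedure. Group sizes for the two studies are not given in this section, so the example uses made-up inputs and does not reproduce any value in the table; `welch_t` is a hypothetical helper name.

```python
import math


def welch_t(m1: float, s1: float, n1: int, m2: float, s2: float, n2: int):
    """Welch's unequal-variance t test from summary statistics.

    Returns (t, df), where df is the Welch-Satterthwaite approximation.
    """
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two observations")
    v1 = s1 ** 2 / n1  # squared standard error of the first mean
    v2 = s2 ** 2 / n2  # squared standard error of the second mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Illustrative inputs only (not values from either study).
t, df = welch_t(10.0, 2.0, 10, 8.0, 2.0, 10)
print(f"t = {t:.3f}, df = {df:.1f}")  # t = 2.236, df = 18.0
```

With equal SDs and equal group sizes, the Welch df reduces to n1 + n2 − 2, the pooled-variance value; as the SDs diverge (as in the MRQ rows, where the current study's SDs are markedly larger), the df drops below that ceiling.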

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Schreck, J.; Matthews, G.; Lin, J.; Mondesire, S.; Metcalf, D.; Dickerson, K.; Grasso, J. Levels of Automation for a Computer-Based Procedure for Simulated Nuclear Power Plant Operation: Impacts on Workload and Trust. Safety 2025, 11, 22. https://doi.org/10.3390/safety11010022
