Rethinking Underwater Crab Detection via Defogging and Channel Compensation

Abstract

1. Introduction

2. Materials and Methods

2.1. Database Acquisition

2.2. Overview of the Methodology

2.3. Improved Dark Channel Prior Approach

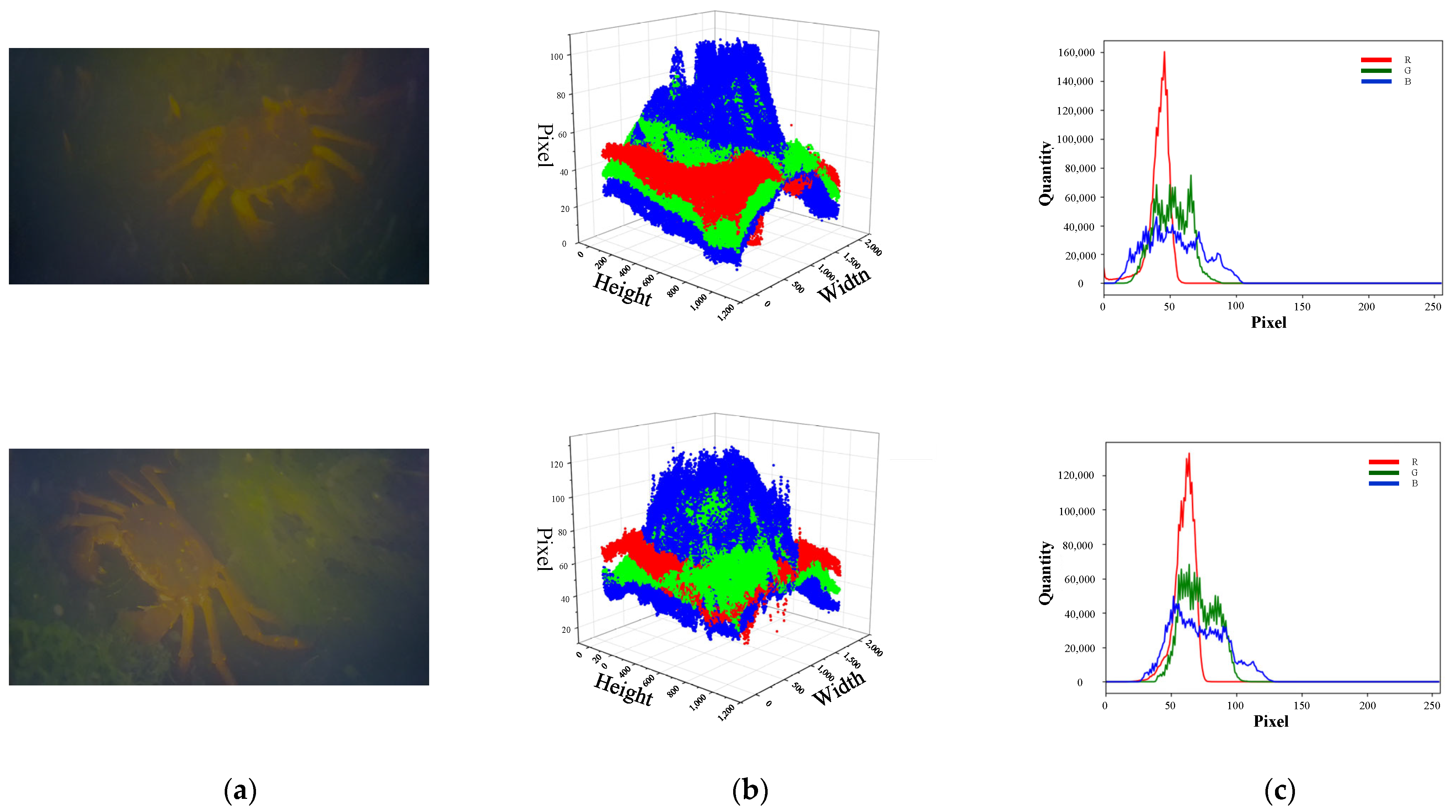
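Section 2.3 builds on the dark channel prior. For orientation only, the following is a minimal pure-Python sketch of the classic, unmodified prior, not the authors' improved variant. It computes the dark channel (per-pixel minimum over R, G, B followed by a local minimum filter), the coarse transmission t = 1 - ω·dark(I/A) and the recovered radiance J = (I - A)/max(t, t0) + A. The atmospheric light A is assumed to be already estimated. ω = 0.95, t0 = 0.1 and the 15-pixel patch are conventional defaults, not values from this paper. The function names are hypothetical.

```python
from typing import List, Sequence

Pixel = Sequence[float]       # (R, G, B), each channel scaled to [0, 1]
Image = List[List[Pixel]]     # row-major grid of pixels
Map = List[List[float]]       # single-channel map with the same height/width


def dark_channel(img: Image, patch: int = 15) -> Map:
    """Classic dark channel: per-pixel minimum over R, G, B, then a
    minimum filter over a patch x patch window (clipped at the borders)."""
    h, w = len(img), len(img[0])
    r = patch // 2
    min_rgb = [[min(px) for px in row] for row in img]
    return [
        [
            min(
                min_rgb[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def transmission(img: Image, airlight: Pixel, omega: float = 0.95,
                 patch: int = 15) -> Map:
    """Coarse transmission t(x) = 1 - omega * dark_channel(I / A)(x).
    Assumes every airlight component is strictly positive."""
    normalized = [[[c / a for c, a in zip(px, airlight)] for px in row]
                  for row in img]
    return [[1.0 - omega * d for d in row]
            for row in dark_channel(normalized, patch)]


def recover(img: Image, t: Map, airlight: Pixel, t0: float = 0.1) -> Image:
    """Scene radiance J = (I - A) / max(t, t0) + A, applied per channel."""
    return [
        [[(c - a) / max(tv, t0) + a for c, a in zip(px, airlight)]
         for px, tv in zip(row, trow)]
        for row, trow in zip(img, t)
    ]
```

The nested loops cost O(height × width × patch²) and are meant to show the definition, not to be fast. A practical version would replace the window minimum with a grayscale erosion from an image-processing library.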

2.4. First-Step Color Correction

2.5. Second-Step Color Correction

2.6. Crab Identification Algorithm Based on Improved YOLOv5s

3. Results and Discussion

3.1. Subjective Estimation

3.2. Objective Estimation

3.3. Engineering Application Assessment

3.3.1. Comparison of Target Detection Algorithms

3.3.2. Engineering Application Evaluation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Cao, S.; Chen, Z.; Sun, Y.; Zhao, D.; Hong, J.; Ruan, C. Research on Automatic Bait Casting System for Crab Farming. In Proceedings of the 2020 5th International Conference on Electromechanical Control Technology and Transportation, Nanchang, China, 15–17 May 2020; pp. 403–408.

- Cao, S.; Zhao, D.; Sun, Y.; Ruan, C. Learning-based low-illumination image enhancer for underwater live crab detection. ICES J. Mar. Sci. 2021, 78, 979–993.

- Zhang, X.; Zhang, J.; Shi, D.; Xu, F.; Wu, H. Study on Methods of Comprehensive Climatic Regionalization of the Crab in Jiangsu Province. Chin. J. Agric. Resour. Reg. Plan. 2021, 42, 130–135.

- Gu, J.; Zhang, Y.; Liu, X.; Du, Y. Preliminary Study on Mathematical Model of Growth for Young Chinese Mitten Crab. J. Tianjin Agric. Coll. 1997, 4, 23–30.

- Cao, S.; Zhao, D.; Liu, X.; Sun, Y. Real-time robust detector for underwater live crabs based on deep learning. Comput. Electron. Agric. 2020, 172, 105339.

- Cao, S.; Zhao, D.; Sun, Y.; Liu, X.; Ruan, C. Automatic coarse-to-fine joint detection and segmentation of underwater non-structural live crabs for precise feeding. Comput. Electron. Agric. 2021, 180, 105905.

- Li, J.; Xu, W.; Deng, L.; Xiao, Y.; Han, Z.; Zheng, H. Deep learning for visual recognition and detection of aquatic animals: A review. Rev. Aquac. 2022, 15, 409–433.

- Zhai, X.; Wei, H.; He, Y.; Shang, Y.; Liu, C. Underwater Sea Cucumber Identification Based on Improved YOLOv5. Appl. Sci. 2022, 12, 9105.

- Zhao, S.; Zhang, S.; Lu, J.; Wang, H.; Feng, Y.; Shi, C.; Li, D.; Zhao, R. A lightweight dead fish detection method based on deformable convolution and YOLOV4. Comput. Electron. Agric. 2022, 198, 107098.

- Sun, Y.; Chen, Z.; Zhao, D.; Zhan, T.; Zhou, W.; Ruan, C. Design and Experiment of Precise Feeding System for Pond Crab Culture. Trans. Chin. Soc. Agric. Mach. 2022, 53, 291–301.

- Siripattanadilok, W.; Siriborvornratanakul, T. Recognition of partially occluded soft-shell mud crabs using Faster R-CNN and Grad-CAM. Aquac. Int. 2023, 1–21.

- Chen, X.; Zhang, Y.; Li, D.; Duan, Q. Chinese mitten crab detection and gender classification method based on GMNet-YOLOv4. Comput. Electron. Agric. 2023, 214, 108318.

- Zhu, D. Underwater Image Enhancement Based on the Improved Algorithm of Dark Channel. Mathematics 2023, 11, 1382.

- Li, T.; Zhou, T. Multi-scale fusion framework via retinex and transmittance optimization for underwater image enhancement. PLoS ONE 2022, 17, e0275107.

- Zhou, J.; Yao, J.; Zhang, W.; Zhang, D. Multi-scale retinex-based adaptive gray-scale transformation method for underwater image enhancement. Multimed. Tools Appl. 2021, 81, 1811–1831.

- Zhou, J.; Liu, D.; Xie, X.; Zhang, W. Underwater image restoration by red channel compensation and underwater median dark channel prior. Appl. Opt. 2022, 61, 2915–2922.

- Perez, J.; Attanasio, A.C.; Nechyporenko, N.; Sanz, P.J. A Deep Learning Approach for Underwater Image Enhancement. In Proceedings of the 6th International Work-Conference on the Interplay between Natural and Artificial Computation, Elche, Spain, 1–5 June 2017; pp. 183–192.

- Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised Generative Network to Enable Real-Time Color Correction of Monocular Underwater Images. IEEE Robot. Autom. Lett. 2017, 3, 387–394.

- Zhang, M.; Xu, S.; Song, W.; He, Q.; Wei, Q. Lightweight Underwater Object Detection Based on YOLO v4 and Multi-Scale Attentional Feature Fusion. Remote Sens. 2021, 13, 4706.

- Wen, G.; Li, S.; Liu, F.; Luo, X.; Er, M.-J.; Mahmud, M.; Wu, T. YOLOv5s-CA: A Modified YOLOv5s Network with Coordinate Attention for Underwater Target Detection. Sensors 2023, 23, 3367.

- Zhao, S.; Zheng, J.; Sun, S.; Zhang, L. An Improved YOLO Algorithm for Fast and Accurate Underwater Object Detection. Symmetry 2022, 14, 1669.

- Mao, G.; Weng, W.; Zhu, J.; Zhang, Y.; Wu, F.; Mao, Y. Model for marine organism detection in shallow sea using the improved YOLO-V4 network. Trans. Chin. Soc. Agric. Eng. 2021, 37, 152–158.

- Liu, K.; Peng, L.; Tang, S. Underwater Object Detection Using TC-YOLO with Attention Mechanisms. Sensors 2023, 23, 2567.

- Zhang, J.; Wang, Y.; Xu, X.; Liu, Y.; Lu, L.; Wu, Q. YoloXT: A object detection algorithm for marine benthos. Ecol. Inform. 2022, 72, 101923.

- Wang, Y.; Cao, J.; Tang, M.; Li, G.; Hao, Q.; Fang, Y. Underwater image restoration based on improved dark channel prior. Symp. Nov. Photoelectron. Detect. Technol. Appl. 2021, 11763, 998–1003.

- Yu, J.; Huang, S.; Zhou, S.; Chen, L.; Li, H. The improved dehazing method fusion-based. In Proceedings of the 2019 Chinese Automation Congress, Hangzhou, China, 22–24 November 2019; pp. 4370–4374.

- Zou, Y.; Ma, Y.; Zhuang, L.; Wang, G. Image Haze Removal Algorithm Using a Logarithmic Guide Filtering and Multi-Channel Prior. IEEE Access 2021, 9, 11416–11426.

- Suresh, S.; Rajan, M.R.; Pushparaj, J.; Asha, C.S.; Lal, S.; Reddy, C.S. Dehazing of Satellite Images using Adaptive Black Widow Optimization-based framework. Int. J. Remote Sens. 2021, 42, 5072–5090.

- Sun, S.; Zhao, S.; Zheng, J. Intelligent Site Detection Based on Improved YOLO Algorithm. In Proceedings of the International Conference on Big Data Engineering and Education (BDEE), Guiyang, China, 12–14 August 2021; pp. 165–169.

- Gasparini, F.; Schettini, R. Color correction for digital photographs. In Proceedings of the 12th International Conference on Image Analysis and Processing, Mantova, Italy, 17–19 September 2003; pp. 646–651.

- Gasparini, F.; Schettini, R. Color balancing of digital photos using simple image statistics. Pattern Recognit. 2004, 37, 1201–1217.

- Xu, X.; Cai, Y.; Liu, C.; Jia, K.; Sun, L. Color Cast Detection and Color Correction Methods Based on Image Analysis. Meas. Control Technol. 2008, 27, 10–12+21.

- Zhao, D.; Liu, X.; Sun, Y.; Wu, R.; Hong, J.; Ruan, C. Detection of Underwater Crabs Based on Machine Vision. Trans. Chin. Soc. Agric. Mach. 2019, 50, 151–158.

- Zhao, D.; Cao, S.; Sun, Y.; Qi, H.; Ruan, C. Small-sized Efficient Detector for Underwater Freely Live Crabs Based on Compound Scaling Neural Network. Trans. Chin. Soc. Agric. Mach. 2020, 51, 163–174.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- MacQueen, J. Some Methods for Classification and Analysis of Multivariate Observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1967; pp. 281–297.

- Arthur, D.; Vassilvitskii, S. k-Means++: The Advantages of Careful Seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; pp. 1027–1035.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. In Proceedings of the 34th AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12993–13000.

- Zhou, Q.; Qin, J.; Xiang, X.; Tan, Y.; Xiong, N. Algorithm of Helmet Wearing Detection Based on AT-YOLO Deep Mode. Comput. Mater. Contin. 2021, 69, 159–174.

- Bhateja, V.; Yadav, A.; Singh, D.; Chauhan, B. Multi-scale Retinex with Chromacity Preservation (MSRCP)-Based Contrast Enhancement of Microscopy Images. Smart Intell. Comput. Appl. 2022, 2, 313–321.

- Chen, D.; He, M.; Fan, Q.; Liao, J.; Zhang, L.; Hou, D.; Yuan, L.; Hua, G. Gated Context Aggregation Network for Image Dehazing and Deraining. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision, Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1375–1383.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A Statistical Evaluation of Recent Full Reference Image Quality Assessment Algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Yang, M.; Sowmya, A. An Underwater Color Image Quality Evaluation Metric. IEEE Trans. Image Process. 2015, 24, 6062–6071.

- Tsai, D.; Lee, Y.; Matsuyama, E. Information Entropy Measure for Evaluation of Image Quality. J. Digit. Imaging 2008, 21, 338–347.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

| Clustering Algorithms | Datasets | Pre-Selected Anchor Boxes |
|---|---|---|
| K-means | COCO | (10, 13), (16, 30), (33, 23), (30, 61), (62, 45), (59, 119), (116, 90), (156, 198), (373, 326) |
| K-means | Self-made | (333, 1079), (420, 968), (457, 1601), (506, 743), (506, 1141), (587, 1299), (619, 977), (659, 1568), (723, 1228) |
| K-means++ | Self-made | (328, 1062), (431, 1078), (447, 1581), (469, 714), (528, 1018), (577, 1285), (657, 985), (669, 1595), (756, 1256) |
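The anchor table compares boxes obtained by K-means and K-means++ clustering of labelled (width, height) pairs. Below is a minimal sketch of anchor clustering with K-means++ seeding. It assumes the 1 - IoU distance between box sizes used in YOLO9000 and mean-based centroid updates. The paper's actual distance, update rule, iteration limit and random seed are not given here, and `iou_wh`, `kmeanspp_init` and `kmeans_anchors` are hypothetical names.

```python
import random
from typing import List, Sequence, Tuple

Box = Tuple[float, float]  # (width, height) of a labelled bounding box


def iou_wh(a: Box, b: Box) -> float:
    """IoU of two boxes aligned at the same corner, so only sizes matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def distance(a: Box, b: Box) -> float:
    """Assumed clustering distance: 1 - IoU (as in YOLO9000)."""
    return 1.0 - iou_wh(a, b)


def kmeanspp_init(boxes: Sequence[Box], k: int, rng: random.Random) -> List[Box]:
    """K-means++ seeding: first centre uniformly at random, each further
    centre drawn with probability proportional to D(x)^2, the squared
    distance to the nearest centre chosen so far."""
    centers = [rng.choice(boxes)]
    while len(centers) < k:
        d2 = [min(distance(b, c) for c in centers) ** 2 for b in boxes]
        if sum(d2) == 0:  # fewer distinct boxes than k: fall back to uniform
            centers.append(rng.choice(boxes))
        else:
            centers.append(rng.choices(boxes, weights=d2, k=1)[0])
    return centers


def kmeans_anchors(boxes: Sequence[Box], k: int = 9, iters: int = 100,
                   seed: int = 0) -> List[Box]:
    """Lloyd iterations under the 1 - IoU distance; returns anchors sorted by area."""
    rng = random.Random(seed)
    centers = kmeanspp_init(boxes, k, rng)
    for _ in range(iters):
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for b in boxes:
            nearest = min(range(k), key=lambda i: distance(b, centers[i]))
            clusters[nearest].append(b)
        # Mean width/height per cluster; an empty cluster keeps its old centre.
        new_centers = [
            (sum(w for w, _ in m) / len(m), sum(h for _, h in m) / len(m)) if m else c
            for c, m in zip(centers, clusters)
        ]
        if new_centers == centers:  # centres stopped moving: converged
            break
        centers = new_centers
    return sorted(centers, key=lambda c: c[0] * c[1])
```

With `k=9` and the labelled crab boxes as input, the sorted output plays the role of one row of nine anchors. In this sketch the K-means and K-means++ variants differ only in how the initial centres are chosen, which is also the only difference between the two algorithms.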

| Image | Indicators | Proposed Method | DCP | AHE | MSRCP | GCANet |
|---|---|---|---|---|---|---|
| 1 | SSIM ↑ | 0.893 | 0.453 | 0.770 | 0.041 | 0.475 |
| | PSNR ↑ | 15.189 | 14.916 | 14.061 | 7.306 | 9.866 |
| | UCIQE ↑ | 0.632 | 0.596 | 0.629 | 0.631 | 0.650 |
| | IE ↑ | 6.342 | 6.002 | 6.752 | 7.459 | 7.606 |
| | NIQE ↓ | 14.839 | 13.074 | 16.949 | 18.240 | 14.270 |
| 2 | SSIM ↑ | 0.879 | 0.424 | 0.709 | 0.051 | 0.442 |
| | PSNR ↑ | 15.724 | 14.176 | 15.200 | 7.273 | 9.573 |
| | UCIQE ↑ | 0.644 | 0.634 | 0.598 | 0.610 | 0.653 |
| | IE ↑ | 6.251 | 5.895 | 6.536 | 7.328 | 7.845 |
| | NIQE ↓ | 15.983 | 13.030 | 16.453 | 18.531 | 15.334 |
| 3 | SSIM ↑ | 0.887 | 0.433 | 0.769 | 0.037 | 0.478 |
| | PSNR ↑ | 15.202 | 14.965 | 14.163 | 7.156 | 9.316 |
| | UCIQE ↑ | 0.636 | 0.614 | 0.610 | 0.628 | 0.624 |
| | IE ↑ | 6.243 | 5.778 | 6.740 | 7.504 | 7.593 |
| | NIQE ↓ | 15.734 | 13.393 | 19.625 | 18.467 | 15.383 |
| 4 | SSIM ↑ | 0.805 | 0.519 | 0.618 | 0.044 | 0.472 |
| | PSNR ↑ | 16.467 | 13.478 | 10.834 | 6.116 | 8.613 |
| | UCIQE ↑ | 0.648 | 0.625 | 0.638 | 0.663 | 0.668 |
| | IE ↑ | 6.595 | 5.728 | 7.297 | 7.430 | 7.692 |
| | NIQE ↓ | 15.058 | 12.597 | 16.400 | 16.456 | 12.635 |
| 5 | SSIM ↑ | 0.855 | 0.432 | 0.734 | 0.038 | 0.355 |
| | PSNR ↑ | 14.662 | 14.260 | 13.514 | 6.067 | 8.506 |
| | UCIQE ↑ | 0.633 | 0.609 | 0.618 | 0.631 | 0.671 |
| | IE ↑ | 6.104 | 5.473 | 6.552 | 7.392 | 7.712 |
| | NIQE ↓ | 16.078 | 13.205 | 17.417 | 17.539 | 13.637 |
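Two of the indicators in the quality table have simple closed forms. The sketch below assumes 8-bit grayscale images flattened into integer sequences. It computes PSNR = 10·log10(255² / MSE) against a reference image, and information entropy IE = -Σ p_i·log2 p_i over the grey-level histogram. This section does not state which reference image the authors used for PSNR, or whether IE was computed per channel or on a grey conversion. SSIM, UCIQE and NIQE are omitted because they need considerably more machinery. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence


def psnr(ref: Sequence[float], test: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    if len(ref) != len(test) or not ref:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((a - b) ** 2 for a, b in zip(ref, test)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def information_entropy(pixels: Sequence[int]) -> float:
    """Shannon entropy (bits) of the grey-level histogram."""
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

For an 8-bit image the entropy cannot exceed log2(256) = 8 bits, which is consistent with the IE values of roughly 5.5 to 7.9 in the table.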

| Algorithm | Parameters (Million) | GFLOPs | Precision | Recall | mAP | FPS (f/s) |
|---|---|---|---|---|---|---|
| YOLOv3 | 61.55 | 155.3 | 0.957 | 0.995 | 0.988 | 34 |
| YOLOv3 + SHU | 5.53 | 9.2 | 0.919 | 0.982 | 0.978 | 44 |
| YOLOv5s | 7.04 | 16 | 0.973 | 0.978 | 0.991 | 41 |
| YOLOv5s + SHU | 3.20 | 5.9 | 0.914 | 0.991 | 0.977 | 50 |
| YOLOv5s + MobileNetV3s | 3.56 | 6.4 | 0.962 | 0.962 | 0.985 | 36 |
| YOLOv5s + PP-LCNet | 13.42 | 24.9 | 0.947 | 0.987 | 0.984 | 41 |

| Number | Image | DCP | AHE | MSRCP | GCANet | Proposed Method | |
|---|---|---|---|---|---|---|---|
| Number of detections | 1800 | 1345 | 1439 | 1249 | 928 | 1321 | 1634 |
| Average confidence level | - | 0.51 | 0.63 | 0.47 | 0.43 | 0.51 | 0.75 |
| Detection rate | - | 74.72% | 79.94% | 69.39% | 51.56% | 73.39% | 90.78% |
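Each entry in the detection-rate row is the corresponding detection count divided by the 1800 in the first numeric column; for example, 1345/1800 ≈ 74.72%. The short check below recomputes the whole row from the counts. It keeps the values in table order without attaching method names, because the header has one fewer method label than there are numeric columns. The helper names are hypothetical.

```python
TOTAL = 1800  # first numeric column of the "Number of detections" row

# Remaining detection counts, in table order (method labels omitted on purpose).
detections = [1345, 1439, 1249, 928, 1321, 1634]
reported = ["74.72%", "79.94%", "69.39%", "51.56%", "73.39%", "90.78%"]


def detection_rate(detected: int, total: int) -> float:
    """Fraction of the reference count that was detected."""
    return detected / total


def as_percent(x: float) -> str:
    """Format a fraction with two decimals, as in the table."""
    return f"{100 * x:.2f}%"


recomputed = [as_percent(detection_rate(d, TOTAL)) for d in detections]
print(recomputed == reported)  # True
```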

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sun, Y.; Yuan, B.; Li, Z.; Liu, Y.; Zhao, D. Rethinking Underwater Crab Detection via Defogging and Channel Compensation. Fishes 2024, 9, 60. https://doi.org/10.3390/fishes9020060

Sun Y, Yuan B, Li Z, Liu Y, Zhao D. Rethinking Underwater Crab Detection via Defogging and Channel Compensation. Fishes. 2024; 9(2):60. https://doi.org/10.3390/fishes9020060

Chicago/Turabian Style: Sun, Yueping, Bikang Yuan, Ziqiang Li, Yong Liu, and Dean Zhao. 2024. "Rethinking Underwater Crab Detection via Defogging and Channel Compensation" Fishes 9, no. 2: 60. https://doi.org/10.3390/fishes9020060

APA Style: Sun, Y., Yuan, B., Li, Z., Liu, Y., & Zhao, D. (2024). Rethinking Underwater Crab Detection via Defogging and Channel Compensation. Fishes, 9(2), 60. https://doi.org/10.3390/fishes9020060