Psychometric Characteristics of Smartphone-Based Gait Analyses in Chronic Health Conditions: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

2.3. Data Extraction and Analysis

2.4. Methodological Quality Appraisal

3. Results

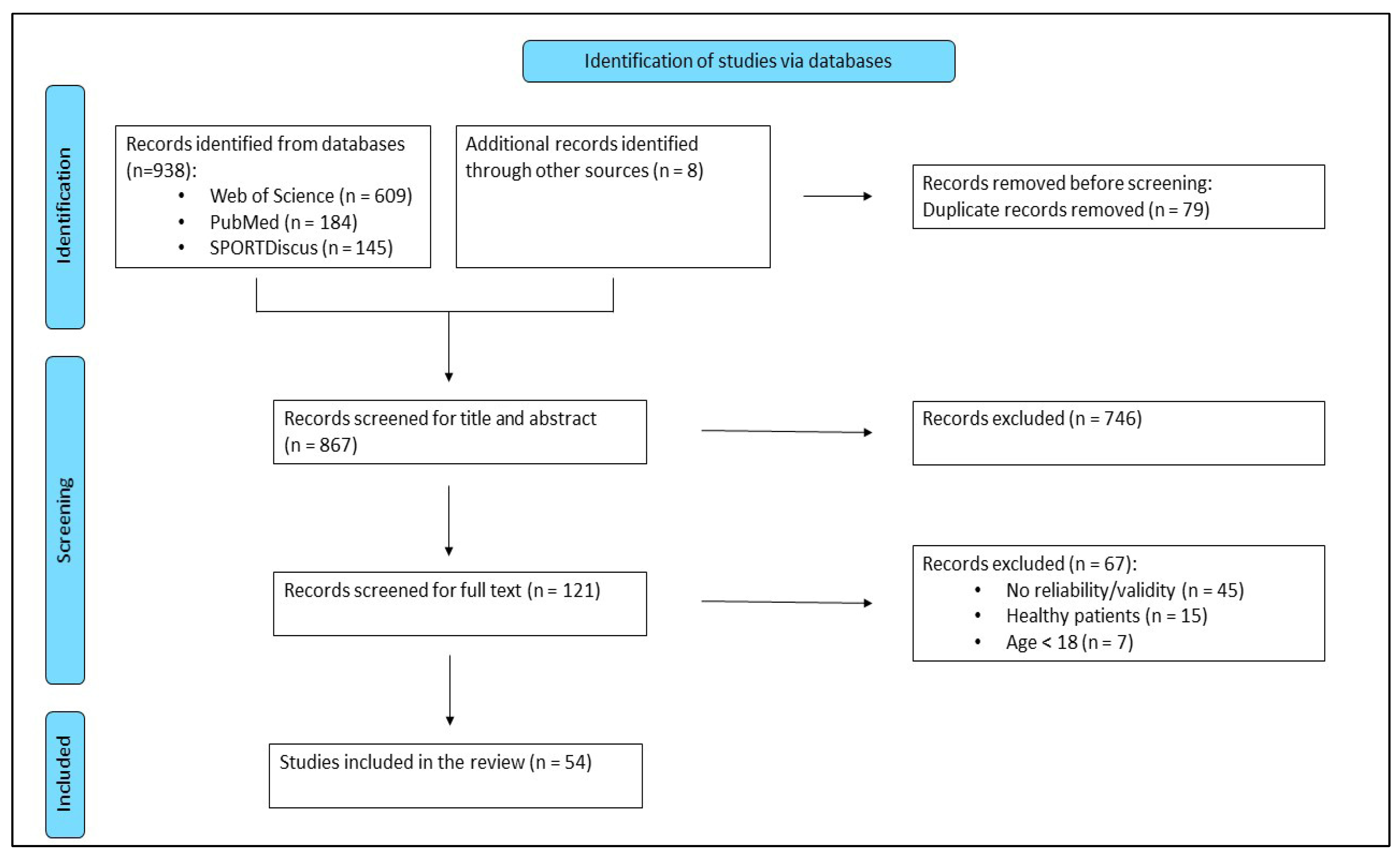

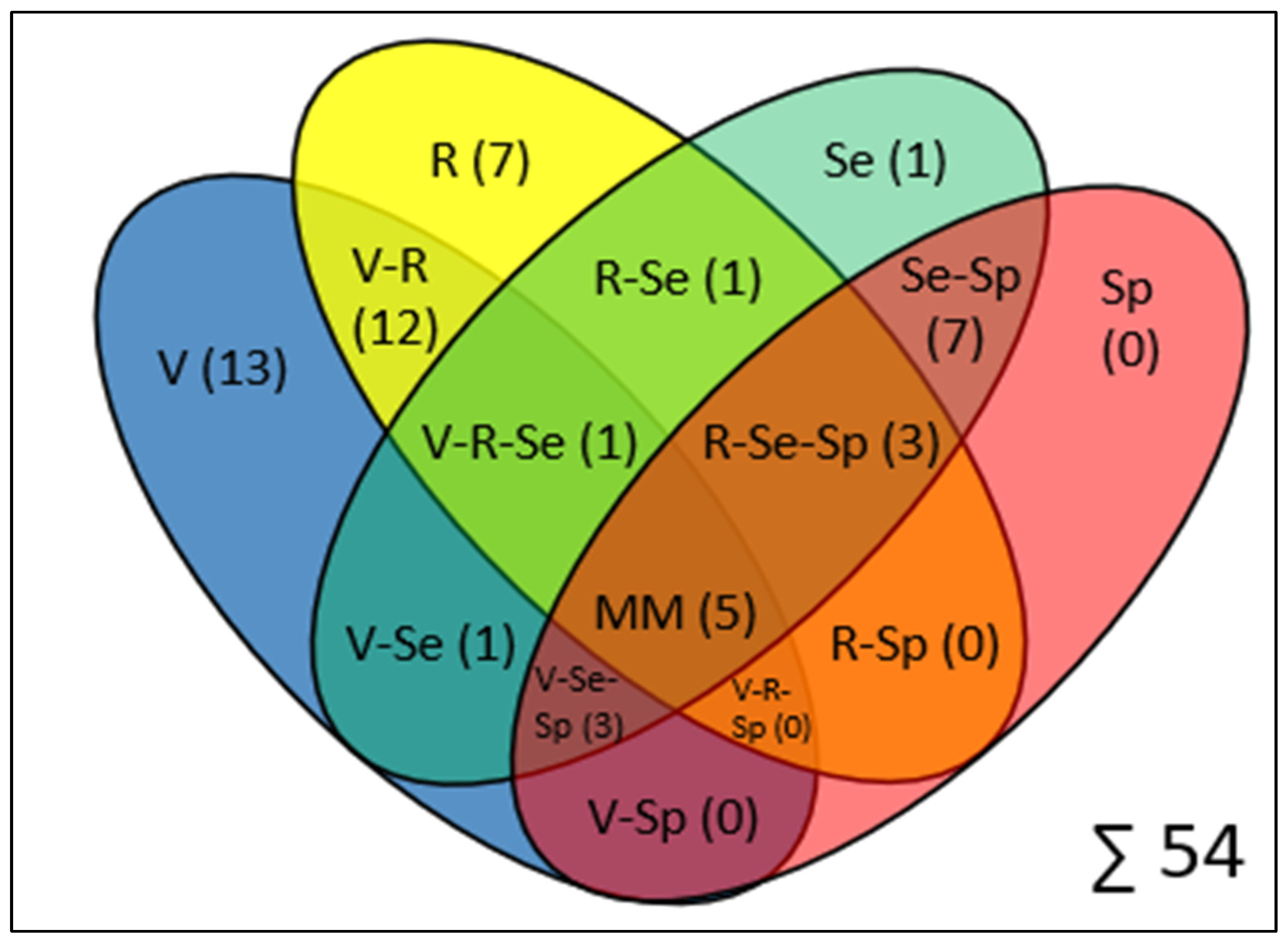

3.1. Study Selection

3.2. Study Analysis

3.3. Validation, Reliability, and Feasibility Outcomes

3.4. Methodological Quality of the Included Studies

4. Discussion

4.1. Key Findings

4.2. Validity of Smartphone-Based Gait Analysis

4.3. Reliability of Smartphone-Based Gait Analysis

4.4. Sensitivity and Specificity in Pathological Gait Detections

4.5. Feasibility and Usability in Clinical and Home Settings

5. Limitations and Future Research Perspectives

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

1. Dionne, C.E.; Dunn, K.M.; Croft, P.R. Does back pain prevalence really decrease with increasing age? A systematic review. Age Ageing 2006, 35, 229–234.

2. Rapoport, J.; Jacobs, P.; Bell, N.R.; Klarenbach, S. Refining the measurement of the economic burden of chronic diseases in Canada. Age 2004, 20, 1–643.

3. Duncan, R.P.; van Dillen, L.R.; Garbutt, J.M.; Earhart, G.M.; Perlmutter, J.S. Low Back Pain–Related Disability in Parkinson Disease: Impact on Functional Mobility, Physical Activity, and Quality of Life. Phys. Ther. 2019, 99, 1346–1353.

4. Carey, B.J.; Potter, J.F. Cardiovascular causes of falls. Age Ageing 2001, 30 (Suppl. 4), 19–24.

5. McIntosh, S.J.; Lawson, J.; Kenny, R.A. Clinical characteristics of vasodepressor, cardioinhibitory, and mixed carotid sinus syndrome in the elderly. Am. J. Med. 1993, 95, 203–208.

6. Montero-Odasso, M.; Schapira, M.; Duque, G.; Soriano, E.R.; Kaplan, R.; Camera, L.A. Gait disorders are associated with non-cardiovascular falls in elderly people: A preliminary study. BMC Geriatr. 2005, 5, 15.

7. Verghese, J.; Holtzer, R.; Lipton, R.B.; Wang, C. Quantitative gait markers and incident fall risk in older adults. J. Gerontol. Ser. A Biol. Sci. Med. Sci. 2009, 64, 896–901.

8. Smith, J.A.; Stabbert, H.; Bagwell, J.J.; Teng, H.-L.; Wade, V.; Lee, S.-P. Do people with low back pain walk differently? A systematic review and meta-analysis. Cold Spring Harbor Laboratory. medRxiv 2021.

9. Agostini, V.; Ghislieri, M.; Rosati, S.; Balestra, G.; Knaflitz, M. Surface Electromyography Applied to Gait Analysis: How to Improve Its Impact in Clinics? Front. Neurol. 2020, 11, 994.

10. Nichols, J.K.; Sena, M.P.; Hu, J.L.; O’Reilly, O.M.; Feeley, B.T.; Lotz, J.C. A Kinect-based movement assessment system: Marker position comparison to Vicon. Comput. Methods Biomech. Biomed. Eng. 2017, 20, 1289–1298.

11. Bhambra, T.S.; Zafar, A.Q.; Fishlock, A. Understanding gait assessment and analysis. Orthop. Trauma 2024, 38, 371–377.

12. Salchow-Hömmen, C.; Skrobot, M.; Jochner, M.C.E.; Schauer, T.; Kühn, A.A.; Wenger, N. Review-Emerging Portable Technologies for Gait Analysis in Neurological Disorders. Front. Hum. Neurosci. 2022, 16, 768575.

13. Simon, S.R. Quantification of human motion: Gait analysis-benefits and limitations to its application to clinical problems. J. Biomech. 2004, 37, 1869–1880.

14. Rodrigues, T.B.; Salgado, D.P.; Catháin, C.Ó.; O’Connor, N.; Murray, N. Human gait assessment using a 3D marker-less multimodal motion capture system. Multimed. Tools Appl. 2020, 79, 2629–2651.

15. Abou, L.; Wong, E.; Peters, J.; Dossou, M.S.; Sosnoff, J.J.; Rice, L.A. Smartphone applications to assess gait and postural control in people with multiple sclerosis: A systematic review. Mult. Scler. Relat. Disord. 2021, 51, 102943.

16. Rashid, U.; Barbado, D.; Olsen, S.; Alder, G.; Elvira, J.L.L.; Lord, S.; Niazi, I.K.; Taylor, D. Validity and Reliability of a Smartphone App for Gait and Balance Assessment. Sensors 2021, 22, 124.

17. Parmenter, B.; Burley, C.; Stewart, C.; Whife, J.; Champion, K.; Osman, B.; Newton, N.; Green, O.; Wescott, A.B.; Gardner, L.A.; et al. Measurement Properties of Smartphone Approaches to Assess Physical Activity in Healthy Young People: Systematic Review. JMIR mHealth uHealth 2022, 10, e39085.

18. Chan, H.; Zheng, H.; Wang, H.; Gawley, R.; Yang, M.; Sterritt, R. Feasibility Study on iPhone Accelerometer for Gait Detection. In Proceedings of the 5th International ICST Conference on Pervasive Computing Technologies for Healthcare, Dublin, Ireland, 23–26 May 2011; IEEE: New York, NY, USA, 2011.

19. McGuire, M.L. An Overview of Gait Analysis and Step Detection in Mobile Computing Devices. In Proceedings of the 2012 Fourth International Conference on Intelligent Networking and Collaborative Systems, Bucharest, Romania, 19–21 September 2012; IEEE: New York, NY, USA, 2012.

20. Del Din, S.; Godfrey, A.; Mazzà, C.; Lord, S.; Rochester, L. Free-living monitoring of Parkinson’s disease: Lessons from the field. Mov. Disord. 2016, 31, 1293–1313.

21. Ferrari, A.; Ginis, P.; Hardegger, M.; Casamassima, F.; Rocchi, L.; Chiari, L. A Mobile Kalman-Filter Based Solution for the Real-Time Estimation of Spatio-Temporal Gait Parameters. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 764–773.

22. Ginis, P.; Nieuwboer, A.; Dorfman, M.; Ferrari, A.; Gazit, E.; Canning, C.G.; Rocchi, L.; Chiari, L.; Hausdorff, J.M.; Mirelman, A. Feasibility and effects of home-based smartphone-delivered automated feedback training for gait in people with Parkinson’s disease: A pilot randomized controlled trial. Park. Relat. Disord. 2016, 22, 28–34.

23. Kim, H.; Lee, H.J.; Lee, W.; Kwon, S.; Kim, S.K.; Jeon, H.S.; Park, H.; Shin, C.W.; Yi, W.J.; Jeon, B.S.; et al. Unconstrained detection of freezing of Gait in Parkinson’s disease patients using smartphone. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milano, Italy, 25–29 August 2015; IEEE: New York, NY, USA, 2015.

24. Ivkovic, V.; Fisher, S.; Paloski, W.H. Smartphone-based tactile cueing improves motor performance in Parkinson’s disease. Park. Relat. Disord. 2016, 22, 42–47.

25. Lipsmeier, F.; Taylor, K.I.; Kilchenmann, T.; Wolf, D.; Scotland, A.; Schjodt-Eriksen, J.; Cheng, W.-Y.; Fernandez-Garcia, I.; Siebourg-Polster, J.; Jin, L.; et al. Evaluation of smartphone-based testing to generate exploratory outcome measures in a phase 1 Parkinson’s disease clinical trial. Mov. Disord. 2018, 33, 1287–1297.

26. de Jesus, M.O.; Ostolin, T.L.V.D.P.; Proença, N.L.; da Silva, R.P.; Dourado, V.Z. Self-Administered Six-Minute Walk Test Using a Free Smartphone App in Asymptomatic Adults: Reliability and Reproducibility. Int. J. Environ. Res. Public Health 2022, 19, 1118.

27. Pepa, L.; Verdini, F.; Spalazzi, L. Gait parameter and event estimation using smartphones. Gait Posture 2017, 57, 217–223.

28. Da Silva, R.S.; Da Silva, S.T.; Cardoso, D.C.R.; Quirino, M.A.F.; Silva, M.H.A.; Gomes, L.A.; Fernandes, J.D.; Da Oliveira, R.A.N.S.; Fernandes, A.B.G.S.; Ribeiro, T.S. Psychometric properties of wearable technologies to assess post-stroke gait parameters: A systematic review. Gait Posture 2024, 113, 543–552.

29. Exter, S.H.; Koenders, N.; Wees, P.; Berg, M.G.A. A systematic review of the psychometric properties of physical performance tests for sarcopenia in community-dwelling older adults. Age Ageing 2024, 53, afae113.

30. Currell, K.; Jeukendrup, A.E. Validity, reliability and sensitivity of measures of sporting performance. Sports Med. 2008, 38, 297–316.

31. Thomas, J.R.; Silverman, S.J.; Nelson, J.K. Research Methods in Physical Activity, 7th ed.; Human Kinetics: Champaign, IL, USA, 2015.

32. Di Biase, L.; Di Santo, A.; Caminiti, M.L.; de Liso, A.; Shah, S.A.; Ricci, L.; Di Lazzaro, V. Gait Analysis in Parkinson’s Disease: An Overview of the Most Accurate Markers for Diagnosis and Symptoms Monitoring. Sensors 2020, 20, 3529.

33. Yang, M.; Zheng, H.; Wang, H.; McClean, S.; Harris, N. Assessing the utility of smart mobile phones in gait pattern analysis. Health Technol. 2012, 2, 81–88.

34. Christensen, J.C.; Stanley, E.C.; Oro, E.G.; Carlson, H.B.; Naveh, Y.Y.; Shalita, R.; Teitz, L.S. The validity and reliability of the OneStep smartphone application under various gait conditions in healthy adults with feasibility in clinical practice. J. Orthop. Surg. Res. 2022, 17, 417.

35. Tang, S.T.; Tai, C.H.; Yang, C.Y.; Lin, J.H. Feasibility of Smartphone-Based Gait Assessment for Parkinson’s Disease. J. Med. Biol. Eng. 2020, 40, 582–591.

36. Abou, L.; Peters, J.; Wong, E.; Akers, R.; Dossou, M.S.; Sosnoff, J.J.; Rice, L.A. Gait and Balance Assessments using Smartphone Applications in Parkinson’s Disease: A Systematic Review. J. Med. Syst. 2021, 45, 87.

37. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. Ann. Intern. Med. 2009, 151, 264–269.

38. NHLBI. Study Quality Assessment Tools. Available online: https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools (accessed on 27 December 2024).

39. Abou, L.; Alluri, A.; Fliflet, A.; Du, Y.; Rice, L.A. Effectiveness of Physical Therapy Interventions in Reducing Fear of Falling Among Individuals with Neurologic Diseases: A Systematic Review and Meta-analysis. Arch. Phys. Med. Rehabil. 2021, 102, 132–154.

40. Adams, J.L.; Kangarloo, T.; Gong, Y.S.; Khachadourian, V.; Tracey, B.; Volfson, D.; Latzman, R.D.; Cosman, J.; Edgerton, J.; Anderson, D.; et al. Using a smartwatch and smartphone to assess early Parkinson’s disease in the WATCH-PD study over 12 months. NPJ Park. Dis. 2024, 10, 112.

41. Alexander, S.; Braisher, M.; Tur, C.; Chataway, J. The mSteps pilot study: Analysis of the distance walked using a novel smartphone application in multiple sclerosis. Mult. Scler. J. 2022, 28, 2285–2293.

42. Balto, J.M.; Kinnett-Hopkins, D.L.; Motl, R.W. Accuracy and precision of smartphone applications and commercially available motion sensors in multiple sclerosis. Mult. Scler. J. Exp. Transl. Clin. 2016, 2, 2055217316634754.

43. Brooks, G.C.; Vittinghoff, E.; Iyer, S.; Tandon, D.; Kuhar, P.; Madsen, K.A.; Marcus, G.M.; Pletcher, M.J.; Olgin, J.E. Accuracy and Usability of a Self-Administered 6-Minute Walk Test Smartphone Application. Circ. Heart Fail. 2015, 8, 905–913.

44. Capecci, M.; Pepa, L.; Verdini, F.; Ceravolo, M.G. A smartphone-based architecture to detect and quantify freezing of gait in Parkinson’s disease. Gait Posture 2016, 50, 28–33.

45. Chan, H.; Zheng, H.; Wang, H.; Newell, D. Assessment of gait patterns of chronic low back pain patients: A smart mobile phone based approach. In Proceedings of the 2015 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Washington, DC, USA, 9–12 November 2015; IEEE: New York, NY, USA, 2015; pp. 1016–1023.

46. Chien, J.H.; Torres-Russotto, D.; Wang, Z.; Gui, C.F.; Whitney, D.; Siu, K.C. The use of smartphone in measuring stance and gait patterns in patients with orthostatic tremor. PLoS ONE 2019, 14, e0220012.

47. Clavijo-Buendía, S.; Molina-Rueda, F.; Martín-Casas, P.; Ortega-Bastidas, P.; Monge-Pereira, E.; Laguarta-Val, S.; Morales-Cabezas, M.; Cano-de-la-Cuerda, R. Construct validity and test-retest reliability of a free mobile application for spatio-temporal gait analysis in Parkinson’s disease patients. Gait Posture 2020, 79, 86–91.

48. Costa, P.H.V.; de Jesus, T.P.D.; Winstein, C.; Torriani-Pasin, C.; Polese, J.C. An investigation into the validity and reliability of mHealth devices for counting steps in chronic stroke survivors. Clin. Rehabil. 2020, 34, 394–403.

49. Ellis, R.J.; Ng, Y.S.; Zhu, S.; Tan, D.M.; Anderson, B.; Schlaug, G.; Wang, Y. A Validated Smartphone-Based Assessment of Gait and Gait Variability in Parkinson’s Disease. PLoS ONE 2015, 10, e0141694.

50. Isho, T.; Tashiro, H.; Usuda, S. Accelerometry-Based Gait Characteristics Evaluated Using a Smartphone and Their Association with Fall Risk in People with Chronic Stroke. J. Stroke Cerebrovasc. Dis. 2015, 24, 1305–1311.

51. Juen, J.; Cheng, Q.; Schatz, B. A Natural Walking Monitor for Pulmonary Patients Using Mobile Phones. IEEE J. Biomed. Health Inform. 2015, 19, 1399–1405.

52. Lopez, W.O.C.; Higuera, C.A.E.; Fonoff, E.T.; de Oliveira Souza, C.; Albicker, U.; Martinez, J.A.E. Listenmee® and Listenmee® smartphone application: Synchronizing walking to rhythmic auditory cues to improve gait in Parkinson’s disease. Hum. Mov. Sci. 2014, 37, 147–156.

53. Mak, J.; Rens, N.; Savage, D.; Nielsen-Bowles, H.; Triggs, D.; Talgo, J.; Gandhi, N.; Gutierrez, S.; Aalami, O. Reliability and repeatability of a smartphone-based 6-min walk test as a patient-centred outcome measure. Eur. Heart J. Digit. Health 2021, 2, 77–87.

54. Maldaner, N.; Sosnova, M.; Zeitlberger, A.M.; Ziga, M.; Gautschi, O.P.; Regli, L.; Weyerbrock, A.; Stienen, M.N.; Int 6WT Study Grp. Evaluation of the 6-min walking test as a smartphone app-based self-measurement of objective functional impairment in patients with lumbar degenerative disc disease. J. Neurosurg. Spine 2020, 33, 779–788.

55. Marom, P.; Brik, M.; Agay, N.; Dankner, R.; Katzir, Z.; Keshet, N.; Doron, D. The Reliability and Validity of the OneStep Smartphone Application for Gait Analysis among Patients Undergoing Rehabilitation for Unilateral Lower Limb Disability. Sensors 2024, 24, 3594.

56. Pepa, L.; Verdini, F.; Capecci, M.; Maracci, F.; Ceravolo, M.G.; Leo, T. Predicting Freezing of Gait in Parkinson’s Disease with a Smartphone: Comparison Between Two Algorithms. Ambient. Assist. Living 2015, 11, 61–69.

57. Pepa, L.; Capecci, M.; Andrenelli, E.; Ciabattoni, L.; Spalazzi, L.; Ceravolo, M.G. A fuzzy logic system for the home assessment of freezing of gait in subjects with Parkinsons disease. Expert Syst. Appl. 2020, 147, 113197.

58. Polese, J.C.; e Faria, G.S.; Ribeiro-Samora, G.A.; Lima, L.P.; de Morais Faria, C.D.C.; Scianni, A.A.; Teixeira-Salmela, L.F. Google fit smartphone application or Gt3X Actigraph: Which is better for detecting the stepping activity of individuals with stroke? A validity study. J. Bodyw. Mov. Ther. 2019, 23, 461–465.

59. Regev, K.; Eren, N.; Yekutieli, Z.; Karlinski, K.; Massri, A.; Vigiser, I.; Kolb, H.; Piura, Y.; Karni, A. Smartphone-based gait assessment for multiple sclerosis. Mult. Scler. Relat. Disord. 2024, 82, 105394.

60. Serra-Añó, P.; Pedrero-Sánchez, J.F.; Inglés, M.; Aguilar-Rodríguez, M.; Vargas-Villanueva, I.; López-Pascual, J. Assessment of Functional Activities in Individuals with Parkinson’s Disease Using a Simple and Reliable Smartphone-Based Procedure. Int. J. Environ. Res. Public Health 2020, 17, 4123.

61. Shema-Shiratzky, S.; Beer, Y.; Mor, A.; Elbaz, A. Smartphone-based inertial sensors technology: Validation of a new application to measure spatiotemporal gait metrics. Gait Posture 2022, 93, 102–106.

62. Sugimoto, Y.A.; Rhea, C.K.; Ross, S.E. Modified proximal thigh kinematics captured with a novel smartphone app in individuals with a history of recurrent ankle sprains and altered dorsiflexion with walking. Clin. Biomech. 2023, 105, 105955.

63. Tao, S.; Zhang, H.; Kong, L.W.; Sun, Y.; Zhao, J. Validation of gait analysis using smartphones: Reliability and validity. Digit. Health 2024, 10, 20552076241257054.

64. Wagner, S.R.; Gregersen, R.R.; Henriksen, L.; Hauge, E.-M.; Keller, K.K. Wag. Sensors 2022, 22, 9396.

65. Yahalom, H.; Israeli-Korn, S.; Linder, M.; Yekutieli, Z.; Karlinsky, K.T.; Rubel, Y.; Livneh, V.; Fay-Karmon, T.; Hassin-Baer, S.; Yahalom, G. Psychiatric Patients on Neuroleptics: Evaluation of Parkinsonism and Quantified Assessment of Gait. Clin. Neuropharmacol. 2020, 43, 1–6.

66. Yahalom, G.; Yekutieli, Z.; Israeli-Korn, S.; Elincx-Benizri, S.; Livneh, V.; Fay-Karmon, T.; Tchelet, K.; Rubel, Y.; Hassin-Baer, S. Smartphone-Based Timed Up and Go Test Can Identify Postural Instability in Parkinson’s Disease. Isr. Med. Assoc. J. 2020, 22, 37–42.

67. Abujrida, H.; Agu, E.; Pahlavan, K. Machine learning-based motor assessment of Parkinson’s disease using postural sway, gait and lifestyle features on crowdsourced smartphone data. Biomed. Phys. Eng. Express 2020, 6, 035005.

68. Arora, S.; Baig, F.; Lo, C.; Barber, T.R.; Lawton, M.A.; Zhan, A.D.; Rolinski, M.; Ruffmann, C.; Klein, J.C.; Rumbold, J.; et al. Smartphone motor testing to distinguish idiopathic REM sleep behavior disorder, controls, and PD. Neurology 2018, 91, 1528–1538.

69. Arora, S.; Venkataraman, V.; Zhan, A.; Donohue, S.; Biglan, K.M.; Dorsey, E.R.; Little, M.A. Detecting and monitoring the symptoms of Parkinson’s disease using smartphones: A pilot study. Park. Relat. Disord. 2015, 21, 650–653.

70. Bourke, A.K.; Scotland, A.; Lipsmeier, F.; Gossens, C.; Lindemann, M. Gait Characteristics Harvested during a Smartphone-Based Self-Administered 2-Minute Walk Test in People with Multiple Sclerosis: Test-Retest Reliability and Minimum Detectable Change. Sensors 2020, 20, 5906.

71. Chen, O.Y.; Lipsmeier, F.; Phan, H.; Prince, J.; Taylor, K.I.; Gossens, C.; Lindemann, M.; de Vos, M. Building a Machine-Learning Framework to Remotely Assess Parkinson’s Disease Using Smartphones. IEEE Trans. Biomed. Eng. 2020, 67, 3491–3500.

72. Creagh, A.P.; Dondelinger, F.; Lipsmeier, F.; Lindemann, M.; de Vos, M. Longitudinal Trend Monitoring of Multiple Sclerosis Ambulation Using Smartphones. IEEE Open J. Eng. Med. Biol. 2022, 3, 202–210.

73. Creagh, A.P.; Simillion, C.; Bourke, A.K.; Scotland, A.; Lipsmeier, F.; Bernasconi, C.; van Beek, J.; Baker, M.; Gossens, C.; Lindemann, M.; et al. Smartphone- and Smartwatch-Based Remote Characterisation of Ambulation in Multiple Sclerosis During the Two-Minute Walk Test. IEEE J. Biomed. Health Inform. 2021, 25, 838–849.

74. He, T.; Chen, J.; Xu, X.; Fortino, G.; Wang, W. Early Detection of Parkinson’s Disease Using Deep NeuroEnhanceNet with Smartphone Walking Recordings. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 3603–3614.

75. Mehrang, S.; Jauhiainen, M.; Pietilä, J.; Puustinen, J.; Ruokolainen, J.; Nieminen, H. Identification of Parkinson’s Disease Utilizing a Single Self-recorded 20-step Walking Test Acquired by Smartphone’s Inertial Measurement Unit. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2018, 2018, 2913–2916.

76. Omberg, L.; Neto, E.C.; Perumal, T.M.; Pratap, A.; Tediarjo, A.; Adams, J.; Bloem, B.R.; Bot, B.M.; Elson, M.; Goldman, S.M.; et al. Remote smartphone monitoring of Parkinson’s disease and individual response to therapy. Nat. Biotechnol. 2022, 40, 480–487.

77. Schwab, P.; Karlen, W. PhoneMD: Learning to Diagnose Parkinson’s Disease from Smartphone Data. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 1118–1125.

78. van Oirschot, P.; Heerings, M.; Wendrich, K.; den Teuling, B.; Dorssers, F.; van Ee, R.; Martens, M.B.; Jongen, P.J. A two-minute walking test with a smartphone app for persons with multiple sclerosis: Validation study. JMIR Form. Res. 2021, 5, e29128.

79. Zhai, Y.Y.; Nasseri, N.; Pöttgen, J.; Gezhelbash, E.; Heesen, C.; Stellmann, J.P. Smartphone Accelerometry: A Smart and Reliable Measurement of Real-Life Physical Activity in Multiple Sclerosis and Healthy Individuals. Front. Neurol. 2020, 11, 688.

80. Goñi, M.; Eickhoff, S.B.; Far, M.S.; Patil, K.R.; Dukart, J. Smartphone-Based Digital Biomarkers for Parkinson’s Disease in a Remotely-Administered Setting. IEEE Access 2022, 10, 28361–28384.

81. Raknim, P.; Lan, K.C. Gait Monitoring for Early Neurological Disorder Detection Using Sensors in a Smartphone: Validation and a Case Study of Parkinsonism. Telemed. e-Health 2016, 22, 75–81.

82. Su, D.N.; Liu, Z.; Jiang, X.; Zhang, F.Z.; Yu, W.T.; Ma, H.Z.; Wang, C.X.; Wang, Z.; Wang, X.M.; Hu, W.L.; et al. Simple Smartphone-Based Assessment of Gait Characteristics in Parkinson Disease: Validation Study. JMIR mHealth uHealth 2021, 9, e25451.

83. Cheng, W.-Y.; Bourke, A.K.; Lipsmeier, F.; Bernasconi, C.; Belachew, S.; Gossens, C.; Graves, J.S.; Montalban, X.; Lindemann, M. U-turn speed is a valid and reliable smartphone-based measure of multiple sclerosis-related gait and balance impairment. Gait Posture 2021, 84, 120–126.

84. Lam, K.-H.; Bucur, I.G.; van Oirschot, P.; de Graaf, F.; Strijbis, E.; Uitdehaag, B.; Heskes, T.; Killestein, J.; de Groot, V. Personalized monitoring of ambulatory function with a smartphone 2-min walk test in multiple sclerosis. Mult. Scler. J. 2023, 29, 606–614.

85. Hamy, V.; Garcia-Gancedo, L.; Pollard, A.; Myatt, A.; Liu, J.; Howland, A.; Beineke, P.; Quattrocchi, E.; Williams, R.; Crouthamel, M. Developing Smartphone-Based Objective Assessments of Physical Function in Rheumatoid Arthritis Patients: The PARADE Study. Digit. Biomark. 2020, 4, 26–43.

86. Salvi, D.; Poffley, E.; Tarassenko, L.; Orchard, E. App-Based Versus Standard Six-Minute Walk Test in Pulmonary Hypertension: Mixed Methods Study. JMIR mHealth uHealth 2021, 9, e22748.

87. Chan, H.; Zheng, H.; Wang, H.; Sterritt, R.; Newell, D. Smart mobile phone based gait assessment of patients with low back pain. In Proceedings of the 2013 Ninth International Conference on Natural Computation (ICNC), Shenyang, China, 23–25 July 2013; IEEE: New York, NY, USA, 2013; pp. 1062–1066.

88. Banky, M.; Clark, R.A.; Mentiplay, B.F.; Olver, J.H.; Kahn, M.B.; Williams, G. Toward Accurate Clinical Spasticity Assessment: Validation of Movement Speed and Joint Angle Assessments Using Smartphones and Camera Tracking. Arch. Phys. Med. Rehabil. 2019, 100, 1482–1491.

89. Brinkløv, C.F.; Thorsen, I.K.; Karstoft, K.; Brøns, C.; Valentiner, L.; Langberg, H.; Vaag, A.A.; Nielsen, J.S.; Pedersen, B.K.; Ried-Larsen, M. Criterion validity and reliability of a smartphone delivered sub-maximal fitness test for people with type 2 diabetes. BMC Sports Sci. Med. Rehabil. 2016, 8, 31.

90. Rozanski, G.; Putrino, D. Recording context matters: Differences in gait parameters collected by the OneStep smartphone application. Clin. Biomech. 2022, 99, 105755.

91. Gaßner, H.; Sanders, P.; Dietrich, A.; Marxreiter, F.; Eskofier, B.M.; Winkler, J.; Klucken, J. Clinical Relevance of Standardized Mobile Gait Tests. Reliability Analysis Between Gait Recordings at Hospital and Home in Parkinson’s Disease: A Pilot Study. J. Park. Dis. 2020, 10, 1763–1773.

| Topic | Main Findings |
|---|---|
| Total Studies | 54 studies included |
| Study design/Percentage of total studies included | |
| Diseases investigated/Percentage of total studies included | |
| Test locations/Percentage of total studies included | |
| Smartphone models and Apps/Percentage of total studies included | |
| Sensor placement/Percentage of total studies included | |
| Gait parameters analysed/Percentage of total studies included | |
| Validation methods used/Percentage of total studies included | |

| Topic | Main Findings |
|---|---|
| Total Studies | 54 studies included |
| Reliability/Percentage of total studies included | |
| Validity/Percentage of total studies included | |
| Sensitivity and Specificity | |
| Feasibility and Usability/Percentage of total studies included | |

| Author | Question/Objective | Population | Participation Rate | Selection/Recruitment | Exposure and Outcome | Timeframe Between Exposure and Outcome | Sample Size | Levels of Exposure | Exposure Measure | Repeated Exposure Measurement | Outcome Measure | Blinding of Outcome Assessors | Follow-Up Rate | Statistical Analyses | Overall Quality |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Abujrida et al. [67] | Yes | Yes | Yes | Yes | No | No | No | No | Yes | Yes | Yes | No | No | Yes | Good |
| Adams et al. [40] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Alexander et al. [41] | Yes | Yes | Yes | Yes | No | No | No | No | No | No | Yes | No | No | Yes | Fair |
| Arora et al. [69] | No | Yes | No | Yes | No | No | Yes | No | Yes | No | Yes | No | No | No | Fair |
| Arora et al. [68] | No | Yes | Yes | Yes | No | No | No | Yes | Yes | Yes | Yes | No | No | No | Fair |
| Balto et al. [42] | Yes | Yes | Yes | Yes | No | No | No | No | Yes | Yes | Yes | No | No | Yes | Good |
| Banky et al. [88] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Bourke et al. [70] | Yes | Yes | No | Yes | No | No | No | No | Yes | No | Yes | No | No | Yes | Good |
| Brinkløv et al. [89] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Brooks et al. [43] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Capecci et al. [44] | Yes | Yes | No | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Chan et al. [87] | Yes | Yes | No | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Chen et al. [71] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Cheng et al. [83] | Yes | Yes | No | Yes | No | No | No | No | Yes | No | Yes | No | No | Yes | Good |
| Chien et al. [46] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Clavijo-Buendía et al. [47] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Costa et al. [48] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Good |
| Creagh et al. [73] | Yes | No | No | No | Yes | Yes | No | No | Yes | Yes | Yes | No | No | Yes | Good |
| Creagh et al. [72] | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes | Good |
| Ellis et al. [49] | Yes | Yes | No | Yes | No | No | Yes | Yes | Yes | No | Yes | No | No | Yes | Good |
| Ginis et al. [22] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | No | Yes | Yes | Good |
| Goñi et al. [80] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | No | No | Yes | Good |
| Hamy et al. [85] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| He et al. [74] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Good |
| Isho et al. [50] | Yes | Yes | No | No | No | No | Yes | No | Yes | Yes | No | No | No | Yes | Fair |
| Juen et al. [51] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Kim et al. [23] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Lam et al. [84] | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes | Good |
| Lipsmeier et al. [25] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Lopez et al. [52] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | Yes | No | Yes | Good |
| Mak et al. [53] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Maldaner et al. [54] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | No | No | No | No | Yes | Good |
| Marom et al. [55] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | No | No | Yes | Good |
| Mehrang et al. [75] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | No | Yes | No | No | No | Good |
| Omberg et al. [76] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Pepa et al. [56] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Pepa et al. [57] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Polese et al. [58] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Good |
| Raknim et al. [81] | Yes | Yes | No | Yes | Yes | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes | Good |
| Regev et al. [59] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | No | No | Yes | Good |
| Rozanski et al. [90] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Salvi et al. [86] | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Schwab et al. [77] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | No | Yes | No | No | Yes | Good |
| Serra-Añó et al. [60] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Shema-Shiratzky et al. [61] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Su et al. [82] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Sugimoto et al. [62] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Tang et al. [35] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Tao et al. [63] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Van Oirschot et al. [78] | Yes | Yes | Yes | Yes | No | No | Yes | No | Yes | Yes | Yes | No | No | Yes | Good |
| Wagner et al. [64] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Yahalom et al. [65] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Yahalom et al. [66] | Yes | Yes | Yes | Yes | No | No | Yes | Yes | Yes | Yes | Yes | No | No | Yes | Good |
| Zhai et al. [79] | Yes | Yes | Yes | Yes | No | No | No | No | Yes | No | Yes | No | No | Yes | Good |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bea, T.; Chaabene, H.; Freitag, C.W.; Schega, L. Psychometric Characteristics of Smartphone-Based Gait Analyses in Chronic Health Conditions: A Systematic Review. J. Funct. Morphol. Kinesiol. 2025, 10, 133. https://doi.org/10.3390/jfmk10020133

Bea T, Chaabene H, Freitag CW, Schega L. Psychometric Characteristics of Smartphone-Based Gait Analyses in Chronic Health Conditions: A Systematic Review. Journal of Functional Morphology and Kinesiology. 2025; 10(2):133. https://doi.org/10.3390/jfmk10020133

Chicago/Turabian Style: Bea, Tobias, Helmi Chaabene, Constantin Wilhelm Freitag, and Lutz Schega. 2025. "Psychometric Characteristics of Smartphone-Based Gait Analyses in Chronic Health Conditions: A Systematic Review" Journal of Functional Morphology and Kinesiology 10, no. 2: 133. https://doi.org/10.3390/jfmk10020133

APA Style: Bea, T., Chaabene, H., Freitag, C. W., & Schega, L. (2025). Psychometric Characteristics of Smartphone-Based Gait Analyses in Chronic Health Conditions: A Systematic Review. Journal of Functional Morphology and Kinesiology, 10(2), 133. https://doi.org/10.3390/jfmk10020133