1. Introduction

A growing number of applied sport science initiatives include strategies for mitigating the deleterious effects of heat stress, which are preventable through proper training and effective heat stress monitoring (HSM) protocols. The ramifications of heat stress, such as severe heat-induced cramping, edema, rhabdomyolysis, or heat stroke, to name a few, are often characterized as exertional heat illnesses (EHI) [1,2,3,4], and arise when homeostasis cannot maintain a core body temperature beneath 40.5 °C (considered an excessive elevation in core body temperature during physical exertion). Thermoregulation is primarily achieved through constant heat exchange between the human body and the ambient environment via evaporation, radiation, convection, and conduction [2]. Athletes, who inherently endure bouts of intense and voluminous physical exertion throughout their training cycles, rely on these thermoregulatory processes to counterbalance inevitable elevations in core body temperature resulting from metabolic heat production, a necessary by-product of exercise.

However, if an athlete’s physiological systems, namely the cardiovascular and nervous systems as well as the skin, are unable to prevent excess elevations in core body temperature, the athlete is at severe risk of injury or, in the most severe cases, death [2,5]. A position statement on exertional heat illnesses in collegiate athletes released in 2015 by the National Athletic Trainers’ Association associated core temperatures exceeding 40.5 °C with exertional heat stroke [2], a catastrophic event for athletes that must be avoided at all costs. While not all heat illnesses are temperature-mediated, it is worth noting that exertional heat strokes are among the leading causes of sudden death in collegiate athletes [6]. Fortunately, heat stress morbidity and mortality are largely avoidable with proper HSM protocols.

In general, HSM strategies are designed to ensure the health and well-being of athletes, especially during training periods comprising bouts of high volume or intensity (often a combination of both) conducted under extreme environmental conditions, such as high humidity and/or high ambient temperatures. For example, American football is one (but not the only) sport that involves high physical workloads, often performed in harsh environments [7,8,9]. Prior to each competitive season, football athletes participate in summer training camp, which imposes tremendous amounts of fatigue on the athletes and likely occurs during some of the hottest days of the calendar year [9,10,11]. In fact, the National Collegiate Athletic Association (NCAA) recently made drastic alterations to the practice specifications (e.g., number of practices per day, amount of collision contact) permitted during preseason training camp periods. The NCAA’s deliberate attempt to mitigate EHI is a result of the increased awareness surrounding EHI in athletes [1,2,6,12], which ultimately stems from tragic events that saturated mainstream media, including but not limited to the deaths of Pro Bowl offensive tackle Korey Stringer in August 2001 and University of Maryland football athlete Jordan McNair, who sustained an EHI in May 2018 and succumbed in June 2018. Both athletes suffered exertional heat strokes during the summer months. Moreover, the coronavirus disease 2019 (COVID-19) pandemic, which halted the entire global sports domain in March 2020 and prevented training and monitoring protocols from progressing normally, poses a new and unique challenge to the sports performance realm, as it is likely that athletes’ training statuses were negatively impacted [13]. Indeed, athletes are at increased risk for EHI when first returning to practice following a relatively long “break”, such as the initial summer months following the spring semester for collegiate fall athletes [3,4], which further underscores the importance of expeditiously incorporating HSM strategies into athlete training and recovery.

As previously alluded to, EHIs are largely preventable with careful consideration of HSM protocols that are implemented and refined throughout the various training periods (e.g., preseason, offseason, competitive season). Yet, typical procedures that yield research-quality core body temperature data rely on invasive and uncomfortable instruments, such as rectal or esophageal temperature probes, that are impractical for routine use in athletics. Thus, efforts to develop more practical approaches for measuring core temperature ensued [14,15,16]. However, the validity and reliability of many of these instruments, such as axillary, oral, or tympanic sensors, are questioned throughout the extant literature [16,17,18,19,20]. One alternative strategy utilizes telemetric pills that are swallowed by athletes and pass through the digestive system within 24–48 h [18,19,21]. Telemetric pills were previously validated as an acceptable instrument for quantifying core body temperature in humans [22,23], although limitations for daily or routine use beyond unit costs do exist. Unfortunately for many athletes, when telemetric pills are utilized, data collection typically requires wearing a data logging device, which is impractical for contact sports such as American football, rugby, or mixed martial arts. Alternatively, remote transmission of data is sometimes possible, but this likely still requires at least one human data recorder (presumably many more for large groups of athletes such as American football teams) to meticulously and manually record data throughout any given practice or training session. Furthermore, previous research reported that the accuracy of telemetric pills is sensitive to fluid ingestion [18] and ingestion timing [19], posing unique challenges for athletic groups that are constantly hydrating to prevent EHIs and other harmful effects of dehydration.

Interestingly, a recent investigation that used serial raw heart rate signals to estimate core body temperature during ambulation reported an impressive overall bias of −0.03 ± 0.32 °C when compared to an ingested telemetric pill [14], a finding that was later replicated in a similar model applied to first responders [24]. The estimated core temperature (ECT) algorithm is based on a Kalman filter model using a series of heart rate (HR) measurements as the leading indicator; equations and MATLAB code are available in Buller et al. [14]. The model contains two relationships, a time update component and an observational component. The ECT updates in 1-min increments: the time update carries the previous estimate forward, and the observational component uses the new HR measurement to adjust it. As such, the possibility of real-time core temperature monitoring in athletes (applicable to military populations as well) through existing live athlete monitoring platforms that already obtain heart rate data has garnered interest from researchers and practitioners alike [25,26,27]. Still, integrating the predictive algorithm developed through these previous efforts requires additional validation to ensure that heart rate data obtained from the respective commercial devices are adequate for the algorithm to retain acceptable levels of accuracy. Further, while the published model for ECT [14,24] was rigorously developed and validated, this was done in non-athlete populations and not during sport-specific activities. Specifically, the nature of American football in practice and game settings requires brief periods of high-intensity effort followed by brief periods of rest (e.g., in-between plays) [7,28].
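The two-step structure described above (a time update followed by an observation update on each 1-min HR sample) can be sketched as a scalar extended Kalman filter. This is a minimal illustration, not the validated implementation: the class name `EctParams`, the function `estimate_core_temp`, the starting temperature, and all default coefficients are assumptions used for demonstration, and the validated equations and values should be taken from the material published with [14].

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class EctParams:
    """Hypothetical parameter container; defaults are illustrative assumptions.

    The observation model is a quadratic HR = b2*CT^2 + b1*CT + a0 (CT in deg C).
    Replace every value with the coefficients published in reference [14].
    """
    a0: float = -7887.1
    b1: float = 384.4286
    b2: float = -4.5714
    obs_var: float = 18.88 ** 2    # variance of HR around the observation model
    proc_var: float = 0.022 ** 2   # variance of the minute-to-minute CT change


def estimate_core_temp(heart_rates: Iterable[float],
                       ct0: float = 37.1,
                       var0: float = 0.0,
                       p: EctParams = EctParams()) -> List[float]:
    """Return one core temperature estimate (deg C) per 1-min HR sample."""
    ct, var = ct0, var0
    estimates = []
    for hr in heart_rates:
        # Time update: carry the previous estimate forward, inflate uncertainty.
        ct_pred = ct
        var_pred = var + p.proc_var
        # Observation update: linearise the HR model around the prediction.
        slope = 2.0 * p.b2 * ct_pred + p.b1
        hr_pred = p.b2 * ct_pred ** 2 + p.b1 * ct_pred + p.a0
        gain = var_pred * slope / (slope ** 2 * var_pred + p.obs_var)
        # Correct the prediction by the gain-weighted HR residual.
        ct = ct_pred + gain * (hr - hr_pred)
        var = (1.0 - gain * slope) * var_pred
        estimates.append(ct)
    return estimates


# Example: one hour of sustained HR at 150 bpm (one sample per minute).
trace = estimate_core_temp([150.0] * 60)
```

The gain stays small at each step, so a single noisy HR sample moves the estimate only slightly, while a sustained elevation in HR raises it gradually over many minutes.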

Therefore, the purpose of this study was to evaluate the accuracy of heart rate-derived core body temperature estimations obtained from a commercial electrocardiogram (ECG) chest strap using the published ECT algorithm. More specifically, data were collected on American football athletes during the highest-risk period of the season, summer training camp [3,4]. Temperature estimations were compared to readings from a telemetric pill that was ingested by the athletes prior to several team practices. A secondary purpose was to examine potential influences of athlete body composition on estimation accuracy. We hypothesized that ECT calculations would fall within 2 °F of the telemetric pill. The +/−2 °F (1.1 °C) tolerance yields a range between 102.9 and 106.9 °F (39.4–41.6 °C, with a midpoint at 40.5 °C), thus allowing the monitoring of temperature ranges indicative of EHI risk [2]. Due to the novelty of this data collection, previous data were unavailable for determining a required sample size a priori. However, a post hoc power analysis was conducted using Bland–Altman statistics calculated from the data. The post hoc power analysis will aid in justifying the results presented herein and will be considered for future applications of ECT in sport and research. These data will reveal critical information relevant to athletes and sport practitioners interested in HSM as a strategy for mitigating EHI.
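The unit arithmetic behind the +/−2 °F tolerance can be checked with a short sketch. The helper names `c_to_f` and `f_to_c` are illustrative; the only inputs are the 40.5 °C threshold and the 2 °F tolerance quoted above.

```python
def c_to_f(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9.0 / 5.0 + 32.0


def f_to_c(fahrenheit: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (fahrenheit - 32.0) * 5.0 / 9.0


threshold_f = c_to_f(40.5)        # midpoint of the monitored band, about 104.9 F
tolerance_f = 2.0                 # hypothesised accuracy band (+/- 2 F)
low_f = threshold_f - tolerance_f
high_f = threshold_f + tolerance_f

# A temperature *difference* converts with the 5/9 factor only (no 32 offset).
tolerance_c = tolerance_f * 5.0 / 9.0

print(round(low_f, 1), round(high_f, 1))                   # band in deg F
print(round(f_to_c(low_f), 1), round(f_to_c(high_f), 1))   # band in deg C
print(round(tolerance_c, 1))                               # tolerance in deg C
```

The offset of 32 applies only to absolute temperatures, which is why the 2 °F tolerance maps to about 1.1 °C rather than to a negative Celsius value.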

3. Results

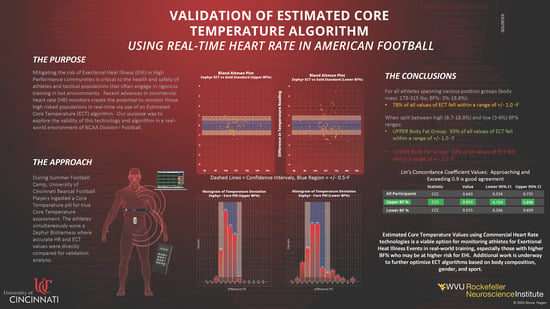

3.1. Analysis of All Participants

A total of 134 discrete measurements were collected across all participants and practices. A single measurement was defined as a value provided by the ingestible pill (Gold Standard), read locally by an RF reader and recorded along with a timestamp to the nearest second. The Zephyr ECT value was manually recorded at the same time via a telemetry system on a laptop PC, and further validated against downloaded and processed data post-practice. The RF reader value corresponds to the “Gold Standard” value in Equation 1, and the Zephyr ECT value checked against the downloaded data corresponds to “Zephyr ECT”. The Bland–Altman plot in Figure 1 gives an overall picture of Zephyr ECT measurement error for all participants across all practices.

The vast majority of measurements are within ±2 °F.

Table 3 below gives more details on the Bland–Altman statistics for comparison of Zephyr ECT to Gold Standard.

Overall, the bias was −0.192 °F, meaning that Zephyr ECT differed from the Gold Standard by −0.192 °F on average. The 95% CI for the bias was (−0.333, −0.05). Because 0 is not contained in this interval, it can be concluded at the 0.05 level that Zephyr ECT consistently underestimated the Gold Standard temperature, although minimally.

The LOA range, (−1.803, 1.42), represents the range where 95% of the differences between Zephyr ECT and Gold Standard are expected to fall. These limits have confidence intervals of their own. Looking at the values, it is not out of the realm of expectations for a Zephyr ECT measurement to underestimate the Gold Standard by 2 °F.
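For readers who want to reproduce this kind of summary on their own paired data, a minimal sketch of the Bland–Altman quantities follows. The function names are illustrative, and the confidence interval for the bias uses a normal approximation (z = 1.96) as a simplifying assumption, so it can differ slightly from an interval built on the t distribution.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple


def bland_altman(test: Sequence[float], reference: Sequence[float],
                 z: float = 1.96) -> Dict[str, object]:
    """Bias, SD of differences, approximate bias CI, and limits of agreement."""
    diffs = [t - r for t, r in zip(test, reference)]
    n = len(diffs)
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)           # sample SD of the differences
    se_bias = sd / math.sqrt(n)            # standard error of the mean difference
    return {
        "bias": bias,
        "sd": sd,
        "bias_ci": (bias - z * se_bias, bias + z * se_bias),
        "loa": (bias - z * sd, bias + z * sd),
    }


def loa_from_summary(bias: float, sd: float,
                     z: float = 1.96) -> Tuple[float, float]:
    """Limits of agreement computed from a reported bias and SD."""
    return bias - z * sd, bias + z * sd


# Consistency check using the bias (-0.192 F) and SD (0.822 F) reported in this paper.
lower, upper = loa_from_summary(-0.192, 0.822)
print(round(lower, 3), round(upper, 3))
```

With the reported bias and standard deviation, the summary-based limits land on the LOA range quoted above to within rounding.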

Next, the concordance correlation coefficient (CCC) provides a single summary measure of the strength of the relationship between Zephyr ECT and Gold Standard measurements. Figure 2 below provides a visualization of this. The green dashed lines in the plot, 0.5 °F above and below the concordance line, represent “very good” agreement. The CCC (along with its associated 95% confidence interval) is listed in Table 4 below.

The actual concordance value may not have much meaning in this context, as there is no other group or dataset being used in comparison (however this will be useful later to compare subgroups).
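As an illustration of what the CCC measures, the sketch below computes Lin's concordance correlation coefficient from paired readings. It assumes the standard definition with population (1/n) moments; the function name `concordance_cc` is illustrative, and confidence intervals are not computed here.

```python
from typing import Sequence


def concordance_cc(x: Sequence[float], y: Sequence[float]) -> float:
    """Lin's concordance correlation coefficient for paired measurements.

    CCC = 2*s_xy / (s_x^2 + s_y^2 + (mean_x - mean_y)^2), using 1/n moments.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    return 2.0 * cov_xy / (var_x + var_y + (mean_x - mean_y) ** 2)


# Perfect agreement gives 1; a constant offset lowers the CCC even though
# the ordinary (Pearson) correlation would still be 1.
identical = concordance_cc([100.0, 101.0, 102.0], [100.0, 101.0, 102.0])
shifted = concordance_cc([100.0, 101.0, 102.0], [101.0, 102.0, 103.0])
```

Because the denominator includes the squared difference in means, the CCC penalizes systematic bias as well as scatter, which is why it is used here alongside the Bland–Altman statistics.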

Now, the focus turns to the distribution of the differences between Zephyr ECT and Gold Standard measurements. The histogram in Figure 3 shows that 78% of the data points fell within +/−1.0 °F, and 54% within +/−0.5 °F, for all participants across all practices.
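The percentages read from the histogram correspond to a simple proportion of paired differences whose absolute value is at or below a tolerance. A minimal sketch is shown below; the function name `fraction_within` and the sample differences are hypothetical and are not study data.

```python
from typing import Sequence


def fraction_within(diffs: Sequence[float], tolerance: float) -> float:
    """Share of differences whose absolute value is at or below the tolerance."""
    hits = sum(1 for d in diffs if abs(d) <= tolerance)
    return hits / len(diffs)


# Hypothetical differences (device minus reference, in deg F), for illustration only.
example_diffs = [-1.4, -0.6, -0.3, 0.0, 0.2, 0.4, 0.9, 1.7]
share_half = fraction_within(example_diffs, 0.5)   # within +/- 0.5 F
share_one = fraction_within(example_diffs, 1.0)    # within +/- 1.0 F
```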

3.2. Analysis of Position Grouping

As stated in the methods, observations were further grouped by “Upper Body Fat% (BF)” and “Lower Body Fat% (BF)”, and it was of interest to investigate any differences in measurement accuracy between the two groups. First, a side-by-side Bland–Altman comparison is shown in Figure 4. The “Upper BF%” plot clearly showed a much smaller spread in the differences between temperature readings compared to “Lower BF%”. Table 5 lists the Bland–Altman statistics of these two plots.

With regard to the bias, the players with higher BF% were seen to have their temperatures consistently underestimated by Zephyr ECT, as the 95% CI did not include 0. This underestimation was minimal at −0.247 °F. The 95% CI for the bias in the Lower BF% group did not provide enough evidence of a consistent underestimation; thus, a case can be made that Zephyr ECT measurements on Lower BF% players are unbiased. For players in the Upper BF% group, the range between the upper and lower LOA was calculated to be 0.766 − (−1.26) = 2.026 °F, while for the Lower BF% group, the range was considerably larger at 1.77 − (−2.076) = 3.846 °F. Thus, Zephyr ECT measurements were much more precise for Upper BF% participants than for Lower BF% participants.
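The LOA widths quoted above can be re-derived from the reported limits, as in the sketch below. The implied standard deviations are not reported values: they assume that each pair of limits was computed as bias ± 1.96 × SD, and the names `loa_width` and `implied_sd` are illustrative.

```python
def loa_width(lower: float, upper: float) -> float:
    """Distance between the lower and upper limits of agreement."""
    return upper - lower


def implied_sd(lower: float, upper: float, z: float = 1.96) -> float:
    """SD of the differences implied by the limits, assuming LOA = bias +/- z*SD."""
    return loa_width(lower, upper) / (2.0 * z)


upper_bf_width = loa_width(-1.26, 0.766)    # Upper BF% group limits from the text
lower_bf_width = loa_width(-2.076, 1.77)    # Lower BF% group limits from the text
width_ratio = lower_bf_width / upper_bf_width

print(round(upper_bf_width, 3), round(lower_bf_width, 3), round(width_ratio, 1))
print(round(implied_sd(-1.26, 0.766), 3), round(implied_sd(-2.076, 1.77), 3))
```

Under that assumption, the Lower BF% limits are roughly 1.9 times as wide as the Upper BF% limits.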

One way to compare the overall accuracy of the “Upper BF%” and “Lower BF%” groups relative to the Gold Standard temperature is the CCC. Differences from the concordance line within each subgroup are shown in Figure 5 below. Similar to the Bland–Altman analysis, Lower BF% participants were seen to have a greater spread around the concordance line compared to Upper BF%. Next, CCC values are presented in Table 6. Upper BF% participants showed a higher CCC value compared to Lower BF%. The confidence intervals of the two groups do not overlap; thus, at the 0.05 level, there is enough statistical evidence to conclude that the level of agreement (concordance) in temperature measurements was significantly higher in Upper BF% participants when using Zephyr ECT to measure core body temperature.

Lastly, when looking at the distribution of the differences in temperature measurements in Figure 6 and Table 7, it is clear that a much higher percentage of Zephyr ECT values in the Upper BF% group fell within 1.0 °F.

Due to the applied nature of this study, which is a common byproduct of studying sport, a post hoc power analysis was completed. With an acceptable range set at +/−2 °F, we set the lower limit of agreement “L” as −2 °F and upper limit of agreement “U” as 2 °F. If the lower limit of the 95% CI for L, and the upper limit of the 95% CI for U fall within (−2, 2), there is enough evidence to conclude the two methods “agree” as per the agreement requirement. Thus, we tested two hypotheses at the same time, and both need rejecting:

Hypothesis 1 (H01). L < −2; HA1: L ≥ −2.

Hypothesis 2 (H02). U > 2; HA2: U ≤ 2.

To power this, two values were used [34]: the standardized difference limit (the mean of the differences divided by the standard deviation of the differences), and the standardized agreement limit (2 divided by the standard deviation of the differences). Here, the standardized difference limit is −0.192/0.822 = −0.23, and the standardized agreement limit is 2/0.822 = 2.43. With these two values, a sample size of 402 measurement pairs would be required to achieve 80% power at the 0.05 level for the hypothesis test above. However, when this same post hoc power analysis was run using only the Upper BF% group data, only 14 measurement pairs would be required to achieve 80% power at the 0.05 level.
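The two standardized inputs and the agreement decision rule can be expressed compactly, as sketched below. The function names are illustrative, the example interval bounds passed to `limits_agree` are hypothetical, and the sample-size calculation itself (the method of [34]) is not reproduced here.

```python
def standardized_inputs(bias: float, sd: float, limit: float) -> tuple:
    """Standardized difference limit and standardized agreement limit."""
    return bias / sd, limit / sd


def limits_agree(l_ci_lower: float, u_ci_upper: float, delta: float = 2.0) -> bool:
    """Both null hypotheses are rejected when the outer CI bounds of the
    lower (L) and upper (U) limits of agreement lie inside [-delta, delta]."""
    return l_ci_lower >= -delta and u_ci_upper <= delta


# Values reported for all participants: bias -0.192 F, SD 0.822 F, limit 2 F.
std_diff, std_limit = standardized_inputs(-0.192, 0.822, 2.0)
print(round(std_diff, 2), round(std_limit, 2))

# Hypothetical CI bounds, for illustration of the decision rule only.
print(limits_agree(l_ci_lower=-1.6, u_ci_upper=1.1))
print(limits_agree(l_ci_lower=-2.3, u_ci_upper=1.9))
```

The second call shows why both hypotheses must be rejected together: a single outer bound beyond the ±2 °F limit is enough to withhold the conclusion of agreement.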

4. Discussion

The primary motivation behind this study was to assess the accuracy of using heart rate data to derive an estimated core body temperature in Division I NCAA football athletes during the highest EHI risk time of the season. When assessed for every participant across all practices, the result was a CCC of 0.643. Statistically, this CCC falls in the “poor” range, yet it corresponds to 78% of the values falling within +/−1.0 °F. The decision to utilize this technology and method during training comes down to practitioners and whether they are willing to accept that most data points are accurate to within +/−1.0 °F to help augment their HSM surveillance process.

More interestingly, when the participants were split by body composition into an Upper BF% group and a Lower BF% group, the results were very different. The Upper BF% group, defined as above 6% BF with a range between 6% and 18.8%, showed a substantial and statistically significant improvement in CCC with a value of 0.834, compared to 0.515 for the Lower BF% group. This is also reflected in the percentage of values within +/−1.0 °F, which reached 93% compared to 62% for the Lower BF% group.

The ECT algorithm has been well published, cited, and used in military populations, but not formally investigated for validity in athletics. Currently, this algorithm is specific to heart rate data, and the model was trained and validated on military personnel. One explanation for the increased accuracy in the Upper BF% group is that the ECT algorithm was trained on participants in a higher body composition range of 13–18% [14], which more closely matches the Upper BF% group than the Lower BF% group. Physiologically, there are at least two other factors to consider due to body composition differences: surface area and the metabolic demand of tissue. Differences in athletes’ body mass-to-body surface area ratio (BM/BSA) suggest that the surface area available for evaporative cooling may vary with an individual athlete’s anthropometric and body composition makeup [35]. Therefore, future developments of ECT algorithms should take this into account. A second factor to consider is the differing metabolic demand of skeletal muscle tissue compared to adipose tissue. Muscle tissue possesses inherently greater metabolic demands than fat, both at rest and during exertion, thus impacting overall energy expenditure, which should be investigated as an additional factor for thermal regulation applications. Future studies powered and designed to incorporate body composition and BSA are being considered.

Due to the applied research aspect of this study, several limitations are evident. (1) Number of subjects: due to the cost of the single-use core temperature pills, only a limited number could be procured with the budget provided. These pills were distributed across 13 athletes to enable multiple training sessions with the same subjects. A follow-on study should include a larger number of athletes and use the data collected in this study to inform a power analysis that ensures proper distribution among different body compositions for investigating updated algorithms. (2) Number of data points: the pills selected for this study were not able to log data and required a reader to be placed on the back of the subject for data transfer. This significantly limited the total number of data points, with trainers only able to collect data between series during training. Future research should utilize a pill that enables continuous logging at 30–60 s increments and allows for post-training download. This would yield between 120 and 240 unique data points per subject during a 2-h practice, and would exceed the sample size indicated by the full-group post hoc power analysis within a handful of subjects. (3) Potential interference from cold water ingestion: due to the early morning practice times, only a 3-h pre-training ingestion time was logistically feasible, whereas a 6–8 h window would likely further mitigate interference and would be more ideal. (4) Temperature range: based on the core temperature pill readings, a majority of the core temperatures in the subjects did not exceed 102.5 °F. Additional data should be collected to investigate data points exceeding 105 °F, where clinical significance becomes more evident. By expanding the number of subjects and data points as suggested previously, the range of observed temperatures is likely to increase.

This, along with the data presented in this study, suggests that modifications to the ECT algorithm can be investigated to improve accuracy for athletes with lower body fat. Due to the nature of American football, football-specific exertion warrants additional investigation. This is a sport in which substantial equipment is worn and high-intensity bouts are repeated with rest in-between. The rest can be 10–20 s between plays and 5–10 min between series during practices and games. This differs greatly from sports like soccer, where long durations of aerobic and anaerobic work are endured. Even more different are tactical populations such as the military, which can endure hours upon hours of steady-state movement with substantial equipment. It is possible that enhanced algorithms can be created for these cases.

5. Conclusions

HSM is an important tool that can be used in exertional training settings to help reduce the risk of EHIs. However, for this to become standard practice, the tools used must be scientifically validated and, just as importantly, logistically feasible for the training staff to implement on a daily basis. Data must be trusted, reliable, and instantly actionable. The data presented in this study show that deriving ECT from accurate heart rate data shows promise in HSM applications. Additionally, the ECT algorithm was implemented in a commercial monitoring system and can be added to other systems with minimal software modifications, which is important for daily use rather than research applications. It is ultimately up to practitioners to decide what level of error they are comfortable with in using this as a surveillance tool, but these data show that it is feasible and logistically possible with commercial systems.

Future work should investigate modified heart rate-based ECT algorithms that are specific to body composition and sport/position for athletics applications. For tactical and military populations, additional algorithm work could include age, body composition, gender, and specialty. Additionally, as the wearable technology sector continues to evolve, additional sensor inputs should be investigated for inclusion in algorithm work as well, such as accelerometry, skin temperature, ambient temperature, and potentially sweat rate.