Abstract

The shift towards renewable energy, particularly wind energy, is advancing rapidly worldwide, and Southeastern Europe, Croatia in particular, is experiencing a notable increase in wind turbine construction. Because wind turbine blades are continuously exposed to environmental stressors and operational forces, they require regular inspections to identify defects such as erosion, cracks, and lightning damage, so that maintenance costs and operational downtime can be minimized. This study aims to develop a machine learning model based on convolutional neural networks that simplifies defect detection on wind turbine blades and makes drone-based inspections more efficient and accurate. The model applies transfer learning to the YOLOv7 architecture and is trained on a dataset of 231 images with 246 annotated defects across eight categories, achieving a mean average precision of 0.76 at an intersection over union threshold of 0.5. This research not only presents a robust framework for automated defect detection but also proposes a methodological approach for future deep learning studies of structural inspections, highlighting significant economic benefits and improvements in inspection quality and speed.

1. Introduction

The shift towards renewable energy, with wind energy standing out as a pivotal component, is accelerating globally. In Southeastern Europe, particularly in Croatia, there has been a significant uptick in the construction of wind turbines (WTs) in recent times [1], while this region is also lagging behind in terms of digital transformation [2]. Consequently, the need for maintenance and inspection of these facilities is on the rise. WT blades are continuously subjected to environmental influences, various stresses, and torsional forces, making them prone to wear and tear, such as erosion, cracks, and damage from lightning strikes [1]. Early detection of such defects can cut down on maintenance costs and reduce operational downtime for WTs. Visual inspection has emerged as the primary method for WT blade examination due to its straightforwardness and cost effectiveness relative to other techniques. Utilizing unmanned aerial vehicles for these inspections not only cuts down the duration but also significantly enhances the safety of the inspectors. Introducing machine learning can further drive down inspection costs and lead to quicker, more precise reporting for clients. The objective of this research is to develop a machine learning model that aids inspectors in producing faster, higher-quality reports, acting as an additional layer of supervision.

As part of the global transition to renewable energy sources in response to climate change, wind energy continued to grow in 2021 despite the coronavirus pandemic and the resulting supply chain disruptions. However, according to the Global Wind Energy Council (GWEC) report for 2022 [3], the rate of new wind installations must increase significantly in the coming years to meet the targets of the Paris Agreement, which call for reducing greenhouse gas emissions by roughly 50% by 2030. In 2021, 93.6 gigawatts (GW) of new wind energy capacity were added globally, only 1.8% less than in the record year of 2020. Total global wind energy capacity at the beginning of 2022 stood at 837 GW, a growth of 12.7% compared to the previous year. The largest markets for new installations in 2021 were the People’s Republic of China, the United States, Brazil, Vietnam, and the United Kingdom, which together accounted for 75.1% of global installations that year. The ranking of countries by cumulative installed capacity at the end of 2021 was unchanged from the previous year: China, the USA, Germany, India, and Spain together account for 72% of global installed capacity [3]. According to the WindEurope annual report for 2022, the EU installed approximately 11 GW of new capacity in 2021 and now covers 15% of its electricity needs from wind energy. Croatia meets 11% of its electricity needs from wind energy; its new installations in 2021 amounted to 183 megawatts (MW), bringing its cumulative total to 990 MW [1].
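The market figures above can be cross-checked with simple arithmetic. The following Python sketch back-calculates the values they imply; the derived numbers (2020 installations, cumulative capacity at the start of 2021, Croatian capacity at the end of 2020) are our own estimates from the quoted figures, not values reported in [1] or [3].

```python
# Back-of-the-envelope consistency check of the market figures quoted above.
# All inputs come from the text; the derived values are implied estimates only.

added_2021_gw = 93.6          # new global capacity installed in 2021
drop_vs_2020 = 0.018          # 2021 installations were 1.8% below the 2020 record
total_start_2022_gw = 837.0   # cumulative global capacity at the start of 2022
growth_2021 = 0.127           # year-on-year growth of cumulative capacity

# Installations in the record year 2020 implied by the 1.8% drop
implied_added_2020_gw = added_2021_gw / (1 - drop_vs_2020)
# Capacity at the start of 2021 implied by the 12.7% growth rate
implied_total_start_2021_gw = total_start_2022_gw / (1 + growth_2021)
# Same quantity estimated by subtracting 2021 additions (ignores decommissioning)
stock_minus_additions_gw = total_start_2022_gw - added_2021_gw

croatia_total_mw, croatia_added_2021_mw = 990, 183
croatia_total_end_2020_mw = croatia_total_mw - croatia_added_2021_mw

print(f"Implied 2020 installations: {implied_added_2020_gw:.1f} GW")
print(f"Implied capacity at start of 2021 (growth rate): {implied_total_start_2021_gw:.1f} GW")
print(f"Implied capacity at start of 2021 (subtraction): {stock_minus_additions_gw:.1f} GW")
print(f"Implied Croatian capacity at end of 2020: {croatia_total_end_2020_mw} MW")
```

Both routes to the capacity at the start of 2021 give roughly 743 GW (about 742.7 GW from the growth rate and 743.4 GW from subtracting the 2021 additions), so the quoted figures agree to within about 1 GW.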

As the importance of wind energy grows, so does the demand for fast, high-quality inspections of wind turbines. Accordingly, we investigate whether a machine learning model that speeds up the inspection process and raises its quality can be developed relatively quickly and at low cost. The dataset for model development was sourced from Airspect Ltd. (Zagreb, Croatia), a Croatian company specializing in WT inspections, and complemented with publicly accessible data from a similar research project. The additional dataset is intended to reduce overfitting and improve the model’s generalizability. While similar methodologies have been explored in recent academic studies, this research stands out in its model output classes, that is, the specific defect types the model can identify. As there is no international standard for WT blade defects, the class selection was grounded in the expertise of Airspect Ltd. inspectors. Their decisions were informed by past inspection experience, input from wind farm owners in SE Europe, and guidelines from Bladena ApS, a company specializing in training for WT blade inspection and maintenance. The end goal is a model capable of detecting commonly occurring defects on WT blade surfaces in SE Europe. Specifically, the objective of this paper is to develop a wind turbine blade defect detection model using convolutional neural networks (CNNs), focusing on a novel approach that allows the model to predict a significantly greater range of defect classes than existing models. This study leverages transfer learning with the YOLOv7 architecture to create and validate a model optimized for enhanced defect classification. By expanding the classification capability, the model enables more detailed and accurate inspections.
Ultimately, the research aims to provide a framework that not only advances current inspection methodologies but also lays a foundation for future deep learning applications in structural integrity assessments.

As the goal is the low-cost development of a sufficiently good model, we first aim to answer whether the transfer learning technique is effective enough for this use case. Since a YOLOv7 model is used, a second question follows: how does the YOLOv7 architecture perform in detecting and classifying defects on wind turbine blades compared to other object detection models used in similar research, such as Faster R-CNN and YOLOv4? Lastly, we aim to determine whether it is possible to develop a model with better defect detection capabilities than those demonstrated in existing research.

2. Literature Review

2.1. Wind Turbine Blade Defects

The primary function of a wind turbine blade is to “capture” the wind and transfer the load to the shaft. This creates a bending moment on the root bearing and a rotational moment on the main shaft [4]. The action of this force couple causes material stress and, consequently, damage to wind turbine blades after long-term operation. Other sources of damage include environmental factors, incorrect handling during transport and installation, and collisions with foreign objects [5]. Composite materials, or composites, are commonly used to construct the blades and nacelles of wind turbines [6]. They are produced by combining two or more materials with different properties, resulting in a new material with improved properties. Generally, a composite consists of three components: a matrix, a reinforcement (fibers or particles), and a fine interfacial region. By carefully selecting the matrix, the reinforcement, and the manufacturing process that combines them, engineers can tailor the material properties to meet specific criteria [7]. Blades are the most critical composite part of a wind turbine and, after the tower, its most expensive component [5]. Since large loads act on the blades during operation, they must meet structural integrity requirements to avoid potential risks [6].

Keeping blades in good condition is key for the turbine’s ability to generate its rated power over the anticipated operational period of 20 years [5]. Minor composite defects can be tolerated if they do not compromise the structural performance of the wind turbine or risk propagation under normal operating conditions. However, certain types of defects, when present in the structure, will rapidly spread and reduce the wind generator’s performance or even cause instability that will overload other structural components and potentially cause a structural failure of the wind generator. Obviously, this will halt the wind generator’s operation. When this happens, the wind park owner will face a significant repair cost to get the turbine back to an operational state [6]. Sheng (as cited in [5]) states that cost-effective repair of WT blades is crucial for the wind energy industry’s development in the coming years. According to Stephenson (as cited in [5]), an average blade repair can cost up to USD 30,000, and it can climb to USD 350,000 per week if a crane is required. For comparison, a new blade costs around USD 200,000.

Defects on the blades arise primarily from the coupled forces acting on them and from environmental factors. Extreme environmental conditions, such as ice, alternating very hot and cold periods, moisture ingress, salty and sandy wind gusts, and surface erosion from rain, hail, ice, and insects, can additionally accelerate defect propagation. Even a relatively small amount of leading-edge erosion can impair the aerodynamic performance of the blade and, consequently, lead to a loss of revenue. If the erosion is not remedied, the damage will accumulate and eventually lead to catastrophic failure, such as a split blade edge or the detachment of a section [5].

The International Electrotechnical Commission (IEC), of which the Republic of Croatia is a member, publishes standards for WT blades, among other standards concerning wind generators. However, standard IEC 61400-5:2020 [8] focuses on design, production requirements, and quality control during manufacturing. For photovoltaic systems, there is a technical specification, IEC 62446-3:2017 [9], as part of standard IEC 62446:2020 [10], that specifies the types of defects occurring during the operational lifetime of photovoltaic systems: it defines inspection method requirements, classifies and describes defects, and recommends how to rectify them. No equivalent exists for wind turbines, so inspection companies must rely on wind turbine manufacturer recommendations and guidelines from private companies, such as the previously mentioned Bladena ApS. According to the Bladena ApS inspection instructions manual [11], defects on wind turbine blades are divided into the following categories:

- LE erosion (leading-edge erosion);

- Lack of protective tape on the leading edge;

- Longitudinal cracks;

- Split bond line on the trailing edge;

- Paint and protective layer damage;

- Forty-five-degree cracks on the surface;

- Other surface cracks;

- Pinholes;

- Hydraulic oil contamination;

- Lightning strike damage;

- Missing vortex generator plate;

- Other missing parts;

- Voids.

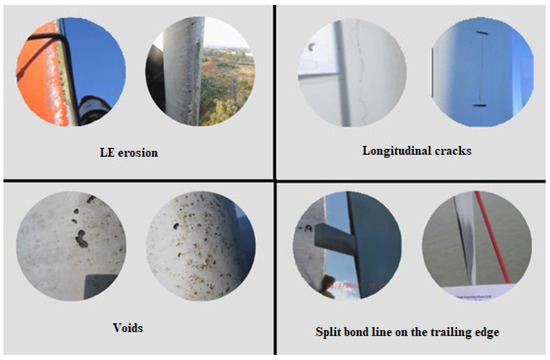

Figure 1 shows examples of four of the defect types listed above.

Figure 1.

Examples of four types of defects on blades [11], adapted by the authors.

Some of these defects do not occur, rarely occur, or are not applicable to the wind turbines inspected in Southeastern Europe. With this in mind, we made the pragmatic decision to merge certain classes and exclude others from further analysis.

2.2. Inspection Methods

Non-destructive testing (NDT) methods are crucial for identifying both manufacturing defects in blades and defects that occur during wind turbine operation. According to García Márquez and Peco Chacón [12], the methods used include the visual method, the ultrasonic method, thermography, radiography, the electromagnetic method, acoustic emission, the acousto–ultrasonic method, and interferometry. As pointed out by Li et al. [13], these methods are extremely labor intensive and time consuming and require the wind turbine to be out of operation. The exception is thermography, which can be applied during operation but is sensitive to stains, reflections, etc. [12]. Furthermore, most NDT equipment is very expensive and bulky [13], and the results of most of these methods are still reviewed by humans. Inspection by qualified experts remains the industry standard, but it can be inconsistent, subjective, labor intensive, and time consuming. Automatic inspection systems are therefore needed to reduce the workload of inspectors and ensure more consistent, objective, and efficient decisions [14]. There are also multi-modal methods that process signals from various sensors on the wind turbine. However, their reliability is questionable, as the sensor signals are sensitive to environmental conditions [15], and most sensors are also very expensive [13].

In this paper, the focus is on the visual inspection method, which, as stated by Xu, Wen, and Liu [15], has developed greatly and become popular in recent years thanks to advances in deep learning and image processing techniques. Visual inspection has low accuracy compared to other NDT methods [12], and it does not detect internal defects [12,16]. Its advantages are low cost, simple implementation [12], and fast data collection when inspections are performed by unmanned aerial vehicles (drones). Drone inspection is fast: in our experience, under ideal conditions and depending on the turbine size, it can be completed in 20–45 min. Both photos and videos can be collected, but the photos are large and require considerable data storage space. Another downside is that the scale added automatically during processing can be distorted by the angle at which the photos were taken. Drone inspection also cannot be performed at wind speeds greater than 14 m/s or at high temperatures that affect the battery of the unmanned aerial vehicle [11].

2.3. Existing Research Utilizing Machine Learning for Wind Turbine Defect Detection

In recent years, object detection has been carried out almost exclusively with deep learning methods, primarily artificial neural networks. One-stage methods, such as the YOLOv7 model used in this paper, predict bounding boxes directly, without a separate region proposal step. This makes them much faster and better suited to real-time detection, although they are generally less accurate. Zhang, Cosma, and Watkins [17] demonstrate the superiority of the Mask R-CNN model over one-stage methods from the You Only Look Once (YOLO) family of architectures, specifically for wind turbine defect detection. However, Mask R-CNN was better by only approximately 7.5% on a weighted average of several evaluation metrics. According to the paper released with YOLO version 7 (YOLOv7), the latest member of the YOLO family at the time of this work, YOLOv7 outperforms some of the best architectures based on Mask R-CNN in both accuracy and speed [18]. This, together with the significantly shorter training time needed, led us to use YOLOv7 in our work.
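The mean average precision reported in the Abstract is computed at an intersection over union (IoU) threshold of 0.5: a predicted bounding box counts as correct only if it overlaps a ground-truth box of the same class sufficiently. The Python sketch below illustrates only that matching criterion, assuming boxes given as pixel corners (x1, y1, x2, y2); the function names are hypothetical. A full mAP computation additionally ranks predictions by confidence, matches each ground-truth box at most once, and integrates the resulting precision-recall curve for each class.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle (may be empty)
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred_box, gt_box, pred_cls, gt_cls, threshold=0.5):
    """Hypothetical helper: a prediction matches a ground truth if the classes
    agree and the IoU reaches the threshold (0.5 for mAP@0.5)."""
    return pred_cls == gt_cls and iou(pred_box, gt_box) >= threshold


# Two 10 x 10 boxes shifted by 2 px overlap with IoU = 80 / 120, above 0.5
print(iou((0, 0, 10, 10), (2, 0, 12, 10)))
```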

In their study, Shihavuddin et al. [19] explored the automated detection of surface damage on wind turbine blades using deep learning techniques, with a focus on drone-acquired imagery. The researchers developed a model based on the Faster R-CNN framework, emphasizing the importance of multi-scale image augmentation for improved performance in detecting various surface issues. Their work successfully identified specific damage types, such as leading-edge erosion and missing vortex generator teeth, achieving precision levels close to human accuracy. However, their detection framework was limited to only four defect classes and did not leverage more recent object detection models, like YOLO, which are faster and can enhance object detection for multiple classes. The approach serves as a reference for future improvements in defect detection, highlighting the potential for integrating newer, real-time architectures in automated wind turbine inspection.

Reddy et al. [20] presented a foundational approach for automated damage detection in wind turbine blades using convolutional neural networks (CNNs). Their model primarily focused on binary classification to differentiate between faulty and non-faulty images, establishing an essential framework for initial defect identification. However, while effective at a basic level, this model did not perform multi-class object detection, which limits its ability to distinguish among specific defect types within the faulty class. Despite this limitation, their work serves as a valuable methodological reference, providing insights into essential training and validation steps for fault detection in structural health monitoring (SHM). As the authors note, later techniques such as You Only Look Once (YOLO) can build upon Reddy et al.’s methodology to go beyond binary classification and enable multi-class object detection in SHM applications for wind turbines, as was done in this paper.

Patel et al. [21] conducted a study focusing on wind turbine blade damage detection using a VGG16-RCNN-based approach, which was applied to images acquired via drone inspections. Their model aimed to classify four specific classes: Damage Area, Erosion Area, Edge Area, and Reference Area. Despite utilizing a VGG16-RCNN model, their results were somewhat ambiguous due to the limited scope of only four defect categories, and challenges in accurately distinguishing damage types were noted. Nevertheless, the study offers valuable insight into the use of deep learning architectures, like VGG16-RCNN, in wind turbine inspection.

In their study, Zhang et al. [22] used an attention-based MobileNetv1-YOLOv4 model with transfer learning for wind turbine blade defect recognition. Their model focused on four specific defect types: surface spalling, pitting, cracks, and contamination. Due to the limited dataset of only 122 images, they relied heavily on data augmentation to create a more robust training set, applying fifteen augmentation techniques to simulate various conditions. While effective, their model remains limited to these four classes, leaving room for further expansion. Our study builds upon this foundation by expanding the number of defect classes, aiming to provide a more comprehensive model for multi-class defect detection across wind turbine blades.

In our paper, we address a significant gap in existing research on wind turbine blade defect detection. Most previous studies have focused on detecting a limited number of defect classes—typically around four—which include broad categories such as cracks, corrosion, and surface wear. While these studies have achieved promising results within these confined classes, they do not fully capture the range and diversity of defects encountered in real-world conditions. For instance, field inspections reveal additional types of defects, including rupture, corrosion, and edge erosion, which are critical for comprehensive blade maintenance but are often excluded from prior research.

Our study aims to bridge this gap by expanding the detection framework to cover eight distinct defect classes, making it more aligned with real-world inspection requirements. This approach not only increases the robustness of defect identification but also ensures that our model addresses the varied and complex conditions encountered by inspectors in actual wind turbine maintenance. By incorporating these additional classes, we provide a model that can detect a broader spectrum of blade damage, ultimately contributing a more realistic and practical tool for the industry. This study thus fills a critical literature gap by advancing beyond the simplified classifications used in previous work to offer a model capable of supporting real-world, multi-class defect detection.

3. Materials and Methods

3.1. Data Collection and Preparation

Data collection and variable selection are highly important steps in the development of machine learning applications [23]. For the collection of wind turbine blade photos, an unmanned aerial vehicle (UAV) from the Chinese manufacturer DJI, model Matrice 300 RTK, was used. DJI is the largest global manufacturer of commercial drones [24]. The Matrice 300 RTK proved to be an excellent choice due to its robustness, autonomy, and high payload capacity. The ability to carry heavier cameras opens possibilities for collecting very-high-resolution photos. Consequently, even very small defects remain noticeable. Deng, Guo, and Chai [25] list the minimum requirements for a UAV (drone) for collecting photos of wind turbine blades in the inspection procedure as follows: wind resistance of at least 10 m/s, operational capability at temperatures up to at least 40 degrees Celsius, flying ability above 300 m, and autonomy of at least 30 min. Matrice 300 RTK meets all these criteria [26]. The greatest strength of this drone is its autonomy and robustness to environmental conditions. The speed of performing the photo collection procedure is crucial to minimize the downtime of the wind turbine. The camera that was used is the Zenmuse P1, also produced by DJI. It is a camera with a full-frame sensor, producing photos with a resolution of 45 million pixels. The full-frame sensor allows operation even in lower light conditions, and the photo resolution is more than sufficient compared to the 10 million pixels cited by Deng, Guo, and Chai as a minimum [25].

For the preprocessing of input data, we used a personal computer with the following specifications: an Intel i5 3570k Central Processing Unit (CPU) with a clock speed of 4300 megahertz, 16 gigabytes (GB) of random-access memory (RAM), and an NVIDIA GTX 1060 Graphics Processing Unit (GPU) with 6 GB of video RAM (VRAM). The operating system used on the PC is Ubuntu 20.04, a distribution of the GNU/Linux family of operating systems maintained by the company Canonical [27]. We chose this operating system because it is free, open source, and widely supported by software. GNU/Linux operating systems are based on the Linux kernel developed by Linus Torvalds [28]; at the time of this work, the Ubuntu 20.04 installation was based on Linux kernel version 5.13. We initially planned to train the model on this computer, but the relatively small VRAM of 6 GB deterred us, as VRAM size is crucial for training large CNNs. We therefore used the online data science platform Kaggle [29], which offers its registered users free use of NVIDIA Tesla P100 GPUs with 16 GB of VRAM, sufficient for training the models in this research.

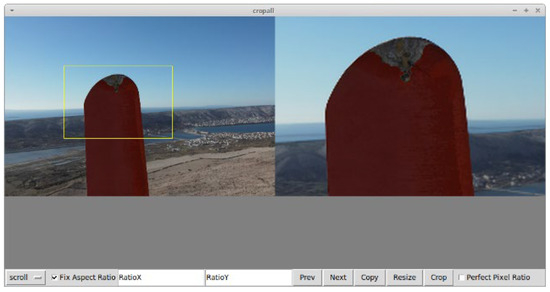

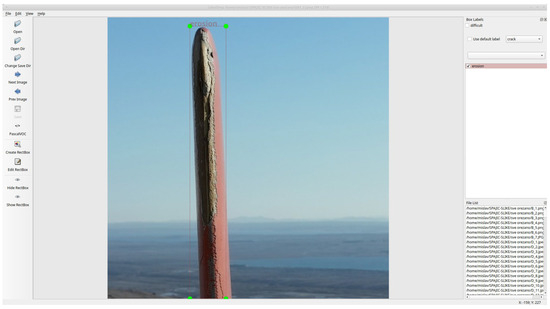

In regard to software that was used for data preprocessing, two open-source programs written in Python were used: cropall [30] and LabelImg [31]. Cropall was used as a simple tool for quickly cropping regions of interest (ROIs) on photos and resizing images to dimensions suitable for the model. In contrast, LabelImg was used to label defects on cropped images manually. Quick bounding box definition and associated class definition, along with the ability to export bounding box data in YOLO format, are the reasons for selecting this program. Figure 2 shows an example of cropall program usage for cropping ROIs and resizing, and Figure 3 shows the labeling process in the LabelImg program.
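LabelImg's YOLO export writes one text file per image, with one line per bounding box in the form `class_id x_center y_center width height`, where the last four values are normalized by the image width and height. The helper functions below are a hypothetical Python sketch of that conversion, useful for sanity-checking exported labels; they are not part of LabelImg itself.

```python
def to_yolo_line(class_id, x1, y1, x2, y2, img_w, img_h):
    """Convert a pixel-space box (x1, y1, x2, y2) to a YOLO label line.

    YOLO format: class_id x_center y_center width height, where the four box
    values are normalized by the image width/height to the range [0, 1].
    """
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def from_yolo_line(line, img_w, img_h):
    """Parse a YOLO label line back into (class_id, x1, y1, x2, y2) in pixels."""
    parts = line.split()
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:5])
    x1 = (xc - w / 2) * img_w
    y1 = (yc - h / 2) * img_h
    x2 = (xc + w / 2) * img_w
    y2 = (yc + h / 2) * img_h
    return class_id, x1, y1, x2, y2


# A 200 x 60 px defect of class 2 on a 608 x 608 training image
print(to_yolo_line(2, 100, 200, 300, 260, 608, 608))
```

Because the coordinates are normalized, the same label file remains valid if the image is later rescaled uniformly, which is one reason the format is convenient for training pipelines.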

Figure 2.

Cropping region of interest and resizing image to suitable dimensions in cropall.

Figure 3.

Labeling a defect in LabelImg; Erosion defect with its corresponding bounding box.

All of the software above is open source and available in the GitHub repositories of its respective authors. The code used for manipulating input data, training, and evaluating the model is available in the GitHub repository of the authors of this work [32], along with copies of the previously mentioned preprocessing programs and installation instructions. The code for model development was written and executed in the Jupyter Notebook environment [33], running in the cloud on the Kaggle platform. The final element in the software chain is the online platform Weights and Biases, which is used to monitor the training process, evaluate individual models, and compare them with other developed versions [34]. It integrates easily with the Python programming language, the Jupyter Notebook environment, and the Kaggle platform. All of the software used is free, and much of it is open source, which makes the methodology of this article highly reproducible.

The initial dataset obtained from Airspect Ltd. consisted of 4182 photographs collected from 10 wind turbines across 5 different wind farms in the Southeastern Europe region. The dimensions of the original photographs are 8192 × 5460 pixels, and each photograph was taken approximately 5 m from the blades. It is important to mention that the condition of the wind turbines and their images are protected as trade secrets. Generally, wind farm owners do not allow testing or photographing of their turbines, except for inspection purposes. In the case of the collected images for this study, displaying or sharing them is not possible due to confidentiality agreements. An agreement was made with one of the owners to use images for a few examples, provided the location of the wind turbine cannot be identified from them.
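The photo dimensions and the approximate 5 m camera-to-blade distance allow a rough estimate of how much blade surface a single pixel covers (the ground sampling distance, GSD). The Python sketch below uses the standard pinhole-camera approximation; the 35.9 mm full-frame sensor width of the Zenmuse P1 and the lens focal lengths are our assumptions, since the text does not state which interchangeable lens was used.

```python
def ground_sampling_distance_mm(sensor_width_mm, focal_length_mm, distance_m, image_width_px):
    """Approximate size of one pixel on the target surface (mm/pixel), pinhole-camera model."""
    return sensor_width_mm * (distance_m * 1000.0) / (focal_length_mm * image_width_px)


IMAGE_W, IMAGE_H = 8192, 5460   # original photo dimensions, from the text
DISTANCE_M = 5.0                # approximate camera-to-blade distance, from the text
SENSOR_WIDTH_MM = 35.9          # assumption: full-frame sensor width of the Zenmuse P1

megapixels = IMAGE_W * IMAGE_H / 1e6
print(f"Resolution: {megapixels:.1f} MP")

# Assumption: candidate focal lengths; the lens actually used is not stated
for focal_mm in (24, 35, 50):
    gsd = ground_sampling_distance_mm(SENSOR_WIDTH_MM, focal_mm, DISTANCE_M, IMAGE_W)
    print(f"{focal_mm} mm lens: {gsd:.2f} mm/pixel")
```

The computed resolution of about 44.7 MP matches the roughly 45 million pixels stated for the camera, and under these assumptions each pixel covers less than 1 mm of blade surface, which is consistent with the observation that even very small defects remain noticeable.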

This dataset was supplemented with a dataset from similar research [35], consisting of 559 original photographs with dimensions of 5280 × 2970 pixels. These photographs were added to help the model generalize better, since all images provided by Airspect Ltd. were taken in the Southeastern Europe region, have very similar backgrounds, and show very similar wind turbines. The result is an input dataset of 4741 photographs.

Data preparation, although simple, was one of the most time-consuming and demanding tasks because of the large amount of manual work required: each photograph had to be reviewed manually, cropped to regions of interest, and finally labeled. After manual review of the original photographs obtained from Airspect Ltd., a subset of 200 photographs containing defects was selected. Of the 559 original photographs downloaded from the similar research project, only 14 were chosen, as they contained defects of interest. In total, 214 images were processed further. The next step was cropping the original images to the regions of interest using the cropall program. This was necessary because defects are often small, and without cropping they would “get lost” when the image is resized to smaller dimensions: they would occupy very few pixels, making it very difficult to learn enough recognizable features for each defect [19]. Other similar research has also used this technique [19,20]. Reducing the image dimensions is necessary to complete training in a reasonable time and with a reasonable amount of GPU video memory. Resizing after cropping was also performed in the cropall program. The chosen dimensions were 608 × 608 pixels, as YOLO models prefer image dimensions that are a multiple of 32. With these dimensions, no information is cropped and no borders of “empty” pixels are added during training. Moreover, these dimensions are smaller than the smallest region of interest, so images were only reduced in size, never enlarged, thereby avoiding the noise that upscaling would add to the input data.
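The effect of cropping before resizing can be illustrated with simple proportions. The Python sketch below estimates how many pixels a defect spans after its source image is scaled down to 608 pixels along one side; the 60 px defect length and the 1024 px region-of-interest width are hypothetical values chosen for illustration, and the calculation considers only the image width (resizing a non-square photo to 608 × 608 would also distort its aspect ratio).

```python
STRIDE = 32   # largest downsampling factor of the YOLO feature maps, hence multiples of 32
TARGET = 608  # training image size used in this study


def footprint_after_resize(defect_px, source_px, target_px=TARGET):
    """Approximate defect size (pixels) after scaling a source side of source_px down to target_px."""
    return defect_px * target_px / source_px


assert TARGET % STRIDE == 0  # 608 = 19 * 32

defect_px = 60                                        # hypothetical defect length in the original photo
full_photo = footprint_after_resize(defect_px, 8192)  # whole 8192-px-wide photo resized
roi_crop = footprint_after_resize(defect_px, 1024)    # hypothetical 1024-px-wide ROI crop resized
print(f"Whole photo resized: {full_photo:.1f} px; ROI crop resized: {roi_crop:.1f} px")
```

In this example the defect shrinks to about 4.5 px when the whole photo is resized but keeps about 35.6 px when only a cropped region is resized, which is the loss of detail that cropping is meant to prevent.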

Following this, images were labeled using the LabelImg program. Each defect was marked with a bounding box, and the corresponding class was entered. When defining classes, the aim was to follow the previously mentioned Bladena ApS manual. However, as mentioned earlier, some defect classes were omitted, and some were merged because of a small input dataset and the rarity of occurrence or inapplicability to the photographed wind turbines. For example, all cracks were combined into one class, cavities and pinholes were combined into the rupture class, the missing vortex generator plate was omitted due to inapplicability, etc. Classes for corrosion and missing parts, which indicate the lack of a protective cap between the hub and blade, were added. A final review of the chosen classes, names of classes used in labeling, and their distribution are given in the table below (Table 1).

Table 1.

Display of selected defect classes for labeling.

The total number of defect observations is 264, of which 241 come from the 200 images obtained from Airspect Ltd., while the 14 images from the publicly available set contain 21 defects. The labeled data were then uploaded as a private dataset to the Kaggle platform.

On the Kaggle platform, the data were divided into training and validation sets. Due to the lack of data, the validation set also effectively serves as the test set. In total, 80% of the data was used for training and 20% for validation. A stratified split was used, so that the class distribution was maintained in both sets. The ratio is not exactly equal, as some images contain more than one defect. For such images, the most frequently occurring class in the image was used as the stratification criterion; when several classes were equally frequent in an image, the class whose label name comes first alphabetically was used.
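The splitting rule described above can be sketched in a few lines of Python. The code the authors actually used is in their repository [32]; the sketch below is our own illustration of the rule, with hypothetical function names and a fixed random seed. Each image is assigned one representative class (the most frequent class in the image, with ties broken alphabetically), and 20% of the images in each representative class go to the validation set.

```python
import random
from collections import Counter, defaultdict


def image_stratum(class_names):
    """Representative class of an image: the most frequent one, ties broken alphabetically."""
    counts = Counter(class_names)
    # Sort by descending count, then by name, so ties go to the alphabetically earlier class
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[0][0]


def stratified_split(image_labels, val_fraction=0.2, seed=0):
    """Split image ids into train/val lists, stratified by each image's representative class.

    image_labels: dict mapping image id -> list of class names of the defects in that image
    """
    rng = random.Random(seed)
    by_stratum = defaultdict(list)
    for image_id, classes in image_labels.items():
        by_stratum[image_stratum(classes)].append(image_id)

    train, val = [], []
    for stratum in sorted(by_stratum):
        ids = sorted(by_stratum[stratum])
        rng.shuffle(ids)
        n_val = round(len(ids) * val_fraction)  # very rare classes may get no validation image
        val.extend(ids[:n_val])
        train.extend(ids[n_val:])
    return train, val
```

Because the strata are defined per image rather than per defect, the defect-level class ratios in the two sets are only approximately equal, as noted in the text.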

Due to the relatively small amount of input data, no separate test set was set aside to serve as a performance indicator for a production environment. Although this is not best practice, such an approach is acceptable when data are scarce. The results on the validation set do not fully measure the generalization error, but they are still a useful guide. Similar works on wind turbine blades also divided the data into only two sets rather than three [18,19,20].

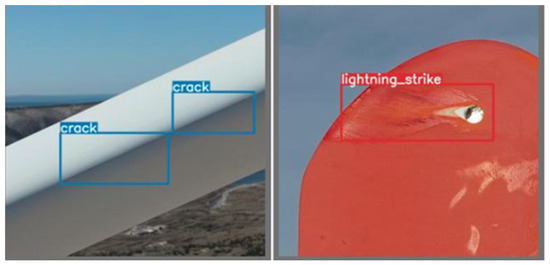

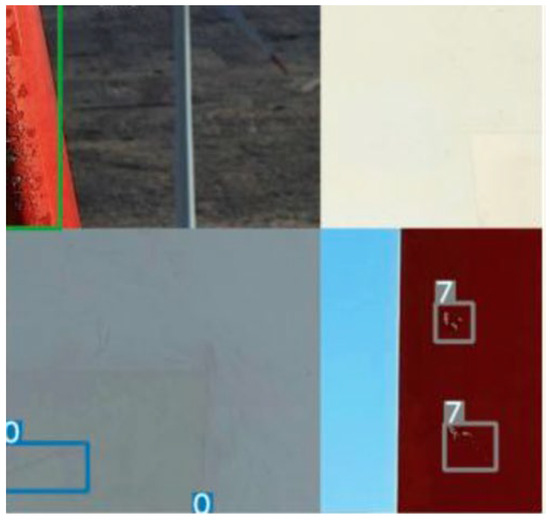

Reddy et al. [20] highlight the importance of the data augmentation method in defect detection on wind turbine blades. Indeed, images of defects are hard to obtain, and the available dataset is often small, as is the case in this study. This can be partially addressed by data augmentation. Within the YOLOv7 architecture, this technique is already implemented automatically. Augmentations, such as image saturation changes, image translation, image flipping, etc., are already predefined to occur with a certain probability or intensity during network training. During the hyperparameter optimization phase, attempts will be made to fine tune these probabilities. The most significant predefined augmentation within the YOLOv7 architecture is image mosaic assembly. An artificial image is created by taking several images and assembling them into a mosaic at a certain ratio of components. Figure 4 shows examples of two labeled images without any augmentations, and Figure 5 shows mosaic augmentation. Notice the distinction between the data that served as the input to the preparation part of the architecture (nonaugmented images in Figure 4) and the data that go into the model training (Figure 5 with augmented image). With augmentations, we effectively enlarge our input data and enhance the model’s generalization ability.
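To make the mosaic idea concrete, the following Python sketch (using NumPy) assembles four square images around a split point and shifts their bounding boxes accordingly. It is a simplified illustration written by us, not the YOLOv7 implementation: the official code additionally rescales the images, works on a larger canvas with a random center, and applies random affine transforms afterwards. Box layout (class, x1, y1, x2, y2 in pixels) and the function name are assumptions.

```python
import numpy as np


def mosaic4(images, boxes_list, center):
    """Simplified 4-image mosaic in the spirit of YOLO mosaic augmentation.

    images: four uint8 arrays of identical shape (S, S, 3)
    boxes_list: four arrays of shape (N_i, 5) holding class, x1, y1, x2, y2 in pixels
    center: integer (xc, yc) split point on the S x S output (random in real pipelines)
    Returns the mosaic image and the shifted, clipped boxes.
    """
    s = images[0].shape[0]
    xc, yc = center
    canvas = np.full((s, s, 3), 114, dtype=np.uint8)  # gray background
    # Per quadrant: canvas slice (y1, y2, x1, x2) and offset (dx, dy) mapping image -> canvas
    tiles = [
        ((0, yc, 0, xc), (xc - s, yc - s)),  # top-left: bottom-right part of image 0
        ((0, yc, xc, s), (xc, yc - s)),      # top-right: bottom-left part of image 1
        ((yc, s, 0, xc), (xc - s, yc)),      # bottom-left: top-right part of image 2
        ((yc, s, xc, s), (xc, yc)),          # bottom-right: top-left part of image 3
    ]
    out_boxes = []
    for img, boxes, ((cy1, cy2, cx1, cx2), (dx, dy)) in zip(images, boxes_list, tiles):
        # Copy the image region that falls into this quadrant
        canvas[cy1:cy2, cx1:cx2] = img[cy1 - dy:cy2 - dy, cx1 - dx:cx2 - dx]
        b = np.asarray(boxes, dtype=float).reshape(-1, 5).copy()
        b[:, [1, 3]] = np.clip(b[:, [1, 3]] + dx, cx1, cx2)  # shift x, clip to the quadrant
        b[:, [2, 4]] = np.clip(b[:, [2, 4]] + dy, cy1, cy2)  # shift y, clip to the quadrant
        keep = ((b[:, 3] - b[:, 1]) > 2) & ((b[:, 4] - b[:, 2]) > 2)  # drop boxes cut to slivers
        out_boxes.append(b[keep])
    return canvas, np.concatenate(out_boxes, axis=0)
```

A defect that lies outside the part of its source image copied into the mosaic is discarded, which is why mosaic training typically sees fewer boxes per source image but many more combinations of backgrounds and defects.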

Figure 4.

Examples of images without any augmentations and with labeled defects.

Figure 5.

Result of mosaic augmentation for the purpose of artificially enlarging input data.
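To make the mosaic idea concrete, the following is a simplified four-image mosaic written with NumPy. It is a sketch loosely modelled on the approach used in the YOLOv5/YOLOv7 code bases as far as we know (a random mosaic centre and a grey fill value of 114), not YOLOv7's actual implementation, which also resizes inputs, supports nine-image mosaics, and follows the mosaic with random affine transforms. The function name `mosaic4` and the `[class, x1, y1, x2, y2]` pixel box layout are assumptions.

```python
import numpy as np


def mosaic4(images, boxes, center=None, fill=114, rng=None):
    """Combine four s x s x 3 images into one 2s x 2s mosaic.

    boxes[i] is an (n, 5) array of [class, x1, y1, x2, y2] in pixels of images[i].
    """
    s = images[0].shape[0]
    if center is None:
        rng = rng or np.random.default_rng()
        xc, yc = (int(v) for v in rng.integers(s // 2, 3 * s // 2, size=2))
    else:
        xc, yc = center
    canvas = np.full((2 * s, 2 * s, 3), fill, dtype=images[0].dtype)
    out = []
    for i, (img, b) in enumerate(zip(images, boxes)):
        h, w = img.shape[:2]
        if i == 0:    # top-left tile ends at the mosaic centre
            x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc
            x1b, y1b = w - (x2a - x1a), h - (y2a - y1a)
        elif i == 1:  # top-right tile
            x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, 2 * s), yc
            x1b, y1b = 0, h - (y2a - y1a)
        elif i == 2:  # bottom-left tile
            x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(yc + h, 2 * s)
            x1b, y1b = w - (x2a - x1a), 0
        else:         # bottom-right tile
            x1a, y1a, x2a, y2a = xc, yc, min(xc + w, 2 * s), min(yc + h, 2 * s)
            x1b, y1b = 0, 0
        # Copy the visible part of the tile into the canvas.
        canvas[y1a:y2a, x1a:x2a] = img[y1b:y1b + (y2a - y1a), x1b:x1b + (x2a - x1a)]
        shifted = np.array(b, dtype=float).reshape(-1, 5)
        shifted[:, [1, 3]] += x1a - x1b  # move boxes into mosaic coordinates
        shifted[:, [2, 4]] += y1a - y1b
        out.append(shifted)
    labels = np.concatenate(out)
    labels[:, 1:] = labels[:, 1:].clip(0, 2 * s)  # clip boxes cut off at the canvas edge
    keep = (labels[:, 3] > labels[:, 1]) & (labels[:, 4] > labels[:, 2])
    return canvas, labels[keep]


images = [np.full((4, 4, 3), k, dtype=np.uint8) for k in (1, 2, 3, 4)]
boxes = [np.array([[k, 0, 0, 2, 2]]) for k in range(4)]
canvas, labels = mosaic4(images, boxes, center=(4, 4))
```

With the centre fixed at (s, s), each image fills one quadrant exactly; a random centre instead changes the share of the mosaic that each image occupies, which is the varying ratio mentioned above.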

Standard YOLOv7, with 37 million parameters, was chosen as the base model. Pretrained weights were downloaded from the official repository, where they are listed as yolov7.pt. The YOLOv7 architecture is complex and can be divided into three main components: the backbone, the neck, and the head [18]. The backbone extracts basic image features, which are passed to the head through the neck. The neck collects the feature maps extracted by the backbone, combines them, and builds so-called feature pyramids. Finally, the head consists of the output layers that perform the final detection [18]. Because YOLO is primarily a real-time detection model, the official YOLOv7 repository contains several versions of the model, each adapted to a different class of hardware on which it will run and each with a different number of layers.

In this research, the weights of the first 50 layers, which form the backbone of the selected version, were initially frozen before training began. This is the transfer learning approach explained previously, in which the pre-trained model serves as a feature extractor. If the result proved unsatisfactory, the plan was to switch to fine-tuning, in which all weights are unfrozen and adjusted during training.
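In practice, freezing means switching off gradient updates for the parameters of the first 50 modules. As far as we understand, YOLOv7's train.py does this through a `--freeze` argument by matching parameter names against prefixes such as `model.0.`; the sketch below reproduces only that name-matching rule in plain Python so it runs without PyTorch. The function names and the parameter names in the example are hypothetical.

```python
def frozen_prefixes(n_freeze):
    """Name prefixes of the first n_freeze top-level modules (model.0. ... model.{n-1}.)."""
    return tuple(f"model.{i}." for i in range(n_freeze))


def requires_grad_map(param_names, n_freeze):
    """Map each parameter name to True (trainable) or False (frozen).

    The trailing dot in each prefix prevents "model.1." from matching "model.10.".
    With PyTorch one would then apply, for example:
        for name, p in model.named_parameters():
            p.requires_grad = flags[name]
    """
    prefixes = frozen_prefixes(n_freeze)
    return {name: not name.startswith(prefixes) for name in param_names}


names = ["model.0.conv.weight", "model.49.bn.bias", "model.50.conv.weight", "model.105.m.0.weight"]
flags = requires_grad_map(names, 50)  # feature-extractor setup: backbone frozen
all_trainable = requires_grad_map(names, 0)  # fine-tuning setup: nothing frozen
```

Setting `n_freeze` to 0 corresponds to the fine-tuning fallback described above, in which every parameter stays trainable.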

The selected classes and the rationale behind their selection were explained earlier in the overview of the data preparation process. For model development, the classes must also be listed in the data.yaml file, which defines the paths to the training and validation datasets, the number of output classes, and their names. The order of the names must follow the numbers assigned to the classes during labeling with the LabelImg tool: the first defect class defined in LabelImg received the number zero, the next one received the number one, and so on.
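The ordering constraint can be enforced by generating data.yaml directly from the label list rather than typing it by hand. The sketch below assumes the class list is available as a text file with one name per line, in ID order (LabelImg can write such a classes.txt file in YOLO mode), and uses the `train`, `val`, `nc`, and `names` keys of YOLOv7's data files. The paths and class names are hypothetical placeholders.

```python
def class_names_from_classes_txt(text):
    """One class name per line; the line index (from 0) is the class ID used in the labels."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def data_yaml(train_dir, val_dir, names):
    """Render a minimal YOLOv7-style data.yaml; list order must match the label IDs."""
    return "\n".join([
        f"train: {train_dir}",
        f"val: {val_dir}",
        f"nc: {len(names)}",
        f"names: {names!r}",  # a Python list repr is also a valid YAML flow sequence
    ]) + "\n"


names = class_names_from_classes_txt("crack\nerosion\nlightning\n")  # hypothetical classes
print(data_yaml("/kaggle/working/dataset/images/train",
                "/kaggle/working/dataset/images/val", names))
```

Deriving `nc` from the list length also prevents a mismatch between the declared number of classes and the names actually listed.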

3.2. Model Training

Model training is started on the Kaggle platform with a single command that launches the training script. Hyperparameters and other training options are passed as arguments of this command, either directly as text or as a path to a file that defines the values of a particular argument. The individual arguments are explained in Table 2.

Table 2.

Arguments of the script for training the YOLOv7 model.
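As an illustration of such a command, the sketch below assembles a YOLOv7 train.py invocation as an argument list. The flag names are those we recall from YOLOv7's train.py and should be checked against `python train.py --help` in the cloned repository. The dataset path, image size, and batch size are hypothetical, and the values actually used are those in Table 2 and Table 4.

```python
import shlex


def train_command(epochs, batch_size, hyp, freeze=0, adam=False, image_weights=False, name="exp"):
    """Assemble a YOLOv7 train.py command line as an argument list."""
    cmd = [
        "python", "train.py",
        "--weights", "yolov7.pt",             # pretrained weights from the official repo
        "--cfg", "cfg/training/yolov7.yaml",  # model definition
        "--data", "data/data.yaml",           # hypothetical path to our dataset file
        "--hyp", hyp,                         # hyperparameter/augmentation file
        "--epochs", str(epochs),
        "--batch-size", str(batch_size),
        "--img-size", "640", "640",
        "--name", name,
    ]
    if freeze:
        cmd += ["--freeze", str(freeze)]      # freeze the first `freeze` layers
    if adam:
        cmd.append("--adam")                  # Adam instead of the default SGD
    if image_weights:
        cmd.append("--image-weights")         # sample images by class weights
    return cmd


cmd = train_command(epochs=400, batch_size=16, hyp="data/hyp.scratch.custom.yaml", freeze=50)
print(shlex.join(cmd))
# In a Kaggle notebook, run from the cloned yolov7 directory, e.g.:
# subprocess.run(cmd, check=True)
```

Building the command as a list rather than a string avoids quoting problems when paths contain spaces and makes it easy to vary individual arguments programmatically.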

After training starts, evaluation metrics and the state of the training process can be monitored in real time on the Weights and Biases platform. This requires an account on that platform, and authorization is performed using a secret key. The first training attempt used the 50 frozen layers as a feature extractor. It did not yield a good result, so we unfroze all weights and optimized the hyperparameters. Various combinations of hyperparameters were tested in the optimization process. The tested variants of individual hyperparameters are shown in Table 3, and all combinations of the listed variants were tested.

Table 3.

Tested hyperparameter variants.

The modified augmentation file changes the following augmentation parameters relative to the base file:

- Flipping the image upside down (probability): changed from 0.0 to 0.2;

- Scaling the image (scale gain, i.e., the maximum relative change in image size): changed from 0.5 to 0.3;

- Translating the image (maximum shift as a fraction of the image size): changed from 0.2 to 0.15.
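The modified file can be thought of as the base dictionary with three keys overridden. The sketch below shows that override and what the upside-down flip does to YOLO-format labels. The key names `flipud`, `scale`, and `translate` are those we believe YOLOv7's hyperparameter files use, the dictionaries are only an excerpt with the values reported above, and the helper names are hypothetical.

```python
import random

# Values of the base file as reported above (excerpt; the real file has more keys).
BASE_HYP = {"flipud": 0.0, "scale": 0.5, "translate": 0.2}
OVERRIDES = {"flipud": 0.2, "scale": 0.3, "translate": 0.15}


def modified_hyp(base, overrides):
    """Copy the base hyperparameters, refusing keys the base file does not define."""
    unknown = set(overrides) - set(base)
    if unknown:
        raise KeyError(f"unknown hyperparameters: {sorted(unknown)}")
    return {**base, **overrides}


def maybe_flipud(labels, p, rng):
    """Flip upside down with probability p; labels are [cls, xc, yc, w, h], normalized."""
    if rng.random() < p:
        # A vertical flip mirrors the box centre (yc -> 1 - yc); width and height are unchanged.
        return True, [[c, x, 1.0 - y, w, h] for c, x, y, w, h in labels]
    return False, [list(lbl) for lbl in labels]


hyp = modified_hyp(BASE_HYP, OVERRIDES)
flipped, labels = maybe_flipud([[0, 0.5, 0.25, 0.2, 0.1]], hyp["flipud"], random.Random(0))
```

Rejecting unknown keys guards against a typo silently creating a new, unused hyperparameter instead of changing the intended one.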

Each combination can be trained by separately launching a manually modified version of the training command from the previous section. Alternatively, the training of multiple variants can be launched with a simple for loop that iterates over predefined values: for example, different numbers of epochs, batch sizes, and optimization functions, combined with image weights turned on or off and the base or modified augmentation file.
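A minimal version of such a loop can use `itertools.product` to enumerate every combination and give each run a distinct name, so runs can be told apart in Weights and Biases. Apart from the 400 epochs of the best model, the grid values below are hypothetical placeholders, since the variants actually tested are listed in Table 3. The modified augmentation file name is also an assumption, and the YOLOv7 flag names should be verified against the repository.

```python
import itertools

# Hypothetical grid; the variants actually tested are listed in Table 3.
GRID = {
    "epochs": [100, 400],
    "batch_size": [8, 16],
    "optimizer": ["sgd", "adam"],
    "image_weights": [False, True],
    "hyp": ["data/hyp.scratch.custom.yaml", "data/hyp.modified.yaml"],
}


def grid_commands(grid):
    """Yield (run_name, argv) for every combination of the grid values."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        cfg = dict(zip(keys, values))
        aug = "mod" if "modified" in cfg["hyp"] else "base"
        name = (f"e{cfg['epochs']}_b{cfg['batch_size']}_{cfg['optimizer']}"
                f"_iw{int(cfg['image_weights'])}_{aug}")
        argv = ["python", "train.py", "--weights", "yolov7.pt",
                "--cfg", "cfg/training/yolov7.yaml", "--data", "data/data.yaml",
                "--hyp", cfg["hyp"], "--epochs", str(cfg["epochs"]),
                "--batch-size", str(cfg["batch_size"]), "--name", name]
        if cfg["optimizer"] == "adam":
            argv.append("--adam")
        if cfg["image_weights"]:
            argv.append("--image-weights")
        yield name, argv


runs = list(grid_commands(GRID))
print(len(runs))  # 32
# for name, argv in runs: subprocess.run(argv, check=True)
```

A full grid grows multiplicatively (here 2 x 2 x 2 x 2 x 2 = 32 runs), which is worth keeping in mind given the GPU time limits of a free Kaggle account.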

3.3. Model Evaluation

The main metric used to evaluate performance is mean average precision (mAP). To understand what it represents, we first need to define the metrics involved in its calculation. These metrics are as follows:

- Intersection over Union (IoU);

- True Positive (TP);

- False Positive (FP);

- False Negative (FN);

- True Negative (TN);

- Precision;

- Recall;

- Precision–Recall curve;

- Average precision (AP).

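Intersection over Union is defined by Expression 1, where $B_p$ is the predicted bounding box and $B_{gt}$ is the ground-truth bounding box:

$$\mathrm{IoU} = \frac{\operatorname{area}(B_p \cap B_{gt})}{\operatorname{area}(B_p \cup B_{gt})} \tag{1}$$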

In practice, IoU is a number between 0 and 1 that quantifies the overlap between two bounding boxes. mAP is usually reported at a given IoU threshold, meaning that a prediction counts as correct only if its IoU with a ground-truth box reaches that threshold. For example, mAP@0.5 denotes the mean average precision when predictions with an overlap of 50% or more are accepted. The metrics derived from the confusion matrix are defined as follows in object detection:

- TP represents a situation where the model predicted a bounding box of a certain class at a certain position, and a ground-truth box of that class does exist there, i.e., their IoU reaches the chosen threshold.

- FP represents a situation where the model predicted a bounding box of a certain class at a certain position, but no ground-truth box of that class exists there (its IoU with every ground-truth box of that class is below the threshold).

- FN represents a situation where a ground-truth box of a certain class exists at a certain position, but the model did not predict a matching bounding box of that class.

- TN represents a situation where the model did not predict a bounding box of a certain class at a certain position, and indeed none exists there. Since almost every position in an image is such a case, TN does not enter into further calculations.
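The IoU definition and the TP/FP/FN rules can be combined into a small matching routine. The sketch below follows the common PASCAL VOC style of greedy matching for a single class in a single image: predictions are processed by descending confidence, and each ground-truth box can be matched only once. YOLOv7's internal evaluation and the mAP tool [36] may differ in details. The function names and the corner-coordinate box format are assumptions.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Expression 1: area of intersection divided by area of union."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: List[Tuple[float, Box]], gts: List[Box], thr: float = 0.5):
    """Label each prediction of one class in one image as TP or FP and count FNs."""
    matched = [False] * len(gts)
    results = []  # (confidence, is_tp)
    for conf, box in sorted(preds, key=lambda p: p[0], reverse=True):
        overlaps = [iou(box, g) for g in gts]
        best = max(range(len(gts)), key=overlaps.__getitem__, default=-1)
        if best >= 0 and overlaps[best] >= thr and not matched[best]:
            matched[best] = True
            results.append((conf, True))
        else:
            results.append((conf, False))  # no overlap, too little overlap, or a duplicate
    false_negatives = matched.count(False)  # ground-truth boxes nobody matched
    return results, false_negatives


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.8, (1, 1, 10, 10)), (0.9, (0, 0, 10, 10)), (0.7, (50, 50, 60, 60))]
results, fn = match_detections(preds, gts)
# results == [(0.9, True), (0.8, False), (0.7, False)], fn == 1
```

In this example the second prediction overlaps the first ground-truth box with an IoU of 0.81, but it still counts as an FP because that box was already claimed by the more confident prediction.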

Precision is defined by Expression 2, and recall is defined by Expression 3 as follows:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

Furthermore, the Precision–Recall curve plots recall on the x-axis against precision on the y-axis, with each point obtained at a different confidence threshold. Average precision (AP) is defined as the area under this curve and is determined by Expression 4, where $p(r)$ is precision as a function of recall $r$:

$$AP = \int_0^1 p(r)\,dr \tag{4}$$

Mean average precision (mAP) is the average AP across all classes. It is determined by Expression 5, where N is the total number of classes:

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i \tag{5}$$
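In practice, Expressions 4 and 5 are evaluated numerically from ranked detections. The sketch below uses the all-point interpolation of PASCAL VOC: detections are sorted by confidence, cumulative precision and recall are computed after each one, the precision curve is made non-increasing, and the area under the resulting step function is summed. YOLOv7's own metric code may use a different interpolation (for example, sampling recall at fixed points), so its values need not match this sketch exactly. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def pr_curve(detections: Sequence[Tuple[float, bool]], num_gt: int):
    """Recall and precision after each detection, ranked by descending confidence.

    Assumes num_gt > 0 (the class has at least one ground-truth box).
    """
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in sorted(detections, key=lambda d: d[0], reverse=True):
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


def average_precision(recalls: List[float], precisions: List[float]) -> float:
    """Expression 4 evaluated as the area under the interpolated PR curve."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    for i in range(len(p) - 2, -1, -1):  # precision envelope: make p non-increasing
        p[i] = max(p[i], p[i + 1])
    return sum((r[i] - r[i - 1]) * p[i] for i in range(1, len(r)))


def mean_average_precision(aps: Sequence[float]) -> float:
    """Expression 5: arithmetic mean of the per-class AP values."""
    return sum(aps) / len(aps)


rec, prec = pr_curve([(0.9, True), (0.8, False), (0.7, True)], num_gt=2)
ap = average_precision(rec, prec)  # 0.5 * 1.0 + 0.5 * (2/3) = 5/6
```

The envelope step replaces each precision value with the highest precision achieved at any higher recall, which removes the zig-zag of the raw curve before the area is taken.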

4. Results

The best model was selected based on the highest mAP@0.5. It was trained with all layers unfrozen, using the hyperparameters shown in Table 4, and achieved an mAP@0.5 of 0.76 on the validation set.

Table 4.

Hyperparameters of the best model.

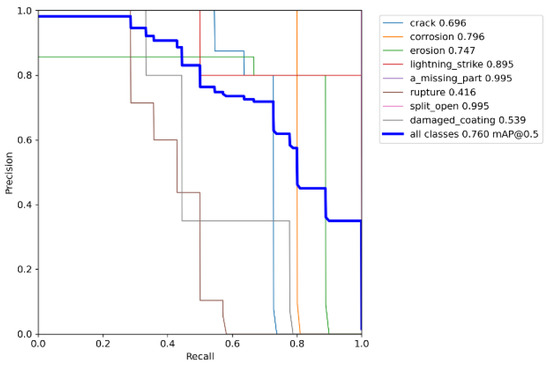

The other significant metrics are as follows:

- Precision averaged across all classes: 0.7318;

- Recall averaged across all classes: 0.6622;

- Precision–Recall curve per class, as illustrated in Figure 6;

- mAP@0.5:0.95: 0.3683, where mAP@0.5:0.95 is the average of the mAP values at IoU thresholds from 0.5 to 0.95 in steps of 0.05.

Figure 6.

Precision–Recall curve per class.

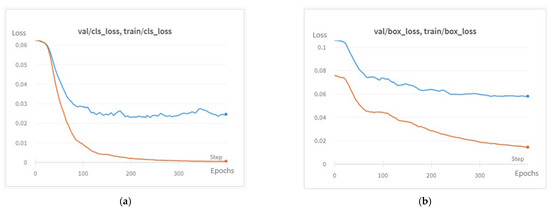

Figure 7 shows the progression of the cost function over the training epochs for both class and bounding box predictions. The left graph (a) shows the cost function for class predictions, and the right graph (b) shows it for bounding box predictions. In each graph, the orange curve represents the training set, and the blue curve represents the validation set. The validation cost follows a generally decreasing trend and does not begin to rise noticeably after any particular number of epochs. Because the validation error does not diverge from the training error, the model does not appear to have reached the point of overfitting, which suggests that the chosen number of epochs was appropriate and that training was not excessive.

Figure 7.

Progression of the cost function results through 400 epochs of training, which indicates no overfit.
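The visual check described above can be made explicit with a simple heuristic in the spirit of early stopping. The sketch assumes that per-epoch training and validation losses are available as plain lists (for example, exported from the Weights and Biases run). The 20-epoch patience and the function names are arbitrary, hypothetical choices, and this is not how the authors assessed Figure 7.

```python
from typing import Optional, Sequence


def overfitting_onset(val_losses: Sequence[float], patience: int = 20) -> Optional[int]:
    """Return the epoch with the lowest validation loss if the loss has not improved
    for at least `patience` epochs after it (a sign of overfitting); otherwise None."""
    best_epoch = min(range(len(val_losses)), key=val_losses.__getitem__)
    epochs_without_improvement = len(val_losses) - 1 - best_epoch
    return best_epoch if epochs_without_improvement >= patience else None


def generalization_gap(train_losses: Sequence[float], val_losses: Sequence[float],
                       last: int = 10) -> float:
    """Mean (validation - training) loss over the final `last` epochs."""
    pairs = list(zip(train_losses, val_losses))[-last:]
    return sum(v - t for t, v in pairs) / len(pairs)


steadily_falling = [1.0 - 0.001 * i for i in range(400)]
print(overfitting_onset(steadily_falling))  # None: still improving at the last epoch
```

A curve such as the one in Figure 7, whose validation loss is still decreasing at the final epoch, yields `None`. A curve that bottoms out early and then rises for longer than the patience window returns the epoch at which it bottomed out.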

The stability of the cost function on both sets also offers some insight into the model's generalization ability. The absence of a rise on the validation set suggests that the model has learned features that generalize across defect classes, which supports reliable predictions in real-world applications where varied blade defects are encountered. Beyond accuracy, we also considered training efficiency: despite the larger number of defect classes, the model converged within 400 epochs. We attribute this mainly to our transfer learning and data augmentation strategies, which facilitated faster convergence with limited data. This efficiency suggests that the model could be deployed in field environments where computational resources are constrained.

Compared to existing studies, which typically identify only four defect types, our model achieves competitive performance despite the added complexity of detecting eight classes. Its mAP@0.5 of 0.76 is in line with the results reported in similar studies, as discussed in Section 5. By recognizing a broader range of defect types, the model can better support real-world maintenance and provides a more comprehensive tool for blade inspection.

Finally, a verification step was conducted in which 43 images from the validation set, containing 54 defect observations, were reviewed and labeled by a human inspector. The inspector was instructed to draw tight bounding boxes that cover the entire surface of each defect. He used the LabelImg tool for this task, following the same class numbering as the authors' original labels. The labels were then converted from YOLO format into the format expected by the mAP tool [36], obtained from GitHub, which was developed for another study on object recognition [37]. A confidence value of 1 was assigned to each label, indicating the labeler's full confidence. Running the tool yielded an mAP of 0.9895. The inspector thus achieved a considerably better result than the best model developed in this work, which was expected, since the inspector is an expert in wind turbine defects. However, the inspector needed considerably more time to achieve this result (30 min), whereas the trained model infers results in milliseconds.
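The conversion step can be sketched as follows. The paper does not specify the exact file layout that the mAP tool [36] expects. The sketch assumes a common plain-text layout with one object per line, `class_name [confidence] left top right bottom`, in pixel coordinates, and it assumes that class IDs map to names in label order. The function name, class names, and image size are hypothetical.

```python
def yolo_to_corners(line: str, img_w: int, img_h: int, names, confidence=None) -> str:
    """Convert one YOLO label line ("cls xc yc w h", normalized to 0..1) into
    "name [confidence] left top right bottom" in pixel coordinates."""
    cls, xc, yc, w, h = line.split()
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    corners = [round(xc - w / 2), round(yc - h / 2), round(xc + w / 2), round(yc + h / 2)]
    fields = [names[int(cls)]]
    if confidence is not None:
        fields.append(str(confidence))  # e.g. 1.0 for the inspector's labels
    fields += [str(v) for v in corners]
    return " ".join(fields)


# Inspector label treated as a detection with full confidence (hypothetical class name)
print(yolo_to_corners("0 0.5 0.5 0.25 0.5", 640, 480, ["erosion"], confidence=1.0))
# erosion 1.0 240 120 400 360
```

Ground-truth files would be written the same way without the confidence field, so the same helper serves both sides of the comparison.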

5. Discussion

This work produced a functional model that can help wind turbine inspectors shorten the time needed to deliver a report and improve its accuracy by acting as an additional check. Still, several improvements remain for future research. Model performance could be improved by training other CNN architectures or model configurations and comparing their detection and classification rates. The model's performance should also be evaluated in real-world scenarios or in a live environment to understand its practical applicability and limitations. It may also be worth exploring the integration of other techniques, such as reinforcement learning for active learning, or anomaly detection to uncover unseen or rare defects. Finally, fusing data from other sensors or sources (e.g., acoustic or vibrational data) into a multi-modal defect detection system could be explored.

Because the selected classes differ, direct comparison with the results of other works is complicated. The works most similar to this one are those of Zhang, Yang, and Yang [22] and Shihavuddin et al. [19], and the best model of this work is compared with their best models. The comparison metric is mAP@0.5. An overview is given in Table 5.

Table 5.

Overview of mAP@0.50 in comparison with similar research.

Both studies with which this one is compared predicted four classes, whereas this study predicts eight. Zhang, Yang, and Yang [22] used a total of 122 images, about half as many as this work. They achieved a much better result with an older version of the YOLO model, but, as the examples in their work show, their defects are also much easier to distinguish visually, and they distinguished only four defect classes. Shihavuddin et al. [19] used a dataset with 458 observations and four classes and achieved a result about 6.7% better than that of this research. The larger number of classes adds complexity to both training and classification, as the model must differentiate between a broader range of defect types, and this can naturally lower performance metrics compared with models trained on fewer, simpler classes. At the same time, the additional classes allow our model to provide more comprehensive and detailed detection, which is valuable for real-world applications. Taking this into account, we consider the result of this work successful. We also acknowledge that the model could benefit from further refinement: the complexity of detecting multiple defect types, combined with variations in defect appearance and image quality, may limit its performance.

The limitations of this paper should also be acknowledged. A primary limitation is the lack of validation graphs comparing predicted and actual defect observations. Owing to the technical characteristics of the software tools used in this research, generating such visual representations was not feasible within the scope of this study. This limits our ability to demonstrate the model's performance visually and may reduce the interpretability of the results for a broader audience. Likewise, although a verification step was conducted in which a human inspector reviewed 43 images from the validation set (containing 54 defect observations), no graphical representation of this process is included, for the same reason. Future studies could use alternative software platforms or develop custom visualization tools to generate these graphs, which would enhance transparency and provide a more comprehensive validation of the model's accuracy. Moreover, expanding the dataset to include diverse environmental and operational conditions across multiple geographic regions would help to generalize the findings and further validate the proposed approach.

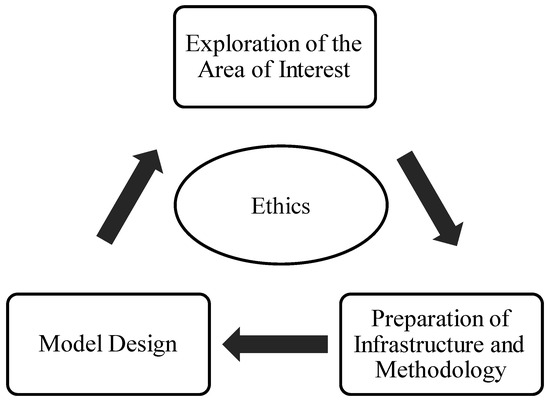

Applying this model to other domains, such as the inspection of other types of infrastructure (e.g., roofs, bridges, and power lines), is also worth considering. With such applications in mind, we propose a concept that emerged from this research. Standard modeling practice comprises the following phases: Data Collection; Data Cleaning and Transformation; Data Partitioning into training, testing, and validation sets; Modeling; Evaluation and Validation of the model; and Continuous Revalidation and Re-evaluation of the model. These phases should always be followed. Our concept adds a broader perspective on the entire process through the following steps: Exploration of the Area of Interest, Preparation of Infrastructure and Methodology, Model Design, and Ethical Considerations. It offers a holistic approach to the systematic unfolding of the modeling process, aiming at a careful and ethically sound execution. The first step, Exploration of the Area of Interest, covers market and technological research, a review of recent scholarly and professional literature, an examination of existing practical results, and an assessment of the availability of relevant data. Its purpose is to build a solid understanding of existing knowledge as an informed starting point for model development. In the next step, Preparation of Infrastructure and Methodology, the machine learning methods to be used are first chosen based on the insights from the first step, and the infrastructure needed to meet the performance demands of the modeling is then planned.
This step ensures that the infrastructure and methodology are aligned with the modeling objectives. During the Model Design step, the nature of the problem at hand must be identified and the methods selected in the previous step tailored to its requirements, after which the standard modeling process described above follows. This step yields a model that reflects the intrinsic characteristics of the problem and produces actionable insights.

In each of the outlined steps, it is crucial to consider the ethical aspect of the issues at hand and determine whether there are any ethical dilemmas in the realms of research, design, and inference (Figure 8).

Figure 8.

Methodological framework for future research.

Any ethical quandary should be adequately analyzed to understand the implications. This approach not only ensures the integrity of our work but also contributes to a broader understanding and responsible conduct in the scientific community. Through a thorough examination of ethical considerations, we aim to uphold the highest standards of ethical practice, ensuring that our work remains transparent, accountable, and, above all, beneficial to the broader societal and scientific discourse.

Ethics plays a crucial role in the proposed methodological framework for wind turbine blade defect detection using convolutional neural networks (CNNs). This ethical commitment begins with the data collection process, where privacy and confidentiality agreements are strictly adhered to and informed consent is obtained from wind turbine owners and operators. The framework ensures that the data used for model training and validation are legally and ethically acquired. Moreover, the preparation phase emphasizes the use of open-source tools and properly licensed technologies, conducting environmental impact assessments, and engaging with local stakeholders to address their concerns, thus preventing harm to the environment or communities.

During the model design phase, the framework ensures transparency and reproducibility by thoroughly documenting methodologies and making the code and data publicly available. This fosters a collaborative scientific community and helps avoid biases in defect detection. Ethical considerations extend to continuous evaluation, where the model’s performance and impact on stakeholders are regularly assessed. Feedback mechanisms and continuous improvements ensure the model remains accurate and reliable. Ethical data handling, compliance with data protection regulations, and anonymization practices are maintained throughout all phases to safeguard privacy and confidentiality.

Finally, the framework addresses potential conflicts of interest by requiring researchers to disclose any financial or personal interests. It also considers the broader environmental and social impacts of deploying the defect detection model, ensuring that the technologies used do not disrupt local wildlife or communities. The model aims to complement human inspectors rather than replace them, maintaining social responsibility. The ethical use of technology is paramount, guarding against misuse and ensuring the CNN model is used responsibly and for its intended purpose. By integrating ethics into every stage, the framework ensures the research advances scientific knowledge while upholding the highest standards of integrity and responsibility.

6. Conclusions

The global transition to renewable energy sources has led to an increase in the number of commercial wind turbines worldwide, which would not be possible without the strong support of digital technologies [38]. Consequently, the demand for inspection services for these installations is also rising, following the trend of other predictive maintenance applications [39]. Drone-based visual inspection of wind turbines, being a cost-effective and swift method of data collection, has emerged as a preferable non-destructive inspection technique. One of the primary focuses of these inspections is the turbine blades, which are among the most expensive components of wind turbines. Regular monitoring and maintenance of these blades are crucial for the economic sustainability of electricity production from wind energy, especially since the blades are susceptible to environmental impacts and operational stresses that often lead to damage.

In the visual inspection process using drones, data collection is relatively quick, but the same cannot be said for report generation. Reviewing the collected images, which is the first step in report generation, is time consuming and tedious. This challenge can be addressed by automatically identifying damage in the images. While current technology has not yet surpassed the human eye in this field, automated recognition and manual review can complement each other to produce high-quality reports in a significantly shorter time. By leveraging the latest publicly available advances in object detection, the path toward implementing such a system in a production environment for wind turbine inspection companies can be significantly shortened. This work demonstrated that, with relatively few resources and in a short period, it is possible to develop a model for the automatic detection and classification of defects that successfully identifies most of the damage. One of the key practical contributions is a model that can be trained and deployed with relatively modest computational resources. By leveraging platforms like Kaggle, which offers free access to powerful GPUs, this study demonstrates that high-quality defect detection models can be developed without significant investment in hardware. This democratizes the technology, making it accessible to a broader range of users.

A model on par with recent scientific research on this topic was developed. The primary goal of this work was to develop a model that could expedite defect recognition in wind turbine blade images and serve as an additional check for inspectors, and the results indicate that this goal has been achieved. This study underscores the potential of CNNs to outperform traditional non-destructive testing (NDT) methods, which are often labor intensive and time consuming. It also points to the potential advantage of one-stage object detection methods, such as YOLOv7, over two-stage methods in terms of real-time performance, which makes a case for their future adoption in industrial applications. Faster R-CNN is known for its high accuracy but is generally slower because of its two-stage process. YOLOv4, another one-stage detector, balances speed and accuracy, and YOLOv7 surpasses it in both, according to the benchmarks reported by its authors [18]. The results of this study indicate that YOLOv7 can achieve results very close to those obtained with the Faster R-CNN method in other research. Although real-time performance was not measured in this study, it remains a consideration for future research.

The use of transfer learning proved to be effective for the development of an automated defect detection model for wind turbine blades. Although the final model required unfreezing all layers during training, which meant transfer learning was not fully utilized in its traditional sense, the pre-trained weights significantly reduced the computational costs and training time. This approach allowed us to develop a low-cost solution that is both efficient and acceptable for practical applications. The YOLOv7-based model achieved satisfactory performance, demonstrating that transfer learning, even when partially applied, can greatly contribute to the creation of cost-effective machine learning solutions.

This study’s emphasis on the economic implications of automated defect detection, including cost reduction and increased inspection efficiency, bridges the gap between theoretical research and practical applications. This holistic approach ensures that the theoretical advancements are not only scientifically sound but also practically relevant, paving the way for their adoption in real-world scenarios. Finally, the proposed methodological framework for future research ensures that the practical contributions of this study are not isolated but are part of a broader effort to enhance the efficiency and reliability of wind turbine inspections. This framework can be adopted and adapted by other researchers, fostering a collaborative approach to solving industrial challenges.

Future work should focus on training such models on larger datasets to further improve their accuracy, with the aim of matching human inspection performance at much faster inference times. Other options include exploring other CNN architectures, integrating additional sensor data, and applying reinforcement learning techniques. The developed model is based on YOLOv7, a recent version in the YOLO family of object detection models. Because the YOLO family is optimized for real-time detection, future iterations of this architecture could potentially be integrated directly into drones. For the Matrice 300 RTK drone used in this work, the manufacturer offers a software development kit that could be used to route camera inputs to an edge computing device attached to the drone, on which the model runs its predictions. This setup could enable quick, real-time preliminary reporting to the inspection client, transforming the inspection and reporting process. These suggestions ensure that the practical contributions of this study are not static but can evolve with advances in technology.

Author Contributions

Conceptualization, M.S., M.T. and M.P.B.; methodology, M.S. and M.T.; software, M.S.; validation, M.P.B. and M.T.; formal analysis, M.S.; investigation, M.S.; resources, M.S.; data curation, M.S.; writing—original draft preparation, M.S. and M.T.; writing—review and editing, M.P.B.; visualization, M.S.; supervision, M.T.; project administration, M.S., M.T. and M.P.B.; funding acquisition, M.P.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Wind Europe. Wind Energy in Europe: 2021 Statistics and the Outlook for 2022–2026; Wind Europe: Brussels, Belgium, 2022. [Google Scholar]

- Huňady, J.; Pisár, P.; Khawaja, S.; Qureshi, F.H. The Digital Transformation of European Union Countries before and during COVID-19. Bus. Syst. Res. Int. J. Soc. Adv. Innov. Res. Econ. 2024, 15, 22–45. [Google Scholar] [CrossRef]

- Global Wind Energy Council. GWEC|Global Wind Report 2022; Global Wind Energy Council: Brussels, Belgium, 2022. [Google Scholar]

- Bladena. Wind Turbine Blades Handbook; Kirt&Thomsen: Copenhagen, Denmark, 2021; ISBN 978-87-971709-0-8. [Google Scholar]

- Shen, N.; Ding, H. Advanced Repairing of Composite Wind Turbine Blades and Advanced Manufacturing of Metal Gearbox Components. In Advanced Wind Turbine Technology; Springer International Publishing: Cham, Switzerland, 2018; pp. 219–245. [Google Scholar]

- Mishnaevsky, L.; Branner, K.; Petersen, H.; Beauson, J.; McGugan, M.; Sørensen, B. Materials for Wind Turbine Blades: An Overview. Materials 2017, 10, 1285. [Google Scholar] [CrossRef]

- Ngo, T.-D. Introduction to Composite Materials. In Composite and Nanocomposite Materials—From Knowledge to Industrial Applications; IntechOpen: London, UK, 2020. [Google Scholar]

- IEC 61400-5:2020; Wind Energy Generation Systems—Part 5: Wind Turbine Blades. International Electrotechnical Commission: Geneva, Switzerland, 2020.

- IEC 62446-3:2017; Photovoltaic (PV) Systems—Requirements for Testing, Documentation and Maintenance—Part 3: Photovoltaic Modules and Plants—Outdoor Infrared Thermography. International Electrotechnical Commission: Geneva, Switzerland, 2017.

- IEC 62446-2:2020; Photovoltaic (PV) Systems—Requirements for Testing, Documentation and Maintenance—Part 2: Grid Connected Systems—Maintenance of PV Systems. International Electrotechnical Commission: Geneva, Switzerland, 2020.

- Bladena; Vattenfall; EON; Statkraft; ENGIE; KIRT × THOMSEN. INSTRUCTION Blade Inspections. 2018. Available online: https://www.bladena.com/uploads/8/7/3/7/87379536/blade_inspections_report.pdf (accessed on 10 August 2022).

- García Márquez, F.P.; Peco Chacón, A.M. A Review of Non-Destructive Testing on Wind Turbines Blades. Renew. Energy 2020, 161, 998–1010.

- Li, D.; Ho, S.-C.M.; Song, G.; Ren, L.; Li, H. A Review of Damage Detection Methods for Wind Turbine Blades. Smart Mater. Struct. 2015, 24, 033001.

- Dong, X.; Taylor, C.J.; Cootes, T.F. Defect Detection and Classification by Training a Generic Convolutional Neural Network Encoder. IEEE Trans. Signal Process. 2020, 68, 6055–6069.

- Xu, D.; Wen, C.; Liu, J. Wind Turbine Blade Surface Inspection Based on Deep Learning and UAV-Taken Images. J. Renew. Sustain. Energy 2019, 11, 053305.

- Galappaththi, U.I.K.; De Silva, A.K.M.; Macdonald, M.; Adewale, O.R. Review of Inspection and Quality Control Techniques for Composite Wind Turbine Blades. Insight-Non-Destr. Test. Cond. Monit. 2012, 54, 82–85.

- Zhang, J.; Cosma, G.; Watkins, J. Image Enhanced Mask R-CNN: A Deep Learning Pipeline with New Evaluation Measures for Wind Turbine Blade Defect Detection and Classification. J. Imaging 2021, 7, 46.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Shihavuddin, A.; Chen, X.; Fedorov, V.; Nymark Christensen, A.; Andre Brogaard Riis, N.; Branner, K.; Bjorholm Dahl, A.; Reinhold Paulsen, R. Wind Turbine Surface Damage Detection by Deep Learning Aided Drone Inspection Analysis. Energies 2019, 12, 676.

- Reddy, A.; Indragandhi, V.; Ravi, L.; Subramaniyaswamy, V. Detection of Cracks and Damage in Wind Turbine Blades Using Artificial Intelligence-Based Image Analytics. Measurement 2019, 147, 106823.

- Patel, J.; Sharma, L.; Dhiman, H.S. Wind Turbine Blade Surface Damage Detection Based on Aerial Imagery and VGG16-RCNN Framework. 2021. Available online: http://arxiv.org/abs/2108.08636 (accessed on 25 August 2022).

- Zhang, C.; Yang, T.; Yang, J. Image Recognition of Wind Turbine Blade Defects Using Attention-Based MobileNetv1-YOLOv4 and Transfer Learning. Sensors 2022, 22, 6009.

- Andonovic, V.; Azemi, M.K.; Andonovic, B.; Dimitrov, A. Optimal Selection of Parameters for Production of Multiwall Carbon Nanotubes (MWCNTs) by Electrolysis in Molten Salts using Machine Learning. In Proceedings of the ENTRENOVA-ENTerprise REsearch InNOVAtion, Opatija, Croatia, 17–18 June 2022; Volume 8, pp. 16–23.

- De Miguel Molina, B.; Segarra Oña, M. The Drone Sector in Europe. In Ethics and Civil Drones; de Miguel Molina, M., Santamarina Campos, V., Eds.; Springer: Cham, Switzerland, 2018; pp. 7–33.

- Deng, L.; Guo, Y.; Chai, B. Defect Detection on a Wind Turbine Blade Based on Digital Image Processing. Processes 2021, 9, 1452.

- DJI Enterprise. Specs—MATRICE 300 RTK. Available online: https://enterprise.dji.com/matrice-300/specs (accessed on 9 August 2022).

- Ubuntu. Enterprise Open Source and Linux. Available online: https://ubuntu.com/ (accessed on 9 August 2022).

- Torvalds, L. Linux Kernel Source Tree. Available online: https://github.com/torvalds/linux (accessed on 10 August 2022).

- Kaggle. Kaggle: Your Home for Data Science. Available online: https://www.kaggle.com/ (accessed on 12 August 2022).

- Anonymous. Interactive Semi-Batch Image Cropper. One Click to Crop, Load Next. Available online: https://github.com/pknowles/cropall (accessed on 15 August 2022).

- Tzuta, L. LabelImg. Available online: https://github.com/heartexlabs/labelImg (accessed on 15 August 2022).

- Spajić, M.; Talajić, M.; Pejić Bach, M. Wind Turbine Defects Detection. Available online: https://github.com/MySlav/wind-turbine-defects-detection (accessed on 11 September 2022).

- Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.; Grout, J.; Corlay, S.; et al.; Jupyter Development Team. Jupyter Notebooks—A Publishing Format for Reproducible Computational Workflows. In Positioning and Power in Academic Publishing: Players, Agents and Agendas; IOS Press: Amsterdam, The Netherlands, 2016; pp. 87–90.

- Biewald, L. Experiment Tracking with Weights and Biases. 2020. Available online: https://wandb.ai/wandb_fc/articles/reports/Machine-Learning-Experiment-Tracking--Vmlldzo1NDI1Mjcy (accessed on 11 September 2022).

- Shihavuddin, A.; Chen, X. DTU—Drone Inspection Images of Wind Turbine. Mendeley Data, V2, 2018. Available online: https://data.mendeley.com/datasets/hd96prn3nc/2 (accessed on 5 August 2022).

- Cartucho, J. Mean Average Precision—This Code Evaluates the Performance of Your Neural Net for Object Recognition. Available online: https://github.com/Cartucho/mAP (accessed on 26 August 2022).

- Cartucho, J.; Ventura, R.; Veloso, M. Robust Object Recognition Through Symbiotic Deep Learning in Mobile Robots. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York, NY, USA, 2018; pp. 2336–2341.

- Pejić Bach, M.; Ivec, A.; Hrman, D. Industrial Informatics: Emerging Trends and Applications in the Era of Big Data and AI. Electronics 2023, 12, 2238.

- Pejić Bach, M.; Topalović, A.; Krstić, Ž.; Ivec, A. Predictive Maintenance in Industry 4.0 for the SMEs: A Decision Support System Case Study Using Open-Source Software. Designs 2023, 7, 98.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).