Autonomous Vehicles: Evolution of Artificial Intelligence and the Current Industry Landscape

Abstract

1. Introduction

1.1. Benefits of AI Algorithms for Autonomous Vehicles

- Safety: AI can significantly reduce accidents by reducing the role of human error, leading to safer roads.

- Traffic Flow: Platooning [4] and efficient routing can ease congestion and improve efficiency.

- Accessibility: People with physical impairments or different abilities, the elderly, and the young can gain independent mobility.

- Energy Savings: Optimized driving reduces fuel consumption and emissions.

- Productivity and Convenience: Passengers use travel time productively while delivery services become more efficient.

1.1.1. Technological Advancements

- Sharper perception and decision-making: With advanced sensors and robust machine learning, AI algorithms are becoming more adept at understanding their environment.

- Faster, more autonomous operation: Edge computing enables on-board AI processing for quicker decisions and greater independence.

- Enhanced safety and reliability: Redundant systems and rigorous fail-safe mechanisms prioritize safety above all else.

1.1.2. Education and Career Boom

- Surging demand for AI expertise: Specialized courses and degrees in autonomous vehicle technology will cater to a growing need for AI, robotics, and self-driving car professionals.

- Interdisciplinary skills will be key: Professionals with cross-functional skills bridging AI, robotics, and transportation will be highly sought after.

- New career paths in safety and ethics: Expertise in ethical considerations, safety audits, and regulatory [5] compliance will be crucial as self-driving cars become widespread.

1.1.3. Regulatory Landscape

- Standardized safety guidelines: Governments will establish common frameworks for performance and safety, building public trust and ensuring industry coherence.

- Stringent testing and validation: Autonomous systems will undergo rigorous testing before deployment to demonstrate that reliability and safety standards are met.

- Data privacy and security safeguards: Laws and regulations will address data privacy and cybersecurity concerns, protecting personal information and mitigating cyberattacks.

- Ethical and liability frameworks: Clearly defined legal frameworks will address ethical decision-making and determine liability in situations involving self-driving cars.

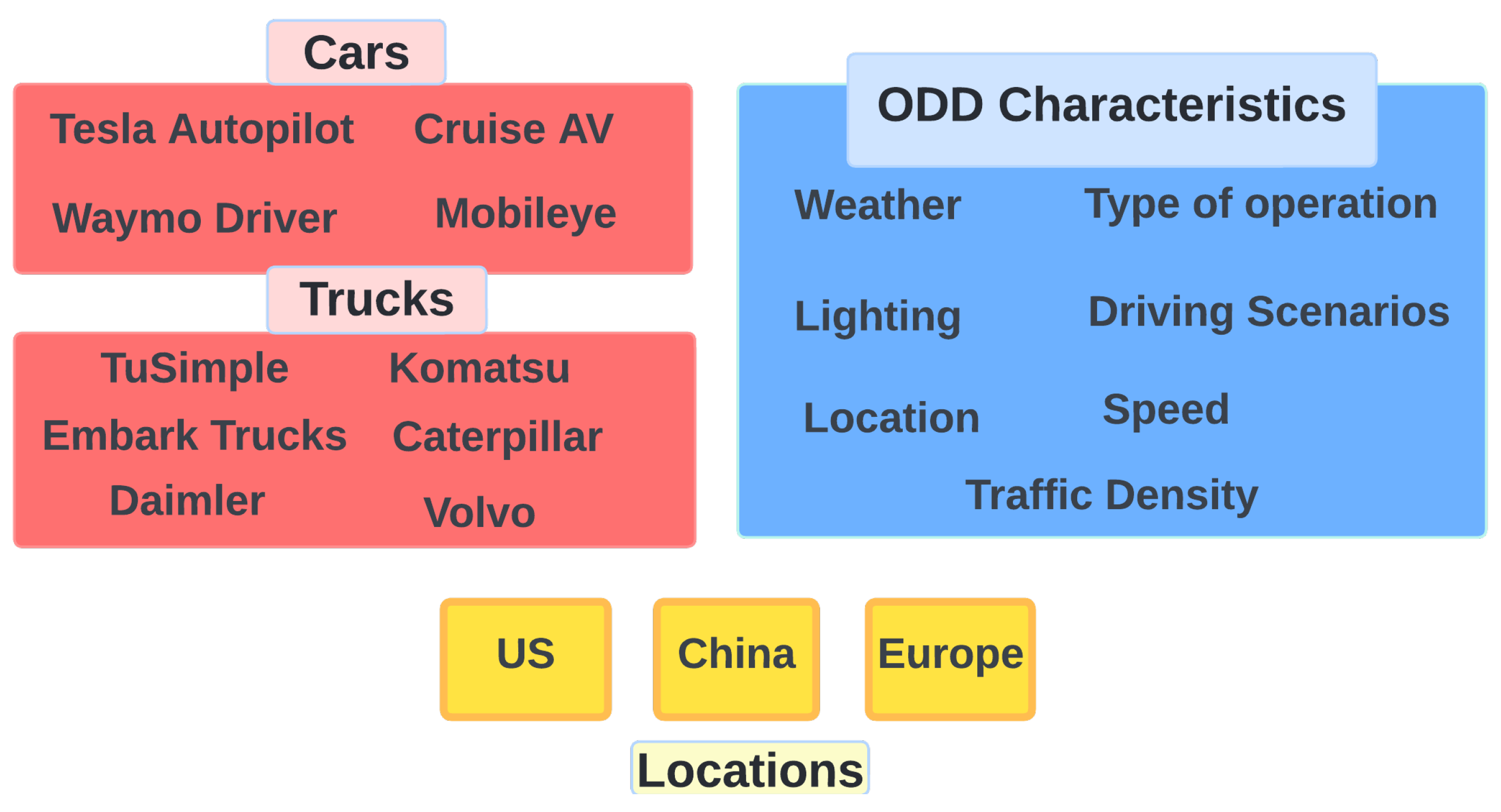

1.2. Operational Design Domains (ODDs) and Diversity—The Current Industry Landscape

- Waymo Driver: [9] Can handle a wider range of weather conditions, city streets, and highway driving, but speed limitations and geo-fencing restrictions apply.

- Tesla Autopilot: [10] Primarily for highway driving with lane markings, under driver supervision, and within specific speed ranges.

- Mobileye Cruise AV: [11] Operates in sunny and dry weather, on highways with clearly marked lanes, and at speeds below 45 mph.

- Aurora and Waymo Via: Wider range of weather conditions, including light rain/snow. Variable lighting (sunrise/sunset), multi-lane highways and rural roads with good pavement quality, daytime and nighttime operation, moderate traffic density, dynamic route planning, traffic light/stop sign recognition, intersection navigation, maneuvering in yards/warehouses, etc.

- TuSimple and Embark Trucks: [12] Sunny, dry weather, clear visibility. Temperature range −10 °C to 40 °C, limited-access highways with clearly marked lanes, daytime operation only, maximum speed of 70 mph, limited traffic density, pre-mapped routes, lane changes, highway merging/exiting, platooning with other AV trucks, etc.

- Pony.ai and Einride: Diverse weather conditions, including heavy rain/snow. Variable lighting and complex urban environments, narrow city streets, residential areas, and parking lots. Low speeds (20–30 mph), high traffic density, frequent stops and turns, geo-fenced delivery zones, pedestrian and cyclist detection/avoidance, obstacle avoidance in tight spaces, dynamic rerouting due to congestion, etc.

- Komatsu Autonomous Haul Trucks, Caterpillar MineStar Command for Haul Trucks: Harsh weather conditions (dust, heat, extreme temperatures). Limited or no network connectivity, unpaved roads, uneven terrain, steep inclines/declines, autonomous operation with remote monitoring, pre-programmed routes, high ground clearance, obstacle detection in unstructured environments, path planning around natural hazards, dust/fog mitigation, etc.

- Baidu Apollo: Highways and city streets in specific zones like Beijing and Shenzhen. Operates in the daytime and nighttime under clear weather conditions and limited traffic density. Designed for passenger transportation and robotaxis. Specific scenarios include lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation, and low-speed maneuvering in urban areas.

- WeRide: Limited-access highways and urban streets in Guangzhou and Nanjing. Operates in the daytime and nighttime under clear weather conditions. Targeted for robotaxi services and last-mile delivery. Specific scenarios include lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation, and automated pick-up and drop-off for passengers/packages.

- Bosch and Daimler [13]: Motorways and specific highways in Germany. Operates in the daytime and nighttime under good weather conditions. Focused on highway trucking applications. Specific scenarios include platooning with other AV trucks, automated lane changes and overtaking, emergency stopping procedures, and communication with traffic management systems.

- Volvo Trucks: Defined sections of Swedish highways. Operates in the daytime and nighttime under varying weather conditions. Tailored for autonomous mining and quarry operations. Specific scenarios include obstacle detection and avoidance in unstructured environments, path planning around natural hazards, pre-programmed routes with high precision, and remote monitoring and control.
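The ODD descriptions above are, in effect, sets of constraints on weather, temperature, speed, and time of day. As a minimal sketch of how such constraints could be encoded and checked, the Python below uses the temperature range, speed limit, and daytime-only restriction quoted for the highway-truck entry in the list. The class names, field names, and the `odd_violations` helper are hypothetical and chosen only for illustration; they do not represent any vendor's actual ODD specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical, simplified ODD description (field names are illustrative)."""
    name: str
    allowed_weather: frozenset
    min_temp_c: float
    max_temp_c: float
    max_speed_mph: float
    daytime_only: bool


@dataclass(frozen=True)
class Conditions:
    """Current operating conditions as reported by the vehicle (illustrative)."""
    weather: str
    temp_c: float
    speed_mph: float
    is_daytime: bool


def odd_violations(odd, now):
    """Return the reasons why `now` falls outside `odd` (empty list if inside)."""
    reasons = []
    if now.weather not in odd.allowed_weather:
        reasons.append(f"weather '{now.weather}' not supported")
    if not (odd.min_temp_c <= now.temp_c <= odd.max_temp_c):
        reasons.append(f"temperature {now.temp_c} C out of range")
    if now.speed_mph > odd.max_speed_mph:
        reasons.append(f"speed {now.speed_mph} mph above limit")
    if odd.daytime_only and not now.is_daytime:
        reasons.append("night-time operation not supported")
    return reasons


# Values taken from the highway-truck bullet above; the name is made up.
HIGHWAY_TRUCK_ODD = OperationalDesignDomain(
    name="highway-truck (illustrative)",
    allowed_weather=frozenset({"sunny", "dry"}),
    min_temp_c=-10.0,
    max_temp_c=40.0,
    max_speed_mph=70.0,
    daytime_only=True,
)

inside = Conditions(weather="sunny", temp_c=20.0, speed_mph=65.0, is_daytime=True)
outside = Conditions(weather="snow", temp_c=-15.0, speed_mph=65.0, is_daytime=False)

print(odd_violations(HIGHWAY_TRUCK_ODD, inside))
print(odd_violations(HIGHWAY_TRUCK_ODD, outside))
```

Returning the list of violated constraints, rather than a single boolean, makes it easier to log why the system would request a handover or a minimal-risk maneuver.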

1.3. Role of Connected Vehicle Technology

- Enhanced situational awareness: Real-time information exchange between connected vehicles and infrastructures provides a broader picture of the surrounding environment, including road conditions, traffic patterns, and potential hazards, which is crucial for autonomous vehicles to navigate safely and efficiently.

- Improved decision-making: Connected vehicles can leverage data from other vehicles and infrastructures to make better decisions, such as optimizing routes, avoiding congestion, and coordinating maneuvers with other vehicles, contributing to smoother and safer autonomous operation.

- Faster innovation and testing: Connected vehicle technology allows for real-time data collection and analysis of vehicle performance, enabling faster development and testing of autonomous driving algorithms, accelerating the path to safer and more reliable autonomous vehicles [15].

2. Review of Existing Research and Use Cases

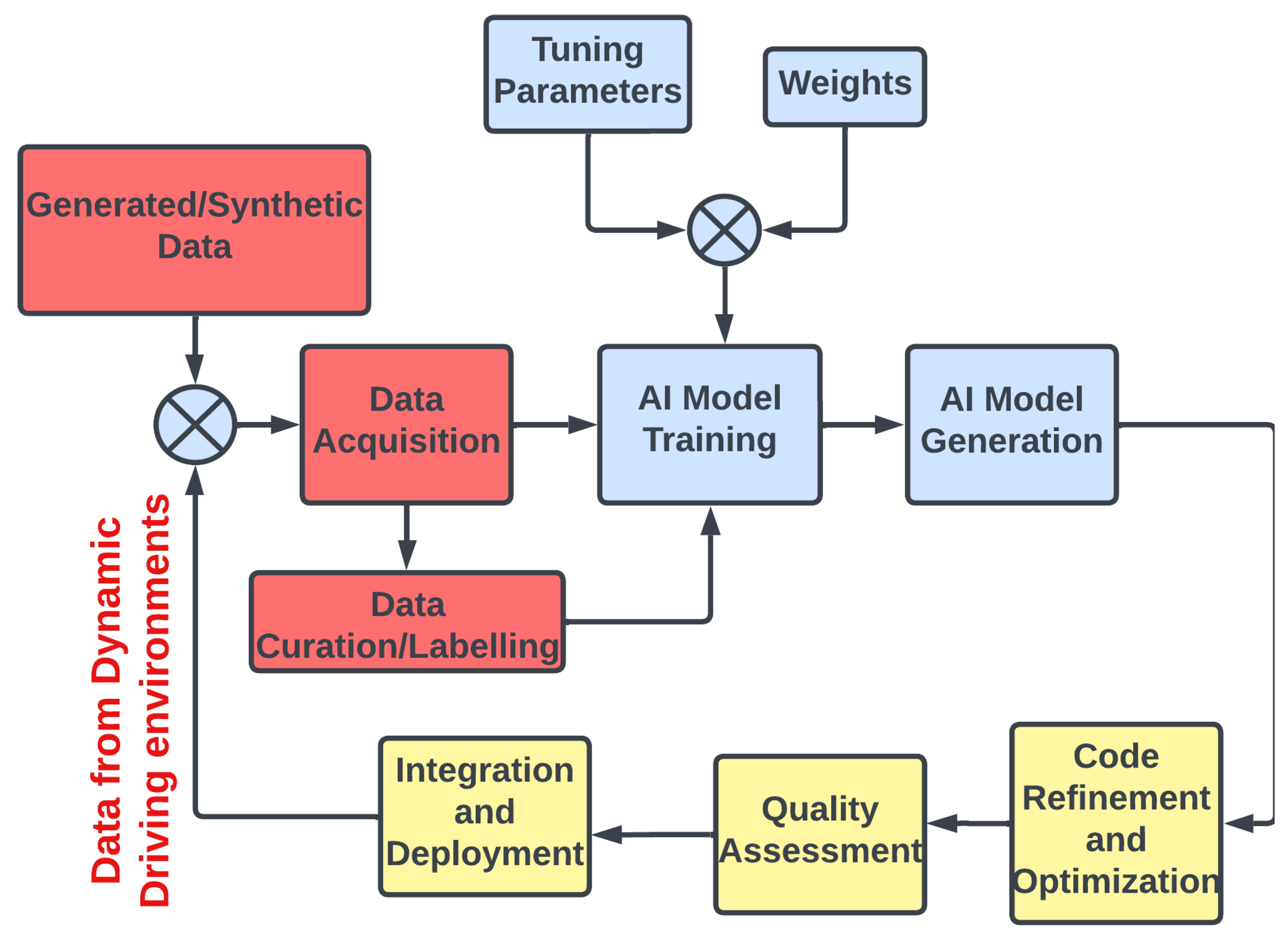

3. The AI-Powered Development Life Cycle in Autonomous Vehicles

3.1. Model Training and Deployment

3.2. Ensuring Software Quality and Security

4. Challenges in AI-Driven Software Development for Autonomous Vehicles

- Safety and Reliability: Ensuring flawless AI performance in all scenarios is paramount.

- Cybersecurity: Protecting against hacking and unauthorized access is essential.

- Regulations and Law: Clear standards for safety, insurance, and liability are needed.

- Public Trust and Acceptance: Addressing concerns about safety, data privacy, and ethical dilemmas is crucial.

- Addressing Edge Cases: Handling unforeseen scenarios is challenging because such scenarios are rare and can be hard to anticipate.

- Ethical Dilemmas: Defining AI decision-making in ambiguous situations raises moral questions.

- Safety and Reliability:

- Challenge: Sensor failures can lead to the misinterpretation of the environment.

- Mitigation: Use diverse sensors (LiDAR, cameras, radar) with redundancy and robust sensor fusion algorithms.

- Challenge: Cybersecurity vulnerabilities can be exploited for malicious control.

- Mitigation: Implement strong cybersecurity measures, penetration testing, and secure communication protocols.

- Challenge: Limited real-world testing data can lead to unforeseen scenarios.

- Mitigation: Utilize simulation environments with diverse and challenging scenarios, combined with real-world testing with safety drivers.

- Challenge: Lack of clear regulations and legal frameworks can hinder the development and deployment.

- Mitigation: Advocate for clear and adaptable regulations that prioritize safety and innovation.

- Cybersecurity:

- Challenge: Vulnerable software susceptible to hacking and manipulation.

- Mitigation: Implement secure coding practices, penetration testing, and continuous monitoring.

- Challenge: AI models can be vulnerable to adversarial attacks, posing security risks.

- Mitigation: Robust testing against adversarial scenarios, incorporating security measures, and regular updates to address emerging threats.

- Regulations and Law:

- Challenge: Lack of clear legal liability in case of accidents involving autonomous vehicles.

- Mitigation: Develop frameworks assigning responsibility to manufacturers, software developers, and operators.

- Challenge: Difficulty in adapting existing traffic laws to address the capabilities and limitations of autonomous vehicles.

- Mitigation: Establish new regulations that prioritize safety, consider ethical dilemmas, and update with technological advancements.

- Public Trust and Acceptance:

- Challenge: Public concerns regarding safety and a lack of trust in AI decision-making.

- Mitigation: Increase transparency in testing procedures, demonstrate safety through rigorous testing and data, and prioritize passenger safety in design.

- Addressing Edge Cases:

- Challenge: Rare or unexpected scenarios that confuse the AI’s perception.

- Mitigation: Utilize diverse and comprehensive testing data, including simulations of edge cases, and develop robust algorithms that can handle the unexpected. Furthermore, feed new scenarios observed in field data back into testing as a continuous feedback loop, as shown in Figure 3.

- Ethical Dilemmas [26]:

- Data Bias:

- Challenge: AI models learn from historical data, and if the training data are biased [27], the model can perpetuate and amplify existing biases.

- Mitigation: Rigorous data pre-processing, diversity in training data, and continuous monitoring for bias are essential. Ethical data collection practices must be upheld.

- Algorithmic Bias:

- Challenge: Algorithms may inadvertently encode biases present in the training data, leading to discriminatory outcomes.

- Mitigation [28]: Regular audits of algorithms for bias, transparency in algorithmic decision-making, and the incorporation of fairness metrics during model evaluation.

- Fairness and Accountability:

- Challenge: Ensuring fair outcomes [29] and establishing accountability for AI decisions is complex, especially when models are opaque.

- Mitigation: Implementing explainable AI (XAI) techniques, defining clear decision boundaries, and establishing accountability frameworks for AI-generated decisions.

- Inclusivity and Accessibility:

- Challenge: Biases in AI can result in excluding certain demographics, reinforcing digital divides.

- Mitigation: Prioritizing diversity in development teams, actively seeking user feedback, and conducting accessibility assessments to ensure inclusivity.

- Social Impact:

- Challenge: The deployment of biased AI systems can have negative social implications [30], affecting marginalized communities disproportionately.

- Mitigation: Conducting thorough impact assessments, involving diverse stakeholders in the development process, and considering societal consequences during AI development.

- Ethical Frameworks and Guidelines [31]:

- Challenge: The absence of standardized ethical frameworks can lead to inconsistent practices in AI development.

- Mitigation: Adhering to established ethical guidelines, such as those provided by organizations like the ISO, IEEE, SAE, government regulatory boards, etc., and actively participating in the development of industry-wide standards.

- Explainability and Transparency:

- Challenge: Many AI models operate as “black boxes”, making it challenging to understand how decisions are reached. AI safety is another challenge that needs to be addressed in safety-critical applications like autonomous vehicles.

- Mitigation: Prioritizing explainability [32] in AI models, using interpretable algorithms, and providing clear documentation on model behavior.

- User Privacy [33]:

- Challenge: AI systems often process vast amounts of personal data, raising concerns about user privacy.

- Mitigation: Implementing privacy-preserving techniques, obtaining informed consent, and adhering to data protection regulations (e.g., GDPR [34]) to safeguard user privacy.

- Continuous Monitoring and Adaptation:

- Challenge: AI models may encounter new biases or ethical challenges as they operate in dynamic environments.

- Mitigation: Establishing mechanisms for ongoing monitoring, feedback loops, and model adaptation to address evolving ethical considerations.
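The sensor-redundancy mitigation listed under Safety and Reliability (diverse sensors combined through sensor fusion) can be illustrated with a small example. The sketch below assumes that each sensor reports an independent range estimate with a known variance and combines them by inverse-variance weighting, one simple fusion rule among many; production systems typically use Kalman or particle filters. The function name, sensor names, and numeric values are hypothetical.

```python
def fuse_range_estimates(readings):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    `readings` maps a sensor name to a (value, variance) tuple, or to None
    when that sensor has failed and should be ignored.
    """
    valid = [r for r in readings.values() if r is not None]
    if not valid:
        raise ValueError("no valid sensor readings")
    weights = [1.0 / variance for _, variance in valid]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, valid)) / total
    fused_variance = 1.0 / total
    return fused, fused_variance


# Hypothetical distance-to-obstacle estimates in metres: (value, variance).
all_sensors = {"lidar": (25.0, 0.01), "radar": (25.4, 0.04), "camera": (27.0, 1.0)}
lidar_failed = {"lidar": None, "radar": (25.4, 0.04), "camera": (27.0, 1.0)}

print(fuse_range_estimates(all_sensors))
print(fuse_range_estimates(lidar_failed))
```

With all three sensors available the fused estimate stays close to the low-variance LiDAR value; when the LiDAR reading is missing, the estimate degrades gracefully toward the radar value instead of failing outright, which is the point of redundancy.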

5. AI’s Role in the Emerging Trend of Internet of Things (IoT) Ecosystem for Autonomous Vehicles

- Real-time data processing and analysis for insights into traffic, road conditions, and vehicle health.

- Predictive analytics for proactive maintenance, efficient resource allocation, and informed decision-making.

- Enhanced automation for autonomous driving tasks, adaptive cruise control [37], and dynamic route optimization.

- Efficient resource management for optimizing energy consumption, bandwidth usage, and load balancing.

- Security and anomaly detection for identifying potential threats and preventing cyberattacks [38].

- Personalized user experience through customized settings, preferences, and tailored insights.

- Edge computing for real-time decision-making, reducing latency and improving responsiveness.
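As a minimal sketch of the anomaly-detection idea in the list above, the following Python flags a telemetry sample when it deviates from the mean of a trailing window by more than a chosen number of standard deviations. The signal (coolant temperature), window length, and threshold are assumptions made for illustration; real vehicle-health or intrusion-detection systems would use richer models.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` samples."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(samples[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


# Hypothetical coolant temperature readings in degrees Celsius.
coolant_temp_c = [90.1, 90.3, 89.9, 90.2, 90.0, 90.1, 104.5, 90.2]
print(find_anomalies(coolant_temp_c))
```

Because the check only needs a short history buffer, it is cheap enough to run on-board, which fits the edge-computing point made in the same list.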

Enhancing User Experience

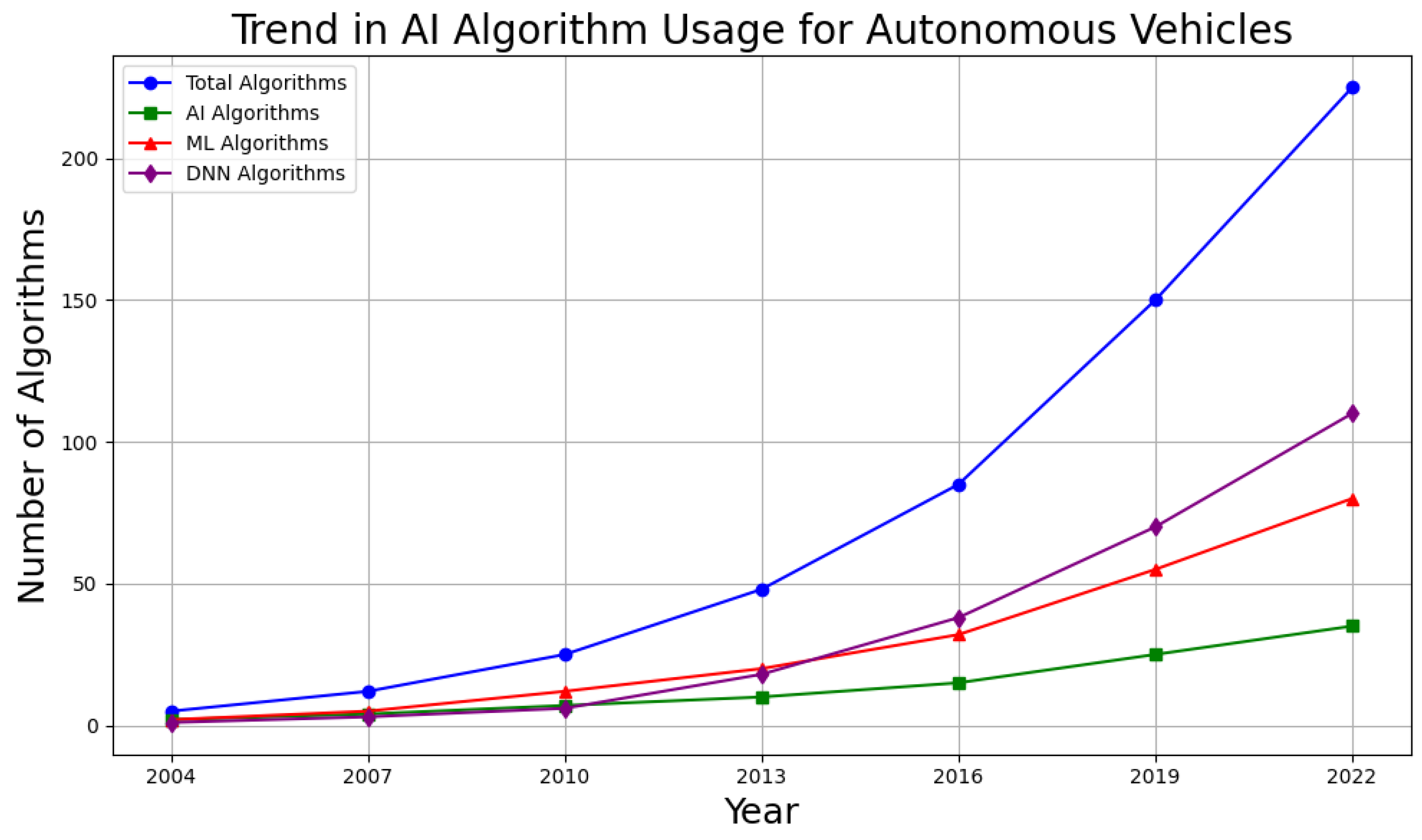

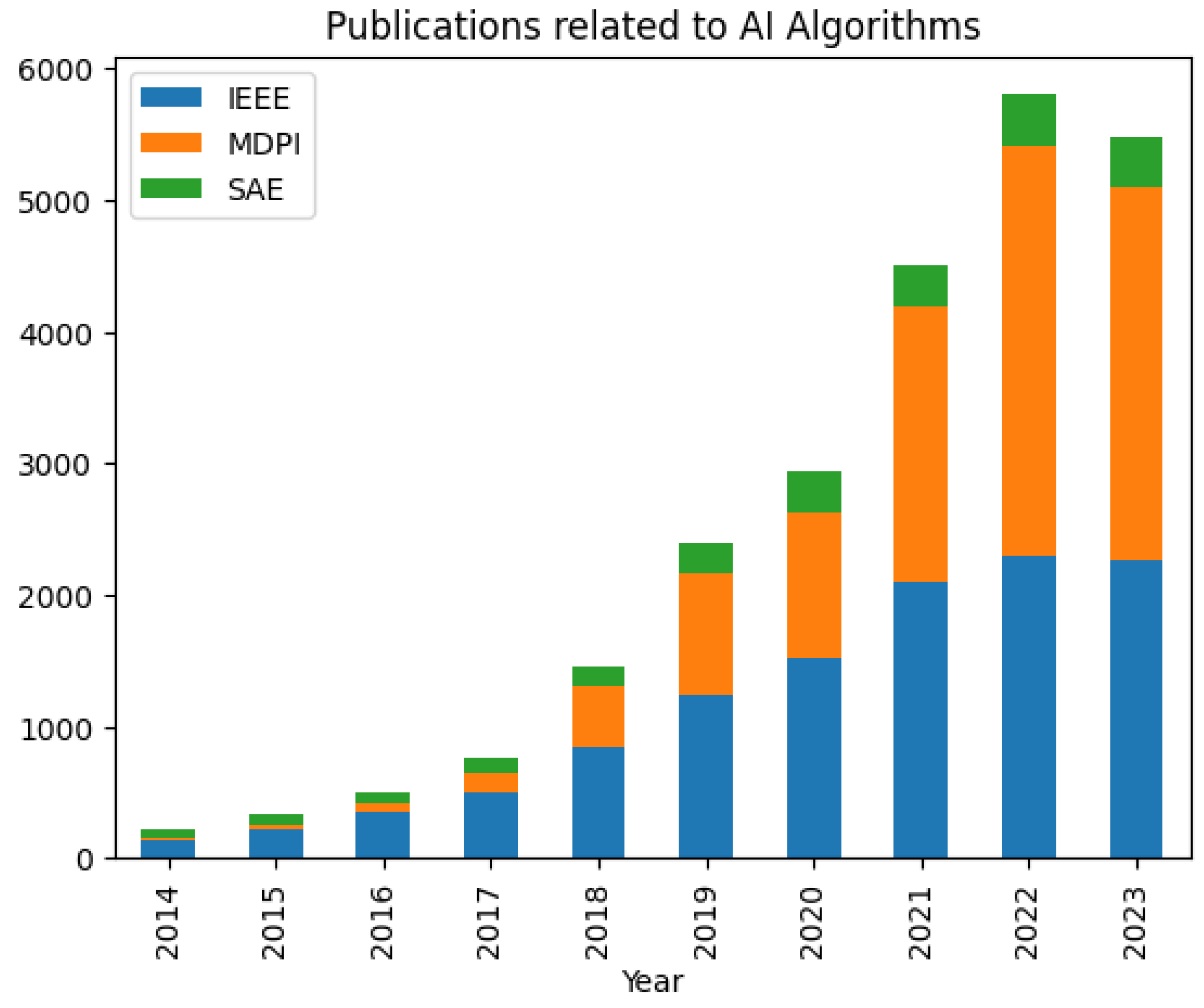

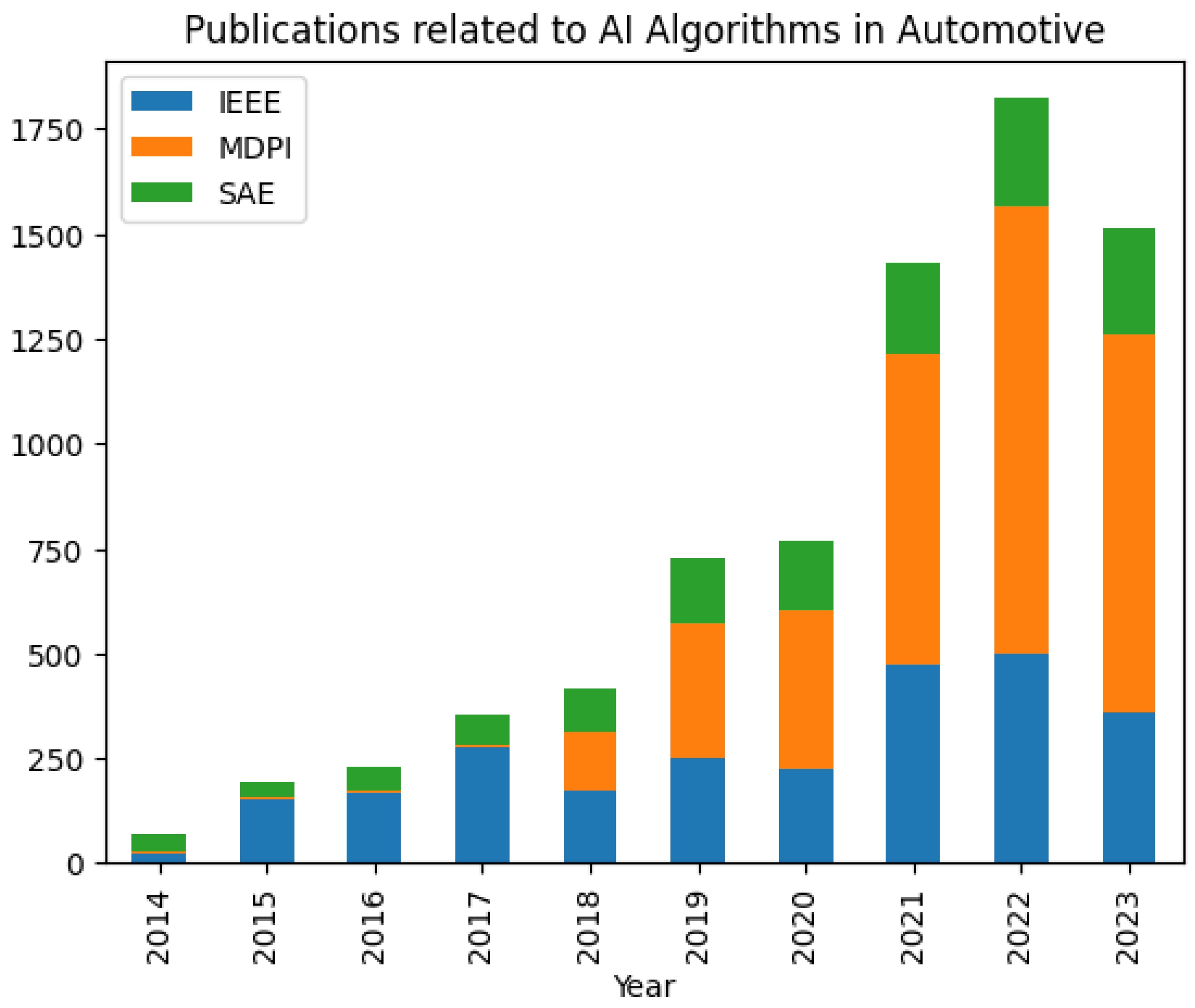

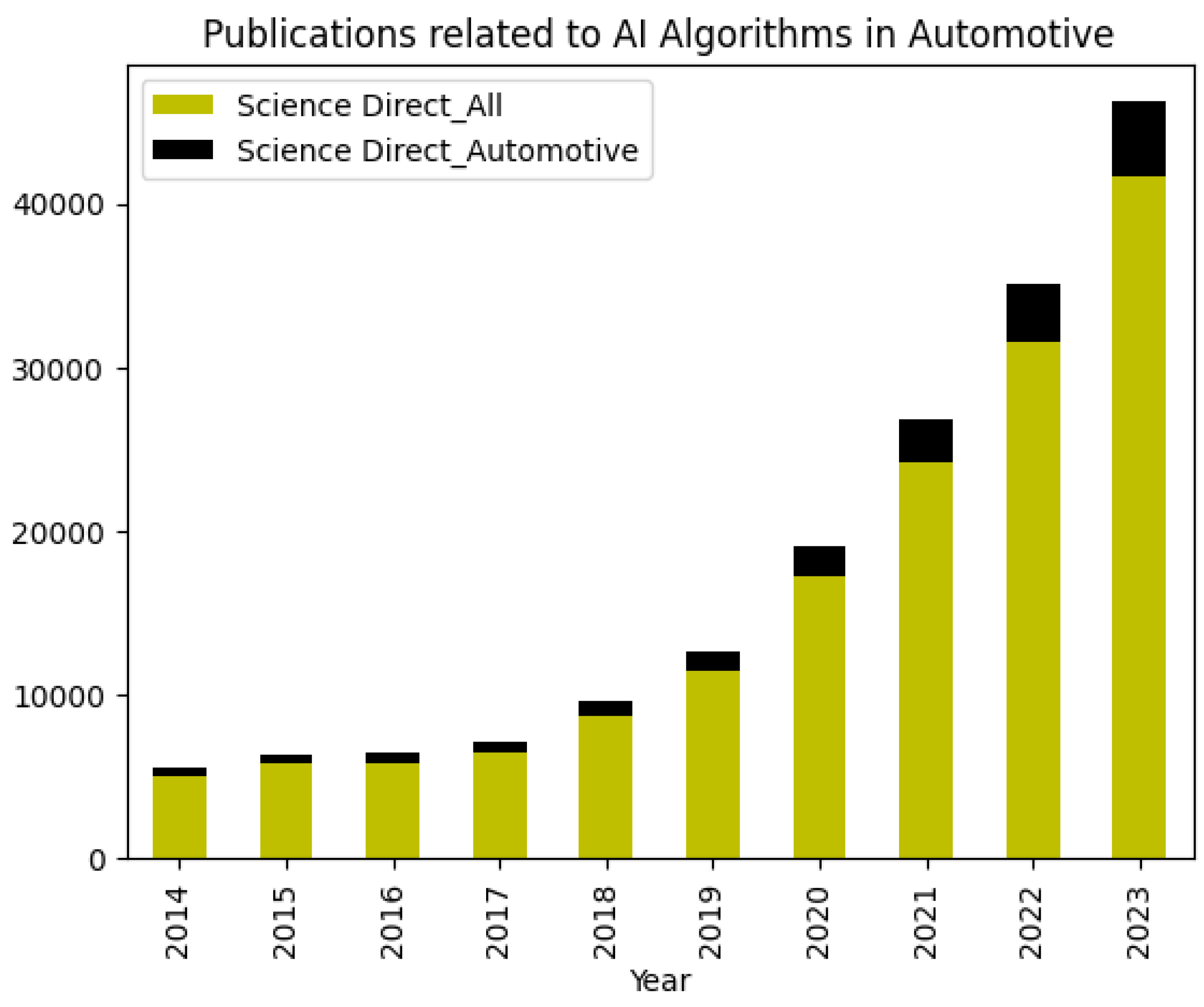

6. AI Algorithms’ Statistics for Autonomous Vehicles

- Evolution of different types of AI algorithms over the years,

- Research trends in the application of AI in all fields vs. autonomous vehicles,

- Creation of a parameter set crucial for autonomous trucks versus cars,

- Evolution of AI algorithms at different autonomy levels, and

- Changes in the types of algorithms, software package size, etc., over time.

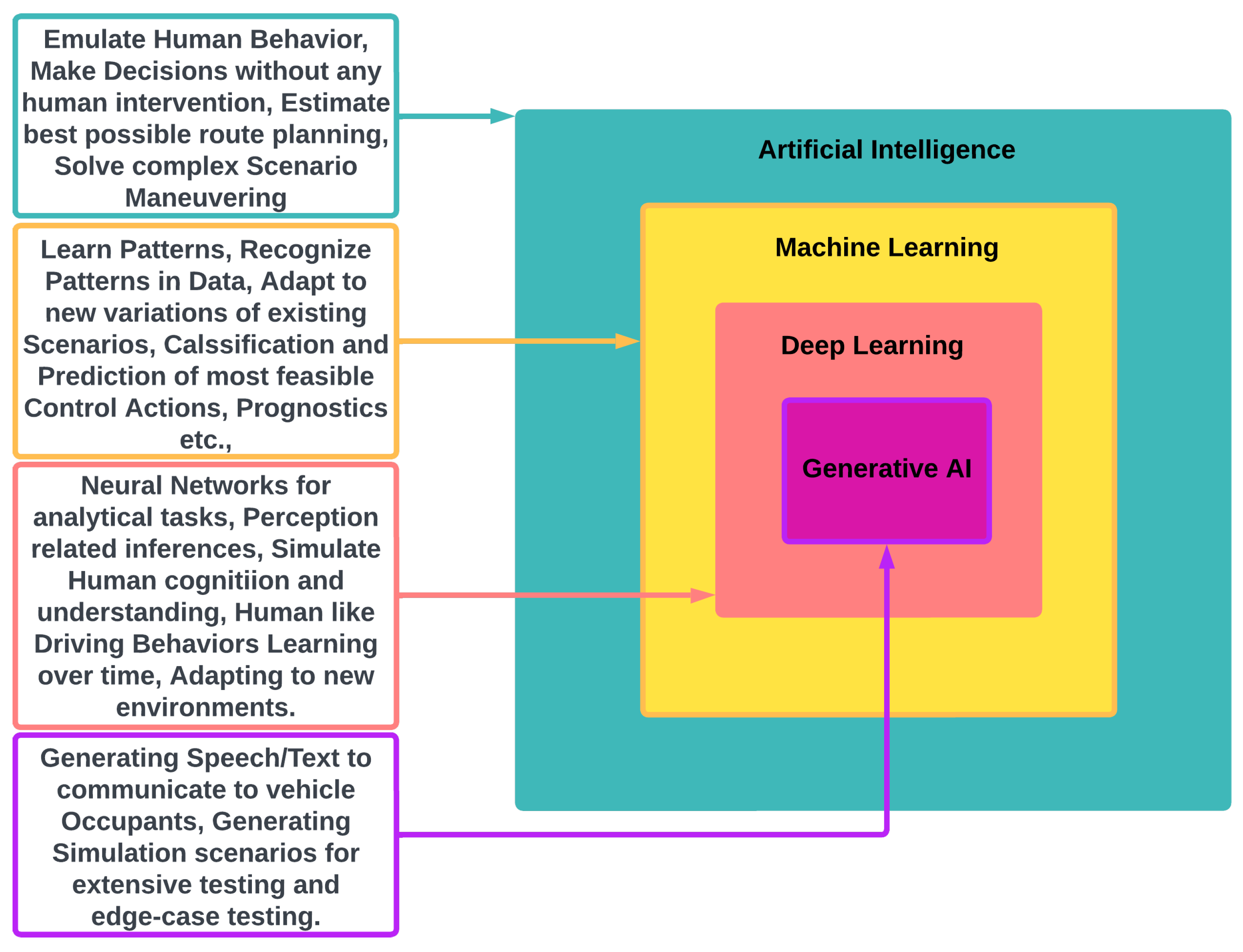

6.1. Stat1: Trends of Usage of AI Algorithms over the Years

- AI (Artificial Intelligence)

- Expert Systems: Rule-based systems that mimic human expertise in decision-making [44].

- Decision Trees: Hierarchical structures for classification and prediction. Prognostics is a good example application.

- Search Algorithms: Methods for finding optimal paths or solutions, such as A* search and path planning algorithms.

- Generative AI: Used to create scenarios for training the system and to balance data between high-severity accident and non-accident cases (e.g., the CRSS dataset). It can generate datasets of scenarios that do not exist in recorded data, supplement real datasets, and support simulation testing.

- NLP: In-vehicle AI assistants (Yui, Concierge, Hey Mercedes, etc.) based on LLMs.

- ML (Machine Learning)

- Supervised Learning: Algorithms that learn from labeled data to make predictions, such as the following:

- Linear Regression: For predicting continuous values.

- Support Vector Machines (SVMs): For classification and outlier detection.

- Decision Trees: For classification and rule generation.

- Random Forests: Ensembles of decision trees for improved accuracy.

- Unsupervised Learning: Algorithms that find patterns in unlabeled data, such as the following:

- Clustering Algorithms (K-means, Hierarchical): For grouping similar data points.

- Dimensionality Reduction (PCA, t-SNE): For reducing data complexity.

- DNN (Deep Neural Networks)

- Convolutional Neural Networks (CNNs): For image and video processing, used for object detection, lane segmentation, and traffic sign recognition.

- Recurrent Neural Networks (RNNs): For sequential data processing, used for trajectory prediction and behavior modeling.

- Deep Reinforcement Learning (DRL): For learning through trial and error, used for control optimization and decision-making.

- Specific Examples in Autonomous Vehicles

- Lane Detection (DNN): CNNs are used to identify lane markings and road boundaries.

- Path Planning (AI): Search algorithms like A* and RRT are used to plan safe and efficient routes.

- Behavior Prediction (ML): SVMs or RNNs are used to anticipate the behavior of other vehicles and pedestrians.
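To make the path-planning entry above concrete, here is a minimal sketch of A* search on a small occupancy grid. It assumes a 4-connected grid with unit step cost and uses the Manhattan distance as the heuristic; the grid layout and the function name are illustrative only, and real planners work on far richer road and vehicle models.

```python
import heapq


def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) and 1 (blocked) cells.

    Returns the path as a list of (row, col) cells, or None if unreachable.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates on a unit-cost 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]  # (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = cell
                    heapq.heappush(
                        open_heap,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


# Hypothetical map: the middle row is blocked except for one gap.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
print(path)
```

The heuristic is what distinguishes A* from uninformed search: it steers expansion toward the goal while, being admissible, still guaranteeing a shortest path.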

6.2. Stat2: Parameters for AI Models (Trucks vs. Cars)

6.3. Stat3: Usage of AI Algorithms at Various Levels of Autonomy

- Status: Kodiak currently operates a fleet of Level 4 autonomous trucks for commercial freight hauling on behalf of shippers.

- Recent Developments:

- Kodiak is focusing on scaling [59] its autonomous trucking service through a model in which it provides the driving system to existing carriers.

- The company recently secured additional funding to expand its operations and partnerships.

- No immediate news about the deployment of driverless trucks beyond current operations.

- Status: Waymo remains focused on Level 4 autonomous vehicle technology, primarily targeting robotaxi services in specific geographies [67].

- Recent Developments:

- Waymo is expanding its robotaxi service in Phoenix, Arizona, with plans to eventually launch fully driverless operations.

- The company’s Waymo Via trucking division continues testing autonomous trucks in California and Texas.

- No publicly announced timeline for nationwide deployment of driverless trucks.

- Overall:

- Both Kodiak and Waymo are making progress towards commercializing Level 4 autonomous vehicles but are primarily focused on different segments (trucks vs. passenger cars).

- Driverless truck deployment timelines remain flexible and dependent on regulatory approvals and further testing as discussed previously.

- Key AI Components across Levels

- Perception:

- L0–L2: Basic object detection and lane segmentation using CNNs.

- L3–L4: LiDAR-based object detection, advanced sensor fusion algorithms for robust object recognition.

- L5: 3D object mapping, robust sensor fusion, and interpretation.

- Decision-Making:

- L0–L2: Rule-based algorithms for lane change assistance, adaptive cruise control.

- L3–L4: Probabilistic roadmap planning (PRM), decision-making models for route selection.

- L5: Deep reinforcement learning for adaptive behavior prediction, high-level route planning.

- Control:

- Limited Storage and Processing Power: The existing onboard storage and processing capabilities might not yet be sufficient for larger Level 4 and 5 software packages [63].

- Download and Update Challenges: Updating these larger packages may require longer download times and potentially disrupt vehicle operation.

- Security Concerns: The more complex the software, the higher the potential vulnerability to cyberattacks, necessitating robust security measures.

- Increased processing power and memory: This translates to higher hardware costs and potentially bulkier systems.

- Slower download and installation times: This can be frustrating for users, especially in areas with limited internet connectivity.

- Security concerns: Larger packages offer more attack vectors for potential hackers.

- Drive Orin platform: Designed for high-performance AV applications, Orin features a scalable architecture that can handle large software packages.

- Software optimization techniques: Nvidia uses various techniques like code compression and hardware-specific optimizations to reduce software size without sacrificing performance.

- Cloud-based solutions: Offloading some processing and data storage to the cloud can reduce the size of the onboard software package [42].

- Snapdragon Ride platform: Similar to Orin, Snapdragon Ride is a scalable platform built for the efficient processing of large AV software packages.

- Heterogeneous computing: Qualcomm utilizes different processing units like CPUs (Central Processing Units), GPUs (Graphics Processing Units), and NPUs (Neural Processing Units) to optimize performance and reduce software size by distributing tasks efficiently.

- Modular software architecture: Breaking down the software into smaller, modular components allows for easier updates and reduces the overall package size [2].

- Standardization: Industry-wide standards for AV software can help reduce duplication and fragmentation, leading to smaller package sizes.

- Compression algorithms: Advanced compression algorithms can significantly reduce the size of data and code without compromising functionality.

- Machine learning: Using machine learning to optimize software performance and resource utilization can help reduce the overall software footprint.
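The modular-architecture point above implies that an update only needs to ship the modules that actually changed. The sketch below illustrates this under a simple assumption: each module is identified by a SHA-256 content hash, and the vehicle compares its installed manifest against the release manifest. The module names, payloads, and the `modules_to_update` helper are hypothetical and do not describe any vendor's update mechanism.

```python
import hashlib


def digest(payload: bytes) -> str:
    """Content hash used to identify a module build."""
    return hashlib.sha256(payload).hexdigest()


def modules_to_update(installed, release):
    """Return the names of modules that are new or whose hash has changed."""
    return sorted(
        name for name, module_hash in release.items()
        if installed.get(name) != module_hash
    )


# Hypothetical manifests mapping module name to content hash.
installed = {
    "perception": digest(b"perception-v1"),
    "planning": digest(b"planning-v1"),
    "control": digest(b"control-v1"),
}
release = {
    "perception": digest(b"perception-v1"),  # unchanged
    "planning": digest(b"planning-v2"),      # changed
    "control": digest(b"control-v1"),        # unchanged
    "hmi": digest(b"hmi-v1"),                # newly added
}

print(modules_to_update(installed, release))
```

Only the changed and new modules would need to be downloaded, which addresses the download-time concern raised earlier in the list without touching unchanged components.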

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bordoloi, U.; Chakraborty, S.; Jochim, M.; Joshi, P.; Raghuraman, A.; Ramesh, S. Autonomy-driven Emerging Directions in Software-defined Vehicles. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 17–19 April 2023; pp. 1–6.

- Liu, Z.; Zhang, W.; Zhao, F. Impact, challenges and prospect of software-defined vehicles. Automot. Innov. 2022, 5, 180–194.

- Nadikattu, R.R. New ways in artificial intelligence. Int. J. Comput. Trends Technol. 2019, 67.

- Deng, Z.; Yang, K.; Shen, W.; Shi, Y. Cooperative Platoon Formation of Connected and Autonomous Vehicles: Toward Efficient Merging Coordination at Unsignalized Intersections. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5625–5639.

- Pugliese, A.; Regondi, S.; Marini, R. Machine Learning-based approach: Global trends, research directions, and regulatory standpoints. Data Sci. Manag. 2021, 4, 19–29.

- SAE Industry Technologies Consortia’s Automated Vehicle Safety Consortium AVSC Best Practice for Describing an Operational Design Domain: Conceptual Framework and Lexicon, AVSC00002202004, Revised April, 2020. Available online: https://www.sae.org/standards/content/avsc00002202004/ (accessed on 28 January 2024).

- Khastgir, S.; Khastgir, S.; Vreeswijk, J.; Shladover, S.; Kulmala, R.; Alkim, T.; Wijbenga, A.; Maerivoet, S.; Kotilainen, I.; Kawashima, K.; et al. Distributed ODD Awareness for Connected and Automated Driving. Transp. Res. Procedia 2023, 72, 3118–3125.

- Jack, W.; Jon, B. Navigating Tomorrow: Advancements and Road Ahead in AI for Autonomous Vehicles; (No. 11955); EasyChair: Manchester, UK, 2024.

- Lillo, L.D.; Gode, T.; Zhou, X.; Atzei, M.; Chen, R.; Victor, T. Comparative safety performance of autonomous-and human drivers: A real-world case study of the waymo one service. arXiv 2023, arXiv:2309.01206.

- Nordhoff, S.; Lee, J.D.; Hagenzieker, M.; Happee, R. (Mis-) use of standard Autopilot and Full Self-Driving (FSD) Beta: Results from interviews with users of Tesla’s FSD Beta. Front. Psychol. 2023, 14, 1101520.

- Wansley, M.T. Regulating Driving Automation Safety. Emory Law J. 2024, 73, 505.

- Anton, K.; Oleg, K. MBSE and Safety Lifecycle of AI-enabled systems in transportation. Int. J. Open Inf. Technol. 2023, 11, 100–104.

- Kang, H.; Lee, Y.; Jeong, H.; Park, G.; Yun, I. Applying the operational design domain concept to vehicles equipped with advanced driver assistance systems for enhanced safety. J. Adv. Transp. 2023, 2023, 4640069.

- Arthurs, P.; Gillam, L.; Krause, P.; Wang, N.; Halder, K.; Mouzakitis, A. A Taxonomy and Survey of Edge Cloud Computing for Intelligent Transportation Systems and Connected Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 6206–6221.

- Murphey, Y.L.; Kolmanovsky, I.; Watta, P. (Eds.) AI-Enabled Technologies for Autonomous and Connected Vehicles; Springer: Berlin/Heidelberg, Germany, 2022.

- Vishnukumar, H.; Butting, B.; Müller, C.; Sax, E. Machine Learning and deep neural network—Artificial intelligence core for lab and real-world test and validation for ADAS and autonomous vehicles: AI for efficient and quality test and validation. In Proceedings of the 2017 Intelligent Systems Conference (IntelliSys), London, UK, 7–8 September 2017; pp. 714–721.

- Bachute, M.R.; Subhedar, J.M. Autonomous driving architectures: Insights of machine Learning and deep Learning algorithms. Mach. Learn. Appl. 2021, 6, 00164.

- Ma, Y.; Wang, Z.; Yang, H.; Yang, L. Artificial intelligence applications in the development of autonomous vehicles: A survey. IEEE/CAA J. Autom. Sin. 2020, 7, 315–329.

- Bendiab, G.; Hameurlaine, A.; Germanos, G.; Kolokotronis, N.; Shiaeles, S. Autonomous Vehicles Security: Challenges and Solutions Using Blockchain and Artificial Intelligence. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3614–3637.

- Chu, M.; Zong, K.; Shu, X.; Gong, J.; Lu, Z.; Guo, K.; Dai, X.; Zhou, G. Work with AI and Work for AI: Autonomous Vehicle Safety Drivers’ Lived Experiences. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–16.

- Hwang, M.H.; Lee, G.S.; Kim, E.; Kim, H.W.; Yoon, S.; Talluri, T.; Cha, H.R. Regenerative braking control strategy based on AI algorithm to improve driving comfort of autonomous vehicles. Appl. Sci. 2023, 13, 946.

- Grigorescu, S.; Trasnea, B.; Cocias, C.; Macesanu, G. A Survey of Deep Learning Techniques for Autonomous Driving. arXiv 2020, arXiv:1910.07738v2.

- Le, H.; Wang, Y.; Deepak, A.; Savarese, S.; Hoi, S.C.-H. Coderl: Mastering code generation through pretrained models and deep reinforcement Learning. Adv. Neural Inf. Process. Syst. 2022, 35, 21314–21328.

- Allamanis, M. Learning Natural Coding Conventions. In Proceedings of the SIGSOFT/FSE’14: 22nd ACM SIGSOFT Symposium on the Foundations of Software Engineering, Hong Kong, China, 16–21 November 2014; pp. 281–293.

- Sharma, O.; Sahoo, N.C.; Puhan, N.B. Recent advances in motion and behavior planning techniques for software architecture of autonomous vehicles: A state-of-the-art survey. Eng. Appl. Artif. Intell. 2021, 101, 104211.

- Yoo, D.H. The Ethics of Artificial Intelligence From an Economics Perspective: Logical, Theoretical, and Legal Discussions in Autonomous Vehicle Dilemma. Ph.D. Thesis, Università degli Studi di Siena, Siena, Italy, 2023.

- Khan, S.M.; Salek, M.S.; Harris, V.; Comert, G.; Morris, E.; Chowdhury, M. Autonomous Vehicles for All? J. Auton. Transp. Syst. 2023, 1, 3.

- Mensah, G.B. Artificial Intelligence and Ethics: A Comprehensive Review of Bias Mitigation, Transparency, and Accountability in AI Systems. 2023. Available online: https://www.researchgate.net/profile/George-Benneh-Mensah-2/publication/375744287_Artificial_Intelligence_and_Ethics_A_Comprehensive_Reviews_of_Bias_Mitigation_Transparency_and_Accountability_in_AI_Systems/links/656c8e46b86a1d521b2e2a16/Artificial-Intelligence-and-Ethics-A-Comprehensive-Reviews-of-Bias-Mitigation-Transparency-and-Accountability-in-AI-Systems.pdf (accessed on 26 January 2024).

- Stine, A.A.K.; Kavak, H. Bias, fairness, and assurance in AI: Overview and synthesis. In AI Assurance; Academic Press: Cambridge, MA, USA, 2023; pp. 125–151.

- Wang, C.; Liu, S.; Yang, H.; Guo, J.; Wu, Y.; Liu, J. Ethical considerations of using ChatGPT in health care. J. Med. Internet Res. 2023, 25, e48009.

- Prem, E. From ethical AI frameworks to tools: A review of approaches. AI Ethics 2023, 3, 699–716.

- Hase, P.; Bansal, M. Evaluating explainable AI: Which algorithmic explanations help users predict model behavior? arXiv 2020, arXiv:2005.01831.

- Kumar, A. Exploring Ethical Considerations in AI-driven Autonomous Vehicles: Balancing Safety and Privacy. J. Artif. Intell. Gen. Sci. (Jaigs) 2024, 2, 125–138.

- Vogel, M.; Bruckmeier, T.; Cerbo, F.D. General Data Protection Regulation (GDPR) Infrastructure for Microservices and Programming Model. U.S. Patent 10839099, 17 November 2020.

- Mökander, J.; Floridi, L. Operationalising AI governance through ethics-based auditing: An industry case study. AI Ethics 2023, 3, 451–468.

- Biswas, A.; Wang, H.-C. Autonomous Vehicles Enabled by the Integration of IoT, Edge Intelligence, 5G, and Blockchain. Sensors 2023, 23, 1963.

- Wang, S.; Lyu, B.; Wen, S.; Shi, K.; Zhu, S.; Huang, T. Robust adaptive safety-critical control for unknown systems with finite-time element-wise parameter estimation. IEEE Trans. Syst. Man Cybern. Syst. 2023, 53, 1607–1617.

- Madala, R.; Vijayakumar, N.; Verma, S.; Chandvekar, S.D.; Singh, D.P. Automated AI research on cyber attack prediction and security design. In Proceedings of the 2023 6th International Conference on Contemporary Computing and Informatics (IC3I), Gautam Buddha Nagar, India, 14–16 September 2023; pp. 1391–1395.

- Nishant, R.; Kennedy, M.; Corbett, J. Artificial intelligence for sustainability: Challenges, opportunities, and a research agenda. Int. J. Inf. Manag. 2020, 53, 102104.

- Yu, J.; Zhou, X.; Liu, X.; Liu, J.; Xie, Z.; Zhao, K. Detecting multi-type self-admitted technical debt with generative adversarial network-based neural networks. Inf. Softw. Technol. 2023, 158, 107190.

- Zhuhadar, L.P.; Lytras, M.D. The Application of AutoML Techniques in Diabetes Diagnosis: Current Approaches, Performance, and Future Directions. Sustainability 2023, 15, 13484.

- Marie, L. NVIDIA Enters Production with DRIVE Orin, Announces BYD and Lucid Group as New EV Customers, Unveils Next-Gen DRIVE Hyperion AV Platform. Published by Nvidia Newsroom, Press Release, 2022. Available online: https://nvidianews.nvidia.com/news/nvidia-enters-production-with-drive-orin-announces-byd-and-lucid-group-as-new-ev-customers-unveils-next-gen-drive-hyperion-av-platform (accessed on 26 January 2024).

- Tharakram, K. Snapdragon Ride SDK: A Premium Platform for Developing Customizable ADAS Applications. Published by Qualcomm OnQ Blog. 2022. Available online: https://www.qualcomm.com/news/onq/2022/01/snapdragon-ride-sdk-premium-solution-developing-customizable-adas-and-autonomous (accessed on 26 January 2024).

- Garikapati, D.; Liu, Y. Dynamic Control Limits Application Strategy For Safety-Critical Autonomy Features. In Proceedings of the IEEE 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 8–12 October 2022; pp. 695–702.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Kumar, A.; Srivastava, S. Object detection system based on convolution neural networks using single shot multi-box detector. Procedia Comput. Sci. 2020, 171, 2610–2617.

- Madridano, A.; Al-Kaff, A.; Martín, D.; Escalera, A.D.L. Trajectory planning for multi-robot systems: Methods and applications. Expert Syst. Appl. 2021, 173, 114660.

- Guo, F.; Wang, S.; Yue, B.; Wang, J. A deformable configuration planning framework for a parallel wheel-legged robot equipped with lidar. Sensors 2020, 20, 5614.

- Borase, R.P.; Maghade, D.K.; Sondkar, S.Y.; Pawar, S.N. A review of PID control, tuning methods and applications. Int. J. Dyn. Control 2021, 9, 818–827.

- Tuli, S.; Mirhakimi, F.; Pallewatta, S.; Zawad, S.; Casale, G.; Javadi, B.; Yan, F.; Buyya, R.; Jennings, N.R. AI augmented Edge and Fog computing: Trends and challenges. J. Netw. Comput. Appl. 2023, 216, 103648.

- Elkhediri, S.; Benfradj, A.; Thaljaoui, A.; Moulahi, T.; Zeadally, S. Integration of Artificial Intelligence (AI) with sensor networks: Trends and future research opportunities. J. King Saud Univ. Comput. Inf. Sci. 2023, 36, 101892.

- Institute of Electrical and Electronics Engineers (IEEE) Xplore. AI/ML Publications (2014–2023). Available online: https://ieeexplore.ieee.org/Xplore/home.jsp (accessed on 28 January 2024).

- Multidisciplinary Digital Publishing Institute (MDPI). AI/ML Publications (2014–2023). Available online: https://www.mdpi.com/ (accessed on 28 January 2024).

- Society of Automotive Engineers (SAE) International. AI/ML Publications (2014–2023). Available online: https://www.sae.org/publications (accessed on 28 January 2024).

- ScienceDirect. AI/ML Publications (2014–2023). Available online: https://www.sciencedirect.com/ (accessed on 28 January 2024).

- Fritschy, C.; Spinler, S. The impact of autonomous trucks on business models in the automotive and logistics industry—A Delphi-based scenario study. Technol. Forecast. Soc. Chang. 2019, 148, 119736.

- Engholm, A.; Björkman, A.; Joelsson, Y.; Kristoffersson, I.; Pernestål, A. The emerging technological innovation system of driverless trucks. Transp. Res. Procedia 2020, 49, 145–159.

- Parekh, D.; Poddar, N.; Rajpurkar, A.; Chahal, M.; Kumar, N.; Joshi, G.P.; Cho, W. A Review on Autonomous Vehicles: Progress, Methods and Challenges. Electronics 2022, 11, 2162.

- Kong, D.; Sun, L.; Li, J.L.; Xu, Y. Modeling cars and trucks in the heterogeneous traffic based on car–truck combination effect using cellular automata. Phys. A Stat. Mech. Appl. 2021, 562, 125329.

- Zeb, A.; Khattak, K.S.; Ullah, M.R.; Khan, Z.H.; Gulliver, T.A. HetroTraffSim: A macroscopic heterogeneous traffic flow simulator for road bottlenecks. Future Transp. 2023, 3, 368–383.

- Qian, Z.S.; Li, J.; Li, X.; Zhang, M.; Wang, H. Modeling heterogeneous traffic flow: A pragmatic approach. Transp. Res. Part B Methodol. 2017, 99, 183–204.

- Andersson, P.; Ivehammar, P. Benefits and costs of autonomous trucks and cars. J. Transp. Technol. 2019, 9, 121–145.

- Lee, S.; Cho, K.; Park, H.; Cho, D. Cost-Effectiveness of Introducing Autonomous Trucks: From the Perspective of the Total Cost of Operation in Logistics. Appl. Sci. 2023, 13, 10467.

- SAE International. J3016_202104: Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles. Revised on April 2021. Available online: https://www.sae.org/standards/content/j3016_202104/ (accessed on 28 January 2024).

- Chen, D.; Lin, Y.; Li, W.; Li, P.; Zhou, J.; Sun, X. Measuring and relieving the over-smoothing problem for graph neural networks from the topological view. Proc. AAAI Conf. Artif. Intell. 2020, 34, 3438–3445.

- Bojarski, M.; Del Testa, D.; Dworakowski, D.; Firner, B.; Flepp, B.; Goyal, P.; Jackel, L.D.; Monfort, M.; Muller, U.; Zhang, J.; et al. End to End Learning for Self-Driving Cars. arXiv 2016, arXiv:1604.07316.

- Zhou, H.; Zhou, A.L.J.; Wang, Y.; Wu, W.; Qing, Z.; Peeta, S. Review of learning-based longitudinal motion planning for autonomous vehicles: Research gaps between self-driving and traffic congestion. Transp. Res. Rec. 2022, 2676, 324–341.

- Norouzi, A.; Heidarifar, H.; Borhan, H.; Shahbakhti, M.; Koch, C.R. Integrating machine learning and model predictive control for automotive applications: A review and future directions. Eng. Appl. Artif. Intell. 2023, 120, 105878.

- Kummetha, V.C.; Kondyli, A.; Schrock, S.D. Analysis of the effects of adaptive cruise control on driver behavior and awareness using a driving simulator. J. Transp. Saf. Secur. 2020, 12, 587–610.

- Koopman, P.; Wagner, M. Challenges in Autonomous Vehicle Testing and Validation. SAE Int. J. Transp. Saf. 2016, 4, 15–24.

- Bosich, D.; Chiandone, M.; Sulligoi, G.; Tavagnutti, A.A.; Vicenzutti, A. High-Performance Megawatt-Scale MVDC Zonal Electrical Distribution System Based on Power Electronics Open System Interfaces. IEEE Trans. Transp. Electrif. 2023, 9, 4541–4551.

- Neural Information Processing Systems (NeurIPS). AI/ML Publications (2014–2023). Available online: https://nips.cc/ (accessed on 28 January 2024).

| Vehicle Company | Country | Environment | Operational Conditions | Driving Scenarios |
|---|---|---|---|---|
| Waymo Driver | United States | Sunny, light rain/snow | Moderate traffic density | Lane changes, highway merging/exiting, multi-lane highways, rural roads (good pavement), daytime/nighttime, dynamic route planning |
| Tesla Autopilot | United States | Clear weather | Limited traffic density, specific speed ranges | Lane markings (driver supervision required) |
| Mobileye Cruise AV | United States | Sunny, dry weather | Limited traffic density, below 45 mph | Highways with clearly marked lanes |
| Aurora and Waymo Via | United States | Light rain/snow, variable lighting | Moderate traffic density | Multi-lane highways, rural roads (good pavement), daytime, nighttime, dynamic route planning, traffic light/stop sign recognition, intersection navigation |
| TuSimple and Embark Trucks | United States | Sunny, dry weather, clear visibility | Limited traffic density, pre-mapped routes, daytime only, max speed 70 mph | Limited-access highways with clear lanes, lane changes, highway merging/exiting, platooning |
| Pony.ai and Einride | China | Diverse weather (heavy rain/snow) | High traffic density, frequent stops/turns | Narrow city streets, residential areas, parking lots, low speeds (20–30 mph), geo-fenced delivery zones, pedestrian/cyclist detection |
| Komatsu and Caterpillar | Various (depending on deployment) | Harsh weather (dust, heat, extreme temperatures) | Limited/no network connectivity, uneven terrain, steep inclines/declines | Unpaved roads, autonomous operation with remote monitoring, pre-programmed routes, obstacle detection (unstructured environments), path planning around natural hazards |
| Baidu Apollo | China | Clear weather, limited traffic density | Daytime, nighttime | Highways and city streets (specific zones), lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation (low-speed maneuvering) |
| WeRide | China | Clear weather | Daytime, nighttime | Limited-access highways and urban streets, lane changes, highway merging/exiting, traffic light/stop sign recognition, intersection navigation, automated pick-up/drop-off |
| Bosch and Daimler | Germany | Good weather | Daytime, nighttime | Motorways and specific highways, platooning with other AV trucks, automated lane changes/overtaking, emergency stopping procedures, communication with traffic management systems |
| Volvo Trucks | Sweden | Varying weather | Daytime, nighttime | Defined sections of highways, obstacle detection (unstructured environments), path planning around natural hazards, pre-programmed routes (high precision), remote monitoring/control (autonomous mining/quarry) |
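
Each row above effectively describes an operational design domain (ODD): the weather, traffic, and speed conditions under which a system is expected to operate. As a minimal sketch, assuming a heavily simplified encoding, one row can be represented as a small data structure and checked against the current conditions. The class names, field names, and weather labels below are hypothetical and chosen only for illustration; the example values (sunny and dry weather, daytime only, 70 mph maximum) are taken from the TuSimple and Embark Trucks row.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingDomain:
    """Hypothetical, simplified encoding of one row of the table above."""
    allowed_weather: frozenset
    max_speed_mph: float
    daytime_only: bool


@dataclass(frozen=True)
class Conditions:
    """Hypothetical snapshot of the current driving conditions."""
    weather: str
    speed_mph: float
    is_daytime: bool


def within_domain(odd: OperatingDomain, now: Conditions) -> bool:
    """Return True only if every condition falls inside the domain."""
    if now.weather not in odd.allowed_weather:
        return False
    if now.speed_mph > odd.max_speed_mph:
        return False
    if odd.daytime_only and not now.is_daytime:
        return False
    return True


# Values from the TuSimple and Embark Trucks row: sunny, dry weather;
# daytime only; maximum speed 70 mph. The weather labels are assumptions.
HIGHWAY_TRUCK_ODD = OperatingDomain(
    allowed_weather=frozenset({"sunny", "dry"}),
    max_speed_mph=70.0,
    daytime_only=True,
)

if __name__ == "__main__":
    print(within_domain(HIGHWAY_TRUCK_ODD, Conditions("sunny", 65.0, True)))
    print(within_domain(HIGHWAY_TRUCK_ODD, Conditions("sunny", 65.0, False)))
```

A production ODD monitor would need far richer inputs (traffic density, map coverage, sensor health); the sketch only shows how the tabulated limits could gate autonomous operation.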

| Technology | Developed over the Years | Future |
|---|---|---|
| Environmental perception | DL for object detection, YOLOv3, K-means clustering | Very challenging. Needs more research to better detect objects in blurry, extreme, and rare conditions in real time. |
| Pedestrian detection | PVANET and RCNN model for object detection in blurry weather | OrientNet, RPN, and PredictorNet to solve occlusion problem. |
| Path planning | DL algorithm based on CNN | Multisensor fusion system, along with an INS, a GNSS, and a LiDAR system, would be used to implement a 3D SLAM. |
| Vehicles’ cybersecurity | Security testing and TARA | Remote control of AV deploying IoT sensors. |
| Motion planning | Hidden Markov model Q-Learning algorithm | Grey prediction model utilizing an advanced model predictive control for effective lane change. |

| Parameter | Sub-Class | Trucks | Cars |
|---|---|---|---|
| Environment | Traffic Density | Operate on highways with predictable traffic patterns | Encounter diverse, often congested, urban environments |
| | Road Infrastructure | Navigate primarily on well-maintained highways | Deal with varied road conditions and potentially unmarked streets |
| | Weather Conditions | May prioritize stability and visibility for cargo safety | May prioritize maneuverability for passenger comfort |
| Vehicle characteristics | Size and Weight | Larger size and weight present different sensor ranges and dynamic response complexities | Smaller size and weight in comparison to trucks |
| | Cargo Handling and Safety | Require AI to manage cargo weight distribution and potential shifting of cargo | This is not a concern for cars |
| | Fuel Efficiency and Emissions | Truck AI prioritizes efficient fuel consumption due to long-distance travel | Car AI may prioritize smoother acceleration and deceleration for passenger comfort |
| Operational considerations | Route Planning and Optimization | Require long-distance route planning with considerations for infrastructural limitations, rest stops, and cargo delivery schedules | Generally focus on shorter, dynamic routes with real-time traffic updates |
| | Communication and Connectivity | May rely on dedicated infrastructure for communication (platooning, V2X) | Primarily use existing cellular networks |
| | Legal and Regulatory Landscape | Regulations regarding automation and liability are tight | Regulations impacting AI and deployment are different than those of trucks |
| AI algorithm and hardware needs | Perception and Sensor Fusion | May prioritize radar and LiDAR for long-range detection | Benefit from high-resolution cameras for near-field obstacle avoidance |
| | Decision-Making and Planning | AI focuses on safe, fuel-efficient navigation and traffic flow optimization | AI prioritizes dynamic route adjustments, pedestrian/cyclist detection, and passenger comfort |
| | Redundancy and Safety Protocols | May have stricter fail-safe measures due to cargo risks | Have safety protocols with redundant systems |
| Additional factors | Public Perception and Acceptance | Public trust in truck automation might be slower to build due to size and potential cargo risks | Public trust in car automation is higher due to fewer risks |
| | Economic and Business Models | Automation models may involve fleet management and logistics optimizations | Automation may focus on ride-sharing and individual ownership |

| Level of Autonomy | % of Systems Using AI Algorithms | Algorithm Types | Key AI Algorithms | Key Tasks Automated | No. of AI Algorithms | SW Package Size |
|---|---|---|---|---|---|---|
| L0 (No Automation) | 0% | N/A | N/A | N/A | 0 | A few MB |
| L1 (Driver Assistance) | 50–70% | Rule-based and Decision Trees | Adaptive Cruise Control (ACC), Lane Departure Warning (LDW), Automatic Emergency Braking (AEB) | Sensing, basic alerts and interventions | 3–5 | 100s of MB |
| L2 (Partial Automation) | 80–90% | Rule-based, Decision Trees, Reinforcement Learning (RL), and Support Vector Machines (SVM) | Traffic Sign Recognition, Highway Autopilot (ACC + lane centering), Traffic Jam Assist | Navigation, lane control, stop-and-go, limited environmental adaptation | 5–10 | 100s of MB to a few GB |
| L3 (Conditional Automation) | 90–95% | Deep Learning (DL), Stochastic, Gaussian | Urban Autopilot, Valet Parking | Full control under specific conditions, dynamic environment adaptation, complex decision-making | 10–15 | A few GB to 10s of GB |
| L4 (High Automation) | 95–99% | Advanced DL (e.g., Generative Adversarial Networks, Transformer Models, etc.), Multi-agent RL, Sensor Fusion | City Navigation, Highway Chauffeur | Full control in specific environments, high-level navigation, complex traffic scenarios | 15–20 | 10s of GB to 100s of GB |
| L5 (Full Automation) | 100% | Hybrid Algorithms (combining various types), Explainable AI (XAI) for Advanced DL, Multi-agent RL | Universal Autonomy | Full control in all environments, self-learning and adaptation, human-like decision-making | 20+ | 100s of GB to TBs |
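
For readers who want to work with these figures programmatically, the numeric columns of the table above can be captured in a small lookup structure. The following is only an illustrative sketch: the `LevelSummary` record, the `LEVELS` mapping, and the `describe` helper are hypothetical names, and the values are copied from the "% of Systems Using AI Algorithms" and "No. of AI Algorithms" columns.

```python
from typing import NamedTuple, Optional, Tuple


class LevelSummary(NamedTuple):
    """Hypothetical record holding the numeric columns of one table row."""
    name: str
    ai_share_percent: Tuple[int, int]            # (low, high), inclusive
    algorithm_count: Tuple[int, Optional[int]]   # high is None for "20+"


# Values copied from the table above; the keys are the automation levels.
LEVELS = {
    0: LevelSummary("No Automation", (0, 0), (0, 0)),
    1: LevelSummary("Driver Assistance", (50, 70), (3, 5)),
    2: LevelSummary("Partial Automation", (80, 90), (5, 10)),
    3: LevelSummary("Conditional Automation", (90, 95), (10, 15)),
    4: LevelSummary("High Automation", (95, 99), (15, 20)),
    5: LevelSummary("Full Automation", (100, 100), (20, None)),
}


def describe(level: int) -> str:
    """Format one row as a short, human-readable summary."""
    row = LEVELS[level]
    low, high = row.ai_share_percent
    share = f"{low}%" if low == high else f"{low}-{high}%"
    n_low, n_high = row.algorithm_count
    if n_high is None:
        count = f"{n_low}+"
    elif n_low == n_high:
        count = str(n_low)
    else:
        count = f"{n_low}-{n_high}"
    return f"L{level} ({row.name}): {share} of systems use AI, {count} algorithms"


if __name__ == "__main__":
    for lvl in sorted(LEVELS):
        print(describe(lvl))
```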

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Garikapati, D.; Shetiya, S.S. Autonomous Vehicles: Evolution of Artificial Intelligence and the Current Industry Landscape. Big Data Cogn. Comput. 2024, 8, 42. https://doi.org/10.3390/bdcc8040042