Numerical Investigation of a Class of Nonlinear Time-Dependent Delay PDEs Based on Gaussian Process Regression

Abstract

1. Introduction

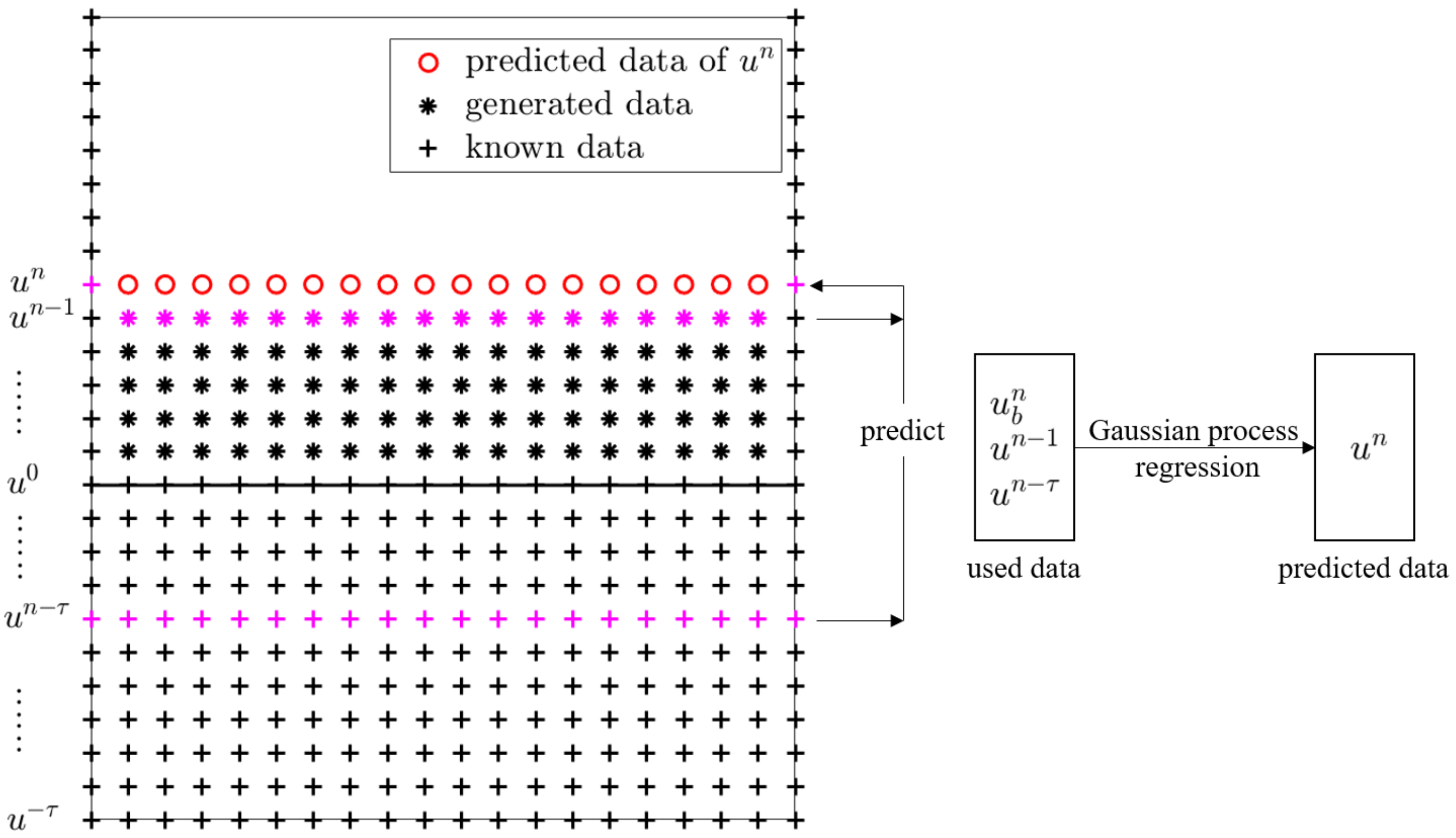

2. Multi-Priors Numerical Gaussian Processes

2.1. Prior

2.2. Training

2.3. Posterior

2.4. Propagating Uncertainty

2.5. Basic Workflow

1. First, we obtain the initial data, the delay-term data, and the inhomogeneous-term data by randomly selecting points in the spatial domain, and the boundary data by randomly selecting points on its boundary. We then train the hyper-parameters in (7) on these data.

2. With the hyper-parameters from step 1, we use (8) to predict the solution at the first time step and obtain artificially generated data, consisting of randomly sampled points and the corresponding random vector. We again obtain the delay-term data and the inhomogeneous-term data by randomly selecting points in the spatial domain, and the boundary data by randomly selecting points on the boundary.

3. From the data obtained in step 2, we train the hyper-parameters for the second time step.

4. With the hyper-parameters from step 3, we use (10) to predict the solution at the second time step and obtain artificially generated data at randomly sampled points. We also obtain the delay-term data, the inhomogeneous-term data, and the boundary data as in step 2.

5. Steps 3 and 4 are repeated until the last time step.
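The train, predict, and resample loop described in the steps above can be sketched in code. The following is a minimal illustration under stated assumptions, not the scheme of this paper: it uses the heat equation u_t = u_xx on [0, 1] with homogeneous Dirichlet data and no delay or inhomogeneous term, a backward Euler step, a single squared-exponential prior, and a coarse grid search in place of a gradient-based optimizer. All function names (`rbf_parts`, `joint_cov`, `nlml`, `train`, `predict`, `run_workflow`) are hypothetical, and Equations (7), (8), and (10) are not reproduced.

```python
import numpy as np

def rbf_parts(xa, xb, s2, ell):
    """RBF kernel k(r) with its 2nd and 4th derivatives in r = xa - xb."""
    r = xa[:, None] - xb[None, :]
    k = s2 * np.exp(-0.5 * r**2 / ell**2)
    k2 = k * (r**2 / ell**4 - 1.0 / ell**2)
    k4 = k * (r**4 / ell**8 - 6.0 * r**2 / ell**6 + 3.0 / ell**4)
    return k, k2, k4

def joint_cov(hyp, xb, xp, cov_p, dt, jitter=1e-5):
    """Covariance of [u^n(xb), u^{n-1}(xp)], with u^{n-1} = u^n - dt * u^n_xx."""
    kbb = rbf_parts(xb, xb, *hyp)[0]
    kbp, kbp2, _ = rbf_parts(xb, xp, *hyp)
    kpp, kpp2, kpp4 = rbf_parts(xp, xp, *hyp)
    cross = kbp - dt * kbp2
    # cov_p carries the uncertainty of the previous step's artificial data.
    prev = kpp - 2.0 * dt * kpp2 + dt**2 * kpp4 + cov_p
    K = np.block([[kbb, cross], [cross.T, prev]])
    return K + jitter * np.eye(K.shape[0])

def nlml(hyp, xb, ub, xp, up, cov_p, dt):
    """Negative log marginal likelihood (up to a constant); inf if not PD."""
    try:
        L = np.linalg.cholesky(joint_cov(hyp, xb, xp, cov_p, dt))
    except np.linalg.LinAlgError:
        return np.inf
    a = np.linalg.solve(L, np.concatenate([ub, up]))
    return 0.5 * a @ a + np.log(np.diag(L)).sum()

def train(xb, ub, xp, up, cov_p, dt):
    """Choose (s2, ell) from a coarse grid by minimising the NLML (assumption)."""
    grid = [(s2, ell) for s2 in (0.1, 1.0, 10.0) for ell in np.logspace(-0.7, 0.3, 9)]
    return min(grid, key=lambda h: nlml(h, xb, ub, xp, up, cov_p, dt))

def predict(hyp, xb, ub, xp, up, cov_p, dt, xs):
    """Posterior mean and covariance of u^n at the new points xs."""
    K = joint_cov(hyp, xb, xp, cov_p, dt)
    ksp, ksp2, _ = rbf_parts(xs, xp, *hyp)
    q = np.hstack([rbf_parts(xs, xb, *hyp)[0], ksp - dt * ksp2])
    sol = np.linalg.solve(K, np.column_stack([np.concatenate([ub, up]), q.T]))
    mean = q @ sol[:, 0]
    cov = rbf_parts(xs, xs, *hyp)[0] - q @ sol[:, 1:]
    return mean, 0.5 * (cov + cov.T)

def run_workflow(n_steps=5, n_pts=20, dt=0.02, seed=0):
    rng = np.random.default_rng(seed)
    xb, ub = np.array([0.0, 1.0]), np.zeros(2)        # boundary data
    xp = rng.uniform(0.0, 1.0, n_pts)                 # random initial points
    up, cov_p = np.sin(np.pi * xp), np.zeros((n_pts, n_pts))
    for _ in range(n_steps):
        hyp = train(xb, ub, xp, up, cov_p, dt)        # steps 1 and 3
        xs = rng.uniform(0.0, 1.0, n_pts)             # fresh random points
        up, cov_p = predict(hyp, xb, ub, xp, up, cov_p, dt, xs)  # steps 2 and 4
        xp = xs                                       # artificial data for next step
    return xp, up, cov_p
```

Calling `run_workflow()` returns the sample points, the predicted mean, and the predictive covariance at the last time step. For this toy problem the backward Euler solution is known in closed form, `(1 + dt * pi**2) ** (-n_steps) * sin(pi * x)`, which gives a simple check on the sketch. Passing the full predictive covariance into the next step, rather than only the mean, is what lets the uncertainty of the artificially generated data propagate through the loop.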

2.6. Processing of Fractional-Order Operators

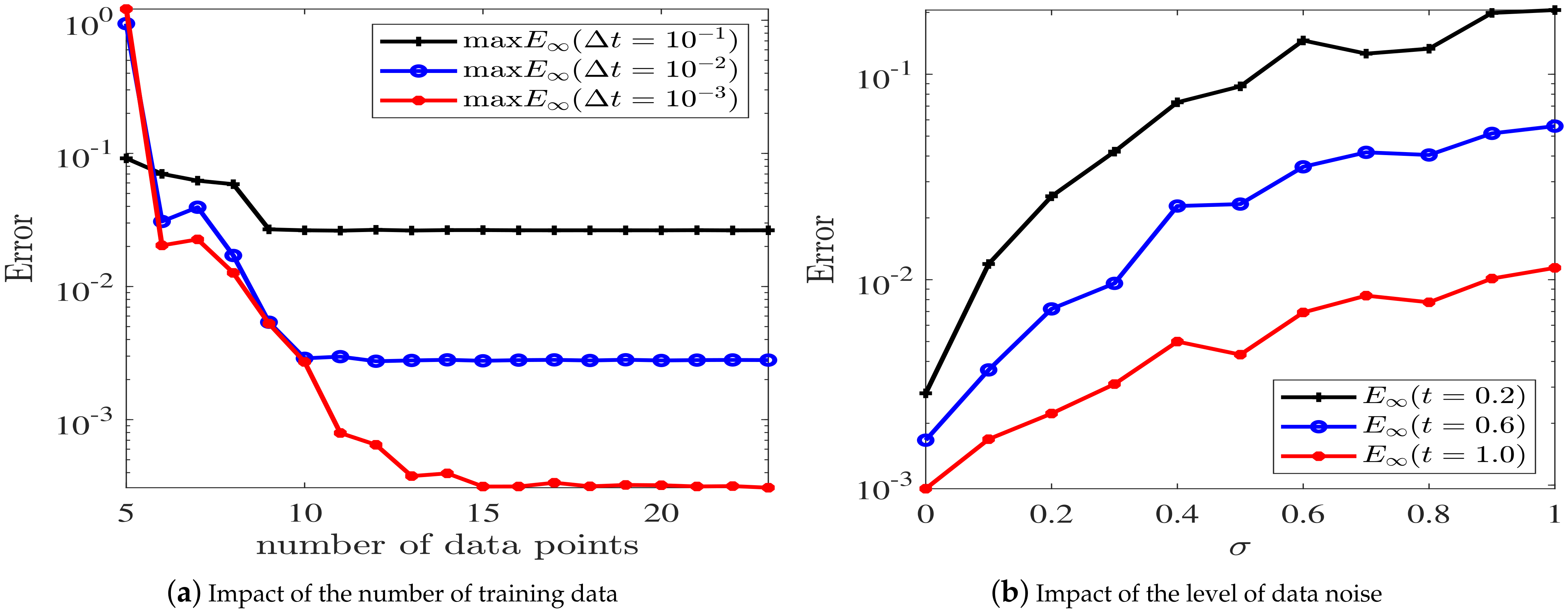

2.7. Numerical Tests

3. Runge–Kutta Methods

3.1. MP-NGPs Based on Runge–Kutta Methods

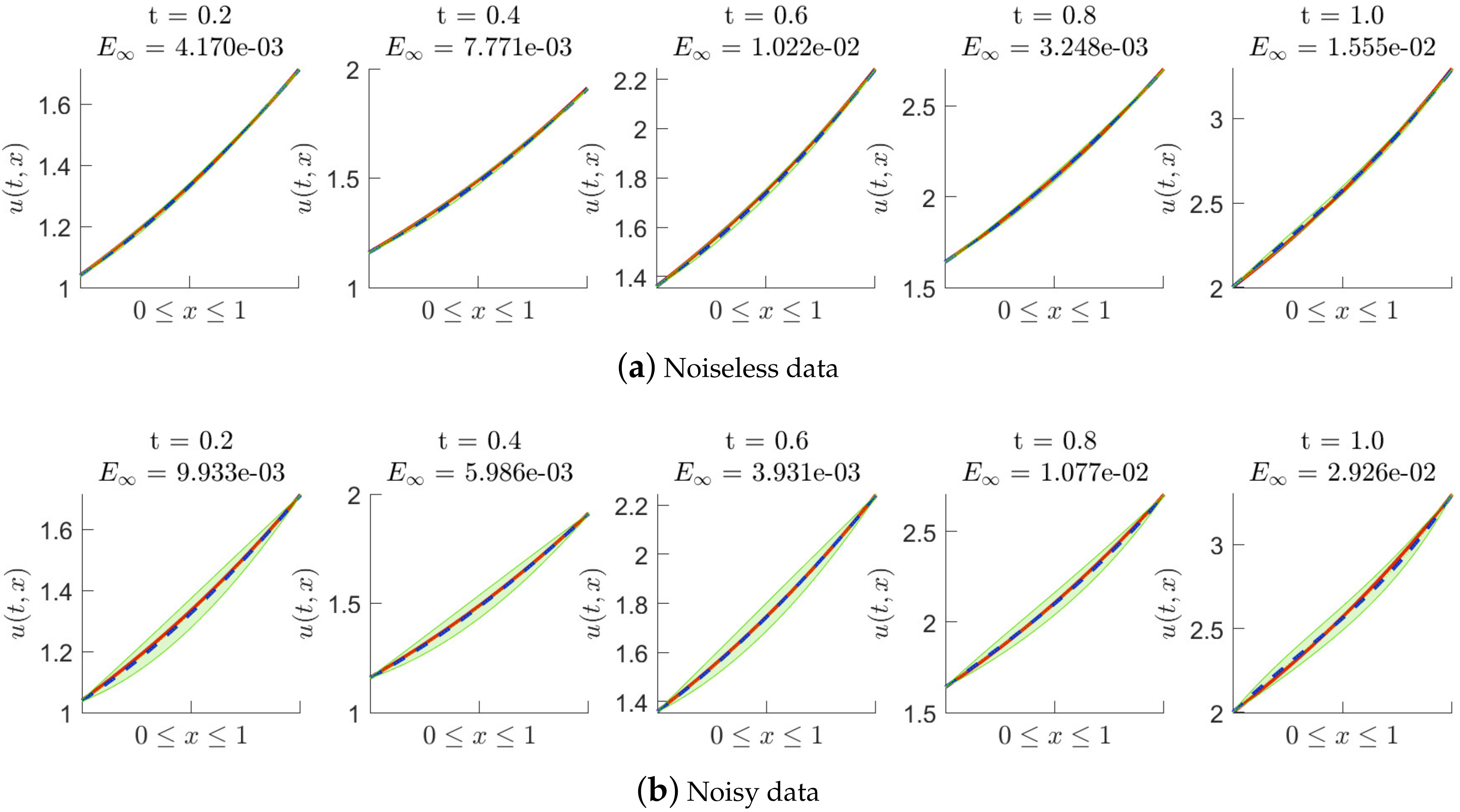

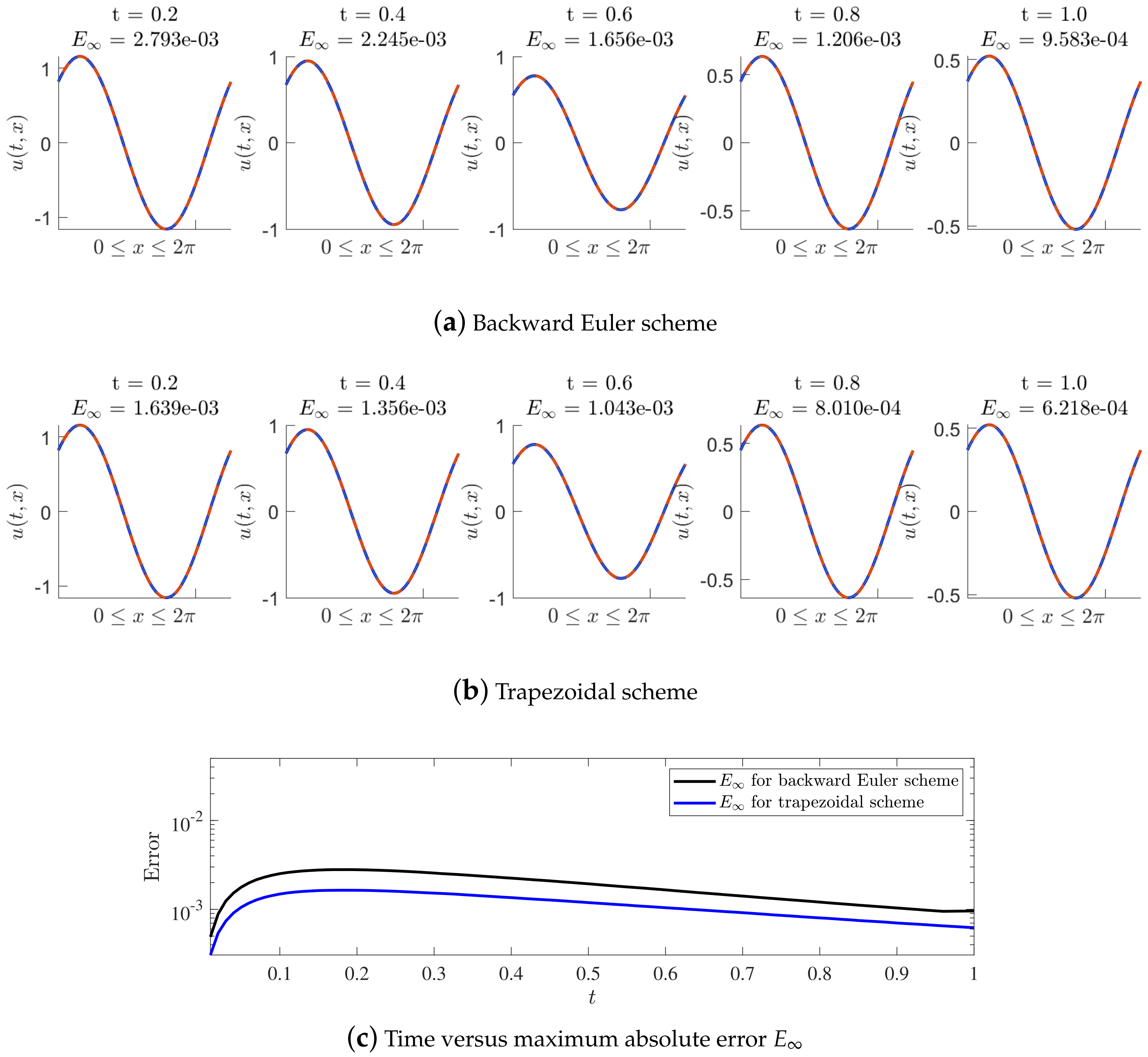

3.2. Numerical Test for the Problem with One Delay Applying the Trapezoidal Rule

4. Processing of Neumann and Mixed Boundary Conditions

4.1. Neumann Boundary Conditions

4.2. Mixed Boundary Conditions

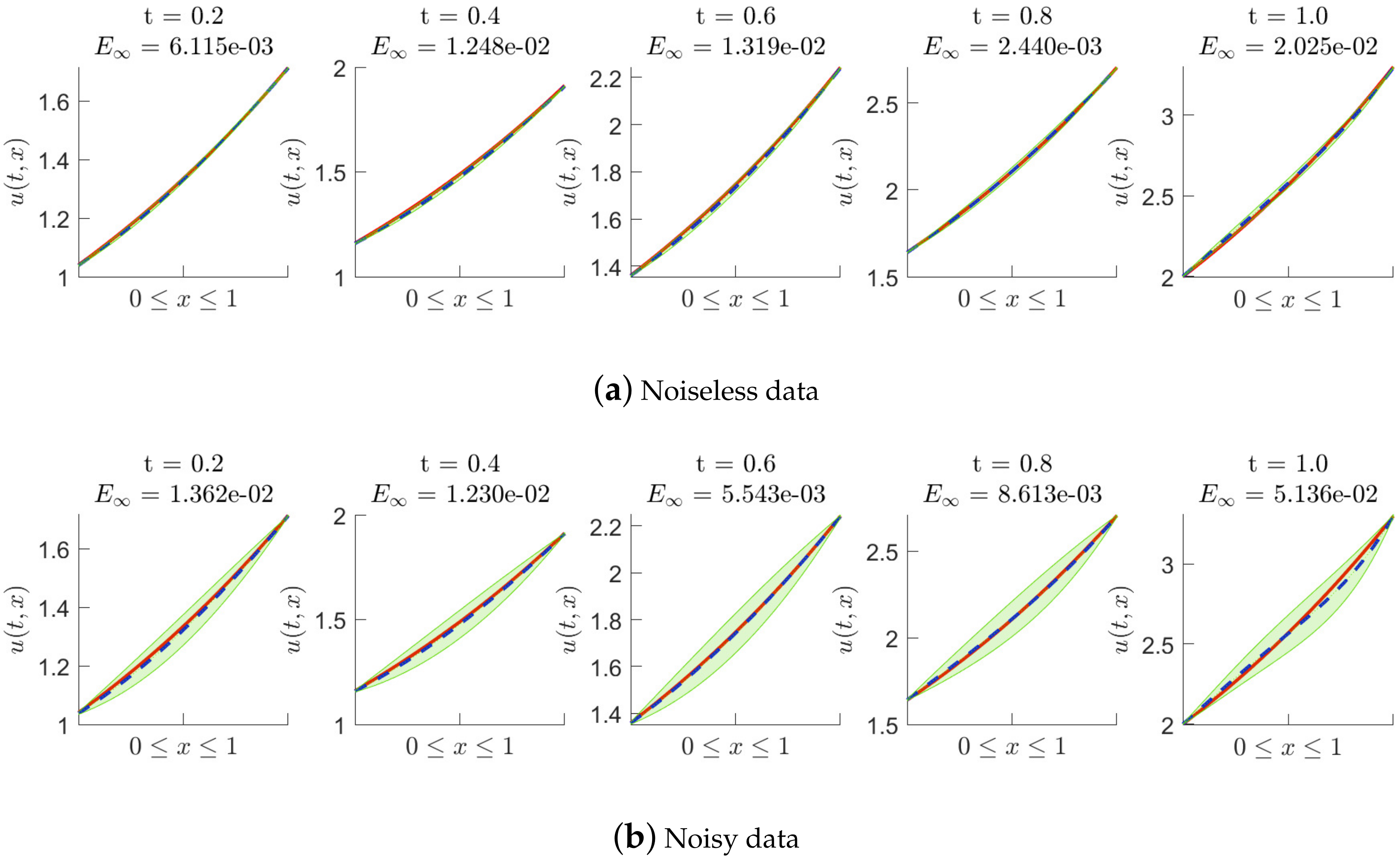

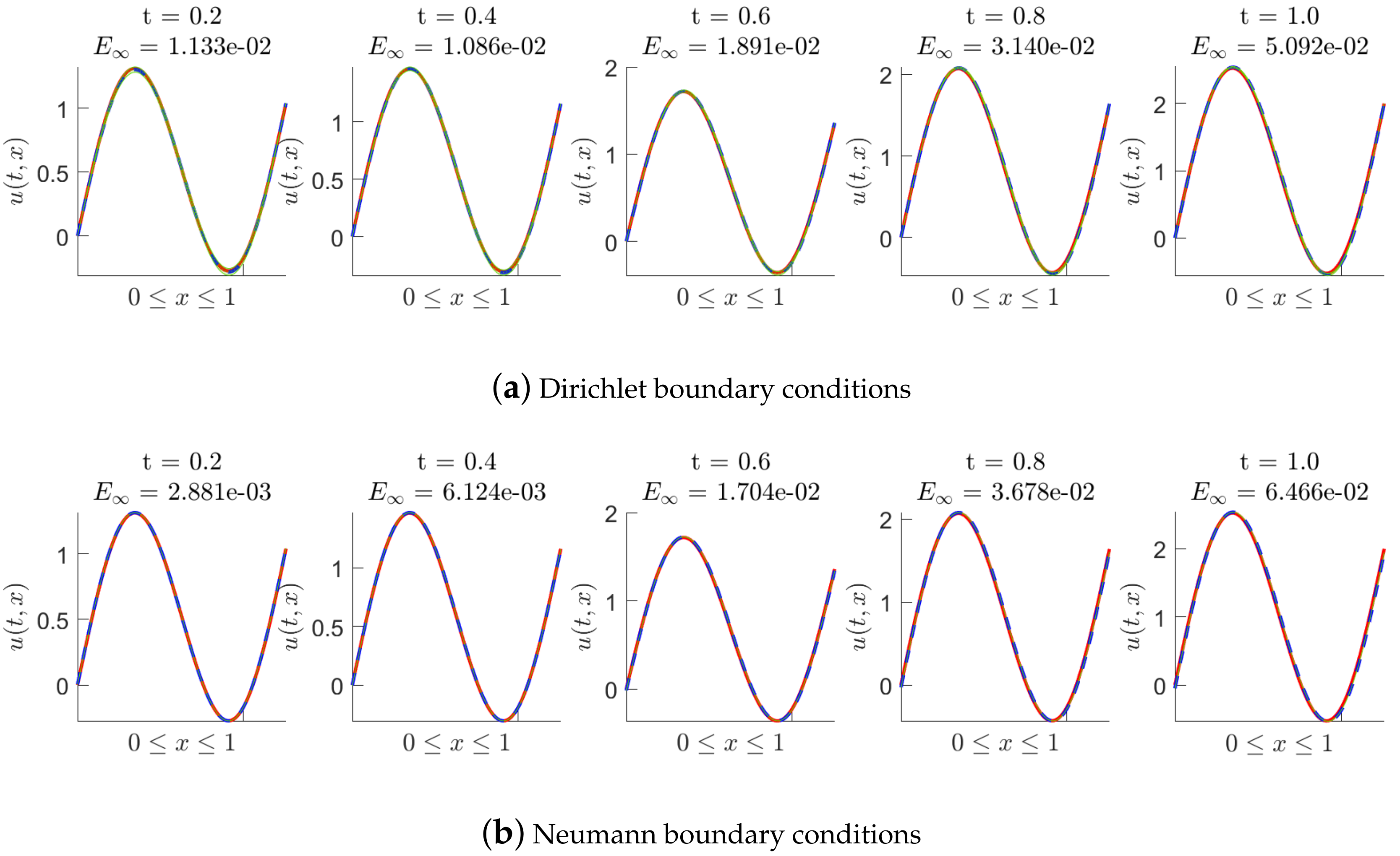

4.3. Numerical Tests for a Problem with Two Delays and Different Types of Boundary Conditions

5. Processing of Wave Equations

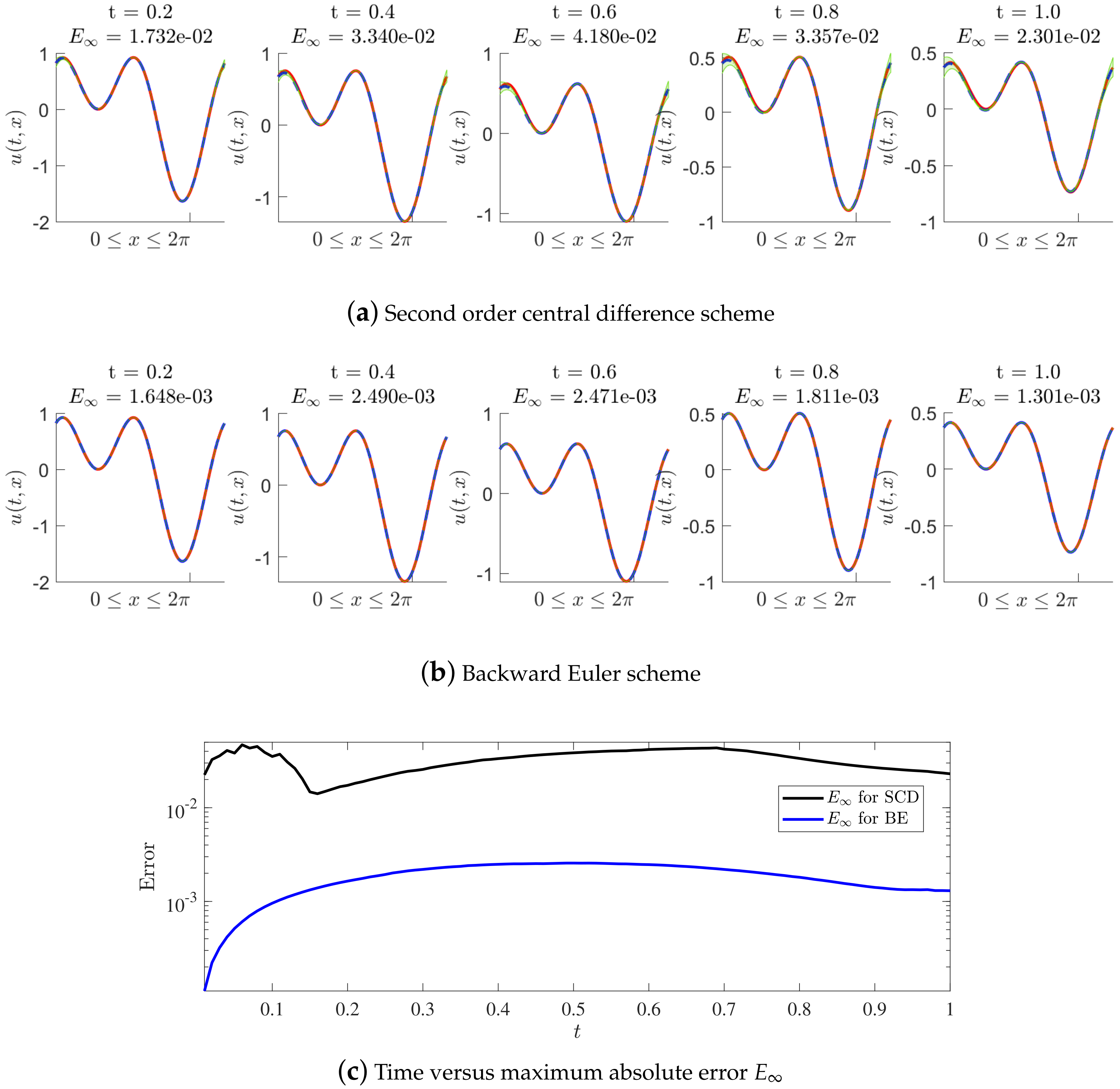

5.1. Second Order Central Difference Scheme

5.2. Backward Euler Scheme

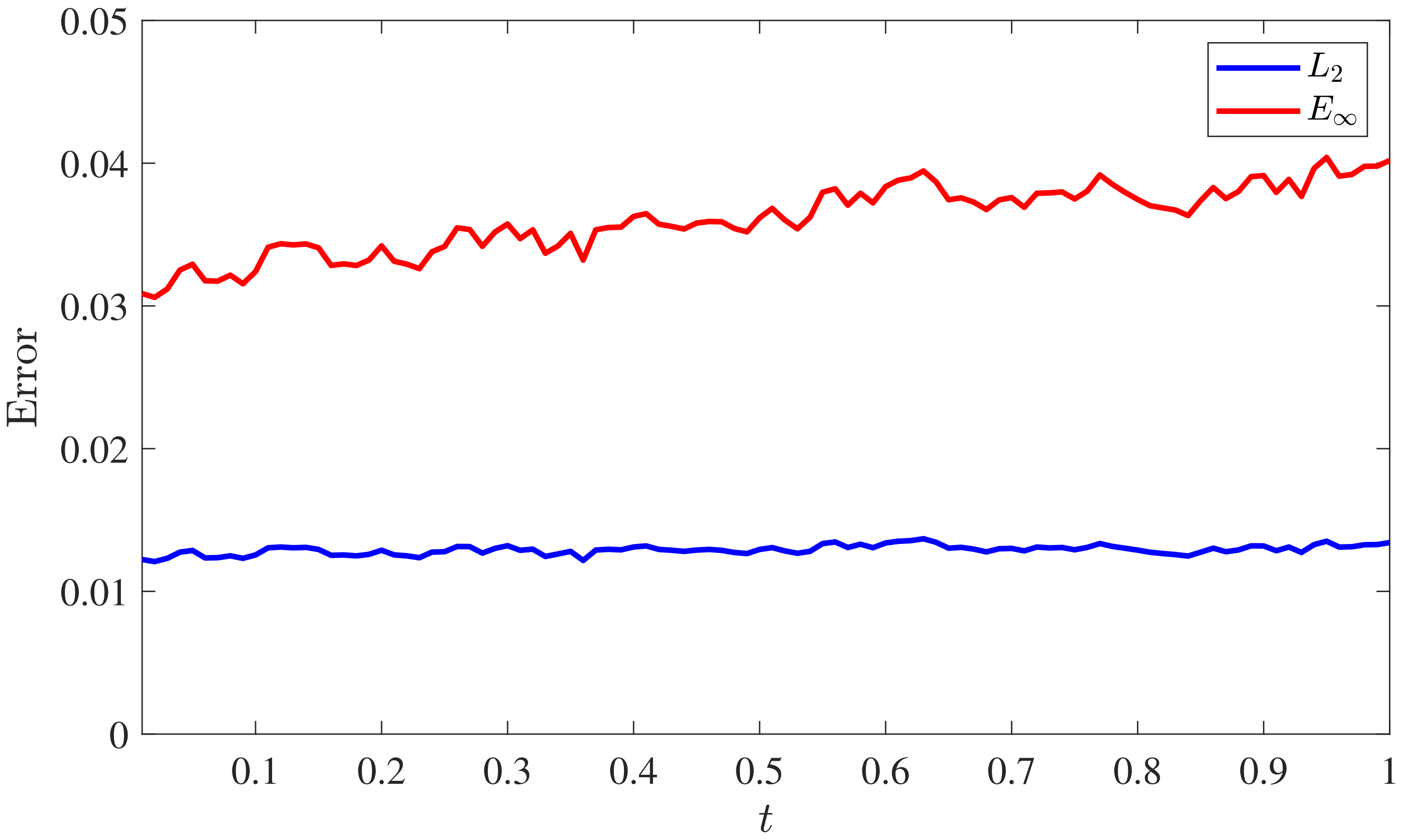

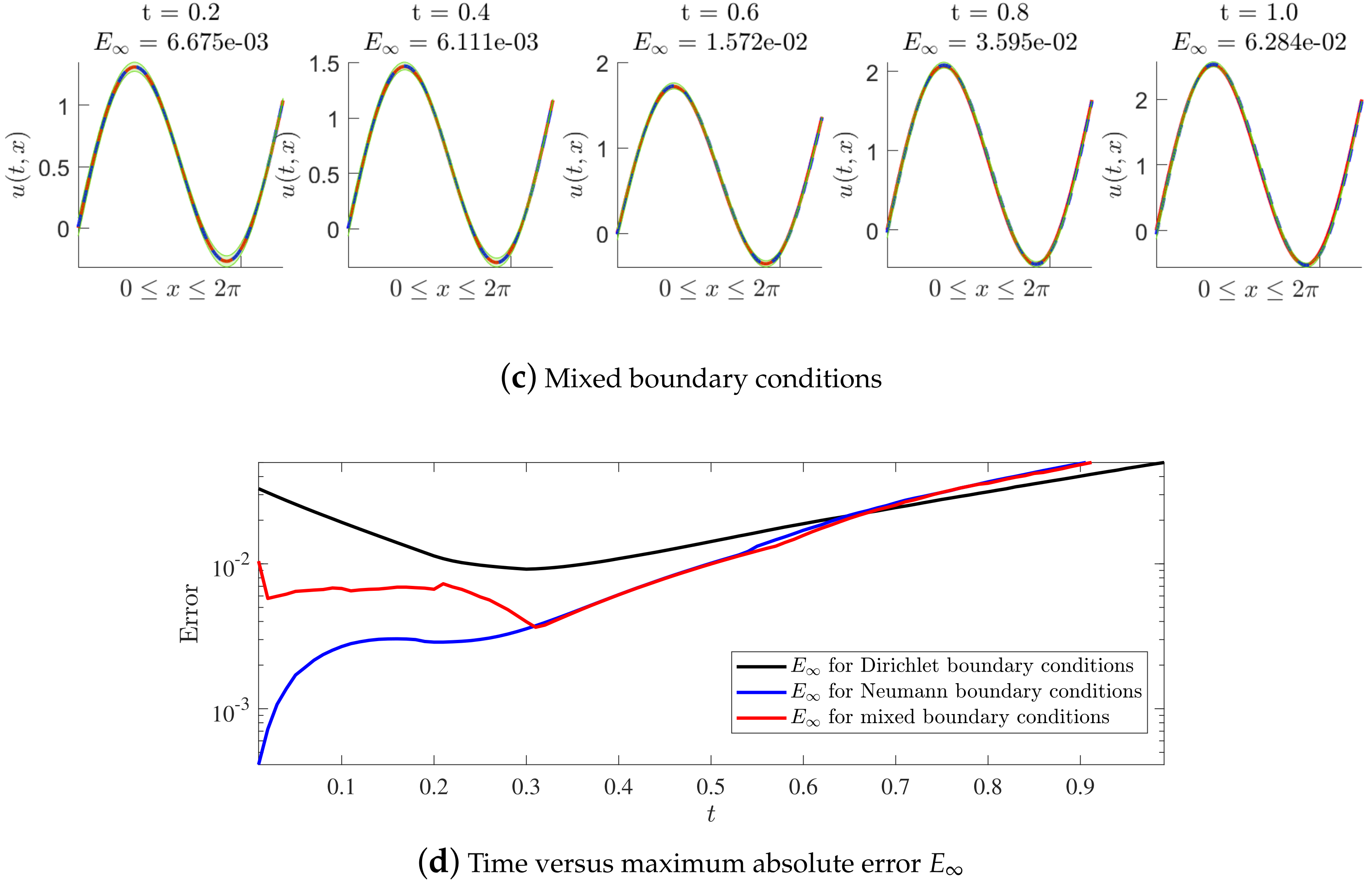

5.3. Numerical Tests

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| 0.2 | * |
| 0.1 | 1.966 |
| 0.05 | 1.951 |
| 0.025 | 1.966 |
| 0.0125 | 1.991 |
| 0.00625 | 1.963 |

| 0.2 | * |
| 0.1 | 2.573 |
| 0.05 | 2.135 |
| 0.025 | 2.002 |
| 0.0125 | 2.001 |
| 0.00625 | 1.964 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gu, W.; Zhang, W.; Han, Y. Numerical Investigation of a Class of Nonlinear Time-Dependent Delay PDEs Based on Gaussian Process Regression. Fractal Fract. 2022, 6, 606. https://doi.org/10.3390/fractalfract6100606
