A Fast High-Order Predictor–Corrector Method on Graded Meshes for Solving Fractional Differential Equations

Abstract

1. Introduction

- (a)

- Suppose that . Then, there exist some constants and a function such that

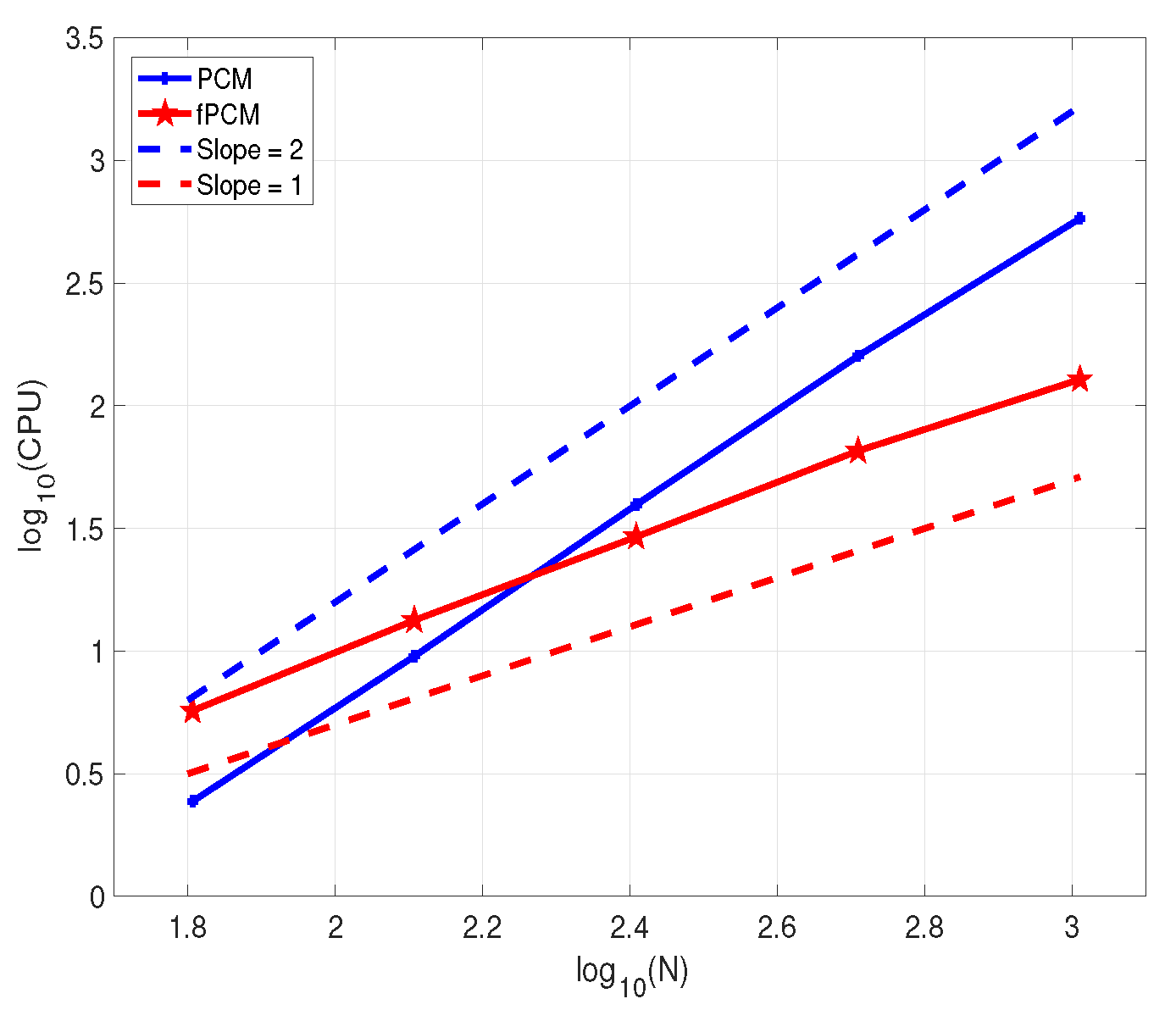

- (b)

- Suppose that . Then, there exist some constants , and a function , such that

2. High-Order Predictor–Corrector Method

- When , we use to approximate on interval .

- When , we use to approximate on intervals and .

- When , we use to approximate on first small interval , to approximate on each interval and to approximate on the last small interval .
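The interpolants in the list above did not survive extraction, so the paper's high-order predictor–corrector scheme cannot be reproduced here. For orientation only, the sketch below implements the classical low-order fractional Adams scheme of Diethelm, Ford and Freed (product rectangle predictor, product trapezoidal corrector) on a graded mesh. It assumes a Caputo problem D^α y = f(t, y), y(0) = y0 with 0 < α ≤ 1, and the usual grading t_j = T (j/N)^r. The function names `graded_mesh` and `fractional_adams` are hypothetical.

```python
import math
from typing import Callable, List


def graded_mesh(T: float, N: int, r: float) -> List[float]:
    """Graded mesh t_j = T * (j / N)**r, j = 0..N; r = 1 is uniform, r > 1 clusters nodes near t = 0."""
    return [T * (j / N) ** r for j in range(N + 1)]


def fractional_adams(f: Callable[[float, float], float], y0: float,
                     alpha: float, t: List[float]) -> List[float]:
    """Classical (low-order) fractional Adams PECE scheme for the Caputo problem
    D^alpha y = f(t, y), y(0) = y0, 0 < alpha <= 1, on an arbitrary mesh t.

    Uses the Volterra form y(t) = y0 + (1/Gamma(alpha)) int_0^t (t - s)^(alpha - 1) f(s, y(s)) ds:
    predictor = product rectangle rule, corrector = product trapezoidal rule.
    Every step revisits the whole history, so the total cost is O(N^2).
    """
    gam = math.gamma(alpha)
    y = [y0]
    fv = [f(t[0], y0)]                 # f evaluated at the corrected values
    for n in range(len(t) - 1):
        tn1 = t[n + 1]
        pred = 0.0                      # rectangle-rule sum for the predictor
        corr = 0.0                      # trapezoid sum over the known f values
        w_new = 0.0                     # trapezoid weight of the unknown f_{n+1}
        for j in range(n + 1):
            a = tn1 - t[j + 1]          # kernel arguments at the ends of [t_j, t_{j+1}]
            b = tn1 - t[j]
            h = t[j + 1] - t[j]
            m0 = (b ** alpha - a ** alpha) / alpha                    # int u^(alpha-1) du over [a, b]
            m1 = (b ** (alpha + 1) - a ** (alpha + 1)) / (alpha + 1)  # int u^alpha du over [a, b]
            w_left = (m1 - a * m0) / h   # weight of f_j (hat function (t_{j+1} - s) / h)
            w_right = (b * m0 - m1) / h  # weight of f_{j+1} (hat function (s - t_j) / h)
            pred += m0 * fv[j]
            corr += w_left * fv[j]
            if j < n:
                corr += w_right * fv[j + 1]
            else:
                w_new = w_right
        y_pred = y0 + pred / gam                         # P: predict
        f_pred = f(tn1, y_pred)                          # E: evaluate
        y.append(y0 + (corr + w_new * f_pred) / gam)     # C: correct
        fv.append(f(tn1, y[-1]))                         # E: re-evaluate
    return y
```

For constant f the scheme reproduces y(t) = y0 + f t^α / Γ(1 + α) up to rounding, which makes a convenient sanity check; for α = 1 it reduces to a second-order PECE method for ordinary differential equations.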

3. Error Estimates of the Predictor–Corrector Method

4. Construction of the Fast Algorithm

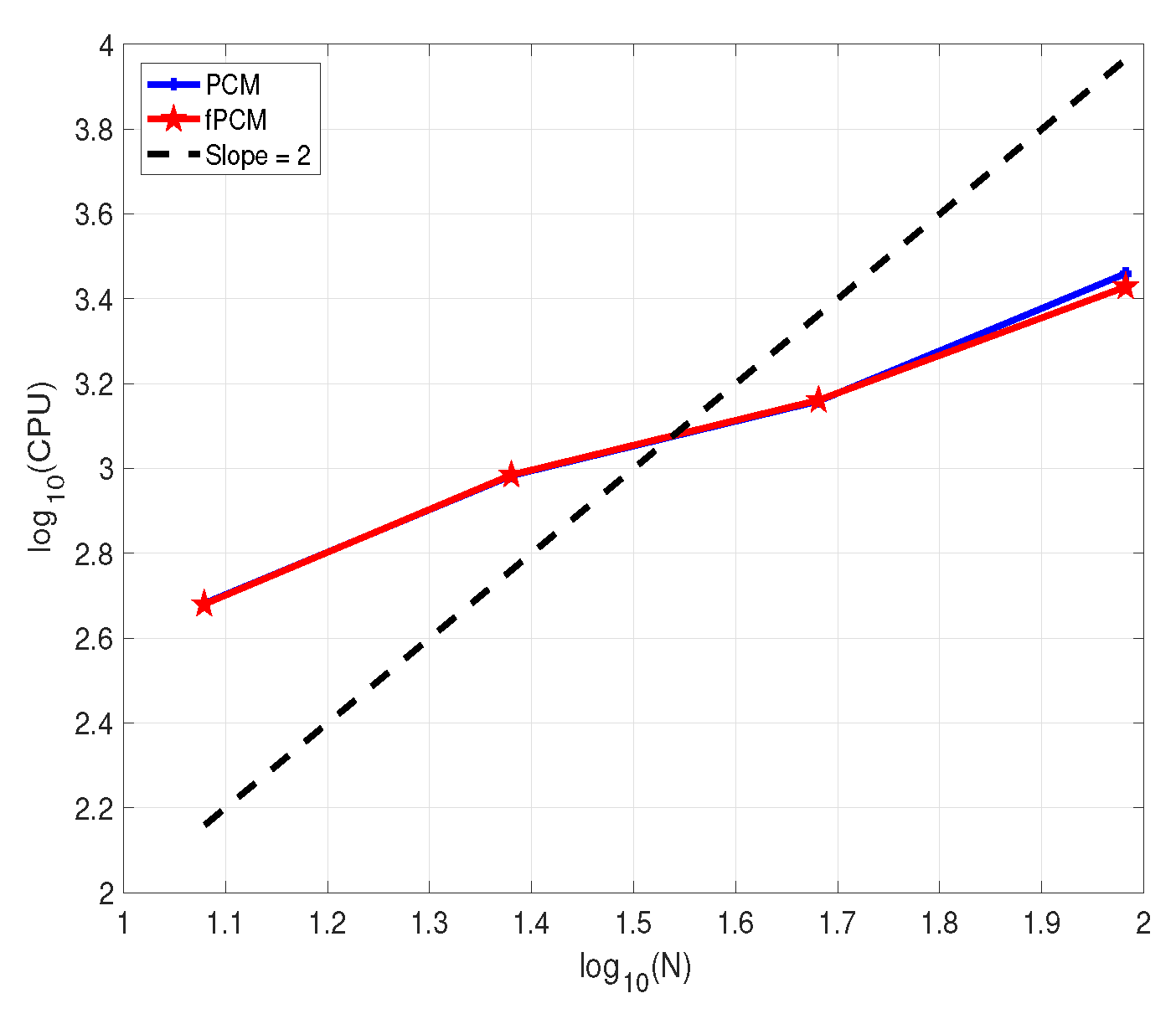
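Apart from the figure, Section 4 is empty in this copy, but the abbreviation list shows that the fast method (fPCM) relies on a sum-of-exponentials (SOE) approximation. The following is a minimal sketch of the general SOE idea under our own assumptions, not the paper's construction. The kernel (t − τ)^(α − 1) = (t − τ)^(−β), with β = 1 − α, is approximated on [δ, T] by exponentials obtained from the trapezoidal rule applied to t^(−β) = Γ(β)^(−1) ∫ exp(βx − t e^x) dx over the real line. Each exponential lets the history integral be updated with O(1) work per step instead of re-summing all past intervals. The names `soe_kernel`, `history_fast` and `history_direct`, and the piecewise-constant density g, are hypothetical.

```python
import math
from typing import List, Tuple


def soe_kernel(beta: float, delta: float, T: float, tol: float = 1e-10,
               h: float = 0.2) -> Tuple[List[float], List[float]]:
    """Weights w_i and rates s_i with t**(-beta) ~ sum_i w_i * exp(-s_i * t) on [delta, T], 0 < beta < 1.

    Trapezoidal rule (step h) in x for t**(-beta) = (1/Gamma(beta)) int exp(beta*x - t*exp(x)) dx,
    truncated so the left tail is below tol and the right tail below exp(-40) for t >= delta.
    (T only enters the error bound through the left-tail relative error tol * T**beta / Gamma(beta).)
    """
    x_min = math.log(tol * beta) / beta
    x_max = math.log(40.0 / delta)
    n = int(math.ceil((x_max - x_min) / h))
    g = math.gamma(beta)
    weights = [h * math.exp(beta * (x_min + k * h)) / g for k in range(n + 1)]
    rates = [math.exp(x_min + k * h) for k in range(n + 1)]
    return weights, rates


def history_fast(beta: float, t: List[float], g: List[float],
                 weights: List[float], rates: List[float]) -> List[float]:
    """H_n = int_0^{t_{n-1}} (t_n - tau)^(-beta) g(tau) dtau for n = 2..N, with g piecewise
    constant (g[j] on [t_j, t_{j+1}]), via one recurrence per exponential: O(N * len(rates)).
    Assumes every step is >= delta, so all kernel arguments lie in the SOE range."""
    u = [0.0] * len(rates)  # u[i] = int_0^{t_{n-1}} exp(-s_i (t_{n-1} - tau)) g(tau) dtau
    out = []
    for n in range(2, len(t)):
        h_prev = t[n - 1] - t[n - 2]  # interval [t_{n-2}, t_{n-1}] joins the history
        h_cur = t[n] - t[n - 1]
        total = 0.0
        for i, s in enumerate(rates):
            # decay the old history, then add the exact integral over the newest interval
            u[i] = math.exp(-s * h_prev) * u[i] + g[n - 2] * (-math.expm1(-s * h_prev)) / s
            total += weights[i] * math.exp(-s * h_cur) * u[i]
        out.append(total)
    return out


def history_direct(beta: float, t: List[float], g: List[float], n: int) -> float:
    """Reference value of H_n, exact for piecewise-constant g; costs O(n) per step, O(N^2) overall."""
    total = 0.0
    for j in range(n - 1):
        total += g[j] * ((t[n] - t[j]) ** (1 - beta) - (t[n] - t[j + 1]) ** (1 - beta)) / (1 - beta)
    return total
```

This naive construction uses a few hundred exponentials; practical SOE constructions use far fewer terms, but the recurrence that removes the full-history sum works the same way.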

5. Numerical Examples

6. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| SOE | Sum of exponentials |
| FDEs | Fractional differential equations |
| PCM | Predictor–corrector method |
| fPCM | Fast predictor–corrector method |

Appendix A. Proof of Lemma 4


| N | r | p (PCM) | CPU (PCM) | p (fPCM) | CPU (fPCM) |
|---|---|---|---|---|---|
| 64 | 1 | – | 2.58 | – | 3.34 |
| 128 | | 0.7646 | 9.51 | 0.7646 | 6.95 |
| 256 | | 0.7374 | 39.85 | 0.7374 | 14.99 |
| 512 | | 0.8351 | 162.84 | 0.8351 | 32.26 |
| 1024 | | 0.8917 | 638.56 | 0.8917 | 67.66 |
| EOC | | 1 | | 1 | |
| 64 | | – | 2.41 | – | 4.68 |
| 128 | | 2.4493 | 9.61 | 2.4493 | 9.97 |
| 256 | | 2.1205 | 39.23 | 2.1205 | 22.57 |
| 512 | | 1.9715 | 157.12 | 1.9715 | 48.61 |
| 1024 | | 1.9860 | 639.94 | 1.9860 | 107.74 |
| EOC | | 2 | | 2 | |
| 64 | | – | 2.44 | – | 5.71 |
| 128 | | 3.3485 | 9.53 | 3.3485 | 13.35 |
| 256 | | 3.0821 | 39.48 | 3.0821 | 29.22 |
| 512 | | 2.9975 | 159.67 | 2.9975 | 65.48 |
| 1024 | | 2.9991 | 580.18 | 2.9991 | 128.07 |
| EOC | | 3 | | 3 | |
| 64 | | – | 2.41 | – | 6.95 |
| 128 | | 3.2885 | 8.71 | 3.2885 | 15.04 |
| 256 | | 3.1418 | 35.14 | 3.1418 | 33.36 |
| 512 | | 3.0677 | 142.50 | 3.0677 | 73.69 |
| 1024 | | 3.0306 | 568.88 | 3.0306 | 159.33 |
| EOC | | 3 | | 3 | |
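The CPU columns above can be read as a scaling test. The sketch below takes the times from the first block of the table (r = 1), assuming N doubles from row to row as listed and that CPU is measured in the same (unstated) units throughout, and estimates the exponent q in CPU ∝ N^q between successive rows. Values near 2 for PCM and near 1 for fPCM would be consistent with a quadratic-cost direct method and a nearly linear-cost fast method. The helper `growth_exponents` is hypothetical.

```python
import math

N = [64, 128, 256, 512, 1024]
# CPU times copied from the first block of the table above (r = 1)
cpu_pcm = [2.58, 9.51, 39.85, 162.84, 638.56]
cpu_fpcm = [3.34, 6.95, 14.99, 32.26, 67.66]


def growth_exponents(times):
    """Empirical exponent q in CPU ~ N**q between consecutive rows where N doubles."""
    return [math.log2(b / a) for a, b in zip(times, times[1:])]


q_pcm = growth_exponents(cpu_pcm)
q_fpcm = growth_exponents(cpu_fpcm)
speedup = cpu_pcm[-1] / cpu_fpcm[-1]  # PCM time / fPCM time at N = 1024
print([round(q, 2) for q in q_pcm])
print([round(q, 2) for q in q_fpcm])
print(round(speedup, 2))
```

The p columns of PCM and fPCM agree to four digits in every row, so in these runs the speedup comes without a visible loss of accuracy.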

| Scheme | N | | | |
|---|---|---|---|---|
| PCM | 12 | – | – | – |
| | 24 | 4.3733 | 3.8939 | 3.4425 |
| | 48 | 4.1753 | 3.5058 | 2.9541 |
| | 96 | 3.8445 | 3.2151 | 2.8577 |
| | EOC | 3 | 3 | 3 |
| fPCM | 12 | – | – | – |
| | 24 | 4.3733 | 3.8939 | 3.4425 |
| | 48 | 4.1753 | 3.5058 | 2.9541 |
| | 96 | 3.8445 | 3.2151 | 2.8577 |
| | EOC | 3 | 3 | 3 |
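The order columns in the tables above are, by the usual convention (an assumption here, since the error columns were lost), observed orders p = log(e_N / e_{2N}) / log 2 computed from errors e_N on meshes whose size doubles from row to row. A minimal sketch with a hypothetical helper `observed_orders`; the check uses synthetic errors e_N = N^(−3), not data from the paper.

```python
import math
from typing import List, Optional


def observed_orders(errors: List[float], ratio: float = 2.0) -> List[Optional[float]]:
    """Observed orders p = log(e_coarse / e_fine) / log(ratio) for errors on meshes refined
    by a constant factor `ratio`; the first mesh has no predecessor, so its entry is None."""
    orders: List[Optional[float]] = [None]
    for coarse, fine in zip(errors, errors[1:]):
        orders.append(math.log(coarse / fine) / math.log(ratio))
    return orders


# Synthetic errors behaving exactly like N**-3 on the meshes N = 12, 24, 48, 96
Ns = [12, 24, 48, 96]
print(observed_orders([n ** -3.0 for n in Ns]))
```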

| M | p (PCM) | CPU (PCM) | p (fPCM) | CPU (fPCM) |
|---|---|---|---|---|
| 8 | 1.9377 | 2141.30 | 1.9377 | 134.57 |
| 16 | 1.9722 | 2131.37 | 1.9722 | 133.85 |
| 32 | 1.9864 | 2164.59 | 1.9864 | 132.92 |
| 64 | 1.9932 | 2158.93 | 1.9933 | 137.41 |
| EOC | 2 | | 2 | |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Su, X.; Zhou, Y. A Fast High-Order Predictor–Corrector Method on Graded Meshes for Solving Fractional Differential Equations. Fractal Fract. 2022, 6, 516. https://doi.org/10.3390/fractalfract6090516
