New Approach to Quasi-Synchronization of Fractional-Order Delayed Neural Networks

Abstract

1. Introduction

- (i)

- A new fractional-order differential inequality has been developed on an unbounded time interval. This inequality can be utilized to investigate the quasi-synchronization of fractional-order complex networks or neural networks.

- (ii)

- Utilizing the proposed inequality in combination with an adaptive controller, a novel criterion for the quasi-synchronization of fractional-order delayed neural networks has been derived.

- (iii)

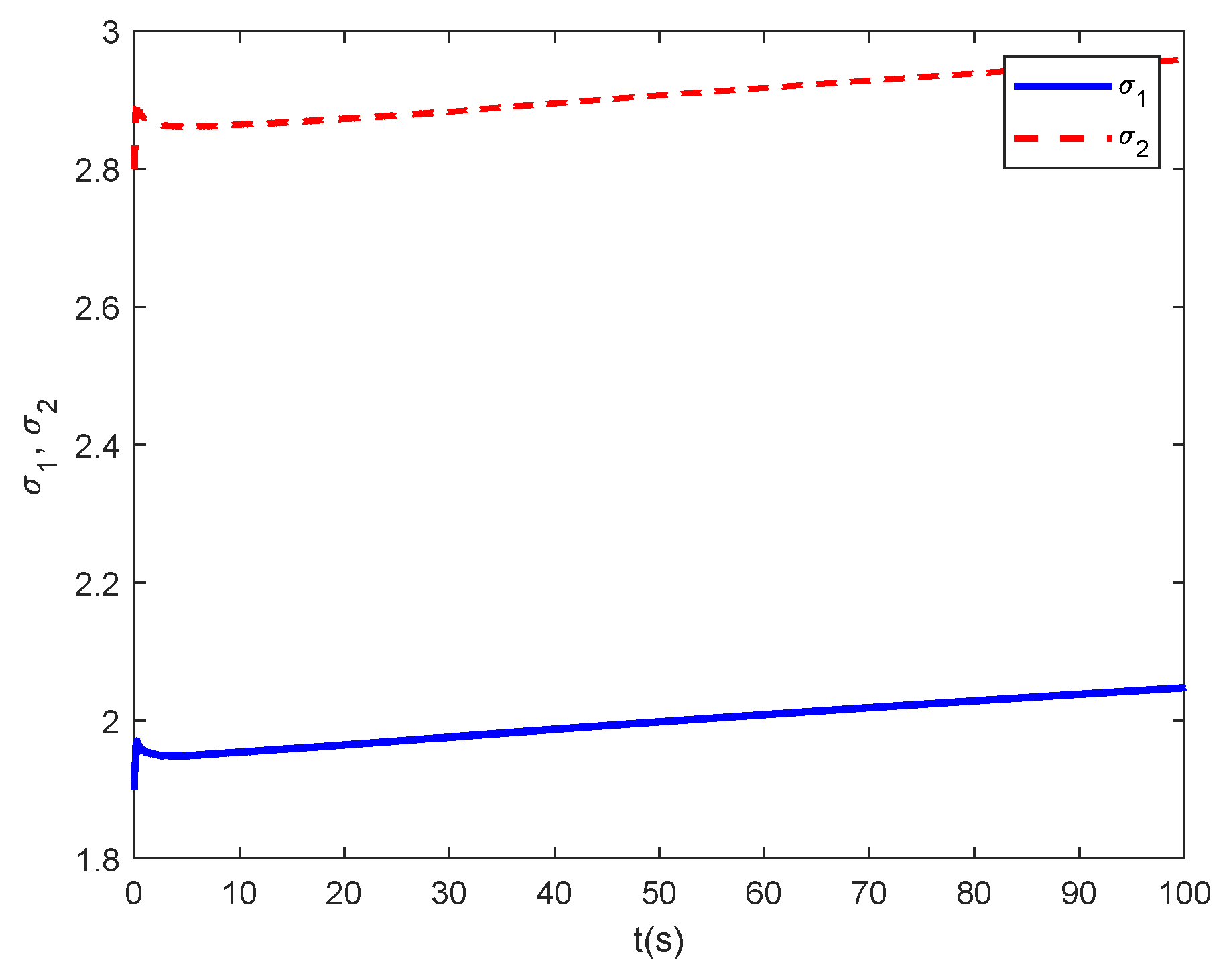

- The validity of the developed results is substantiated through a numerical analysis, offering sufficient evidence in support of the obtained synchronization criterion.
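Contribution (iii) refers to a numerical analysis of quasi-synchronization, that is, a synchronization error that settles inside a bounded region instead of vanishing. As a minimal illustration of that idea only, the sketch below integrates a scalar toy error equation of Caputo order alpha with one constant delay, D^alpha e(t) = -k e(t) + b e(t - tau) + d, using an explicit Grünwald-Letnikov scheme. The toy equation, the scheme, the function names `gl_coeffs` and `simulate_error`, and all parameter values are assumptions made for this example; they are not the model, the adaptive controller, or the simulation method of the paper.

```python
def gl_coeffs(alpha, n):
    """Grunwald-Letnikov weights c_j = (-1)^j * binom(alpha, j) for j = 0..n."""
    c = [1.0]
    for j in range(1, n + 1):
        c.append((1.0 - (1.0 + alpha) / j) * c[-1])
    return c


def simulate_error(alpha=0.8, k=2.0, b=0.5, d=0.3, tau=0.5,
                   e0=1.0, h=0.02, T=20.0):
    """Explicit GL integration of the hypothetical scalar error model
    D^alpha e(t) = -k*e(t) + b*e(t - tau) + d, with e(t) = e0 for t <= 0.

    d stands in for a bounded mismatch that prevents complete synchronization.
    """
    n = int(round(T / h))    # number of time steps
    m = int(round(tau / h))  # delay expressed in steps
    c = gl_coeffs(alpha, n)
    h_alpha = h ** alpha
    e = [e0]
    for i in range(1, n + 1):
        idx = i - 1 - m
        delayed = e[idx] if idx >= 0 else e0  # constant history before t = 0
        rhs = -k * e[i - 1] + b * delayed + d
        # Memory term of the Caputo derivative, applied to e - e0.
        memory = sum(c[j] * (e[i - j] - e0) for j in range(1, i + 1))
        e.append(e0 + h_alpha * rhs - memory)
    return e


if __name__ == "__main__":
    e = simulate_error()
    # Equilibrium of the toy model for k > |b|: d / (k - b).
    print("final error:", e[-1], "equilibrium:", 0.3 / (2.0 - 0.5))
```

With k > |b| the error in this toy model approaches the nonzero level d / (k - b) rather than zero, which is the qualitative behaviour the term quasi-synchronization describes. For alpha = 1 the weights reduce to 1, -1, 0, ... and the scheme becomes the forward Euler method.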

2. Preliminaries and Model Formulation

2.1. Preliminaries

2.2. Model Description

3. Main Results

4. Connections between the Mathematical Treatment and the Numerical Simulation

5. Numerical Simulation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Du, F.; Chen, D. New Approach to Quasi-Synchronization of Fractional-Order Delayed Neural Networks. Fractal Fract. 2023, 7, 825. https://doi.org/10.3390/fractalfract7110825