1. Introduction

The growing use of digital imaging technology and the increasing importance of online data storage and transmission have made research on image encryption both timely and necessary. This has, in turn, increased the demand for image-encryption algorithms across many aspects of life, driven by the following factors:

(a) Increased use of digital imaging technology and applications [1,2]. With the widespread use of digital cameras, smartphones and other imaging devices, the amount of sensitive and personal information stored in digital images has increased dramatically. This has made image encryption an important area of research.

(b) Growth of online data storage and transmission [3]. The increasing use of online data storage and transmission has made it easier for unauthorized parties to access confidential image data. This has made encryption an essential tool for protecting image data in transit and in storage.

(c) Threats to privacy and security [4]. As more sensitive and confidential information is stored in digital images, the risk of unauthorized access, theft and tampering has increased. Image encryption is needed to protect against these threats.

(d) Advancements in computing power [5]. As computing power continues to increase, attackers are able to use more sophisticated cryptanalysis methods to break encryption algorithms. This makes it important for researchers to continuously propose novel security measures for sensitive data.

While some researchers have focused their efforts on advancing cryptographic algorithms [6,7,8,9], others have dedicated their efforts to the field of steganography [10,11]. Moreover, the literature shows a third group that combines cryptography with steganography for added security [12,13,14]. Such efforts were motivated by the finding that traditional data-encryption algorithms, such as DES [15], 3DES [16] and AES [17,18], are no longer best suited for image encryption.

This is due to a number of reasons: (a) the large size of image data, which increases the computational cost and time required for encryption and decryption; (b) the distinct properties of image data, such as redundancy, pixel correlation and structure, which can affect the security of traditional encryption techniques; (c) a lack of adaptability, since traditional encryption techniques are not well suited to the unique challenges posed by image data, such as the need to preserve image quality and the requirement for real-time encryption in certain applications; and (d) vulnerability to attacks, because some traditional encryption techniques, such as DES, have already been shown to be prone to cryptanalysis [19].

To that end, scientists and engineers have been making use of various mathematical constructs and nature-inspired ideas to design secure and robust image-encryption algorithms. The recent literature shows the employment of cellular automata (CA) [20,21,22], DNA coding [8,23,24,25] and electric circuits [26,27], as well as a heavy reliance on dynamical functions of chaotic behavior [6,28,29,30,31,32]. The following paragraphs highlight how such ideas are utilized in the development of pseudo-random number generators (PRNGs) to build encryption keys and substitution boxes (S-boxes).

The development and deployment of PRNGs comprise the majority of cryptography research efforts, because a randomly distributed bit stream benefits both key generation and S-box design [20]. Numerous examples in the literature illustrate the usage of PRNGs in image cryptosystems. The researchers in [33], for instance, employed the Lucas sequence to construct an S-box for their proposed image cryptosystem. The authors of [34] produced encryption keys using the Rössler chaotic system and Recamán's sequence. Likewise, the authors of [35] constructed PRNGs as encryption keys utilizing the Fibonacci sequence, a chaotic tan function and a Bessel function.

The researchers in [36] investigated elliptic curves and used them to create a PRNG, which they then combined with the Arnold map to encrypt images. In [20], Rule 30 CA was used to generate a PRNG that served as an encryption key. In [37], a field-programmable gate array (FPGA) implementation of a PRNG utilizing a memristive Hopfield neural network with a specific activation gradient was proposed. The Mersenne Twister was deployed by the researchers in [7] as one of the encryption keys in a multi-stage cryptosystem. In [8], an S-box was designed and utilized as the core stage of a three-stage image cryptosystem: the Lorenz system was numerically solved, and its solution was used to generate a PRNG, which was then employed to generate the S-box.

In another multi-stage image cryptosystem, the authors of [38] employed a discretized version of the chaotic sine map to create an S-box and the hyperchaotic Lü system as a PRNG. However, thus far, the literature on image encryption does not feature image cryptosystems that employ the PRNGs offered by Intel's Math Kernel Library (MKL) or OpenSSL. Intel's MKL is a library of optimized mathematical functions, including a high-quality PRNG [39]; it is specifically optimized for Intel hardware and can provide faster performance than other libraries. OpenSSL, in turn, is an open-source cryptography library that provides various cryptographic functions, including a random number generator [40].

The literature clearly shows that chaos theory has been extensively studied and applied to image cryptosystems, owing to the diversity of desirable traits exhibited by dynamical functions of chaotic behavior. These traits include aperiodicity, pseudo-randomness, sensitivity to initial values and ergodicity [41]. Broadly, these functions are categorized as either low-dimensional (LD) or high-dimensional (HD), with each class having its own set of advantages [6].
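To illustrate sensitivity to initial values, the following sketch iterates the classic logistic map $x_{k+1} = r x_k (1 - x_k)$ with $r = 4$ from two seeds that differ by $10^{-10}$ and reports the first iteration at which the two trajectories separate by more than 0.1. The logistic map is an LD map chosen purely for illustration; it is not one of the systems used in this work, and the function names are hypothetical.

```python
def logistic_orbit(x0: float, r: float = 4.0, steps: int = 100) -> list[float]:
    """Iterate the logistic map x -> r*x*(1-x) and return the whole trajectory."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence_step(x0: float, delta: float = 1e-10, threshold: float = 0.1) -> int:
    """First iteration at which two nearby orbits differ by more than `threshold`,
    or -1 if they stay close for the whole simulated horizon."""
    a, b = logistic_orbit(x0), logistic_orbit(x0 + delta)
    for k, (u, v) in enumerate(zip(a, b)):
        if abs(u - v) > threshold:
            return k
    return -1


print(divergence_step(0.3))
```

With $r = 4$ the map's Lyapunov exponent is $\ln 2$, so the initial perturbation roughly doubles at every iteration and becomes macroscopic after a few dozen steps.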

LD chaotic functions dramatically simplify software and hardware implementations; however, their use in image cryptosystems can, in some cases, be insufficiently secure. In contrast, HD chaotic functions, despite being more complex and needing more computational resources and circuitry, are capable of offering exceptionally high levels of security. Furthermore, hyperchaotic functions feature a large number of control parameters [42]. Their use in image cryptosystems therefore results in a significantly wider key space, which reduces the likelihood of brute-force attacks ever succeeding [8]. Solving hyperchaotic systems at a fractional order adds further control variables (the fractional orders themselves) and, consequently, an even wider key space.

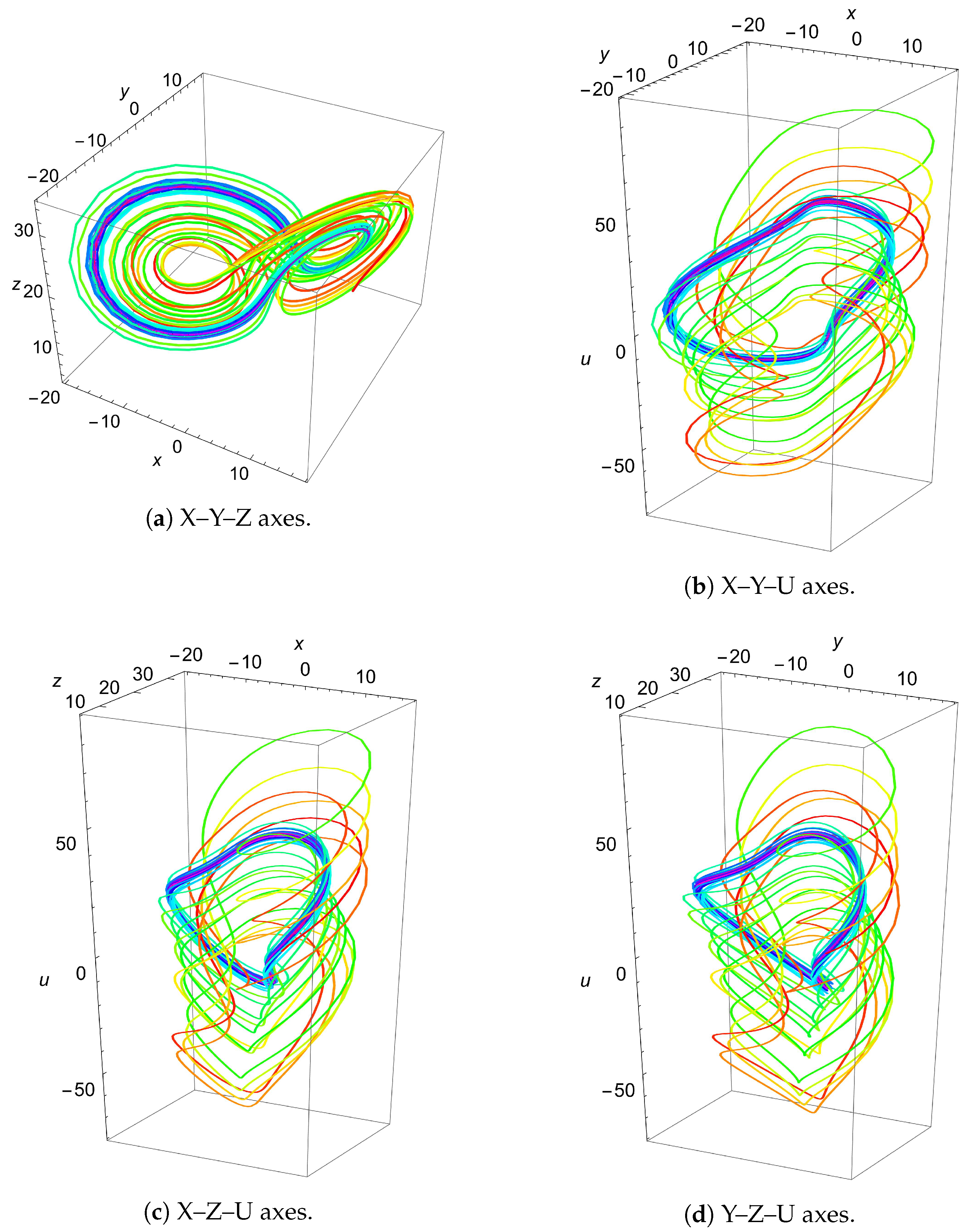

Recently, the image processing community has developed an interest in chaotic fractional-order dynamical systems [43]. Specifically, their applications in image cryptosystems have gained traction owing to their superior performance compared to their integer-order counterparts [44,45,46,47,48]. The authors of [44] proposed a secure image cryptosystem that employed smoothed sliding-mode state observers for fractional-order chaotic systems. In [45], an image cryptosystem with a very large key space was proposed using a fractional-order four-dimensional (4D) Chen hyperchaotic map in conjunction with a Fibonacci Q-matrix.

An efficient image cryptosystem was proposed in [46], where various fractional-order systems were utilized in an alternating fashion. In [47], the authors proposed a fractional-order logistic map for the implementation of an image cryptosystem and compared its performance to that attained by a conventional logistic map. The authors of [48] presented an image-encryption technique that used the solutions of chaotic fractional-order fuzzy cellular neural networks. Nevertheless, the use of fractional-order chaotic and hyperchaotic functions in image encryption remains a rather new trend in the literature, with only a few articles reporting such an application.

The previous paragraphs described the need for research on image cryptosystems, the reliance of scholars on PRNGs to generate encryption keys and robust S-boxes, and the emerging utilization of fractional-order hyperchaotic functions in this field of research. While the literature shows the prevalence of image cryptosystems that involve multiple stages, in most cases these are limited to three stages forming a permutation–substitution–permutation structure (as in [7,8,9,20,33]).

In the rare cases where more stages are employed, execution times were not reported [45]. This limitation stems from the increased complexity and longer execution times that result from adding further encryption stages. To make use of multiple-layer encryption networks while maintaining low complexity and short execution times, this research work proposes and achieves the following:

A highly efficient multiple-layer image cryptosystem, where, in each layer, an encryption key is generated and XORed with the image data, and an S-box is then generated and applied to the resulting image. This provides both confusion and diffusion at the bit level, thereby satisfying Shannon's theory for secure communications [49].
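A single layer of the kind just described can be sketched as follows. The sketch assumes 8-bit pixel data and uses Python's `random.Random`, which implements the Mersenne Twister (MT19937), both to draw the key stream and to shuffle an S-box; each byte is then encrypted as $c = S[p \oplus k]$ and decrypted as $p = S^{-1}[c] \oplus k$. The seeds and helper names (`make_keystream`, `make_sbox`, `encrypt_layer`, `decrypt_layer`) are hypothetical, and the S-box here is a plain random permutation: the selection criteria applied to the S-boxes of this work are not reproduced.

```python
import random


def make_keystream(seed: int, length: int) -> bytes:
    """Hypothetical key generator: `length` pseudo-random bytes from an MT19937 stream."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(length))


def make_sbox(seed: int) -> tuple[list[int], list[int]]:
    """Hypothetical S-box: a seeded random permutation of 0..255 and its inverse."""
    sbox = list(range(256))
    random.Random(seed).shuffle(sbox)
    inverse = [0] * 256
    for i, v in enumerate(sbox):
        inverse[v] = i
    return sbox, inverse


def encrypt_layer(data: bytes, key_seed: int, sbox_seed: int) -> bytes:
    key = make_keystream(key_seed, len(data))
    sbox, _ = make_sbox(sbox_seed)
    # XOR each byte with the key stream, then substitute it through the S-box
    return bytes(sbox[p ^ k] for p, k in zip(data, key))


def decrypt_layer(cipher: bytes, key_seed: int, sbox_seed: int) -> bytes:
    key = make_keystream(key_seed, len(cipher))
    _, inverse = make_sbox(sbox_seed)
    # undo the substitution first, then undo the XOR
    return bytes(inverse[c] ^ k for c, k in zip(cipher, key))


plain = bytes(range(16))
cipher = encrypt_layer(plain, key_seed=1, sbox_seed=2)
assert decrypt_layer(cipher, key_seed=1, sbox_seed=2) == plain
```

Stacking several such layers, each with its own key generator and S-box, and undoing them in reverse order during decryption yields a multiple-layer structure; in this sketch only the generator behind each layer would change.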

In the first layer, a fractional-order hyperchaotic Chen map is employed for key generation, while a Mersenne Twister PRNG is utilized for S-box design and application.

In the second layer, a Mersenne Twister PRNG is employed for key generation, while an OpenSSL PRNG is utilized for S-box design and application.

In the third layer, Rule 30 CA is employed for key generation, while Intel's MKL PRNG is utilized for S-box design and application.
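To illustrate how a Rule 30 CA can act as a key generator, the sketch below evolves a ring of cells under Rule 30 (each new cell equals left XOR (centre OR right)) and reads off the centre column, packing eight bits into each key byte. The ring width, the single-live-cell seed and the function names are assumptions made for this example, not the configuration used in this work.

```python
def rule30_centre_bits(num_bits: int, width: int = 257) -> list[int]:
    """Centre-column bits of Rule 30 started from a single live cell on a ring."""
    cells = [0] * width
    centre = width // 2
    cells[centre] = 1  # assumed seed: one live cell in the middle
    bits = []
    for _ in range(num_bits):
        bits.append(cells[centre])
        # Rule 30 with a periodic boundary; cells[i - 1] wraps around for i = 0
        cells = [cells[i - 1] ^ (cells[i] | cells[(i + 1) % width]) for i in range(width)]
    return bits


def rule30_key(num_bytes: int, width: int = 257) -> bytes:
    """Pack the centre-column bits, most significant bit first, into key bytes."""
    bits = rule30_centre_bits(8 * num_bytes, width)
    out = bytearray()
    for j in range(0, len(bits), 8):
        byte = 0
        for b in bits[j:j + 8]:
            byte = (byte << 1) | b
        out.append(byte)
    return bytes(out)
```

Starting from a single live cell, the first centre-column bits are 1, 1, 0, 1, 1. As long as the ring has at least $2n + 1$ cells for $n$ generated bits, the pattern never wraps around, so the periodic boundary does not affect the output.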

By utilizing a dynamical system with hyperchaotic behavior and selecting three S-boxes according to specific criteria, a very large key space of is achieved, thereby fending off brute-force attacks.

By optimizing the code efficiency, a superior encryption rate is achieved by the proposed image cryptosystem with an average encryption rate of Mbps.

This paper is organized as follows.

Section 2 presents the preliminary constructs and PRNGs utilized in the proposed image cryptosystem as well as the design and selection criteria for the S-boxes in use.

Section 3 describes the proposed image cryptosystem in detail, along with algorithms and flow charts.

Section 4 reports the attained numerical results and presents a comparative study with other state-of-the-art algorithms.

Finally, Section 5 presents the conclusions of this research work and suggests plausible future research directions that could be further pursued.

4. Numerical Results and Performance Evaluation

This section presents a full performance evaluation of the proposed image cryptosystem, together with a comparative study against other state-of-the-art image-encryption algorithms. The conducted analyses test the proposed image cryptosystem's ability to fend off attacks of various natures, including visual, statistical, entropy, differential and brute-force attacks.

We further measure how wide the key space is, how fast the cryptosystem performs image encryption and decryption, and whether it passes all the tests in the National Institute of Standards and Technology (NIST) test suite. The proposed image cryptosystem and its testing were implemented in the Wolfram Language, utilizing Wolfram Mathematica® v.13.2, on a machine with the following specifications: 2.9 GHz 6-Core Intel® Core™ i9 and 32 GB of 2400 MHz DDR4 RAM, running macOS Catalina v.10.15.7.

A number of commonly utilized images from the image processing community were employed. These include Lena, Mandrill, Peppers, Sailboat, House, House2 and Tree, all of dimensions , unless otherwise specified. The following subsections present the results of each of the conducted tests.

4.1. Human Visual System Examination and Histogram Analysis

The simplest performance evaluation of an image cryptosystem may be easily conducted by examining a plain image and its encrypted version employing the human visual system (HVS).

Figures 10–15 (including sub-figures) showcase a number of plain images and their encrypted versions as obtained through the application of the proposed image cryptosystem. No visual cues can be obtained from the encrypted images as to what their plain versions could be.

Furthermore, from a statistical perspective, the histograms of the plain images and their encrypted versions, which are provided in the same set of figures, also showcase excellent performance. While the histogram of every plain image clearly depicts unique statistical characteristics, those of the encrypted images show an almost uniform distribution, which cannot be traced back to any specific plain image. This signifies the ability of the proposed image cryptosystem to fend off attacks of a statistical nature.

4.2. Mean Squared Error

The mean squared error (MSE) between two images is a widely used performance evaluation metric for image-encryption algorithms. It is a measure of the difference between a plain image and its encrypted version. The purpose of any image cryptosystem is to scramble the image data in such a way that it becomes extremely difficult for an unauthorized third-party to access the original plain image. To evaluate the effectiveness of an image cryptosystem, it is thus necessary to compare the encrypted image with the plain image and to measure the difference between them.

The MSE is one of the most common methods of achieving this. It is a scalar value that measures the average of the squared differences between the pixel values of two images: the smaller the MSE value, the more similar the two images are. In image encryption, the goal is to make the encrypted image as different from the original image as possible, while still being able to decrypt it back to its original form.

A high MSE value between a plain image and its encrypted version indicates that the encryption process has been successful. The MSE is calculated as follows: given two images $I$ and $I'$ with the same dimensions $M \times N$, the squared differences between all pairs of corresponding pixels in the two images are summed and then divided by the total number of pixels in the image. Mathematically, it is expressed as:

$$\mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left[ I(i,j) - I'(i,j) \right]^2 \tag{19}$$

Table 4 displays the computed MSE values for different images. It also provides a comparison with other image cryptosystems in the state-of-the-art. It is shown that comparable performance is attained.
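The MSE definition above translates directly into a few lines of NumPy. The sketch assumes two 8-bit images of equal shape (for RGB images, the average runs over all channel values) and casts them to floating point before subtracting, so that unsigned-integer wrap-around cannot corrupt the squared differences. The function name is illustrative.

```python
import numpy as np


def mse(plain: np.ndarray, cipher: np.ndarray) -> float:
    """Mean squared error between two equally sized images."""
    if plain.shape != cipher.shape:
        raise ValueError("images must have the same dimensions")
    # cast to float so that uint8 subtraction cannot wrap around
    diff = plain.astype(np.float64) - cipher.astype(np.float64)
    return float(np.mean(diff ** 2))
```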

It is common to report the MSE and peak signal-to-noise ratio (PSNR) values jointly when assessing image cryptosystems, since the computation of the PSNR is based on the value of the MSE. Nevertheless, the authors of [60] only provided PSNR values without reporting MSE values, which is why Table 4 shows N/A entries under the heading of [60].

4.3. Peak Signal-to-Noise Ratio

The peak signal-to-noise ratio (PSNR) is based on the MSE discussed in Section 4.2. It relates the error magnitude to the peak value of a given signal. In this research work, this peak signal value is taken as the highest pixel intensity in an image, denoted $I_{\max}$. Therefore, for a given image $I$, the PSNR is mathematically expressed as:

$$\mathrm{PSNR} = 10 \log_{10} \left( \frac{I_{\max}^{2}}{\mathrm{MSE}} \right) \tag{20}$$

It is clear from (20) that the PSNR decreases as the MSE increases. Thus, for an encrypted image, the lower the PSNR value, the better.

Table 5 displays the computed PSNR values for the image cryptosystem proposed in this work as well as those reported in the literature by counterpart algorithms. It is clear that the achieved PSNR values are comparable to the state-of-the-art.
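Given an MSE value, the PSNR in (20) follows in a single line. The sketch below assumes 8-bit images, so the peak intensity defaults to 255, and returns infinity for identical images (MSE of zero); the function name is illustrative.

```python
import math


def psnr_from_mse(mse_value: float, peak: float = 255.0) -> float:
    """PSNR in dB for a given MSE; identical images (MSE = 0) give +inf."""
    if mse_value == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse_value)
```

For example, an MSE of 650.25 (one hundredth of $255^2$) gives 20 dB, and every tenfold increase in the MSE lowers the PSNR by a further 10 dB, which is why strongly distorted encrypted images yield low PSNR values.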

4.4. Mean Absolute Error

The mean absolute error (MAE) between a plain image and its encrypted version is the average absolute difference between the intensity values of corresponding pixels in the two images. It represents the average magnitude of the differences between the original and encrypted pixels and measures how thoroughly the encryption process obscures the visual information of the original image. The higher the MAE, the greater the difference between the encrypted image and the original plain image in terms of pixel intensity values, and the better the image cryptosystem is at distorting the original plain image information. It is represented mathematically as:

$$\mathrm{MAE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \left| I(i,j) - I'(i,j) \right| \tag{21}$$

where $I$ and $I'$ are two images of dimensions $M \times N$.

Table 6 displays the computed MAE values for the proposed image cryptosystem in comparison to other state-of-the-art algorithms. It is clear that the achieved MAE values are comparable to the state-of-the-art.
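A minimal NumPy sketch of (21), under the same assumptions as for the MSE (two equally sized 8-bit images, cast to floating point before subtraction), could look as follows; the function name is illustrative.

```python
import numpy as np


def mae(plain: np.ndarray, cipher: np.ndarray) -> float:
    """Mean absolute error between two equally sized images."""
    if plain.shape != cipher.shape:
        raise ValueError("images must have the same dimensions")
    # cast to float so that uint8 subtraction cannot wrap around
    diff = plain.astype(np.float64) - cipher.astype(np.float64)
    return float(np.mean(np.abs(diff)))
```

For 8-bit images the MAE lies between 0 (identical images) and 255 (every pixel flipped between black and white).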

4.5. Information Entropy

In the realm of grayscale images, Shannon's information entropy is used to quantify the randomness of an image's gray-level distribution. According to Shannon's theory, the information entropy is calculated as:

$$H = -\sum_{m=0}^{2^{M}-1} p(m) \log_2 p(m) \tag{22}$$

where $p(m)$ is the probability of occurrence of symbol $m$, while $M$ is the total number of bits for each symbol. As a grayscale image has 256 distinct values ($2^{8}$ possible symbols, with $M = 8$), the entropy of an encrypted image reaches a maximum of 8, attained when all gray levels are equally likely. Consequently, the entropy can be used to measure the unpredictability of encrypted images. Table 7 displays the entropy values computed for the image cryptosystem proposed in this work as well as for other state-of-the-art algorithms. The entropy values computed for the various images are extremely close to the ideal value of 8, indicating that the proposed image cryptosystem is resistant to entropy attacks. Moreover, the differences among the entropy values of the state-of-the-art algorithms are shown to be insignificant.
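The entropy in (22) can be estimated from an image's gray-level histogram. The sketch assumes an 8-bit single-channel image (an RGB image would be processed per channel) and skips empty histogram bins, following the usual convention $0 \log_2 0 = 0$; the function name is illustrative.

```python
import numpy as np


def shannon_entropy(image: np.ndarray) -> float:
    """Entropy, in bits, of the gray-level histogram of an 8-bit image."""
    counts = np.bincount(image.astype(np.uint8).ravel(), minlength=256)
    p = counts[counts > 0] / counts.sum()  # drop empty bins: 0*log2(0) is taken as 0
    return float(-np.sum(p * np.log2(p)))
```

An image in which all 256 gray levels occur equally often reaches the maximum of 8 bits, whereas a constant image has an entropy of 0.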

4.6. Fourier Transformation Analysis

The discrete Fourier transform (DFT) is a mathematical technique that transforms a discrete signal into its equivalent frequency representation. In the context of image encryption, the DFT can be used as a tool for analyzing the frequency content of an image. To transform an image from the spatial domain to the frequency domain, the DFT is applied as follows:

$$F(u,v) = \sum_{x=0}^{M-1} \sum_{y=0}^{N-1} f(x,y)\, e^{-j 2\pi \left( \frac{ux}{M} + \frac{vy}{N} \right)} \tag{23}$$

such that $f(x,y)$ is the spatial domain representation of an $M \times N$ image, where the exponential term is the basis function corresponding to each point $F(u,v)$ in the Fourier space. When applied to a plain image, the DFT separates the image into its constituent frequencies, which can be visualized as peaks in the frequency spectrum. This representation is useful for analyzing the image structure, as certain patterns and features can be identified by the presence of specific frequencies. On the other hand, when applied to an encrypted image, the result is a transformed representation of the encrypted data, which typically does not provide any useful information about the original image.

The encrypted data has been altered in a way that makes it difficult to extract any meaningful information—even after transforming it. The aim of any image cryptosystem is to render image content unintelligible, and DFT can help confirm this by showing that the transformed representation of the encrypted image is not representative of the original data.

Figure 15b,e display the DFT as applied to the plain Tree image and its encrypted version, respectively. In the plain image, spatial features such as edges and corners produce the plus-sign shape of the DFT, whereas the DFT of the encrypted version is distorted and lacks any such features.
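A log-magnitude spectrum of the kind shown in Figure 15b,e can be obtained with NumPy's FFT routines, which compute (23). The sketch assumes a single-channel image; centring the zero frequency with `np.fft.fftshift` and compressing the dynamic range with `np.log1p` are common display choices rather than part of the DFT itself.

```python
import numpy as np


def log_magnitude_spectrum(image: np.ndarray) -> np.ndarray:
    """Centred log-magnitude of the 2D DFT of a single-channel image."""
    spectrum = np.fft.fft2(image.astype(np.float64))
    # move the zero-frequency (DC) term to the centre, then compress the range
    return np.log1p(np.abs(np.fft.fftshift(spectrum)))
```

Plotting the returned array as an image gives the bright central cross of a natural image, whereas an encrypted image produces a spectrum that looks like uniform noise apart from the DC term.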

4.7. Correlation Coefficient Analysis

This assessment approach evaluates the consistency within a single image. Its objective is to assess the cohesiveness of pixels in close proximity, i.e., the prevalence of uniform regions relative to edge transitions. As a result, a rather high correlation coefficient is anticipated for plain images, which consist of more uniform regions than edges. Conversely, as substantial distortion is desired in encrypted images, a lower correlation coefficient is expected for them. The following set of equations mathematically describes how the pixel cross-correlation coefficient $r_{xy}$ is computed:

$$r_{xy} = \frac{\operatorname{cov}(x,y)}{\sqrt{D(x)\, D(y)}} \tag{24}$$

where

$$\operatorname{cov}(x,y) = \frac{1}{N} \sum_{i=1}^{N} \left( x_i - E(x) \right) \left( y_i - E(y) \right) \tag{25}$$

and

$$E(x) = \frac{1}{N} \sum_{i=1}^{N} x_i, \tag{26}$$

$$D(x) = \frac{1}{N} \sum_{i=1}^{N} \left( x_i - E(x) \right)^2, \tag{27}$$

with $x_i$ and $y_i$ denoting the intensities of the $i$-th pair of adjacent pixels and $N$ the number of pixel pairs considered.

Classically, this metric is computed for three directions: horizontal, vertical and diagonal, where an image with a strong pixel cross-correlation would typically yield a value close to 1, whereas a well-encrypted image would typically yield a value close to 0. Such values are well exemplified in Table 8, where the pixel correlation coefficients are computed and displayed for various plain images and their encrypted versions, each in three directions. Moreover, Table 9 and Table 10 provide numerical comparisons of the pixel correlation coefficients with other state-of-the-art algorithms for the Lena image, both in RGB format and for each of the separate color channels.

In addition to the numerical analysis offered by computing (24)–(27), the co-occurrence matrix can be plotted to visualize directional covariance. In images with natural visual characteristics, pixel values with high similarity are more likely to coexist, so the magnitudes within the matrix mostly follow a linear (diagonal) distribution. In contrast, for a well-encrypted image, a more uniform distribution of values is expected. To visually illustrate this, Figure 16 provides 2D plots of the pixel co-occurrence matrices for the plain and encrypted Tree image in three directions.

Clearly, sub-figures (a), (b) and (c) are diagonal in nature, reflecting strong pixel correlation in the plain image, unlike sub-figures (d), (e) and (f), which reflect a rather uniform distribution, signifying random pixel values. Not surprisingly, the same pixel correlation behavior is observed for each of the separate color channels of the Tree image, which are illustrated in Figures 17–19. Moreover, a similar 3D plot of the same metric is illustrated in Figure 15, where sub-figures (c) and (f) show the pixel correlation for the plain and encrypted Tree images, respectively.
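The adjacent-pixel correlation coefficients of (24)–(27) can be sketched in NumPy as follows. As an assumption, the sketch uses every adjacent pixel pair in the chosen direction (many works instead sample a random subset of pairs) and a single-channel image; the function name and direction labels are illustrative.

```python
import numpy as np


def adjacent_correlation(image: np.ndarray, direction: str) -> float:
    """Correlation coefficient between each pixel and its neighbour in one direction."""
    img = image.astype(np.float64)
    if direction == "horizontal":
        x, y = img[:, :-1], img[:, 1:]
    elif direction == "vertical":
        x, y = img[:-1, :], img[1:, :]
    elif direction == "diagonal":
        x, y = img[:-1, :-1], img[1:, 1:]
    else:
        raise ValueError("direction must be horizontal, vertical or diagonal")
    x, y = x.ravel(), y.ravel()
    cov = np.mean((x - x.mean()) * (y - y.mean()))  # cov(x, y) with a 1/N factor
    return float(cov / np.sqrt(x.var() * y.var()))  # np.var also uses 1/N, like D(x)
```

A smooth gradient image gives values close to 1 in all three directions, while an image of independent uniformly random pixels gives values close to 0.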

4.8. Differential Attack Analysis

This analysis evaluates the quality of an image-encryption algorithm based on the difference between the plain and encrypted images, as follows. An input plain image is compared to its encrypted version on a pixel-by-pixel basis to obtain a percentage indicating the change in color intensities resulting from the encryption procedure. Since an absence of resemblance between corresponding pixels in both images is sought, this evaluation must be performed pixel-by-pixel.

In addition, the aggregate rate of pixel change between the images is analyzed, indicating whether prevailing color-intensity similarities remain between them. The literature suggests two tests to satisfy these requirements: the number of pixels change rate (NPCR) for pixel-by-pixel comparison and the unified average changing intensity (UACI) for the evaluation of the average intensity difference.

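The NPCR gives the percentage of altered pixels, where two pixels are considered different unless they are strictly equal. For two images $C_1$ and $C_2$ of dimensions $M \times N$, the difference per pixel $D(x,y)$ (where $x$ and $y$ are the coordinates of the pixel) is defined as:

$$D(x,y) = \begin{cases} 0, & C_1(x,y) = C_2(x,y) \\ 1, & C_1(x,y) \neq C_2(x,y) \end{cases}$$

Thus, the NPCR is mathematically expressed as:

$$\mathrm{NPCR} = \frac{1}{MN} \sum_{x=1}^{M} \sum_{y=1}^{N} D(x,y) \times 100\%$$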

This means that a larger percentage reflects a more significant difference between the two images. As a significant difference is sought, the state-of-the-art suggests that is the target NPCR value for a well-encrypted image.

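Utilizing a different assessment lens, the UACI assesses the difference between two images in terms of their average intensity difference. For two 8-bit images $C_1$ and $C_2$ of dimensions $M \times N$, the UACI is mathematically expressed as:

$$\mathrm{UACI} = \frac{1}{MN} \sum_{x=1}^{M} \sum_{y=1}^{N} \frac{\left| C_1(x,y) - C_2(x,y) \right|}{255} \times 100\%$$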

The state-of-the-art considers an ideal value of about to reflect a well-encrypted image (with respect to the color range , is approximated to 85 steps of difference in intensity.)

For the proposed image cryptosystem, Table 11 displays the computed NPCR and UACI values for different images with average values corresponding to

and

, respectively, indicating very good NPCR and UACI performance. Furthermore, Table 12 presents a comparison with the literature for the three separate color channels of various images, where comparable performance is shown. Finally, Table 13 provides another comparison with the literature for the RGB Lena image, where comparable performance is again attained.
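Both differential metrics can be computed in a few lines of NumPy. The sketch below assumes two equally sized 8-bit images and returns NPCR and UACI as percentages, with the UACI normalized by 255; the function name is illustrative.

```python
import numpy as np


def npcr_uaci(c1: np.ndarray, c2: np.ndarray) -> tuple[float, float]:
    """NPCR and UACI, both in percent, between two equally sized 8-bit images."""
    if c1.shape != c2.shape:
        raise ValueError("images must have the same dimensions")
    a, b = c1.astype(np.float64), c2.astype(np.float64)
    npcr = 100.0 * np.mean(a != b)                 # mean of D(x, y) over all pixels
    uaci = 100.0 * np.mean(np.abs(a - b) / 255.0)  # average normalized intensity change
    return float(npcr), float(uaci)
```

For instance, if only the first row of an 8 × 8 all-black image is set to white, 8 of 64 pixels change by the full range, so both NPCR and UACI equal 12.5%.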

4.9. The National Institute of Standards and Technology Analysis

The National Institute of Standards and Technology (NIST) Special Publication (SP) 800 series provides guidelines, standards and best practices for various aspects of information security that also bear on image encryption [69]. The NIST SP 800 series is widely recognized as a leading source of information security guidance and is used by organizations in both the public and private sectors. For instance, NIST SP 800-60 guides organizations in mapping types of information, which may include image data, to security categories, and thereby in determining the level of protection that such data requires.

Moreover, NIST SP 800-63-3 (Digital Identity Guidelines) sets requirements for the use of biometrics, such as fingerprints, iris scans and facial recognition data, in digital authentication, where the security of biometric data and the associated privacy concerns must be addressed. Most directly relevant to this work, NIST SP 800-22 specifies a statistical test suite for assessing the randomness of binary sequences produced by random and pseudorandom number generators for cryptographic applications. This makes it of paramount importance to include a NIST analysis as part of the performance evaluation of any image cryptosystem.

The NIST test suite assesses the randomness of a bit stream through various statistical tests. For such a bit stream to pass all the tests, it needs to score a

p-value of at least

in all of them. Upon performing a NIST analysis on encrypted bit streams resulting from the proposed image cryptosystem, we find that they pass all NIST tests successfully. An example illustrates this in

Table 14, where all values pass the

threshold for randomness.
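As an illustration of what a single test in the suite computes, the sketch below implements the frequency (monobit) test described in NIST SP 800-22, which checks whether the proportions of ones and zeros in a bit stream are close to one half. It covers only one of the suite's tests, takes the bit stream as a string of '0'/'1' characters (an assumption of this sketch), and its function name is illustrative.

```python
import math


def monobit_p_value(bits: str) -> float:
    """Frequency (monobit) test p-value for a string of '0'/'1' characters."""
    n = len(bits)
    s_n = sum(1 if b == "1" else -1 for b in bits)  # +1 for every one, -1 for every zero
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))
```

For the ten-bit sequence 1011010101 (the worked example in SP 800-22), the function returns a p-value of about 0.527, whereas a stream consisting only of ones yields a p-value that is practically zero.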

4.10. Key Space Analysis

A key space analysis was performed to determine the number of distinct keys that may be employed in the cryptosystem. In this work, we assume that the transmitter and receiver pre-share the secret keys via a secure channel; the state-of-the-art provides useful key-establishment protocols for this purpose, such as in [70]. For the proposed image cryptosystem, the Chen hyperchaotic map provides 13 variables, while each of the encryption keys provides a single variable as a seed, in addition to the variables related to the S-box evaluation metrics, which are

.

This means that there is a total of

variables affecting the key space. With the maximum machine precision being

, the key space is calculated to be

. It is clear that the achieved key space is much larger than the previously considered safe threshold of

[

71]. This signifies that the proposed image cryptosystem is resistant to brute-force attacks.

Table 15 presents a comparison of the key spaces of various image cryptosystems in the state-of-the-art and displays how the proposed cryptosystem fares among them, showcasing its superior performance in that regard.
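The key space arithmetic can be sketched as follows: if each of $n$ real-valued key variables can be resolved to $d$ decimal digits, the number of distinct keys is $(10^{d})^{n}$, i.e. $n \cdot d \cdot \log_2 10$ bits. The function name and the precision used in the usage note are assumptions for illustration, not the values used in this work.

```python
import math


def key_space_bits(num_variables: int, decimal_digits: int) -> float:
    """log2 of the key space when each real-valued key variable is resolved to
    `decimal_digits` decimal places, i.e. log2((10**decimal_digits)**num_variables)."""
    return num_variables * decimal_digits * math.log2(10)
```

For instance, counting only the 13 variables of the Chen hyperchaotic map and assuming a hypothetical resolution of 15 decimal digits per variable, `key_space_bits(13, 15)` gives roughly 648 bits, i.e. about $2^{648}$ keys; every additional key variable adds another $d \log_2 10$ bits.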

4.11. Histogram Dependency Tests

In this testing category, the histograms of the plain and encrypted images are compared. Given two histograms, the comparisons assess the level of dependency between them. For the five evaluations conducted [76], the better the encryption, the lower the correlation between the two histograms and, thus, the lower the computed dependency value.

Accordingly, when the dependency coefficient is computed as a value in the range $[-1, 1]$, it should be as close as possible to 0, since both 1 and $-1$ reflect a significant dependency in magnitude (aside from the direction indicated by the sign). While the field of statistics could lend the field of image processing a myriad of dependency evaluation metrics, five tests were performed in this research work: Blomqvist $\beta$, Goodman–Kruskal $\gamma$, Kendall $\tau$, Spearman $\rho$ and Pearson correlation $r$ [77].

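- 1. Blomqvist $\beta$ evaluates the correlation between two histograms $X$ and $Y$ with respect to their medians $\tilde{x}$ and $\tilde{y}$, respectively. It is mathematically expressed as:

$$\beta = \frac{1}{n} \sum_{i=1}^{n} \operatorname{sgn}\left[ \left( x_i - \tilde{x} \right) \left( y_i - \tilde{y} \right) \right]$$

where $n$ is the number of histogram bins. With the median as a reference point, each pair of corresponding elements across the two histograms either falls on the same side of the respective medians or not.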

- 2. The Goodman–Kruskal $\gamma$ measure of monotonic association is computed in a pairwise fashion, which requires converting the two histograms into a single set of pairs $(x_i, y_i)$. When two such pairs are compared, they are either concordant with the correlation ($n_c$ such comparisons) or discordant with it ($n_d$ such comparisons). The Goodman–Kruskal correlation is mathematically expressed as:

$$\gamma = \frac{n_c - n_d}{n_c + n_d}$$

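- 3. Kendall $\tau$ evaluates correlation based on the numbers of concordant and discordant pairs, $n_c$ and $n_d$, and the sample size $n$. It is mathematically expressed as:

$$\tau = \frac{n_c - n_d}{\frac{1}{2} n (n - 1)}$$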

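- 4. The Spearman rank correlation $\rho$ test relates the position of each element in a sorted histogram to the mean rank value. It is mathematically expressed as:

$$\rho = \frac{\sum_{i=1}^{n} \left( R(x_i) - \bar{R}(x) \right) \left( R(y_i) - \bar{R}(y) \right)}{\sqrt{\sum_{i=1}^{n} \left( R(x_i) - \bar{R}(x) \right)^2 \sum_{i=1}^{n} \left( R(y_i) - \bar{R}(y) \right)^2}}$$

such that $x$ and $y$ are the two variables to be evaluated, $R(l_i)$ is the rank of element $i$ in list $l$, and $\bar{R}(l)$ is the average of the ranks of $l$.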

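- 5. Pearson correlation $r$ relates the elements of the histograms directly to their means. It is mathematically expressed as:

$$r = \frac{\sum_{i=1}^{n} \left( x_i - \bar{x} \right) \left( y_i - \bar{y} \right)}{\sqrt{\sum_{i=1}^{n} \left( x_i - \bar{x} \right)^2 \sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2}}$$

such that $\bar{x}$ and $\bar{y}$ are the means of the histograms $X$ and $Y$, respectively.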

Table 16 presents the resulting values of running the five tests on a number of images. As all scores are close to 0, the dependency is shown to be minimal, showcasing the excellent pixel dispersion quality of the proposed image cryptosystem.
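The five dependency measures can be sketched in plain NumPy, applied for example to the 256-bin histograms of a plain image and its encrypted version. The sketch assumes the sample forms given above: Kendall $\tau$ is the tau-a variant that uses $n_c$, $n_d$ and $n$; tied pairs count as neither concordant nor discordant; Blomqvist $\beta$ is the sign-based medial form; and Spearman $\rho$ assigns tied values their average rank. The pairwise comparison is $O(n^2)$, which is unproblematic for 256 bins. All function names are illustrative.

```python
import numpy as np


def _as_float(v) -> np.ndarray:
    return np.asarray(v, dtype=np.float64)


def _concordance(x, y) -> tuple[int, int]:
    """Counts of concordant and discordant pairs (i < j); ties count as neither."""
    x, y = _as_float(x), _as_float(y)
    s = (np.sign(x[:, None] - x[None, :]) * np.sign(y[:, None] - y[None, :]))
    s = s[np.triu_indices(len(x), k=1)]
    return int(np.sum(s > 0)), int(np.sum(s < 0))


def goodman_kruskal_gamma(x, y) -> float:
    nc, nd = _concordance(x, y)
    return (nc - nd) / (nc + nd)


def kendall_tau_a(x, y) -> float:
    nc, nd = _concordance(x, y)
    n = len(x)
    return (nc - nd) / (n * (n - 1) / 2)


def blomqvist_beta(x, y) -> float:
    x, y = _as_float(x), _as_float(y)
    return float(np.mean(np.sign((x - np.median(x)) * (y - np.median(y)))))


def pearson_r(x, y) -> float:
    return float(np.corrcoef(_as_float(x), _as_float(y))[0, 1])


def average_ranks(v) -> np.ndarray:
    """1-based ranks, with tied values sharing their average rank."""
    v = _as_float(v)
    order = np.argsort(v, kind="mergesort")
    sorted_v = v[order]
    ranks = np.empty(len(v))
    i = 0
    while i < len(v):
        j = i
        while j + 1 < len(v) and sorted_v[j + 1] == sorted_v[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x, y) -> float:
    return pearson_r(average_ranks(x), average_ranks(y))
```

Typical use is `spearman_rho(np.bincount(plain.ravel(), minlength=256), np.bincount(cipher.ravel(), minlength=256))` for 8-bit arrays `plain` and `cipher`; perfectly increasing or decreasing relationships give 1 or $-1$ in all five measures.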

4.12. Execution Time Analysis

An image cryptosystem's processing time, with regard to its encryption and decryption times, is a crucial performance evaluation metric because (a) it reflects the efficiency of running an algorithm and its ability to handle large-scale image encryption and decryption; (b) it reflects how well an image cryptosystem handles resource constraints, where the algorithm is expected to run on mobile and hand-held devices with low processing power; (c) it reflects the possibility (or lack thereof) of scalability, which is important as some algorithms perform well on small images but degrade as the image size grows; and (d) it allows for a comparison with state-of-the-art algorithms as part of the trade-off between security performance and implementation complexity.

Table 17 displays the execution times for various square dimensions of the House image. For an image of dimensions

, a very short time of less than half a second is reported. It is also clear that there is a linear increase in time with increases in the image dimensions. Moreover,

Table 18 presents an execution time comparison with other state-of-the-art algorithms. It is clear that the proposed cryptosystem exhibits superior performance in that regard. It is worth mentioning here that execution times are not solely dependent on the complexity of an image cryptosystem.

Other factors that directly influence the execution times include the available processing power and random access memory (RAM), the programming language or software of choice and, finally, the operating system. Typically, whenever execution times are reported in the literature, information is provided regarding the machine’s processor, RAM and the software used to implement the image cryptosystem. The absence of such information, as in [75], is rather unusual. The proposed image cryptosystem and the algorithms provided in [8,20,32] are implemented in the Wolfram language using Wolfram Mathematica®, while the algorithms provided in [60,75,78,79] use MathWorks MATLAB®. The mean processing (encryption) rate of the proposed image cryptosystem was Mbps.
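The following Python sketch illustrates one way to obtain an average execution time and convert it into an encryption rate in Mbps. It is not the authors' measurement procedure: the function names are hypothetical, 1 Mb is assumed to equal 10^6 bits, an 8-bit grayscale image is assumed by default, and the XOR loop is only a stand-in workload for an actual encryption routine. As noted above, any such figure should be reported together with the processor, RAM, software and operating system used.

```python
import time
from typing import Callable


def mean_seconds(fn: Callable[[], object], runs: int = 10) -> float:
    """Average wall-clock time of fn() over `runs` calls, using a monotonic high-resolution clock."""
    if runs < 1:
        raise ValueError("runs must be >= 1")
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def throughput_mbps(width: int, height: int, seconds: float,
                    channels: int = 1, bits_per_sample: int = 8) -> float:
    """Encryption rate in megabits per second, assuming 1 Mb = 10**6 bits."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    bits = width * height * channels * bits_per_sample
    return bits / seconds / 1e6


if __name__ == "__main__":
    # Hypothetical stand-in workload; replace with a call to a real encryption routine.
    data = bytes(range(256)) * 1024  # 262144 bytes, the size of a 512 x 512 8-bit image
    t = mean_seconds(lambda: bytes(b ^ 0x5A for b in data), runs=3)
    print(f"{t:.4f} s per run, {throughput_mbps(512, 512, t):.2f} Mbps")
```

Averaging over several runs reduces the influence of one-off effects such as caching, which is one reason reported rates are usually means rather than single measurements.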

4.13. S-Box Performance Analysis

Given the enormous number of candidate S-boxes (there are 256! bijective 8-bit S-boxes alone), performance evaluation metrics must be employed to gauge candidate S-boxes and make an informed decision on which would exhibit the best confusion properties. Five metrics are commonly used in the literature for this purpose:

- 1.

Nonlinearity [80] measures the minimum Hamming distance between the component Boolean functions of an S-box and the set of all affine functions, and hence its resistance to linear approximation (ideal value of 120; however, the highest value commonly reported in the state-of-the-art is 112).

- 2.

Linear approximation probability (LAP) [81] measures the maximum imbalance (bias) of any linear relation between the input and output bits of an S-box, i.e., its resistance to linear cryptanalysis (the ideal value being ).

- 3.

Differential approximation probability (DAP) [82] measures the maximum probability that a given nonzero input difference produces a given output difference in the confused output, i.e., the resistance of the S-box to differential cryptanalysis (the ideal value being ).

- 4.

Bit independence criterion (BIC) [83] evaluates whether pairs of output bits change independently of each other when any single input bit is inverted (the ideal value being 112).

- 5.

Strict avalanche criterion (SAC) [83] measures the probability that each output bit changes when a single input bit is inverted (the ideal value being 0.5).
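The Python sketch below shows one common way to compute these five metrics for a bijective 8-bit S-box given as a list of 256 integers. It is illustrative only and is not the authors' Wolfram implementation; the normalizations are assumptions, since papers differ: nonlinearity is the minimum over all nonzero output masks of 128 - max|W|/2 (W being the Walsh spectrum), BIC is reported as the minimum nonlinearity of the XOR of two distinct output bits, LAP is max|W|/512 over nonzero masks, DAP is the largest entry of the difference distribution table divided by 256, and SAC is averaged over all input/output bit pairs. The AES S-box, rebuilt from its standard definition (GF(2^8) inverse followed by the AES affine map), serves as a reference input.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply in GF(2^8) modulo the AES polynomial x^8 + x^4 + x^3 + x + 1 (0x11B)."""
    p = 0
    while b:
        if b & 1:
            p ^= a
        a = (a << 1) ^ (0x11B if a & 0x80 else 0)
        b >>= 1
    return p


def aes_sbox() -> list:
    """Reference AES S-box: multiplicative inverse followed by the AES affine map."""
    inv = [0] * 256
    for x in range(1, 256):
        inv[x] = next(y for y in range(1, 256) if gf_mul(x, y) == 1)
    rotl = lambda v, n: ((v << n) | (v >> (8 - n))) & 0xFF
    return [v ^ rotl(v, 1) ^ rotl(v, 2) ^ rotl(v, 3) ^ rotl(v, 4) ^ 0x63 for v in inv]


def walsh(truth_table: list) -> list:
    """Fast Walsh-Hadamard transform of a Boolean function given as 0/1 values."""
    w = [1 - 2 * bit for bit in truth_table]  # 0 -> +1, 1 -> -1
    h = 1
    while h < len(w):
        for i in range(0, len(w), 2 * h):
            for j in range(i, i + h):
                w[j], w[j + h] = w[j] + w[j + h], w[j] - w[j + h]
        h *= 2
    return w


def parity(v: int) -> int:
    return bin(v).count("1") & 1


def linear_metrics(sbox: list) -> tuple:
    """Return (nonlinearity, BIC nonlinearity, LAP) over all nonzero output masks b."""
    size = len(sbox)
    nl_min = bic_min = size
    lap_peak = 0
    for b in range(1, size):
        spectrum = walsh([parity(b & s) for s in sbox])  # component f_b(x) = b . S(x)
        nl = size // 2 - max(abs(v) for v in spectrum) // 2
        nl_min = min(nl_min, nl)
        if bin(b).count("1") == 2:  # XOR of two distinct output bits
            bic_min = min(bic_min, nl)
        lap_peak = max(lap_peak, max(abs(v) for v in spectrum[1:]))  # input mask a != 0
    return nl_min, bic_min, lap_peak / (2 * size)


def dap(sbox: list) -> float:
    """Maximum probability that a nonzero input difference yields a given output difference."""
    size = len(sbox)
    best = 0
    for dx in range(1, size):
        counts = [0] * size
        for x in range(size):
            counts[sbox[x] ^ sbox[x ^ dx]] += 1
        best = max(best, max(counts))
    return best / size


def sac(sbox: list) -> float:
    """Average probability that an output bit flips when one input bit is flipped."""
    size = len(sbox)
    n = size.bit_length() - 1  # 8 for a 256-entry S-box
    flips = sum(bin(sbox[x] ^ sbox[x ^ (1 << i)]).count("1")
                for i in range(n) for x in range(size))
    return flips / (n * n * size)


if __name__ == "__main__":
    box = aes_sbox()
    print(linear_metrics(box), dap(box), round(sac(box), 4))
```

For the AES S-box, this yields a nonlinearity and BIC nonlinearity of 112 (consistent with the AES row of Table 20), a LAP of 0.0625 and a DAP of 4/256 = 0.015625; any of the proposed S-boxes can be passed to the same functions in place of `aes_sbox()`.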

Table 19 displays the results of computing these five metrics for the proposed S-boxes (displayed earlier in Table 1, Table 2 and Table 3), alongside the ideal value for each metric. The OpenSSL S-box performs best, lying closest to the set of ideal values. Furthermore, Table 20 compares the proposed S-boxes with a number of S-boxes used in other state-of-the-art algorithms.

The proposed S-boxes achieve performance comparable to these. It is worth noting that the main advantage of using the three proposed S-boxes is that they increase the number of variables in the key space by 15, as explained earlier in Section 2.3.4. While near-optimal S-box performance evaluation metrics were pursued, other important S-box design criteria (e.g., avoiding short ring cycles and fixed points [84,85]) were not considered in this work.

| Metric | Optimal | MT | OpenSSL | Intel’s MKL |
|---|---|---|---|---|
| Nonlinearity | 112 | 108 | 108 | 108 |
| SAC | | | | |
| BIC | 112 | 92 | 112 | 104 |
| LAP | | | | |
| DAP | | | | |

Table 20.

Comparison among the proposed S-boxes and those reported in the state-of-the-art.


| S-box | NL | SAC | BIC | LAP | DAP |
|---|---|---|---|---|---|
| Proposed, MT | 108 | | 92 | | |
| Proposed, OpenSSL | 108 | | 112 | | |
| Proposed, Intel’s MKL | 108 | | 104 | | |
| AES [17] | 112 | | 112 | | |
| Khan et al. [30] | 111 | | 110 | | |
| Zahid et al. [86] | 107 | | | | |
| Aboytes et al. [87] | 112 | | 112 | | |
| Hayat et al. [88] | 100 | | | | |
| Nasir et al. (S4) [89] | 112 | | 112 | | |

4.14. Various Cryptanalyses and Noise Attacks

Table 21 provides a brief description of various forms of cryptanalysis that could be used to attack an image cryptosystem. Because the proposed image cryptosystem uses a three-layered SPN, none of the attacks in Table 21 would be effective against it.

In an occlusion attack, a considerable portion of the encrypted image is lost during transmission. Using the same set of keys, the decryption process then attempts to retrieve the original plain image from the damaged encrypted image, so some of the restored image’s information is lost. Nonetheless, the restored image may retain most of the visual information needed to recognize the original image. The effect of an occlusion attack on an encrypted image produced by the proposed image cryptosystem is depicted in Figure 20. When one-fourth of the cipher image is lost during transmission, the decryption technique still recovers enough visual information to comprehend the content of the original plain image and identify it as the House image. As expected, Figure 20 also shows that increasing the occluded fraction yields a more degraded decrypted image.
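To reproduce an occlusion test of this kind, the damaged ciphertext can be generated as in the following Python sketch and then passed to the decryption routine, which is not reproduced here. The function name, the placement of the lost block in the top-left corner and the fill value of 0 are assumptions; the text does not state which region of the cipher image is removed.

```python
import math


def occlude(cipher, fraction, fill=0):
    """Copy a grayscale image (list of pixel rows) and overwrite a top-left block
    covering roughly `fraction` of its area with `fill`, simulating lost data."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must lie in [0, 1]")
    height, width = len(cipher), len(cipher[0])
    scale = math.sqrt(fraction)  # same scale on both axes keeps the image's aspect ratio
    rows, cols = round(height * scale), round(width * scale)
    damaged = [row[:] for row in cipher]  # leave the input image untouched
    for r in range(rows):
        for c in range(cols):
            damaged[r][c] = fill
    return damaged


if __name__ == "__main__":
    cipher = [[(7 * r + 13 * c) % 255 + 1 for c in range(8)] for r in range(8)]  # toy data, no zeros
    damaged = occlude(cipher, 0.25)
    lost = sum(1 for r in range(8) for c in range(8) if damaged[r][c] != cipher[r][c])
    print(f"{lost} of 64 pixels occluded")
```

With a fraction of 0.25 on a square image, the block is a corner quadrant; sweeping the fraction upward reproduces the progressively degraded cases discussed above.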

In a noise attack, some of the pixel values of the encrypted image are altered during transmission owing to channel impairments.

Figure 21 depicts the effect of a noise attack in which salt-and-pepper noise is applied to encrypted images produced by the proposed cryptosystem. When the noisy encrypted images are decrypted, the resulting images retain the visual information of the original image, indicating that the cryptosystem is resistant to salt-and-pepper noise attacks.

Figure 22 recreates the same scenario for a Gaussian noise attack. Figure 21 and Figure 22 show that the decrypted image, while still identifiable as the House image, degrades as the fraction of affected pixels increases (salt-and-pepper noise) or as the standard deviation increases (Gaussian noise).
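The channel impairments of Figure 21 and Figure 22 can be simulated on an 8-bit grayscale encrypted image as in the following Python sketch; the noisy result would then be decrypted with the proposed scheme, which is not reproduced here. The function names, the equal split between salt (255) and pepper (0), and the rounding and clipping of Gaussian noise to the range [0, 255] are assumptions rather than details stated in the text.

```python
import random


def salt_and_pepper(img, density, rng):
    """Replace each pixel, with probability `density`, by 0 (pepper) or 255 (salt)."""
    return [[rng.choice((0, 255)) if rng.random() < density else p for p in row]
            for row in img]


def gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise of standard deviation `sigma`, then round and clip to [0, 255]."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in img]


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed for reproducible experiments
    cipher = [[(31 * r + 17 * c) % 256 for c in range(16)] for r in range(16)]  # toy data
    noisy_sp = salt_and_pepper(cipher, 0.05, rng)
    noisy_g = gaussian_noise(cipher, 10.0, rng)
    changed = sum(a != b for ra, rb in zip(cipher, noisy_sp) for a, b in zip(ra, rb))
    print(changed, "pixels changed by salt-and-pepper noise")
```

Increasing `density` or `sigma` corresponds to the stronger attacks under which the decrypted House image is reported to degrade.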

5. Conclusions and Future Works

This research work proposed a novel image cryptosystem that uses a multiple-layer encryption network. For every layer, an encryption key and an S-box were generated and used. Design ideas for the encryption keys and S-boxes were drawn from the 4D fractional-order dynamical Chen system, the Mersenne Twister, OpenSSL, Rule 30 cellular automata and, finally, Intel’s MKL. The use of the hyperchaotic Chen map and the three PRNGs introduced a large number of variables, which expanded the key space to . This is indeed one of the differentiating advantages of the proposed image cryptosystem over other state-of-the-art algorithms.

Another such advantage is its superior efficiency, encrypting images at an average rate of Mbps. Moreover, the attained security level of the proposed image cryptosystem is high, not only in quantitative terms (as exhibited by performance evaluation metrics that are comparable, and sometimes superior, to those of the state-of-the-art) but also from a qualitative aspect. Quantitatively, the proposed image cryptosystem achieves the following average values for key performance metrics: MSE of , PSNR of dB, MAE of , entropy of , NPCR of and UACI of .

Qualitatively, other state-of-the-art algorithms typically implement one and a half layers (i.e., a permutation, a substitution and a final permutation), whereas the proposed image cryptosystem implements twice that (three layers) while maintaining excellent code efficiency. Furthermore, inspection of the encrypted images by the HVS reveals no information about the original plain image. The proposed cryptosystem was also shown to resist various cryptanalytic attacks, and its decrypted images remained recognizable under occlusion and noise attacks.

Future research could take more than one direction. First, while incorporating the S-box performance evaluation metrics into the encryption key itself has greatly enlarged the key space, it has inadvertently led to the use of sub-optimal S-boxes. Nevertheless, the performance of the proposed image cryptosystem was not affected by this owing to the multiple-layer encryption network. Still, further improvements could have been attained had better-performing S-boxes been chosen.

Second, some studies indicate that, while the Mersenne Twister offers excellent general-purpose PRNG performance, other PRNGs could offer improved performance in cryptographic applications [90]; indeed, the Mersenne Twister is not designed to be cryptographically secure. Once again, this might not have affected the performance of the proposed image cryptosystem owing to the multiple-layer encryption network. Accordingly, future works could replace the Mersenne Twister with PRNGs of higher cryptographic strength and check for any noticeable overall improvement in the image cryptosystem.