Abstract

This article introduces a new numerical algorithm for solving the most general form of variable-order fractional partial differential models, in which both the temporal and the spatial orders of differentiation are non-constant. The solution is approximated by a combination of shifted Chebyshev polynomials whose coefficients are functions of time and are determined by the collocation method. The theoretical aspects of the method are investigated, and its efficiency is demonstrated on several test problems.

1. Introduction

Fractional-order integration and differentiation constitute the essence of fractional calculus. Since the middle of the twentieth century, fractional calculus has had an important impact in many different areas, several of which are discussed in the book by Diethelm [1]. In fractional calculus, the order of the integration or differentiation operator is usually a constant positive real number, e.g., $\alpha > 0$.

In 1993, Ross and Samko [2] introduced fractional integration and differentiation operators of variable order, whose order is a function of t, i.e., $\alpha(t)$, and can therefore vary from point to point. In this way, they extended the constant-order fractional derivative to a variable-order one. Their work was followed by several researchers, most of whom focused on the theoretical aspects of the subject. Samko [3] presented a thorough investigation of fractional integration and differentiation of variable order, and Sun et al. [4] carried out a comparative analysis of constant-order and variable-order fractional models. A review of variable-order fractional calculus is given in [5], and the variable-order fractional calculus of variations is treated in [6]. Chechkin et al. [7] derived a time-fractional diffusion equation with a spatially varying order of the time derivative, starting from a continuous-time random walk with a space-dependent waiting-time probability density function. Zheng et al. [8] investigated the solution regularity of a well-posed variable-order time-fractional reaction–diffusion model with a Mittag–Leffler kernel. In practical problems, a constant-order fractional derivative cannot adequately capture memory effects that change with time; this limitation motivates the use of variable-order fractional operators. Recently, Guo and Zheng [9] investigated a variable-order time-fractional diffusion equation with a Mittag–Leffler kernel.

Variable-order fractional partial differential equations (VOFPDEs) play important roles in areas such as mechanics [10] and viscoelasticity [11]. Consequently, several methods have been proposed to solve VOFPDEs, e.g., the operational matrix method [12], approximation by Jacobi [13] and Chebyshev polynomials [14], the spectral method [15], approximation by Legendre wavelets [16], the finite difference method [17], the reproducing kernel approach [18], and approximation by Bernstein polynomials [19].

The following general form for a space-time VOFPDE is considered in this article:

and the initial/boundary conditions are considered as:

where , , , or and . Also, , , , , , , and are sufficiently smooth known functions.

To approximate the solution of Equation (1), we employ a combination of shifted Chebyshev polynomials, which yields a semi-analytical solution. The unknown coefficients of this combination are determined by the collocation method.

2. Background Information

This section presents the definitions and notation needed in the subsequent discussion and analysis.

2.1. Fractional Derivative Operator

Here, we focus on the variable-order Caputo fractional derivative, whose order depends on time or space. Variable-order fractional operators are emphasized because they can describe many complex real-world problems more accurately than constant-order ones; comparative studies supporting this are given in [20,21,22]. Motivated by these considerations, we state the following definition of the variable-order Caputo fractional derivative.

Definition 1.

The variable-order Caputo fractional derivative is defined as:

where $\Gamma(\cdot)$ denotes the Gamma function.
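As a minimal numerical illustration of Definition 1, the sketch below evaluates a variable-order Caputo derivative in time for orders $0<\alpha(t)<1$. It assumes the common pointwise form $\frac{1}{\Gamma(1-\alpha(t))}\int_0^t (t-\tau)^{-\alpha(t)}u'(\tau)\,d\tau$, in which the order is evaluated at the current time; the exact formula of Definition 1 (which may also involve x) is not reproduced here. The names `caputo_vo` and `alpha_fn` and the sample order $\alpha(t)=0.3+0.4t$ are hypothetical.

```python
import math
import numpy as np

def caputo_vo(du, t, alpha_fn, n_quad=64):
    """Variable-order Caputo derivative of u at time t, assuming 0 < alpha(t) < 1.

    du       : callable returning u'(tau), vectorised over NumPy arrays
    alpha_fn : callable returning the order alpha(t) (hypothetical input)
    """
    alpha = alpha_fn(t)                      # order frozen at the evaluation point
    beta = 1.0 - alpha
    # The substitution s = (t - tau)**beta removes the weak singularity:
    #   int_0^t (t - tau)**(-alpha) u'(tau) dtau
    #     = (1/beta) * int_0^{t**beta} u'(t - s**(1/beta)) ds
    upper = t ** beta
    nodes, weights = np.polynomial.legendre.leggauss(n_quad)
    s = 0.5 * upper * (nodes + 1.0)          # map Gauss nodes from [-1, 1] to [0, upper]
    integral = 0.5 * upper * np.sum(weights * du(t - s ** (1.0 / beta)))
    # beta * Gamma(beta) == Gamma(1 + beta) == Gamma(2 - alpha)
    return integral / (beta * math.gamma(beta))


# Example with u(t) = t**2 and a hypothetical order alpha(t) = 0.3 + 0.4 t.
alpha_fn = lambda t: 0.3 + 0.4 * t
t0 = 0.8
numeric = caputo_vo(lambda tau: 2.0 * tau, t0, alpha_fn)
a0 = alpha_fn(t0)
exact = math.gamma(3) / math.gamma(3 - a0) * t0 ** (2 - a0)
```

For $u(t)=t^2$ the quadrature agrees with the closed form $\Gamma(3)/\Gamma(3-\alpha)\,t^{2-\alpha}$; the change of variable lets plain Gauss–Legendre quadrature handle the weakly singular kernel.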

Remark 1.

By referring to Definition 1 and taking , we have:

where , and .
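The formula of Remark 1 did not survive extraction. Assuming it is the standard Caputo power rule, $D^{\alpha}x^{k}=\frac{\Gamma(k+1)}{\Gamma(k+1-\alpha)}x^{k-\alpha}$ for integers $k\ge\lceil\alpha\rceil$ and $0$ for smaller $k$, applied with the variable order evaluated at the point, a small helper could look like this (`caputo_power` and the sample order are hypothetical):

```python
import math

def caputo_power(k, x, alpha):
    """Caputo derivative of x**k (k a non-negative integer) of order alpha > 0 at x > 0.

    Standard power rule: Gamma(k+1)/Gamma(k+1-alpha) * x**(k-alpha) for k >= ceil(alpha),
    and 0 for integer k < ceil(alpha). For a variable order, pass alpha = alpha(x, t)
    evaluated at the point of interest.
    """
    if k < math.ceil(alpha):
        return 0.0
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * x ** (k - alpha)


# Hypothetical variable order alpha(x) = 0.5 + 0.25 x applied to x**2.
alpha_fn = lambda x: 0.5 + 0.25 * x
values = [caputo_power(2, x, alpha_fn(x)) for x in (0.2, 0.5, 0.9)]
```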

2.2. Shifted Chebyshev Polynomials

Operational matrices of fractional-order derivatives have been investigated extensively in previous studies, e.g., [23,24]. In the present research, we adopt a similar approach to derive the variable-order Caputo derivative operator using shifted Chebyshev polynomials. To this end, we consider the following weighted $L^2$-spaces:

where the weight function is measurable and positive, and . The associated inner product and norm are defined as follows:

Two functions are orthogonal when their inner product is zero. A family of orthogonal polynomials , each of degree k, can be obtained by applying the well-known Gram–Schmidt process to the monomial basis $\{1, x, x^{2}, \dots\}$.
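The Gram–Schmidt construction can be sketched with NumPy. Here the weight is taken to be the Chebyshev weight $w(x)=1/\sqrt{1-x^2}$ on $[-1,1]$ (an illustrative choice), and the weighted inner product is evaluated with Gauss–Chebyshev quadrature, which is exact for the polynomial degrees involved. Orthogonalizing the monomials should then reproduce the monic Chebyshev polynomials $T_k/2^{k-1}$ for $k\ge1$. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev, Polynomial

def cheb_inner(p, q, n_nodes=64):
    """(p, q)_w with w(x) = 1/sqrt(1 - x^2) on [-1, 1].

    Gauss-Chebyshev quadrature with n nodes is exact for polynomial
    integrands of degree <= 2n - 1.
    """
    j = np.arange(1, n_nodes + 1)
    x = np.cos((2 * j - 1) * np.pi / (2 * n_nodes))
    return np.pi / n_nodes * np.sum(p(x) * q(x))

def gram_schmidt(n, inner):
    """Orthogonalize 1, x, ..., x^n with respect to `inner` (modified Gram-Schmidt)."""
    basis = []
    for k in range(n + 1):
        p = Polynomial([0.0] * k + [1.0])        # the monomial x^k
        for q in basis:
            p = p - q * float(inner(p, q) / inner(q, q))
        basis.append(p)
    return basis

monic = gram_schmidt(5, cheb_inner)

# Compare with T_k / 2^(k-1) on a few sample points.
xs = np.linspace(-1.0, 1.0, 11)
max_dev = max(np.max(np.abs(monic[k](xs) - Chebyshev.basis(k)(xs) / 2 ** (k - 1)))
              for k in range(1, 6))
```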

As shown in [25], for any function there is a unique best-approximating polynomial of degree at most N, which can be written explicitly as:

Among orthogonal polynomials on the interval $[-1,1]$, the Chebyshev polynomials are of particular significance. Their properties in approximation theory and scientific computing have attracted considerable interest and extensive study. The Chebyshev polynomials of the first kind, denoted by $T_k(x)$, are eigenfunctions of the singular Sturm–Liouville problem:

$$\frac{d}{dx}\left(\sqrt{1-x^{2}}\,\frac{dT_k(x)}{dx}\right)+\frac{k^{2}}{\sqrt{1-x^{2}}}\,T_k(x)=0,\qquad -1<x<1.$$

The Chebyshev polynomials of the first kind can also be generated by the following recurrence formula:

$$T_{k+1}(x)=2x\,T_k(x)-T_{k-1}(x),\qquad k\ge 1,$$

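where the recurrence starts from the zero-order polynomial $T_0(x)=1$ and the first-order polynomial $T_1(x)=x$. With the weight function $w(x)=1/\sqrt{1-x^{2}}$, these polynomials are orthogonal, that is,

$$\int_{-1}^{1}T_i(x)\,T_j(x)\,w(x)\,dx=\begin{cases}\pi, & i=j=0,\\ \pi/2, & i=j\ge 1,\\ 0, & i\ne j.\end{cases}$$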
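The three-term recurrence $T_{k+1}=2xT_k-T_{k-1}$ and the orthogonality relation can be checked numerically. The sketch below builds $T_0,\dots,T_n$ from the recurrence, compares them with $\cos(k\arccos x)$, and forms the Gram matrix with an $m$-point Gauss–Chebyshev rule, which is exact for polynomial integrands of degree up to $2m-1$. The helper name `chebyshev_T` is hypothetical.

```python
import numpy as np

def chebyshev_T(n, x):
    """Return the array [T_0(x), ..., T_n(x)] built from T_{k+1} = 2x T_k - T_{k-1}."""
    x = np.asarray(x, dtype=float)
    T = [np.ones_like(x), x.copy()]
    for k in range(1, n):
        T.append(2.0 * x * T[k] - T[k - 1])
    return np.array(T[: n + 1])

# Gauss-Chebyshev nodes; the rule is exact here because 2n <= 2m - 1.
n, m = 6, 64
xj = np.cos((2 * np.arange(1, m + 1) - 1) * np.pi / (2 * m))
T = chebyshev_T(n, xj)
gram = np.pi / m * T @ T.T   # entries approximate int T_i T_j / sqrt(1 - x^2) dx
```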

In approximation theory, the roots of Chebyshev polynomials are widely used as nodes for polynomial interpolation. This choice mitigates Runge’s phenomenon and yields an interpolating polynomial that is close to the best polynomial approximation of a continuous function in the maximum norm. Moreover, Chebyshev polynomials provide a stable representation on the interval $[-1,1]$, where they form a complete set: any function that is square integrable with respect to the Chebyshev weight can be expanded as $f(x)=\sum_{k=0}^{\infty}a_kT_k(x)$ in terms of the Chebyshev polynomials $T_k$. For a function that is infinitely differentiable on $[-1,1]$, the expansion coefficients decay faster than any power of $n$ as $n$ increases, and exponentially fast if the function is analytic. This behavior is related to the fact that Chebyshev polynomials are eigenfunctions of a singular Sturm–Liouville problem [26]. Although Laguerre, Legendre, and Hermite polynomials are also eigenfunctions of Sturm–Liouville problems, Chebyshev polynomials handle boundary conditions more effectively [26]. Consequently, if a function is smooth on $[-1,1]$, a relatively small number of terms suffices to represent it accurately.
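Two claims from the paragraph above can be illustrated with NumPy: interpolation at Chebyshev nodes avoids Runge’s phenomenon for $f(x)=1/(1+25x^2)$, whereas equally spaced nodes do not, and the Chebyshev coefficients of the analytic function $e^x$ decay very rapidly. The test functions and degrees are illustrative choices.

```python
import numpy as np
from numpy.polynomial import Chebyshev
from numpy.polynomial import chebyshev as C

runge = lambda x: 1.0 / (1.0 + 25.0 * x ** 2)
deg = 20
x_fine = np.linspace(-1.0, 1.0, 2001)

# Degree-20 interpolation (deg + 1 points, so the fit is exact at the nodes).
x_equi = np.linspace(-1.0, 1.0, deg + 1)
x_cheb = np.cos((2 * np.arange(deg + 1) + 1) * np.pi / (2 * (deg + 1)))  # zeros of T_21
p_equi = Chebyshev.fit(x_equi, runge(x_equi), deg, domain=[-1, 1])
p_cheb = Chebyshev.fit(x_cheb, runge(x_cheb), deg, domain=[-1, 1])
err_equi = np.max(np.abs(p_equi(x_fine) - runge(x_fine)))   # large near the endpoints
err_cheb = np.max(np.abs(p_cheb(x_fine) - runge(x_fine)))   # small

# Chebyshev coefficients of exp(x): they fall to round-off level quickly.
coef = C.chebinterpolate(np.exp, 30)
```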

To use these polynomials on a general interval , shifted Chebyshev polynomials (SCPs) are introduced through the change of variable . The resulting SCPs are denoted by and are written as:

As can be seen from Equation (3), these polynomials have the following properties:

The orthogonality of , together with the change of variable , yields the orthogonality of the SCPs, i.e.,

where the corresponding weight function for the SCPs is represented by .
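The shifted polynomials and their orthogonality can be sketched as follows. Because the interval and weight of Equation (3) are not reproduced here, the code assumes a general interval $[a,b]$, the map $x=(2t-a-b)/(b-a)$, and the weight $w^*(t)=1/\sqrt{(t-a)(b-t)}$. Under this change of variable the weighted integral over $[a,b]$ equals the Chebyshev-weighted integral over $[-1,1]$, so the Gauss–Chebyshev rule can be reused. The helper `shifted_cheb` and the interval $[0,2]$ are hypothetical.

```python
import numpy as np

def shifted_cheb(n, t, a=0.0, b=1.0):
    """T*_0(t), ..., T*_n(t) on [a, b] via x = (2t - a - b)/(b - a) and the recurrence."""
    x = (2.0 * np.asarray(t, dtype=float) - a - b) / (b - a)
    T = [np.ones_like(x), x.copy()]
    for k in range(1, n):
        T.append(2.0 * x * T[k] - T[k - 1])
    return np.array(T[: n + 1])

a, b, n = 0.0, 2.0, 5            # hypothetical interval [0, 2]
Ta = shifted_cheb(n, a, a, b)    # expected: T*_k(a) = (-1)^k
Tb = shifted_cheb(n, b, a, b)    # expected: T*_k(b) = 1

# Orthogonality with the assumed weight w*(t) = 1/sqrt((t - a)(b - t)):
# Gauss-Chebyshev nodes mapped to [a, b]; the Jacobian cancels against the weight.
m = 64
xj = np.cos((2 * np.arange(1, m + 1) - 1) * np.pi / (2 * m))
tj = 0.5 * (b - a) * xj + 0.5 * (a + b)
Tj = shifted_cheb(n, tj, a, b)
gram = np.pi / m * Tj @ Tj.T
```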

The following theorem demonstrates the existence of the best approximating polynomials for any function .

Theorem 1.

Let , and be an arbitrary function. The optimal polynomial to approximate can be expressed by the following combination of SCPs:

such that it satisfies:

Proof.

Refer to Theorem 3.14 [25] for the proof. □
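In the weighted $L^2$ setting, the optimal polynomial of Theorem 1 is the orthogonal projection onto the SCPs, with coefficients $c_k=(f,T^*_k)_w/\|T^*_k\|_w^2$, where $T^*_k$ is our notation for the SCPs. The sketch below computes these coefficients by Gauss–Chebyshev quadrature, assuming the interval $[a,b]$ and the weight $1/\sqrt{(t-a)(b-t)}$; the function names and test functions are illustrative.

```python
import numpy as np

def cheb_projection_coeffs(f, N, a=0.0, b=1.0, m=128):
    """c_0..c_N of the weighted-L2 best approximation of f on [a, b] by T*_0..T*_N.

    c_k = (f, T*_k)_w / ||T*_k||_w^2, with the integrals evaluated by
    m-point Gauss-Chebyshev quadrature mapped to [a, b].
    """
    theta = (2 * np.arange(1, m + 1) - 1) * np.pi / (2 * m)
    t = 0.5 * (b - a) * np.cos(theta) + 0.5 * (a + b)   # mapped quadrature nodes
    k = np.arange(N + 1)
    Tk = np.cos(np.outer(k, theta))                     # T*_k(t_j) = T_k(cos theta_j)
    norms = np.where(k == 0, np.pi, np.pi / 2)          # ||T*_k||_w^2
    return (np.pi / m) * Tk @ f(t) / norms

def eval_shifted(c, t, a=0.0, b=1.0):
    """Evaluate sum_k c_k T*_k(t) on [a, b]."""
    x = (2.0 * np.asarray(t, dtype=float) - a - b) / (b - a)
    return np.polynomial.chebyshev.chebval(x, c)

ts = np.linspace(0.0, 1.0, 201)
# A cubic is reproduced exactly once N >= 3.
c_cubic = cheb_projection_coeffs(lambda t: t ** 3, 3)
cubic_err = np.max(np.abs(eval_shifted(c_cubic, ts) - ts ** 3))
# For a smooth function the maximum error drops rapidly with N.
errs = [np.max(np.abs(eval_shifted(cheb_projection_coeffs(np.exp, N), ts) - np.exp(ts)))
        for N in (2, 4, 8)]
```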

Theorem 2.

The error of the optimal polynomial approximating a sufficiently smooth function defined over the interval, measured in the weighted $L^2$-norm, satisfies the following upper bound:

where

Proof.

By Theorem 1, the optimal polynomial approximating is given by:

Furthermore, for any , the polynomial fulfills the following inequality:

Let be the Taylor expansion of around a truncated to its first terms, given by:

By employing the variable transformation and considering Inequality (4), we can derive the following:

which yields the desired result. □
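The proof above relies on the optimality of the best approximation: its weighted error is no larger than that of any other polynomial of the same degree, in particular a truncated Taylor polynomial. The sketch below checks this numerically for the illustrative choices $f(t)=e^t$ on $[0,1]$, $N=4$, and a Taylor expansion about the left endpoint, using the weight $1/\sqrt{(t-a)(b-t)}$ assumed for the SCPs.

```python
import math
import numpy as np

a, b, N, m = 0.0, 1.0, 4, 256          # illustrative interval, degree, quadrature size
theta = (2 * np.arange(1, m + 1) - 1) * np.pi / (2 * m)
t = 0.5 * (b - a) * np.cos(theta) + 0.5 * (a + b)

def weighted_norm(values):
    """||g||_w for the assumed weight 1/sqrt((t - a)(b - t)), via Gauss-Chebyshev."""
    return math.sqrt(np.pi / m * np.sum(values ** 2))

f = np.exp(t)
# Best approximation: orthogonal projection onto T*_0..T*_N.
k = np.arange(N + 1)
Tk = np.cos(np.outer(k, theta))        # T*_k at the mapped nodes
norms = np.where(k == 0, np.pi, np.pi / 2)
c = (np.pi / m) * Tk @ f / norms
best = c @ Tk
# Competitor: Taylor polynomial of exp about t = a with N + 1 terms.
taylor = sum(math.exp(a) * (t - a) ** n / math.factorial(n) for n in range(N + 1))

err_best = weighted_norm(f - best)
err_taylor = weighted_norm(f - taylor)
```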

Corollary 1.

If is a sufficiently smooth function on interval , and is an arbitrary real number, then we can establish the following results:

- (i)

- For all , where is sufficiently large, the following relation holds for the error of the optimal approximating polynomial of :

- (ii)

- As , the following limit holds

Proof. (i)

Theorem 2 implies that:

which directly implies the corollary’s statement.

- (ii)

- By part (i), we have

The convergence of to is equivalent to the establishment of Equation (5).

Therefore, the proof is accomplished. □

2.3. Expansion for Variable-Order Caputo Fractional Derivatives

It is well known that for can be analytically expressed as:

To use these polynomials on , the change of variable is employed. This transformation leads to the following analytical form of SCPs for :

Assuming , it can be obtained in terms of SCPs as:

where . In practice, a truncated series of this expansion with terms is commonly used:

Differentiating both sides of Equation (7) with respect to x using a Caputo fractional derivative of variable-order yields:
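Differentiating an SCP expansion termwise, as described above, amounts to writing each SCP in powers of $x$ and applying the Caputo power rule to each monomial with the order evaluated at the point. The sketch below assumes the interval $[0,L]$, an order $0<\alpha\le 1$, and the standard power rule; `caputo_shifted_cheb` is a hypothetical name, and NumPy's basis conversion stands in for the closed-form analytic expression of the SCPs.

```python
import math
from numpy.polynomial import Chebyshev, Polynomial

def shifted_cheb_power_coeffs(n, L=1.0):
    """Power-basis coefficients of T*_n(x) = T_n(2x/L - 1) on [0, L]."""
    return Chebyshev.basis(n, domain=[0.0, L]).convert(kind=Polynomial).coef

def caputo_shifted_cheb(n, x, alpha, L=1.0):
    """Caputo derivative of order alpha (0 < alpha <= 1, assumed) of T*_n at x > 0.

    Applies D^alpha x^k = Gamma(k+1)/Gamma(k+1-alpha) x^(k-alpha) term by term;
    the constant term (k = 0) has zero Caputo derivative.
    """
    coef = shifted_cheb_power_coeffs(n, L)
    return sum(coef[k] * math.gamma(k + 1) / math.gamma(k + 1 - alpha) * x ** (k - alpha)
               for k in range(1, len(coef)))

# Hypothetical variable order alpha(x) = 0.4 + 0.5 x, evaluated pointwise.
alpha_fn = lambda x: 0.4 + 0.5 * x
samples = [caputo_shifted_cheb(3, x, alpha_fn(x)) for x in (0.1, 0.5, 0.9)]
```

With $\alpha=1$ the result reduces to the ordinary derivative, which gives a convenient sanity check.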

3. Algorithm of Solution for the Main Equation

The method begins by approximating the function as follows:

By replacing the spatial derivatives of order from in (9), we have:

wherein . Furthermore, the initial conditions (2) can be considered as:

where and . Also, the boundary conditions (2) can be considered as follows:

Furthermore, solving the boundary conditions (11) in terms of and results in:

where and , respectively, denote the sets of even and odd integers. Both and depend on the remaining unknown components , which form an independent set. To determine these unknown components, we apply the Chebyshev collocation method in the spatial variable, which yields

wherein and s represent the following Chebyshev collocation points over the interval :

Note that the number of collocation points equals the number of independent unknown components in the set , so the resulting system is square.
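Section 4 collocates at the zeros of a shifted Chebyshev polynomial. Assuming the same choice here and the interval $[0,L]$, the nodes have the closed form $x_j=\frac{L}{2}\left(1+\cos\frac{(2j-1)\pi}{2n}\right)$, $j=1,\dots,n$. The sketch below generates them and checks that they are zeros; `shifted_cheb_zeros` and $L=2$ are hypothetical.

```python
import numpy as np
from numpy.polynomial import Chebyshev

def shifted_cheb_zeros(n, L=1.0):
    """The n zeros of T*_n(x) = T_n(2x/L - 1) on [0, L], in increasing order."""
    j = np.arange(1, n + 1)
    x = np.cos((2 * j - 1) * np.pi / (2 * n))   # zeros of T_n on [-1, 1]
    return np.sort(0.5 * L * (x + 1.0))          # mapped to [0, L]

nodes = shifted_cheb_zeros(6, L=2.0)
residual = Chebyshev.basis(6, domain=[0.0, 2.0])(nodes)   # should vanish
```

All nodes lie strictly inside the interval, which is convenient when the variable-order derivative involves powers $x^{k-\alpha}$.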

Since solving (13) depends on having , we proceed to approximate them as:

Here, and are particular solutions of auxiliary differential equations constructed to satisfy the initial conditions of (13) for . For (14) to satisfy the initial conditions of (13), we require:

Hence, we impose the following initial conditions on and :

These conditions are sufficient to ensure that satisfies the initial condition (13). Let be a set of basis functions defined on the interval . We now determine and by solving a homogeneous problem and a non-homogeneous problem:

Hence, solutions of and can be explicitly represented as follows:

To aid clarity, let us introduce the following notations:

The final step of the method is to determine suitable values for , from which the approximate solution of (13) is obtained. To this end, residual functions are formed by substituting into (13) as follows:

The residual functions in (16) are required to vanish at the collocation points . The unknown components of are therefore obtained by solving the following system:

Thus, the approximate solution of from is obtained from the values of the elements of . Finally, is calculated by substituting with in (8).
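The time-dependent part of the scheme relies on equations that are not reproduced here, but the spatial collocation idea can be illustrated on a deliberately simplified, hypothetical stationary problem: $D_x^{\alpha(x)}u(x)+u(x)=f(x)$ on $(0,1]$ with $u(0)=0$, order $\alpha(x)=0.5+0.3x$, and $f$ chosen so that $u(x)=x^2$. This is not the paper’s full space-time algorithm; it only shows how one boundary row plus collocation rows at the zeros of an SCP give a square linear system for the expansion coefficients, assuming the standard Caputo power rule.

```python
import math
import numpy as np
from numpy.polynomial import Chebyshev, Polynomial

# Hypothetical model problem: D^{alpha(x)} u + u = f on (0, 1], u(0) = 0, u(x) = x^2.
alpha = lambda x: 0.5 + 0.3 * x
exact = lambda x: x ** 2
rhs = lambda x: math.gamma(3) / math.gamma(3 - alpha(x)) * x ** (2 - alpha(x)) + x ** 2

def caputo_basis(k, x):
    """Variable-order Caputo derivative of T*_k on [0, 1] at x (termwise power rule)."""
    c = Chebyshev.basis(k, domain=[0.0, 1.0]).convert(kind=Polynomial).coef
    a = alpha(x)
    return sum(c[m] * math.gamma(m + 1) / math.gamma(m + 1 - a) * x ** (m - a)
               for m in range(1, len(c)))

N = 4                                              # unknowns c_0..c_N
# N collocation points: zeros of T*_N on [0, 1].
xc = 0.5 * (np.cos((2 * np.arange(1, N + 1) - 1) * np.pi / (2 * N)) + 1.0)

A = np.zeros((N + 1, N + 1))
F = np.zeros(N + 1)
for k in range(N + 1):
    Tk = Chebyshev.basis(k, domain=[0.0, 1.0])
    A[0, k] = Tk(0.0)                              # boundary row: u(0) = 0
    for i, x in enumerate(xc, start=1):
        A[i, k] = caputo_basis(k, x) + Tk(x)       # equation enforced at x_i
for i, x in enumerate(xc, start=1):
    F[i] = rhs(x)

coef = np.linalg.solve(A, F)
u_approx = Chebyshev(coef, domain=[0.0, 1.0])
xs = np.linspace(0.0, 1.0, 101)
max_err = np.max(np.abs(u_approx(xs) - exact(xs)))
```

Because $x^2$ lies in the span of $T^*_0,\dots,T^*_4$, the error here is at round-off level; a solution outside the span would require increasing N.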

Algorithm

This section summarizes the proposed scheme in Algorithm 1, which can be used to solve Equation (1).

Algorithm 1: Proposed method.

4. Convergence Analysis

Based on Algorithm 1, the approximate solution is

Substituting into (1) gives the residual function of the governing equation:

Next, we collocate at the zeros of , and define as .

The following theorem shows that can be obtained in such a way that can be made arbitrarily small.

Theorem 3.

Let , , and be continuous functions defined on the domain . For any given positive ϵ, there is a positive integer such that we have the following:

Proof.

Under these assumptions, form a set of continuous functions. Consequently, can be expanded in terms of the SCPs as:

where depends on the components of . Additionally, it can be determined through the following expression:

Suppose that we set

The solution of system (17) provides the values of for and . As , we can derive the following inequality:

Since is the shifted Chebyshev expansion used in this paper, the convergence of the expansion of implies that, for any positive , there is a positive integer such that, for each , we have:

Therefore, the proof is concluded. □

5. Numerical Illustrations

In this section, the proposed scheme is applied to several test problems to demonstrate its performance. For the error analysis of each problem, we use the following error indicators together with their experimental convergence rates:

- Reference error: ;

- Maximum absolute residual function: ;

- Relative error: , where , and .

If denotes any of the above error indicators, its experimental convergence rate is defined by:

In practice, means that when the number of SCPs in (8) increases from N to and the number of approximating terms increases from M to , the maximum value of decreases by a factor of .
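The exact formulas for the indicators and the rate are not recoverable from this text. Assuming the relative error is the ratio of discrete 2-norms on a grid, and that successive runs double N and M so that $\rho=\log_2\!\left(E_{N,M}/E_{2N,2M}\right)$, they could be computed as follows. The function names and sample error values are hypothetical, and the residual indicator is omitted because it needs the discretized operator.

```python
import numpy as np

def error_indicators(u_exact, u_approx):
    """Maximum absolute error and relative (discrete 2-norm) error on a grid."""
    diff = np.asarray(u_exact, dtype=float) - np.asarray(u_approx, dtype=float)
    return np.max(np.abs(diff)), np.linalg.norm(diff) / np.linalg.norm(u_exact)

def experimental_rates(errors):
    """log2 of successive error ratios, assuming N and M are doubled at each step."""
    e = np.asarray(errors, dtype=float)
    return np.log2(e[:-1] / e[1:])

rates = experimental_rates([1.0e-2, 2.5e-3, 6.25e-4])   # hypothetical error sequence
max_err, rel_err = error_indicators(np.array([1.0, 2.0]), np.array([1.0, 2.1]))
```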

Remark 2.

The maximum absolute residual function can be used to assess the accuracy of the approximate solution; in particular, this indicator remains applicable when the exact solution is unavailable. When , the error of the approximate solution is considered negligible.

Problem 1.

As a first test, we apply our method to the following one-dimensional time-fractional diffusion equation:

subject to the following conditions:

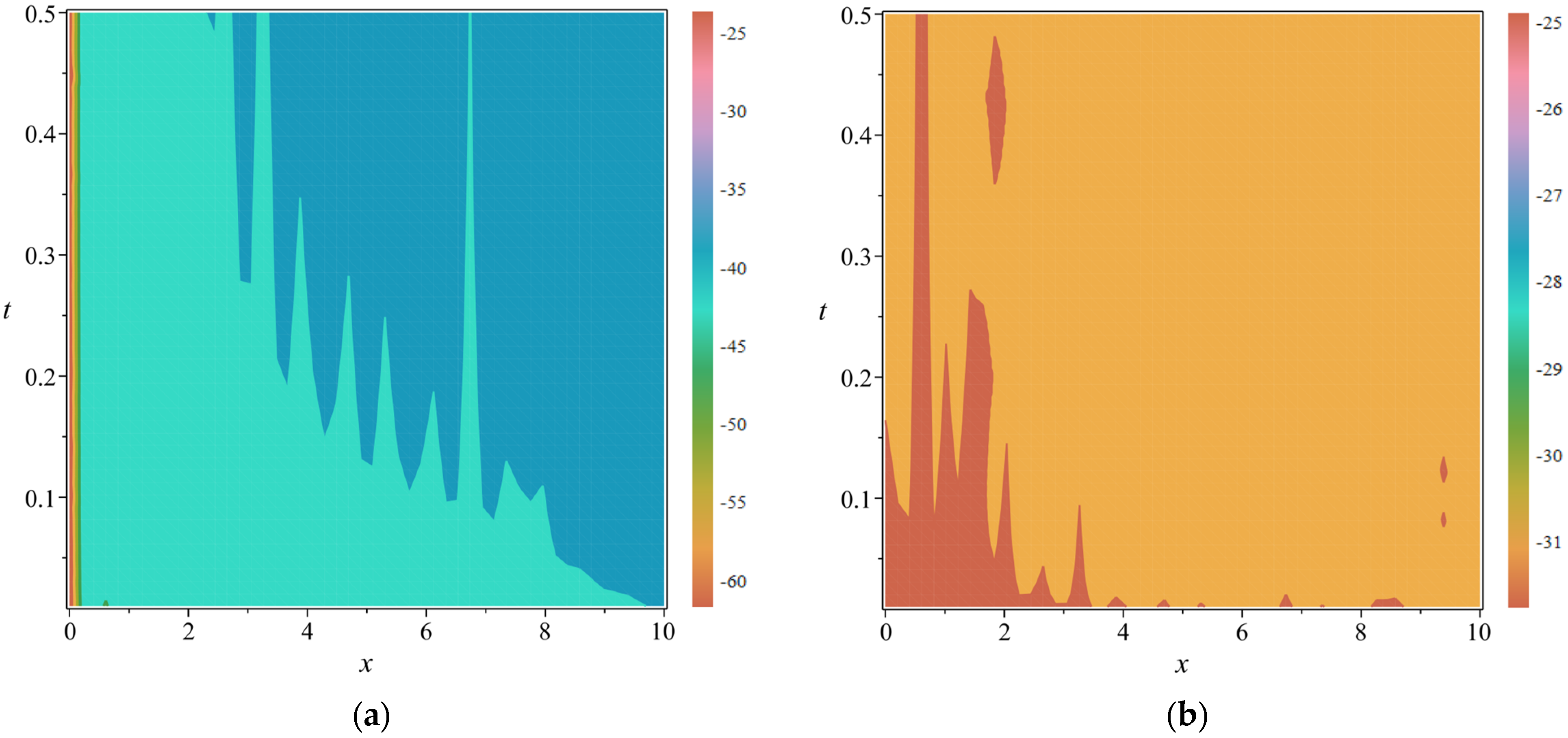

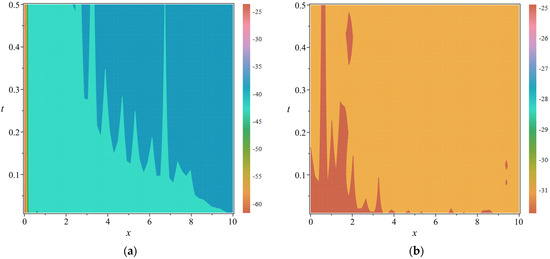

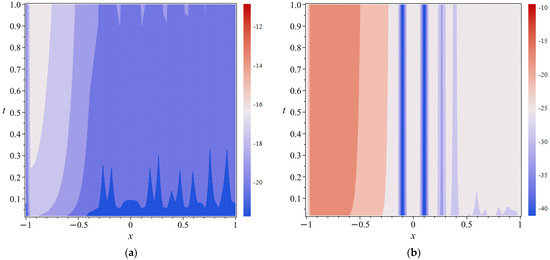

The analytical solution of Equation (18) is given by . We solve this equation for , , , and . Table 1 compares the absolute errors of our results with those reported in [27] at . These results indicate that our algorithm is more accurate than the method of [27]. Table 2 presents the convergence analysis of our algorithm, listing the error indicators and the corresponding experimental orders of convergence. Figure 1 shows the error indicators computed by the proposed method for the parameters , , and .

Table 1.

Comparative analysis of absolute errors at for the equation detailed in Problem 1.

Table 2.

Error indicators and their corresponding convergence orders associated with our obtained results for Problem 1.

Figure 1.

Graphical representations of error indicators for Problem 1 with the specified parameters . (a) Logarithmic plot of the absolute error function: (b) Logarithmic plot of the absolute residual function: .

Problem 2.

Burgers’ equation is widely used to model phenomena such as gas dynamics, fluid mechanics, traffic flow, and turbulence. For the second test problem, we apply our approach to the following one-dimensional, linear, inhomogeneous variable-order fractional Burgers’ equation:

subject to the following initial and boundary conditions:

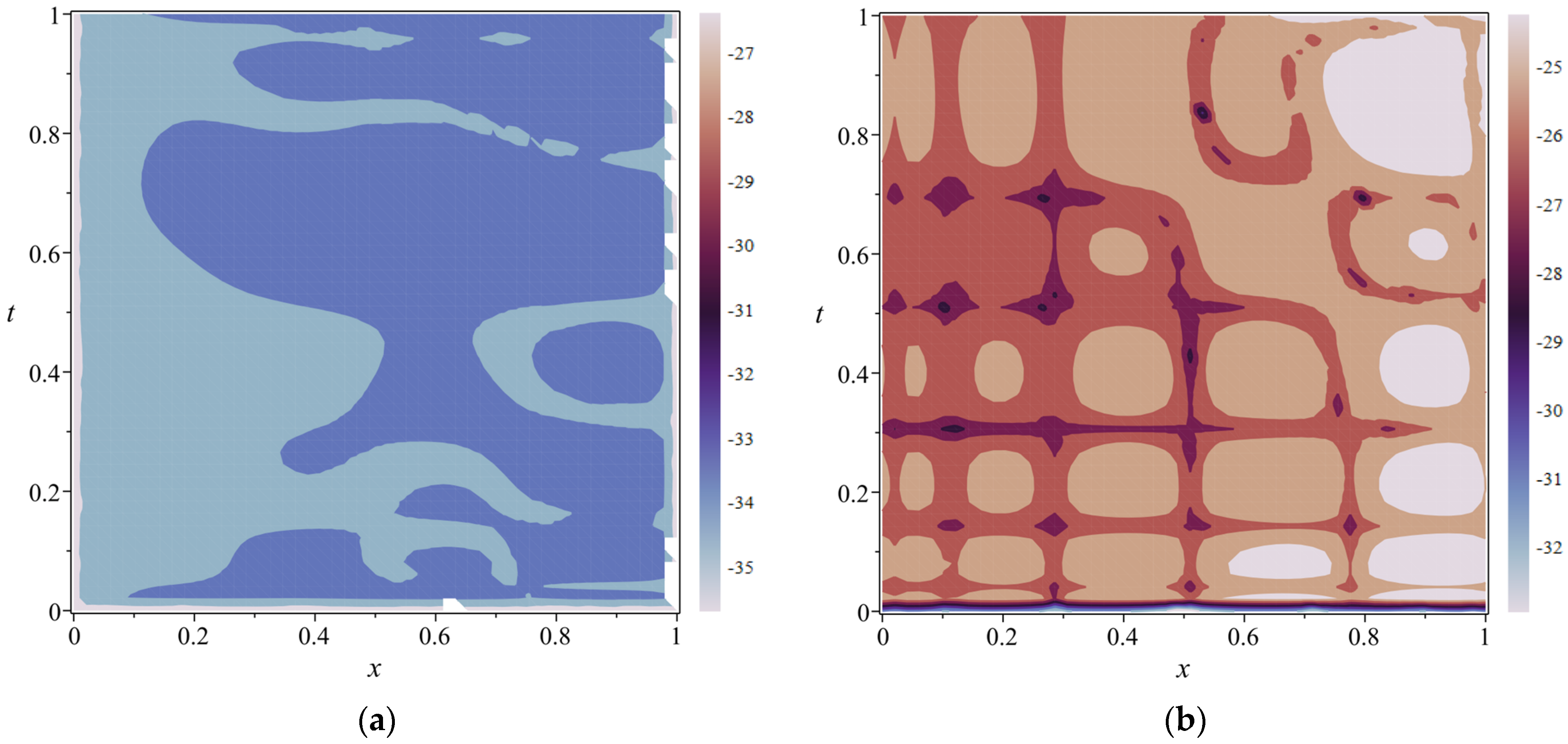

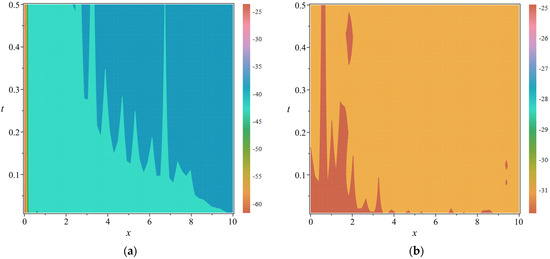

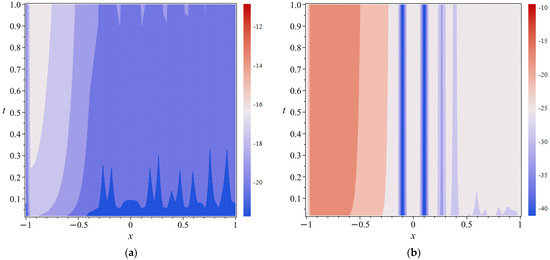

The analytical solution of Equation (19) is given by . In our computations, we take . Figure 2 shows the error indicators computed by our approach with the parameters , , and . Table 3 reports the reference error, maximum absolute residual error, and relative error of our results for various values of N and M.

Figure 2.

Graphical representations of error indicators for Problem 2 with the specified parameters . (a) Logarithmic plot of the absolute error function: (b) Logarithmic plot of the absolute residual function: .

Table 3.

Error indicators associated with our obtained results for Problem 2.

Problem 3.

This problem concerns the following multi-term variable-order time-fractional PDE:

with the following conditions:

Equation (20) possesses the exact solution , with the following :

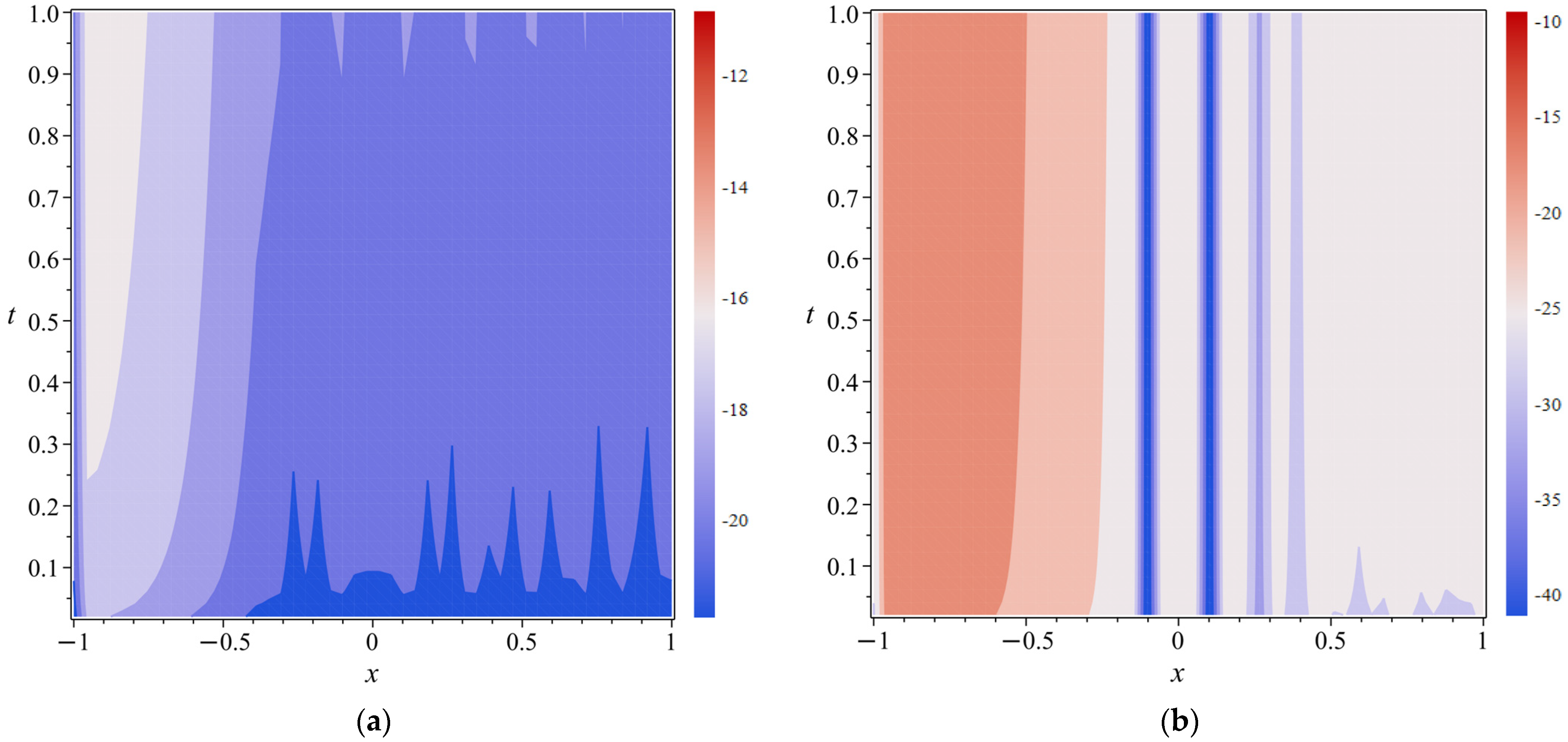

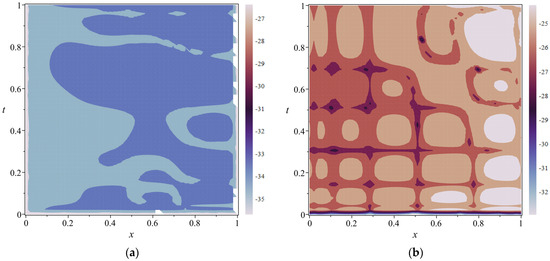

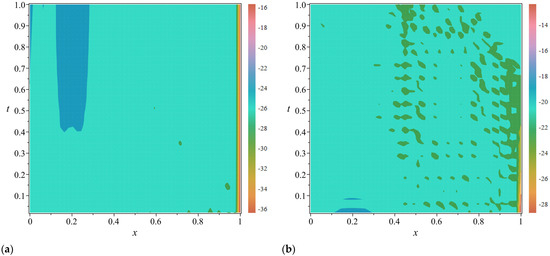

Table 4 reports the reference error, maximum absolute residual error, and relative error of our results for various values of N and M, and compares the relative errors with those reported in [28]. Table 5 presents the convergence analysis of our algorithm, listing the error indicators and the corresponding experimental orders of convergence. Figure 3 shows the error indicators computed by the proposed method with the parameters , , and .

Table 4.

Error indicators of our approximation and relative error in comparison with [28] for Problem 3.

Table 5.

Error indicators and their corresponding convergence orders associated with our obtained results for Problem 3.

Figure 3.

Graphical representations of error indicators for Problem 3 with the specified parameters . (a) Logarithmic plot of the absolute error function: (b) Logarithmic plot of the absolute residual function: .

Problem 4.

The last test problem is the following variable-order time-fractional PDE:

with the following conditions:

Equation (21) possesses the exact solution , with the following :

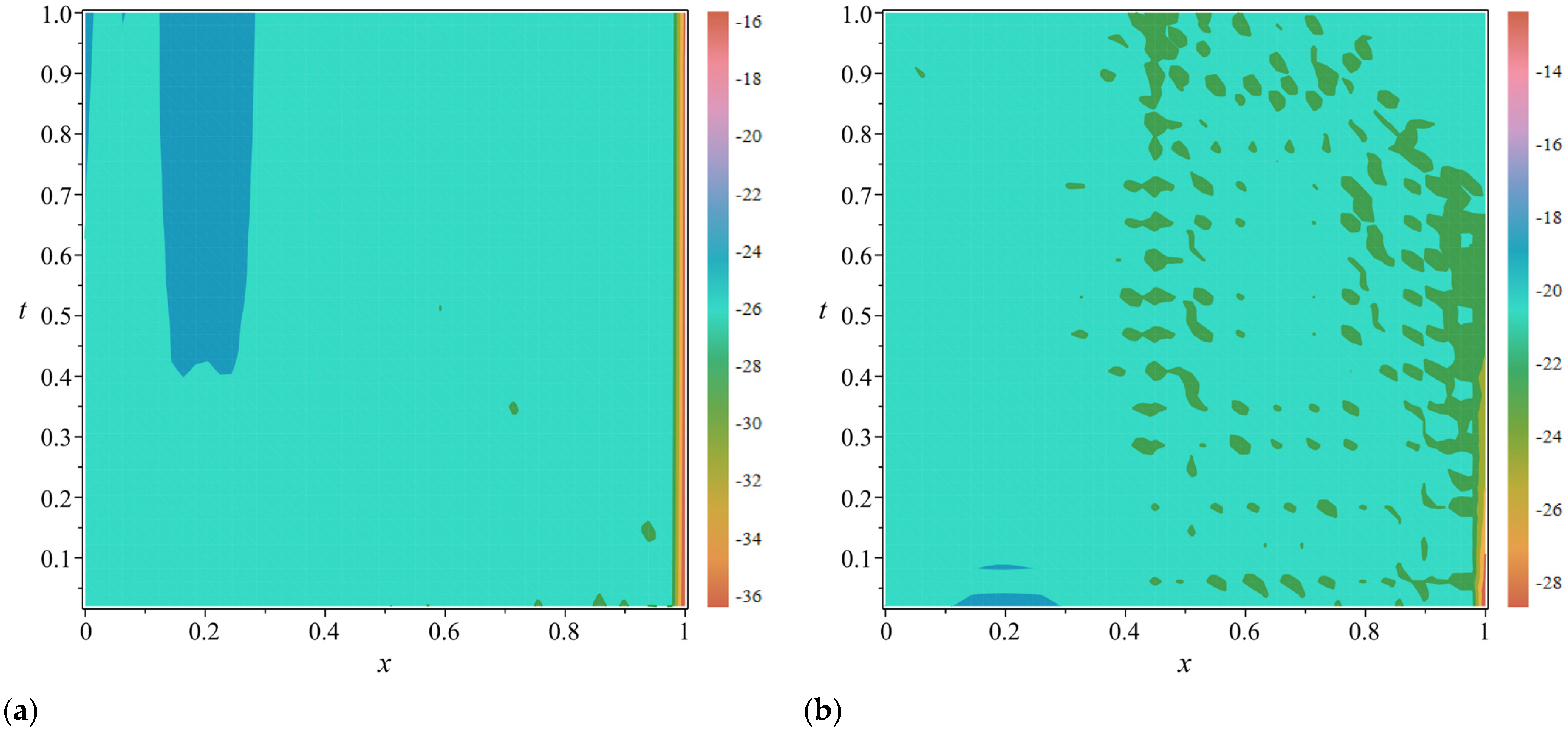

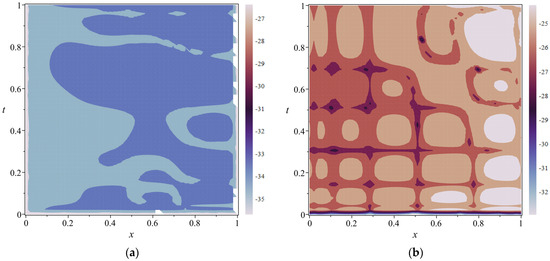

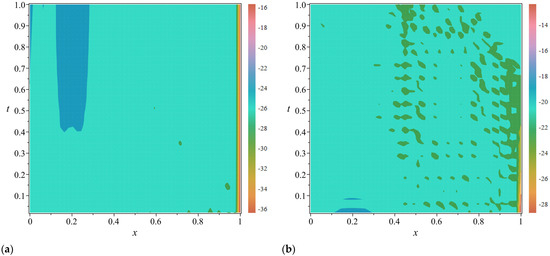

Table 6 reports the reference error, maximum absolute residual error, and relative error of our results for various values of N and M, and compares the relative errors with those reported in [28]. Table 7 presents the convergence analysis of our algorithm, listing the error indicators and the corresponding experimental orders of convergence. Figure 4 shows the error indicators computed by the proposed method with the parameters , , and .

Table 6.

Error indicators of our approximation and relative error in comparison with [28] for Problem 4.

Table 7.

Error indicators and their corresponding convergence orders associated with our obtained results for Problem 4.

Figure 4.

Graphical representations of error indicators for Problem 4 with the specified parameters . (a) Logarithmic plot of the absolute error function: (b) Logarithmic plot of the absolute residual function: .

Table 1, Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7 show that the maximum absolute values of the error indicators decrease rapidly as N or M increases. Moreover, the experimental convergence orders reported in these tables confirm the efficiency and reliability of the proposed method.

6. Conclusions

We presented an efficient algorithm for fractional PDEs whose orders vary in both space and time, treated in their most general form together with generalized boundary conditions. The method approximates the solution of the governing equation by a combination of shifted Chebyshev polynomials with time-dependent coefficients, which are determined by incorporating the boundary conditions and applying the collocation method. Theoretical analysis and numerical experiments confirmed the effectiveness and efficiency of the approach, which suggests that it is a suitable algorithm for a wide range of variable-order fractional differential equations.

Author Contributions

Conceptualization, S.K. and F.A.; Methodology, S.K. and F.A.; Software, S.K. and F.A.; Formal analysis, M.T.D.; Writing—original draft, S.K., F.A. and M.T.D.; Writing—review & editing, K.H. and E.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data that support the findings of the study are available upon reasonable request from the authors.

Acknowledgments

The authors deeply appreciate the valuable feedback from the esteemed reviewers and the respected editor, which has greatly enhanced the quality of this paper.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

References

- Diethelm, K. The Analysis of Fractional Differential Equations; Springer: Berlin/Heidelberg, Germany, 2010.

- Ross, B.; Samko, S. Integration and differentiation to a variable fractional order. Integral Transform. Spec. Funct. 1993, 1, 277–300.

- Samko, S. Fractional integration and differentiation of variable order. Anal. Math. 1995, 21, 213–236.

- Sun, H.; Chen, W.; Wei, H.; Chen, Y. A comparative study of constant-order and variable-order fractional models in characterizing memory property of systems. Eur. Phys. J. Spec. Top. 2011, 193, 185–192.

- Samko, S. Fractional integration and differentiation of variable order: An overview. Nonlinear Dyn. 2013, 71, 653–662.

- Almeida, R.; Tavares, D.; Torres, D.F.M. The Variable-Order Fractional Calculus of Variations; Springer: Berlin/Heidelberg, Germany, 2019.

- Chechkin, A.; Gorenflo, R.; Sokolov, I. Fractional diffusion in inhomogeneous media. J. Phys. A Math. Gen. 2005, 38, L679–L684.

- Zheng, X.; Wang, H.; Fu, H. Analysis of a physically-relevant variable-order time-fractional reaction–diffusion model with Mittag-Leffler kernel. Appl. Math. Lett. 2021, 112, 106804.

- Guo, X.; Zheng, X. Variable-order time-fractional diffusion equation with Mittag-Leffler kernel: Regularity analysis and uniqueness of determining variable order. Z. Angew. Math. Phys. 2023, 74, 64–68.

- Coimbra, C. Mechanics with variable-order differential operators. Ann. Phys. 2001, 12, 692–703.

- Orosco, J.; Coimbra, C. On the control and stability of variable-order mechanical systems. Nonlinear Dyn. 2016, 86, 695–710.

- Ganji, R.M.; Jafari, H.; Adem, A.R. A numerical scheme to solve variable order diffusion-wave equations. Therm. Sci. 2019, 23, S2063–S2071.

- Ganji, R.; Jafari, H. A numerical approach for multi-variable orders differential equations using Jacobi polynomials. Int. J. Appl. Comput. Math. 2019, 5, 34.

- Ganji, R.; Jafari, H.; Baleanu, D. A new approach for solving multi variable orders differential equations with Mittag-Leffler kernel. Chaos Solitons Fractals 2020, 130, 109405.

- Doha, E.H.; Abdelkawy, M.A.; Amin, A.Z.M.; Baleanu, D. Spectral technique for solving variable-order fractional Volterra integro-differential equations. Numer. Methods Partial Differ. Equ. 2018, 34, 1659–1677.

- Chen, Y.-M.; Wei, Y.-Q.; Liu, D.-Y.; Yu, H. Numerical solution for a class of nonlinear variable order fractional differential equations with Legendre wavelets. Appl. Math. Lett. 2015, 46, 83–88.

- Xu, Y.; Ertürk, V.S. A finite difference technique for solving variable-order fractional integro-differential equations. Bull. Iran. Math. Soc. 2014, 40, 699–712.

- Yang, J.; Yao, H.; Wu, B. An efficient numerical method for variable order fractional functional differential equation. Appl. Math. 2018, 76, 221–226.

- Jafari, H.; Tajadodi, H.; Ganji, R.M. A numerical approach for solving variable order differential equations based on Bernstein polynomials. Comput. Math. Methods 2019, 1, e1055.

- Tavares, D.; Almeida, R.; Torres, D.F. Caputo derivatives of fractional variable order: Numerical approximations. Commun. Nonlinear Sci. Numer. Simul. 2016, 35, 69–87.

- Santamaria, F.; Wils, S.; Schutter, E.D.; Augustine, G.J. Anomalous diffusion in Purkinje cell dendrites caused by spines. Neuron 2006, 52, 635–648.

- Sun, H.; Chen, W.; Chen, Y. Variable-order fractional differential operators in anomalous diffusion modeling. Physica A 2009, 388, 4586–4592.

- Kheybari, S. Numerical algorithm to Caputo type time-space fractional partial differential equations with variable coefficients. Math. Comput. Simul. 2021, 182, 66–85.

- Kheybari, S.; Darvishi, M.T.; Hashemi, M.S. A semi-analytical approach to Caputo type time-fractional modified anomalous sub-diffusion equations. Appl. Numer. Math. 2020, 158, 103–122.

- Shen, J.; Tang, T.; Wang, L.-L. Spectral Methods: Algorithms, Analysis and Applications; Springer: Berlin/Heidelberg, Germany, 2011; Volume 41.

- Gottlieb, D.; Orszag, S.A. Numerical Analysis of Spectral Methods: Theory and Applications; SIAM-CBMS: Philadelphia, PA, USA, 1977.

- Sun, H.; Chen, W.; Li, C.; Chen, Y. Finite difference schemes for variable-order time fractional diffusion equation. Int. J. Bifurc. Chaos 2012, 22, 1250085.

- Tian, X.; Reutskiy, S.Y.; Fu, Z.-J. A novel meshless collocation solver for solving multi-term variable-order time fractional PDEs. Eng. Comput. 2021, 38, 1527–1538.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).