Abstract

The Cauchy problem for the Laplace equation in a bounded annular region consists of finding a harmonic function from the Dirichlet and Neumann data known on the exterior boundary. This work considers a fractional boundary condition instead of the Dirichlet condition in a circular annular region. We find the solution to the fractional boundary problem using circular harmonics. Tikhonov regularization is then used to handle the numerical instability of the fractional Cauchy problem. The regularization parameter is chosen using the L-curve method, Morozov’s discrepancy principle, and the Tikhonov criterion. From numerical tests, we found that the series expansion of the solution to the Cauchy problem can be truncated in , , or for smooth functions. For other functions, such as the absolute value and the jump function, other values of N have to be chosen. Thus, we obtain a stable method for solving the problem studied. To illustrate the proposed method, we develop synthetic examples and MATLAB 2021 programs that implement it. The numerical results show the feasibility of the proposed stable algorithm. In almost all cases, the L-curve method gives better results than the Tikhonov criterion and Morozov’s discrepancy principle. In all cases, regularization using the L-curve method gives better results than no regularization.

1. Introduction

The challenge of determining a harmonic function within a bounded annular region based on partial boundary measurements (Cauchy data) is known as the Cauchy problem for the Laplace equation [1]. This problem is notoriously ill-posed in the sense of Hadamard, meaning that small perturbations in the Cauchy data can lead to significant changes in the solution, resulting in numerical instability. Consequently, regularization techniques are essential for solving this problem. To ensure a solution to the Cauchy problem, certain smoothness conditions must be imposed on the Cauchy data (refer to Theorem 1 in [2]).

Various methods have been developed to analyze the Cauchy problem. For instance, ref. [3] utilized singular value decomposition to find the solution in a circular annular region, employing the spectral cut-off of the pseudo-inverse method to manage the numerical instability. In Refs. [4,5], a novel regularization method was introduced using the method of fundamental solutions to address the Cauchy problem in both annular and multi-connected domains. To effectively solve the discrete ill-posed problem arising from a boundary collocation scheme, Tikhonov regularization and L-curve methods were applied in [5]. Additionally, the technique of layer potentials was used in [3,6,7] to derive an equivalent system of integral equations. The moment problem approach, based on Green’s formula, was employed in [8] to solve the Cauchy problem in more complex annular regions. A similar technique was proposed for the three-dimensional Cauchy problem in [9], where the solution was expressed using spherical harmonics and Tikhonov regularization. In [10], a variational formulation was introduced, minimizing the cost functional through conjugate gradient iterations combined with boundary element discretization. In [1], the potential on the interior boundary of the annular region was treated as a control function to match the Cauchy input data on the exterior boundary, incorporating a penalized term in the cost function. This approach allowed for the determination of the optimal solution using an iterative conjugate gradient algorithm, with the computational cost involving the solution of two elliptic problems per iteration, solved by the finite element method. Similar techniques have been applied to other control problems, as seen in [10,11,12,13,14].

The Cauchy problem holds significant importance due to its numerous applications, such as estimating pipeline deterioration, calculating solutions or potentials in inaccessible regions or boundaries, and studying cracks in plates [15,16]. Furthermore, the Cauchy problem is utilized in inverse electrocardiography problems [17,18,19] and in solving inverse problems in electroencephalography (EEG) [14]. EEG signals are known to exhibit fractal characteristics [20,21,22]. Additionally, fractional derivatives can model voltage propagation in axons using a fractional cable geometry to study human neural networks [23]. In the context of EEG, these fractal characteristics may be related to the sources generating the signal. One potential approach to relate these fractal characteristics to EEG signals is through the use of fractional operators, which warrants further investigation in future studies.

In this work, we consider one variant of the Cauchy problem. More precisely, we assume that the action of a fractional operator on the potential is known on the exterior boundary, instead of the potential itself. We apply Tikhonov regularization to handle the numerical instability of this variant, which we call the fractional Cauchy problem. Since we consider a circular geometry, we use the Fourier series method to solve the normal equations. The adjoint operator is found using its definition. From this, we obtain a stable algorithm for some of the parameters defining the fractional operator. To illustrate the results presented in this work, we develop synthetic examples and programs in MATLAB.

We found no previous work on the fractional Cauchy problem. Therefore, to validate the results of our proposal, we include results for the classical case with a Dirichlet condition, which has been extensively studied, as the literature review above indicates. With our method, we obtain the same results as in the classical case.

The paper is organized as follows: Section 2 presents the definition and some results of the classical Cauchy problem, as well as the Sturm–Liouville operator, and closes with the definition of the fractional Cauchy problem. Section 3 applies Tikhonov regularization to obtain an algorithm that recovers the potential on the interior boundary. Section 4 presents numerical examples to illustrate the algorithm. In Section 5, we discuss the stability of the proposed algorithm. In Section 6, we give the conclusions.

2. Problem Formulation

2.1. The Cauchy Problem

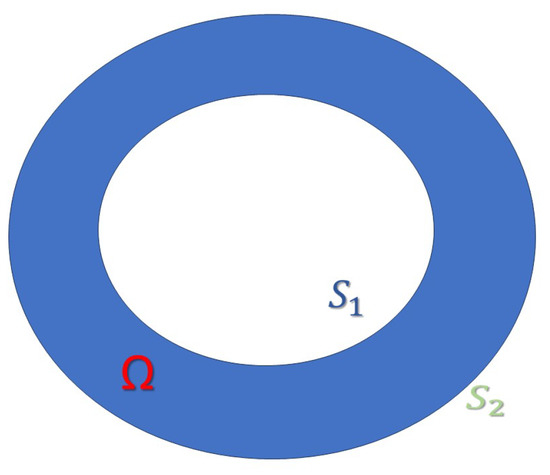

Let be a bounded annular region in with sufficiently smooth interior boundary and exterior boundary , as shown in Figure 1.

Figure 1.

Bi-dimensional circular annular region .

We consider the following boundary value problem: Find , such that

where , n is the outward unitary vector defined on , and denotes the outward normal derivative of w on . For simplicity, we consider (1) with by the change in variable , where is the unique harmonic function satisfying on , and . Then,

where . For the analysis of the Cauchy problem (2), the following problem is employed (see [15]):

Given a function φ defined on, find u such that

This problem is well-posed, and we will call it the auxiliary problem.

The inverse problem associated with the Cauchy problem can be formulated in the following way:

Recover the potential on from the measurements on , where u is the solution to the auxiliary problem (3).

Definition 1.

Theorem 1 given in [1] guarantees the existence and uniqueness of the weak solution and allows us to define the linear, injective, and compact operator that associates to each the trace over of the weak solution u to the auxiliary problem (3). The operator K is compact because it is the composition of the continuous operator , which associates to each the weak solution to the auxiliary problem (3), with the trace operator from into , which is compact. The relationship between problem (2) and auxiliary problem (3) can be described by the operator K as follows:

A solution to the auxiliary problem (3) is also a solution to the problem (2) if we choose ϕ on , such that

where denotes the solution to the auxiliary problem (3), and V is the known measurement in problem (2), so we have .

The following result is important for the statement of the minimization problem presented in Section 3; its proof can be found in [10].

Theorem 1.

is dense in .

Equation (7) does not have a solution for all . However, if we impose some smoothness conditions on V, we can find global conditions for the existence of the solution, as in [10]. Since K is a well-defined and injective operator [15], the solution is unique whenever it exists. Since the operator K is linear, injective, and compact, its inverse is not continuous. Therefore, the inverse problem is ill-posed because of its numerical instability.
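The instability can be made concrete in the circular case. The following is a minimal sketch, assuming that the auxiliary problem prescribes the potential on the interior circle and a homogeneous Neumann condition on the exterior circle. Under that assumption, separation of variables shows that K multiplies the k-th circular harmonic by 2q^k/(1 + q^(2k)), where q is the ratio of the interior to the exterior radius. The radii `r1`, `r2` and the function names are hypothetical and introduced only for illustration.

```python
def sigma(k: int, r1: float, r2: float) -> float:
    """Multiplier of K on the k-th circular harmonic (assumed auxiliary problem)."""
    q = r1 / r2  # ratio interior/exterior radius, 0 < q < 1
    return 2.0 * q**k / (1.0 + q ** (2 * k))


def noise_amplification(k: int, r1: float, r2: float) -> float:
    """Factor by which unregularized inversion multiplies an error in mode k."""
    return 1.0 / sigma(k, r1, r2)


# Hypothetical radii: the multipliers decay geometrically, so the
# amplification of data errors grows geometrically with the mode index.
for k in (0, 5, 10, 20, 30):
    print(k, sigma(k, 1.0, 2.0), noise_amplification(k, 1.0, 2.0))
```

Because the multipliers accumulate at zero, no bounded inverse exists on the whole space, which is the discontinuity of the inverse stated above.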

2.2. Fractional Boundary Operator

The following material has been obtained from [24]. Let be a unit ball, . The corresponds with the unit sphere; , let be a Dirac operator, where . Let be a smooth function on the domain . For any , the following expression

is called an operator of integration of the order in the Hadamard sense. Furthermore, we will assume that

We consider the following modification of the Hadamard operator:

where m is a positive integer.
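As an illustration of why such operators combine well with harmonic expansions, the following worked computation assumes the standard Hadamard form of the operator of integration, $J^{\alpha}[u](x)=\frac{1}{\Gamma(\alpha)}\int_0^r \left(\ln\frac{r}{s}\right)^{\alpha-1}u(s\theta)\,\frac{ds}{s}$ with $r=|x|$ and $\theta=x/|x|$. This formula and the symbols $J^{\alpha}$, $Y_k$ are stated here as assumptions. For a homogeneous harmonic $u(x)=r^{k}Y_k(\theta)$ with $k\ge 1$, the substitution $t=\ln(r/s)$ gives:

```latex
\begin{aligned}
J^{\alpha}\!\left[r^{k}Y_k\right](x)
  &= \frac{Y_k(\theta)}{\Gamma(\alpha)}\int_0^{r}\left(\ln\frac{r}{s}\right)^{\alpha-1}s^{k}\,\frac{ds}{s}
   = \frac{r^{k}\,Y_k(\theta)}{\Gamma(\alpha)}\int_0^{\infty}t^{\alpha-1}e^{-kt}\,dt \\
  &= \frac{r^{k}\,Y_k(\theta)}{\Gamma(\alpha)}\cdot\frac{\Gamma(\alpha)}{k^{\alpha}}
   = k^{-\alpha}\,r^{k}\,Y_k(\theta).
\end{aligned}
```

Under this assumption, the operator acts on each harmonic as multiplication by a scalar, so a series expansion in harmonics is mapped term by term.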

Properties and applications of the operators and have been studied in [24]. In that paper, the authors studied a certain generalization of the classical Neumann problem with the fractional order of boundary operators. Let , . In the domain , the authors consider the following problem:

2.3. Fractional Cauchy Problem

We consider the following fractional Cauchy problem

where the operator is given in (9).

In this case, the operator . We note that the operator is linear and continuous, and it has no singularities since . For the analysis of the fractional Cauchy problem (12), we also consider the auxiliary problem (3). We define the operator , which is a compact operator. We have the following two definitions to study the problem that concerns us.

Definition 2.

The Forward Problem (FP) related to the fractional Cauchy problem consists of finding the potential when ϕ is known.

We can consider other fractional Cauchy problems by changing the boundary operator. We can consider different kernels for the integral operator. For example, we can take the kernel of the Riemann–Liouville and Caputo fractional derivative, which can be found in [25].

Definition 3.

Given , the Inverse Problem (IP) related to the fractional Cauchy problem consists of finding such that .

3. Methods

3.1. Tikhonov Regularization of the Fractional Cauchy Problem

To find an approximate solution of Equation (7) for when we have a measurement with error , the minimization of the following Tikhonov functional is proposed in [26]:

where is the Tikhonov regularization parameter, which will be chosen by the L-curve method, Morozov’s discrepancy principle, and numerical tests. The first and second Fréchet derivatives are given by (see Appendix A):

This least squares procedure is equivalent to solving the normal equation

where is the adjoint operator.
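For completeness, the step from the functional to the normal equation can be sketched as follows. The sketch assumes the functional has the standard form $J_\alpha(\varphi)=\|K\varphi-V^{\delta}\|^{2}+\alpha\|\varphi\|^{2}$; the symbols $\varphi$, $V^{\delta}$, $\alpha$ and the absence of a factor $1/2$ are assumptions, and $K$ stands for the operator of the problem at hand. Expanding $J_\alpha(\varphi+th)$ in powers of $t$:

```latex
\begin{aligned}
J_\alpha(\varphi+th) &= J_\alpha(\varphi)
   + 2t\,\langle K\varphi - V^{\delta},\,Kh\rangle
   + 2t\,\alpha\,\langle \varphi,\,h\rangle
   + t^{2}\left(\|Kh\|^{2}+\alpha\|h\|^{2}\right),\\
J_\alpha'(\varphi)\,h &= 2\,\langle K^{*}(K\varphi - V^{\delta}) + \alpha\varphi,\; h\rangle,\\
J_\alpha''(\varphi)(h,h) &= 2\left(\|Kh\|^{2}+\alpha\|h\|^{2}\right)\;\ge\;2\alpha\|h\|^{2}.
\end{aligned}
```

Setting the first derivative to zero for every direction $h$ yields $\alpha\varphi + K^{*}K\varphi = K^{*}V^{\delta}$, and the lower bound on the second derivative shows that the functional is strictly convex for $\alpha>0$, so this stationary point is its unique minimizer.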

According to Theorem 2.12 given in [26], the operator is boundedly invertible. Given , the exact solution to the auxiliary problem (3) in a circular annular region , in polar coordinates, is given by

where . The values , , are the Fourier coefficients of . The solution to the FP, called measurement, is given by , which is obtained by applying the operator , the identities

, ,

, , and then evaluating in , i.e.,

where the Fourier coefficients of exact measurement V are given by , for , 2, in which

In the numerical examples, the integrals are calculated using the function quadl of MATLAB.
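As a hedged illustration of this step, the sketch below computes Fourier coefficients of a periodic function with the composite trapezoidal rule on equispaced nodes. This is a substitute technique chosen for simplicity, not the adaptive quadrature of `quadl`. The normalization (coefficients defined with the factor 1/π) and the name `fourier_coefficients` are assumptions made for this example.

```python
import math


def fourier_coefficients(f, k: int, m: int = 256):
    """Return (a_k, b_k) of a 2*pi-periodic function f.

    Assumed convention: a_k = (1/pi) * integral of f(t) cos(k t) over [0, 2*pi],
    and b_k likewise with sin(k t). The integrals are approximated with the
    trapezoidal rule on m equispaced nodes; for periodic integrands this
    reduces to a plain sum over the nodes.
    """
    h = 2.0 * math.pi / m
    a = sum(f(j * h) * math.cos(k * j * h) for j in range(m)) * h / math.pi
    b = sum(f(j * h) * math.sin(k * j * h) for j in range(m)) * h / math.pi
    return a, b


# Usage: coefficients of a smooth test function for the first few modes.
coeffs = [fourier_coefficients(lambda t: math.exp(math.cos(t)), k) for k in range(1, 6)]
```

For smooth periodic integrands the trapezoidal rule converges very quickly, which is why a modest number of nodes is usually sufficient in this setting.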

The ‘exact solution’ u and the ‘exact measurement’ are generated taking terms of the Fourier series (15) and (16), with , which is obtained from numerical tests. To find the solution to the IP, we must solve the normal equations. To do this, we calculate the adjoint operator using its definition:

Without loss of generality, we consider functions in which the constant term of their series expansion is null. Using (16) and (18), we found

Thus, the adjoint operator is defined by ,

3.2. Tikhonov Regularization for the Classical Cauchy Problem

Given , the exact solution to the auxiliary problem (3) in a circular annular region is given, in polar coordinates, by (15). Therefore, the measurement is obtained with in (15):

which is the solution to the FP. The Fourier coefficients of V are given by

Therefore, the solution to the IP from the measurement with error

is given by the regularized solution

where

where are the Fourier coefficients of and is the Tikhonov regularization parameter. Thus, the solution to the IP (of the classical Cauchy problem) applying the Tikhonov regularization method (TRM) is given by (15), replacing the coefficients by the coefficients given by (25).
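The effect of the regularization on a single Fourier mode can be sketched as follows. The sketch uses the generic Tikhonov filter for an operator that multiplies mode k by a number `sigma_k`, and it assumes that the inner products are taken in the angular variable only, so that the adjoint has the same multiplier. The function names are hypothetical, and the exact coefficients of the method are those of (25).

```python
import math


def tikhonov_coefficient(v_k: float, sigma_k: float, alpha: float) -> float:
    """Regularized coefficient: solves (alpha + sigma_k^2) * phi_k = sigma_k * v_k."""
    return sigma_k * v_k / (alpha + sigma_k**2)


def naive_coefficient(v_k: float, sigma_k: float) -> float:
    """Coefficient obtained by direct division, without regularization."""
    return v_k / sigma_k


def max_gain(alpha: float) -> float:
    """Upper bound of sigma / (alpha + sigma^2) over all sigma > 0."""
    return 1.0 / (2.0 * math.sqrt(alpha))


# A data error of size 1e-3 in a mode with a tiny multiplier:
print(naive_coefficient(1e-3, 1e-6))           # amplified by 1/sigma_k
print(tikhonov_coefficient(1e-3, 1e-6, 1e-4))  # bounded by 1e-3 * max_gain(1e-4)
```

The bound returned by `max_gain` does not depend on the mode, which is the mechanism that keeps the regularized series stable when the data contain errors.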

4. Numerical Results

In this section, we illustrate the method proposed in this work using synthetic examples. We know the exact defined on in this case. Then, we calculated the measurement with and without noise by solving the FP for the classical and fractional Cauchy problem.

The exact measurement is calculated by solving the FP. To generate the measurements with error , we add a Gaussian error to the exact measurement using the function of MATLAB. Therefore, we define

where ‘’ is a vector of random numbers of length m (number of nodes on ) with a normal distribution. The corresponding numerical solutions are denoted by .
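A minimal sketch of this kind of data perturbation is given below. It assumes that the Gaussian vector is rescaled so that the relative error of the perturbed data equals a prescribed level `delta` exactly. This scaling, the fixed seed, and the name `add_gaussian_noise` are assumptions for illustration and may differ from the definition used in the experiments.

```python
import math
import random


def add_gaussian_noise(v, delta, rng=None):
    """Return v plus Gaussian noise scaled so that ||v_noisy - v|| / ||v|| == delta."""
    if rng is None:
        rng = random.Random(0)  # fixed seed so that the example is reproducible
    noise = [rng.gauss(0.0, 1.0) for _ in v]
    norm_v = math.sqrt(sum(x * x for x in v))
    norm_noise = math.sqrt(sum(e * e for e in noise))
    scale = delta * norm_v / norm_noise
    return [x + scale * e for x, e in zip(v, noise)]


# Usage: perturb samples of a smooth function on 64 equispaced nodes by 5%.
nodes = [2.0 * math.pi * j / 64 for j in range(64)]
exact = [math.cos(t) for t in nodes]
noisy = add_gaussian_noise(exact, 0.05)
```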

In this section, the relative error between the exact source and the recovered source is reported in the tables and denoted by . The relative error is given by

and the relative error between the exact measurement V and the measurement with error is denoted by , which is given by

where is the norm of the space .
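A discrete version of such a relative error can be sketched as follows, assuming that the norm is the L2 norm on the boundary circle and that both functions are sampled at the same equispaced nodes. In that case the quadrature weight cancels in the quotient. The name `relative_error` is hypothetical.

```python
import math


def relative_error(exact, approx):
    """Discrete relative L2 error ||approx - exact|| / ||exact|| on a common grid."""
    num = math.sqrt(sum((a - e) ** 2 for e, a in zip(exact, approx)))
    den = math.sqrt(sum(e * e for e in exact))
    return num / den


# Usage with two short sample vectors (multiply by 100 for a percentage).
print(relative_error([3.0, 4.0], [3.0, 4.5]))
```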

4.1. Solution to the IP Related to the Classical Cauchy Problem

In the following two examples, we consider a circular annular region with and ; then and are two circumferences of radii and (see Figure 1), respectively.

Example 1.

We take the ‘exact potential’ , , that in polar coordinates is . In this case, , and the solution to the forward problem, that is, the solution to the auxiliary problem (3), is given by

where . Then, the ‘exact solution’ V and the ‘measurement with error’ are generated with the first N terms of the Fourier series (22) and (23), respectively. In this case, we take values of , 25, and 30 terms. For smooth functions in the Cauchy data, these values of N are obtained by combining numerical tests and the following ideas: is approximated by a truncation choosing N such that we can guarantee that , where the approximation . From the Parseval equality and (22), where we found that the Fourier coefficients of V decay at least as , we infer

From this, we can take for obtaining the inequality. With this, we obtain an error regarding the truncation of the series expansion.
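The reasoning above can be turned into a small routine that proposes a truncation order. The sketch assumes that the auxiliary problem has a Dirichlet condition on the interior circle and a homogeneous Neumann condition on the exterior one. In that case the multiplier of mode k is bounded by 2q^k, where q is the ratio of the interior to the exterior radius. The routine returns the first N for which this bound falls below a tolerance. The radii, the tolerance, and the name `truncation_order` are hypothetical, and the final choice in the experiments also relies on numerical tests.

```python
def truncation_order(r1: float, r2: float, tol: float) -> int:
    """Smallest N such that 2 * (r1 / r2)**N < tol (assumed decay bound)."""
    q = r1 / r2  # 0 < q < 1 for an annulus
    n = 0
    while 2.0 * q**n >= tol:
        n += 1
    return n


# Usage with hypothetical radii 1 and 2 and a tolerance of 1e-6.
print(truncation_order(1.0, 2.0, 1e-6))
```

Modes beyond the returned index contribute to the measurement with a factor below the tolerance, so truncating there changes the synthetic data only at that level.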

Now, the measurement error is simulated by adding a random error to each Fourier coefficient , , such that , which guarantees that .

For other functions, such as the absolute value and the jump function, we have to choose other values of N. This is shown in Examples 3 and 4, which are included in Section 4.2.6.

Thus, we consider synthetic examples; that is, we examine the fundamental elements of the problems studied, such as how real data are generated and the inherent error within them. In this way, we attempt to emulate the characteristics of real-world problems so that our proposal is closely aligned with providing a solution to them.

Therefore, the measurement with error is given by the series

where are the Fourier coefficients of . The regularized solution to the inverse problem is given by the series (24) truncated to N terms. The solution without regularization to the IP is given by

where the coefficients are given by

Remark 1.

Table 1 shows the numerical results for data with and without error, applying TRM to solve the IP of the classical Cauchy problem (2). In this case, we observe that the solutions with regularization have a percentage of relative errors around , equal to the percentage of error included in the data with error for . The regularization parameter was chosen as for , 25, and 30. Also, we can see that the decreases when the error tends to zero, while the increases for each value of N. In particular, the increases faster when , for , , and . In this case, the regularization parameter depends on .
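One way in which a parameter that depends on the error level can be selected is Morozov’s discrepancy principle. The sketch below is a hedged illustration for an operator that multiplies mode k by `sig[k]`: it searches, by bisection on a logarithmic scale, for the parameter at which the residual norm of the Tikhonov solution equals a given noise level. The function names, the search interval, and the sample data are assumptions and do not reproduce the values of the tables.

```python
import math


def discrepancy(alpha, v, sig):
    """Residual norm ||K phi_alpha - v|| of the Tikhonov solution, mode by mode."""
    return math.sqrt(sum((alpha * vk / (alpha + sk * sk)) ** 2 for vk, sk in zip(v, sig)))


def morozov_alpha(v, sig, noise_level, lo=1e-16, hi=1e4, iters=200):
    """Find alpha with discrepancy(alpha) == noise_level by geometric bisection.

    Assumes discrepancy(lo) < noise_level < discrepancy(hi); the residual norm
    increases with alpha, so the root in between is unique.
    """
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if discrepancy(mid, v, sig) > noise_level:
            hi = mid
        else:
            lo = mid
    return math.sqrt(lo * hi)


# Usage with hypothetical data coefficients and multipliers.
alpha = morozov_alpha([1.0, 0.5, 0.25], [0.8, 0.4, 0.1], 0.1)
```

A smaller noise level leads to a smaller parameter, which is consistent with a regularization parameter that depends on the error in the data.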

Table 1.

Numerical results applying TRM to solve the IP related to the classical Cauchy problem (2), for , , and different values of and N.

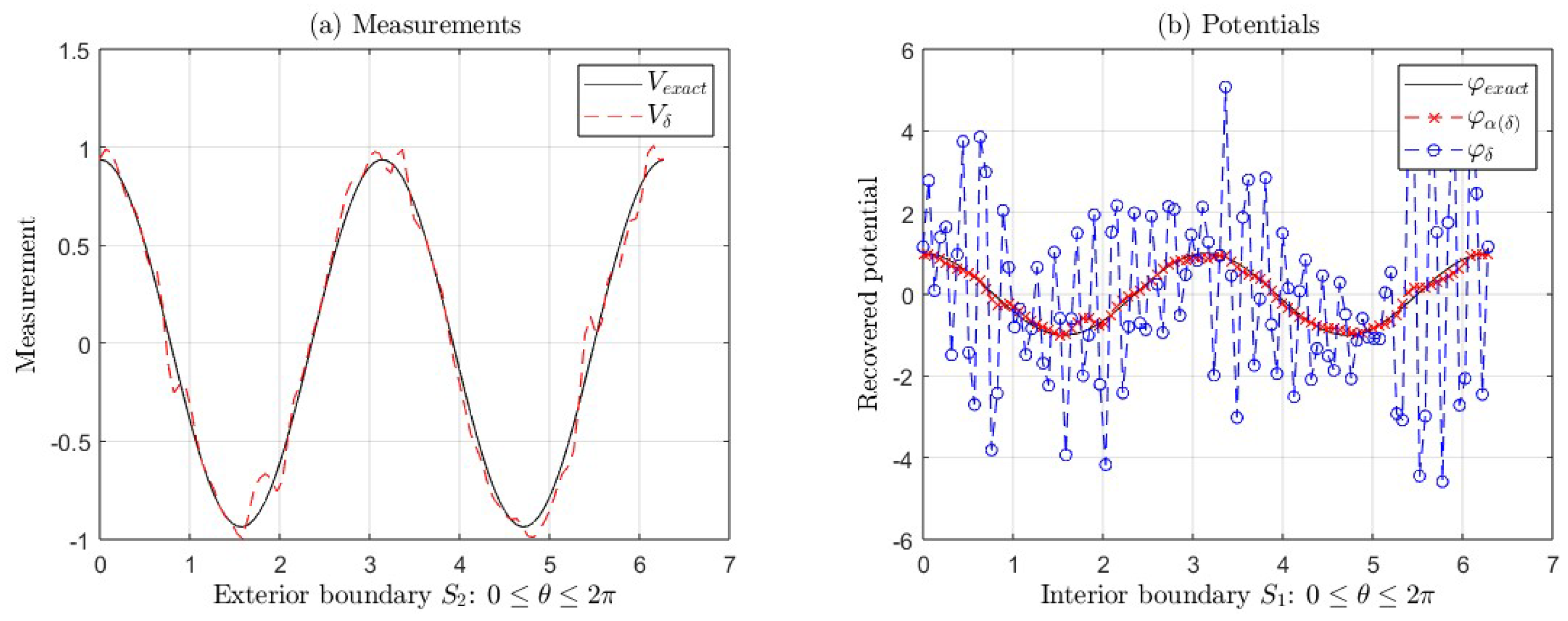

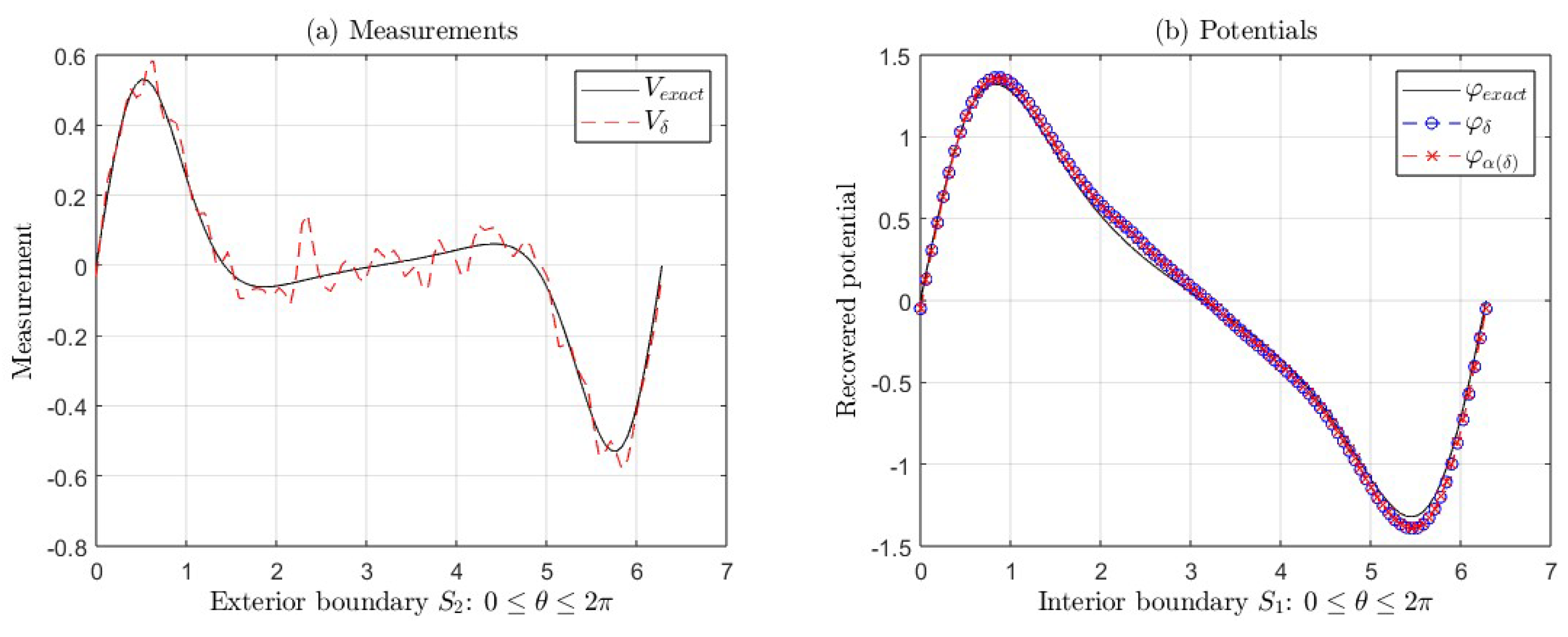

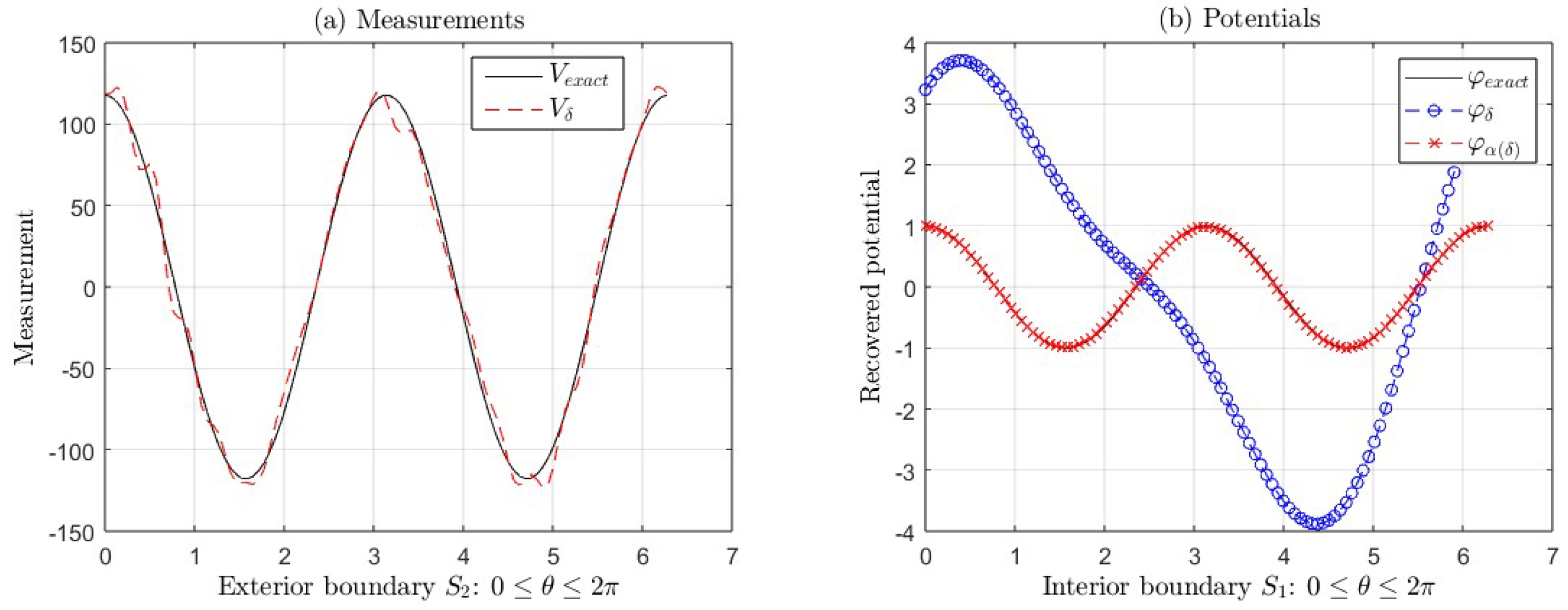

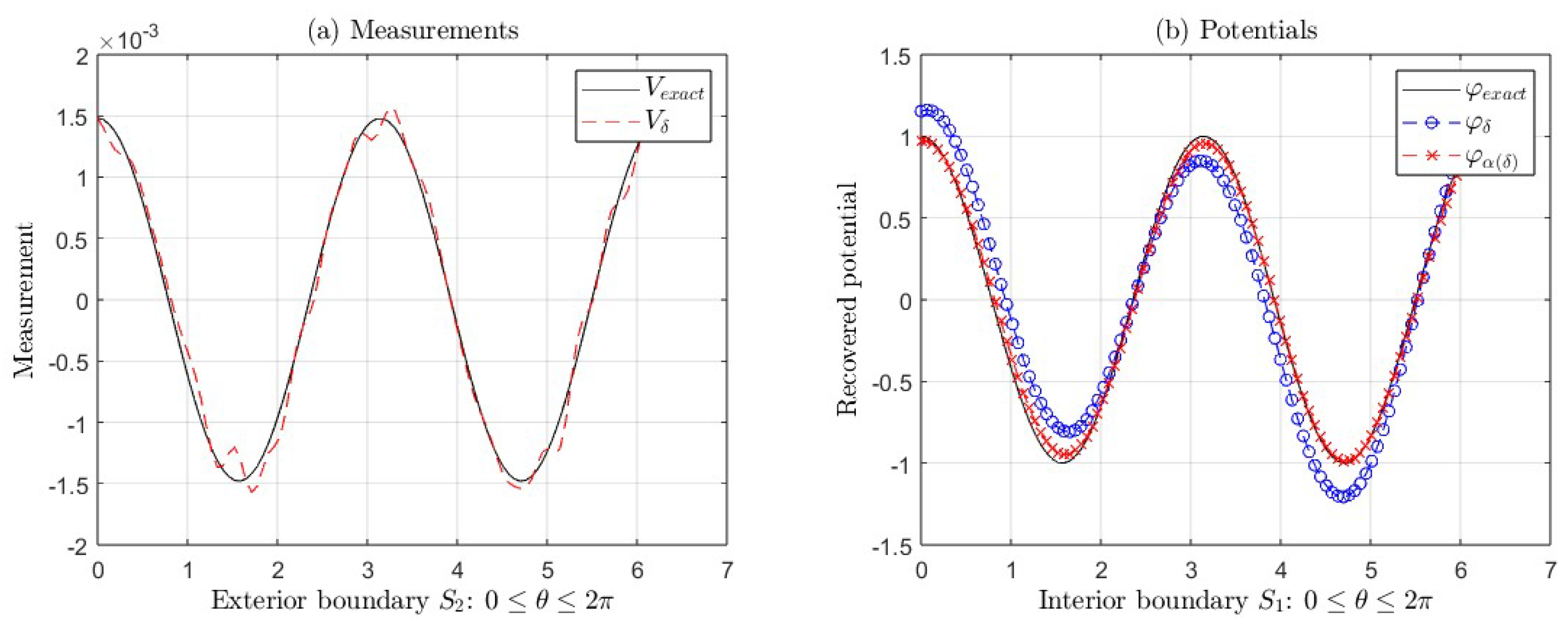

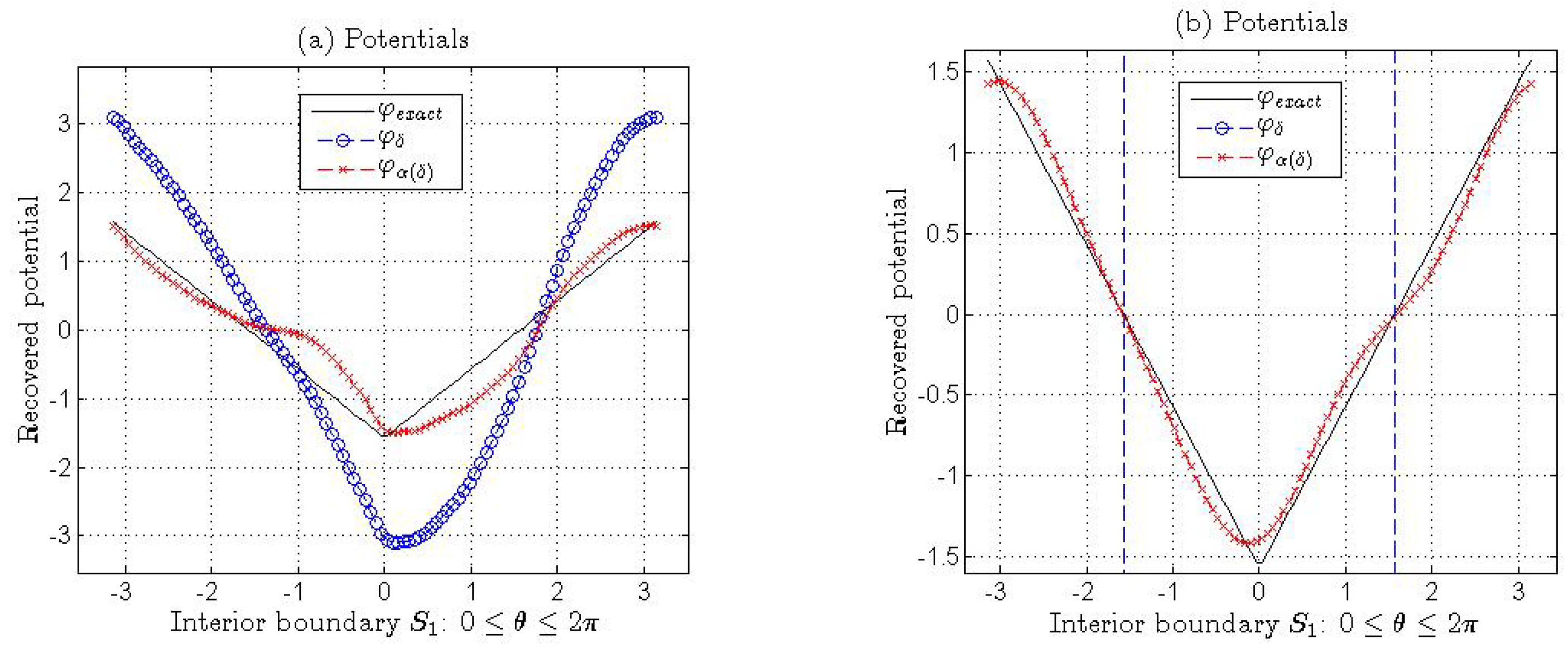

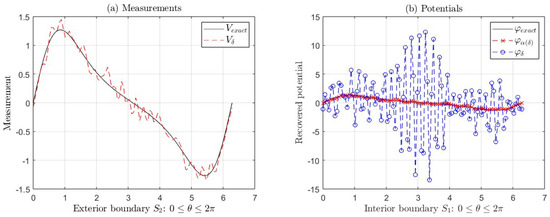

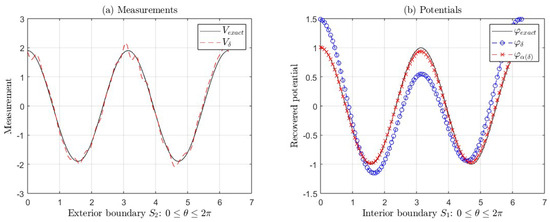

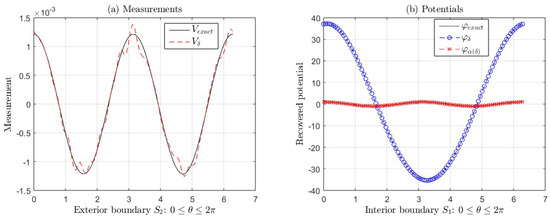

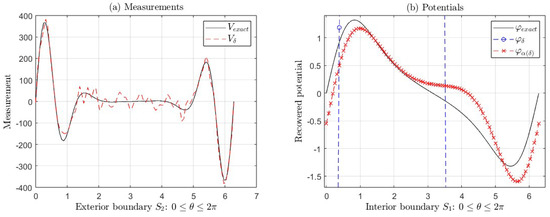

Figure 2a,b show the graphs of the exact measurement V and with error , the graphs of the exact potential and its approximations (with regularization) and (without regularization) taking and , corresponding to Example 1, for (see Table 1). In Figure 2b, we can see the ill-posedness of the inverse problem if we do not apply regularization, where and .

Figure 2.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and applying regularization and without regularization, corresponding to Example 1 for (see Table 1). We take and in this case.

Example 2.

We consider the ‘exact potential’ , for . Similar to the first example, the ‘exact measurement’ V and the ‘measurement with error’ are generated with the first N terms of the Fourier series (22) and (23), respectively, such that , with . In this case, and the Fourier coefficients , are obtained numerically using the intrinsic function of . Here, we take values of , 25, and 30 terms.

Table 2 shows the numerical results for data with and without error, applying TRM to solve the IP of the classical Cauchy problem (2). Analogous to Example 1, we can observe that the solutions with regularization have a percentage of relative errors around , equal to the percentage of error included in the data with error for . Also, we can see that the decreases when the error tends to zero, while the increases for each value of N. In particular, the increases when for each , , and . As in the previous example, the regularization parameter depends on , and we take for each value of , 25, and 30.

Table 2.

Numerical results applying TRM to solve the IP related to the classical Cauchy problem (2), for , and different values of and N.

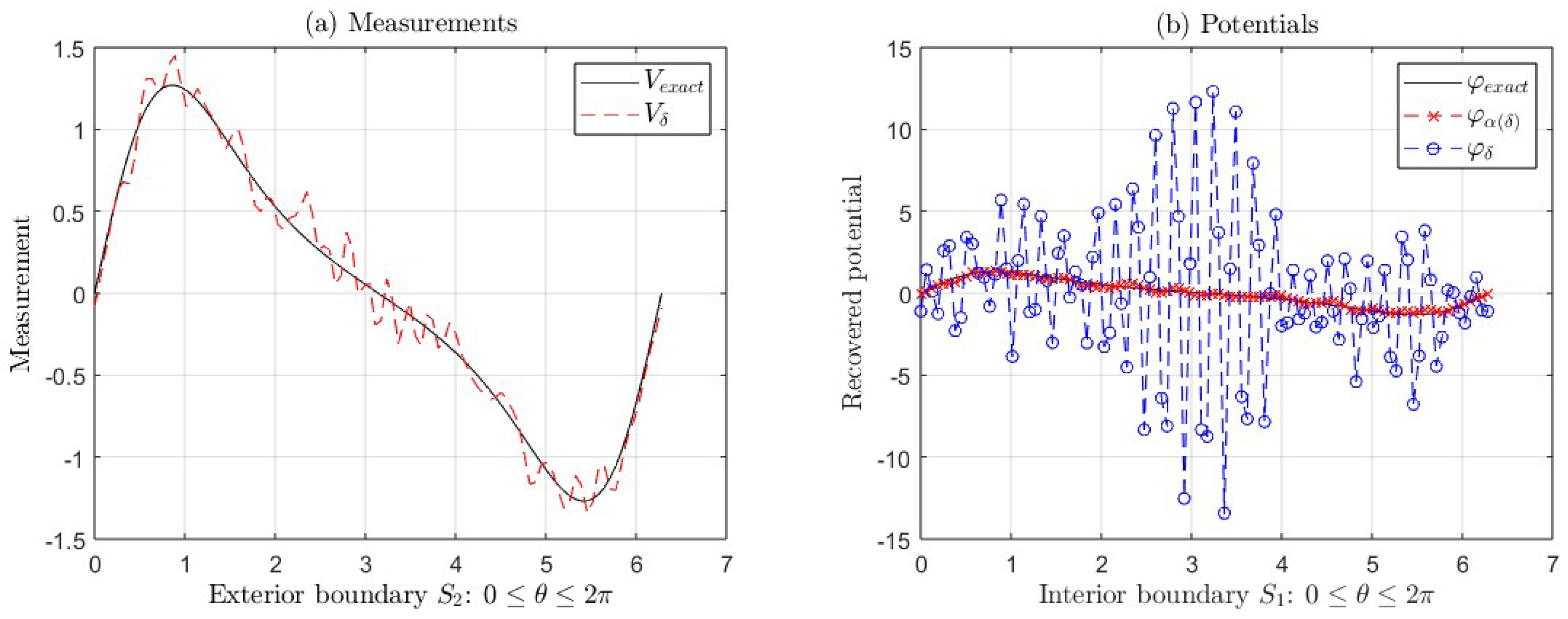

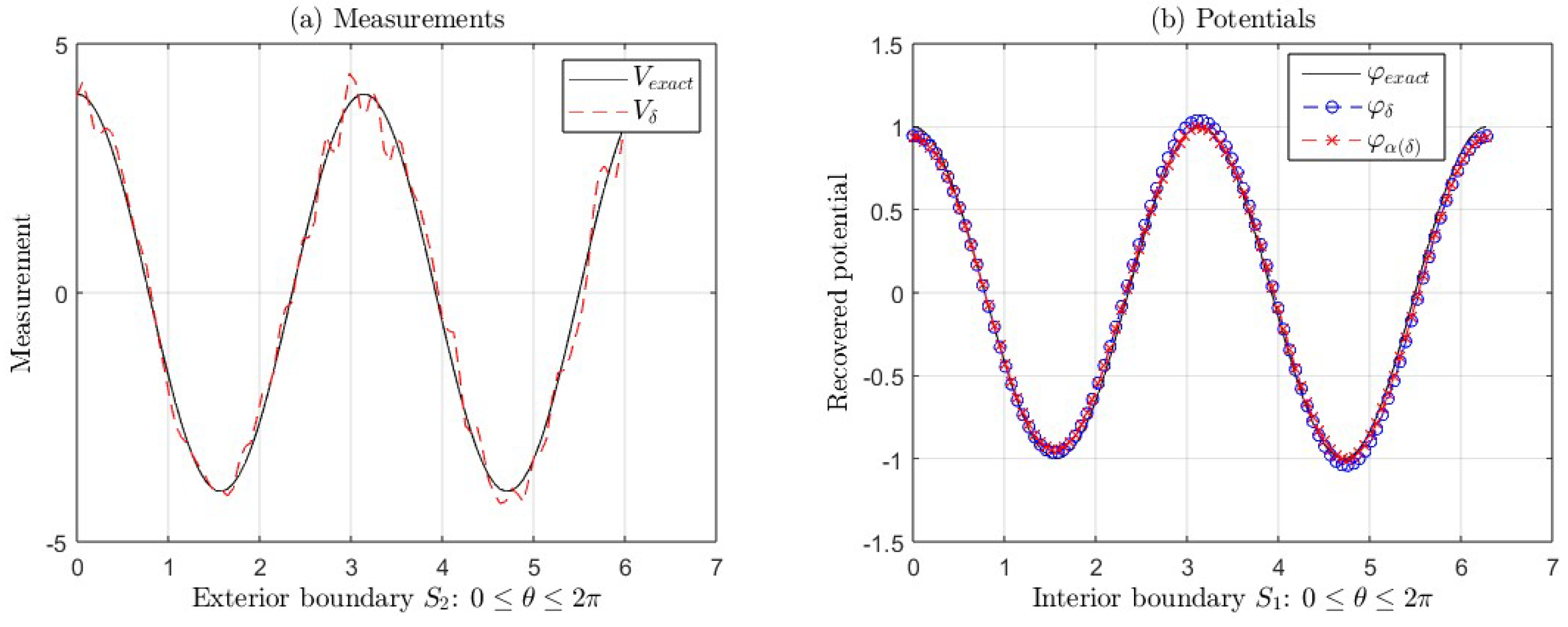

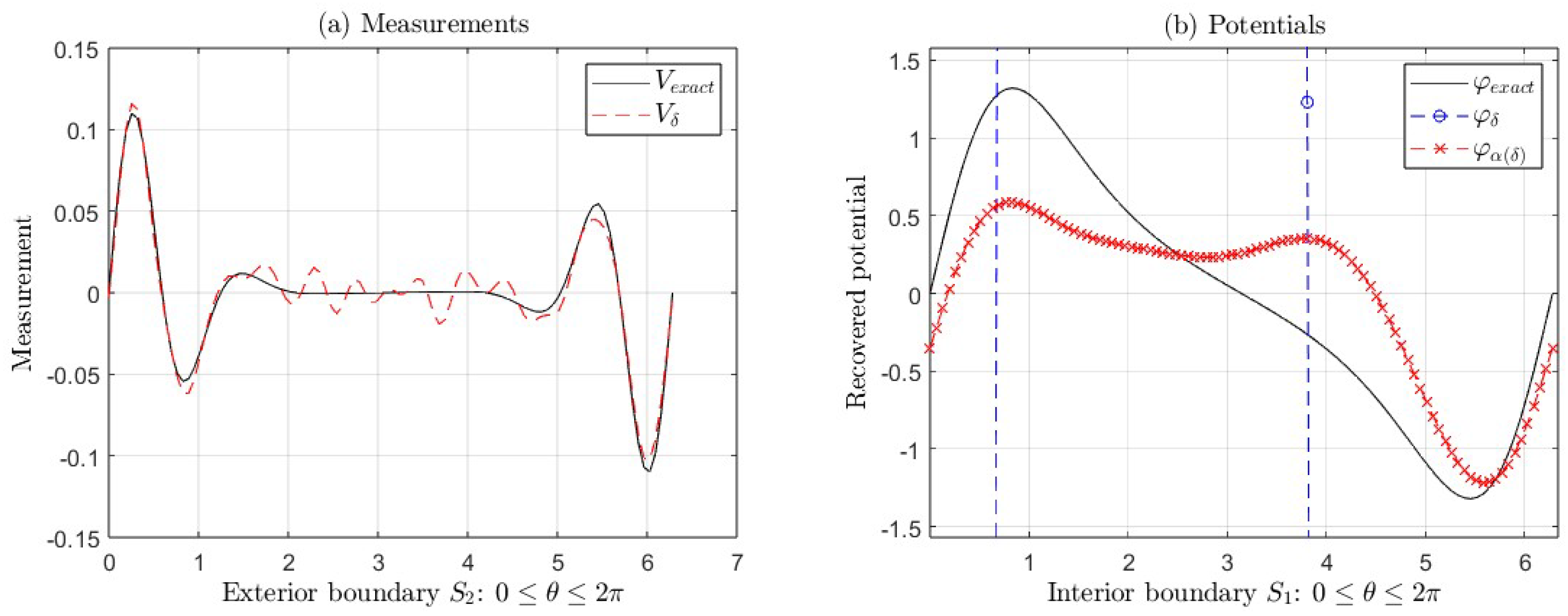

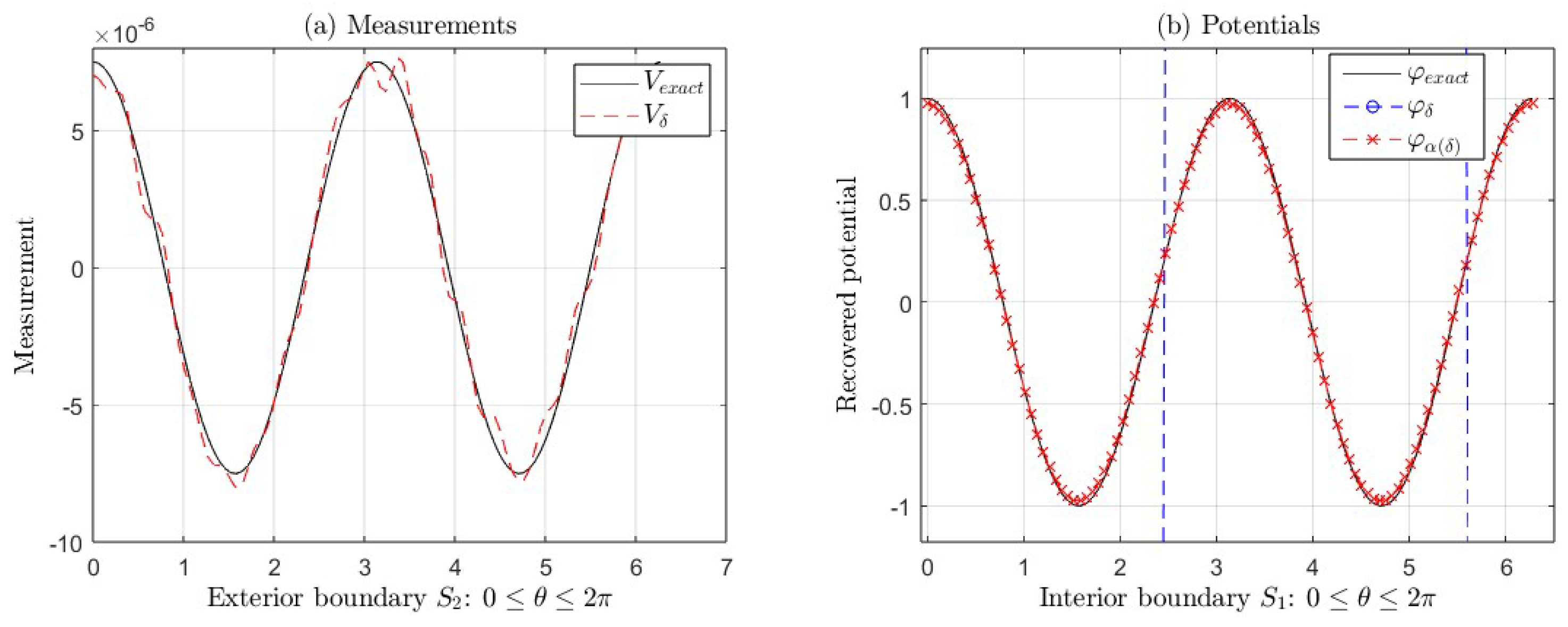

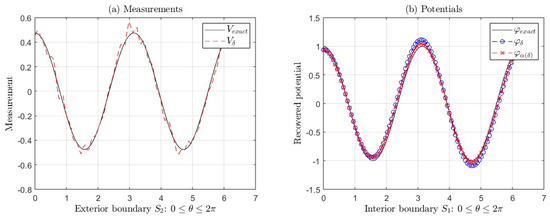

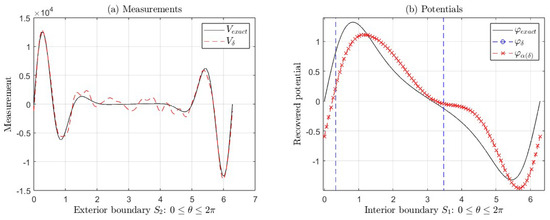

Figure 3a,b show the graphs of the exact measurement V and with error , the graphs of the exact potential and its approximations (with regularization) and (without regularization) taking and , corresponding to Example 2, for (see Table 2). In Figure 3b, we can see the ill-posedness of the inverse problem if we do not apply regularization. In this case, and .

Figure 3.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and applying regularization and without regularization, corresponding to Example 2 for (see Table 2). In this case, we take and .

4.2. Solution to the IP Related to the Fractional Cauchy Problem

In this section, we look into the performance of the TRM to solve the IP of the fractional Cauchy problem (12) in a circular annular region with and . Then, and are two circumferences of radii and (see Figure 1), respectively. In this case, we consider as ‘exact potentials’ the two functions from the previous subsection: and , for .

Similar to the previous subsection, the ‘exact solution’ V and the ‘measurement with error’ are obtained by truncating the series (16) and (23) up to N terms, respectively; furthermore, the Fourier coefficients , , and (given by (17)) are obtained numerically using the function of .

In this case, we take values of , 20, 25, and 30 terms. Therefore, the measurement with error is given by the series (23) truncated to N terms. The regularized solution to the IP is given by the series (21) truncated to N terms. Also, the solution without regularization to the IP is given by (29), where

Remark 2.

4.2.1. Case 1: and , When Tends to Zero

In this section, we consider the case when , , and for different values of close to zero. Table 3 and Table 4 show the relative errors of the approximations and , when tends to zero, for the two exact functions considered in Section 4.1. In both cases, we observe that the of the solutions with regularization is less than the for each value of and N given in these tables. Additionally, the and are of the same order, i.e., the solutions without regularization are close to regularized solutions for and . In both cases, the measurements with errors do not have much impact on the recovered solution , and they are close to . We observe from the relative errors that regularized approximations are better than those without regularization. In this case, the regularization parameter depends on , N, m, and .

Table 3.

Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12), for , , and different values of and N.

Table 4.

Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12), for , , and different values of and N.

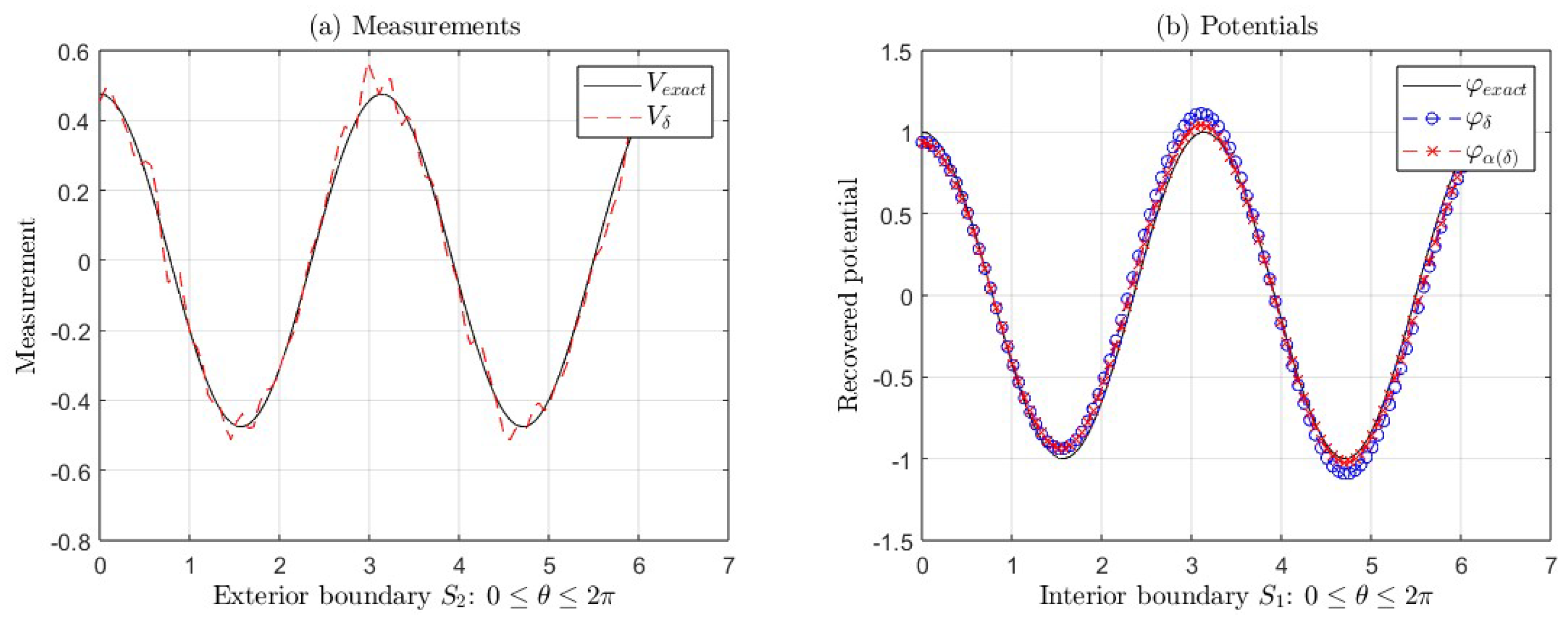

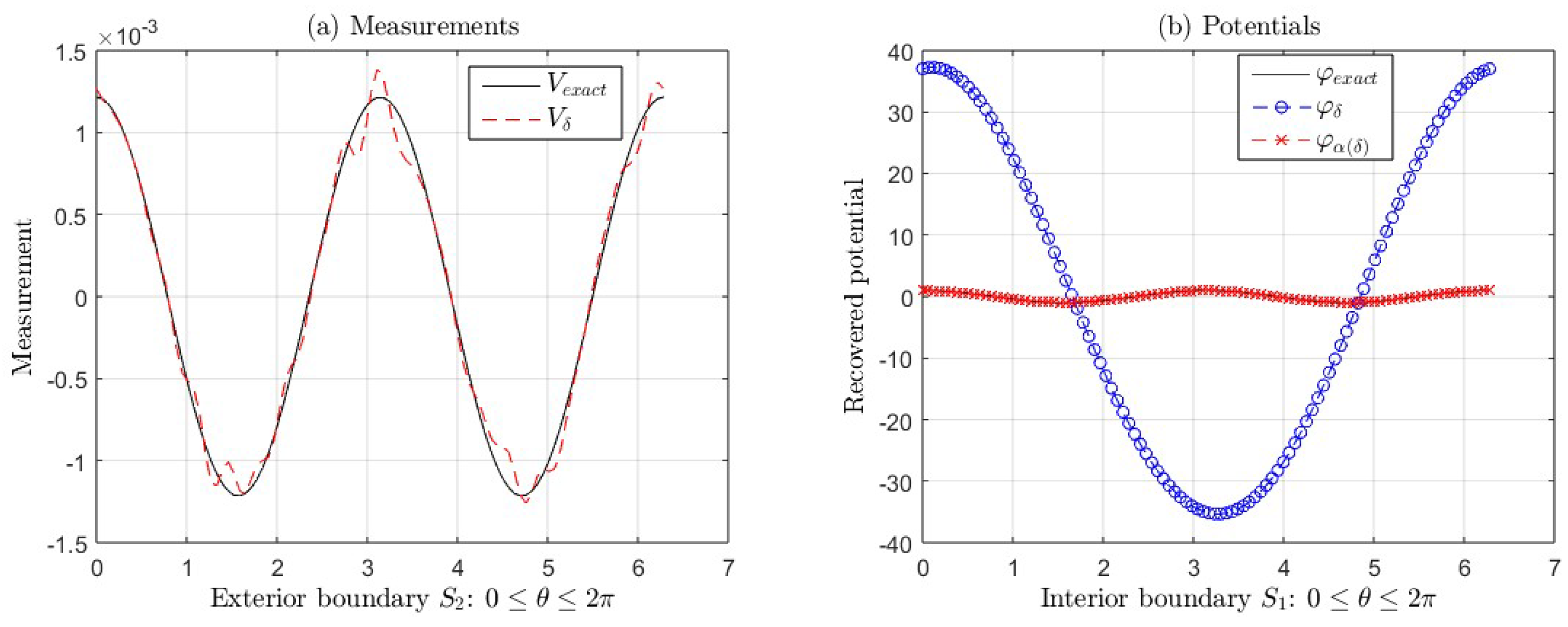

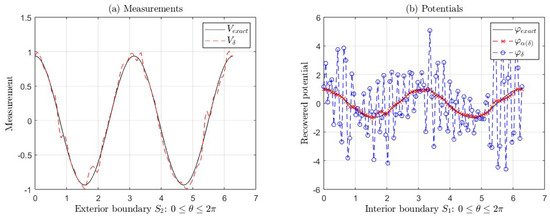

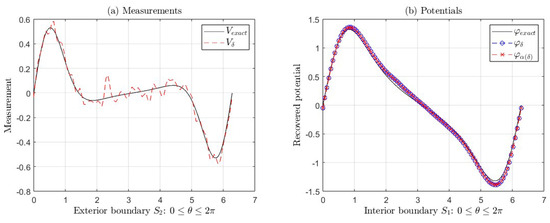

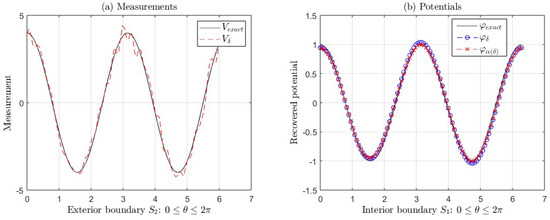

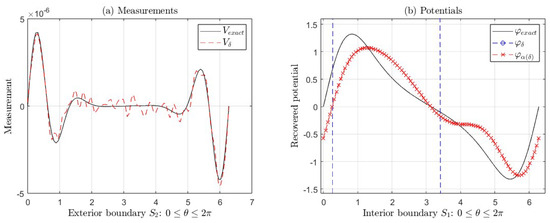

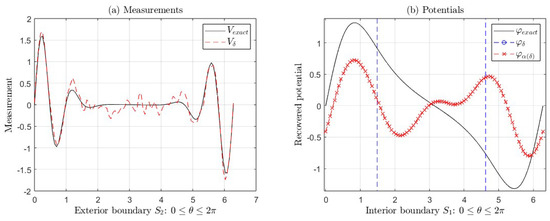

Considering , , and , we show the graphs for the following potentials (Figure 4) and (Figure 5) for where the following is true:

Figure 4.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for (see Table 3). In this case, we take and .

Figure 5.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for (see Table 4). In this case, we take and .

- (a) The exact measurement V and the measurement with error .

- (b) The exact potential and its approximations (with regularization) and (without regularization) taking and .

4.2.2. Case 2: , for and

Table 5 and Table 6 show the relative errors of the approximations and when for the two exact functions considered in Section 4.1.

Table 5.

Case 2: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

Table 6.

Case 2: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

In Table 5, we observe that for each value of N, , and m given in this table. Also, the and are of the same order, i.e., the solutions without regularization are close to regularized solutions , for , , 25, 30, , and . We can see similar results in Table 6 for , , 25, 30, , , , and 3; however, the regularized approximations are better than the solutions without regularization. Furthermore, and these increase suddenly, starting at and (see Table 5 and Table 6) for the functions and for , respectively. As in the previous case, the regularization parameter changes depending on , N, m, and .

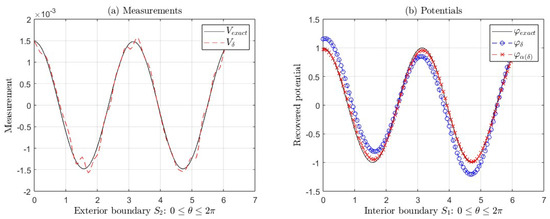

We show the graphs for the following functions:

- •

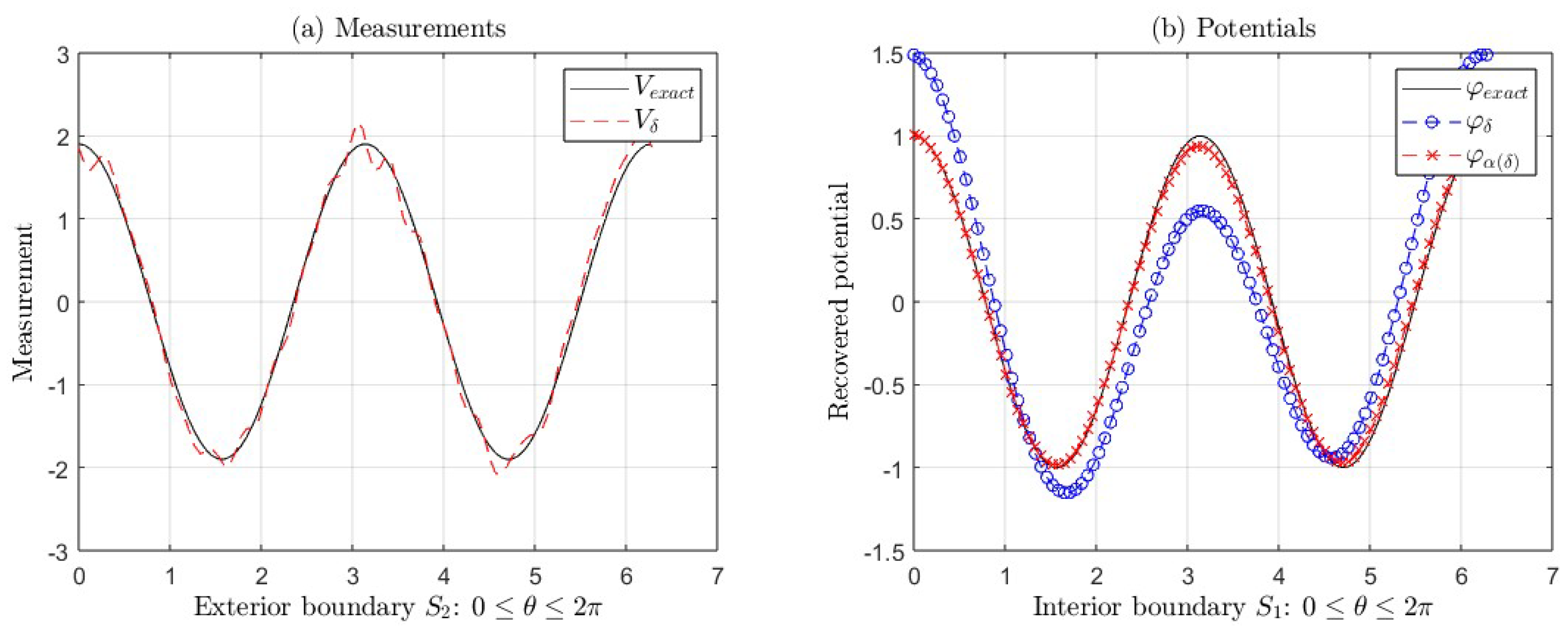

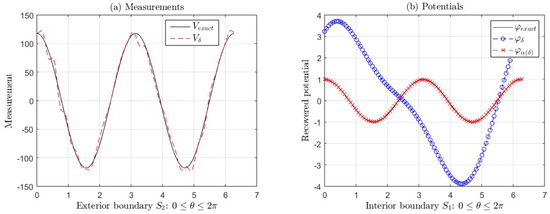

Figure 6. (a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 5). In this case, we take and .

Figure 7. (a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 5). In this case, we take and .

- •

- (Figure 8 with parameters , , , , and ).

Figure 8. (a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for , , and (see Table 6). In this case, we take and .


For . These figures show the following:

- (a) The exact measurement V and the measurement with error .

- (b) The exact potential and its approximations (with regularization) and (without regularization).

In both cases, as mentioned in the previous paragraph, the errors increase suddenly, starting at for the first function and for the second one, as can be seen in Figure 7b and Figure 8b, where we can see the ill-posedness of the IP if we do not apply regularization. For example, for the second function, the is much greater than for , , and (see Table 6). However, in this same example, for , 10, 11, and 12, the increases around 90%. Nevertheless, is bigger than . In this case, we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP.
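The text does not specify which iterative method would be used. As one hypothetical choice, the sketch below applies the Landweber iteration, started from a regularized solution, to an operator that multiplies mode k by `sig[k]`. The function name, the relaxation parameter `omega`, and the number of iterations are assumptions. With noisy data, the iteration count itself acts as a regularization parameter and has to be limited by a stopping rule.

```python
def landweber(v, sig, phi0, omega=1.0, iters=50):
    """Landweber iteration phi <- phi + omega * K^*(v - K phi) for a diagonal K.

    Converges for exact data when 0 < omega < 2 / max(sig)**2.
    """
    phi = list(phi0)
    for _ in range(iters):
        phi = [p + omega * s * (vk - s * p) for p, s, vk in zip(phi, sig, v)]
    return phi


# Usage with hypothetical multipliers, exact data, and a Tikhonov starting point.
sig = [0.8, 0.4]
exact = [2.0, 1.0]
v = [s * e for s, e in zip(sig, exact)]
start = [s * vk / (0.1 + s * s) for s, vk in zip(sig, v)]
refined = landweber(v, sig, start)
```

Starting from the regularized solution instead of zero reduces the initial error in the well-resolved modes, so fewer iterations are needed before a stopping rule is triggered.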

4.2.3. Case 3: , When Is Next to or m and

This section considers the case when and is next to or m. Table 7 and Table 8 show the relative errors of the approximations and when for the same two exact functions considered in Section 4.1.

Table 7.

Case 3: Numerical results applying TRM to solve the IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

Table 8.

Case 3: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

In Table 7, we observe that the are less than the for each value of N, , and m given in this table. We can see that and are of the same order for , 2, i.e., the solutions without regularization are close to regularized solutions . However, the regularized approximations are better than the solutions without regularization for , 2. Nonetheless, increases more than starting at . Furthermore, we can observe similar results in Table 8, where the are less than the for , 2, 3, 4, with , , and the different values of are close to m or given in this table. For the values of , 6, 7, and 8, the are around the percentage of the . For the other values of , 10, 11, and 12, given in Table 8, the corresponding increases around 90%, but no more than , i.e., the TRM does not provide a good approximate solution to the IP. In this case, we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP. Furthermore, the relative errors of the recovered solutions without applying regularization increase suddenly, starting at and (see Table 7 and Table 8) for the functions and for , respectively. As in the previous cases, the regularization parameter changes depending on , N, m, and .

We show the graphs for the following functions:

- •

- (Figure 9 with parameters , , , , and ).

Figure 9. (a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 7). In this case, we take and .

- •

- (Figure 10 with parameters , , , , and ).

Figure 10. (a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for , , and (see Table 8). In this case, we take and .

For . These figures show the following:

- (a) The exact measurement V and the measurement with error .

- (b) The exact potential and its approximations (with regularization) and (without regularization).

In both cases, as mentioned in the previous paragraph, the errors increase starting at for the first function and starting at for the second one, as can be seen in Figure 9b and Figure 10b for , where we can see the ill-posedness of the IP if we do not apply regularization. For example, for the first function, the is greater than for , , and (see Table 7). For the second one, the is greater than for , , and (see Table 8). In this latter function, the approximate solution is far from the exact solution . In this case, we could apply an iterative method to obtain a better solution, taking as an initial point.

4.2.4. Case 4: , for ,…,12 and

In this section, we consider the case when for , 3,…,12 and . Table 9 and Table 10 show the relative errors of the approximations and when , with the same two exact functions considered in Section 4.1.

Table 9.

Case 4: Numerical results applying TRM to solve the IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

Table 10.

Case 4: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for , and different values of m, where , .

In Table 9, we observe that the from solutions with regularization are less than the . For some values of N, , and m given in this same table, we can see that and are of the same order, i.e., the solutions without regularization are close to regularized solutions ; however, the regularized solutions are better than the solutions without regularization. The increases faster than the starting at . Furthermore, we can observe similar results in Table 10, where the are of the same order as for , 3, 4, with , , except for . Nevertheless, the increases faster than the starting at . For the values of , 9, 10, 11, and 12, the increases between 40% and 90%, but no more than . In this case, the TRM does not provide a good approximate solution to the IP. However, as mentioned before, we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP. Also, the relative errors of the recovered solutions without applying regularization increase suddenly, starting at and (see Table 9 and Table 10) for the functions and for , respectively. Here also, as in the previous cases, the parameter of regularization changes depending on , N, m, and .

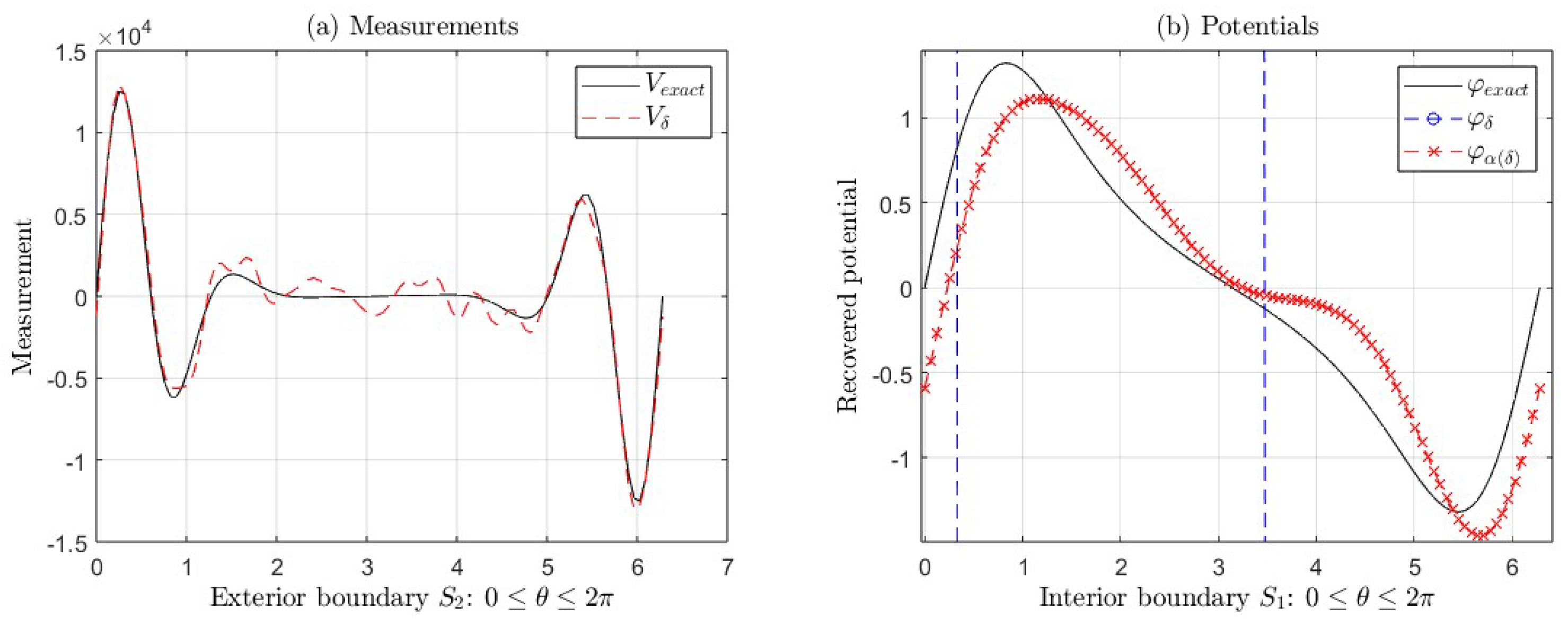

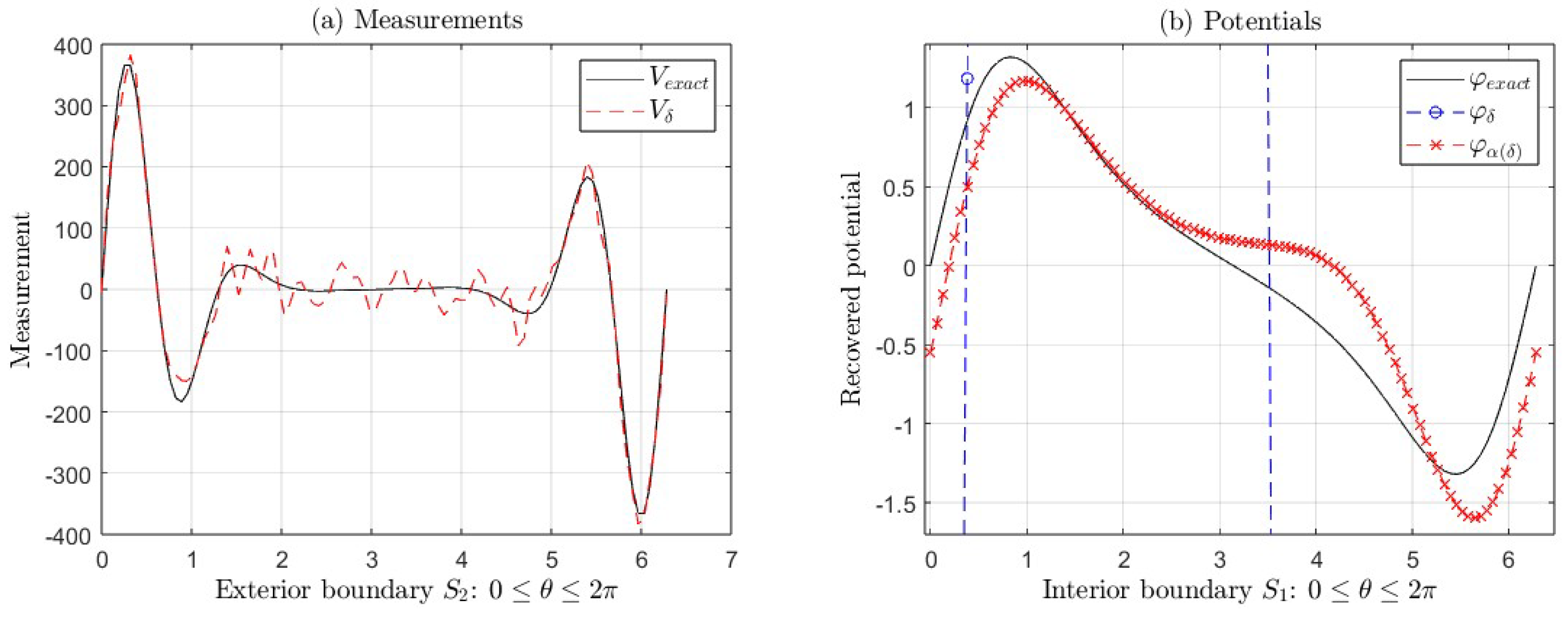

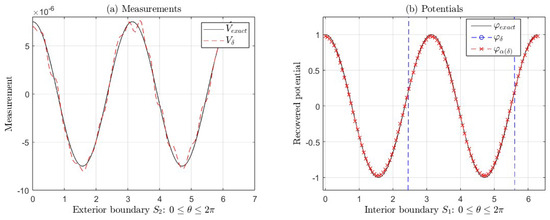

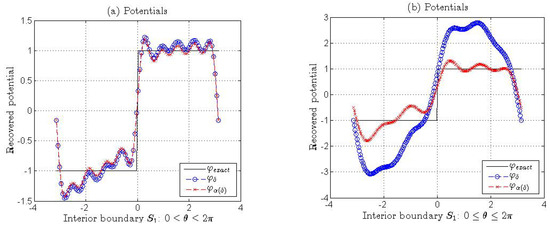

Figure 11 and Figure 12 show the graphs of the exact measurement V and with error with , the graphs of the exact potential and its approximations (with regularization) and (without regularization), corresponding to the functions and for , respectively. In both cases, as mentioned in the previous paragraph, the errors increase suddenly, starting at for the first function and starting at for the second one, as can be seen in Figure 11b and Figure 12b, where we can see the ill-posedness of the IP if we do not apply regularization. For example, for the first function, the relative error is greater than for , , and (see Table 9). For the second one, the is greater than for , , and (see Table 10). In this case, we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP.

Figure 11.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 9). In this case, we take and .

Figure 12.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for , , and (see Table 10). In this case, we take and .

4.2.5. Case 5: , When Is Next to n or , Where , for and

In this section, we consider the case when , when is next to n or , where , for and . Table 11 and Table 12 show the relative errors of the approximations and when for the same two exact functions considered in Section 4.1.

Table 11.

Case 5: Numerical results applying TRM to solve the IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

Table 12.

Case 5: Numerical results applying TRM to solve the IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

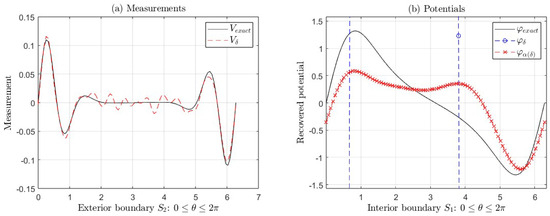

In Table 11, we observe that . For , we can see that and are of the same order when is next to 1 or 0 (taking ), i.e., the solutions without regularization are close to regularized solutions . However, the regularized approximations are better than the solutions without regularization. The increases faster than the starting at , as shown in Figure 13b for , , and , where and . These approximations, and , are recovered from measurements with error , shown in Figure 13a. Also, we can observe similar results in Table 12, where and are of the same order for , 3, 4, and when is next to n or (taking , 1, and 3, respectively), for . Nevertheless, the increases between 17% and 38%, but no more than the for , 6, 7, and 8. For the values of , 10, 11, and 12, the increases by around 90%, but no more than . In this case, we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP. Nevertheless, the relative errors of the recovered solutions without applying regularization increase suddenly, starting at and (see Table 11 and Table 12) for the functions and for , respectively. Here, the regularization parameters also change depending on , N, m, and .

Figure 13.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 11). In this case, we take and .

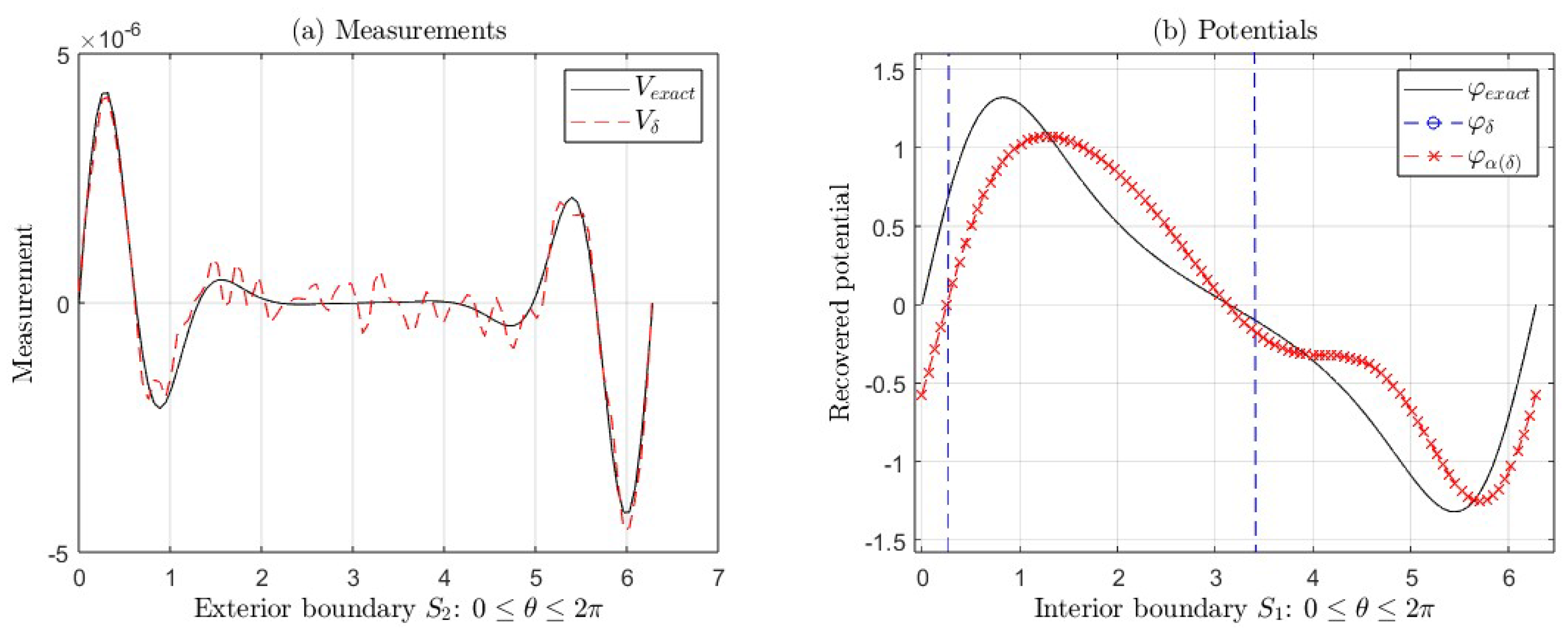

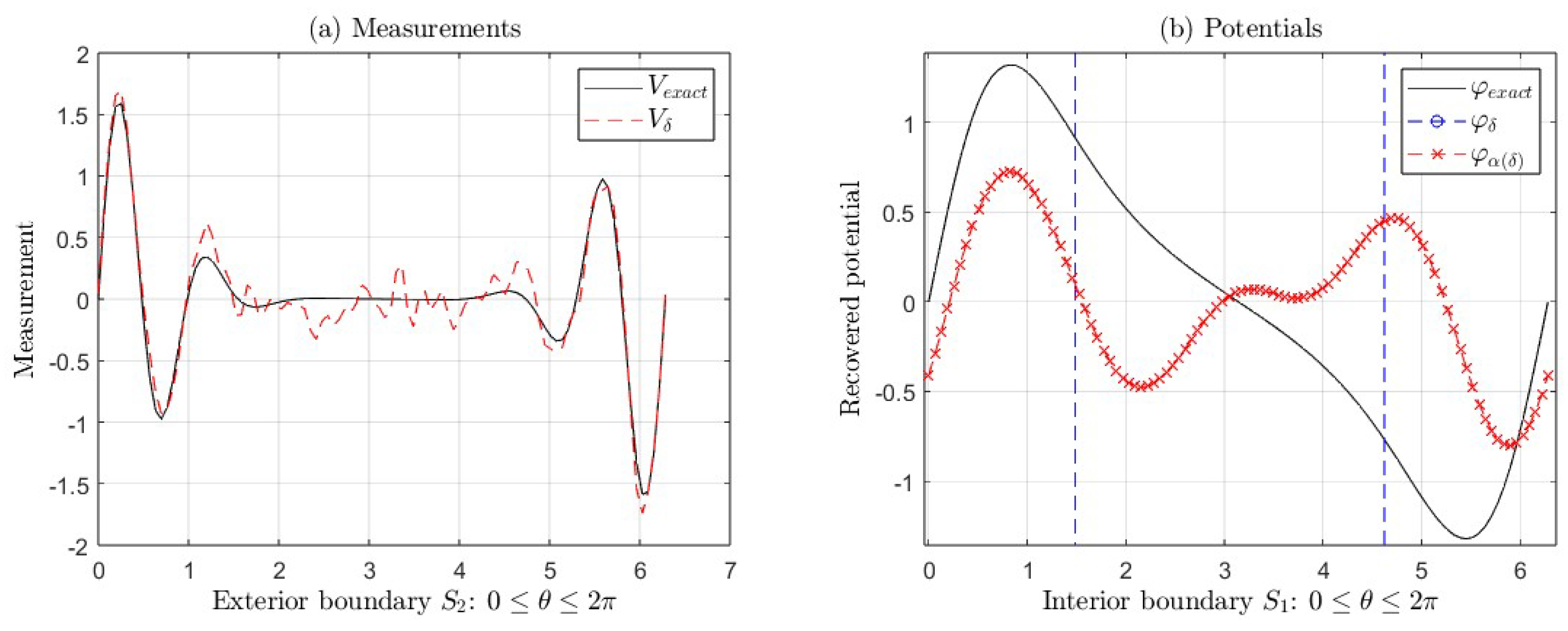

Figure 13, Figure 14, Figure 15 and Figure 16 show the graphs of the exact measurement V and with error with , the graphs of the exact potential and its approximations (with regularization) and (without regularization), corresponding to the functions and for , respectively. In both cases, as mentioned in the previous paragraph, the errors increase suddenly, starting at for the first function and starting at for the second one, as can be seen in Figure 14b, Figure 15b, and Figure 16b, where we can see the ill-posedness of the IP if we do not apply regularization for , 8, and , respectively. For example, for the approximations and shown in Figure 14b of the first function, the is much greater than for , , and (see Table 11). For the approximations and shown in Figure 15b of the second one, the is much greater than , for , , and y (see Table 12). Lastly, for the approximations and shown in Figure 16b of the second one, the is greater than for , , and (see Table 12). In these last two examples, when the approximate solutions are not close to the exact solution , we could use the regularized solution as an initial point of an iterative method to recover a better solution to the IP.

Figure 14.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 1 for , , and (see Table 11). In this case, we take and .

Figure 15.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for , , and (see Table 12). In this case, we take and .

Figure 16.

(a) Exact measurement V (black line) and with error (red line). (b) Exact potential and its approximations and , corresponding to Example 2 for , , and (see Table 12). In this case, we take and .

4.2.6. Case 6: , for ,…,13 and

Here, we consider the case when , with for . Table 13 and Table 14 show the relative errors of the approximations and when for the same two exact functions considered in Section 4.1.

Table 13.

Case 6: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

Table 14.

Case 6: Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

In Table 13, we observe that . For , we can see that and are of the same order when , i.e., the solutions without regularization are close to regularized solutions . However, the regularized approximations are better than the solutions without regularization. Additionally, the relative errors of the solutions without regularization increase faster, starting at . Furthermore, we can observe similar results in Table 14. In this case, the relative errors from solutions with regularization are less than the for , and increase between 29% and 46% for , when . Nevertheless, the corresponding relative errors of the solutions with regularization increase between 88% and 96% for , but no more than the corresponding . The relative errors of the recovered solutions without regularization increase suddenly, starting at . For example, for and , the . For , the increases between 91% and 99%, but no more than the , for , as well as for and with . Moreover, as in the previous cases, the regularization parameters change depending on the data with error , the values N, m, and . Analogous results can be obtained for values , as those obtained for , which are not included in this work.

In the following two examples, we have considered non-smooth functions.

Example 3.

We consider the ‘exact potential’ , for , which in polar coordinates is given by , for . Resembling the first example, the ‘exact measurement’ V and the ‘measurement with error’ are generated with the first N terms of the Fourier series (22) and (23), respectively, such that , with . For , . In this case, and the Fourier coefficients , are obtained numerically using the intrinsic function of .
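The data-generation step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the program used for the tables: the function `phi` is a hypothetical non-smooth stand-in, the trapezoidal rule replaces the intrinsic integration routine, and the noise model (random coefficient perturbations rescaled so that the L2(0, 2π) norm of the perturbation equals a chosen `delta`) is an assumption. All function names are illustrative.

```python
import numpy as np

def fourier_coefficients(func, n_terms, n_quad=4096):
    """Approximate a_0, a_k, b_k (k = 1..n_terms) of a 2*pi-periodic function
    with the trapezoidal rule on a uniform grid."""
    theta = np.linspace(0.0, 2.0 * np.pi, n_quad, endpoint=False)
    values = func(theta)
    k = np.arange(1, n_terms + 1)[:, None]
    a0 = 2.0 * values.mean()                      # a_0 = (1/pi) * integral of f
    a = 2.0 * (values * np.cos(k * theta)).mean(axis=1)
    b = 2.0 * (values * np.sin(k * theta)).mean(axis=1)
    return a0, a, b

def partial_sum(a0, a, b, theta):
    """Evaluate the truncated Fourier series at the angles theta."""
    k = np.arange(1, a.size + 1)[:, None]
    cos_part = (a[:, None] * np.cos(k * theta)).sum(axis=0)
    sin_part = (b[:, None] * np.sin(k * theta)).sum(axis=0)
    return a0 / 2.0 + cos_part + sin_part

def perturb_coefficients(a0, a, b, delta, rng):
    """Add random coefficient noise whose L2(0, 2*pi) norm equals delta
    (assumed noise model)."""
    e0 = rng.standard_normal()
    ea = rng.standard_normal(a.size)
    eb = rng.standard_normal(b.size)
    norm = np.sqrt(np.pi * (e0**2 / 2.0 + np.sum(ea**2 + eb**2)))
    s = delta / norm
    return a0 + s * e0, a + s * ea, b + s * eb

# Hypothetical non-smooth test function; not necessarily the one of Example 3.
phi = lambda t: np.abs(np.cos(t))
N = 20
a0, a, b = fourier_coefficients(phi, N)
rng = np.random.default_rng(0)
a0_d, a_d, b_d = perturb_coefficients(a0, a, b, delta=0.1, rng=rng)

theta = np.linspace(0.0, 2.0 * np.pi, 400)
V = partial_sum(a0, a, b, theta)              # truncated "exact" data
V_delta = partial_sum(a0_d, a_d, b_d, theta)  # truncated data with error
```

Because the stand-in function has a kink, its coefficients decay only algebraically, which is one reason a larger truncation index may be needed for non-smooth data than for smooth data.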

Table 15 shows the numerical results for data without error, applying TRM to solve the IP of the classical Cauchy problem (2), where , 25, and 30. In this table, we can observe similar results to the previous examples where the regularized solutions are better.

Table 15.

Numerical results applying TRM to solve the IP related to the classical Cauchy problem (2), for , and different values of and N.

Table 16 shows the numerical results for data without error, applying TRM to solve the IP of the fractional Cauchy problem (12) for different values of , m, and N.

Table 16.

Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , .

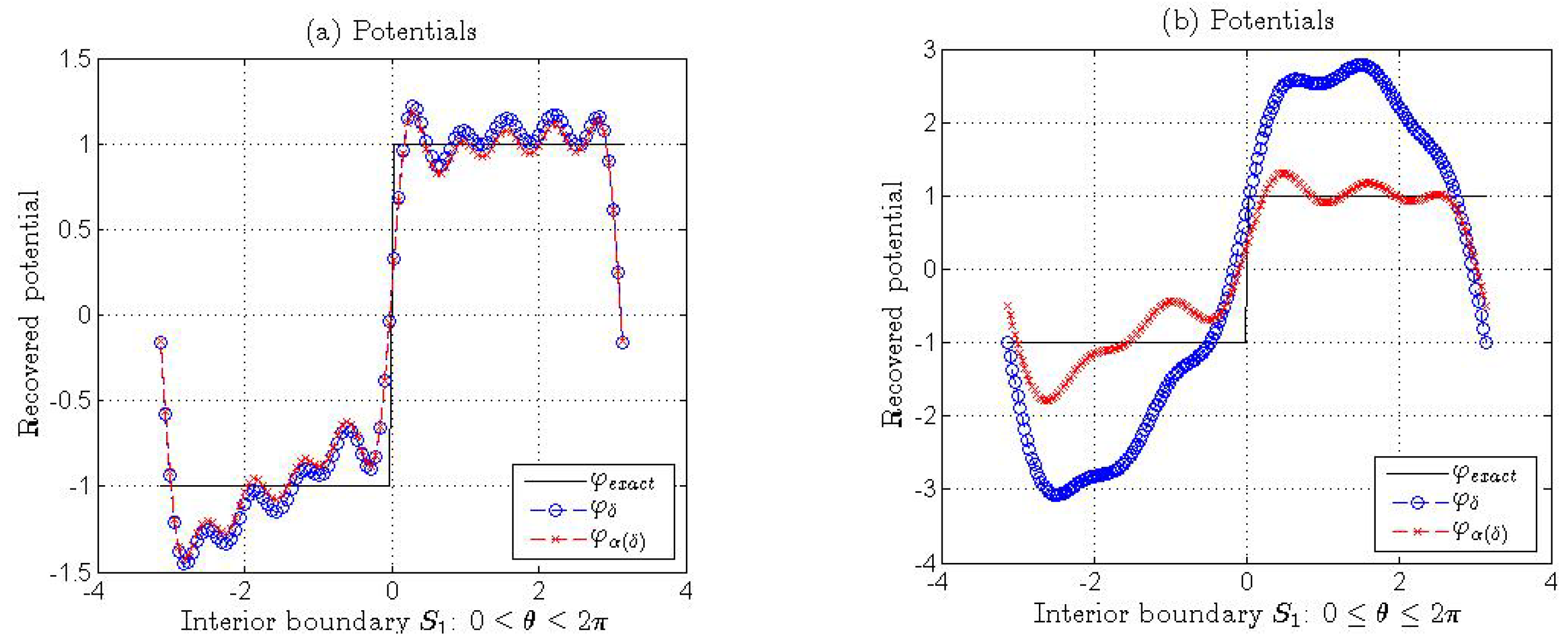

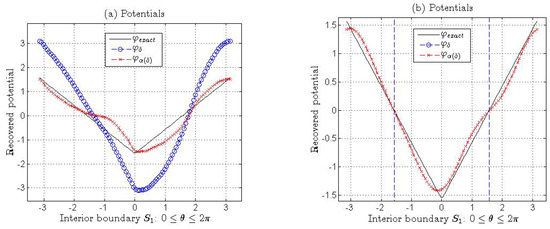

Figure 17a,b show the graphs of the exact potential and its approximations (with regularization) and (without regularization), corresponding to the function , for , for different values of , m, and N, respectively.

Figure 17.

(a) Exact potential and its approximations and , corresponding to Example 3 for , , , and . (b) Exact potential and its approximations and , corresponding to Example 3 for , , , and (see Table 16).

Example 4.

We consider the ‘exact potential’ in polar coordinates given by if and 1 if . Similar to the first example, the ‘exact measurement’ V and the ‘measurement with error’ are generated with the first N terms of the Fourier series (22) and (23), respectively, such that , with . For , . In this case, , and the Fourier coefficients , are obtained numerically using the intrinsic function of .

Table 17 shows the numerical results for data without error, applying TRM to solve the IP of the classical Cauchy problem (2), where , 25, and 30. In this table, we can observe similar results to the previous examples where the regularized solutions are better.

Table 17.

Numerical results applying TRM to solve the IP related to the classical Cauchy problem (2), for if and 1 if , and different values of and N.

Table 18 shows the numerical results for data without error, applying TRM to solve the IP of the fractional Cauchy problem (12) for different values of , m, and N.

Table 18.

Numerical results applying TRM to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where if and 1 if .

Figure 18a,b show the graphs of the exact potential and its approximations (with regularization) and (without regularization), corresponding to the function if and 1 if , for , for different values of , m, and N, respectively.

Figure 18.

(a) Exact potential and its approximations and , corresponding to Example 4 for , , , and . (b) Exact potential and its approximations and , corresponding to Example 4 for , , , and (see Table 18).

Examples 3 and 4 show numerical results analogous to the previous examples for both the classical and fractional cases. However, when we have piecewise constant functions, we truncate the series of approximate solutions to find a better approximation of the solution to the fractional Cauchy problem.

4.3. Solution to the IP Related to the Fractional Cauchy Problem Using the Morozov Discrepancy Method and the Tikhonov Criterion

In this subsection, the approximate solutions of the four examples presented above are computed with the proposed method, choosing the regularization parameter by Morozov’s discrepancy method and by the Tikhonov criterion. The relative errors of these approximations are given in Tables 19–26, which use the same values of N, , , and m as Table 13, Table 14, Table 16, and Table 18 for the corresponding examples. Comparing these errors with those in Table 13, Table 14, Table 16, and Table 18 shows that, in almost all cases, the best approximate solutions to the inverse problem are obtained by the L-curve criterion.
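To make the discrepancy-based choice concrete, the following Python sketch applies Morozov's principle to a diagonal (mode-by-mode) Tikhonov problem. It is only an illustration under assumptions: the decay `sigma`, the coefficients `f_true`, the deterministic alternating-sign noise, and the bisection bounds are hypothetical and do not reproduce the operator or data of the examples. The function returns `None` when no parameter in the search interval matches the noise level, which is one simple way such a rule can fail.

```python
import numpy as np

def tikhonov_diag(sigma, v, alpha):
    """Tikhonov solution for a diagonal operator: f_k = sigma_k v_k / (sigma_k^2 + alpha)."""
    return sigma * v / (sigma**2 + alpha)

def residual_norm(sigma, v, alpha):
    """Norm of sigma * f_alpha - v, which equals the norm of alpha * v / (sigma^2 + alpha)."""
    return np.linalg.norm(alpha * v / (sigma**2 + alpha))

def morozov_alpha(sigma, v, delta, lo=1e-16, hi=1e8, iters=200):
    """Find alpha with residual_norm(alpha) = delta by bisection in log(alpha).
    The residual is increasing in alpha; return None if delta is not bracketed."""
    if residual_norm(sigma, v, lo) > delta or residual_norm(sigma, v, hi) < delta:
        return None
    for _ in range(iters):
        mid = np.sqrt(lo * hi)          # geometric midpoint
        if residual_norm(sigma, v, mid) < delta:
            lo = mid
        else:
            hi = mid
    return np.sqrt(lo * hi)

# Hypothetical test problem (assumed values, for illustration only).
n = 12
k = np.arange(1, n + 1)
sigma = 0.5**k                                # rapidly decaying factors
f_true = 1.0 / k**2                           # coefficients to recover
delta = 1e-3
noise = delta / np.sqrt(n) * (-1.0)**k        # deterministic noise of norm delta
v_delta = sigma * f_true + noise

alpha_m = morozov_alpha(sigma, v_delta, delta)
f_reg = tikhonov_diag(sigma, v_delta, alpha_m)
f_raw = v_delta / sigma                       # solution without regularization
err_reg = np.linalg.norm(f_reg - f_true) / np.linalg.norm(f_true)
err_raw = np.linalg.norm(f_raw - f_true) / np.linalg.norm(f_true)
```

In this toy setting the division by small `sigma` values amplifies the noise in `f_raw`, while the regularized coefficients stay bounded.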

Table 19.

Numerical results choosing the regularization parameter with the discrepancy principle of Morozov to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . According to the results of the table and additional experiments, the discrepancy method fails when and , as well as for some values of .

Table 20.

Numerical results choosing the regularization parameter with the Tikhonov criterion to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . Similar results have been obtained for other values of using this criterion.

Table 21.

Numerical results applying Morozov discrepancy principle to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . According to the results, the discrepancy principle has problems when and , and for and .

Table 22.

Numerical results choosing the regularization parameter with the Tikhonov criterion to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . In almost all cases, the L-curve method gives better results.

Table 23.

Numerical results choosing the regularization parameter with the discrepancy principle of Morozov to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . In almost all cases, the L-curve method gives better results.

Table 24.

Numerical results choosing the regularization parameter with the Tikhonov criterion to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where , . In almost all cases, the L-curve method gives better results.

Table 25.

Numerical results choosing the regularization parameter with the discrepancy principle of Morozov to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where if and 1 if . In almost all cases, the L-curve method gives better results. In this Table, .

Table 26.

Numerical results choosing the regularization parameter with the Tikhonov criterion to solve IP related to the fractional Cauchy problem (12) for and different values of and m, where if and 1 if . In almost all cases, the L-curve method gives better results.

5. Discussion

The numerical tests show that the proposed algorithm usually gives good results. Even when the numerical results are unsatisfactory, they are good enough to serve as the starting point of an iterative method. In all cases, the regularized method performs better than the method without regularization. After some numerical tests, we found that the series expansion of the solution to the fractional Cauchy problem can be truncated at , , or .

When for , the results obtained with and without regularization almost coincide. One possible explanation for this similarity when , for , is the smoothing property of the integral operator. In the other cases, the regularized solution is better.

When , the regularized method loses precision. However, the approximate solution obtained can be used as an initial point of a stable iterative method. From the numerical results, we want to emphasize that the solution by the Tikhonov regularization method of the classical Cauchy problem works adequately in all cases.

The Tikhonov regularization parameter was very large in some cases. We do not have an explanation for this behavior, but we consider it an interesting topic to be studied in future work. According to the numerical results, in almost all cases, the best approximate solutions to the inverse problem are obtained by the L-curve criterion. According to the results, the discrepancy principle has problems when and .
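For readers unfamiliar with the L-curve criterion, the sketch below locates the corner as the point of maximum curvature of the curve (log residual norm, log solution norm), parametrized by log(alpha) and differentiated by finite differences. It is a generic illustration for a diagonal Tikhonov problem, not the implementation used for the tables; the decay `sigma`, the data `v_delta`, and the grid `alphas` are assumed values.

```python
import numpy as np

def l_curve_alpha(sigma, v, alphas):
    """Return the alpha of maximum L-curve curvature on the grid `alphas`,
    together with the residual and solution norms along the grid."""
    res = np.array([np.linalg.norm(a * v / (sigma**2 + a)) for a in alphas])
    sol = np.array([np.linalg.norm(sigma * v / (sigma**2 + a)) for a in alphas])
    t = np.log(alphas)                      # curve parameter
    rho, eta = np.log(res), np.log(sol)     # log-log coordinates of the L-curve
    d_rho, d_eta = np.gradient(rho, t), np.gradient(eta, t)
    dd_rho, dd_eta = np.gradient(d_rho, t), np.gradient(d_eta, t)
    # Signed curvature of a parametric plane curve; the corner is its maximum.
    kappa = (d_rho * dd_eta - dd_rho * d_eta) / (d_rho**2 + d_eta**2) ** 1.5
    best = 1 + np.argmax(kappa[1:-1])       # skip endpoints (one-sided differences)
    return alphas[best], res, sol

# Hypothetical test problem (assumed values, for illustration only).
n = 12
k = np.arange(1, n + 1)
sigma = 0.5**k
f_true = 1.0 / k**2
noise = 1e-3 / np.sqrt(n) * (-1.0)**k
v_delta = sigma * f_true + noise

alphas = np.logspace(-12, 2, 141)
alpha_lc, res, sol = l_curve_alpha(sigma, v_delta, alphas)
f_lc = sigma * v_delta / (sigma**2 + alpha_lc)   # regularized coefficients
```

The residual norm grows and the solution norm shrinks as alpha increases, which is what gives the curve its L shape; the finite-difference curvature is sensitive to the grid, so a finer or smoothed grid may be preferable in practice.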

In the classical Cauchy problem, the adjoint operator is associated with a boundary value problem called the adjoint problem. In the fractional Cauchy problem, we calculate the adjoint operator using its definition. One interesting question is whether a boundary value problem is associated with the adjoint operator. If the answer is positive, the following question arises: Can the adjoint operator be used in irregular regions? This is an interesting question whose answer can help us apply numerical methods to find the minimum of the functional since we have to solve boundary value problems. One of the most used methods to find such a minimum is the conjugate gradient method in combination with the finite element method.
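As a small illustration of the conjugate gradient approach mentioned above, the following Python sketch minimizes a discrete Tikhonov functional by solving its normal equations, using only products with the operator and its adjoint. It assumes a generic matrix `K` as a stand-in discretization and the functional 0.5*||K f - v||^2 + 0.5*alpha*||f||^2; in a finite element setting each product would instead require the solution of a boundary value problem. The names and values are hypothetical.

```python
import numpy as np

def cg_tikhonov(K, v, alpha, tol=1e-12, max_iter=500):
    """Conjugate gradient for (K^T K + alpha I) f = K^T v, the normal equations
    of the assumed functional 0.5*||K f - v||^2 + 0.5*alpha*||f||^2."""
    apply_A = lambda x: K.T @ (K @ x) + alpha * x   # adjoint applied after operator
    b = K.T @ v
    f = np.zeros(K.shape[1])
    r = b.copy()                # residual b - A f for the zero initial guess
    p = r.copy()                # first search direction
    rs = r @ r
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * b_norm:
            break
        Ap = apply_A(p)
        step = rs / (p @ Ap)
        f += step * p
        r -= step * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return f

# Hypothetical small problem (assumed values, for illustration only).
rng = np.random.default_rng(1)
K = rng.standard_normal((20, 8))
v = K @ np.ones(8)
alpha = 1e-2
f_cg = cg_tikhonov(K, v, alpha)
```

A regularized solution obtained by another method can be passed in as the initial guess with a minor change to the routine, in the spirit of using it as the initial point of an iterative method.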

6. Conclusions

This work proposes an algorithm to solve the fractional Cauchy problem based on the Tikhonov regularization and circular harmonics. The regularization parameter was chosen using the L-curve method, Morozov’s discrepancy principle, and the Tikhonov criterion. The numerical results show that the algorithm is feasible for various parameters. The discrepancy principle presents some problems in finding the regularization parameter for some values of the parameters appearing in the fractional Cauchy problem.

In almost all cases, the L-curve method gives better results than the Tikhonov criterion and Morozov’s discrepancy principle. In all cases, the regularization using the L-curve method gives better results than without regularization. In some cases, despite not being a good approximation, the regularized solution is much better than the solution without regularization. Since the algorithm does not give good results in some cases, it must be improved using an iterative method, which takes the regularized solution as an initial point. This is left for future work.

Author Contributions

Conceptualization, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., and J.J.O.O.; methodology, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., and J.J.O.O.; software, J.J.C.M., J.A.A.V. and E.H.M.; validation, J.J.C.M., J.A.A.V., and J.J.O.O.; formal analysis, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., C.A.H.G., and J.J.O.O.; investigation, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., C.A.H.G., and J.J.O.O.; resources, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., C.A.H.G., and J.J.O.O.; data curation, J.J.C.M., J.A.A.V., and J.J.O.O.; writing—original draft preparation, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., C.A.H.G., and J.J.O.O.; writing—review and editing, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., and J.J.O.O.; visualization, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., C.A.H.G., and J.J.O.O.; supervision, J.J.C.M. and J.J.O.O.; project administration, J.J.C.M. and J.J.O.O.; funding acquisition, J.J.C.M., J.A.A.V., E.H.M., M.M.M.C., and J.J.O.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by VIEP-BUAP with the support provided to research project number 151. The National Council for Humanities, Sciences, and Technologies in Mexico (CONAHCYT) also provided partial funding through a PhD scholarship for the second author, with CVU number 1030555.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare that they have no competing interests.

Appendix A. Computation of the Derivatives of Jα

If , then

Thus, . For the second derivative, we have

Hence, .
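As a hedged worked version of this kind of computation, assume the functional has the standard Tikhonov form $J_\alpha(f)=\tfrac12\|Kf-V_\delta\|^2+\tfrac{\alpha}{2}\|f\|^2$, with $K$ a bounded linear operator between real Hilbert spaces. The symbols $K$, $V_\delta$, $h$, and the factor $\tfrac12$ are assumptions made for illustration and may differ from the normalization used in this work. The derivatives along a direction $h$ are then:

```latex
\begin{aligned}
\varphi(t) &:= J_\alpha(f+th)
  = \tfrac12\|Kf-V_\delta\|^2 + t\,\langle Kf-V_\delta,\,Kh\rangle + \tfrac{t^2}{2}\|Kh\|^2
    + \tfrac{\alpha}{2}\|f\|^2 + \alpha t\,\langle f,h\rangle + \tfrac{\alpha t^2}{2}\|h\|^2,\\
\varphi'(0) &= \langle Kf-V_\delta,\,Kh\rangle + \alpha\langle f,h\rangle
  = \langle K^{*}(Kf-V_\delta)+\alpha f,\;h\rangle,\\
\varphi''(0) &= \|Kh\|^2+\alpha\|h\|^2 \;\ge\; 0 .
\end{aligned}
```

Under these assumptions, the gradient is $K^{*}(Kf-V_\delta)+\alpha f$, and the nonnegative second derivative is consistent with the convexity of the functional.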

Appendix B. Proof That the Functional Jα (f) = Is Convex

Theorem A1.

The functional

is convex.

Proof.

We want to show that

for all , with , and for all .

Starting with the left-hand side,

By the Cauchy–Schwarz inequality:

we obtain

Applying the inequality: , if . Considering that and , then,

Then

Therefore, is convex. □

Theorem A2.

The functional

is convex.

Proof.

Assuming the operator is linear, we have

where .

By the Cauchy–Schwarz inequality:

and using the inequality if . Considering that and , we obtain

Combining these results,

Therefore, is convex. □

Thus, from the last two theorems, we have that the functional is convex.
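The two estimates used in the proofs above can be written out explicitly for the squared norm in a real Hilbert space. This is a sketch in assumed notation, with $\lambda\in[0,1]$ and arbitrary elements $f$, $g$; the second functional is assumed to have the form $\|Kf-V\|^2$ with $K$ linear.

```latex
\begin{aligned}
\|\lambda f+(1-\lambda)g\|^2
 &= \lambda^2\|f\|^2 + 2\lambda(1-\lambda)\langle f,g\rangle + (1-\lambda)^2\|g\|^2\\
 &\le \lambda^2\|f\|^2 + 2\lambda(1-\lambda)\|f\|\,\|g\| + (1-\lambda)^2\|g\|^2
   && \text{(Cauchy--Schwarz)}\\
 &\le \lambda^2\|f\|^2 + \lambda(1-\lambda)\bigl(\|f\|^2+\|g\|^2\bigr) + (1-\lambda)^2\|g\|^2
   && (2ab\le a^2+b^2)\\
 &= \lambda\|f\|^2 + (1-\lambda)\|g\|^2 .
\end{aligned}
```

For linear $K$, $K(\lambda f+(1-\lambda)g)-V=\lambda(Kf-V)+(1-\lambda)(Kg-V)$, so the same chain applied to $u=Kf-V$ and $w=Kg-V$ gives the convexity of $\|Kf-V\|^2$. A sum of convex functionals with nonnegative weights is again convex.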

References

- Conde Mones, J.J.; Juárez Valencia, L.H.; Oliveros Oliveros, J.J.; León Velasco, D.A. Stable numerical solution of the Cauchy problem for the Laplace equation in irregular annular regions. Numer. Methods Partial Differ. Equ. 2017, 33, 1799–1822.

- Oliveros, J.; Morín, M.; Conde, J.; Fraguela, A. A regularization strategy for the inverse problem of identification of bioelectrical sources for the case of concentric spheres. Far East J. Appl. Math. 2013, 77, 1–20.

- Lee, J.Y.; Yoon, J.R. A numerical method for Cauchy problem using singular value decomposition. Commun. Korean Math. Soc. 2001, 16, 487–508.

- Wei, T.; Chen, Y.G. A regularization method for a Cauchy problem of Laplace’s equation in an annular domain. Math. Comput. Simul. 2012, 82, 2129–2144.

- Zhou, D.; Wei, T. The method of fundamental solutions for solving a Cauchy problem of Laplace’s equation in a multi-connected domain. Inverse Probl. Sci. Eng. 2008, 16, 389–411.

- Chang, J.R.; Yeih, W.; Shieh, M.H. On the modified Tikhonov’s regularization method for the Cauchy problem of the Laplace equation. J. Mar. Sci. Technol. 2001, 9, 113–121.

- Gong, X.; Yang, S. A local regularization scheme of Cauchy problem for the Laplace equation on a doubly connected domain. Bound. Value Probl. 2023, 2023, 30.

- Cheng, J.; Hon, Y.C.; Wei, T.; Yamamoto, M. Numerical computation of a Cauchy problem for Laplace’s equation. ZAMM-J. Appl. Math. Mech. 2001, 81, 665–674.

- Borachok, I.; Chapko, R.; Tomas Johansson, B. Numerical solution of a Cauchy problem for Laplace equation in 3-dimensional domains by integral equations. Inverse Probl. Sci. Eng. 2016, 24, 1550–1568.

- Hào, D.N.; Lesnic, D. The Cauchy problem for Laplace’s equation via the conjugate gradient method. IMA J. Appl. Math. 2000, 65, 199–217.

- Caubet, F.; Dardé, J.; Godoy, M. On the data completion problem and the inverse obstacle problem with partial Cauchy data for Laplace’s equation. ESAIM Control Optim. Calc. Var. 2019, 25, 30.

- Amdouni, S.; Ben Abda, A. The Cauchy problem for Laplace’s equation via a modified conjugate gradient method and energy space approaches. Math. Methods Appl. Sci. 2023, 46, 3560–3582.

- León-Velasco, A.; Glowinski, R.; Juárez-Valencia, L.H. On the controllability of diffusion processes on the surface of a torus: A computational approach. Pac. J. Optim. 2015, 11, 763–790.

- Conde Mones, J.J.; Estrada Aguayo, E.R.; Oliveros Oliveros, J.J.; Hernández Gracidas, C.A.; Morín Castillo, M.M. Stable identification of sources located on interface of nonhomogeneous media. Mathematics 2021, 9, 1932.

- Berntsson, F.; Eldén, L. Numerical solution of a Cauchy problem for the Laplace equation. Inverse Probl. 2001, 17, 839–853.

- Kress, R. Inverse Dirichlet problem and conformal mapping. Math. Comput. Simul. 2004, 66, 255–265.

- Clerc, M.; Kybic, J. Cortical mapping by Laplace-Cauchy transmission using a boundary element method. Inverse Probl. 2007, 23, 2589–2601.

- Denisov, A.M.; Zakharov, E.V.; Kalinin, A.V.; Kalinin, V.V. Numerical solution of an inverse electrocardiography problem for a medium with piecewise constant electrical conductivity. Comput. Math. Math. Phys. 2010, 50, 1172–1177.

- Kalinin, A.; Potyagaylo, D.; Kalinin, V. Solving the inverse problem of electrocardiography on the endocardium using a single layer source. Front. Physiol. 2019, 10, 58.

- Ruiz de Miras, J.; Derchi, C.-C.; Atzori, T.; Mazza, A.; Arcuri, P.; Salvatore, A.; Navarro, J.; Saibene, F.L.; Meloni, M.; Comanducci, A. Spatio-Temporal Fractal Dimension Analysis from Resting State EEG Signals in Parkinson’s Disease. Entropy 2023, 25, 1017.

- Sirpal, P.; Sikora, W.A.; Refai, H.H.; Yang, Y. Association between Opioid Dependence and Scale Free Fractal Brain Activity: An EEG Study. Fractal Fract. 2023, 7, 659.

- Perez-Sanchez, A.V.; Valtierra-Rodriguez, M.; Perez-Ramirez, C.A.; De-Santiago-Perez, J.J.; Amezquita-Sanchez, J.P. Epileptic Seizure Prediction Using Wavelet Transform, Fractal Dimension, Support Vector Machine, and EEG Signals. Fractals 2022, 30, 2250154.

- Karaoulanis, D.; Lazopoulou, N.; Lazopoulos, K. On A-Fractional Derivative and Human Neural Network. Axioms 2023, 12, 136.

- Turmetov, B.K.; Nazarova, K.D. On a generalization of the Neumann problem for the Laplace equation. Math. Nachrichten 2019, 293, 169–177.

- Kadirkulov, B.J.; Kirane, M. On solvability of a boundary value problem for the Poisson equation with a nonlocal boundary operator. Acta Math. Sci. 2015, 35B, 970–980.

- Kirsch, A. An Introduction to the Mathematical Theory of Inverse Problems, 2nd ed.; Springer: New York, NY, USA, 2011; Volume 120, Applied Mathematical Sciences.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).