Real-Time Monitoring of Parameters and Diagnostics of the Technical Condition of Small Unmanned Aerial Vehicle’s (UAV) Units Based on Deep BiGRU-CNN Models

Abstract

1. Introduction

2. Description of the Proposed Diagnostic and Monitoring Technique

- The estimated deviation of a single parameter from its expected value, reported as a magnitude obtained by scaling the normalized parameter value back to its measurement range;

- The estimated deviation of a set of parameters, reported as a percentage of the normalized value of the expected set of parameters;

- The fault classifier output: one of the possible fault types, together with the estimated probability of that fault class.
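The three outputs listed above can be illustrated with a minimal sketch. The function names, the use of the Euclidean norm for the set deviation, and the dictionary of class probabilities are assumptions made for illustration; the text does not fix these details.

```python
import math


def denormalize_deviation(norm_deviation, range_min, range_max):
    """Scale a deviation given in normalized units back to physical units.

    Assumes the parameter was normalized by its measurement range
    [range_min, range_max].
    """
    return norm_deviation * (range_max - range_min)


def set_deviation_percent(actual, expected):
    """Deviation of a normalized parameter set, as a percentage.

    Assumption: the percentage is the Euclidean distance between the
    actual and expected vectors relative to the norm of the expected one.
    """
    if len(actual) != len(expected):
        raise ValueError("actual and expected must have the same length")
    distance = math.sqrt(sum((a - e) ** 2 for a, e in zip(actual, expected)))
    reference = math.sqrt(sum(e ** 2 for e in expected))
    if reference == 0:
        raise ValueError("expected vector has zero norm")
    return 100.0 * distance / reference


def top_fault(class_probabilities):
    """Return the most probable fault class and its estimated probability."""
    label = max(class_probabilities, key=class_probabilities.get)
    return label, class_probabilities[label]
```

For example, a normalized roll deviation of 0.1 with a hypothetical measurement range of -45 to 45 degrees corresponds to about 9 degrees.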

- Preparing the training data: archived in-flight data on the UAV unit parameters and faults are normalized and brought to the maximum processing frequency.

- Training a neural network with the proposed CompactNeuroUAV architecture to generate the required target value:

- Estimating the individual parameter deviation from the expected value (Algorithm 1 with );

- Estimating the deviation of the set of parameters (Algorithm 1 with );

- Estimating the availability and probability of a fault or pre-failure condition (Algorithm 2).

- Validating the developed models.

- Integrating the developed models into the UAV control system.

| Algorithm 1. Estimating the deviation of any UAV parameter or set of parameters from the expected reference with a neural network model with the CompactNeuroUAV architecture |

- At each time instant, a set of input values of normalized parameter-time matrices is generated, where the timestamp is within the range , …, ;

- This set of normalized parameters is processed by the CompactNeuroUAV neural network; as a result, an up-to-date compact aggregated representation of the parameter sets is generated in real time for each time instant;

- Deviations of the compact aggregated representation from the expected reference are estimated; the model used is denoted EstimateDeviationCompactNeuroUAV[i] in Algorithm 1;

- If a pre-trained classifier is available, the compact aggregated representation is used to estimate the presence of a fault of a given class or a pre-failure condition; this model is denoted ClassifierCompactNeuroUAV[i] in Algorithm 2.
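The per-instant processing described in the list above can be sketched as a sliding window feeding three callables. This is only an illustration of the data flow: `WindowBuffer`, `monitor_step`, and the `encode`, `estimate_deviation`, and `classify` arguments are hypothetical stand-ins, not the CompactNeuroUAV models themselves, and the window length is left as a free parameter because the text does not state it here.

```python
from collections import deque


class WindowBuffer:
    """Keeps the most recent `window_len` normalized parameter vectors."""

    def __init__(self, window_len):
        if window_len < 1:
            raise ValueError("window_len must be at least 1")
        self._rows = deque(maxlen=window_len)

    def push(self, sample):
        """Add one sample; return the parameter-time matrix once full."""
        self._rows.append(tuple(sample))
        if len(self._rows) < self._rows.maxlen:
            return None  # not enough history yet
        return [list(row) for row in self._rows]


def monitor_step(buffer, sample, encode, estimate_deviation, classify=None):
    """Run one monitoring step for a new normalized sample.

    encode: matrix -> compact aggregated representation (stand-in for the network)
    estimate_deviation: compact representation -> deviation estimate
    classify: optional, compact representation -> (fault class, probability)
    """
    window = buffer.push(sample)
    if window is None:
        return None
    compact = encode(window)
    result = {"deviation": estimate_deviation(compact)}
    if classify is not None:
        result["fault"] = classify(compact)
    return result
```

In a real system the three callables would wrap the trained models; here any function with the matching input and output shape can be plugged in for testing the surrounding logic.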

| Algorithm 2. Identifying UAV faults or pre-failure conditions with neural network models with the CompactNeuroUAV architecture |

3. An Example of Implementing the Technique for a Fixed-Wing Type UAV

- Two-meter wingspan;

- Front engine;

- Ailerons;

- Flaperons (flap ailerons);

- Elevator;

- Rudder.

- (i) Complete engine fault (shutdown);

- (ii) Elevator fault in a horizontal position;

- (iii) Rudder fault in the full right, full left, or middle position;

- (iv) Fault of the ailerons with the simulation of right, left, or both ailerons stuck in a horizontal position.

- i

- Labeling the experimental time series data:

- (a)

- Experimental flight data are divided into time intervals of 1 second; for signals with a sampling frequency less than the maximum 25 Hz, the dataset is completed by linear interpolation;

- (b)

- A label characterizing the presence of a fault is assigned to each interval from paragraph 1.a: flight without a fault, flight with a fault, transition interval for the fault manifestation. Transition intervals are chosen with the fault simulation start moments falling on different interval regions;

- (c)

- The resulting set of intervals is split into training (40%), cross-validation (10%), and test (50%) sets, considering the interval type (Table 1) and fault label (paragraph 1.b).

- ii

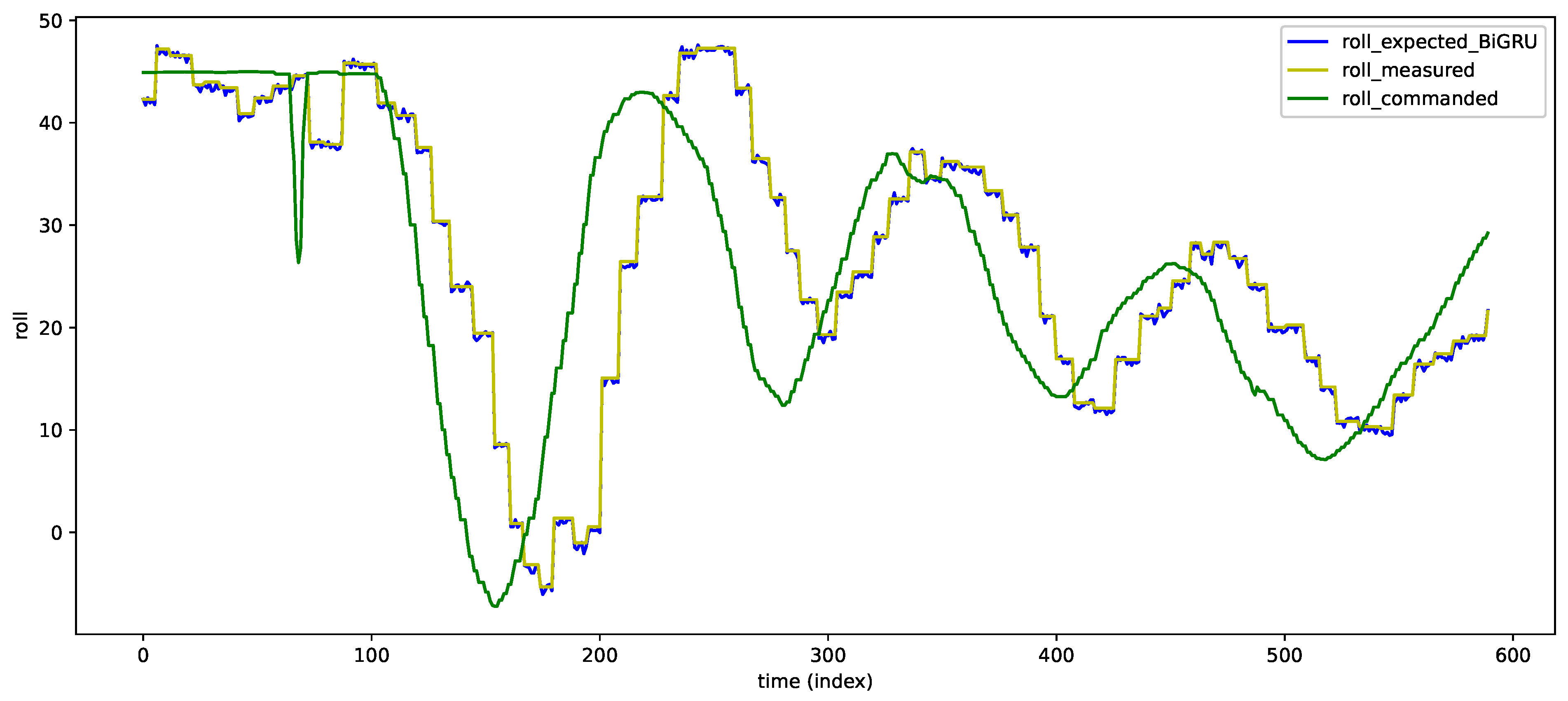

- The time series obtained at stage 1 is normalized by the measured parameter range for the UAV position GPS data and the actual and target roll, pitch, speed, and yaw values measured by the sensors and set by the autopilot.

- iii

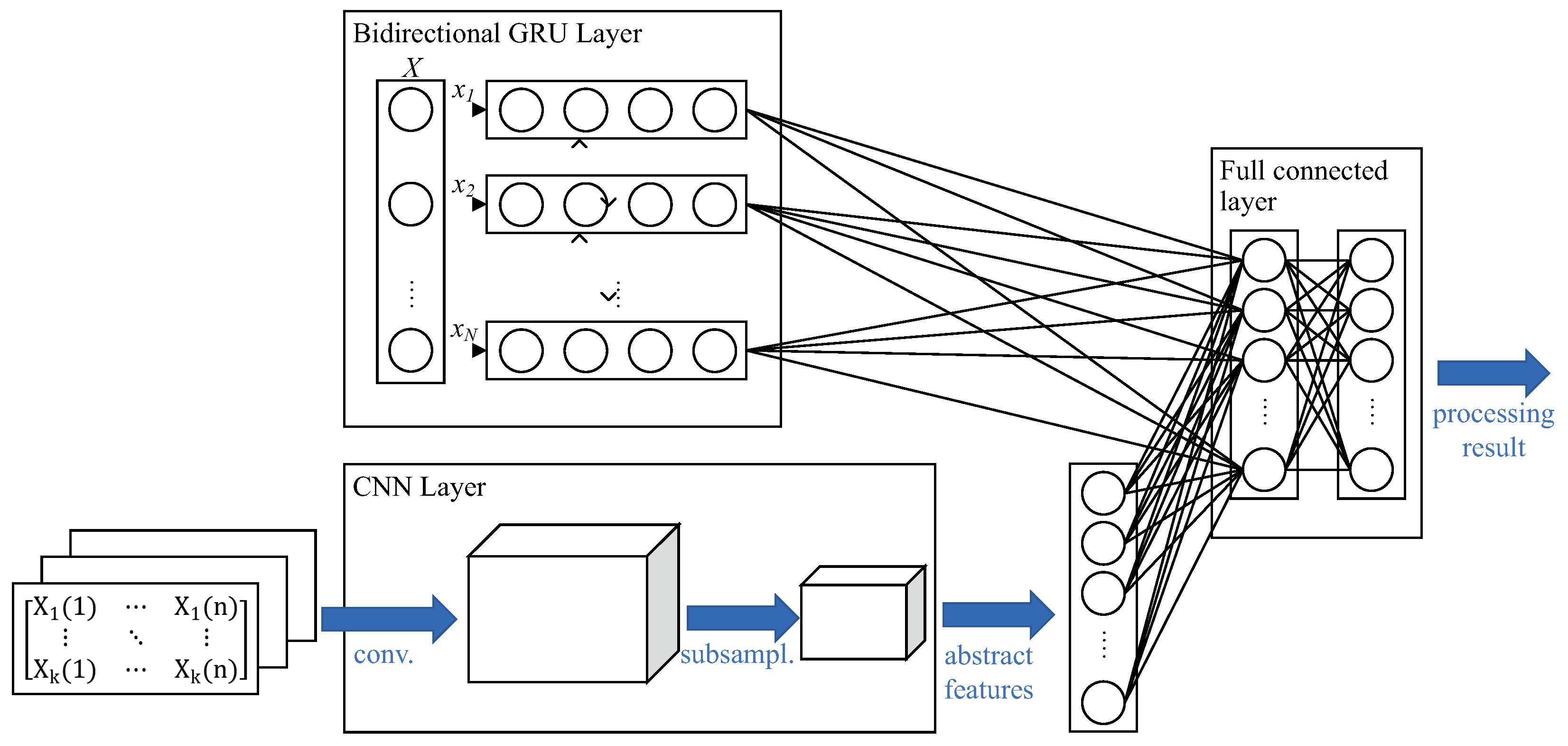

- Deep neural network models with the proposed CompactNeuroUAV architecture are trained using training and cross-validation sets:

- (a)

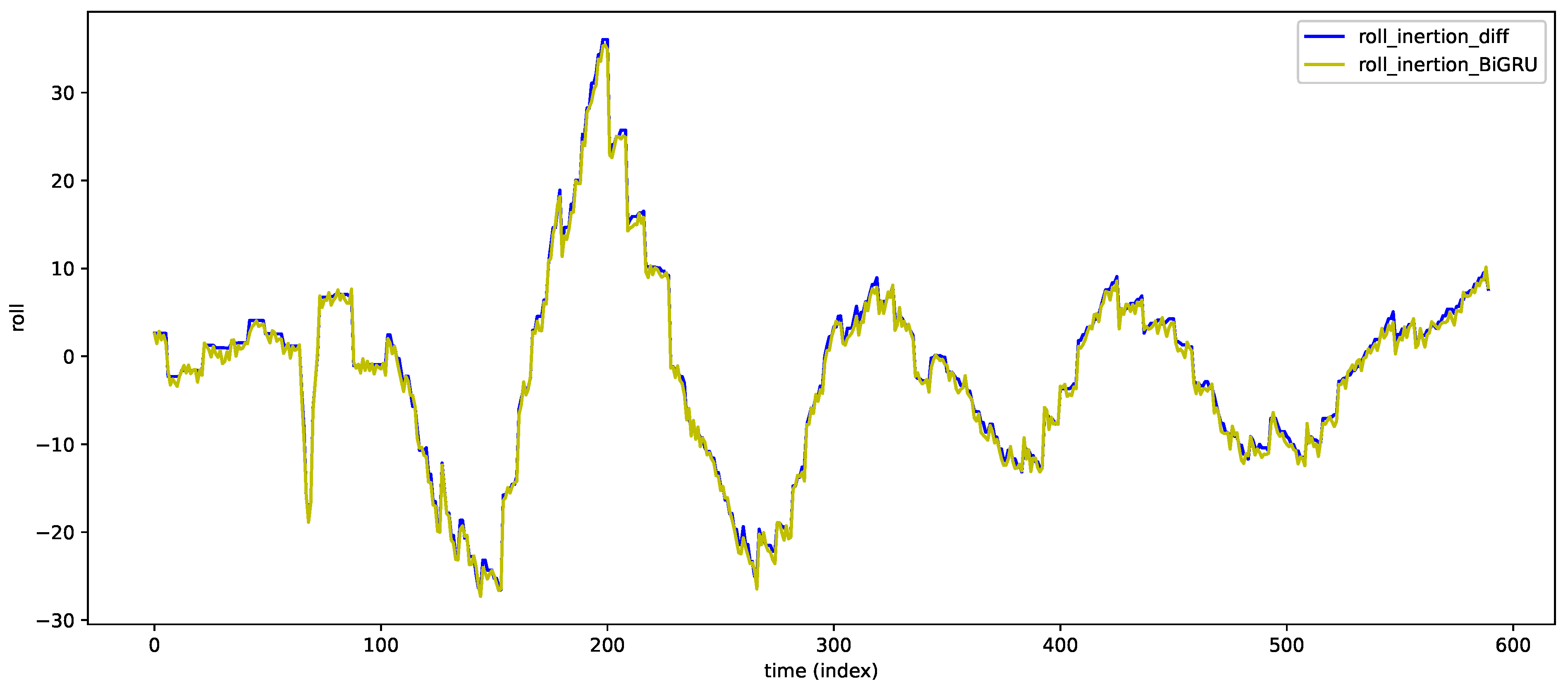

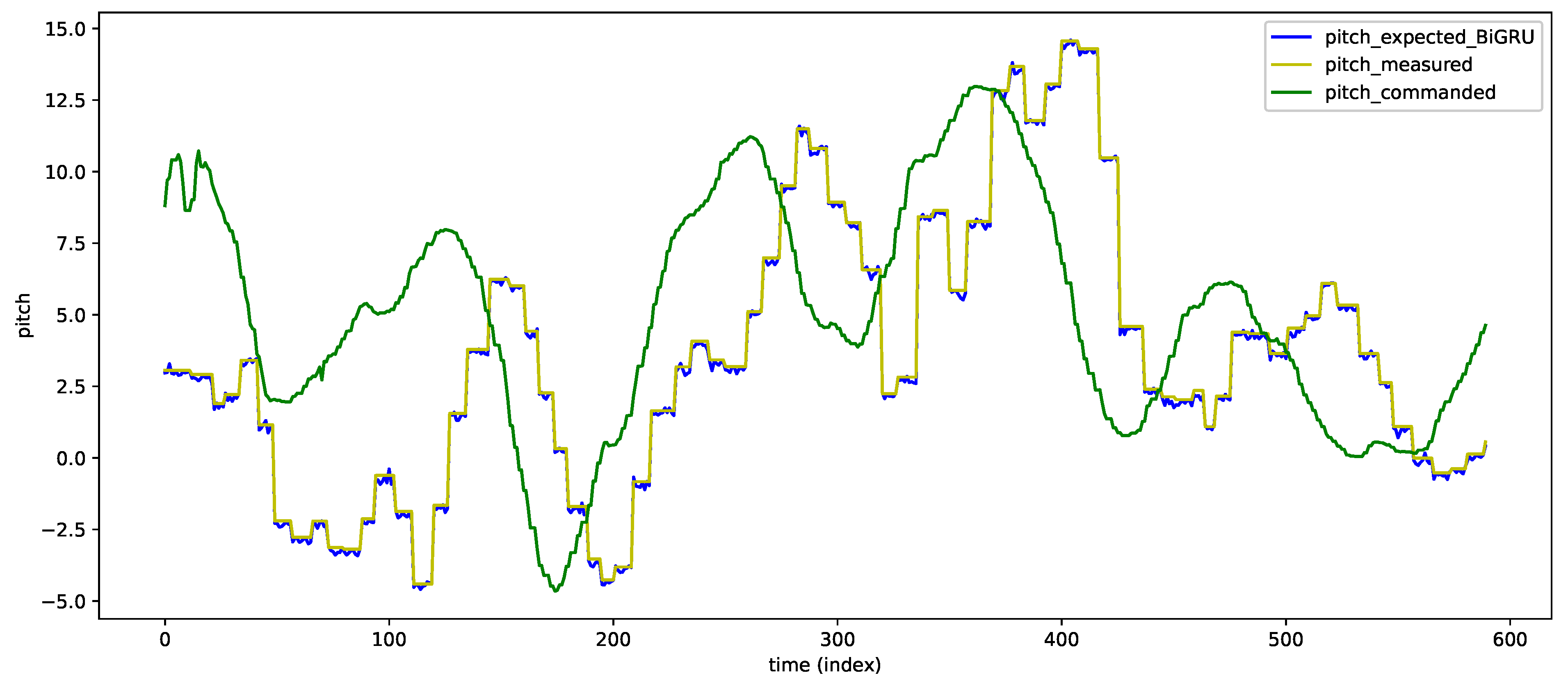

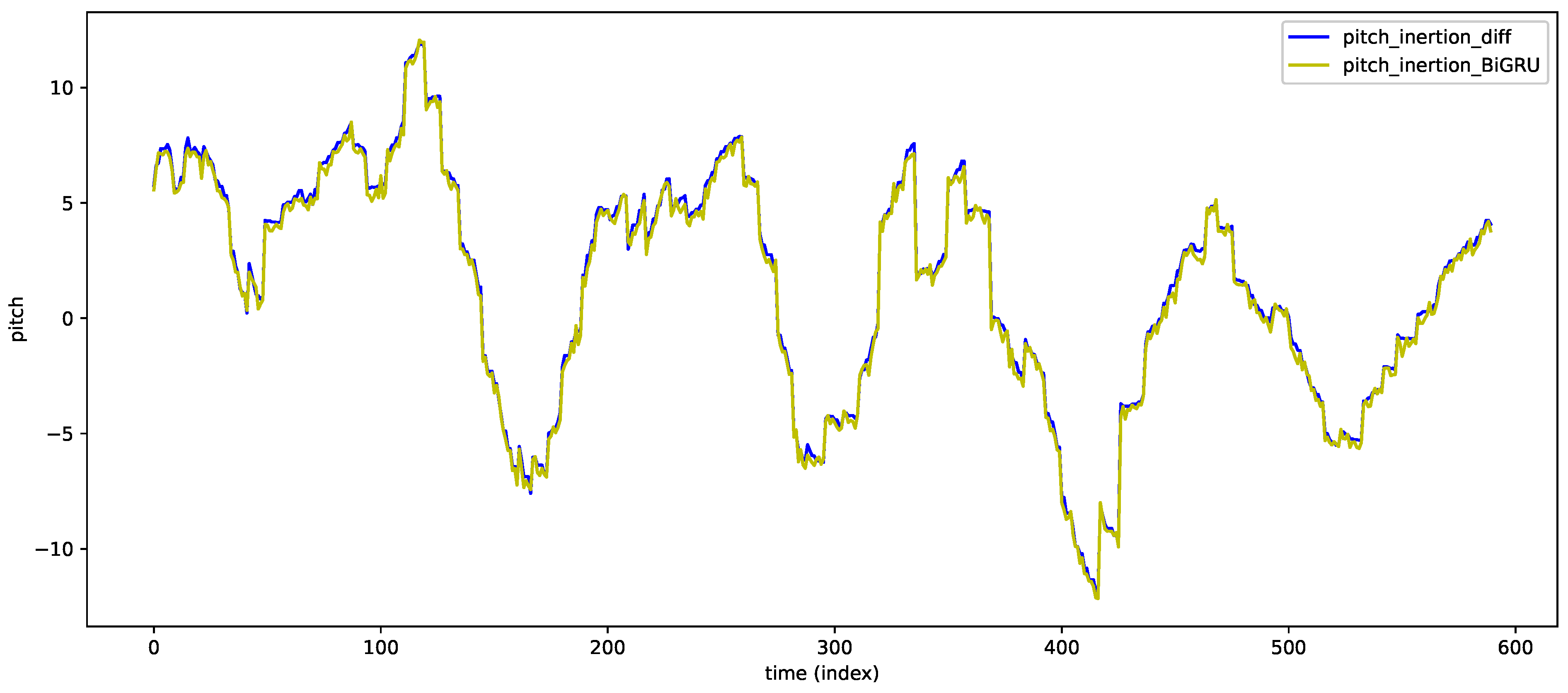

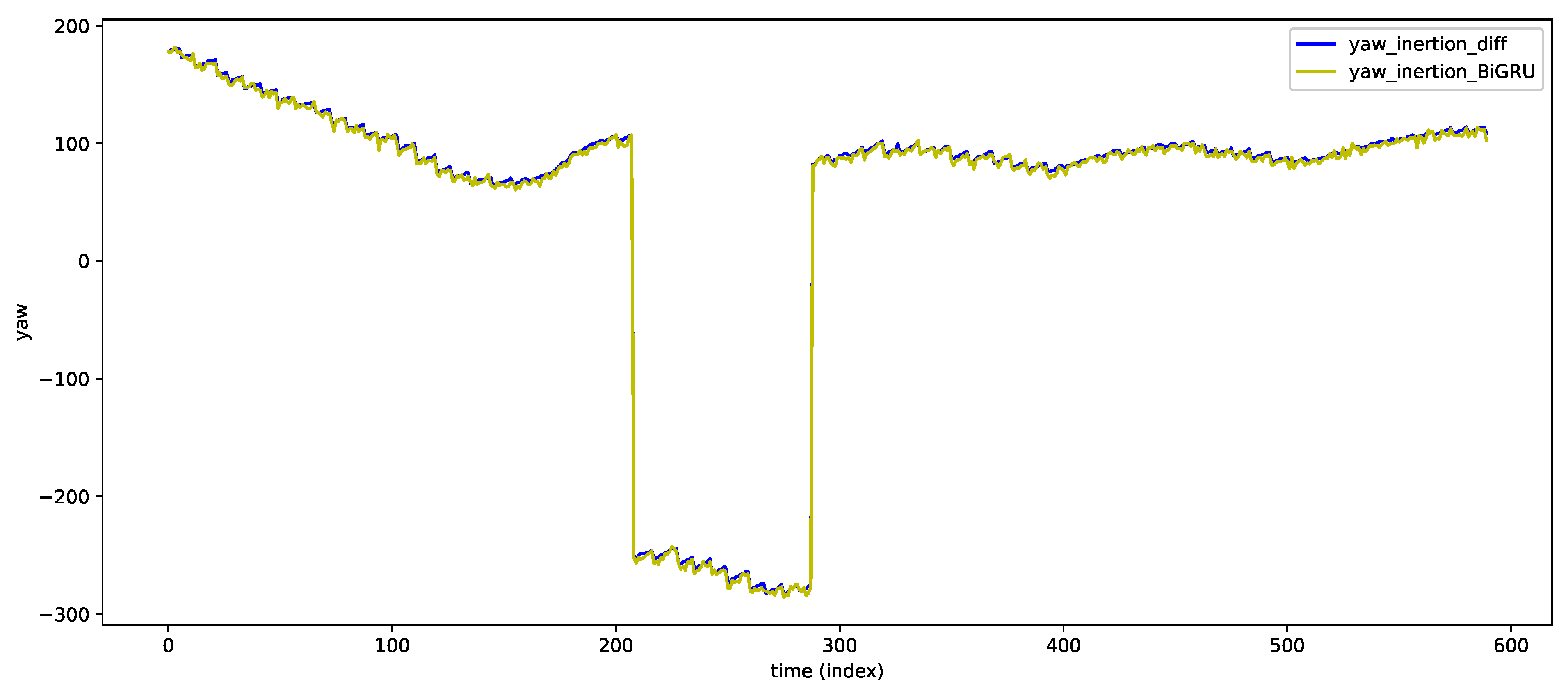

- To estimate the deviation of a single pitch, roll, or yaw parameter from the expected reference considering the actuator response inertia;

- (b)

- To estimate the deviation of the set of roll, pitch, speed, and yaw parameters from the expected reference;

- (c)

- Classifier model to estimate the presence of a fault or pre-failure condition, specifying the estimated probability of this fault class

- iv

- The trained models are tested on a test set.
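The data preparation steps above (linear interpolation to 25 Hz, division into 1-second intervals, interval labeling, and normalization by the measured range) can be sketched as follows. The function names, the label strings, and the choice to hold the nearest value outside the sampled span are assumptions for illustration; only the 25 Hz rate and the 1-second interval length come from the text.

```python
from bisect import bisect_right

TARGET_HZ = 25  # maximum sampling frequency given in the text


def resample_linear(times, values, start, stop, rate_hz=TARGET_HZ):
    """Interpolate a signal onto a uniform grid covering [start, stop).

    `times` must be strictly increasing. Outside the sampled span the
    nearest value is held (an assumption; the text does not specify this).
    """
    n = int(round((stop - start) * rate_hz))
    out = []
    for k in range(n):
        t = start + k / rate_hz
        j = bisect_right(times, t)
        if j == 0:
            out.append(values[0])
        elif j == len(times):
            out.append(values[-1])
        else:
            t0, t1 = times[j - 1], times[j]
            w = (t - t0) / (t1 - t0)
            out.append(values[j - 1] + w * (values[j] - values[j - 1]))
    return out


def split_into_intervals(samples, rate_hz=TARGET_HZ, seconds=1):
    """Cut a uniformly sampled signal into whole intervals; drop the remainder."""
    size = rate_hz * seconds
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]


def label_interval(t_start, t_end, fault_start):
    """Label an interval by where the fault onset falls relative to it."""
    if fault_start is None or t_end <= fault_start:
        return "no_fault"
    if t_start >= fault_start:
        return "fault"
    return "transition"  # the fault begins inside this interval


def normalize_by_range(samples, range_min, range_max):
    """Map samples to [0, 1] using the measured parameter range."""
    return [(s - range_min) / (range_max - range_min) for s in samples]
```

A signal logged at 1 Hz for two seconds, for instance, becomes 50 samples on the 25 Hz grid and therefore two 1-second intervals.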

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| UAV | Unmanned Aerial Vehicle |

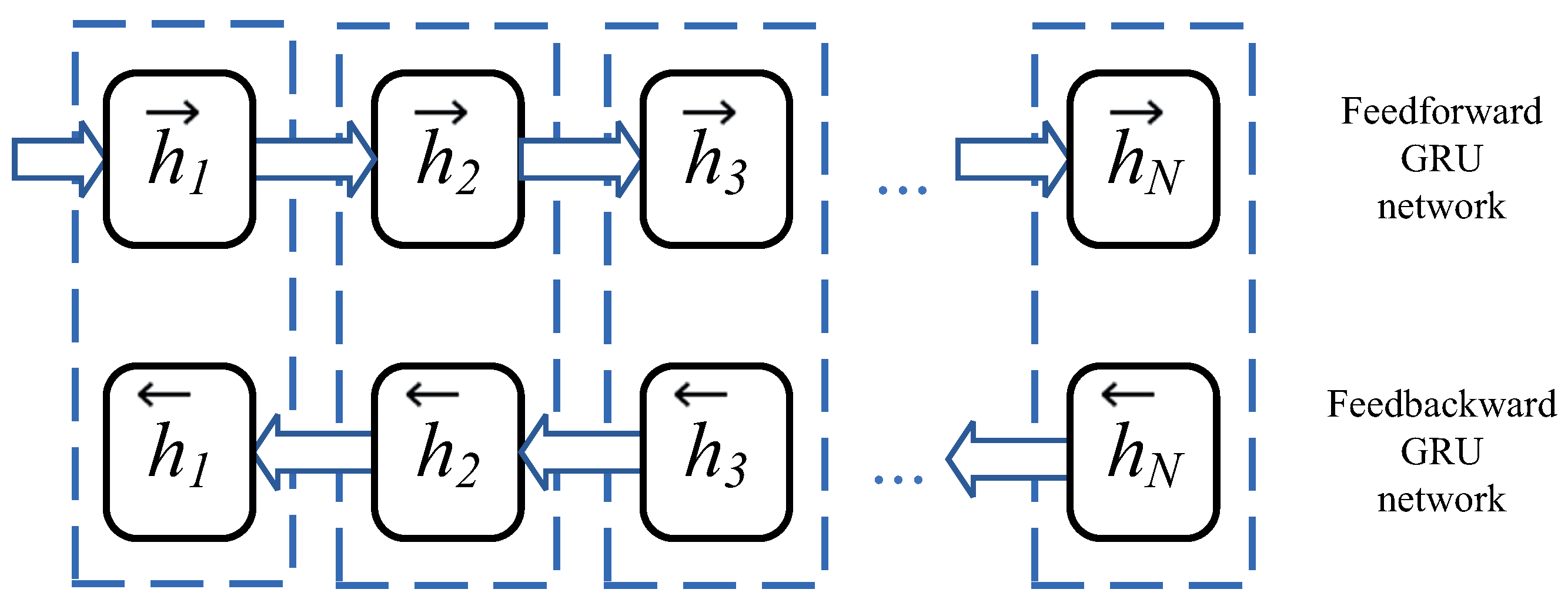

| GRU | Gated Recurrent Unit |

| RBF | Radial Basis Function |

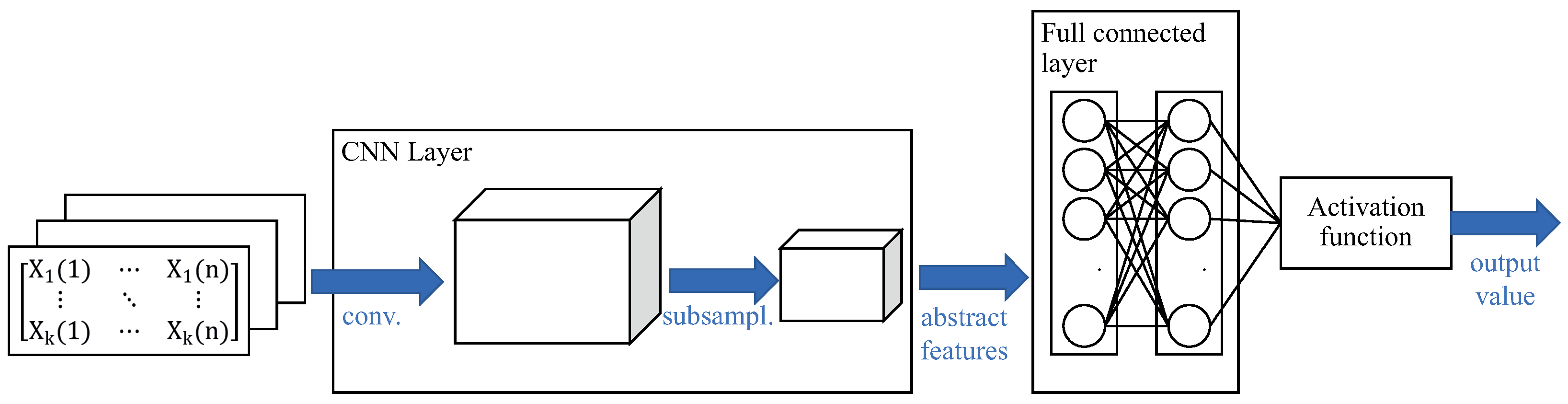

| CNN | Convolutional Neural Network |

| BiGRU | Bidirectional Gated Recurrent Unit Neural Network |

| NN | Neural Network |

| FP | False Positive (classification result) |

| FN | False Negative (classification result) |

| GPS | Global Positioning System |


| Fault Type | Number of Experimental Flights | Flight Duration Before Fault (s) | Flight Duration with Fault (s) |
|---|---|---|---|
| No fault | 10 | 558 | - |
| Engine thrust loss | 23 | 2282 | 362 |
| Full left rudder fault (rudder control loss) | 1 | 60 | 9 |
| Full right rudder fault (rudder control loss) | 2 | 107 | 32 |
| Elevator fault in a horizontal position (loss of elevator control) | 2 | 181 | 23 |
| Left aileron control loss in a horizontal position | 3 | 228 | 183 |
| Right aileron control loss in a horizontal position | 4 | 442 | 231 |
| Loss of control over both ailerons in a horizontal position | 1 | 66 | 36 |
| Loss of control over the rudder and ailerons stuck in a horizontal position | 1 | 116 | 27 |
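Because the fault types in the table above are strongly imbalanced, the 40%/10%/50% split into training, cross-validation, and test sets is naturally done per stratum. The sketch below shows one plausible way to do this with intervals grouped by fault type and label; the function name, the rounding rule, and the fixed seed are assumptions, not the authors' documented procedure.

```python
import random
from collections import defaultdict


def stratified_split(items, key, fractions=(0.4, 0.1, 0.5), seed=0):
    """Split items into train/validation/test sets within each stratum.

    key: function mapping an item to its stratum, e.g. (fault type, label).
    fractions: train and validation shares; the test set takes the rest.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    strata = defaultdict(list)
    for item in items:
        strata[key(item)].append(item)

    train, val, test = [], [], []
    for members in strata.values():
        rng.shuffle(members)
        n_train = round(fractions[0] * len(members))
        n_val = round(fractions[1] * len(members))
        train.extend(members[:n_train])
        val.extend(members[n_train:n_train + n_val])
        test.extend(members[n_train + n_val:])
    return train, val, test
```

With very small strata (a single flight for some fault types), rounding can leave the training or validation share empty, so such classes may need manual handling.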

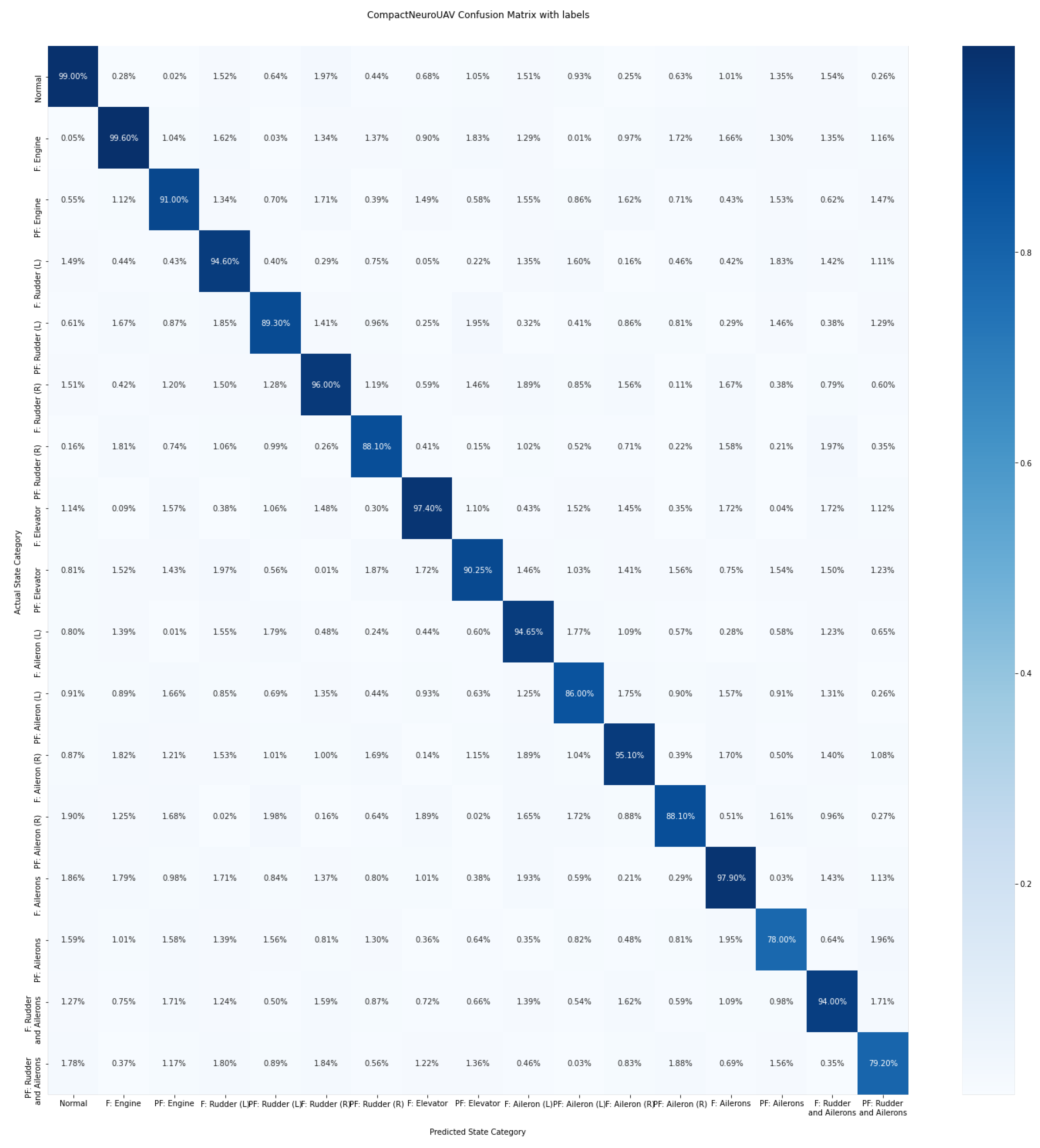

| Fault Type | Class Identification Accuracy | FP Proportion (of the Total Dataset) | FN Proportion |
|---|---|---|---|
| No fault | 99% | 0.1% | 0.25% |
| Engine thrust loss: fault | 99.6% | 0.5% | 0.05% |
| Engine thrust loss: pre-failure condition | 91% | 1.4% | 1.56% |
| Full left rudder fault (rudder control loss): fault | 94.6% | 0.14% | 0.03% |
| Full left rudder fault (rudder control loss): pre-failure condition | 89.3% | 5.1% | 3.2% |
| Full right rudder fault (rudder control loss): fault | 96% | 0.26% | 0.15% |
| Full right rudder fault (rudder control loss): pre-failure condition | 88.1% | 6.7% | 3.6% |
| Elevator fault in a horizontal position (loss of elevator control): fault | 97.4% | 0.12% | 0.83% |
| Elevator fault in a horizontal position (loss of elevator control): pre-failure condition | 90.25% | 4.85% | 4.19% |
| Left aileron control loss in a horizontal position: fault | 94.65% | 0.51% | 0.3% |
| Left aileron control loss in a horizontal position: pre-failure condition | 86% | 7% | 3.3% |
| Right aileron control loss in a horizontal position: fault | 95.1% | 0.63% | 0.66% |
| Right aileron control loss in a horizontal position: pre-failure condition | 88.1% | 6.09% | 2.91% |
| Loss of control over both ailerons in a horizontal position: fault | 97.9% | 0.08% | 0.03% |
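The table does not spell out how its three columns are computed. As a rough sketch only, a common one-vs-rest reading is shown below: accuracy as the share of a class's intervals that are identified correctly, and FP and FN as shares of the whole dataset. These definitions and the function name are assumptions and may differ from the ones behind the reported figures.

```python
def one_vs_rest_metrics(true_labels, predicted_labels, cls):
    """Per-class metrics under an assumed one-vs-rest interpretation."""
    if len(true_labels) != len(predicted_labels) or not true_labels:
        raise ValueError("label lists must be non-empty and of equal length")

    total = len(true_labels)
    tp = fp = fn = 0
    for truth, pred in zip(true_labels, predicted_labels):
        if truth == cls and pred == cls:
            tp += 1  # class present and detected
        elif truth != cls and pred == cls:
            fp += 1  # class reported but not present
        elif truth == cls and pred != cls:
            fn += 1  # class present but missed

    support = tp + fn  # number of intervals that truly belong to cls
    return {
        "accuracy": tp / support if support else float("nan"),
        "fp_proportion": fp / total,
        "fn_proportion": fn / total,
    }
```

Applied per row of a confusion matrix, this yields one triple of values per fault type and condition, in the same layout as the table.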

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Masalimov, K.; Muslimov, T.; Munasypov, R. Real-Time Monitoring of Parameters and Diagnostics of the Technical Condition of Small Unmanned Aerial Vehicle’s (UAV) Units Based on Deep BiGRU-CNN Models. Drones 2022, 6, 368. https://doi.org/10.3390/drones6110368
