Improved Image Synthesis with Attention Mechanism for Virtual Scenes via UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

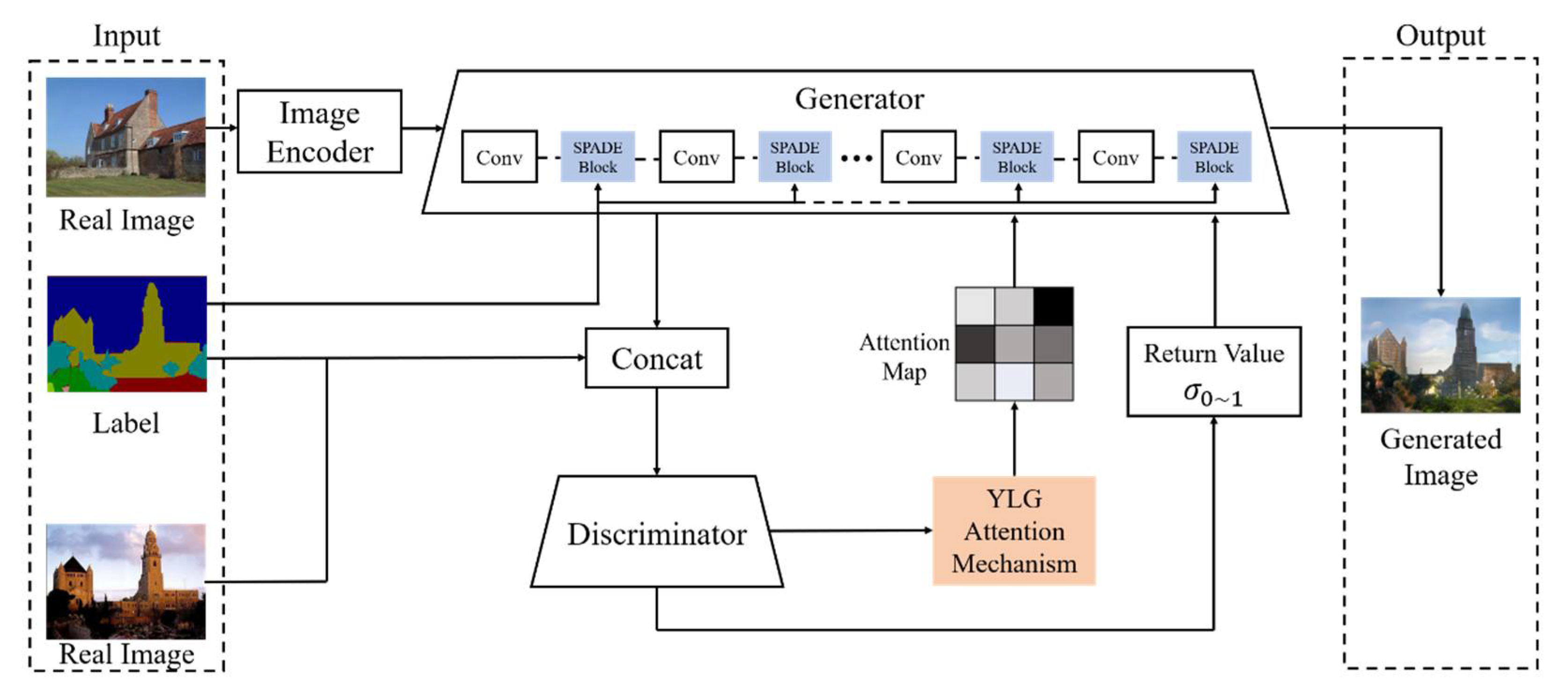

2.1. Main Idea

- (1) Adjusting GAN as the main framework;

- (2) Importing the spatially adaptive normalization module SPADE into the generator;

- (3) Adding the attention mechanism YLG.

2.2. SYGAN Model

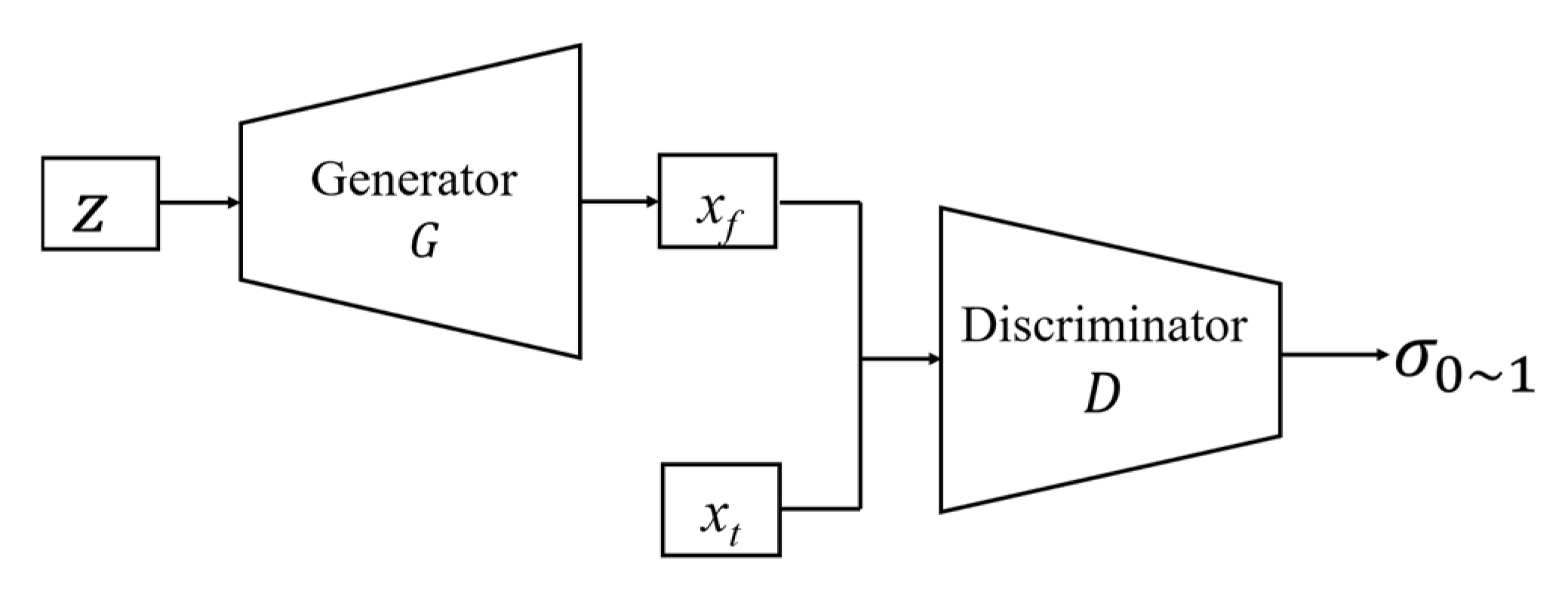

2.2.1. Adjusting GAN as Main Framework

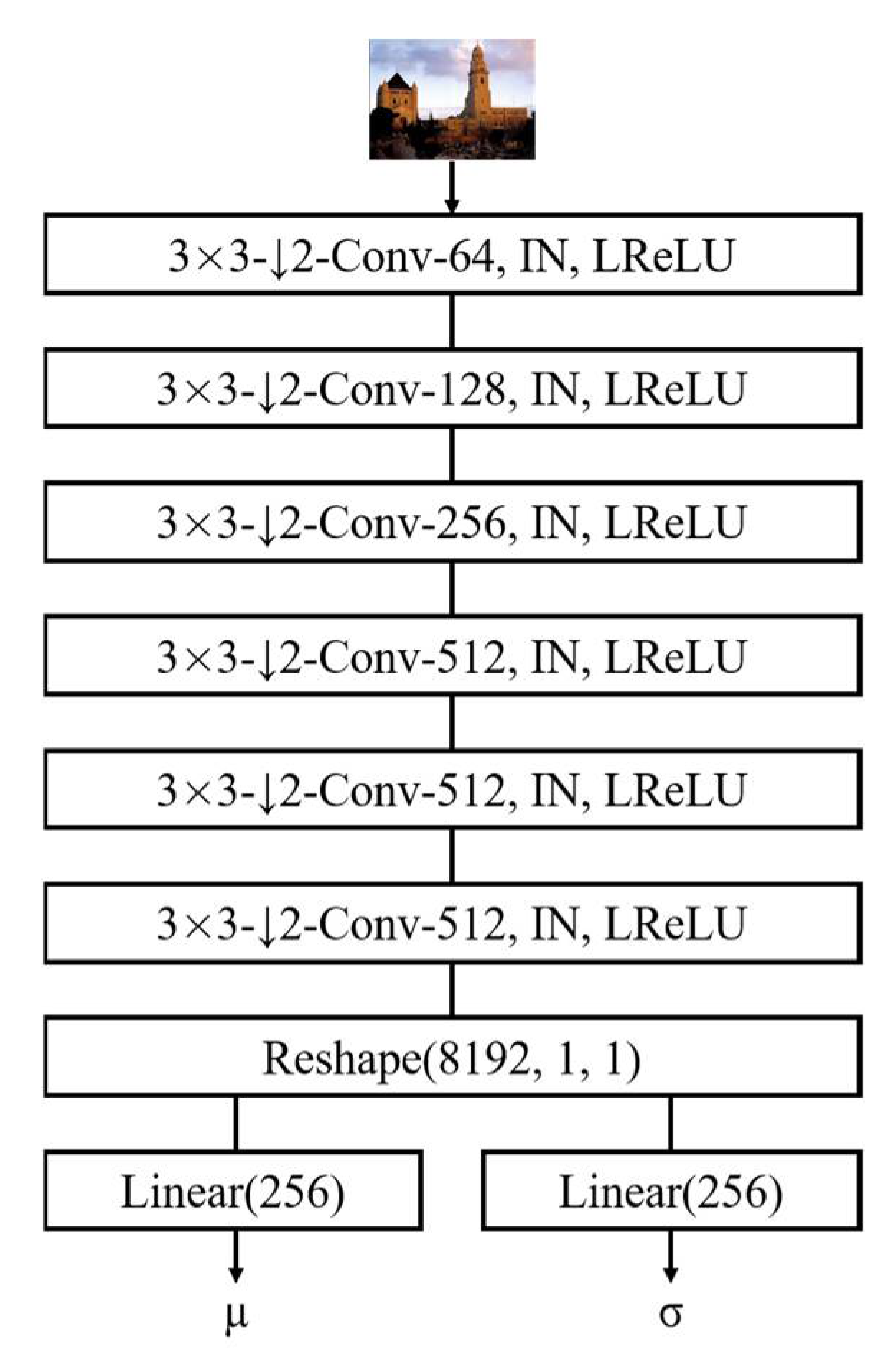

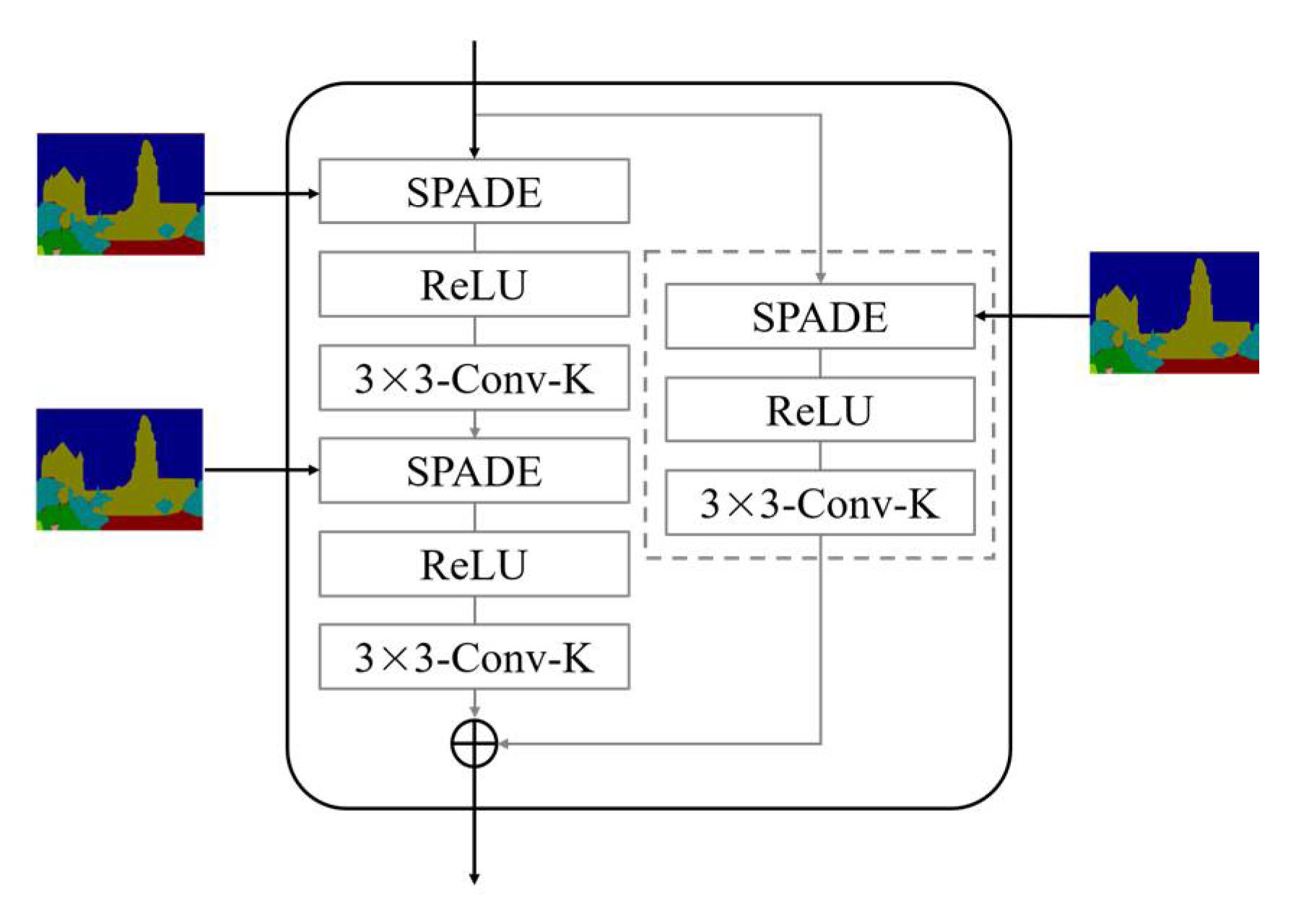

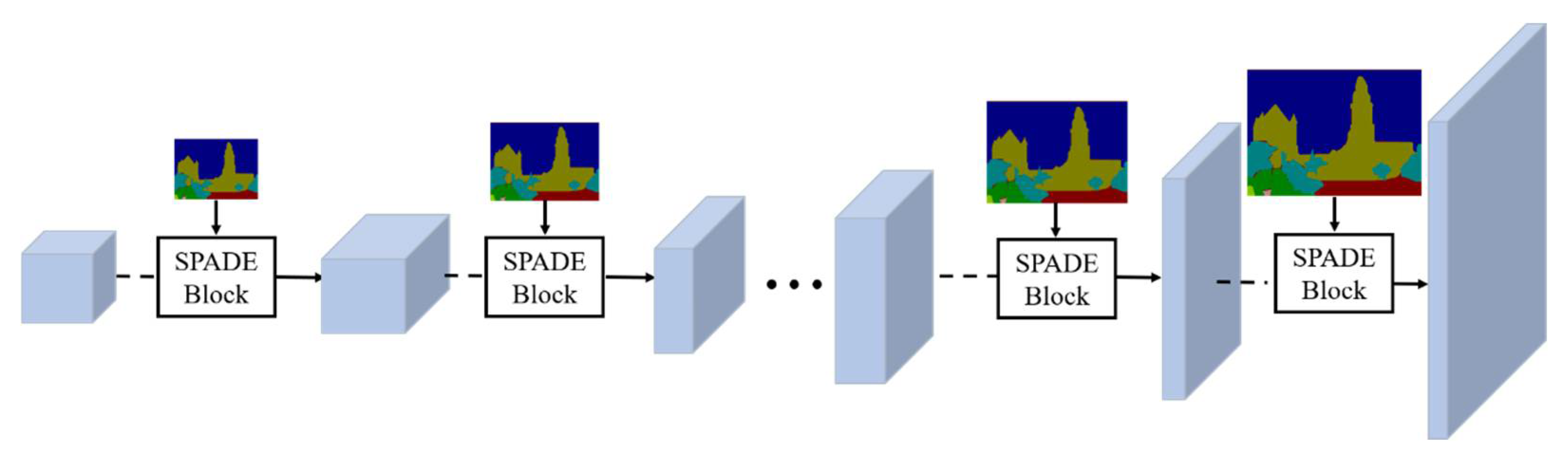

2.2.2. Importing Spatially Adaptive Normalization Module SPADE into Generator

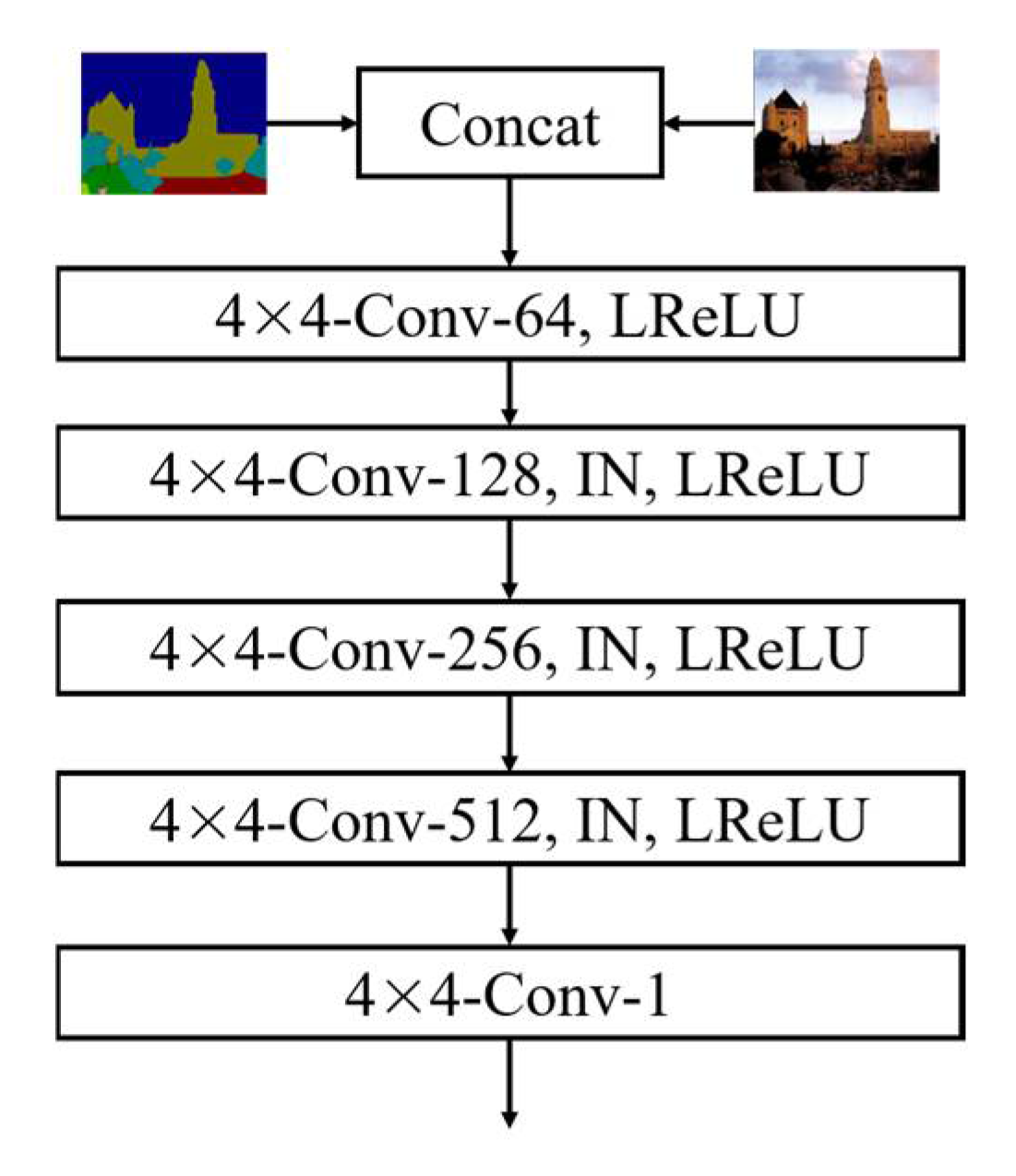
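The following sketch shows what a SPADE layer computes. It assumes the formulation of Park et al.: activations are normalized per channel without learned affine parameters, then modulated by a scale γ and a bias β that vary per pixel and are predicted from the semantic layout. In the original module, γ and β come from small convolutional networks. The hypothetical `spade` function below replaces these with per-pixel linear projections (1×1 convolutions, here with random weights) for illustration only.

```python
import numpy as np


def spade(x, seg, w_gamma, w_beta, eps=1e-5):
    """Simplified spatially adaptive normalization (SPADE) sketch.

    x:       activations, shape (N, C, H, W)
    seg:     one-hot semantic map resized to H x W, shape (N, L, H, W)
    w_gamma: (C, L) weights of a hypothetical 1x1 projection producing gamma
    w_beta:  (C, L) weights of a hypothetical 1x1 projection producing beta
    """
    # Parameter-free batch normalization: per-channel statistics over N, H and W.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Per-pixel scale and bias predicted from the layout (a 1x1 conv written as einsum).
    gamma = np.einsum("cl,nlhw->nchw", w_gamma, seg)
    beta = np.einsum("cl,nlhw->nchw", w_beta, seg)
    # (1 + gamma), as in the public SPADE code: gamma = 0 keeps x_hat unchanged.
    return x_hat * (1.0 + gamma) + beta


rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4, 8, 8))
labels = rng.integers(0, 3, size=(2, 8, 8))
seg = np.eye(3)[labels].transpose(0, 3, 1, 2)  # one-hot map, shape (N, L, H, W)
out = spade(x, seg, rng.normal(size=(4, 3)), rng.normal(size=(4, 3)))
print(out.shape)  # (2, 4, 8, 8)
```

The important design point is that γ and β are feature maps rather than per-channel scalars. The layout information therefore reaches every normalization layer directly, instead of being "washed out" by normalization when it is fed only once at the generator input.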

2.2.3. Adding Attention Mechanism YLG
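YLG (Daras et al.) replaces the dense self-attention of SAGAN, where every pixel attends to every other pixel, with sparse attention patterns that respect two-dimensional locality. The exact YLG patterns and their ESA reordering are not reproduced here. The sketch below is a simplified, hypothetical stand-in: single-head dot-product self-attention over a flattened feature map, with a mask that lets each position attend only to positions within Manhattan distance `radius` on the 2D grid. It shows how a locality mask restricts attention.

```python
import numpy as np


def local_attention_2d(feat, w_q, w_k, w_v, radius=1):
    """Single-head self-attention restricted to a 2D neighbourhood (sketch).

    feat: feature map of shape (C, H, W); w_q, w_k: (D, C); w_v: (C, C).
    Each position attends only to positions whose Manhattan distance on the
    H x W grid is <= radius (a simplification, not YLG's exact pattern).
    """
    c, h, w = feat.shape
    x = feat.reshape(c, h * w).T                  # (HW, C), one row per pixel
    q, k, v = x @ w_q.T, x @ w_k.T, x @ w_v.T     # queries, keys, values
    scores = q @ k.T / np.sqrt(q.shape[1])        # (HW, HW) similarity matrix
    # Grid coordinates of every flattened position, used to build the mask.
    rows, cols = np.divmod(np.arange(h * w), w)
    dist = np.abs(rows[:, None] - rows[None, :]) + np.abs(cols[:, None] - cols[None, :])
    scores = np.where(dist <= radius, scores, -np.inf)
    # Row-wise softmax; masked keys get exp(-inf) = 0 weight.
    scores -= scores.max(axis=1, keepdims=True)
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    out = attn @ v                                # (HW, C)
    return out.T.reshape(c, h, w), attn


rng = np.random.default_rng(0)
feat = rng.normal(size=(4, 5, 5))
out, attn = local_attention_2d(
    feat, rng.normal(size=(2, 4)), rng.normal(size=(2, 4)), rng.normal(size=(4, 4))
)
print(out.shape)                   # (4, 5, 5)
print(int((attn[12] > 0).sum()))   # 5: the centre pixel and its 4 grid neighbours
```

Building a dense mask costs O((HW)²) memory, so this sketch only illustrates the idea. Sparse attention methods gain their efficiency by never materializing the masked entries.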

2.3. Datasets

2.4. Design of Experiments

2.4.1. Hardware and Software Configuration

2.4.2. Evaluation Indicators
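The results tables report pixel accuracy (PA), mean intersection over union (MIoU) and Fréchet Inception Distance (FID). The sketch below shows how these are commonly computed. It assumes that PA and MIoU compare a segmentation network's prediction on the synthesized image with the input label map, as in the GauGAN evaluation protocol, and that FID is computed from Inception features of real and generated images. Segmentation and feature extraction are omitted, so the functions take label maps and feature matrices as plain arrays.

```python
import numpy as np


def confusion_matrix(gt, pred, num_classes):
    """Count (ground-truth, predicted) label pairs over all pixels."""
    idx = gt.ravel() * num_classes + pred.ravel()
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def pixel_accuracy(cm):
    # Fraction of pixels whose predicted class equals the ground-truth class.
    return np.trace(cm) / cm.sum()


def mean_iou(cm):
    # IoU_c = TP / (TP + FP + FN), averaged over classes present in gt or pred.
    tp = np.diag(cm)
    union = cm.sum(axis=0) + cm.sum(axis=1) - tp
    valid = union > 0
    return (tp[valid] / union[valid]).mean()


def fid(feat_real, feat_fake):
    """FID = ||mu_r - mu_f||^2 + Tr(S_r + S_f - 2 (S_r S_f)^(1/2))."""
    mu_r, mu_f = feat_real.mean(axis=0), feat_fake.mean(axis=0)
    s_r = np.cov(feat_real, rowvar=False)
    s_f = np.cov(feat_fake, rowvar=False)
    # Tr((S_r S_f)^(1/2)) equals the sum of square roots of the eigenvalues of
    # the symmetric matrix S_r^(1/2) S_f S_r^(1/2), which avoids a general sqrtm.
    w, u = np.linalg.eigh(s_r)
    s_r_half = (u * np.sqrt(np.clip(w, 0, None))) @ u.T
    eig = np.linalg.eigvalsh(s_r_half @ s_f @ s_r_half)
    tr_covmean = np.sqrt(np.clip(eig, 0, None)).sum()
    return float(((mu_r - mu_f) ** 2).sum() + np.trace(s_r) + np.trace(s_f) - 2 * tr_covmean)


gt = np.array([[0, 0, 1], [1, 2, 2]])
pred = np.array([[0, 1, 1], [1, 2, 0]])
cm = confusion_matrix(gt, pred, 3)
print(round(float(pixel_accuracy(cm)), 4), round(float(mean_iou(cm)), 4))  # 0.6667 0.5

rng = np.random.default_rng(0)
feats = rng.normal(size=(500, 8))             # stand-in for Inception features
print(round(fid(feats, feats + 1.0), 3))      # 8.0: same covariance, mean shifted by 1 in 8 dims
```

Higher PA and MIoU indicate that the synthesized image better preserves the input layout. A lower FID indicates that the distribution of generated images lies closer to that of real images.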

2.4.3. Parameters of Experiments

- (1) Loss function.

- (2) Training parameters.
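The list above names the loss function without specifying it. The following sketch assumes that SYGAN keeps the hinge adversarial loss used by SPADE/GauGAN. This is an assumption: any additional feature-matching or perceptual terms are not reproduced here. The adversarial part operates on raw discriminator scores.

```python
import numpy as np


def d_hinge_loss(d_real, d_fake):
    """Discriminator hinge loss on raw scores for real and synthesized images."""
    # Penalize real scores below +1 and fake scores above -1.
    return np.maximum(0.0, 1.0 - d_real).mean() + np.maximum(0.0, 1.0 + d_fake).mean()


def g_hinge_loss(d_fake):
    """Generator adversarial term: raise the discriminator's score on fakes."""
    return -d_fake.mean()


d_real = np.array([2.0, 0.5])   # toy discriminator scores on real images
d_fake = np.array([-2.0, 0.0])  # toy scores on generated images
print(float(d_hinge_loss(d_real, d_fake)), float(g_hinge_loss(d_fake)))  # 0.75 1.0
```

Real scores already above +1 and fake scores already below -1 contribute nothing to the discriminator loss. This margin keeps the discriminator from being pushed toward ever larger scores on samples it already separates.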

2.4.4. Schemes of Experiments

- (1) Comparative experiments.

- (2) Computational complexity experiments.

- (3) Ablation experiments.

3. Results and Discussion

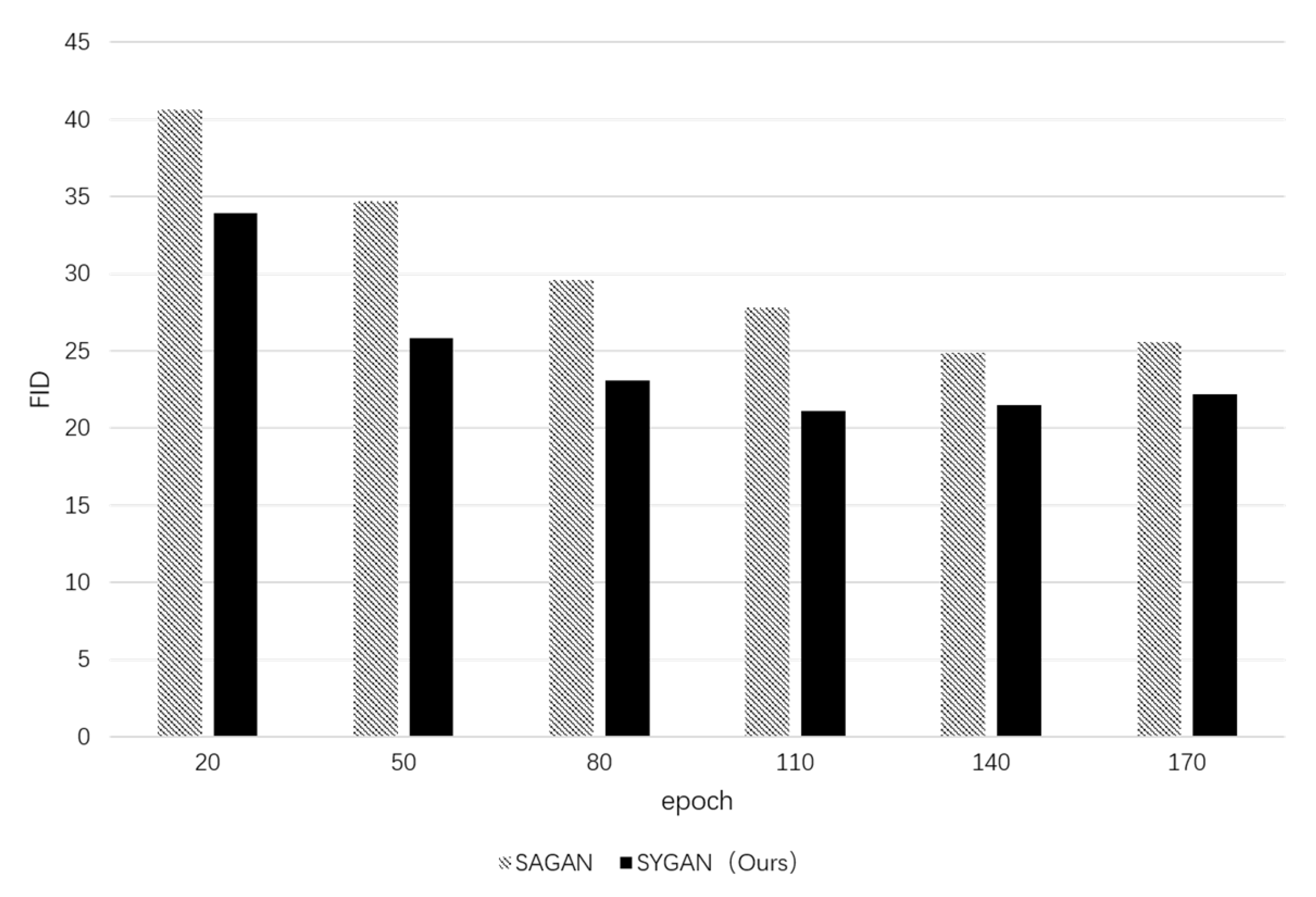

3.1. Comparative Experiments

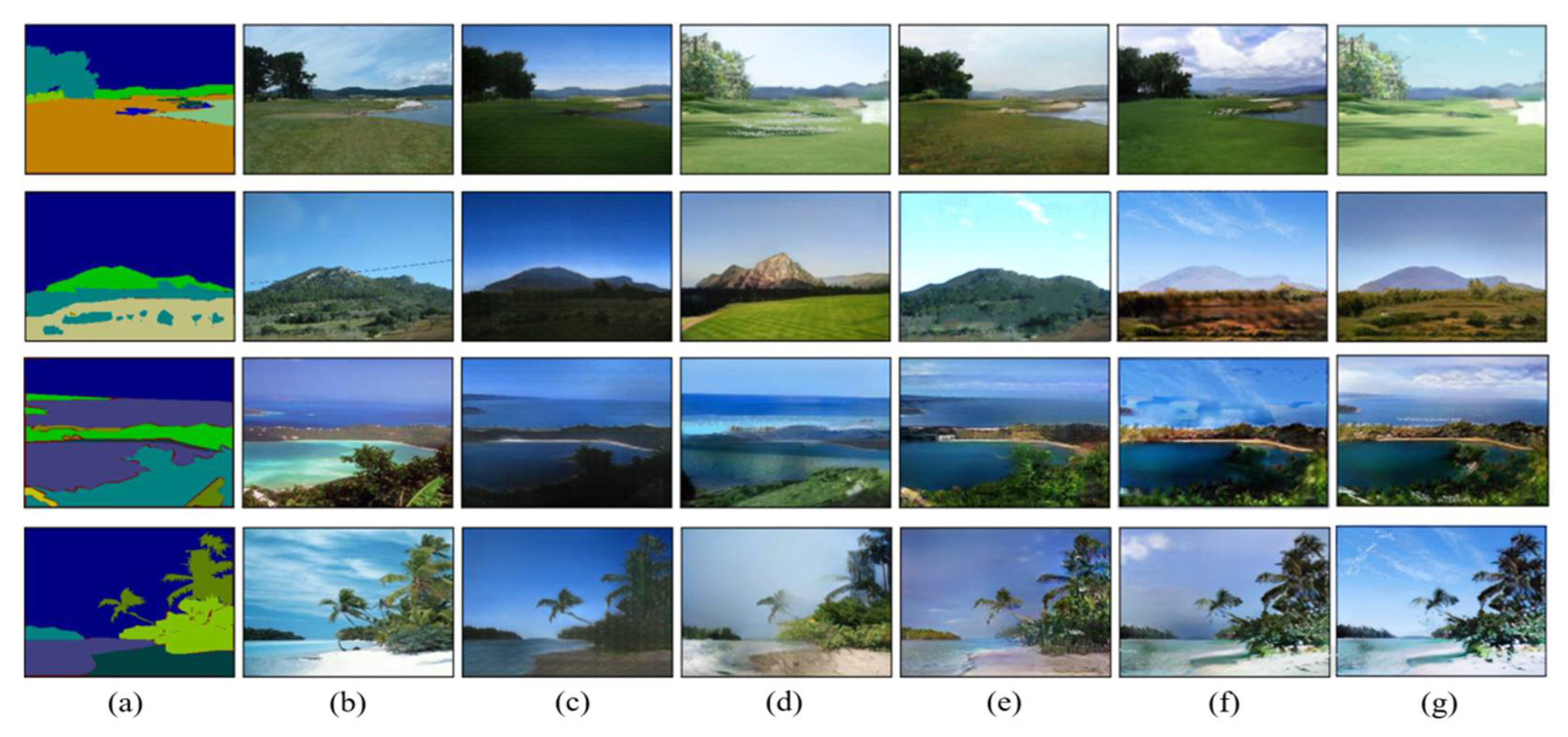

3.1.1. Natural Scene

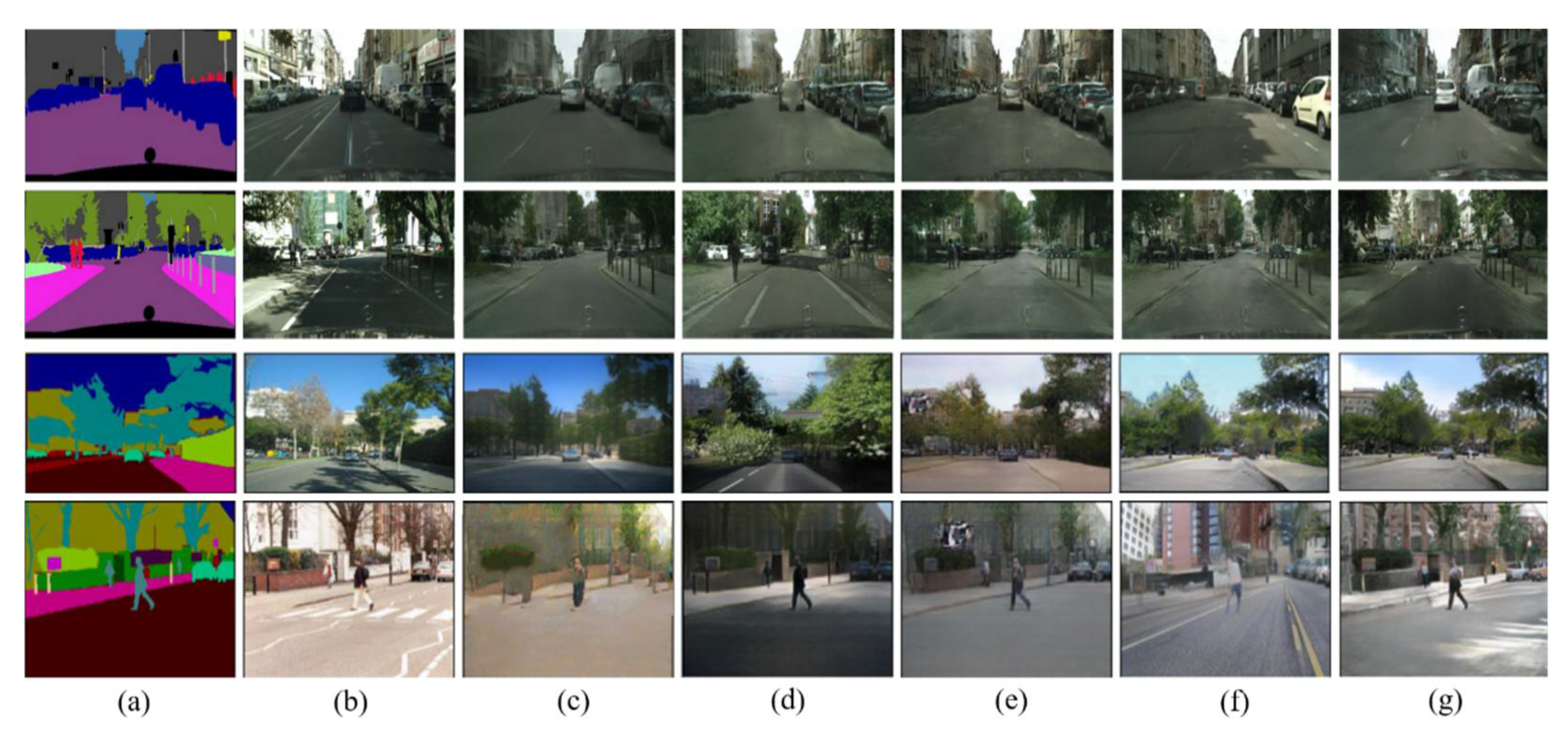

3.1.2. Street Scene

3.1.3. Comparison of the Two Scenes

3.2. Computational Complexity Experiments

3.3. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Botín-Sanabria, D.M.; Mihaita, A.-S.; Peimbert-García, R.E.; Ramírez-Moreno, M.A.; Ramírez-Mendoza, R.A.; Lozoya-Santos, J.D.J. Digital twin technology challenges and applications: A comprehensive review. Remote Sens. 2022, 14, 1335.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 4401–4410.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Wang, T.-C.; Liu, M.-Y.; Zhu, J.-Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8798–8807.

- Chen, Q.; Koltun, V. Photographic Image Synthesis with Cascaded Refinement Networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1511–1520.

- Qi, X.; Chen, Q.; Jia, J.; Koltun, V. Semi-Parametric Image Synthesis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8808–8816.

- Bai, G.; Xi, W.; Hong, X.; Liu, X.; Yue, Y.; Zhao, S. Robust and Rotation-Equivariant Contrastive Learning. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–14.

- Wang, H.; Zhang, Y.; Yu, X. An overview of image caption generation methods. Comput. Intell. Neurosci. 2020, 2020, 3062706.

- Park, T.; Liu, M.-Y.; Wang, T.-C.; Zhu, J.-Y. Semantic Image Synthesis with Spatially-Adaptive Normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 2337–2346.

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a Convolutional Neural Network. In Proceedings of the 2017 International Conference on Engineering and Technology (ICET), Antalya, Turkey, 21–23 August 2017; pp. 1–6.

- Zhang, H.; Goodfellow, I.; Metaxas, D.; Odena, A. Self-Attention Generative Adversarial Networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7354–7363.

- Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- Daras, G.; Odena, A.; Zhang, H.; Dimakis, A.G. Your Local GAN: Designing Two Dimensional Local Attention Mechanisms for Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 14531–14539.

- Creswell, A.; White, T.; Dumoulin, V.; Arulkumaran, K.; Sengupta, B.; Bharath, A.A. Generative adversarial networks: An overview. IEEE Signal Process. Mag. 2018, 35, 53–65.

- Fukui, H.; Hirakawa, T.; Yamashita, T.; Fujiyoshi, H. Attention Branch Network: Learning of Attention Mechanism for Visual Explanation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 10705–10714.

- Xu, J.; Li, Z.; Du, B.; Zhang, M.; Liu, J. Reluplex Made More Practical: Leaky ReLU. In Proceedings of the 2020 IEEE Symposium on Computers and Communications (ISCC), Rennes, France, 7–10 July 2020; pp. 1–7.

- Cai, T.; Luo, S.; Xu, K.; He, D.; Liu, T.-Y.; Wang, L. GraphNorm: A Principled Approach to Accelerating Graph Neural Network Training. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 1204–1215.

- Hara, K.; Saito, D.; Shouno, H. Analysis of Function of Rectified Linear Unit Used in Deep Learning. In Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

- Isola, P.; Zhu, J.-Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

- Mescheder, L.; Geiger, A.; Nowozin, S. Which Training Methods for GANs do Actually Converge? In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 3481–3490.

- Miyato, T.; Koyama, M. cGANs with Projection Discriminator. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Mazaheri, G.; Mithun, N.C.; Bappy, J.H.; Roy-Chowdhury, A.K. A Skip Connection Architecture for Localization of Image Manipulations. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 119–129.

- Child, R.; Gray, S.; Radford, A.; Sutskever, I. Generating long sequences with sparse transformers. arXiv 2019, arXiv:1904.10509.

- Caesar, H.; Uijlings, J.; Ferrari, V. COCO-Stuff: Thing and Stuff Classes in Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1209–1218.

- Zhou, B.; Zhao, H.; Puig, X.; Fidler, S.; Barriuso, A.; Torralba, A. Scene Parsing through ADE20K Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 633–641.

- Pedamonti, D. Comparison of non-linear activation functions for deep neural networks on MNIST classification task. arXiv 2018, arXiv:1804.02763.

- Obukhov, A.; Krasnyanskiy, M. Quality Assessment Method for GAN Based on Modified Metrics Inception Score and Fréchet Inception Distance. In Proceedings of the Computational Methods in Systems and Software, Online, 14–17 October 2020; pp. 102–114. Available online: https://link.springer.com/chapter/10.1007/978-3-030-63322-6_8 (accessed on 6 February 2023).

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Adv. Neural Inf. Process. Syst. 2017, 30, 1–12.

| Item | Detail |
|---|---|
| CPU | AMD Ryzen 9 3900X 12-Core Processor |
| GPU | NVIDIA GeForce RTX 3090 |
| RAM | 32 GB |
| Operating system | 64-bit Windows 11 |
| CUDA | CUDA 11.3 |
| Data processing | Python 3.7 |

| Item | Value |
|---|---|
| Epochs | 120 |
| Batch size | 16 |
| Learning rate (generator) | 0.0001 |
| Learning rate (discriminator) | 0.0004 |
| Image size | 512 × 512 |

| Model | PA (%) | MIoU (%) | FID |
|---|---|---|---|
| CRN | 68.4 | 45.3 | 48.6 |
| SIMS | 63.6 | 38.6 | 43.6 |
| pix2pixHD | 73.9 | 46.3 | 39.8 |
| GauGAN | 83.9 | 54.8 | 22.6 |
| SYGAN (ours) | 86.1 | 56.6 | 22.1 |

| Model | PA (%) | MIoU (%) | FID |
|---|---|---|---|
| CRN | 67.5 | 43.5 | 58.2 |
| SIMS | 73.1 | 34.2 | 61.3 |
| pix2pixHD | 68.9 | 41.4 | 47.6 |
| GauGAN | 78.8 | 49.6 | 33.8 |
| SYGAN (ours) | 81.3 | 51.4 | 31.2 |

| Model | PA (%) | MIoU (%) | FID |
|---|---|---|---|
| SYGAN | 69.5 | 48.2 | 22.3 |
| SGAN | 66.3 | 46.1 | 25.3 |
| YGAN | 55.4 | 38.6 | 36.5 |
| GAN | 33.4 | 30.6 | 68.2 |

| Model | PA (%) | MIoU (%) | FID |
|---|---|---|---|
| SYGAN | 81.4 | 51.3 | 37.8 |
| SGAN | 78.6 | 48.1 | 42.3 |
| YGAN | 68.2 | 41.8 | 51.2 |
| GAN | 44.3 | 25.6 | 71.5 |

| Model | PA (%) | MIoU (%) | FID |
|---|---|---|---|
| SYGAN | 86.3 | 57.1 | 32.3 |
| SGAN | 82.9 | 54.3 | 36.2 |
| YGAN | 71.5 | 46.3 | 46.1 |
| GAN | 49.6 | 29.8 | 70.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mo, L.; Zhu, Y.; Wang, G.; Yi, X.; Wu, X.; Wu, P. Improved Image Synthesis with Attention Mechanism for Virtual Scenes via UAV Imagery. Drones 2023, 7, 160. https://doi.org/10.3390/drones7030160
