Learning to Propose and Refine for Accurate and Robust Tracking via an Alignment Convolution

Abstract

1. Introduction

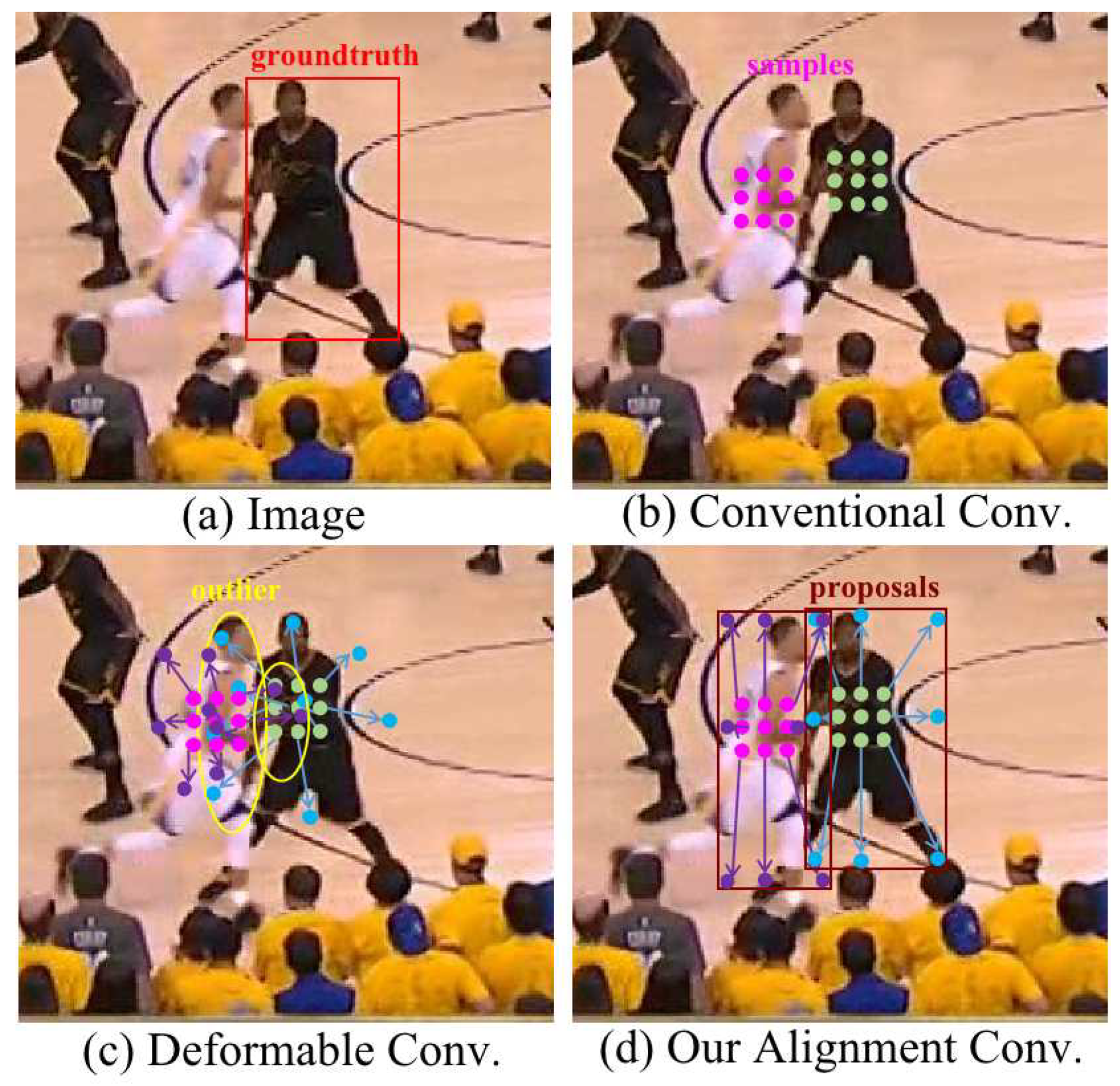

- The paper explores a major challenge that leads to inaccurate target localization but is seldom discussed in the tracking literature. Through careful investigation, it finds that inaccurate convolution sampling points are likely to cause incorrect feature extraction, which in turn degrades the tracker.

- The paper designs a simple yet efficient propose-and-refine mechanism, driven by an alignment convolution, to classify and refine the proposals. By combining the strengths of each component, the proposed PRTracker not only obtains reliable proposals but also provides more accurate and robust features for the subsequent classification and regression.

| Tracker Type | Sampling Mode | Coarse-to-Fine Refinement |
|---|---|---|
| Conventional Convolution-Based Trackers [6,9,20] | Regular Grid Sampling | No |
| Deformable Convolution-Based Trackers [17] | Learnable Offset Sampling | No |
| Alignment Convolution-Based Trackers (Proposed PRTracker) | Learnable Offset Sampling with the Proposal Supervision Signal | Yes |
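The difference between the sampling modes in the table can be illustrated with a small sketch. It assumes one common way of deriving offsets from a proposal, used in feature-alignment work for object detection: the k × k sampling points are spread evenly over the proposed box, and the offset is the difference from the regular grid. Whether PRTracker computes its offsets exactly this way is not stated in this section; the function names `regular_grid`, `box_aligned_grid`, and `alignment_offsets` are hypothetical.

```python
import numpy as np

def regular_grid(center, kernel_size=3):
    """Sampling points (y, x) of a conventional convolution: a fixed k x k grid."""
    half = kernel_size // 2
    steps = np.arange(-half, half + 1)
    dy, dx = np.meshgrid(steps, steps, indexing="ij")
    return np.stack([center[0] + dy.ravel(), center[1] + dx.ravel()], axis=1).astype(float)

def box_aligned_grid(box, kernel_size=3):
    """k x k sampling points (y, x) spread evenly over box = (x1, y1, x2, y2).

    Assumption: the box is given in feature-map coordinates.
    """
    x1, y1, x2, y2 = box
    xs = np.linspace(x1, x2, kernel_size)
    ys = np.linspace(y1, y2, kernel_size)
    gy, gx = np.meshgrid(ys, xs, indexing="ij")
    return np.stack([gy.ravel(), gx.ravel()], axis=1)

def alignment_offsets(center, box, kernel_size=3):
    """Offsets that move the regular grid at `center` onto the proposal box."""
    return box_aligned_grid(box, kernel_size) - regular_grid(center, kernel_size)

# Illustrative values only: a location (y=10, x=10) whose proposal is wider than tall.
offsets = alignment_offsets(center=(10, 10), box=(6, 8, 14, 12))
print(offsets.reshape(3, 3, 2))
```

In this sketch a deformable convolution would predict `offsets` freely from the features, whereas the box-derived variant ties them to the proposal, which is one way to read "proposal supervision signal" in the table.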

2. Related Work

2.1. Coarse Target Localization in Object Tracking

2.2. Coarse-to-Fine Localization in Object Tracking

2.3. Feature Alignment in Object Detection

3. The Proposed Tracker

3.1. The Siamese Network Backbone

3.2. Alignment Convolution

3.3. The Propose-and-Refine Module

3.4. The Target Mask

3.5. Ground Truth and Loss

3.6. Training and Inference

Algorithm 1: Accurate and robust tracking with PRTracker

4. Experiments

4.1. Implementation Details

4.2. Comparison with State-of-the-Art Trackers

4.3. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AlignConv | Alignment convolution |
| AUC | Area under the curve |
| DCF | Discriminative correlation filter |
| DW-Corr | Depth-wise cross-correlation operation |
| EAO | Expected average overlap |
| NfS | Need for Speed |
| PRTracker | Propose-and-refine tracker |
| RPN | Region proposal network |
| SGD | Stochastic gradient descent |
| FPS | Frames per second |
| RoI | Region of interest |
| VOT2018 | Visual Object Tracking Challenge 2018 |
| VOT2019 | Visual Object Tracking Challenge 2019 |

References

1. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R.; Tang, Z.; Li, X. SiamBAN: Target-aware tracking with siamese box adaptive network. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 5158–5173.
2. Molchanov, P.; Yang, X.; Gupta, S.; Kim, K.; Tyree, S.; Kautz, J. Online Detection and Classification of Dynamic Hand Gestures With Recurrent 3D Convolutional Neural Network. In Proceedings of the CVPR 2016, Las Vegas, NV, USA, 26 June–1 July 2016.
3. Lee, K.H.; Hwang, J.N. On-Road Pedestrian Tracking Across Multiple Driving Recorders. IEEE Trans. Multimed. 2015, 17, 1.
4. Tang, S.; Andriluka, M.; Andres, B.; Schiele, B. Multiple People Tracking by Lifted Multicut and Person Re-identification. In Proceedings of the CVPR 2017, Honolulu, HI, USA, 22–25 July 2017.
5. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision Workshops, Amsterdam, The Netherlands, 11–14 October 2016; pp. 850–865.
6. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese Box Adaptive Network for Visual Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, virtual, 14–19 June 2020; pp. 6668–6677.
7. Voigtlaender, P.; Luiten, J.; Torr, P.H.; Leibe, B. Siam R-CNN: Visual tracking by re-detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, virtual, 14–19 June 2020; pp. 6578–6588.
8. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware Anchor-free Tracking. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020.
9. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. SiamRPN++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4282–4291.
10. Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. SPM-Tracker: Series-parallel matching for real-time visual object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3643–3652.
11. Mu, Z.A.; Hui, Z.; Jing, Z.; Li, Z. Multi-level prediction Siamese network for real-time UAV visual tracking. Image Vis. Comput. 2020, 103, 104002.
12. Wu, Y.; Liu, Z.; Zhou, X.; Ye, L.; Wang, Y. ATCC: Accurate tracking by criss-cross location attention. Image Vis. Comput. 2021, 111, 104188.
13. Zheng, Y.; Zhong, B.; Liang, Q.; Tang, Z.; Ji, R.; Li, X. Leveraging Local and Global Cues for Visual Tracking via Parallel Interaction Network. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1671–1683.
14. Ma, J.; Lan, X.; Zhong, B.; Li, G.; Tang, Z.; Li, X.; Ji, R. Robust Tracking via Uncertainty-aware Semantic Consistency. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 1740–1751.
15. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
16. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the ICCV, IEEE Computer Society, Venice, Italy, 22–29 October 2017; pp. 764–773.
17. Yu, Y.; Xiong, Y.; Huang, W.; Scott, M.R. Deformable Siamese Attention Networks for Visual Object Tracking. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; pp. 6727–6736.
18. Guo, Q.; Feng, W.; Zhou, C.; Huang, R.; Wan, L.; Wang, S. Learning dynamic siamese network for visual object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1763–1771.
19. Wang, Q.; Teng, Z.; Xing, J.; Gao, J.; Hu, W.; Maybank, S. Learning attentions: Residual attentional siamese network for high performance online visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 4854–4863.
20. Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 8971–8980.
21. Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware siamese networks for visual object tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 101–117.
22. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 10–17 October 2015; pp. 91–99.
23. Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. SiamFC++: Towards Robust and Accurate Visual Tracking with Target Estimation Guidelines. In Proceedings of the AAAI, New York, NY, USA, 7–12 February 2020; pp. 12549–12556.
24. Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, virtual, 14–19 June 2020; pp. 6269–6277.
25. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 22–25 July 2017; pp. 2961–2969.
26. Jiang, B.; Luo, R.; Mao, J.; Xiao, T.; Jiang, Y. Acquisition of localization confidence for accurate object detection. In Proceedings of the European Conference on Computer Vision, Salt Lake City, UT, USA, 19–21 June 2018; pp. 784–799.
27. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5374–5383.
28. Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Cehovin Zajc, L.; Vojir, T.; Bhat, G.; Lukezic, A.; Eldesokey, A.; et al. The sixth visual object tracking VOT2018 challenge results. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–53.
29. Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kamarainen, J.K.; Cehovin Zajc, L.; Drbohlav, O.; Lukezic, A.; Berg, A.; et al. The seventh visual object tracking VOT2019 challenge results. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–36.
30. Kiani Galoogahi, H.; Fagg, A.; Huang, C.; Ramanan, D.; Lucey, S. Need for speed: A benchmark for higher frame rate object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1125–1134.
31. Wu, Y.; Lim, J.; Yang, M.H. Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.
32. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for UAV tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 445–461.
33. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 6638–6646.
34. Zhang, Z.; Peng, H. Deeper and wider siamese networks for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4591–4600.
35. Wang, Q.; Zhang, L.; Bertinetto, L.; Hu, W.; Torr, P.H. Fast online object tracking and segmentation: A unifying approach. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1328–1338.
36. Zhang, K.; Liu, Q.; Wu, Y.; Yang, M.H. Robust Visual Tracking via Convolutional Networks Without Training. IEEE Trans. Image Process. 2016, 25, 1779–1792.
37. Zheng, Y.; Liu, X.; Cheng, X.; Zhang, K.; Wu, Y.; Chen, S. Multi-Task Deep Dual Correlation Filters for Visual Tracking. IEEE Trans. Image Process. 2020, 29, 9614–9626.
38. Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 472–488.
39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
40. Xie, S.; Girshick, R.B.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.
41. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.
42. Yan, B.; Zhang, X.; Wang, D.; Lu, H.; Yang, X. Alpha-Refine: Boosting Tracking Performance by Precise Bounding Box Estimation. arXiv 2020, arXiv:2012.06815.
43. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 4660–4669.
44. Gomaa, A.; Abdelwahab, M.M.; Abo-Zahhad, M. Efficient vehicle detection and tracking strategy in aerial videos by employing morphological operations and feature points motion analysis. Multim. Tools Appl. 2020, 79, 26023–26043.
45. Gomaa, A.; Minematsu, T.; Abdelwahab, M.M.; Abo-Zahhad, M.; Taniguchi, R. Faster CNN-based vehicle detection and counting strategy for fixed camera scenes. Multim. Tools Appl. 2022, 81, 25443–25471.
46. Chang, Y.; Tu, Z.; Xie, W.; Luo, B.; Zhang, S.; Sui, H.; Yuan, J. Video anomaly detection with spatio-temporal dissociation. Pattern Recognit. 2022, 122, 108213.
47. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.
48. Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; pp. 2999–3007.
49. Law, H.; Deng, J. CornerNet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 734–750.
50. Jang, H.D.; Woo, S.; Benz, P.; Park, J.; Kweon, I.S. Propose-and-attend single shot detector. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 815–824.
51. Zhang, H.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Cascade RetinaNet: Maintaining consistency for single-stage object detection. arXiv 2019, arXiv:1907.06881.
52. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.
53. Real, E.; Shlens, J.; Mazzocchi, S.; Pan, X.; Vanhoucke, V. YouTube-BoundingBoxes: A large high-precision human-annotated data set for object detection in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 5296–5305.
54. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Cham, Switzerland, 2014; pp. 740–755.
55. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 43, 1562–1577.
56. Xu, T.; Feng, Z.H.; Wu, X.J.; Kittler, J. Learning adaptive discriminative correlation filters via temporal consistency preserving spatial feature selection for robust visual object tracking. IEEE Trans. Image Process. 2019, 28, 5596–5609.
57. Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.
58. Cheng, S.; Zhong, B.; Li, G.; Liu, X.; Tang, Z.; Li, X.; Wang, J. Learning To Filter: Siamese Relation Network for Robust Tracking. In Proceedings of the CVPR. Computer Vision Foundation/IEEE, virtual, 19–25 June 2021; pp. 4421–4431.
59. Chen, X.; Yan, B.; Zhu, J.; Wang, D.; Yang, X.; Lu, H. Transformer Tracking. In Proceedings of the CVPR. Computer Vision Foundation/IEEE, virtual, 19–25 June 2021; pp. 8126–8135.
60. Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4293–4302.
61. Bhat, G.; Johnander, J.; Danelljan, M.; Shahbaz Khan, F.; Felsberg, M. Unveiling the power of deep tracking. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 483–498.
62. Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. HiFT: Hierarchical Feature Transformer for Aerial Tracking. In Proceedings of the ICCV. IEEE, Montreal, QC, Canada, 10–17 October 2021; pp. 15437–15446.
63. Danelljan, M.; Hager, G.; Shahbaz Khan, F.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4310–4318.
64. Fan, H.; Ling, H. Siamese cascaded region proposal networks for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7952–7961.
65. Song, Y.; Ma, C.; Wu, X.; Gong, L.; Bao, L.; Zuo, W.; Shen, C.; Lau, R.W.; Yang, M.H. VITAL: Visual tracking via adversarial learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 8990–8999.

| Block | Backbone | Search Branch Output Size | Template Branch Output Size |
|---|---|---|---|
| conv1 | 7 × 7, 64, stride 2 | 125 × 125 | 61 × 61 |
| conv2_x | 3 × 3 max pool, stride 2 | 63 × 63 | 31 × 31 |
| conv3_x | | 31 × 31 | 15 × 15 |
| conv4_x | | 31 × 31 | 15 × 15 |
| adjust | 1 × 1, 256 | 31 × 31 | 7 × 7 |
| xcorr | depth-wise | 25 × 25 | |
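The 25 × 25 response size in the xcorr row is consistent with a depth-wise cross-correlation of the 31 × 31 search feature with the 7 × 7 template feature, assuming stride 1 and no padding: 31 − 7 + 1 = 25. The following is a minimal NumPy sketch of that operation under those assumptions, not the authors' implementation; the function name `depthwise_xcorr` is hypothetical and the inputs are random placeholders.

```python
import numpy as np

def depthwise_xcorr(search, template):
    """Correlate each channel of `search` with the matching channel of `template`.

    search:   (C, Hs, Ws) feature map of the search region
    template: (C, Ht, Wt) feature map of the template
    returns:  (C, Hs - Ht + 1, Ws - Wt + 1) response map (stride 1, no padding)
    """
    if search.shape[0] != template.shape[0]:
        raise ValueError("search and template must have the same channel count")
    _, ht, wt = template.shape
    # windows has shape (C, Ho, Wo, Ht, Wt): every template-sized patch per channel.
    windows = np.lib.stride_tricks.sliding_window_view(search, (ht, wt), axis=(1, 2))
    # Sum over the patch dimensions only; channels are never mixed.
    return np.einsum("cijhw,chw->cij", windows, template)

rng = np.random.default_rng(0)
search_feat = rng.standard_normal((256, 31, 31))    # sizes taken from the adjust row
template_feat = rng.standard_normal((256, 7, 7))
response = depthwise_xcorr(search_feat, template_feat)
print(response.shape)
```

Because each channel is correlated independently, the response keeps the 256 channels of the adjust layer, and only the spatial size shrinks.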

| Metric | SiamRPN [20] | LADCF [56] | ATOM [43] | Siam R-CNN [7] | SiamRPN++ [9] | SiamFC++ [23] | DiMP [57] | SiamBAN [6] | Ocean [8] | PRTracker |
|---|---|---|---|---|---|---|---|---|---|---|
| EAO | 0.384 | 0.389 | 0.401 | 0.408 | 0.417 | 0.430 | 0.441 | 0.452 | 0.470 | 0.497 |
| Accuracy | 0.588 | 0.503 | 0.590 | 0.617 | 0.604 | 0.590 | 0.597 | 0.597 | 0.603 | 0.627 |
| Robustness | 0.276 | 0.159 | 0.201 | 0.220 | 0.234 | 0.173 | 0.150 | 0.178 | 0.164 | 0.150 |

| Metric | SPM [10] | SiamRPN++ [9] | SiamMask [35] | ARTCS [29] | SiamDW_ST [34] | DCFST [29] | DiMP [57] | SiamBAN [6] | Ocean [8] | PRTracker |
|---|---|---|---|---|---|---|---|---|---|---|
| EAO | 0.275 | 0.285 | 0.287 | 0.287 | 0.299 | 0.317 | 0.321 | 0.327 | 0.329 | 0.352 |
| Accuracy | 0.577 | 0.599 | 0.594 | 0.602 | 0.600 | 0.585 | 0.582 | 0.602 | 0.595 | 0.634 |
| Robustness | 0.507 | 0.482 | 0.461 | 0.482 | 0.467 | 0.376 | 0.371 | 0.396 | 0.376 | 0.341 |

| Metric | MDNet [60] | ECO [33] | C-COT [38] | UPDT [61] | ATOM [43] | SiamBAN [6] | DiMP [57] | PRTracker |
|---|---|---|---|---|---|---|---|---|
| AUC | 0.422 | 0.466 | 0.488 | 0.537 | 0.584 | 0.594 | 0.620 | 0.603 |

| Tracker | CC | DC | AC | Target Mask | OTB100 AUC | LaSOT AUC |
|---|---|---|---|---|---|---|
| T1 | | | | | 0.683 | 0.512 |
| T2 | ✔ | | | | 0.690 | 0.523 |
| T3 | | ✔ | | | 0.694 | 0.530 |
| T4 | | | ✔ | | 0.702 | 0.556 |
| PRTracker | | | ✔ | ✔ | 0.710 | 0.569 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mo, Z.; Li, Z. Learning to Propose and Refine for Accurate and Robust Tracking via an Alignment Convolution. Drones 2023, 7, 343. https://doi.org/10.3390/drones7060343