Detecting Canopy Gaps in Uneven-Aged Mixed Forests through the Combined Use of Unmanned Aerial Vehicle Imagery and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

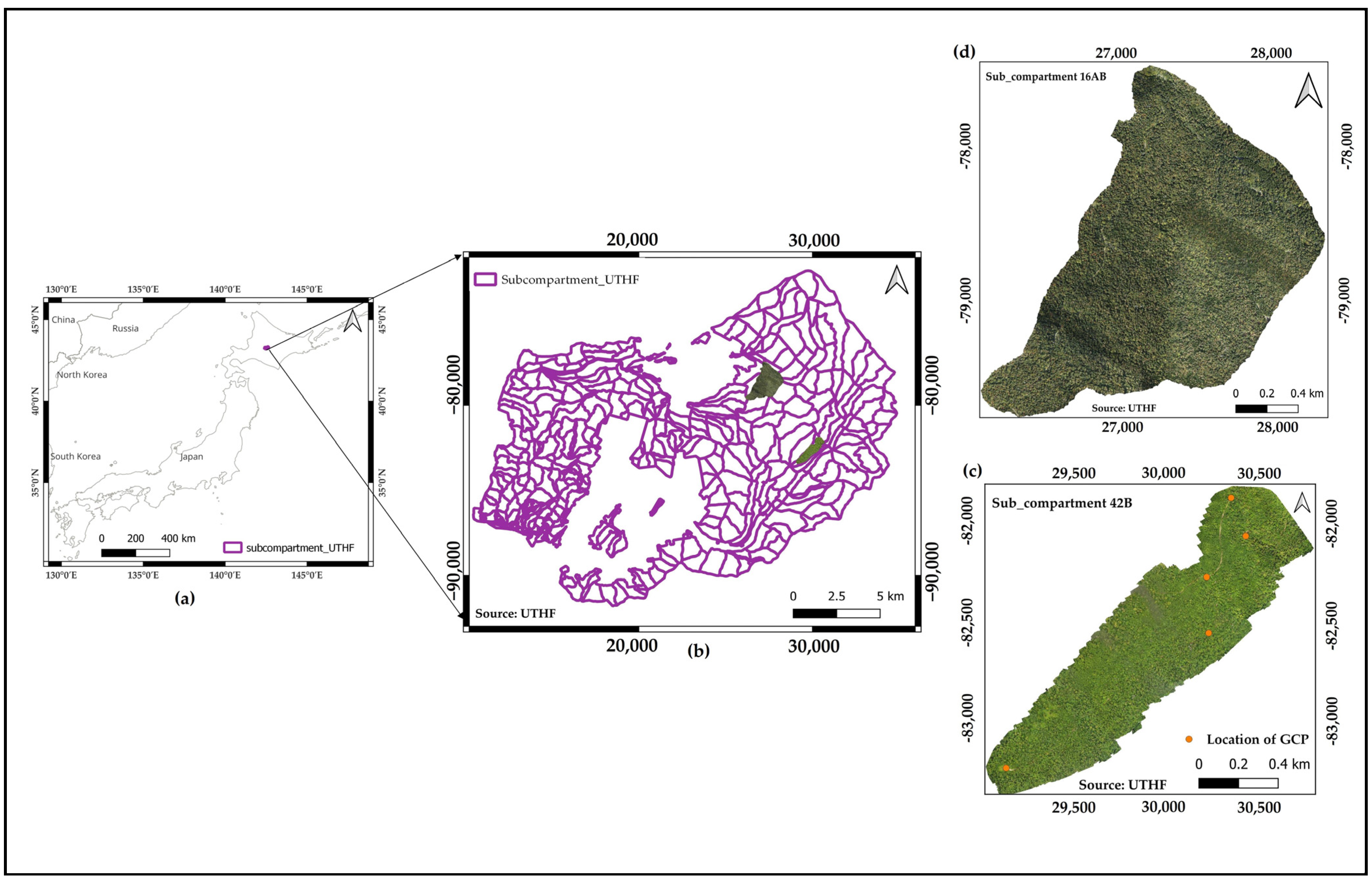

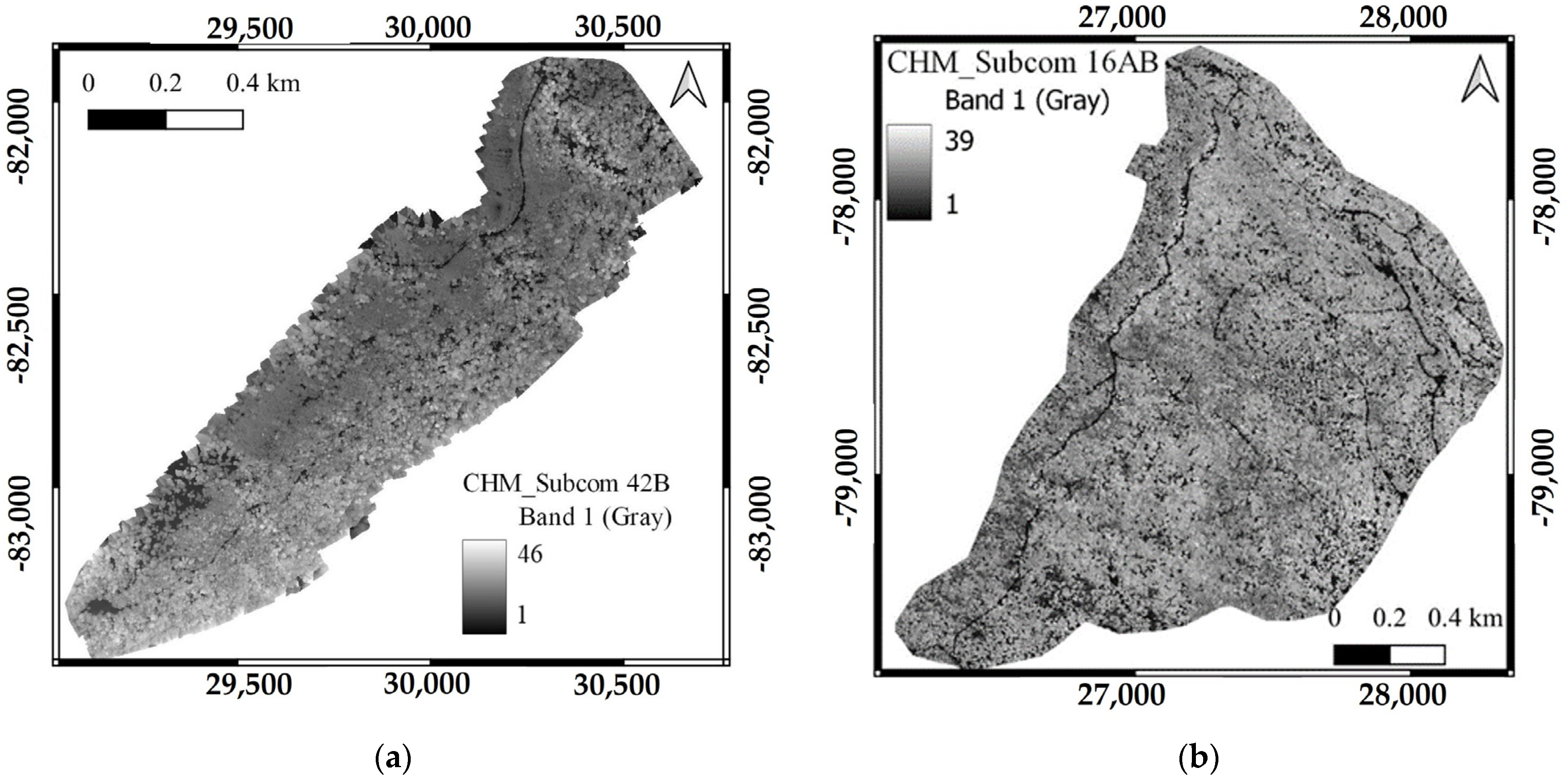

2.1. Study Sites and Remote Sensing Datasets

2.2. Data Analysis

2.2.1. Deep Learning Algorithms

2.2.2. Detection of Canopy Gaps Using UAV Remote Sensing Datasets and Deep Learning Models

Input Data and Preprocessing

Generation of Labeled Masks for Canopy Gaps

Model Configuration and Hyperparameter Settings

2.2.3. Extended Training for Canopy Gap Detection

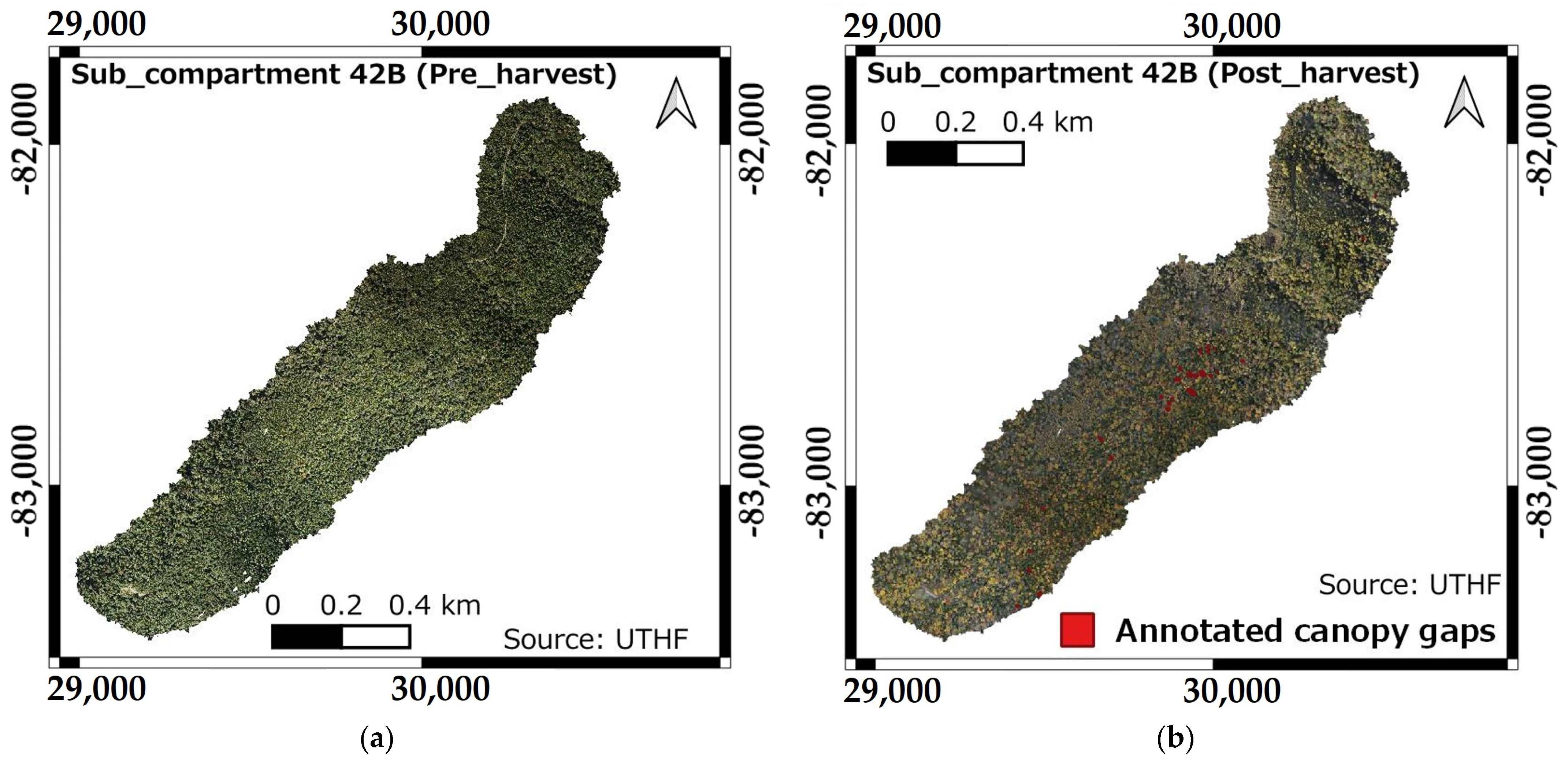

2.2.4. Training Transfer Models and a ResU-Net Model to Detect Canopy Gaps Caused by Selection Harvesting

2.2.5. Evaluation Metrics for the Models

- TP (True Positives): The number of pixels correctly predicted as canopy gaps.

- FP (False Positives): The number of background pixels incorrectly predicted as canopy gaps.

- FN (False Negatives): The number of canopy gap pixels incorrectly predicted as background.

- TN (True Negatives): The number of pixels correctly predicted as background.
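The bullets above define the pixel-level confusion counts; overall accuracy (OA) additionally requires true negatives (TN), the pixels correctly predicted as background. The sketch below shows the standard formulas behind the F1-score, precision, recall, OA, and IoU columns of the results tables. It assumes binary masks flattened to equal-length sequences, with 1 marking a gap pixel, and counts pooled over the whole evaluation area (an assumption, since the pooling scheme is not stated here). The names `Confusion`, `confusion_from_masks`, and `gap_metrics` are illustrative and are not taken from the authors' code.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class Confusion:
    """Pixel counts for the binary task: canopy gap (1) vs background (0)."""
    tp: int
    fp: int
    fn: int
    tn: int


def confusion_from_masks(pred: Sequence[int], truth: Sequence[int]) -> Confusion:
    """Count TP/FP/FN/TN over two flattened 0/1 masks of equal length."""
    if len(pred) != len(truth):
        raise ValueError("prediction and reference masks must have the same size")
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1  # gap predicted, gap in reference
        elif p:
            fp += 1  # gap predicted, background in reference
        elif t:
            fn += 1  # background predicted, gap in reference
        else:
            tn += 1  # background predicted, background in reference
    return Confusion(tp, fp, fn, tn)


def _ratio(num: int, den: int) -> float:
    # Return 0.0 instead of raising when a denominator is empty (e.g., no predicted gaps).
    return num / den if den else 0.0


def gap_metrics(c: Confusion) -> Dict[str, float]:
    """Standard pixel-wise segmentation metrics for the gap class."""
    return {
        "precision": _ratio(c.tp, c.tp + c.fp),
        "recall": _ratio(c.tp, c.tp + c.fn),
        "f1": _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),  # equals 2PR / (P + R)
        "oa": _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn),
        "iou": _ratio(c.tp, c.tp + c.fp + c.fn),
    }


if __name__ == "__main__":
    pred = [1, 1, 0, 0, 1, 0, 0, 0]
    truth = [1, 0, 0, 0, 1, 1, 0, 0]
    counts = confusion_from_masks(pred, truth)
    print(counts, gap_metrics(counts))
```

For the toy masks in the `__main__` block the counts are TP = 2, FP = 1, FN = 1, TN = 4, which gives OA = 0.75 but IoU = 0.5. Because gap pixels are a minority class, OA is dominated by correctly classified background; in the first results table, U-Net on RGB reaches an OA of 0.93 with an F1-score of 0.00, which is why F1-score and IoU are the more informative columns.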

3. Results

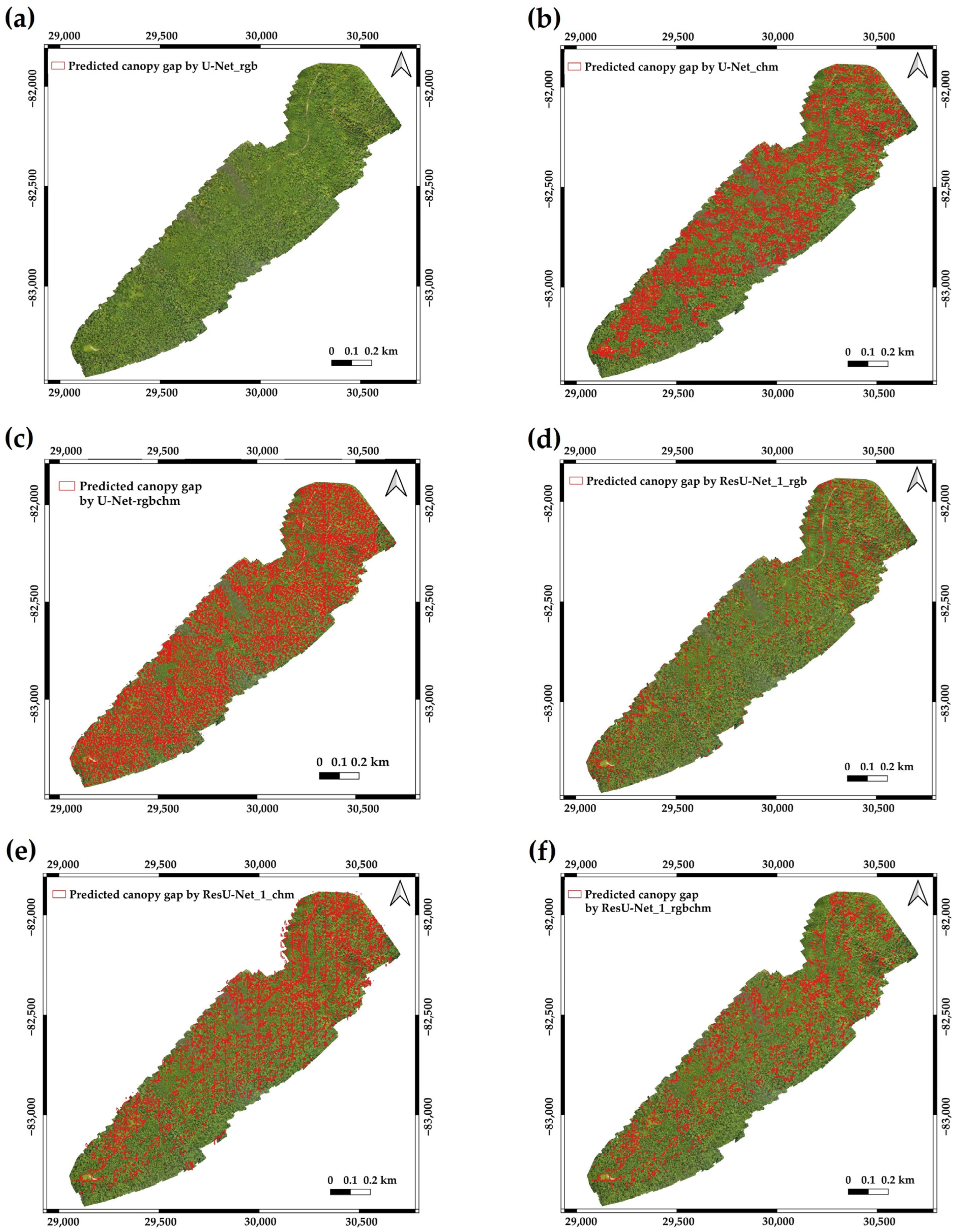

3.1. Accuracy of Canopy Gap Detection Using Deep Learning Models and UAV Remote Sensing Datasets of Sub-Compartment 42B

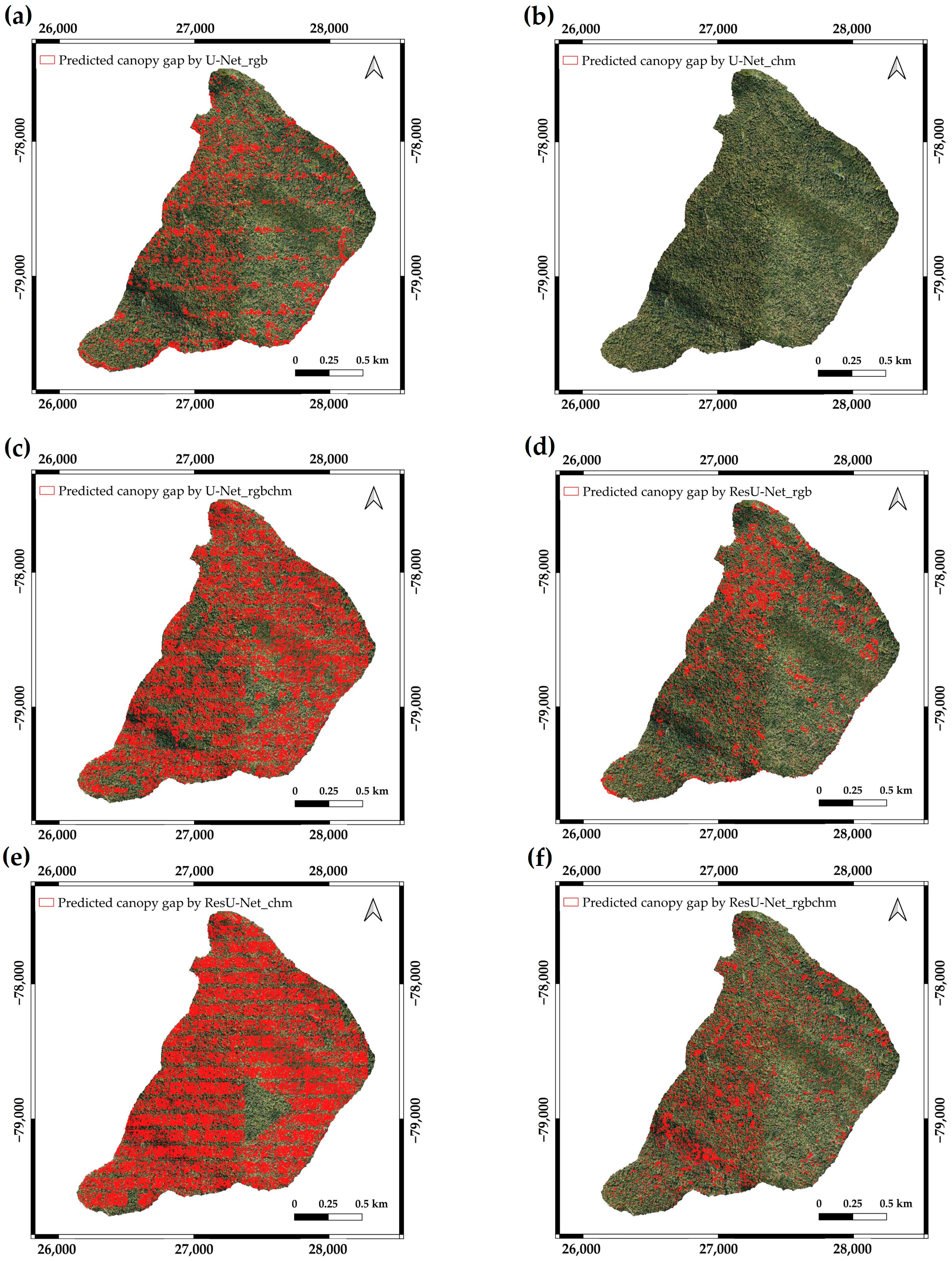

3.2. Accuracy of Canopy Gap Detection Using Deep Learning Models and UAV Remote Sensing Datasets of Sub-Compartment 16AB

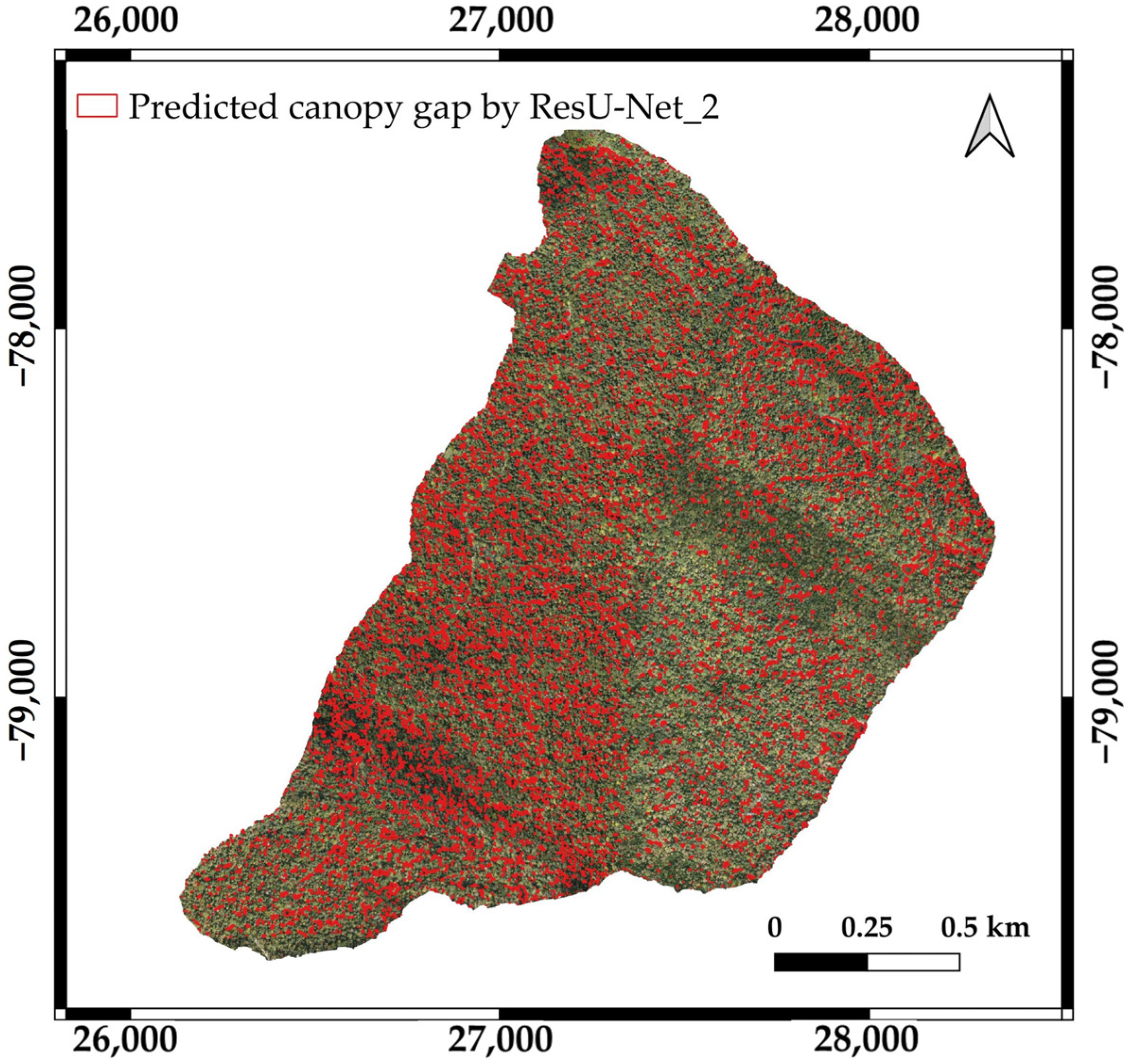

3.3. Performance of the Transfer Models Compared with the ResU-Net Model for Detecting Canopy Gaps Resulting from Selection Harvesting Using Pre- and Post-Harvest UAV Imagery

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| Parameter | Value |
|---|---|
| Course overlap | 50% |
| Flying height | 600 m |
| Flying speed | 140.4 km/h |
| Pulse rate | 100 kHz |
| Scan angle | ±20° |
| Beam divergence | 0.16 mrad |
| Point density | 11.6 points/m² |
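
The laser scanning parameters in the table above imply a few derived survey quantities that help interpret the data. The sketch below computes them under stated assumptions: flat terrain and a nadir-centred scan, "course overlap" read as side overlap between adjacent flight lines, beam divergence read as the full divergence angle, and points spread evenly for the nominal spacing. These derived figures are illustrative and are not reported by the authors; the constant and function names are hypothetical.

```python
import math

# Acquisition parameters transcribed from the table above.
FLYING_HEIGHT_M = 600.0
FLYING_SPEED_KMH = 140.4
HALF_SCAN_ANGLE_DEG = 20.0      # scan angle of +/-20 degrees
COURSE_OVERLAP = 0.50           # assumed: side overlap between adjacent flight lines
BEAM_DIVERGENCE_MRAD = 0.16     # assumed: full divergence angle
POINT_DENSITY_PER_M2 = 11.6


def kmh_to_ms(speed_kmh: float) -> float:
    return speed_kmh / 3.6


def swath_width_m(height_m: float, half_angle_deg: float) -> float:
    # Flat terrain, nadir-centred scan: W = 2 * h * tan(theta).
    return 2.0 * height_m * math.tan(math.radians(half_angle_deg))


def line_spacing_m(swath_m: float, overlap: float) -> float:
    # Distance between adjacent flight-line centres for a given fractional overlap.
    return swath_m * (1.0 - overlap)


def mean_point_spacing_m(density_per_m2: float) -> float:
    # Nominal spacing if points were spread evenly on a square grid.
    return 1.0 / math.sqrt(density_per_m2)


def footprint_diameter_m(height_m: float, divergence_mrad: float) -> float:
    # Small-angle approximation of the laser footprint at nadir: D ~ h * gamma.
    return height_m * divergence_mrad * 1e-3


if __name__ == "__main__":
    swath = swath_width_m(FLYING_HEIGHT_M, HALF_SCAN_ANGLE_DEG)
    print(f"ground speed     {kmh_to_ms(FLYING_SPEED_KMH):.1f} m/s")
    print(f"swath width      {swath:.1f} m")
    print(f"line spacing     {line_spacing_m(swath, COURSE_OVERLAP):.1f} m")
    print(f"point spacing    {mean_point_spacing_m(POINT_DENSITY_PER_M2):.2f} m")
    print(f"nadir footprint  {footprint_diameter_m(FLYING_HEIGHT_M, BEAM_DIVERGENCE_MRAD):.3f} m")
```

With these inputs the sketch gives a ground speed of 39 m/s, a swath of about 437 m, a line spacing of about 218 m, a nominal point spacing of about 0.29 m, and a nadir footprint of about 0.10 m. Real values vary with terrain relief and scan pattern.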

| Deep Learning Models | UAV Datasets | F1-Score | Precision | Recall | OA | IoU |
|---|---|---|---|---|---|---|
| U-Net | RGB | 0.00 | 0.01 | 0.00 | 0.93 | 0.00 |
| | CHM | 0.52 | 0.55 | 0.50 | 0.94 | 0.36 |
| | RGB_CHM | 0.48 | 0.69 | 0.37 | 0.94 | 0.31 |
| ResU-Net_1 | RGB | 0.64 | 0.70 | 0.60 | 0.95 | 0.47 |
| | CHM | 0.53 | 0.52 | 0.55 | 0.93 | 0.36 |
| | RGB_CHM | 0.70 | 0.74 | 0.67 | 0.96 | 0.54 |
| ResU-Net_2 | RGB | 0.77 | 0.79 | 0.74 | 0.97 | 0.62 |
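
Since F1 = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN), the two scores are linked by IoU = F1/(2 - F1) whenever both are computed from the same pooled pixel counts, and F1 is the harmonic mean 2PR/(P + R) of precision and recall. The short check below applies these identities to the rows of the table above as a consistency test. The rows are transcribed from the table; the helper names are hypothetical, and comparing against a 0.01 tolerance is an assumption matching the two-decimal rounding of the published values.

```python
from typing import List, Tuple

# (model, input, F1-score, precision, recall, IoU) transcribed from the table above.
ROWS: List[Tuple[str, str, float, float, float, float]] = [
    ("U-Net", "RGB", 0.00, 0.01, 0.00, 0.00),
    ("U-Net", "CHM", 0.52, 0.55, 0.50, 0.36),
    ("U-Net", "RGB_CHM", 0.48, 0.69, 0.37, 0.31),
    ("ResU-Net_1", "RGB", 0.64, 0.70, 0.60, 0.47),
    ("ResU-Net_1", "CHM", 0.53, 0.52, 0.55, 0.36),
    ("ResU-Net_1", "RGB_CHM", 0.70, 0.74, 0.67, 0.54),
    ("ResU-Net_2", "RGB", 0.77, 0.79, 0.74, 0.62),
]


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def iou_from_f1(f1: float) -> float:
    """IoU implied by an F1-score computed from the same pixel counts."""
    return f1 / (2 - f1)


def max_discrepancies(rows) -> Tuple[float, float]:
    """Largest gap between reported and implied F1 and IoU across all rows."""
    f1_gap = max(abs(f1_from_pr(p, r) - f1) for _, _, f1, p, r, _ in rows)
    iou_gap = max(abs(iou_from_f1(f1) - iou) for _, _, f1, _, _, iou in rows)
    return f1_gap, iou_gap


if __name__ == "__main__":
    f1_gap, iou_gap = max_discrepancies(ROWS)
    print(f"max |F1 - 2PR/(P+R)| = {f1_gap:.4f}")
    print(f"max |IoU - F1/(2-F1)| = {iou_gap:.4f}")
```

For this table both discrepancies stay below 0.01, so the reported F1-score, precision, recall, and IoU values are mutually consistent up to rounding. The same check can be pointed at any other results table by replacing `ROWS`.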

| Deep Learning Models | UAV Datasets | F1-Score | Precision | Recall | OA | IoU |
|---|---|---|---|---|---|---|
| U-Net | RGB | 0.63 | 0.61 | 0.66 | 0.93 | 0.46 |
| | CHM | 0.01 | 0.31 | 0.00 | 0.90 | 0.01 |
| | RGB_CHM | 0.44 | 0.67 | 0.33 | 0.92 | 0.28 |
| ResU-Net_1 | RGB | 0.70 | 0.69 | 0.71 | 0.94 | 0.54 |
| | CHM | 0.36 | 0.43 | 0.31 | 0.90 | 0.22 |
| | RGB_CHM | 0.72 | 0.68 | 0.77 | 0.94 | 0.56 |
| ResU-Net_2 | RGB | 0.79 | 0.77 | 0.81 | 0.96 | 0.66 |

| Model | Sub-Compartment | F1-Score | Precision | Recall | OA | IoU |
|---|---|---|---|---|---|---|
| Transfer model, before extended training | 42B | 0.37 | 0.57 | 0.28 | 0.82 | 0.23 |
| | 16AB | 0.47 | 0.54 | 0.42 | 0.81 | 0.31 |
| Transfer model, after extended training | 42B | 0.54 | 0.60 | 0.49 | 0.83 | 0.37 |
| | 16AB | 0.56 | 0.56 | 0.56 | 0.83 | 0.39 |
| ResU-Net | | 0.45 | 0.61 | 0.35 | 0.83 | 0.29 |
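
The effect of extended training can be read off the table above as simple differences. The sketch below computes the change in F1-score and IoU for each transfer model (keyed by sub-compartment, 42B and 16AB) and each model's margin over the ResU-Net. The values are transcribed from the table and already rounded to two decimals, so the differences are approximate to about ±0.01; the dictionary and function names are hypothetical.

```python
from typing import Dict

Scores = Dict[str, float]

# F1-score and IoU transcribed from the table above (rounded to two decimals).
BEFORE: Dict[str, Scores] = {"42B": {"f1": 0.37, "iou": 0.23}, "16AB": {"f1": 0.47, "iou": 0.31}}
AFTER: Dict[str, Scores] = {"42B": {"f1": 0.54, "iou": 0.37}, "16AB": {"f1": 0.56, "iou": 0.39}}
RESUNET: Scores = {"f1": 0.45, "iou": 0.29}


def gains(before: Dict[str, Scores], after: Dict[str, Scores]) -> Dict[str, Scores]:
    """Absolute change per transfer model and metric, rounded back to two decimals."""
    return {
        site: {m: round(after[site][m] - before[site][m], 2) for m in before[site]}
        for site in before
    }


def margin_over(models: Dict[str, Scores], baseline: Scores) -> Dict[str, Scores]:
    """Difference between each transfer model and a single baseline model."""
    return {
        site: {m: round(scores[m] - baseline[m], 2) for m in baseline}
        for site, scores in models.items()
    }


if __name__ == "__main__":
    print("gain from extended training:", gains(BEFORE, AFTER))
    print("after vs ResU-Net:", margin_over(AFTER, RESUNET))
    print("before vs ResU-Net:", margin_over(BEFORE, RESUNET))
```

Run as written, extended training raises the F1-score by 0.17 (42B) and 0.09 (16AB), after which both transfer models exceed the ResU-Net by 0.09 to 0.11 in F1-score. Before extended training, the 42B model's F1-score (0.37) was below the ResU-Net's (0.45).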

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Htun, N.M.; Owari, T.; Tsuyuki, S.; Hiroshima, T. Detecting Canopy Gaps in Uneven-Aged Mixed Forests through the Combined Use of Unmanned Aerial Vehicle Imagery and Deep Learning. Drones 2024, 8, 484. https://doi.org/10.3390/drones8090484
