Abstract

The use of computer vision in industry has become fundamental, playing an essential role in areas such as quality control and inspection, object recognition and tracking, and automation. Despite this growth, robotic cell systems that employ computer vision face significant challenges, such as a lack of flexibility to adapt to different tasks or types of objects, which necessitates extensive adjustments each time a change is required. This highlights the importance of developing systems that can be easily reused and reconfigured. This paper introduces a versatile and adaptable framework that exploits computer vision and the Robot Operating System (ROS) to facilitate pick-and-place operations within robotic cells, offering a comprehensive solution for handling and sorting random-flow objects on conveyor belts. Designed to be easily configured and reconfigured, the framework accommodates ROS-compatible robotic arms and 3D vision systems, ensuring adaptability to different technological requirements and reducing deployment costs. Experimental results demonstrate the framework's high precision and accuracy in manipulating and sorting the tested objects. Thus, the framework enhances the efficiency and flexibility of industrial robotic systems, making object manipulation more adaptable to unpredictable manufacturing environments.

1. Introduction

Industrial robots have been a constant presence in the manufacturing industry since the onset of the third industrial revolution, and their use has increased over the years, driven by advancements in computing, electronics, automation, and cyber–physical systems [1]. Among these industrial robots, robotic arms stand out for their ability to manipulate products tirelessly and at higher speeds than human workers. This capacity has made them ideal for pick-and-place tasks on manufacturing lines. In Industry 4.0, industrial robots are becoming increasingly autonomous, flexible, and collaborative, with the aim of working safely alongside humans and learning from them [2]. They play a crucial role in modern manufacturing, in line with Industry 4.0's focus on autonomous production methods powered by intelligent robots that prioritise safety, flexibility, versatility, and collaboration.

Currently, many industrial pick-and-place systems rely on preprogrammed trajectories to reach fixed target poses, which limits their adaptability to changes in the production line. These systems often require manual intervention or reprogramming to accommodate variations in product position and orientation. Indeed, robotic arms perform excellently on preprogrammed tasks within structured manufacturing environments; however, slight changes in product position or orientation may be enough to prevent a robotic arm from completing its predefined routine [3]. This lack of flexibility leads to inefficiencies, reduced productivity, and increased downtime in manufacturing processes. Random-flow pick-and-place systems, for instance, operate in unpredictable environments and require the flexibility to adapt to changing targets while achieving the required performance [4]. To address these challenges, depth sensors, cameras, and other sensors that allow robots to perceive the manufacturing environment have become indispensable [5,6].

Computer vision has gained popularity in industrial applications over the last few decades because it enables autonomous systems that rely on vision sensors to extract information from the workspace [5,7]. Providing vision capabilities to industrial robots opens new possibilities for automation, quality control, inspection, and object detection [8,9]. Additionally, perceiving the environment enables these robots to adapt to minor variations in their workspace, which may avoid the need for reprogramming [10,11]. Nevertheless, although computer vision is already deployed in industry, there is still room for new vision-based applications that improve aspects of pick-and-place robotic systems such as real-time performance, generalization, integration, and robustness.

In industrial applications, robot manufacturers commonly provide their own toolkits for robotic system development. Generally, such a toolkit includes controllers, teach pendants, and software platforms for robot programming and simulation. Consequently, the deployment process can vary significantly depending on the selected robot model and brand. In contrast, the Robot Operating System (ROS) and ROS-Industrial (ROS-I) have emerged as influential platforms for advancing automation [12]. ROS, known for its versatility and robustness, provides a framework for developing, simulating, and deploying robotic applications regardless of the robot's type or brand. ROS-I, an extension of ROS, is specifically designed to meet the needs of industrial environments and supports a range of robot models from several major industrial robot manufacturers. In this paper, we combine the capabilities of ROS and ROS-I with computer vision to move towards more adaptable and intelligent manufacturing processes.

This paper addresses challenges encountered in industrial settings related to reliable product localization and autonomous displacement, particularly in applications requiring rapid deployment. To this end, a ROS-based computer vision package is proposed. The package includes a toolkit designed to accelerate deployment; it is fully modular and easy to integrate with other ROS-compatible robotic cells. Furthermore, both the physical and digital layers of a minimal robotic cell were developed specifically for pick-and-place tasks. This robotic cell runs ROS Noetic (version 1.16.0) and is compatible with third-party ROS packages, making its digital side suitable for developing and testing robotic pick-and-place tasks. It relies on MoveIt for efficient path planning and on Rviz for bidirectional control and visualization of the cell's virtual model. Finally, the paper presents a pick-and-place experiment conducted with the physical/digital robotic cell to showcase the capabilities of the proposed computer vision package in scenarios where objects flow randomly.

2. Background and Related Works

2.1. Robot Operating System for Industrial Applications

Officially introduced to the public in 2009 by Willow Garage, ROS is a framework that provides hardware abstraction, device drivers, libraries, visualizers, message passing, and package management [12]. Its adaptability has led to wide adoption in robotic applications ranging from academic research to real-world industrial scenarios. For industrial automation, ROS-I was designed to fit the industry's specific demands, providing an open-source platform that simplifies the integration of advanced capabilities, such as computer vision and motion planning, into industrial robotics [13]. Its advantages include interoperability, scalability, flexibility, and collaboration. Several studies have reported the integration of ROS-I in manufacturing scenarios, covering applications such as collaborative robots, advanced path planning, flexible automation, and 3D perception. Moreover, integrating computer vision with ROS-I has opened avenues for more intuitive robot systems: with the ability to perceive their environment, robots can handle tasks with higher variability and complexity without extensive reprogramming [4,10,11]. In short, ROS and ROS-I have become important tools for advancing automation in the industrial sector. By offering versatility, scalability, and a community-driven platform, they help industrial robotic systems keep pace with advances in robotic technology.

2.2. Computer Vision for Pick-and-Place Applications

The development of robust methods to address robot grasping challenges has been extensively explored in the robotics field [14,15,16,17,18,19]. Although robot grasping can be split into multiple intricate parts, two aspects stand out in the analysis of object grasping: first, the use of sensors to acquire data from the environment, which provides robot perception; second, the algorithms that interpret and give meaning to the collected data. Combining perception with powerful algorithms enables higher levels of autonomy in pick-and-place robotic systems and expands the possibilities for manufacturing applications. Recent works show a wide variety of approaches to these challenges. For instance, some methods do not rely on visual information from the workspace, which can reduce the system's computational cost [20,21]. However, some applications demand more information about the workspace, requiring more capable equipment such as 2D and 3D cameras. With these cameras, robots gain the ability to perceive the environment visually and act according to object displacement. In this context, several works in the literature focus on processing and understanding the environment with computer vision algorithms. Recently, with notable advances in computational power, supervised machine learning techniques have been applied to learn characteristics of target objects such as colour, shape, texture, spatial position, and orientation [9,18,22,23,24,25]. Reinforcement Learning (RL) has gained particular attention since it offers, among other advantages, greater adaptability to dynamic environments, self-improvement, generalization, and reduced human intervention. Its potential has been demonstrated by many studies of industrial robots [20,26,27,28].

3. Methodology

The methodology employed was divided into four main subsections: the robotic cell description, the computer vision algorithm development, the ROS workspace structure, and the proposed random-flow pick-and-place system design. Such topics form the basis of the research, enabling the creation of flexible and adaptive robotic systems for efficient industrial automation. The following subsections provide detailed insights into each part, explaining the choices made and the rationale behind them.

3.1. Robotic Cell Description

3.1.1. Robotic Cell—Physical Layer

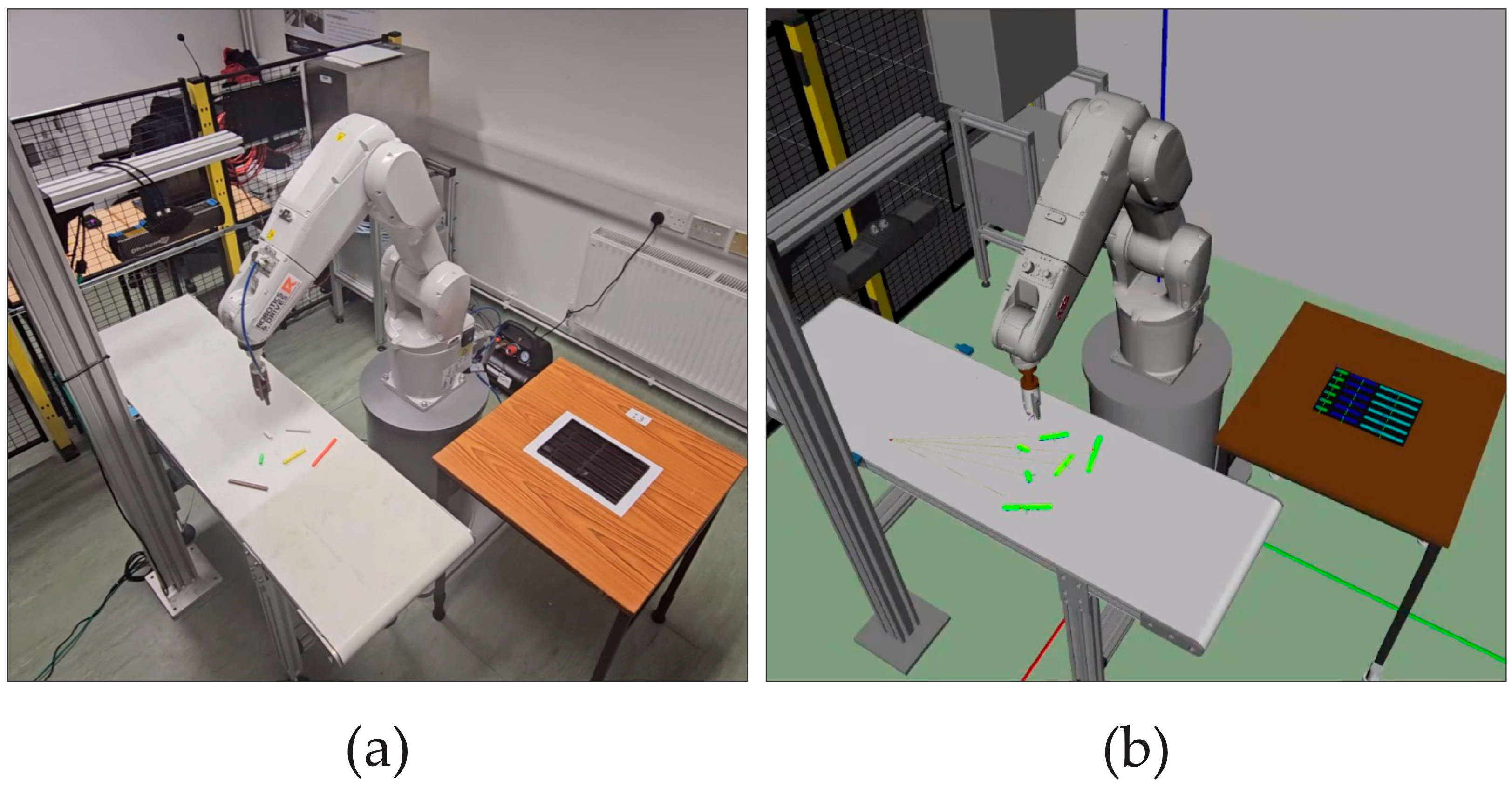

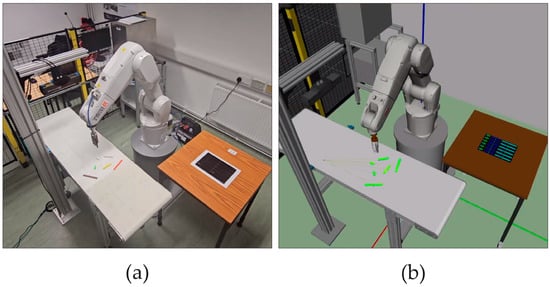

The robotic cell (Figure 1) was developed to support the execution of pick-and-place experiments with precision and efficiency. It comprises a range of components, each contributing to the system’s functionality:

Figure 1.

(a) Physical and (b) digital layers of the robotic cell.

- Robotic arm: The core of our robotic cell is the ABB IRB 1200-5/0.9 robotic arm, integrated with the IRC5 Compact controller. This model variant has a reach of 900 mm and a payload of up to 5 kg, offering the dexterity and precision required for the proposed pick-and-place tasks.

- Vision sensor: The robotic cell integrates a PhoXi 3D Scanner S from Photoneo for real-time part detection. This scanner provides accurate depth data, enabling the robot to interact with objects by acquiring point clouds from which their position and orientation are determined. The scanner offers a resolution of up to 3.2 MP and a scanning range of 384 to 520 mm, with an optimal scanning distance of 442 mm. Based on this optimal distance, the scanner was positioned 1370 mm above the floor and 430 mm above the conveyor belt surface.

- End-effector: The GIMATIC PB-0013 pneumatic gripper, attached to the sixth joint of the robotic arm, grasps the detected parts on the conveyor belt. The gripper is actuated by compressed air through a 3/2-way valve. It is a single-acting gripper with a spring-opening mechanism: it closes when pressure is applied to its cavity and opens by spring return when the pressure is released.

- Extra components: Some minor components are also relevant to the functioning of the pick-and-place system. First, to transport products within the cell, we implemented a conveyor belt system (RDS, Mullingar, Ireland) that ensures a continuous flow of objects, creating a dynamic environment for our pick-and-place experiments. The conveyor belt is 0.42 m wide and 1.4 m long and operates at a constant speed of 0.24 m/s. Second, a stationary table within the cell defines the place area where the robot must deposit the parts.

Together, these components form the robotic cell designed for executing pick-and-place tasks in dynamic and unstructured environments based on the proposed computer vision ROS package.

3.1.2. Robotic Cell—Digital Layer

In addition to the physical components of the robotic cell, a significant aspect of the research is the creation of its digital layer. This layer operates as a virtual counterpart of the physical layer, enabling real-time simulation, monitoring, and optimization of the automated processes.

The physical/digital interface was made possible by integrating ROS-I, a set of packages that extends ROS capabilities to industrial robots. ROS-I supports a range of industrial robot models from leading manufacturers, including ABB, and provides description packages for the supported models, enabling simulations based on the robot's exact physical properties. Additionally, ROS-I includes robot drivers that communicate over Transmission Control Protocol/Internet Protocol (TCP/IP) sockets, providing a robust interface between the physical robot and ROS nodes. This integration allows the digital layer either to mirror the behaviour of the physical robot in real time or to run offline simulations for development purposes. Figure 1a shows the physical layer of the robotic cell, whilst Figure 1b shows its digital version running in Rviz during the execution of the pick-and-place task.

Since the digital layer is the virtual counterpart of the physical one, every component in the cell has a digital representation in the virtual environment. In ROS, the standard way to create digital copies of physical system components is to describe them in Unified Robot Description Format (URDF) files. URDF is an XML format for describing robots and the surrounding items needed to complete the robot's environment [29]. A URDF file has a root 'Robot' tag containing two kinds of nested tags, 'Link' and 'Joint', which describe the parts of the robot and the connections between them, respectively (in the file itself these tags are written in lowercase, e.g., `<link>`). For illustration, consider a 6-axis robotic arm: it contains seven parts (a base and six moving links) connected in sequence to form the complete robot structure. Each part corresponds to a 'Link', while each connection between consecutive parts is a 'Joint', giving six joints in total. A comprehensive guide to creating URDF files, including detailed examples and best practices, is available on the official ROS website [30]; these resources are essential for understanding how URDF files, which are crucial for accurately representing robot models in ROS-based applications, are structured and developed.

Inspecting the 'Link' tag reveals two further nested tags. The first, 'Visual', contains a mesh that faithfully reproduces the physical component. The second, 'Collision', holds a lower-resolution mesh slightly larger than the visual one. The collision mesh is necessary because it defines the possible collision points within the cell, which are taken into account during path planning.
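To make the link/joint hierarchy concrete, the sketch below uses Python's standard `xml.etree.ElementTree` to generate a minimal URDF for a generic serial arm, in which each `link` carries a `visual` and a `collision` mesh and consecutive links are chained by `joint` elements. This is a hypothetical illustration only: the robot name, the mesh paths under `package://example_description/...`, the joint offsets, and the joint limits are placeholders, and a real cell would use the description package that ROS-I provides for the robot model.

```python
import xml.etree.ElementTree as ET


def serial_arm_urdf(name: str, n_joints: int,
                    mesh_root: str = "package://example_description/meshes") -> str:
    """Build a URDF string for a generic serial arm with n_joints revolute joints.

    The chain has n_joints + 1 links (a base plus one link per joint). Mesh paths,
    joint offsets and limits are placeholder values, not data for a real robot.
    """
    robot = ET.Element("robot", name=name)
    for i in range(n_joints + 1):
        link = ET.SubElement(robot, "link", name=f"link_{i}")
        # 'visual': detailed mesh used for display (e.g., in Rviz)
        visual_geom = ET.SubElement(ET.SubElement(link, "visual"), "geometry")
        ET.SubElement(visual_geom, "mesh", filename=f"{mesh_root}/visual/link_{i}.stl")
        # 'collision': simplified, slightly enlarged mesh used for collision checking
        collision_geom = ET.SubElement(ET.SubElement(link, "collision"), "geometry")
        ET.SubElement(collision_geom, "mesh", filename=f"{mesh_root}/collision/link_{i}.stl")
    for i in range(1, n_joints + 1):
        # each joint connects link_{i-1} (parent) to link_{i} (child)
        joint = ET.SubElement(robot, "joint", name=f"joint_{i}", type="revolute")
        ET.SubElement(joint, "parent", link=f"link_{i - 1}")
        ET.SubElement(joint, "child", link=f"link_{i}")
        ET.SubElement(joint, "origin", xyz="0 0 0.1", rpy="0 0 0")
        ET.SubElement(joint, "axis", xyz="0 0 1")
        ET.SubElement(joint, "limit", lower="-3.14", upper="3.14",
                      effort="100", velocity="2.0")
    return ET.tostring(robot, encoding="unicode")


if __name__ == "__main__":
    print(serial_arm_urdf("six_axis_example", 6))
```

For a six-axis arm, the generated file contains seven `link` elements and six `joint` elements, matching the structure described above.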

3.1.3. Motion Planning Definition with MoveIt

Effective motion control is one of the most critical aspects of working with robots; it must be well structured to enable precise and efficient robotic arm movements during complex tasks such as pick-and-place operations involving objects on conveyors. MoveIt is a robust motion planning framework for ROS that provides tools and algorithms for motion planning, collision checking, and control. For motion planning, MoveIt works with several types of planners, such as the Open Motion Planning Library (OMPL) [31], Stochastic Trajectory Optimization for Motion Planning (STOMP) [32], and Covariant Hamiltonian Optimization for Motion Planning (CHOMP) [33]. For all the tests conducted in this research, the OMPL planner was used, since its capabilities have been demonstrated over the years in many related works [34,35]. Using MoveIt together with the OMPL planner enables real-time robotic applications that require fast computation of the robotic arm's forward kinematics (the end-effector pose resulting from given joint angles) and inverse kinematics (the joint angles required to reach a target end-effector pose).
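The distinction between forward and inverse kinematics can be illustrated on a planar two-link arm. This is a deliberately simplified, hypothetical stand-in for the six-axis IRB 1200, whose kinematics MoveIt resolves through its kinematics solver plugins; the link lengths in the example are arbitrary.

```python
import math


def forward_kinematics(q1, q2, l1, l2):
    """Joint angles (rad) -> end-effector position (x, y) of a planar 2-link arm."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return x, y


def inverse_kinematics(x, y, l1, l2):
    """End-effector position (x, y) -> joint angles (rad), choosing the solution with q2 >= 0.

    Raises ValueError when the target lies outside the reachable annulus.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)  # law of cosines
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target outside the reachable workspace")
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2


if __name__ == "__main__":
    joints = inverse_kinematics(0.5, 0.2, 0.4, 0.3)
    print(joints, forward_kinematics(*joints, 0.4, 0.3))
```

Feeding the inverse-kinematics result back into the forward kinematics recovers the original target, which is the consistency a motion planner relies on when it converts a target pose into joint commands.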

3.2. Computer Vision Algorithm

To address the challenges listed previously, an object detection algorithm was developed that operates on point clouds, a common data structure in 3D imaging. The point cloud extraction process is discussed in detail in Section 3.3, which describes the developed ROS workspace.

3.2.1. DBSCAN for Shape Identification

The primary strength of this algorithm lies in its capacity to extract object shapes. When possible objects are detected on the conveyor belt, the 3D sensor is triggered to obtain the raw point cloud of the scene. This point cloud is processed to remove unwanted points, such as those belonging to the conveyor surface on which the objects travel. After this filtering stage, clustering begins: nearby points are grouped to represent the possible objects in the scanned scene. This clustering phase relies on Density-Based Spatial Clustering of Applications with Noise (DBSCAN), a method known for its effectiveness in spatial data analysis [36,37]. DBSCAN relies on two hyperparameters:

- ε (epsilon): the maximum distance (radius) between two points for them to be considered neighbours.

- MinPts (minimum number of points): the minimum number of points that must lie within distance ε of a point for it to be classified as a core point.

Mathematically, for a given point $p$ belonging to a point cloud $P$ and a distance $\varepsilon$, the ε-neighbourhood of $p$ is defined as:

$$N_\varepsilon(p) = \{\, q \in P \mid d(p, q) \le \varepsilon \,\} \qquad (1)$$

where $d(p, q)$ is the Euclidean distance between points $p$ and $q$ in 3D space, calculated as:

$$d(p, q) = \sqrt{(p_x - q_x)^2 + (p_y - q_y)^2 + (p_z - q_z)^2} \qquad (2)$$

From Equation (1), all the neighbours of $p$ are found. Based on this information, $p$ is classified as a core, border, or noise point. A point $p$ is a core point if there are at least MinPts points (including itself) within its ε-neighbourhood, i.e., $|N_\varepsilon(p)| \ge \text{MinPts}$. A point $p$ is a border point if two conditions are fulfilled. First, $p$ is not a core point, because its number of neighbours is less than MinPts, i.e., $|N_\varepsilon(p)| < \text{MinPts}$. Second, $p$ lies within the ε-neighbourhood of a core point $q$, i.e., $p \in N_\varepsilon(q)$ where $|N_\varepsilon(q)| \ge \text{MinPts}$. If a point is neither a core point nor a border point, it is a noise point. In other words, $p$ is a noise point if it does not have at least MinPts points in its ε-neighbourhood (so it is not a core point) and is not within the ε-neighbourhood of any core point (so it is not a border point). Mathematically, $p$ is noise if:

$$|N_\varepsilon(p)| < \text{MinPts} \;\land\; \nexists\, q \in P : \big( |N_\varepsilon(q)| \ge \text{MinPts} \,\land\, p \in N_\varepsilon(q) \big) \qquad (3)$$

With these definitions, DBSCAN begins by labelling all points in the point cloud $P$ as unvisited and then iterates through each point $p \in P$. If $p$ has already been visited, it skips to the next point. Otherwise, it marks $p$ as visited and retrieves its ε-neighbourhood $N_\varepsilon(p)$. If $p$ is a core point, the algorithm creates a new cluster $C$ and adds $p$ to it; if not, $p$ is marked as noise, a label that may change later if $p$ turns out to lie within the ε-neighbourhood of a different core point. The new cluster $C$ is then expanded: for each point $q$ in the ε-neighbourhood of a core point of $C$, if $q$ is unvisited, the algorithm marks it as visited and retrieves $N_\varepsilon(q)$. If $q$ is itself a core point, all points in $N_\varepsilon(q)$ are added to $C$ and considered for further expansion; if $q$ is not a core point but lies within the ε-neighbourhood of a core point, it is a border point and is added to $C$ without further expansion. Expansion continues until no new points can be reached, and the algorithm terminates once every point has been processed.

At the end of the clustering process, each set of related points that satisfies the rules above defines a cluster $C$. A point cloud can contain multiple clusters, each of which is labelled as a potential target object. Therefore, given a set of clusters $\{C_1, C_2, \ldots, C_k\}$, each cluster is non-empty ($C_i \neq \emptyset$), clusters are disjoint ($C_i \cap C_j = \emptyset$ for $i \neq j$), and:

$$\bigcup_{i=1}^{k} C_i \subseteq P \qquad (4)$$

where $P$ is the current point cloud. Equation (4) states that every point in any cluster $C_i$ belongs to $P$, although $P$ may also include points that belong to no cluster (e.g., noise points).

Notably, the algorithm can be tuned through its parameters: users can modify (1) the ε value, which sets the neighbourhood radius, and (2) MinPts, the number of points needed for a region to count as dense. This tunability makes the algorithm adaptable to many different applications.
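The filtering and clustering steps can be sketched in NumPy as follows. How the conveyor surface is removed is not specified here, so the first function assumes one common option: fitting the dominant plane with RANSAC and discarding its inliers (the threshold and iteration count are illustrative, and coordinates are assumed to be in metres). The second function is a brute-force DBSCAN that follows the procedure above, with `eps` and `min_pts` standing for ε and MinPts; it is O(n²) and meant for illustration, whereas libraries such as Open3D (`PointCloud.cluster_dbscan`) and scikit-learn (`sklearn.cluster.DBSCAN`) provide optimised implementations. Neither function should be read as the package's actual code.

```python
import numpy as np

NOISE = -1


def remove_dominant_plane(points, distance_threshold=0.002, iterations=200, seed=0):
    """Fit the dominant plane (assumed to be the belt) with RANSAC and drop its inliers.

    points: (n, 3) array in metres. Returns the points farther than
    distance_threshold from the best plane found.
    """
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(points), dtype=bool)
    for _ in range(iterations):
        a, b, c = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(b - a, c - a)
        length = np.linalg.norm(normal)
        if length < 1e-12:
            continue  # collinear sample: no unique plane
        normal /= length
        inliers = np.abs((points - a) @ normal) < distance_threshold
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    return points[~best_inliers]


def region_query(points, idx, eps):
    """Indices of all points within Euclidean distance eps of points[idx] (idx included)."""
    distances = np.linalg.norm(points - points[idx], axis=1)
    return np.flatnonzero(distances <= eps)


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = np.full(len(points), NOISE, dtype=int)
    visited = np.zeros(len(points), dtype=bool)
    cluster_id = 0
    for p in range(len(points)):
        if visited[p]:
            continue
        visited[p] = True
        neighbours = region_query(points, p, eps)
        if len(neighbours) < min_pts:
            continue  # provisionally noise; may later become a border point
        labels[p] = cluster_id  # p is a core point: start a new cluster
        seeds = list(neighbours)
        i = 0
        while i < len(seeds):
            q = seeds[i]
            i += 1
            if labels[q] == NOISE:
                labels[q] = cluster_id  # unassigned point joins the cluster (core or border)
            if visited[q]:
                continue
            visited[q] = True
            q_neighbours = region_query(points, q, eps)
            if len(q_neighbours) >= min_pts:
                seeds.extend(q_neighbours)  # q is a core point: keep expanding
        cluster_id += 1
    return labels
```

In a pipeline, the output of `remove_dominant_plane` would be passed to `dbscan`, and each non-noise label would then correspond to one candidate object.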

3.2.2. PCA for Object Centroid and Orientation Extraction

In addition to detecting shapes, the algorithm provides information on the orientation of objects: by identifying the longest and shortest dimensions of an object, it determines how the object is positioned. This orientation analysis is driven by Principal Component Analysis (PCA), a statistical procedure that uses an orthogonal transformation to convert a set of correlated variables into a set of linearly uncorrelated variables called principal components [38]. PCA is best known for dimensionality reduction; however, it can also be applied to compute object orientation by extracting the centroid and the eigenvalues and eigenvectors of each object's point cloud.

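Suppose we have a cluster $C$ with $n$ points representing the object in 3D space. Each point can be represented as a vector $\mathbf{p}_i = [x_i, y_i, z_i]^T$, and $X$ is the $n \times 3$ matrix whose $i$-th row is $\mathbf{p}_i^T$. The first step is to calculate the centroid $\bar{\mathbf{p}}$ as the mean of the points along each dimension:

$$\bar{\mathbf{p}} = \frac{1}{n} \sum_{i=1}^{n} \mathbf{p}_i \qquad (5)$$

The cluster is translated to the origin by subtracting the centroid from each of its points, resulting in the centred matrix $\tilde{X}$, whose $i$-th row is $(\mathbf{p}_i - \bar{\mathbf{p}})^T$. $\tilde{X}$ is then used to compute the $3 \times 3$ covariance matrix $\Sigma$:

$$\Sigma = \frac{1}{n - 1} \tilde{X}^{*} \tilde{X} \qquad (6)$$

where $\tilde{X}^{*}$ denotes the conjugate transpose of the centred cluster (for real-valued coordinates, simply the transpose $\tilde{X}^{T}$). The principal components are then determined from the eigendecomposition:

$$\Sigma \mathbf{v}_j = \lambda_j \mathbf{v}_j, \qquad j = 1, 2, 3, \quad \lambda_1 \ge \lambda_2 \ge \lambda_3 \qquad (7)$$

where $\mathbf{v}_j$ are the eigenvectors and $\lambda_j$ their corresponding eigenvalues. The orientation of the object is given by the eigenvectors: the first principal component $\mathbf{v}_1$ (associated with the largest eigenvalue $\lambda_1$) points along the longest dimension of the object, while the orthogonal components associated with smaller eigenvalues correspond to its shorter dimensions.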

Through PCA, the centroid, eigenvectors, and eigenvalues of every object in the scene can be extracted. These features allow the algorithm to perform both object detection and spatial orientation estimation, which are essential for applications such as robotic manipulation and advanced scene analysis.
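A minimal NumPy sketch of the centroid, covariance, and eigendecomposition steps follows. The helper `yaw_deg` is a hypothetical addition: it assumes that the point-cloud frame has its z-axis normal to the belt, and it folds the in-plane angle into [0°, 180°) because an eigenvector and its negation describe the same axis.

```python
import numpy as np


def pca_pose(cluster):
    """Centroid, eigenvalues (descending) and eigenvectors (as columns) of an (n, 3) cluster."""
    centroid = cluster.mean(axis=0)                   # mean of the points per dimension
    centred = cluster - centroid                      # translate the cluster to the origin
    covariance = centred.T @ centred / (len(cluster) - 1)
    eigvals, eigvecs = np.linalg.eigh(covariance)     # symmetric matrix: ascending eigenvalues
    order = np.argsort(eigvals)[::-1]                 # largest eigenvalue first
    return centroid, eigvals[order], eigvecs[:, order]


def yaw_deg(principal_axis):
    """Angle of the axis projected onto the x-y plane, folded into [0, 180) degrees."""
    return float(np.degrees(np.arctan2(principal_axis[1], principal_axis[0])) % 180.0)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    bar = rng.uniform([-35.0, -4.0, -4.0], [35.0, 4.0, 4.0], size=(2000, 3))  # synthetic, mm
    centroid, eigvals, eigvecs = pca_pose(bar)
    print(centroid, eigvals, yaw_deg(eigvecs[:, 0]))
```

Because of the 180° folding, an axis very close to 0° may be reported as a value just below 180°; downstream comparisons should therefore measure angular differences modulo 180°.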

3.2.3. Object Shape Matching and Sorting

The final stage of the computer vision algorithm integrates the object shape, position, and orientation extracted from the point cloud in the preceding steps. A point cloud registration is then performed to align the point clouds acquired in real time (source) with the desired object position (target). This alignment is based on the centroids of the source and target point clouds. The algorithm includes a built-in function to extract the point cloud of a predefined object stored in the system, which serves as the target position for the identified objects to be grasped.

Following the registration, the source and target point clouds are overlaid by translating the source point cloud so that its centroid coincides with the target centroid, which facilitates their comparison. From this comparison, the system extracts a series of shape-related features to assess the similarity between the source and target objects. Objects are classified as the same type if their similarity exceeds a predetermined percentage threshold specified in the configuration file. Once classified as the same type, the source object is assigned for placement at its corresponding target position.
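The exact shape features are not listed here, so the sketch below assumes a hypothetical one: approximate side lengths recovered from the PCA eigenvalues, using the idealisation that a uniformly filled box of side L has variance L²/12 along that side (real scans mostly capture the top surface, so this is only an approximation). These lengths are unchanged by the centroid translation and by rotation, so the sketch compares them directly. The similarity score and the `threshold_pct` argument, which stands in for the percentage threshold from the configuration file, are illustrative assumptions, not the package's actual metric.

```python
import numpy as np


def approx_side_lengths(points):
    """Approximate box side lengths (longest first) from PCA eigenvalues.

    Assumes points spread uniformly over a box, so variance = L**2 / 12 per side.
    """
    eigvals = np.linalg.eigvalsh(np.cov(points.T))[::-1]  # descending variances
    return np.sqrt(12.0 * np.clip(eigvals, 0.0, None))


def similarity_pct(source, target):
    """Hypothetical shape similarity in [0, 100]: mean ratio of corresponding side lengths."""
    s, t = approx_side_lengths(source), approx_side_lengths(target)
    return 100.0 * float(np.mean(np.minimum(s, t) / np.maximum(s, t)))


def same_type(source, target, threshold_pct):
    """True when the source object is considered the same type as the stored target."""
    return similarity_pct(source, target) >= threshold_pct
```

With a 95% threshold, this illustrative score separates bars that differ only in length, because the ratio of their longest sides pulls the mean well below the threshold.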

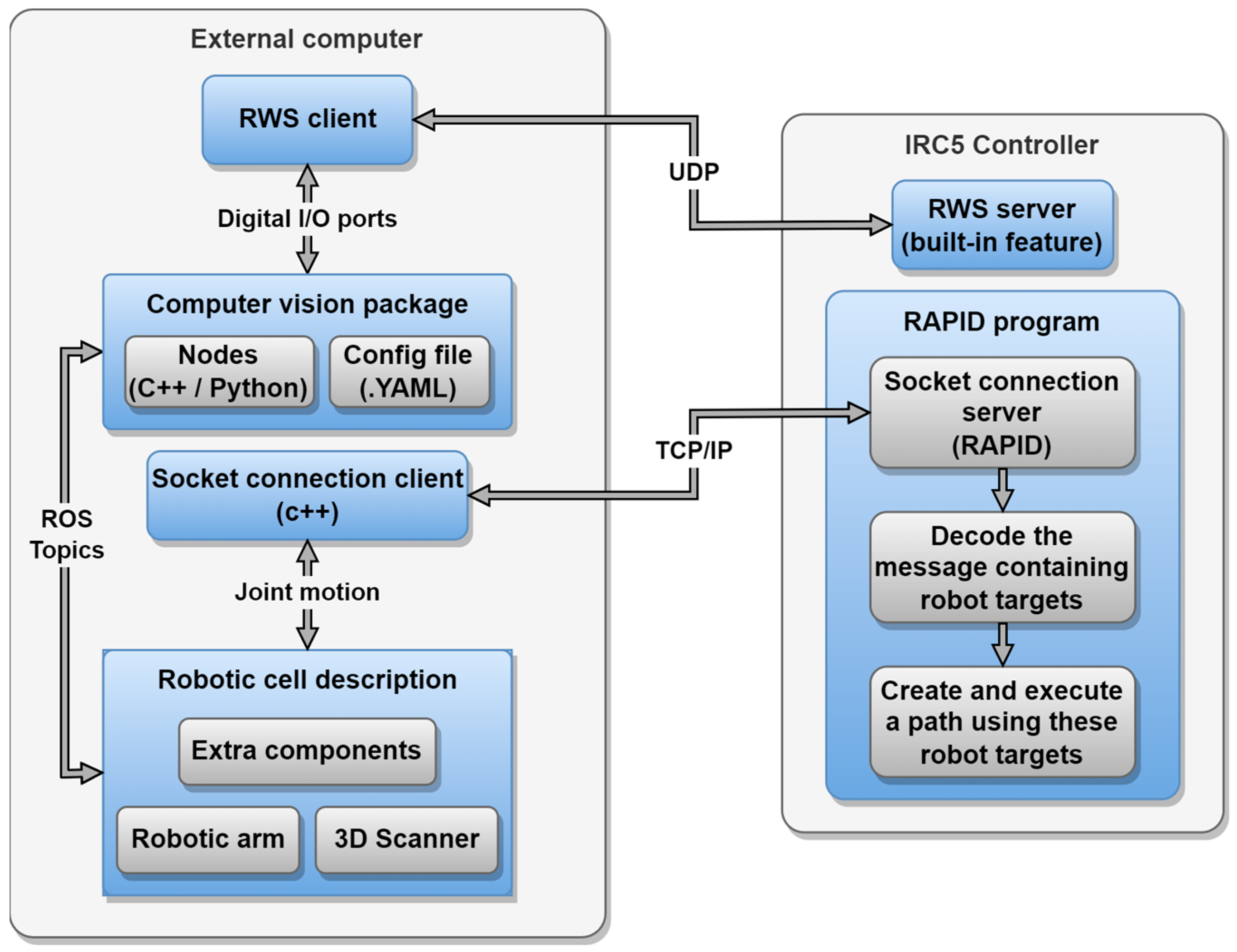

3.3. ROS Workspace Structure

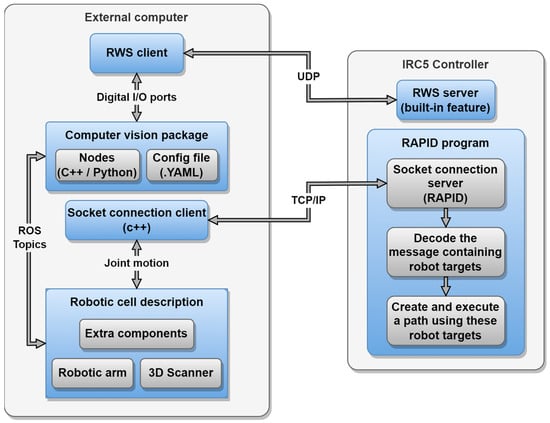

The ROS workspace developed for this research consists of a set of packages used together to carry out the pick-and-place process. Figure 2 presents an overview of the interactions between an external computer and the IRC5 controller (ABB Robotics, Västerås, Sweden). The workspace comprises several nodes that handle data processing, control commands, and sensor fusion, and it uses ROS topics and socket connections to provide seamless communication between the vision system and the robot, allowing real-time control.

Figure 2.

ROS workspace and components interconnection overview.

The IRC5 controller is the key component for controlling ABB robots and is programmed in RAPID, the native programming language of ABB Robotics, designed specifically for robot motion control, manipulation, and the execution of complex tasks. Although RAPID is not a complex language, it supports TCP/IP socket connections, which allow the controller to send data to and receive data from programs written in other languages. In this system, the socket connection is bidirectional: the IRC5 controller sends the robot's joint positions, which are used to update the digital layer in real time, while the external computer runs a ROS node that sends a set of targets describing the path to be executed by the physical robot.
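The message format exchanged between the RAPID program and the ROS node is not described, so the Python sketch below assumes a hypothetical newline-terminated, semicolon-separated text protocol: the controller sends `J;j1;...;j6` joint readings, and the computer replies with one `T;x;y;z;q1;q2;q3;q4` line per target followed by `END`. Only the framing logic is shown; the RAPID side would have to implement the matching format.

```python
import socket
from typing import List, Sequence


def read_line(sock: socket.socket) -> str:
    """Read bytes until a newline; TCP is a byte stream, so messages need explicit framing.

    Reading one byte at a time keeps the sketch simple but is slow for large messages.
    """
    buffer = bytearray()
    while not buffer.endswith(b"\n"):
        chunk = sock.recv(1)
        if not chunk:
            raise ConnectionError("controller closed the connection")
        buffer += chunk
    return buffer.decode("ascii")


def parse_joint_state(line: str) -> List[float]:
    """Decode a hypothetical 'J;j1;...;j6' message (joint angles in degrees)."""
    tag, *values = line.strip().split(";")
    if tag != "J" or len(values) != 6:
        raise ValueError(f"unexpected joint message: {line!r}")
    return [float(v) for v in values]


def encode_targets(targets: Sequence[Sequence[float]]) -> bytes:
    """Encode (x, y, z, q1, q2, q3, q4) targets as hypothetical 'T;...' lines ending with 'END'."""
    lines = ["T;" + ";".join(f"{v:.4f}" for v in target) for target in targets]
    return ("\n".join(lines + ["END"]) + "\n").encode("ascii")
```

In the ROS node, `read_line` and `parse_joint_state` would feed the joint positions published to the digital layer, while `encode_targets` would serialise the path computed by MoveIt before it is sent to the controller.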

The computer vision package is one of the main components of the system, since it acts as the bridge between the computer vision algorithm and the robotic arm. It contains a set of Python (version 3.8.10) and C++ (version 9.4.0) scripts that process point clouds and define the targets the robot must reach. Furthermore, the package uses Robot Web Services (RWS) to read and write digital I/O ports, which allows easy control of peripherals such as the conveyor belt and the end-effector's pneumatic valve. The package is modular and can be integrated into any robotic cell that meets the minimum requirements for random-flow pick-and-place tasks: a robotic arm equipped with a binary gripper, a conveyor belt for object delivery, and a 3D sensor for extracting object point clouds. Because each robotic cell has unique features, the package includes a comprehensive set of configuration files in YAML, a human-readable data serialization language. These files store application-specific information, ensuring smooth operation and compatibility with diverse cell configurations. They contain the names of all ROS topics and services used to send and receive data to and from the cell components, as well as specifications such as the robot model, the end-effector tool centre point, the coordinates of the designated place positions, and the type of camera/scanner employed for object detection and scanning. Notably, the designated place positions are fundamental to the package's functionality. They are defined by loading STL files into the system, with each mesh file's location and its corresponding position and orientation specified in the configuration file. Consequently, when the shape of a detected object matches a loaded target mesh, the robot can place the object at that target. The system also supports loading multiple predefined object types in various positions and orientations, which enhances its flexibility, as target positions can be designated anywhere within the robot's workspace.
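As an illustration of how such a file might be consumed, the sketch below validates a configuration that has already been loaded into a Python dictionary (for example with PyYAML's `yaml.safe_load`). Every key name, the quaternion convention, and the mesh paths are hypothetical; the actual schema of the package is not given here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical top-level keys mirroring the items listed in the text.
REQUIRED_KEYS = ("robot_model", "tool_centre_point", "camera_type", "topics", "place_targets")


@dataclass(frozen=True)
class PlaceTarget:
    object_type: str
    mesh_file: str                                  # STL describing the target shape
    position: Tuple[float, float, float]            # assumed: metres, robot base frame
    orientation: Tuple[float, float, float, float]  # assumed: quaternion (x, y, z, w)


def load_place_targets(config: Dict) -> List[PlaceTarget]:
    """Check the top-level keys and convert each place-target entry into a PlaceTarget."""
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError(f"missing configuration keys: {missing}")
    targets = []
    for entry in config["place_targets"]:
        if not entry["mesh_file"].lower().endswith(".stl"):
            raise ValueError(f"place target mesh must be an STL file: {entry['mesh_file']}")
        position, orientation = tuple(entry["position"]), tuple(entry["orientation"])
        if len(position) != 3 or len(orientation) != 4:
            raise ValueError(f"bad pose for {entry['object_type']}")
        targets.append(PlaceTarget(entry["object_type"], entry["mesh_file"],
                                   position, orientation))
    return targets
```

Validating the file once at start-up, rather than when a target is first needed, surfaces configuration mistakes before the robot moves.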

3.4. Random-Flow Pick-and-Place System Design

The proposed random-flow pick-and-place system is designed to handle objects efficiently in dynamic and unpredictable environments. The system incorporates adaptive algorithms that allow the robot to adjust its trajectory and grasp strategy in real time, responding to variations in object position and orientation. Safety measures, such as collision detection and emergency stops, are integrated to ensure the system's reliability in unstructured settings.

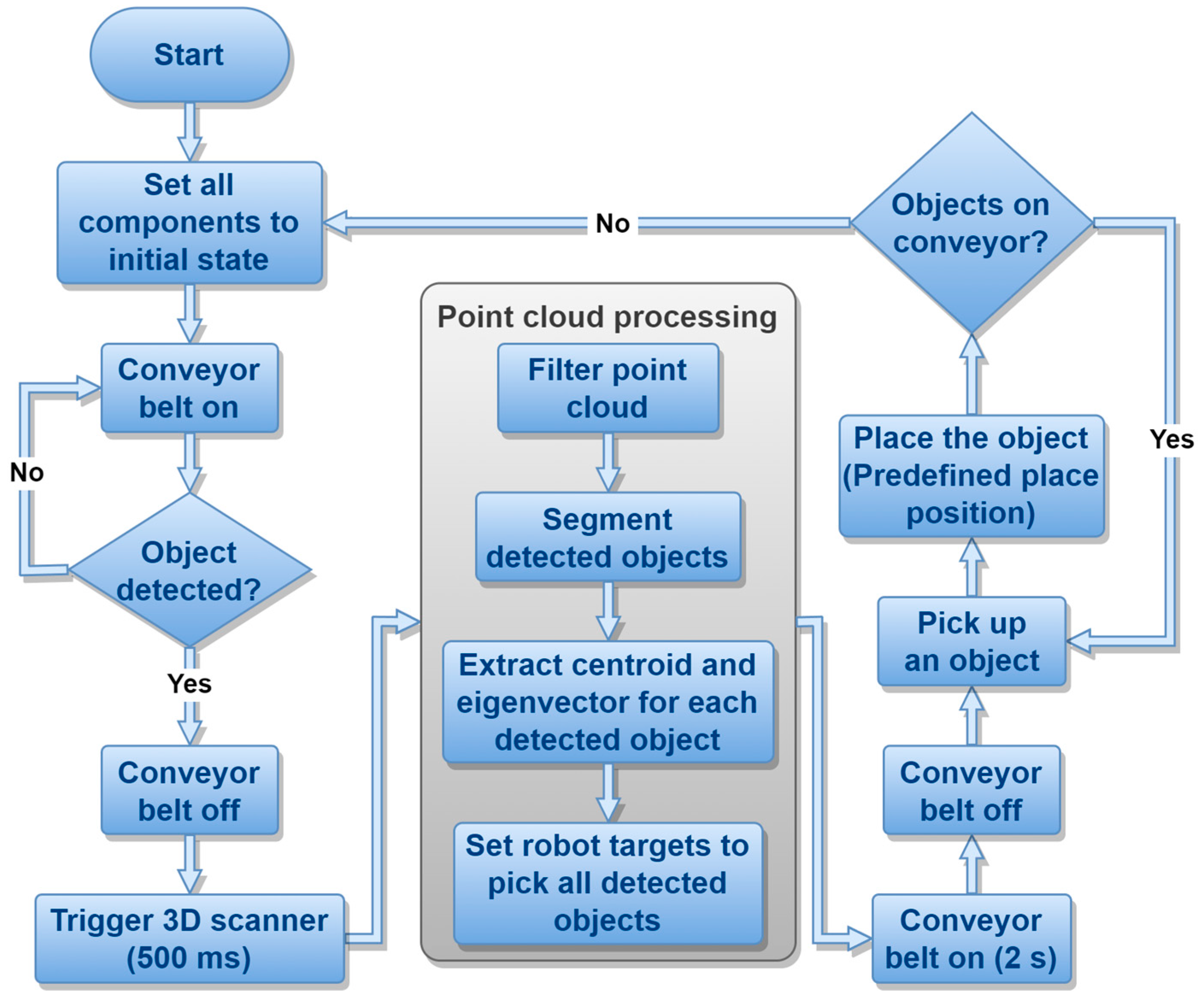

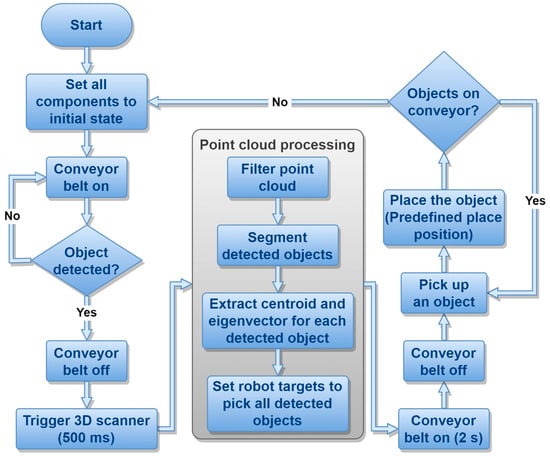

Figure 3 presents the flowchart of the proposed pick-and-place system. On start-up, the system executes an initialization phase in which all components are set to their default state, ensuring that the system is ready for operation; this is critical for the subsequent processes to function correctly. Once the system is initialized, the conveyor belt is activated. As objects move along the conveyor belt, the system enters a detection phase, in which a pair of retroreflective sensors is used to identify whether an object is present underneath the 3D scanner. If no object is detected, the system continues monitoring for items. When an object is detected, the conveyor belt stops and the 3D scanner is triggered to capture a point cloud of the object. This point cloud is then processed through the steps described in Section 3.2.

Figure 3.

Autonomous pick-and-place process flowchart.

With the processed data, the system prepares the robotic arm with specific targets for all detected objects, adjusting the arm's orientation to the best pick-up approach. The presence of objects on the conveyor belt is then re-evaluated. If the belt is clear, the system restarts the conveyor and keeps checking for new objects; otherwise, the robotic arm picks up the next identified object. During the pick-up phase, the conveyor belt remains stationary so that no movement compromises the precision of the robot's task. The robot then places the object at its predefined position.
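The control flow of the flowchart can be summarised as a polling loop. The sketch below assumes a hypothetical `cell` object exposing `initialise`, `set_conveyor`, `object_under_scanner`, `scan_and_locate`, and `pick_and_place`; in the real system these would wrap the RWS I/O calls, the scanner trigger, and the MoveIt requests. The `max_polls` argument only bounds the loop so that the sketch terminates.

```python
def run_pick_and_place(cell, max_polls):
    """Polling loop following the flowchart; `cell` is a hypothetical hardware wrapper."""
    cell.initialise()                          # put every component in its default state
    cell.set_conveyor(True)
    for _ in range(max_polls):
        if not cell.object_under_scanner():    # retroreflective sensors see nothing
            continue                           # keep monitoring
        cell.set_conveyor(False)               # belt stays still while scanning and picking
        pending = list(cell.scan_and_locate()) # targets produced by the vision pipeline
        while pending:                         # belt not clear yet: pick the next object
            cell.pick_and_place(pending.pop(0))
        cell.set_conveyor(True)                # belt clear: resume and wait for new objects
    cell.set_conveyor(False)                   # leave the belt stopped when the loop ends
```

Keeping the hardware behind a small interface like this makes it possible to exercise the control flow against a simulated cell (or the digital layer) before running it on the physical robot.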

Camera calibration was critical to the real-world experiments, since the pick-and-place task relied on the quality and precision of point cloud acquisition. To ensure accurate detection, calibration was performed following the camera's datasheet. We defined the coordinate space as MarkerSpace, a reference frame commonly used with Photoneo cameras. In this setup, MarkerSpace allows the system to map objects in the physical environment by detecting specific markers that serve as fixed reference points, ensuring spatial accuracy. We used the Marker Pattern provided on the Photoneo resource webpage to complete the calibration and to align the camera's field of view with the conveyor system for precise object detection and positioning. Moreover, to ensure that the relative positions of the digital and physical cameras and the robotic arm remained consistent, a pointing tool was attached to the robotic arm. The robot was then navigated to key points of the camera structure to verify that its digital counterpart was correctly aligned with the corresponding points of the physical camera structure.
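As one possible way to quantify such a pointing-tool check (a technique swapped in here for illustration, not the procedure described above), the sketch below fits the least-squares rigid transform between key points of the digital camera structure and the positions touched with the pointing tool, using the Kabsch (SVD) method, and reports the residual RMS error. Point arrays are assumed to be (n, 3) in a common unit with n ≥ 3 non-collinear points.

```python
import numpy as np


def fit_rigid_transform(model_pts, measured_pts):
    """Least-squares rotation R and translation t with measured ~= model @ R.T + t (Kabsch)."""
    model_c, measured_c = model_pts.mean(axis=0), measured_pts.mean(axis=0)
    h = (model_pts - model_c).T @ (measured_pts - measured_c)  # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))                     # guard against reflections
    rotation = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    translation = measured_c - rotation @ model_c
    return rotation, translation


def rms_residual(model_pts, measured_pts, rotation, translation):
    """Root-mean-square distance between measured points and the transformed model points."""
    residuals = measured_pts - (model_pts @ rotation.T + translation)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))
```

A small residual after fitting indicates that the digital model only needs a rigid correction, while a large residual suggests that individual key points (or the model itself) are inconsistent.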

3.5. Pick-and-Place Task Experiment

The hardware used in this experiment consisted of a standard laptop with 32 GB of RAM, an 11th Gen Intel(R) Core(TM) i7-11800H processor running at 2.30 GHz, and an NVIDIA GeForce RTX 3060 GPU with 6 GB of memory. Although a GPU was available, the computer vision algorithm ran solely on the CPU. This choice reflected the current system configuration, which prioritised simplicity and ease of implementation over performance optimization. For future experiments or industrial applications requiring shorter processing times, GPU acceleration could speed up point cloud processing and other computer vision tasks, improving overall system efficiency.

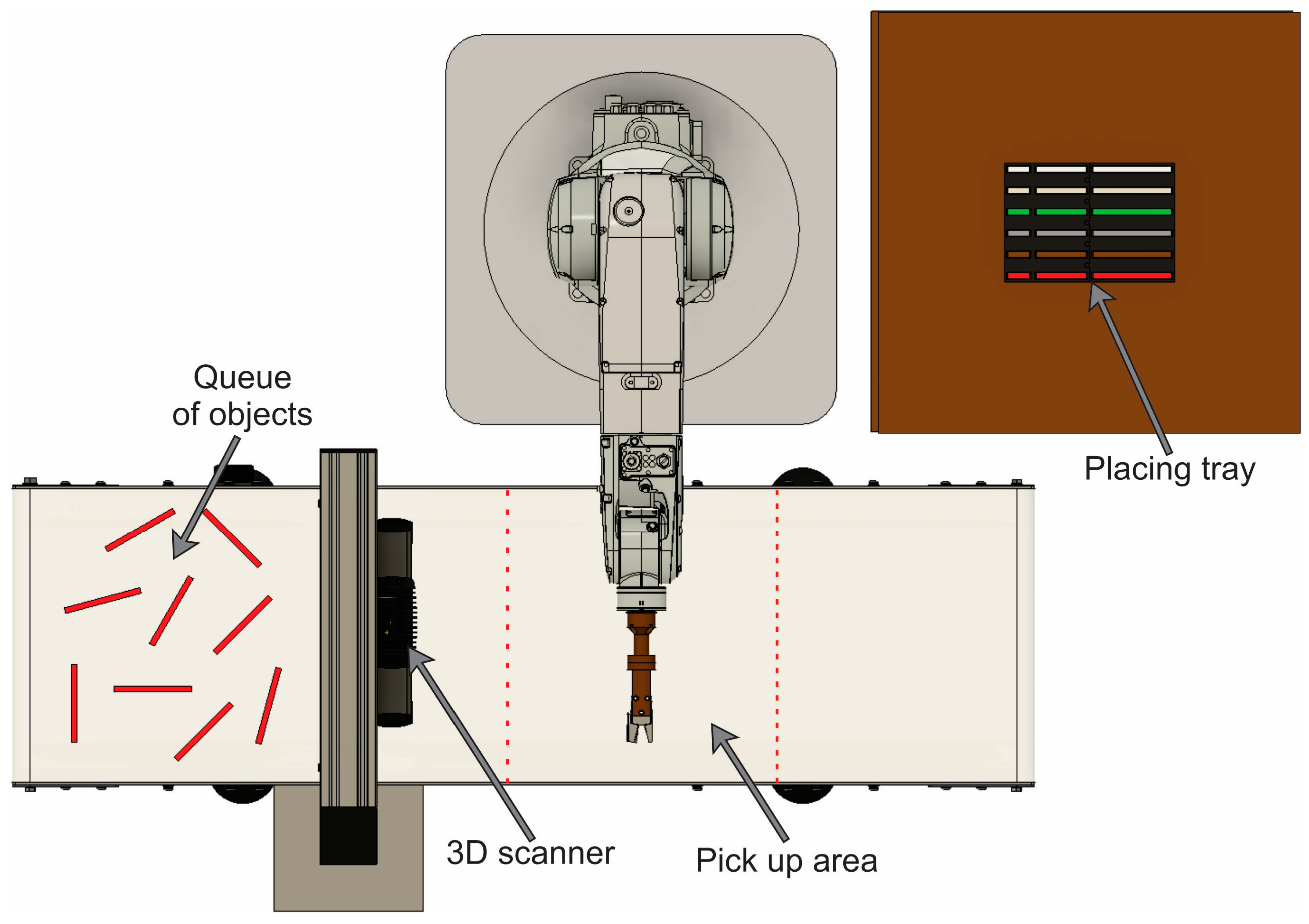

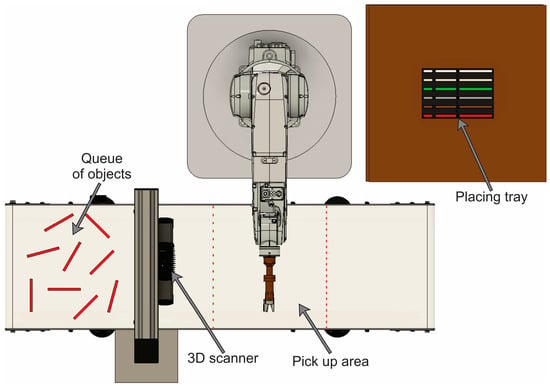

A random-flow pick-and-place experiment was conducted using the robotic cell outlined in Section 3.1 to analyse and validate the proposed computer vision package. The experiment involved pick-and-place tasks with the three object types shown inside the placing tray in Figure 4. The objects share a similar rectangular shape but differ in length: small (8 × 8 × 30 mm), medium (8 × 8 × 70 mm), and large (8 × 8 × 110 mm). They were retrieved from the conveyor belt and transferred to a tray placed on a table next to the robotic arm. The tray has slots customised to each object type, with a 1 mm clearance on all sides; the slot dimensions were therefore 10 × 10 × 32 mm for small objects, 10 × 10 × 72 mm for medium objects, and 10 × 10 × 112 mm for large objects.

Figure 4.

Pick-and-place task experiment setup. The queue of objects passes in front of the 3D scanner, which sends the target positions to the robot for picking the objects in the pickup area and placing them in the tray positioned on the table.

During the experiments, we recorded the position and orientation of every detected object to measure the error between the true object angle and the angle estimated by the computer vision algorithm. Each batch consisted of 12 objects of the same type placed on the conveyor at angles from 0 to 165° in 15° increments. To keep the orientations uniform, we designed a mould with one slot for each of the 12 angles; the robot picked one object at a time, and a batch was complete when all 12 objects had been picked and placed. This process was repeated 60 times for each object type, giving 720 pick-and-place executions per object type.
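The experimental design reduces to simple arithmetic, reproduced below from the values stated in the text: one mould slot per angle from 0 to 165° in 15° steps, 60 batches per object type, and tray slots equal to the object dimensions plus a 1 mm clearance on each side. The constant names are illustrative only.

```python
# Object dimensions (mm) and clearance as stated in the text.
OBJECTS = {"small": (8, 8, 30), "medium": (8, 8, 70), "large": (8, 8, 110)}
CLEARANCE_MM = 1
ANGLES_DEG = list(range(0, 166, 15))   # 0, 15, ..., 165: one mould slot per angle
BATCHES_PER_TYPE = 60


def slot_size(dims):
    """Tray slot = object dimension plus the clearance on both sides of each axis."""
    return tuple(d + 2 * CLEARANCE_MM for d in dims)


trials_per_type = len(ANGLES_DEG) * BATCHES_PER_TYPE   # 12 angles x 60 batches
slots = {name: slot_size(dims) for name, dims in OBJECTS.items()}

print(len(ANGLES_DEG), trials_per_type)   # 12 720
print(slots)   # {'small': (10, 10, 32), 'medium': (10, 10, 72), 'large': (10, 10, 112)}
```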

In addition, a second analysis evaluated the success of each pick-and-place task. A task was considered successful when the robot picked the object from the designated position and placed it accurately into its corresponding slot. A task failed if the system had difficulty picking the object, if the object fell during transfer, or if the robot placed the object incorrectly, either into the designated slot or into a mismatched one.

4. Results and Discussion

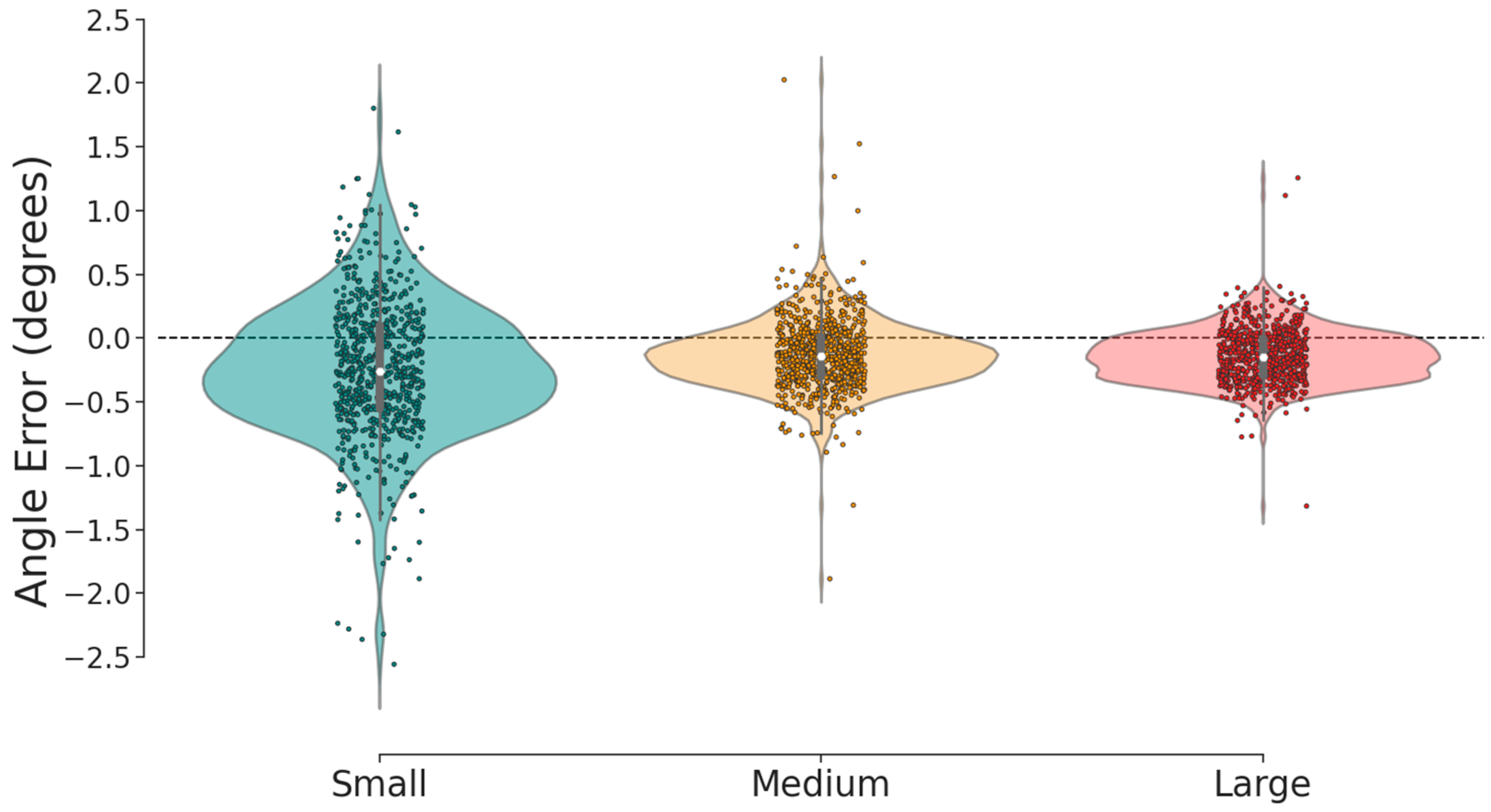

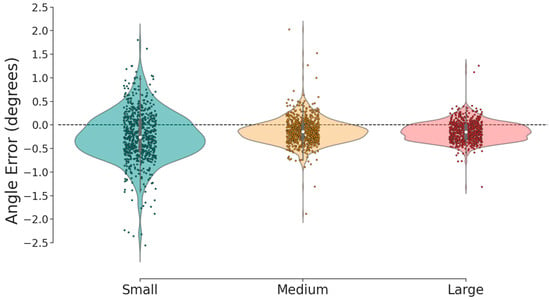

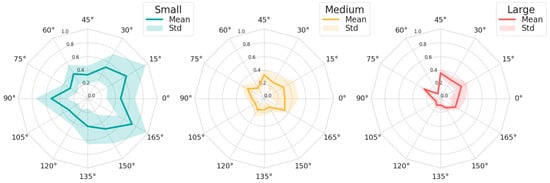

The accuracy of the computer vision algorithm in estimating object orientation was evaluated by analysing the difference between the true angle and the angle estimated by the algorithm. Figure 5 shows the angle error, in degrees, for each object type. The errors ranged from −2.558° to 2.025°. The mean error and standard deviation for each object type were −0.240° ± 0.536° for small objects, −0.131° ± 0.282° for medium objects, and −0.138° ± 0.211° for large objects. These results indicate that the algorithm estimated object orientation with high accuracy during the pick-and-place task. For all object types, the mean angle error was close to zero, indicating little bias (all three means are slightly negative and at most 0.24° in magnitude), and the narrow error range and low standard deviations indicate consistent and precise orientation estimates.
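The text does not state how the angle error was computed. One plausible way, sketched below as an assumption, is to wrap the difference into a 180° period, because a rectangular bar looks identical after a half turn; without wrapping, an estimate of 179° for a bar placed at 0° would count as a +179° error instead of −1°. The summary uses the sample standard deviation, which is also an assumption.

```python
import statistics
from typing import Dict, Iterable, List


def angle_error(estimated_deg: float, true_deg: float, period: float = 180.0) -> float:
    """Signed error wrapped to [-period/2, period/2).

    A rectangular bar is symmetric under a 180 deg rotation, so 179 deg vs 0 deg
    is treated as a -1 deg error rather than +179 deg.
    """
    return (estimated_deg - true_deg + period / 2) % period - period / 2


def summarise(errors: Iterable[float]) -> Dict[str, float]:
    """Range, mean and sample standard deviation of a list of angle errors."""
    errs: List[float] = list(errors)
    return {"min": min(errs), "max": max(errs),
            "mean": statistics.mean(errs), "std": statistics.stdev(errs)}
```

Applied to the recorded (true, estimated) pairs of one object type, `summarise` would produce figures of the same kind as the mean ± standard deviation values reported above.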

Figure 5.

Violin plot of the angle error for each type of object.

Figure 5 shows that the standard deviation decreases from small to medium and from medium to large objects, while the magnitude of the mean error falls from small to medium objects and remains essentially unchanged between medium and large objects. These variations are likely due to differences in object dimensions and morphology. The orientation estimate relies on principal component analysis (PCA), which derives the centroid, eigenvalues, and eigenvectors of a point cloud, decomposing the data variability into principal components so that the first component corresponds to the axis of greatest variability. Consequently, for longer objects, whose side lengths differ markedly, PCA identifies the long side as the first principal component, producing orientation estimates aligned with the long axis. Shorter objects, by contrast, have less disparate side lengths, so a larger share of the variability lies along the object's diagonals, which increases the angle estimation error. Nevertheless, further analysis is needed to fully understand the factors affecting orientation estimation accuracy for different object types.
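The PCA step can be illustrated in two dimensions, assuming the object's point cloud has been projected onto the conveyor plane (an assumption; the text does not describe this step). For a symmetric 2 × 2 covariance matrix, the eigenvalues and the angle of the first principal axis have closed forms, so no linear-algebra library is needed. `sample_bar` is a hypothetical helper that generates a synthetic top-view grid for testing.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def pca_orientation(points: Sequence[Point]) -> Tuple[Point, float, Tuple[float, float]]:
    """Centroid, yaw of the first principal axis in [0, 180) deg, and both eigenvalues."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    # Entries of the 2x2 covariance matrix of the centred points.
    sxx = sum((p[0] - cx) ** 2 for p in points) / n
    syy = sum((p[1] - cy) ** 2 for p in points) / n
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points) / n
    # Closed-form eigenvalues of a symmetric 2x2 matrix.
    mean_var = (sxx + syy) / 2
    spread = math.hypot((sxx - syy) / 2, sxy)
    lam1, lam2 = mean_var + spread, mean_var - spread
    # Angle of the eigenvector belonging to lam1 (direction of greatest variance).
    yaw = math.degrees(0.5 * math.atan2(2 * sxy, sxx - syy)) % 180.0
    return (cx, cy), yaw, (lam1, lam2)


def sample_bar(length: float, width: float, yaw_deg: float, step: float = 1.0) -> List[Point]:
    """Synthetic top-view grid of points on a length x width rectangle rotated by yaw_deg."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    pts = []
    for i in range(int(length / step) + 1):
        for j in range(int(width / step) + 1):
            u, v = -length / 2 + i * step, -width / 2 + j * step
            pts.append((u * c - v * s, u * s + v * c))
    return pts
```

The ratio `lam1 / lam2` indicates how elongated the cloud is. As the two eigenvalues approach each other, the principal axis becomes poorly determined and small amounts of noise can rotate it, which is consistent with the larger errors reported for the small objects.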

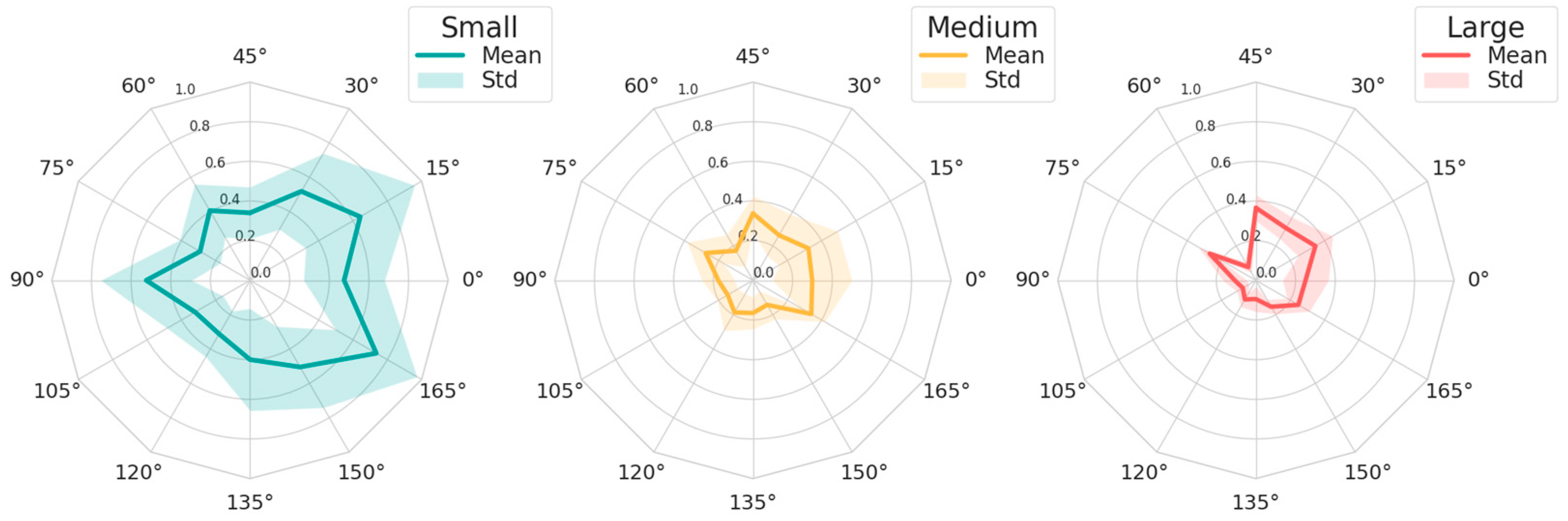

Given these observations, the Mann–Whitney U test was used to assess whether the angle error distributions of the object types are equivalent or differ. Figure 6 shows the errors observed for each tested angle throughout the experimental trials; the radar charts present absolute error values. Comparing Figure 5 and Figure 6, the small objects clearly differ from the other types in mean error and standard deviation, whereas the differences between medium and large objects are less evident. Collectively, these findings highlight the effectiveness of the computer vision framework in accurately determining object orientation, which is crucial for pick-and-place operations in robotic settings. Further refinement and optimization of the algorithm could improve performance and extend its applicability to a wider range of objects and environments.
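The text does not describe how the Mann–Whitney U test was run or report its results. As an illustrative sketch only, the dependency-free function below computes U and a two-sided p-value using the normal approximation with a tie correction (without a continuity correction), which is reasonable for samples as large as 720 errors per object type. In practice, `scipy.stats.mannwhitneyu` provides the same test. Applying it pairwise (small vs. medium, medium vs. large, and so on) is an assumption about how the comparison was structured.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney U test, normal approximation with tie correction.

    Returns (U for sample x, approximate p-value). For small samples the exact
    distribution of U should be used instead.
    """
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    tie_term = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1            # average 1-based rank of the tie group
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    n = n1 + n2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1))))
    if sigma == 0:
        return u1, 1.0                        # all values tied: no evidence of a difference
    z = (u1 - mu) / sigma
    return u1, min(1.0, math.erfc(abs(z) / math.sqrt(2)))   # two-sided tail probability
```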

Figure 6.

Absolute angle error for each of the 12 predefined angles, from 0 to 165° in 15° increments.

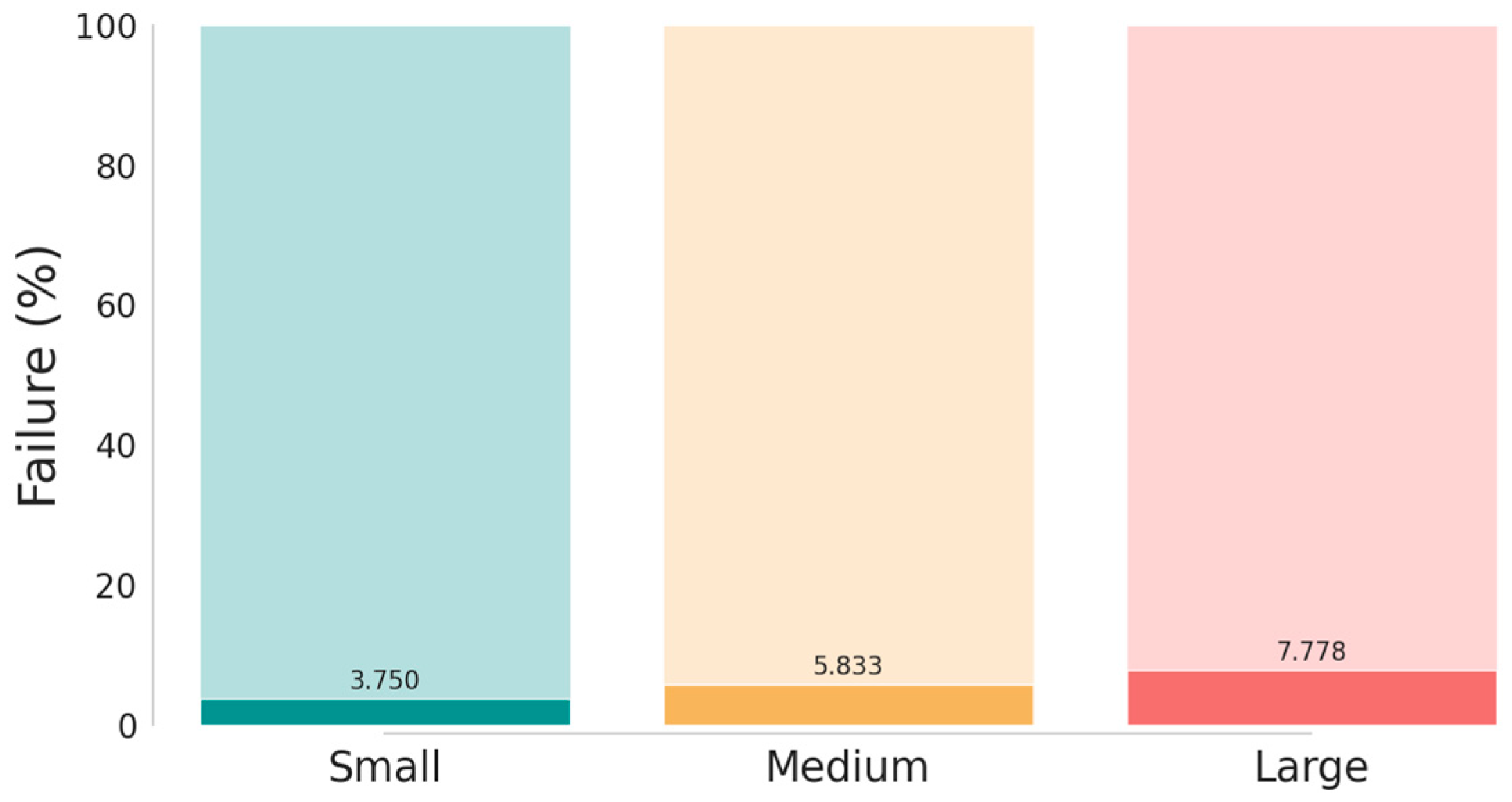

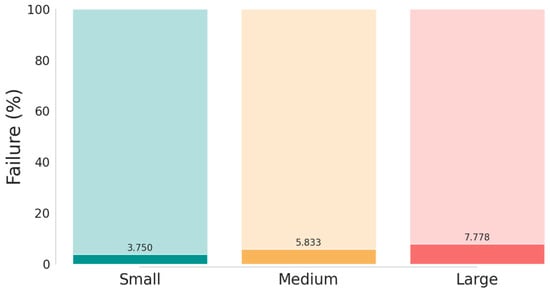

The pick-and-place failure rate was also analysed. Figure 7 shows the failure percentage for each object type. Of the 720 pick-and-place repetitions executed for each object type, 27 failed for the small type (3.75%), 42 for the medium type (5.83%), and 56 for the large type (7.78%). The mean failure rate across all object types was therefore 5.79%, indicating effective pick-and-place performance, with the large object type showing the highest failure rate. Most failures occurred during placement, when the object hit the edges of the placing slot. The likely cause is the small 1 mm clearance at the place position, which makes inserting the objects considerably more difficult.
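The failure percentages follow directly from the reported counts. Because every object type was run the same number of times, the mean of the three per-type rates equals the pooled rate of 125 failures in 2160 executions.

```python
FAILURES = {"small": 27, "medium": 42, "large": 56}   # counts reported in the text
TRIALS_PER_TYPE = 720

rates = {name: 100 * f / TRIALS_PER_TYPE for name, f in FAILURES.items()}
overall = 100 * sum(FAILURES.values()) / (TRIALS_PER_TYPE * len(FAILURES))

for name, rate in rates.items():
    print(f"{name:>6}: {rate:.2f}% failures")
print(f"overall: {overall:.2f}%")
# small: 3.75%, medium: 5.83%, large: 7.78%, overall: 5.79%
```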

Figure 7.

Overall results of the success rate during the pick-and-place experiments.

Unlike structured or pre-arranged setups, random-flow environments pose unique challenges for robotic systems, which must adapt to dynamic object positions and orientations. By accurately detecting, picking, and placing objects within a random flow, the proposed system shows that robotic cells can operate effectively in dynamic and unpredictable environments, improving efficiency in manufacturing industries. However, developing random-flow pick-and-place systems raises challenges that require careful design and innovative solutions. One key difficulty is accurately detecting and tracking objects in a dynamic environment: unlike structured setups, where objects follow predetermined paths, random-flow environments introduce variability in object positions and orientations.

The pick-and-place problem has been studied extensively over the years, with many researchers working to improve object grasp detection based on computer vision algorithms. Asif et al. [14] address the picking problem with a region-based grasp network designed to extract salient features, such as handles or boundaries, from input images. The network uses this information to produce class-specific grasp predictions, assigning each grasp a probability of belonging to the graspable or non-graspable class. Guo et al. [19] propose a hybrid deep architecture for robotic grasp detection that integrates visual and tactile sensing to improve performance in real-world environments. Other works, such as those by Anjum et al. [22] and Ito et al. [25], address similar problems using different approaches. All of these works focus on the grasp detection algorithm, proposing methods that determine the best picking point on the target object. By contrast, we address not only the picking problem but also the entire chain needed to execute pick-and-place tasks in a robotic system, including a ROS-based package that extends the capabilities of ROS and ROS-I. Diprasetya et al. [39] also developed a system workflow that integrates ABB robotic manipulators and ROS for industrial automation; however, they focused not on pick-and-place tasks but on industrial welding processes using deep reinforcement learning.

One of the main contributions of this work is the proposed computer vision package, whose modularity is a significant advantage: it enables easy integration with other robotic cells that follow the same random-flow structure, so the system can be reused across different setups without extensive modifications, saving time and resources during implementation. Given the specific nature of most pick-and-place applications, standardising all processes is a considerable challenge. However, the proposed computer vision package is designed to solve the picking problem autonomously; the user only needs to add the object mesh and its target position. After this initial setup, the system takes over and operates autonomously. In addition, the configuration files act as a blueprint for customising the system parameters to match the requirements of each robotic cell, encapsulating essential parameters such as object dimensions, conveyor belt speed, tray dimensions, and pick-and-place task details. This flexibility enhances the system's versatility and scalability, allowing it to be deployed across a wide range of pick-and-place applications with minimal effort.
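The configuration-file idea can be sketched as a typed, validated structure built from a parsed YAML or JSON mapping. The actual file format and parameter names used by the package are not given in the text, so every field below (`mesh`, `dims_mm`, `place_pose`, `conveyor_speed_mm_s`, `slot_clearance_mm`) and the `load_config` function are hypothetical. The sketch only shows how per-cell parameters such as the object mesh, dimensions, target position and conveyor speed could be captured and checked before the cell starts.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class ObjectSpec:
    mesh_file: str                                   # user-supplied mesh (hypothetical path)
    dims_mm: Tuple[float, ...]                       # object dimensions
    place_pose: Tuple[float, ...]                    # x, y, z (mm) and yaw (deg), robot frame


@dataclass(frozen=True)
class CellConfig:
    conveyor_speed_mm_s: float
    slot_clearance_mm: float
    objects: Dict[str, ObjectSpec]


def load_config(raw: dict) -> CellConfig:
    """Build and sanity-check a cell configuration from a parsed YAML/JSON mapping."""
    objects = {
        name: ObjectSpec(spec["mesh"], tuple(spec["dims_mm"]), tuple(spec["place_pose"]))
        for name, spec in raw["objects"].items()
    }
    for name, spec in objects.items():
        if len(spec.dims_mm) != 3 or min(spec.dims_mm) <= 0:
            raise ValueError(f"{name}: dims_mm must be three positive values")
        if len(spec.place_pose) != 4:
            raise ValueError(f"{name}: place_pose must be x, y, z, yaw")
    if raw["conveyor_speed_mm_s"] < 0:
        raise ValueError("conveyor speed must be non-negative")
    return CellConfig(float(raw["conveyor_speed_mm_s"]),
                      float(raw["slot_clearance_mm"]), objects)
```

Rejecting malformed entries at load time keeps reconfiguration errors out of the running cell. This matters most when a cell is repurposed for new objects by editing the configuration alone.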

One key aspect of the system's current architecture is that it is implemented with ROS rather than ROS2. This decision was driven by the reliability and extensive library support of ROS (Noetic) and ROS-Industrial at the time of development, which allowed rapid integration with the hardware and software tools required by the proposed system. ROS is also widely used in research and industry, making it a robust and stable choice. However, as ROS2 continues to mature, with features such as real-time performance, enhanced security, and multi-robot capabilities, the benefits of transitioning to it are becoming increasingly evident [40]. In future work, we plan to migrate the proposed system to ROS2 as more ROS2-compatible versions of the required libraries and packages become available. This will keep the system aligned with ongoing developments in robotics middleware and allow it to benefit from the advances ROS2 offers.

In robotic systems, execution time is one of the most significant parameters when analysing pick-and-place performance; however, speed was not fully explored in this experiment. Although the system has shown potential for pick-and-place applications owing to its accuracy and low failure rates, there is still room for optimisation within its architecture. The current setup relies on TCP/IP socket communication, which may limit the achievable speed. TCP, while reliable and widely used, is connection-oriented and introduces overhead and latency through acknowledgements and retransmissions. This latency can reduce the system's responsiveness, particularly in tasks requiring rapid pick-and-place operations. Transitioning to External Guided Motion (EGM), which is based on User Datagram Protocol (UDP) communication, is a potential way to overcome these limitations. UDP has lower overhead and latency than TCP because it omits connection management and delivery guarantees, making it better suited to applications that prioritise speed over reliability. Switching to UDP could therefore minimise communication latency, enabling faster data exchange and real-time control of the robot's motion trajectory.
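The practical difference from a TCP stream is that each pose is sent as an independent, unacknowledged datagram, so the receiver must cope with lost or out-of-order messages itself. The sketch below uses Python's standard `socket` and `struct` modules with a hypothetical wire format (a sequence number followed by x, y, z and yaw). It is not the ABB EGM message format, which is defined with Protocol Buffers; it only illustrates the connectionless pattern.

```python
import socket
import struct

# Hypothetical wire format: uint32 sequence number + x, y, z (mm) + yaw (deg), little-endian.
# NOT the ABB EGM message format, which is defined with Protocol Buffers.
POSE_FORMAT = "<I4d"


def send_pose(sock: socket.socket, addr, seq: int,
              x: float, y: float, z: float, yaw: float) -> None:
    """Fire-and-forget: no connection, no acknowledgement, no retransmission."""
    sock.sendto(struct.pack(POSE_FORMAT, seq, x, y, z, yaw), addr)


def receive_pose(sock: socket.socket, last_seq: int):
    """Return (seq, pose) for a newer datagram, or None for a stale one."""
    data, _ = sock.recvfrom(struct.calcsize(POSE_FORMAT))
    seq, x, y, z, yaw = struct.unpack(POSE_FORMAT, data)
    if seq <= last_seq:
        return None                       # drop late packets instead of acting on them
    return seq, (x, y, z, yaw)


if __name__ == "__main__":
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    rx.bind(("127.0.0.1", 0))             # loopback demo on an ephemeral port
    rx.settimeout(2.0)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_pose(tx, rx.getsockname(), 1, 400.0, -120.0, 35.0, 45.0)
    print(receive_pose(rx, last_seq=0))
    tx.close()
    rx.close()
```

Discarding stale datagrams instead of requesting retransmission is the trade-off the text describes: the controller always acts on the newest target and accepts occasional loss.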

Another aspect that could significantly affect system speed is the operation of the conveyor belt. In the described experiment, the conveyor remained stationary while objects were scanned and picked, which adds idle time to the overall process. Ideally, the system should operate continuously, with the conveyor running uninterrupted while the robotic arm picks and places objects. Moreover, analysing speed together with accuracy and failure rates would give a more complete picture of overall performance: accuracy and failure rates indicate reliability, whereas speed measurements indicate efficiency and productivity.
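Picking from a moving belt would require predicting where each object will be when the robot arrives. A minimal sketch of this idea is shown below, assuming a straight belt along the x axis, a constant and known belt speed, a known robot travel time, and a fixed reachable window along the belt. All names and parameters are hypothetical. The current system does not implement this, because it stops the belt while picking.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class TrackedObject:
    x_mm: float       # position along the belt axis at scan time
    y_mm: float       # lateral position (unchanged by belt motion)
    t_scan_s: float   # timestamp of the scan


def predict(obj: TrackedObject, belt_speed_mm_s: float, t_s: float) -> Tuple[float, float]:
    """Constant-velocity prediction of the object's position at time t_s."""
    return obj.x_mm + belt_speed_mm_s * (t_s - obj.t_scan_s), obj.y_mm


def pick_time(obj: TrackedObject, belt_speed_mm_s: float, t_now_s: float,
              robot_travel_s: float, window_mm: Tuple[float, float]) -> Optional[float]:
    """Earliest time the robot can meet the object inside its reach window, or None."""
    lo, hi = window_mm
    t_ready = t_now_s + robot_travel_s          # robot cannot be at the belt before this
    x_ready, _ = predict(obj, belt_speed_mm_s, t_ready)
    if x_ready > hi:
        return None                             # object leaves the window before the robot arrives
    if x_ready >= lo:
        return t_ready                          # object is already inside the window
    if belt_speed_mm_s <= 0:
        return None                             # stopped belt: object never reaches the window
    # Otherwise wait until the tracked point reaches the window entry.
    return obj.t_scan_s + (lo - obj.x_mm) / belt_speed_mm_s
```

In a real cell, the belt speed would typically come from an encoder rather than a constant, and the pick pose would be updated as the robot approaches.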

5. Conclusions

The evaluation of the computer vision algorithm for object orientation estimation in pick-and-place tasks demonstrates high accuracy and precision across different object types, with mean angle errors below 0.25° in magnitude and all errors between −2.558° and 2.025°. Despite occasional failures, the system performed pick-and-place operations effectively, with a mean failure rate of 5.79%. The modularity of the computer vision package enhances its versatility and ease of integration into various robotic setups, indicating potential for widespread application. However, optimization opportunities, such as replacing TCP/IP socket communication with UDP-based EGM and keeping the conveyor running continuously, could improve system speed and efficiency. Continued refinement is needed to fully leverage the system's capabilities for efficient and adaptable operation across diverse industrial settings.

Author Contributions

Conceptualization, software, resources, methodology, validation, formal analysis, data curation, and writing—original draft preparation, E.L.G.; writing—review and editing and supervision, J.G.L. and D.M.D. All authors have read and agreed to the published version of the manuscript.

Funding

This publication emanated from research conducted with the support of Science Foundation Ireland (SFI) under Grant Number SFI 16/RC/3919, co-funded by the European Regional Development Fund, the Technological University of the Shannon Presidents Doctoral Scholarship, and Johnson & Johnson.

Data Availability Statement

The data is available from the authors upon request. It is not publicly accessible due to ongoing research on this topic.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Lasi, H.; Fettke, P.; Kemper, H.G.; Feld, T.; Hoffmann, M. Industry 4.0. Bus. Inf. Syst. Eng. 2014, 6, 239–242.

2. Bahrin, M.A.K.; Othman, M.F.; Azli, N.H.N.; Talib, M.F. Industry 4.0: A Review on Industrial Automation and Robotic. J. Teknol. 2016, 78, 137–143.

3. Oztemel, E.; Gursev, S. Literature Review of Industry 4.0 and Related Technologies. J. Intell. Manuf. 2020, 31, 127–182.

4. D’Avella, S.; Avizzano, C.A.; Tripicchio, P. ROS-Industrial Based Robotic Cell for Industry 4.0: Eye-in-Hand Stereo Camera and Visual Servoing for Flexible, Fast, and Accurate Picking and Hooking in the Production Line. Robot. Comput. Integr. Manuf. 2023, 80, 102453.

5. Ghobakhloo, M. Industry 4.0, Digitization, and Opportunities for Sustainability. J. Clean. Prod. 2020, 252, 119869.

6. Maskuriy, R.; Selamat, A.; Ali, K.N.; Maresova, P.; Krejcar, O. Industry 4.0 for the Construction Industry-How Ready Is the Industry? Appl. Sci. 2019, 9, 2819.

7. Xu, S.; Wang, J.; Shou, W.; Ngo, T.; Sadick, A.-M.; Wang, X. Computer Vision Techniques in Construction: A Critical Review. Arch. Comput. Methods Eng. 2021, 28, 3383–3397.

8. Bai, C.; Dallasega, P.; Orzes, G.; Sarkis, J. Industry 4.0 Technologies Assessment: A Sustainability Perspective. Int. J. Prod. Econ. 2020, 229, 107776.

9. Zhou, L.; Zhang, L.; Konz, N. Computer Vision Techniques in Manufacturing. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 105–117.

10. Ferrein, A.; Schiffer, S.; Kallweit, S. The ROSIN Education Concept: Fostering ROS Industrial-Related Robotics Education in Europe. In ROBOT 2017: Third Iberian Robotics Conference: Volume 2; Springer: Berlin/Heidelberg, Germany, 2018; pp. 370–381.

11. Martinez, C.; Barrero, N.; Hernandez, W.; Montaño, C.; Mondragón, I. Setup of the Yaskawa SDA10F Robot for Industrial Applications, Using ROS-Industrial. In Advances in Automation and Robotics Research in Latin America: Proceedings of the 1st Latin American Congress on Automation and Robotics, Panama City, Panama, 8–10 February 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 186–203.

12. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An Open-Source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

13. Mayoral-Vilches, V.; Pinzger, M.; Rass, S.; Dieber, B.; Gil-Uriarte, E. Can ROS Be Used Securely in Industry? Red Teaming ROS-Industrial. arXiv 2020, arXiv:2009.08211.

14. Asif, U.; Tang, J.; Harrer, S. Densely Supervised Grasp Detector (DSGD). In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8085–8093.

15. Bicchi, A.; Kumar, V. Robotic Grasping and Contact: A Review. In Proceedings of the 2000 ICRA. Millennium Conference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No. 00CH37065), San Francisco, CA, USA, 24–28 April 2000; Volume 1, pp. 348–353.

16. Bohg, J.; Morales, A.; Asfour, T.; Kragic, D. Data-Driven Grasp Synthesis—A Survey. IEEE Trans. Robot. 2013, 30, 289–309.

17. Caldera, S.; Rassau, A.; Chai, D. Review of Deep Learning Methods in Robotic Grasp Detection. Multimodal Technol. Interact. 2018, 2, 57.

18. Bergamini, L.; Sposato, M.; Pellicciari, M.; Peruzzini, M.; Calderara, S.; Schmidt, J. Deep Learning-Based Method for Vision-Guided Robotic Grasping of Unknown Objects. Adv. Eng. Inform. 2020, 44, 101052.

19. Guo, D.; Sun, F.; Liu, H.; Kong, T.; Fang, B.; Xi, N. A Hybrid Deep Architecture for Robotic Grasp Detection. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1609–1614.

20. Imtiaz, M.B.; Qiao, Y.; Lee, B. Implementing Robotic Pick and Place with Non-Visual Sensing Using Reinforcement Learning. In Proceedings of the 2022 6th International Conference on Robotics, Control and Automation (ICRCA), Xiamen, China, 26–28 February 2022; pp. 23–28.

21. Imtiaz, M.; Qiao, Y.; Lee, B. Comparison of Two Reinforcement Learning Algorithms for Robotic Pick and Place with Non-Visual Sensing. Int. J. Mech. Eng. Robot. Res. 2021, 10, 526–535.

22. Anjum, M.U.; Khan, U.S.; Qureshi, W.S.; Hamza, A.; Khan, W.A. Vision-Based Hybrid Detection for Pick and Place Application in Robotic Manipulators. In Proceedings of the 2023 International Conference on Robotics and Automation in Industry (ICRAI), Peshawar, Pakistan, 3–5 March 2023; pp. 1–5.

23. Zarif, M.I.I.; Shahria, M.T.; Sunny, M.S.H.; Khan, M.M.R.; Ahamed, S.I.; Wang, I.; Rahman, M.H. A Vision-Based Object Detection and Localization System in 3D Environment for Assistive Robots’ Manipulation. In Proceedings of the International Conference of Control, Dynamic Systems, and Robotics, Niagara Falls, ON, Canada, 2–4 June 2022; Avestia: Orléans, ON, Canada, 2022.

24. Andhare, P.; Rawat, S. Pick and Place Industrial Robot Controller with Computer Vision. In Proceedings of the 2016 International Conference on Computing Communication Control and Automation (ICCUBEA), Pune, India, 12–13 August 2016; pp. 1–4.

25. Ito, S.; Kubota, S. Point Proposal Based Instance Segmentation with Rectangular Masks for Robot Picking Task. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

26. Kalashnikov, D.; Irpan, A.; Pastor, P.; Ibarz, J.; Herzog, A.; Jang, E.; Quillen, D.; Holly, E.; Kalakrishnan, M.; Vanhoucke, V.; et al. Scalable Deep Reinforcement Learning for Vision-Based Robotic Manipulation. In Proceedings of the Conference on Robot Learning, Zürich, Switzerland, 29–31 October 2018; pp. 651–673.

27. Lobbezoo, A.; Qian, Y.; Kwon, H.J. Reinforcement Learning for Pick and Place Operations in Robotics: A Survey. Robotics 2021, 10, 105.

28. Lan, X.; Lee, B. Towards Pick and Place Multi Robot Coordination Using Multi-Agent Deep Reinforcement Learning. In Proceedings of the 2021 7th International Conference on Automation, Robotics and Applications (ICARA), Prague, Czech Republic, 4–6 February 2021.

29. Kunze, L.; Roehm, T.; Beetz, M. Towards Semantic Robot Description Languages. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 5589–5595.

30. ROS Wiki URDF. Available online: http://wiki.ros.org/urdf (accessed on 5 September 2024).

31. Sucan, I.A.; Moll, M.; Kavraki, L.E. The Open Motion Planning Library. IEEE Robot. Autom. Mag. 2012, 19, 72–82.

32. Kalakrishnan, M.; Chitta, S.; Theodorou, E.; Pastor, P.; Schaal, S. STOMP: Stochastic Trajectory Optimization for Motion Planning. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4569–4574.

33. Ratliff, N.; Zucker, M.; Bagnell, J.A.; Srinivasa, S. CHOMP: Gradient Optimization Techniques for Efficient Motion Planning. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 489–494.

34. Moll, M.; Sucan, I.A.; Kavraki, L.E. Benchmarking Motion Planning Algorithms: An Extensible Infrastructure for Analysis and Visualization. IEEE Robot. Autom. Mag. 2015, 22, 96–102.

35. Liu, S.; Liu, P. Benchmarking and Optimization of Robot Motion Planning with Motion Planning Pipeline. Int. J. Adv. Manuf. Technol. 2022, 118, 949–961.

36. Ester, M.; Kriegel, H.-P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. In Proceedings of the Second International Conference on Knowledge Discovery and Data Mining (KDD), Portland, OR, USA, 2–4 August 1996; Volume 96, pp. 226–231.

37. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

38. Wold, S.; Esbensen, K.; Geladi, P. Principal Component Analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

39. Diprasetya, M.R.; Yuwono, S.; Loppenberg, M.; Schwung, A. Integration of ABB Robot Manipulators and Robot Operating System for Industrial Automation. In Proceedings of the 2023 IEEE 21st International Conference on Industrial Informatics (INDIN), Lemgo, Germany, 18–20 July 2023; pp. 1–7.

40. Maruyama, Y.; Kato, S.; Azumi, T. Exploring the Performance of ROS2. In Proceedings of the 13th International Conference on Embedded Software, Pittsburgh, PA, USA, 1–7 October 2016; pp. 1–10.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).