Not in My Face: Challenges and Ethical Considerations in Automatic Face Emotion Recognition Technology

Abstract

1. Introduction

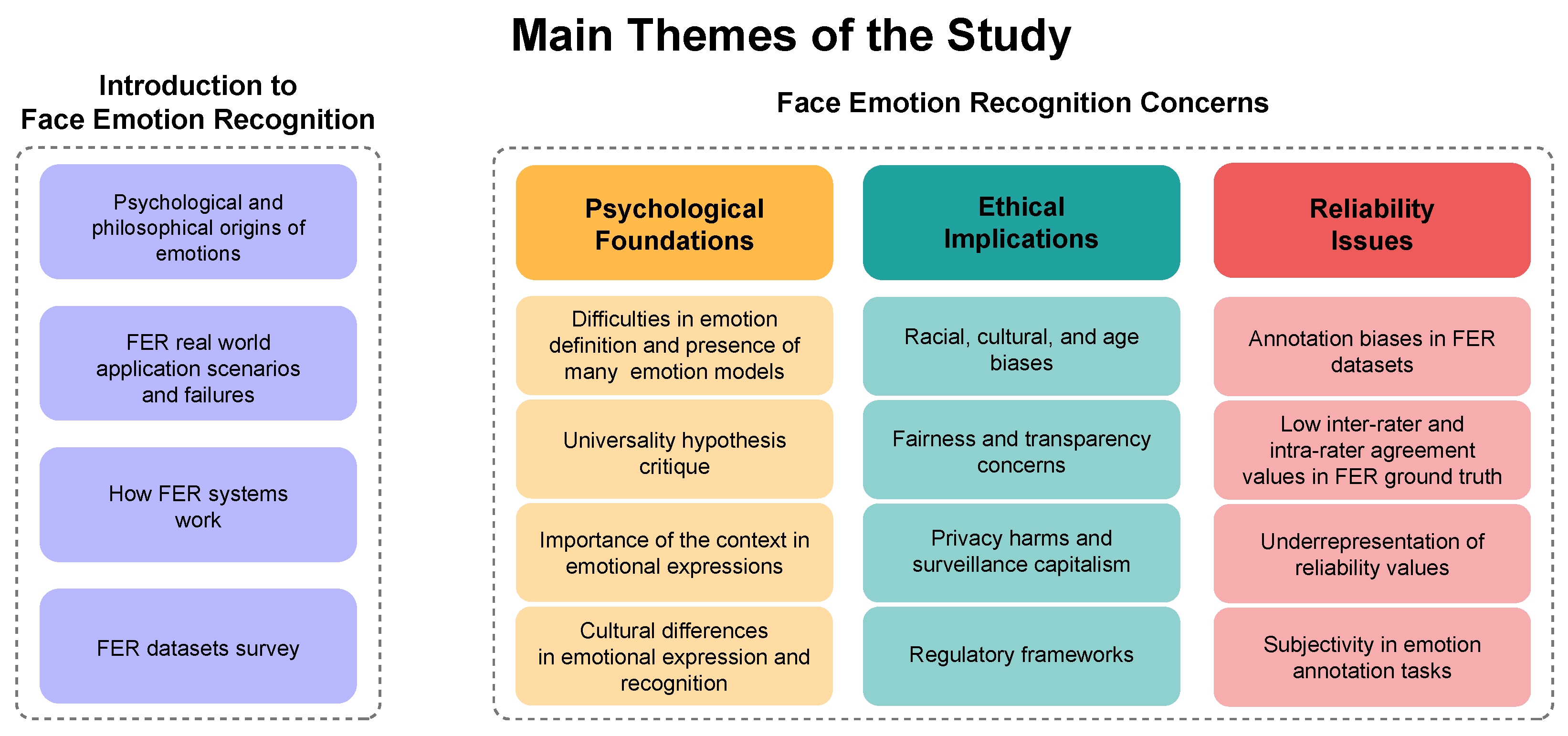

Aim and Scope of the Study

- Examine the psychological and philosophical debate on the scientific feasibility of accurately inferring human emotions from facial expressions;

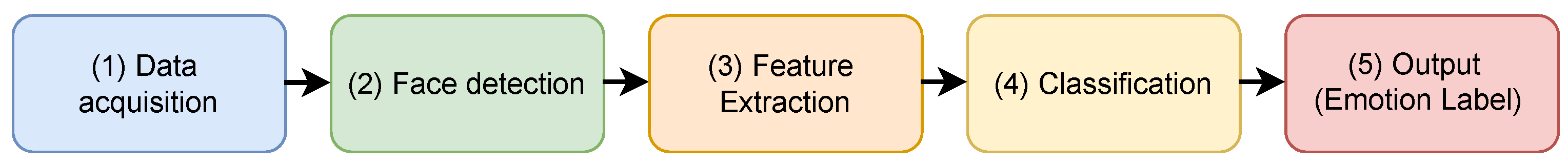

- Present an overview of how FER technology works, focusing on critical applications, failures, and misclassification errors;

- Review FER datasets by classifying them as those with simulated vs. genuine expressions and those based on categorical vs. ordinal models, highlighting concerns regarding bias and reliability;

- Critically review the literature surrounding FER concerns, focusing on their psychological foundations, ethical consequences, and reliability issues;

- Propose a conceptual taxonomy that categorizes the major critiques and discussions about FER technologies mentioned above, facilitating a structured and comprehensive understanding of the field.

2. Methodology

- Psychological Foundations: Articles that critique or challenge the universality hypothesis and explore how cultural and social factors influence emotion recognition.

- Ethical Implications: Studies addressing biases and ethical concerns in the deployment of FER systems, with emphasis on issues such as racial bias, privacy, and human rights.

- Reliability Issues: Research evaluating the reliability and accuracy of FER datasets, particularly in terms of inter-rater and intra-rater reliability in human-annotated data.

3. Finding the Origins of Emotions

3.1. The Main Psychological Models of Emotions

3.2. Measures and Labeling of Emotions: What Is the Role of Facial Expressions?

4. An Overview of Face Emotion Recognition

“The notion of ‘emotion recognition system’ […] as an AI system for the purpose of identifying or inferring emotions or intentions of natural persons on the basis of their biometric data. The notion refers to emotions or intentions such as happiness, sadness, anger, surprise, disgust, embarrassment, excitement, shame, contempt, satisfaction and amusement [49].”

4.1. FER Applications: Examples from the Real World

“Has effectively become a ‘frontline laboratory’ for data-driven surveillance” [73].

4.2. How Does FER Work?

Categorical Lab Setting Datasets

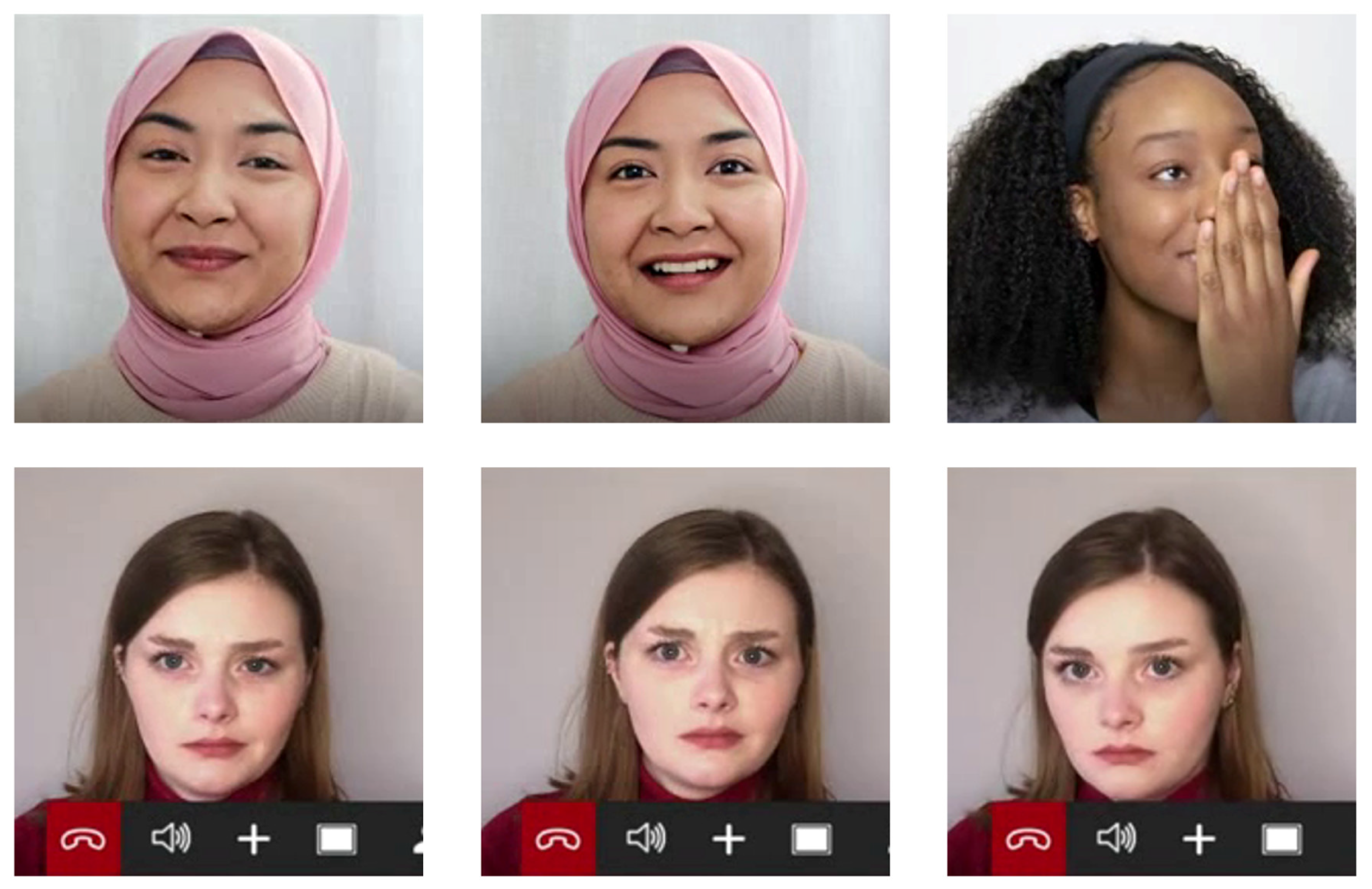

4.3. The Construction of FER Datasets

4.3.1. Ordinal Lab Setting Datasets

4.3.2. Categorical In-the-Wild Datasets

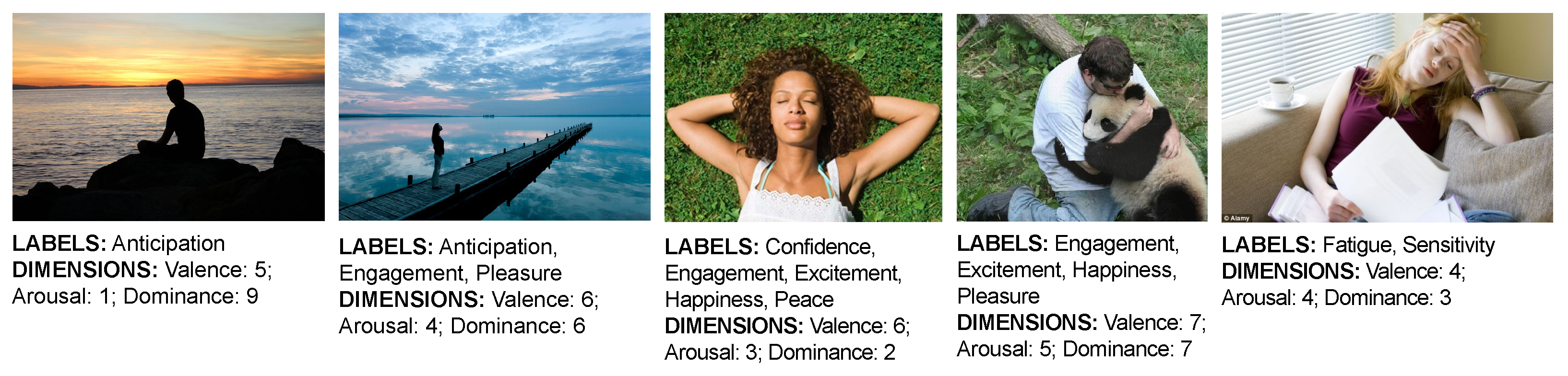

4.3.3. Ordinal In-the-Wild Datasets

5. Face Emotion Recognition Concerns

5.1. Challenging Emotion Fingerprints

“When it comes to emotion, a face doesn’t speak for itself. In fact, the poses of the basic emotion method were not discovered by observing faces in the real world. Scientists stipulated those facial poses, inspired by Darwin’s book, and asked actors to portray them. And now these faces are simply assumed to be the universal expressions of emotion [2].”

“With machine learning, in particular, we often see metrics being used to make decisions—not because they’re reliable, but simply because they can be measured [8].”

“Emotions are complex, and they develop and change in relation to our families, friends, cultures, and histories, all the manifold contexts that live outside of the AI frame [111].”

5.2. Ethical Concerns Associated with Face Emotion Recognition

What Is the Position of the EU?

“The key shortcomings of such technologies, are the limited reliability (emotion categories are neither reliably expressed through nor unequivocally associated with, a common set of physical or physiological movements), the lack of specificity (physical or physiological expressions do not perfectly match emotion categories) and the limited generalisability (the effects of context and culture are not sufficiently considered)” [134].

“Claims human experience as free raw material for translation into behavioral data” [23].

5.3. On the Dubious Reliability of Face Emotion Recognition

- Is the inter-rater reliability of the FER ground truth sufficient to support reliable research and analysis?

- Does providing some sort of contextual information have any effect on the reliability of the ground truth?

- Is the intra-rater reliability of the FER ground truth high enough?
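The first and third questions above both come down to measuring agreement between two sets of labels assigned to the same images: two different annotators for inter-rater reliability, or the same annotator at two sessions for intra-rater reliability. The following is a minimal sketch, assuming Cohen's kappa as the agreement coefficient (one common choice, not necessarily the metric used in the studies reviewed here). The function name `cohen_kappa` and all labels are hypothetical and purely illustrative; they are not data from any FER dataset.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items that received the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: derived from each rater's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical labels for six face images (illustrative only, not real data).
rater_1 = ["happy", "sad", "angry", "happy", "sad", "happy"]
rater_2 = ["happy", "sad", "happy", "happy", "angry", "happy"]
# The same rater relabeling the same images at a later session.
rater_1_retest = ["happy", "sad", "angry", "happy", "sad", "sad"]

inter_rater = cohen_kappa(rater_1, rater_2)         # agreement between raters
intra_rater = cohen_kappa(rater_1, rater_1_retest)  # self-consistency over time

print(f"inter-rater kappa: {inter_rater:.3f}")
print(f"intra-rater kappa: {intra_rater:.3f}")
```

Kappa corrects raw percent agreement for the agreement expected by chance, so a value near 0 indicates labels no more consistent than chance even when raw agreement looks high. Whether a given value is "sufficient" remains a judgment that depends on the intended use of the ground truth.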

6. Discussion

- First, the reliance of FER systems on oversimplified models of emotions raises concerns about the validity of their psychological foundations. These systems often overlook the influences of context, culture, and the distinction between genuine and simulated emotions.

- Second, FER systems risk encoding racial biases and perpetuating racial stereotypes, which poses significant ethical challenges. Studies have shown that the emotions inferred by FER systems can vary with an individual’s race, leading to troubling outcomes, especially in surveillance and law enforcement settings. As a consequence, awareness of the ethical dimension of FER is growing, along with the question of whether these technologies can harm individuals, particularly members of minority groups and at-risk groups such as children.

- Finally, even assuming that the first two concerns are fully resolved, meaning that emotion recognition systems rest on valid psychological models and that their application poses no ethical risks, a third issue remains: the reliability of the ground truth used by these systems. The ground truth on which FER is built is unreliable because of insufficient rater agreement and biased datasets.

Limitations

7. Conclusions

- Surveyed the complexity of human emotions, from the early philosophical theories to the major psychological models;

- Provided an overview of how FER works and its common applications, describing the most frequently used datasets;

- Identified three different areas of concern, developing a taxonomy for the analysis of FER issues, namely the psychological foundations, possible negative ethical outcomes, and reliability of these systems;

- Advocated for a multi-layered approach that focuses on how the various areas of criticism are interconnected in order to help researchers and policymakers better address the implications of FER;

- Emphasized the need for interdisciplinary research and careful regulation to improve the reliability, ethical responsibility, and effectiveness of FER systems, particularly in safeguarding marginalized and vulnerable populations.

Funding

Acknowledgments

Conflicts of Interest

References

- Gendron, M.; Roberson, D.; van der Vyver, J.M.; Barrett, L.F. Perceptions of emotion from facial expressions are not culturally universal: Evidence from a remote culture. Emotion 2014, 14, 251.

- Barrett, L.F. How Emotions Are Made: The Secret Life of the Brain; Houghton Mifflin Harcourt: Boston, MA, USA, 2017.

- Gates, K.A. Our Biometric Future: Facial Recognition Technology and the Culture of Surveillance; NYU Press: New York, NY, USA, 2011.

- Berry, J.W.; Poortinga, Y.H.; Pandey, J. Handbook of Cross-Cultural Psychology: Basic Processes and Human Development; John Berry: Boston, MA, USA, 1997; Volume 2.

- Barrett, L.F.; Adolphs, R.; Marsella, S.; Martinez, A.M.; Pollak, S.D. Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychol. Sci. Public Interest 2019, 20, 1–68.

- Barrett, L.F. Solving the emotion paradox: Categorization and the experience of emotion. Personal. Soc. Psychol. Rev. 2006, 10, 20–46.

- Durán, J.I.; Fernández-Dols, J.M. Do emotions result in their predicted facial expressions? A meta-analysis of studies on the co-occurrence of expression and emotion. Emotion 2021, 21, 1550.

- Vincent, J. Emotion Recognition Can’t be Trusted. 2019. Available online: https://www.theverge.com/2019/7/25/8929793/emotion-recognition-analysis-ai-machine-learning-facial-expression-review (accessed on 7 September 2024).

- Matsumoto, D. Ethnic differences in affect intensity, emotion judgments, display rule attitudes, and self-reported emotional expression in an American sample. Motiv. Emot. 1993, 17, 107–123.

- Barrett, L.F.; Mesquita, B.; Gendron, M. Context in emotion perception. Curr. Dir. Psychol. Sci. 2011, 20, 286–290.

- Hofstede, G. Culture’s Consequences: Comparing Values, Behaviors, Institutions and Organizations Across Nations; Sage: Melbourne, VIC, Australia, 2001.

- Matsumoto, D. Cultural influences on the perception of emotion. J. Cross-Cult. Psychol. 1989, 20, 92–105.

- Russell, J.A. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 1994, 115, 102.

- Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124–129.

- Picard, R.W. Affective Computing; MIT Press: Cambridge, MA, USA, 2000.

- Cabitza, F.; Campagner, A.; Mattioli, M. The unbearable (technical) unreliability of automated facial emotion recognition. Big Data Soc. 2022, 9, 20539517221129549.

- Russell, J.A. Emotion, core affect, and psychological construction. Cogn. Emot. 2009, 23, 1259–1283.

- LeDoux, J.E.; Hofmann, S.G. The subjective experience of emotion: A fearful view. Curr. Opin. Behav. Sci. 2018, 19, 67–72.

- Hugenberg, K.; Bodenhausen, G.V. Ambiguity in social categorization: The role of prejudice and facial affect in race categorization. Psychol. Sci. 2004, 15, 342–345.

- Hugenberg, K.; Bodenhausen, G.V. Facing prejudice: Implicit prejudice and the perception of facial threat. Psychol. Sci. 2003, 14, 640–643.

- Halberstadt, A.G.; Castro, V.L.; Chu, Q.; Lozada, F.T.; Sims, C.M. Preservice teachers’ racialized emotion recognition, anger bias, and hostility attributions. Contemp. Educ. Psychol. 2018, 54, 125–138.

- Halberstadt, A.G.; Cooke, A.N.; Garner, P.W.; Hughes, S.A.; Oertwig, D.; Neupert, S.D. Racialized emotion recognition accuracy and anger bias of children’s faces. Emotion 2022, 22, 403.

- Zuboff, S. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power; First Trade Paperback Edition; PublicAffairs: New York, NY, USA, 2020.

- Sajjad, M.; Nasir, M.; Ullah, F.U.M.; Muhammad, K.; Sangaiah, A.K.; Baik, S.W. Raspberry Pi assisted facial expression recognition framework for smart security in law-enforcement services. Inf. Sci. 2019, 479, 416–431.

- Laufs, J.; Borrion, H.; Bradford, B. Security and the smart city: A systematic review. Sustain. Cities Soc. 2020, 55, 102023.

- Rhue, L.A. Racial Influence on Automated Perceptions of Emotions. CJRN Race Ethn. 2018. Available online: https://racismandtechnology.center/wp-content/uploads/racial-influence-on-automated-perceptions-of-emotions.pdf (accessed on 26 August 2024).

- Gleason, M. Privacy Groups Urge Zoom to Abandon Emotion AI Research. 2022. Available online: https://www.techtarget.com/searchunifiedcommunications/news/252518128/Privacy-groups-urge-Zoom-to-abandon-emotion-AI-research (accessed on 6 September 2024).

- Stark, L.; Hoey, J. The ethics of emotion in Artificial Intelligence systems. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, New York, NY, USA, 3–10 March 2021; FAccT ’21. pp. 782–793.

- Hernandez, J.; Lovejoy, J.; McDuff, D.; Suh, J.; O’Brien, T.; Sethumadhavan, A.; Greene, G.; Picard, R.; Czerwinski, M. Guidelines for Assessing and Minimizing Risks of Emotion Recognition Applications. In Proceedings of the 2021 9th International Conference on Affective Computing and Intelligent Interaction (ACII), Nara, Japan, 28 September–1 October 2021; pp. 1–8.

- Fernández-Dols, J.M.; Russell, J.A. The Science of Facial Expression; Oxford Series in Social Cognition and Social Neuroscience; Oxford University Press: Oxford, UK, 2017.

- Dixon, T. From Passions to Emotions: The Creation of a Secular Psychological Category; Cambridge University Press: Cambridge, UK, 2003.

- Lin, W.; Li, C. Review of studies on emotion recognition and judgment based on physiological signals. Appl. Sci. 2023, 13, 2573.

- Roshdy, A.; Karar, A.; Kork, S.A.; Beyrouthy, T.; Nait-ali, A. Advancements in EEG Emotion Recognition: Leveraging multi-modal database integration. Appl. Sci. 2024, 14, 2487.

- James, W. What is an emotion? Mind 1884, 9, 188–205.

- Plutchik, R. The Psychology and Biology of Emotion; HarperCollins College Publishers: New York, NY, USA, 1994.

- Plutchik, R. The nature of emotions: Human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. Am. Sci. 2001, 89, 344–350.

- Kleinginna, P.R.; Kleinginna, A.M. A categorized list of emotion definitions, with suggestions for a consensual definition. Motiv. Emot. 1981, 5, 345–379.

- Skinner, B.F. Science and Human Behavior; Macmillan: New York, NY, USA, 1953.

- Oatley, K.; Johnson-Laird, P.N. Cognitive approaches to emotions. Trends Cogn. Sci. 2014, 18, 134–140.

- Radò, S. Adaptational Psychodynamics: Motivation and Control; Science House: New York, NY, USA, 1969.

- Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2019, 10, 18–31.

- Tomkins, S.S. Affect Imagery Consciousness: The Complete Edition; Springer Publisher: Berlin/Heidelberg, Germany, 2008.

- Barrett, L.F. Discrete emotions or dimensions? The role of valence focus and arousal focus. Cogn. Emot. 1998, 12, 579–599.

- Wundt, W.M. An Introduction to Psychology; G. Allen, Limited: London, UK, 1912.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161.

- Russell, J.A.; Mehrabian, A. Evidence for a three-factor theory of emotions. J. Res. Personal. 1977, 11, 273–294.

- Mehrabian, A. Pleasure-arousal-dominance: A general framework for describing and measuring individual differences in temperament. Curr. Psychol. 1996, 14, 261–292.

- Ekman, P.; Rosenberg, E.L. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression Using the Facial Action Coding System (FACS); Oxford University Press: Oxford, UK, 1997.

- The European Parliament and the Council of the European Union. Artificial Intelligence Act. 2024. Available online: https://www.europarl.europa.eu/doceo/document/TA-9-2024-0138-FNL-COR01_EN.pdf (accessed on 7 September 2024).

- Stahl, B.C. Ethical Issues of AI. In Artificial Intelligence for a Better Future: An Ecosystem Perspective on the Ethics of AI and Emerging Digital Technologies; Springer International Publishing: Cham, Switzerland, 2021; pp. 35–53.

- Cabitza, F.; Campagner, A.; Albano, D.; Aliprandi, A.; Bruno, A.; Chianca, V.; Corazza, A.; Di Pietto, F.; Gambino, A.; Gitto, S.; et al. The elephant in the machine: Proposing a new metric of data reliability and its application to a medical case to assess classification reliability. Appl. Sci. 2020, 10, 4014.

- Barrett, L.F.; Westlin, C. Chapter 2—Navigating the science of emotion. In Emotion Measurement; Meiselman, H.L., Ed.; Woodhead Publishing: Sawston, UK, 2021; pp. 39–84.

- Zepf, S.; Hernandez, J.; Schmitt, A.; Minker, W.; Picard, R.W. Driver emotion recognition for intelligent vehicles: A survey. ACM Comput. Surv. 2020, 53, 1–30.

- Awatramani, J.; Hasteer, N. Facial expression recognition using deep learning for children with autism spectrum disorder. In Proceedings of the 2020 IEEE 5th International Conference on Computing Communication and Automation (ICCCA), Greater Noida, India, 30–31 October 2020; pp. 35–39.

- Ullah, R.; Hayat, H.; Siddiqui, A.A.; Siddiqui, U.A.; Khan, J.; Ullah, F.; Hassan, S.; Hasan, L.; Albattah, W.; Islam, M.; et al. A real-time framework for human face detection and recognition in CCTV images. Math. Probl. Eng. 2022, 2022.

- Vardarlier, P.; Zafer, C. Use of Artificial Intelligence as business strategy in recruitment process and social perspective. In Digital Business Strategies in Blockchain Ecosystems: Transformational Design and Future of Global Business; Springer: Cham, Switzerland, 2020; pp. 355–373.

- Chowdary, M.K.; Nguyen, T.N.; Hemanth, D.J. Deep Learning-based facial emotion recognition for human–computer interaction applications. Neural Comput. Appl. 2023, 35, 23311–23328.

- Huang, C.W.; Wu, B.C.; Nguyen, P.A.; Wang, H.H.; Kao, C.C.; Lee, P.C.; Rahmanti, A.R.; Hsu, J.C.; Yang, H.C.; Li, Y.C.J. Emotion recognition in doctor-patient interactions from real-world clinical video database: Initial development of artificial empathy. Comput. Methods Programs Biomed. 2023, 233, 107480.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional Neural Networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Lucey, P.; Cohn, J.F.; Kanade, T.; Saragih, J.; Ambadar, Z.; Matthews, I. The extended Cohn-Kanade dataset (CK+): A complete dataset for action unit and emotion-specified expression. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 94–101.

- Pavez, R.; Diaz, J.; Arango-Lopez, J.; Ahumada, D.; Mendez-Sandoval, C.; Moreira, F. Emo-mirror: A proposal to support emotion recognition in children with autism spectrum disorders. Neural Comput. Appl. 2023, 35, 7913–7924.

- Silva, V.; Soares, F.; Esteves, J.S.; Santos, C.P.; Pereira, A.P. Fostering emotion recognition in children with autism spectrum disorder. Multimodal Technol. Interact. 2021, 5, 57.

- Goodfellow, I.J.; Erhan, D.; Carrier, P.L.; Courville, A.; Mirza, M.; Hamner, B.; Cukierski, W.; Tang, Y.; Thaler, D.; Lee, D.H.; et al. Challenges in representation learning: A report on three Machine Learning contests. In Proceedings of the Neural Information Processing: 20th International Conference, ICONIP 2013, Daegu, Republic of Korea, 3–7 November 2013; Proceedings, Part III 20. Springer: Berlin/Heidelberg, Germany, 2013; pp. 117–124.

- McStay, A. Emotional AI: The Rise of Empathic Media; Sage Publications Ltd.: Melbourne, VIC, Australia, 2018.

- Katirai, A. Ethical considerations in emotion recognition technologies: A review of the literature. AI Ethics 2023, 1–22.

- Podoletz, L. We have to talk about emotional AI and crime. AI Soc. 2023, 38, 1067–1082.

- Spiroiu, F. The impact of beliefs concerning deception on perceptions of nonverbal Behavior: Implications for neuro-linguistic programming-based lie detection. J. Police Crim. Psychol. 2018, 33, 244–256.

- Finlay, A. Global Information Society Watch 2019: Artificial Intelligence: Human Rights, Social Justice and Development; Association for Progressive Communications (APC): Johannesburg, South Africa, 2019.

- Qiang, X. President Xi’s surveillance state. J. Democr. 2019, 30, 53.

- Human Rights Watch. China’s Algorithms of Repression: Reverse Engineering a Xinjiang Police Mass Surveillance App. 2019. Available online: https://www.hrw.org/report/2019/05/01/chinas-algorithms-repression/reverse-engineering-xinjiang-police-mass (accessed on 28 May 2024).

- Zorloni, L. iBorderCtrl: La “Macchina Della Verità” che l’Europa Userà ai Confini. 2023. Available online: https://www.wired.it/article/iborderctrl-macchina-verita-europa/ (accessed on 8 September 2024).

- Carrer, L. Storia di un Ordinario Flop del Riconoscimento Facciale. 2024. Available online: https://www.wired.it/article/riconoscimento-facciale-fallimento-arresto-stadio/ (accessed on 5 July 2024).

- Landowska, A. Uncertainty in emotion recognition. J. Inf. Commun. Ethics Soc. 2019, 17, 273–291.

- Canal, F.Z.; Müller, T.R.; Matias, J.C.; Scotton, G.G.; de Sa Junior, A.R.; Pozzebon, E.; Sobieranski, A.C. A survey on facial emotion recognition techniques: A state-of-the-art literature review. Inf. Sci. 2022, 582, 593–617.

- Ko, B.C. A brief review of facial emotion recognition based on visual information. Sensors 2018, 18, 401.

- Naga, P.; Marri, S.D.; Borreo, R. Facial emotion recognition methods, datasets and technologies: A literature survey. Mater. Today Proc. 2023, 80, 2824–2828.

- Mohanta, S.R.; Veer, K. Trends and challenges of image analysis in facial emotion recognition: A review. Netw. Model. Anal. Health Inform. Bioinform. 2022, 11, 35.

- Jones, M.; Viola, P. Fast Multi-View Face Detection; Mitsubishi Electric Research Lab TR-2003-96: Cambridge, MA, USA, 2003; Volume 3, p. 2.

- Soo, S. Object Detection Using Haar-Cascade Classifier; Institute of Computer Science, University of Tartu: Tartu, Estonia, 2014; Volume 2, pp. 1–12.

- Kumar, K.S.; Prasad, S.; Semwal, V.B.; Tripathi, R.C. Real time face recognition using AdaBoost improved fast PCA algorithm. Int. J. Artif. Intell. Appl. 2011, 2, 45–58.

- Rajesh, K.; Naveenkumar, M. A robust method for face recognition and face emotion detection system using support vector machines. In Proceedings of the 2016 International Conference on Electrical, Electronics, Communication, Computer and Optimization Techniques (ICEECCOT), Mysuru, India, 9–10 December 2016; pp. 1–5.

- Wang, Y.; Li, Y.; Song, Y.; Rong, X. Facial expression recognition based on random forest and convolutional Neural Network. Information 2019, 10, 375.

- Li, X.; Ji, Q. Active affective state detection and user assistance with dynamic Bayesian Networks. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2004, 35, 93–105.

- Mollahosseini, A.; Chan, D.; Mahoor, M.H. Going Deeper in facial expression recognition using Deep Neural Networks. In Proceedings of the 2016 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Placid, NY, USA, 7–10 March 2016; pp. 1–10.

- Li, S.; Deng, W. Deep facial expression recognition: A survey. IEEE Trans. Affect. Comput. 2020, 13, 1195–1215.

- Parkhi, O.; Vedaldi, A.; Zisserman, A. Deep face recognition. In Proceedings of the British Machine Vision Conference 2015, British Machine Vision Association, Swansea, UK, 7–10 September 2015.

- Cabitza, F.; Ciucci, D.; Rasoini, R. A giant with feet of clay: On the validity of the data that feed Machine Learning in medicine. In Proceedings of the Organizing for the Digital World; Cabitza, F., Batini, C., Magni, M., Eds.; Springer: Cham, Switzerland, 2019; pp. 121–136.

- Cabitza, F.; Campagner, A.; Sconfienza, L.M. As if sand were stone. New concepts and metrics to probe the ground on which to build trustable AI. BMC Med. Inform. Decis. Mak. 2020, 20, 1–21.

- Li, S.; Deng, W. A deeper look at facial expression dataset bias. IEEE Trans. Affect. Comput. 2020, 13, 881–893.

- Yang, T.; Yang, Z.; Xu, G.; Gao, D.; Zhang, Z.; Wang, H.; Liu, S.; Han, L.; Zhu, Z.; Tian, Y.; et al. Tsinghua facial expression database—A database of facial expressions in Chinese young and older women and men: Development and validation. PLoS ONE 2020, 15, e0231304.

- Dalrymple, K.A.; Gomez, J.; Duchaine, B. The Dartmouth Database of Children’s Faces: Acquisition and validation of a new face stimulus set. PLoS ONE 2013, 8, e79131.

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- LoBue, V.; Thrasher, C. The Child Affective Facial Expression (CAFE) set: Validity and reliability from untrained adults. Front. Psychol. 2015, 5, 127200.

- Wang, S.; Liu, Z.; Lv, S.; Lv, Y.; Wu, G.; Peng, P.; Chen, F.; Wang, X. A natural visible and infrared facial expression database for expression recognition and emotion inference. IEEE Trans. Multimed. 2010, 12, 682–691.

- Meuwissen, A.S.; Anderson, J.E.; Zelazo, P.D. The creation and validation of the developmental emotional faces stimulus set. Behav. Res. Methods 2017, 49, 960–966.

- Mavadati, S.M.; Mahoor, M.H.; Bartlett, K.; Trinh, P.; Cohn, J.F. DISFA: A Spontaneous Facial Action Intensity Database. IEEE Trans. Affect. Comput. 2013, 4, 151–160.

- Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.; Hawk, S.T.; Van Knippenberg, A. Presentation and validation of the Radboud Faces Database. Cogn. Emot. 2010, 24, 1377–1388.

- Vemulapalli, R.; Agarwala, A. A compact embedding for facial expression similarity. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5683–5692.

- Benitez-Quiroz, C.F.; Srinivasan, R.; Feng, Q.; Wang, Y.; Martinez, A.M. Emotionet challenge: Recognition of facial expressions of emotion in the wild. arXiv 2017, arXiv:1703.01210.

- Kosti, R.; Alvarez, J.M.; Recasens, A.; Lapedriza, A. Emotion Recognition in Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Barros, P.; Churamani, N.; Lakomkin, E.; Siqueira, H.; Sutherland, A.; Wermter, S. The OMG-emotion behavior dataset. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–7.

- Nojavanasghari, B.; Baltrušaitis, T.; Hughes, C.E.; Morency, L.P. Emoreact: A multimodal approach and dataset for recognizing emotional responses in children. In Proceedings of the 18th ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; pp. 137–144.

- Zafeiriou, S.; Kollias, D.; Nicolaou, M.A.; Papaioannou, A.; Zhao, G.; Kotsia, I. Aff-Wild: Valence and arousal ‘in-the-wild’ challenge. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1980–1987.

- Kollias, D.; Schulc, A.; Hajiyev, E.; Zafeiriou, S. Analysing affective behavior in the first ABAW 2020 competition. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition (FG 2020), Buenos Aires, Argentina, 16–20 November 2020; pp. 637–643.

- Gendron, M.; Barrett, L.F. Facing the past: A history of the face in psychological research on emotion perception. In The Science of Facial Expression; Oxford Series in Social Cognition and Social Neuroscience; Oxford University Press: New York, NY, USA, 2017; pp. 15–36.

- McStay, A.; Pavliscak, P. Emotional Artificial Intelligence: Guidelines for Ethical Use. 2019. Available online: https://emotionalai.org/outputs (accessed on 7 August 2024).

- Crawford, K. The Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence; Yale University Press: New Haven, CT, USA, 2021.

- Crawford, K. Time to regulate AI that interprets human emotions. Nature 2021, 592, 167.

- Keltner, D.; Ekman, P. The Science of “Inside Out”. 2015. Available online: https://www.paulekman.com/blog/the-science-of-inside-out/ (accessed on 7 September 2024).

- Matsumoto, D. American-Japanese cultural differences in the recognition of universal facial expressions. J. Cross-Cult. Psychol. 1992, 23, 72–84.

- Matsumoto, D.; Yoo, S.H.; Nakagawa, S. Culture, emotion regulation, and adjustment. J. Personal. Soc. Psychol. 2008, 94, 925.

- Matsumoto, D. Cultural similarities and differences in display rules. Motiv. Emot. 1990, 14, 195–214.

- Butler, E.A.; Lee, T.L.; Gross, J.J. Emotion regulation and culture: Are the social consequences of emotion suppression culture-specific? Emotion 2007, 7, 30.

- Barrett, L.F. The theory of constructed emotion: An active inference account of interoception and categorization. Soc. Cogn. Affect. Neurosci. 2016, 12, 1–23.

- Floridi, L. Etica dell’Intelligenza Artificiale: Sviluppi, Opportunità, Sfide; Raffaello Cortina Editore: Milano, Italy, 2022.

- Booth, B.M.; Hickman, L.; Subburaj, S.K.; Tay, L.; Woo, S.E.; D’Mello, S.K. Integrating psychometrics and computing perspectives on bias and fairness in Affective Computing: A case study of automated video interviews. IEEE Signal Process. Mag. 2021, 38, 84–95.

- Reyes, B.N.; Segal, S.C.; Moulson, M.C. An investigation of the effect of race-based social categorization on adults’ recognition of emotion. PLoS ONE 2018, 13, e0192418.

- Hutchings, P.B.; Haddock, G. Look Black in anger: The role of implicit prejudice in the categorization and perceived emotional intensity of racially ambiguous faces. J. Exp. Soc. Psychol. 2008, 44, 1418–1420.

- Kim, E.; Bryant, D.; Srikanth, D.; Howard, A. Age bias in emotion detection: An analysis of facial emotion recognition performance on young, middle-aged, and older adults. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, Virtually, 19–21 May 2021; pp. 638–644.

- Xu, T.; White, J.; Kalkan, S.; Gunes, H. Investigating bias and fairness in facial expression recognition. In Proceedings of the Computer Vision–ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; Proceedings, Part VI; Springer: Berlin/Heidelberg, Germany, 2020; pp. 506–523.

- Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; pp. 77–91.

- Drozdowski, P.; Rathgeb, C.; Dantcheva, A.; Damer, N.; Busch, C. Demographic bias in biometrics: A survey on an emerging challenge. IEEE Trans. Technol. Soc. 2020, 1, 89–103.

- Stark, L. The emotional context of information privacy. Inf. Soc. 2016, 32, 14–27.

- McStay, A. Emotional AI, soft biometrics and the surveillance of emotional life: An unusual consensus on privacy. Big Data Soc. 2020, 7, 205395172090438.

- Sánchez-Monedero, J.; Dencik, L. The politics of deceptive borders: ‘Biomarkers of deceit’ and the case of iBorderCtrl. Inf. Commun. Soc. 2022, 25, 413–430.

- Kalantarian, H.; Jedoui, K.; Washington, P.; Tariq, Q.; Dunlap, K.; Schwartz, J.; Wall, D.P. Labeling images with facial emotion and the potential for pediatric healthcare. Artif. Intell. Med. 2019, 98, 77–86.

- Nagy, J. Autism and the making of emotion AI: Disability as resource for surveillance capitalism. New Media Soc. 2024, 26, 14614448221109550.

- European Parliament. EU AI Act: First Regulation on Artificial Intelligence. 2023. Available online: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 1 September 2024).

- NIST. Artificial Intelligence Risk Management Framework (AI RMF 1.0). 2023. Available online: https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.600-1.pdf (accessed on 7 August 2024).

- European Parliament. Amendments Adopted by the European Parliament on 14 June 2023 on the Proposal for a Regulation of the European Parliament and of the Council on Laying down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act) and Amending Certain Union Legislative Acts. 2023. Available online: https://www.europarl.europa.eu/doceo/document/TA-9-2023-0236_EN.pdf (accessed on 6 August 2024).

- National Research Council. The Polygraph and Lie Detection; The National Academies Press: Washington, DC, USA, 2003.

- Hayes, A.F.; Krippendorff, K. Answering the call for a standard reliability measure for coding data. Commun. Methods Meas. 2007, 1, 77–89.

- Chen, Y.; Joo, J. Understanding and Mitigating Annotation Bias in Facial Expression Recognition. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 14960–14971.

- American Psychological Association. The Truth about Lie Detectors (Aka Polygraph Tests). 2004. Available online: https://www.apa.org/topics/cognitive-neuroscience/polygraph (accessed on 6 August 2024).

- Leahu, L.; Schwenk, S.; Sengers, P. Subjective objectivity: Negotiating emotional meaning. In Proceedings of the 7th ACM Conference on Designing Interactive Systems, Cape Town, South Africa, 25–27 February 2008; pp. 425–434.

- Faigman, D.L.; Fienberg, S.E.; Stern, P.C. The limits of the polygraph. Issues Sci. Technol. 2003, 20, 40–46.

- Nortje, A.; Tredoux, C. How good are we at detecting deception? A review of current techniques and theories. S. Afr. J. Psychol. 2019, 49, 491–504.

- U.S. Supreme Court. United States v. Scheffer, 523 U.S. 303. Opinions and Dissents. 1998. Available online: https://supreme.justia.com/cases/federal/us/523/303/ (accessed on 6 August 2024).

- Krippendorff, K. Content Analysis: An Introduction to Its Methodology; Sage Publications: Thousand Oaks, CA, USA, 2018.

- Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450.

- The European Parliament and the Council of the European Union. General Data Protection Regulation. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC. 2016. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 26 August 2024).

| Dataset | Basic Emotion Labels |
|---|---|
| DDCF [95] | Neutral, Content, Sad, Angry, Afraid, Happy, Surprised, Disgusted |
| CAFE [97] | Sadness, Happiness, Surprise, Anger, Disgust, Fear, Neutral |
| NVIE [98] | Happiness, Disgust, Fear, Surprise, Sadness, Anger |
| TSINGHUA [94] | Neutral, Happiness, Anger, Disgust, Surprise, Fear, Content, Sadness |
| DEFSS [99] | Happy, Sad, Fearful, Angry, Neutral, None of the Above |

| Dataset | Basic Emotion Labels |
|---|---|
| DISFA [100] | Action units with intensity from 0 to 5 |
| Radboud Faces Dataset [101] | Labels: Neutral, Anger, Sadness, Fear, Disgust, Surprise, Happiness, and Contempt. Dimensions: Clarity, Intensity, Genuineness, and Valence |

| Dataset | Basic Emotion Labels |
|---|---|
| FER-2013 [67] | Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral |
| Google-FEC [102] | Amusement, Anger, Awe, Boredom, Concentration, Confusion, Contemplation, Contempt, Contentment, Desire, Disappointment, Disgust, Distress, Doubt, Ecstasy, Elation, Embarrassment, Fear, Interest, Love, Neutral, Pain, Pride, Realization, Relief, Sadness, Shame, Surprise, Sympathy, Triumph |
| EmotioNet [103] | Happy, Angry, Sad, Surprised, Fearful, Disgusted, Appalled, Awed, Angrily disgusted, Angrily surprised, Fearfully angry, Fearfully surprised, Happily disgusted, Happily surprised, Sadly angry, Sadly disgusted |

| Dataset | Basic Emotion Labels |
|---|---|
| AffectNet [41] | Labels: Neutral, Happy, Sad, Surprise, Fear, Anger, Disgust, Contempt, None, Uncertain, Non-face. Dimensions: Valence, Arousal |
| EMOTIC [104] | Labels: Affection, Anger, Annoyance, Anticipation, Aversion, Confidence, Disapproval, Disconnection, Disquietment, Doubt/Confusion, Embarrassment, Engagement, Esteem, Excitement, Fatigue, Fear, Happiness, Pain, Peace, Pleasure, Sadness, Sensitivity, Suffering, Surprise, Sympathy, Yearning. Dimensions: Valence, Arousal, Dominance |
| OMG-Emotion [105] | Labels: Surprise, Disgust, Happiness, Fear, Anger, Sadness. Dimensions: Valence, Arousal |
| EmoReact [106] | Labels: Happiness, Surprise, Disgust, Fear, Curiosity, Uncertainty, Excitement, Frustration, Exploration, Confusion, Anxiety, Attentiveness, Anger, Sadness, Embarrassment, Valence, Neutral. Dimensions: All emotions except valence are annotated on a 1–4 Likert scale |
| Aff-Wild [107] | Dimensions: Valence, Arousal |
| Aff-Wild2 [108] | Labels: Neutral, Anger, Disgust, Fear, Happiness, Sadness, Surprise. Partly annotated with 8 Action Units. Dimensions: Valence, Arousal |

| Area of Criticism | |
|---|---|
| Psychological Foundations | The psychological foundations on which FER technology is based are not uniformly accepted and suffer from theoretical ambiguities. Emotions are not considered measurable “entities” and do not stand in a univocal relation with expressions. |
| Ethical Implications | Emotions can be considered soft biometric data, feeding surveillance capitalism. Their employment in sensitive scenarios can be potentially harmful to essential human rights. |
| Reliability Issues | FER ground truth is considered unreliable and datasets may replicate annotation biases. Human beings do not agree sufficiently in the emotion annotation task. |
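
The annotator disagreement summarized under Reliability Issues is commonly quantified with a chance-corrected agreement coefficient such as Krippendorff's alpha, the measure discussed by Hayes and Krippendorff in the reference list. The following is a minimal sketch for nominal emotion labels, not the procedure used by any of the reviewed studies. The function name `krippendorff_alpha_nominal` and the toy annotations are illustrative assumptions.

```python
import math
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal labels.

    `units` is a list of lists: each inner list holds the labels that
    different annotators gave to one image (None marks a missing rating).
    """
    # Coincidence matrix: every ordered pair of labels from two different
    # annotators of the same image contributes 1 / (m - 1), where m is the
    # number of ratings available for that image.
    coincidences = Counter()
    for ratings in units:
        labels = [r for r in ratings if r is not None]
        m = len(labels)
        if m < 2:
            continue  # a single rating carries no agreement information
        for a, b in permutations(labels, 2):
            coincidences[(a, b)] += 1.0 / (m - 1)

    # Marginal totals per label and overall number of pairable ratings.
    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n < 2:
        return math.nan  # nothing to compare

    # Observed and expected disagreement (both scaled by the same factor n).
    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n - 1)
    if expected == 0:
        return math.nan  # only one label was ever used; alpha is undefined
    return 1.0 - observed / expected


if __name__ == "__main__":
    # Hypothetical toy data: four images, two annotators each.
    toy = [
        ["happy", "happy"],
        ["happy", "sad"],
        ["sad", "sad"],
        ["sad", "happy"],
    ]
    print(f"alpha = {krippendorff_alpha_nominal(toy):.3f}")  # alpha = 0.125
```

In this toy case the annotators agree on half of the images, which is close to what chance alone would produce, so alpha stays near zero. Values near 1 indicate agreement well beyond chance.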

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mattioli, M.; Cabitza, F. Not in My Face: Challenges and Ethical Considerations in Automatic Face Emotion Recognition Technology. Mach. Learn. Knowl. Extr. 2024, 6, 2201-2231. https://doi.org/10.3390/make6040109
