Winds and Gusts during the Thomas Fire

Abstract

1. Introduction

2. Experimental Design

3. Survey of Observations and Verification of Model Forecasts

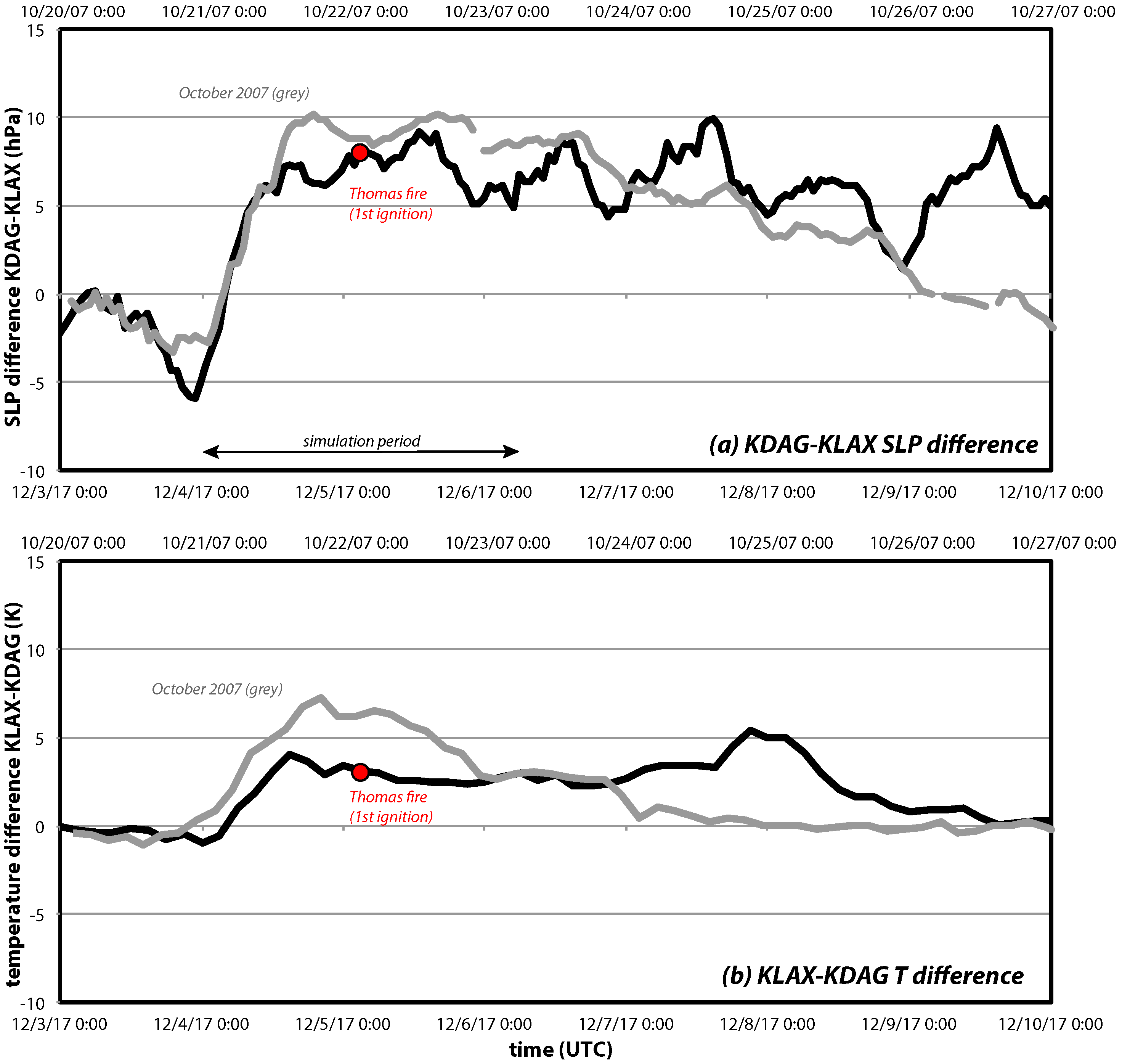

3.1. Wind and Gust Observations Near the Thomas Fire Ignition Sites

3.2. Evaluation of Observations

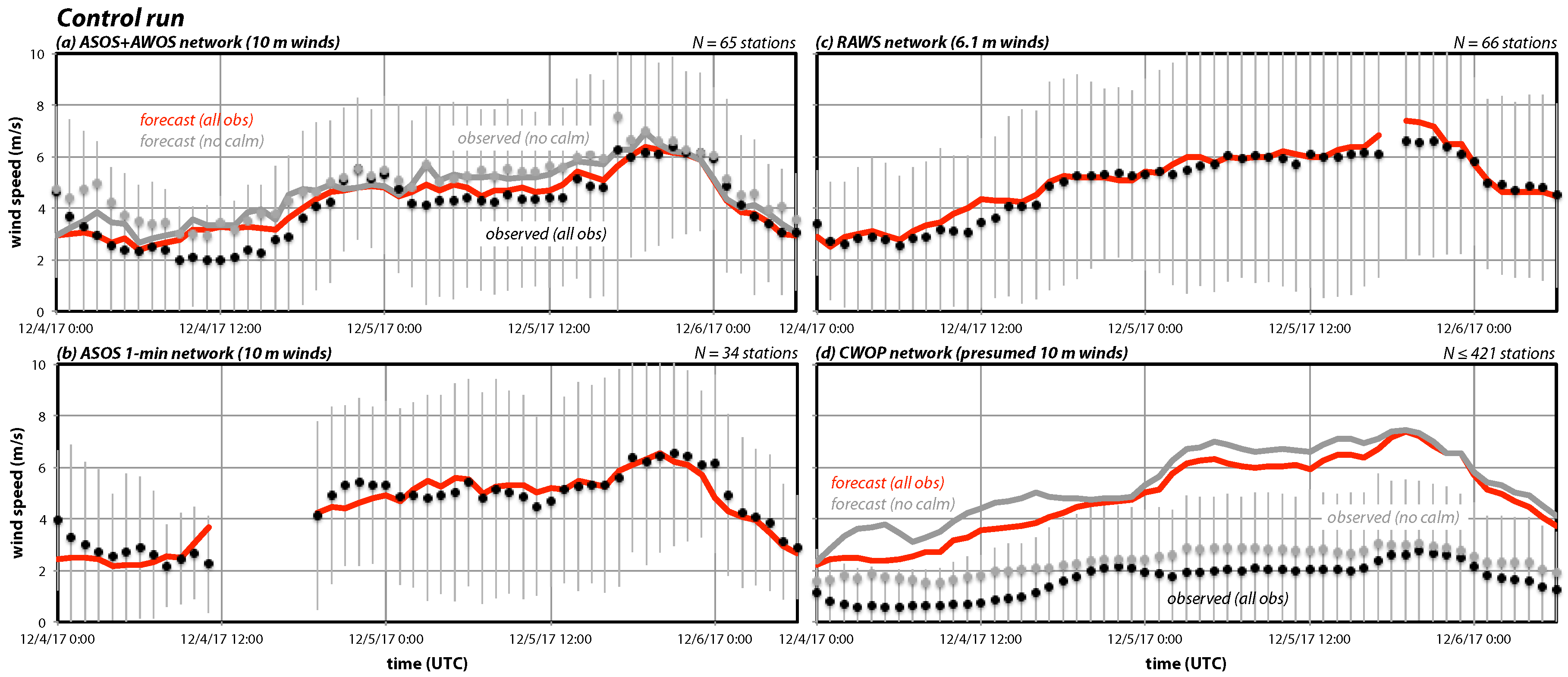

3.3. Control Simulation and Forecast Verification

4. Model Predicted Winds at and Near the Fire Sites

4.1. Forecasts for the Ignition Sites and Nearby Stations

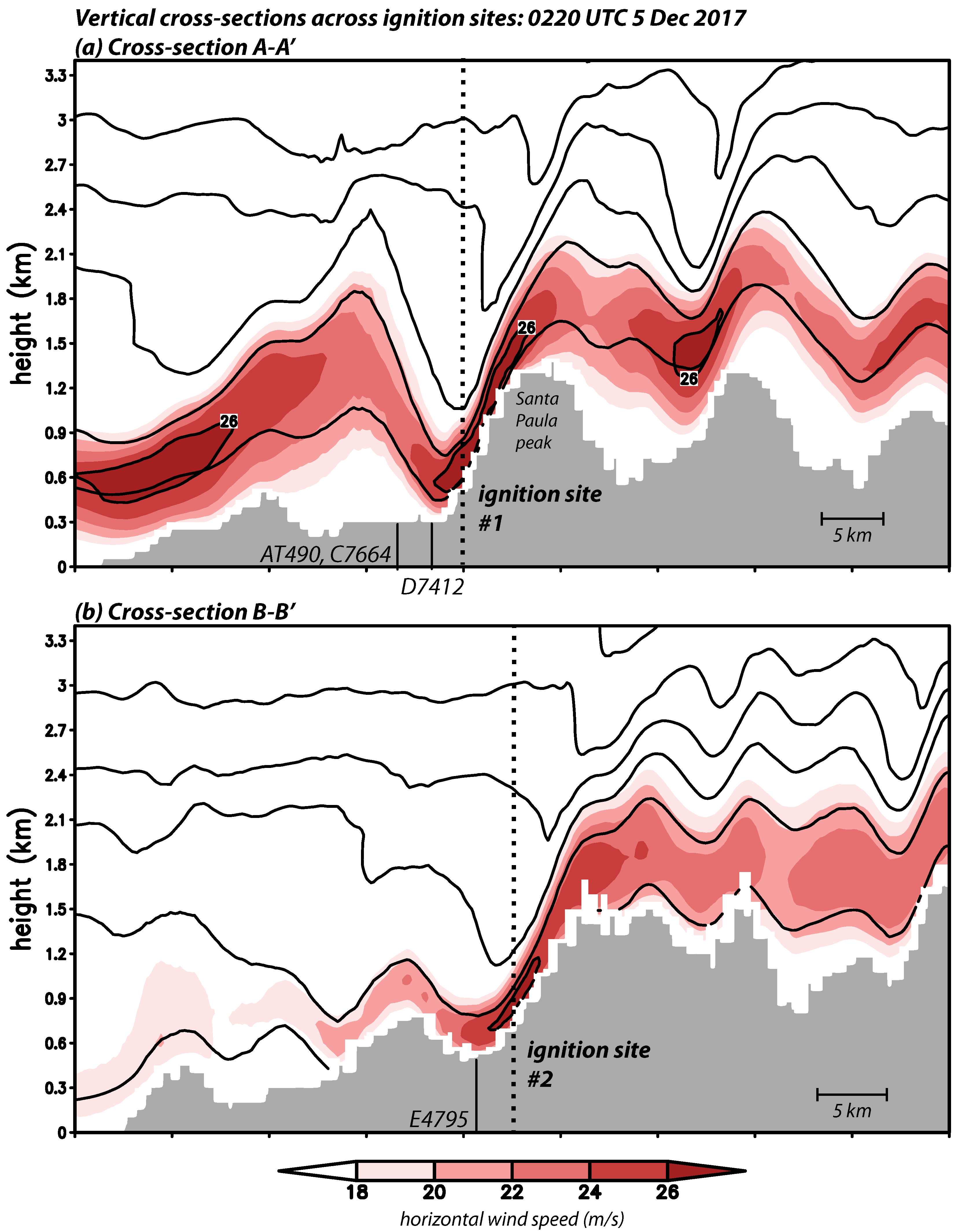

4.2. Vertical Cross-Sections Past the Ignition Points

4.3. Sensitivity Tests and Near-Surface Winds above the First Ignition Site

5. Discussion

6. Conclusions

- The Thomas Fire ignition sites, especially the primary origin, were likely subjected to strong but highly localized near-surface winds. This is because the downslope flow was elevated above the surface both farther upwind and farther downwind, and the downwind elevation took the form of a hydraulic jump. As a result, even reliable nearby surface stations may have failed to capture the true magnitude of the winds and gusts at the ignition sites. Properly verified numerical model simulations therefore remain a viable tool for estimating flow conditions at and above the fire sites.

- The numerical model provided skillful reconstructions of the network-averaged sustained winds for the ASOS, RAWS, and SDG&E surface stations. At the same time, it severely overpredicted winds relative to the Citizen Weather Observer Program (CWOP) network, even after calm reports were excluded and quality control filtering was applied. The validity of CWOP wind reports as a group was therefore questioned, and we recommended that these stations be treated with suspicion and excluded from model verification.

- The modeling results were largely insensitive to random perturbations and other alterations. The exception was changing the land surface model, which determines surface roughness. Using a crude gust proxy, the simulations suggested that gusts reached at least 29 ± 1.4 m/s (65 ± 3.1 mph) at the first origin site at the presumed ignition time, with higher speeds predicted later.

- However, this gust estimate should be treated as a lower bound for two reasons. First, we provided evidence that well-calibrated models tend to consistently underpredict wind speeds at windier locations. Second, the gust proxy did not attempt to account for additional momentum production by turbulence. We suspect that the instantaneous wind speeds at the ignition sites were substantially higher when the fires started.
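
The conclusions quote the gust estimate in both m/s and mph but do not specify the form of the gust proxy. The sketch below checks that unit conversion using the exact definition 1 mph = 0.44704 m/s. As an assumption, it pairs this with a generic gust-factor (GF) proxy, gust ≈ GF × sustained wind, which may differ from the proxy used in the simulations. The function names and the example GF value are hypothetical.

```python
MPS_PER_MPH = 0.44704  # exact by definition: 1 mph = 0.44704 m/s


def mps_to_mph(speed_mps: float) -> float:
    """Convert a wind speed from m/s to mph."""
    return speed_mps / MPS_PER_MPH


def gust_from_gust_factor(sustained_mps: float, gust_factor: float) -> float:
    """Hypothetical gust proxy: scale a sustained wind by a gust factor (GF).

    A GF below 1 would make the gust weaker than the sustained wind,
    so it is rejected as physically inconsistent.
    """
    if gust_factor < 1.0:
        raise ValueError("gust factor must be >= 1")
    if sustained_mps < 0.0:
        raise ValueError("wind speed must be non-negative")
    return gust_factor * sustained_mps


if __name__ == "__main__":
    # Reproduce the conversion quoted for the first origin site.
    print(f"{mps_to_mph(29.0):.0f} ± {mps_to_mph(1.4):.1f} mph")  # 65 ± 3.1 mph
    # Illustrative only: GF = 1.45 is an arbitrary example value, not from the study.
    print(f"{gust_from_gust_factor(20.0, 1.45):.1f} m/s")  # 29.0 m/s
```

Real gust factors vary with averaging period, measurement height, and stability, so a single constant GF is a simplification.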

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACM2 | Asymmetric Convection Model version 2 PBL scheme |
| AGL | Above ground level |
| ARW | Advanced Research WRF core |
| ASOS | Automated Surface Observing System |
| AWOS | Automated Weather Observing System |
| CWOP | Citizen Weather Observer Program |
| GF | Gust factor |
| GFS | Global Forecast System |
| HRRR | High-Resolution Rapid Refresh |
| MADIS | Meteorological Assimilation Data Ingest System |
| MET | Model Evaluation Tools |
| METAR | Meteorological Terminal Aviation Routine Weather Report |
| MODIS | Moderate Resolution Imaging Spectroradiometer |
| MYNN2 | Mellor-Yamada-Nakanishi-Niino level 2 |
| NCAR | National Center for Atmospheric Research |
| NCEI | National Centers for Environmental Information |
| NAM | North American Mesoscale |
| NARR | North American Regional Reanalysis |
| PBL | Planetary boundary layer |
| QC | Quality control |
| RAWS | Remote Automated Weather Stations |
| RRTMG | Rapid Radiative Transfer Model for General Circulation Models |
| SDG&E | San Diego Gas and Electric |
| SKEBS | Stochastic Kinetic Energy Backscatter Scheme |
| WMO | World Meteorological Organization |
| WRF | Weather Research and Forecasting |
| YSU | Yonsei University |

| Experiment | Domain | WRF Model Version | Initialization Source | Model Physics |
|---|---|---|---|---|
| Control and perturbed runs | 54 km → 667 m (5 domains) | 3.7.1 | NAM 12 km, 0000 UTC 4 December 2017 | PX LSM, ACM2 PBL |
| Physics and version runs | | | | Unmodified Noah LSM, YSU PBL |
| | | | | Noah z0mod LSM; YSU, MYJ, and Shin-Hong PBLs |
| | | 3.9.1.1 | | PX LSM, ACM2 PBL |
| | | | | Noah z0mod LSM, MYNN2 PBL |
| Initialization runs | | 3.7.1 | GFS 0.25°, 0000 UTC 4 December 2017 | PX LSM, ACM2 PBL |
| | | | NARR 32 km reanalysis | |
| | | | NAM 12 km, 1200 UTC 4 December 2017 | |
| | 18 km → 667 m (4 domains) | 3.9.1.1 | HRRR 3 km hourly analyses | |
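
The experiment table lists only the outermost and innermost grid spacings. The sketch below assumes that each nest refines its parent by a constant factor of 3. That ratio is an inference, not stated in the table; in WRF it would correspond to the `parent_grid_ratio` namelist entry. Under this assumption the intermediate spacings follow directly, and 54 km / 3⁴ ≈ 667 m is consistent with both the five-domain and four-domain configurations. The function names are hypothetical.

```python
def nested_spacings_km(outer_dx_km: float, n_domains: int, ratio: int = 3) -> list[float]:
    """Grid spacing of each domain, outermost first, for a fixed nesting ratio."""
    if n_domains < 1 or ratio < 2:
        raise ValueError("need at least one domain and a ratio of 2 or more")
    return [outer_dx_km / ratio**level for level in range(n_domains)]


def implied_ratio(outer_dx_km: float, inner_dx_km: float, n_domains: int) -> float:
    """Constant parent-to-child ratio implied by the outer and inner spacings."""
    if n_domains < 2:
        raise ValueError("need at least two domains to infer a ratio")
    return (outer_dx_km / inner_dx_km) ** (1.0 / (n_domains - 1))


if __name__ == "__main__":
    # The two domain configurations listed in the experiment table.
    for outer, n in [(54.0, 5), (18.0, 4)]:
        spacings = nested_spacings_km(outer, n)
        print([f"{dx * 1000:.0f} m" for dx in spacings])
    # Ratio implied by "54 km -> 667 m (5 domains)"; it is very close to 3.
    print(round(implied_ratio(54.0, 0.667, 5), 3))
```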

| Network | Source (Format) | Anemometer Height AGL | Stations Available/Used (if Different) | Comparisons Available | % Calm Observations | % Calm Forecasts |
|---|---|---|---|---|---|---|
| ASOS + AWOS | MADIS (METAR) | 10 m | 65 | 3346 | 19 | 5 |
| ASOS + AWOS (no calm observations) | MADIS (METAR) | 10 m | 65/54 | 2738 | 0 | 3 |
| ASOS only | MADIS (METAR) | 10 m | 34 | 1466 | 23 | 6 |
| ASOS 1-min | NCEI | 10 m | 34 | 1498 | 1 | 5 |
| RAWS | MADIS | 6.1 m | 78/66 | 4213 | 3 | 3 |
| SDG&E | MADIS | 6.1 m | 160 | 8727 | 1 | 8 |
| CWOP | MADIS | 10 m (presumed) | 421/415 | 21922 | 39 | 6 |
| CWOP (QC3 & no calm) | MADIS | 10 m (presumed) | 403/368 | 14479 | 0 | 3 |
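
The calm columns matter because a calm report paired with a nonzero forecast counts as an overprediction. The sketch below is a minimal illustration, not the verification procedure used in the study. It makes two assumptions: an observation counts as calm when its reported speed is at or below a threshold (0 m/s by default, a hypothetical choice), and network bias is the mean of forecast minus observed speed over all matched station-time pairs. It computes that bias with and without calm observations. The `Pair` type, the function names, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from statistics import fmean


@dataclass(frozen=True)
class Pair:
    """One matched station-time comparison (hypothetical structure)."""
    station: str
    observed_mps: float
    forecast_mps: float


def is_calm(speed_mps: float, threshold_mps: float = 0.0) -> bool:
    """Treat speeds at or below the (assumed) threshold as calm."""
    return speed_mps <= threshold_mps


def calm_percent(pairs: list[Pair], threshold_mps: float = 0.0) -> float:
    """Percentage of comparisons whose observation is calm."""
    if not pairs:
        raise ValueError("no comparisons supplied")
    n_calm = sum(is_calm(p.observed_mps, threshold_mps) for p in pairs)
    return 100.0 * n_calm / len(pairs)


def network_bias(pairs: list[Pair], drop_calm_obs: bool, threshold_mps: float = 0.0) -> float:
    """Mean (forecast - observed) speed over all pairs kept in the network."""
    used = [p for p in pairs
            if not (drop_calm_obs and is_calm(p.observed_mps, threshold_mps))]
    if not used:
        raise ValueError("no comparisons left after filtering")
    return fmean(p.forecast_mps - p.observed_mps for p in used)


if __name__ == "__main__":
    # Made-up values for illustration only.
    pairs = [
        Pair("A", 0.0, 3.0),
        Pair("B", 5.0, 6.0),
        Pair("C", 10.0, 9.0),
        Pair("D", 0.0, 2.0),
    ]
    print(calm_percent(pairs))                       # 50.0
    print(network_bias(pairs, drop_calm_obs=False))  # 1.25
    print(network_bias(pairs, drop_calm_obs=True))   # 0.0
```

In this toy example, the two calm reports alone turn a zero bias into an apparent overprediction of 1.25 m/s.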

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fovell, R.G.; Gallagher, A. Winds and Gusts during the Thomas Fire. Fire 2018, 1, 47. https://doi.org/10.3390/fire1030047
