A Deep Learning Based Object Identification System for Forest Fire Detection

Abstract

1. Introduction

2. State of the Art

3. Data and Methods

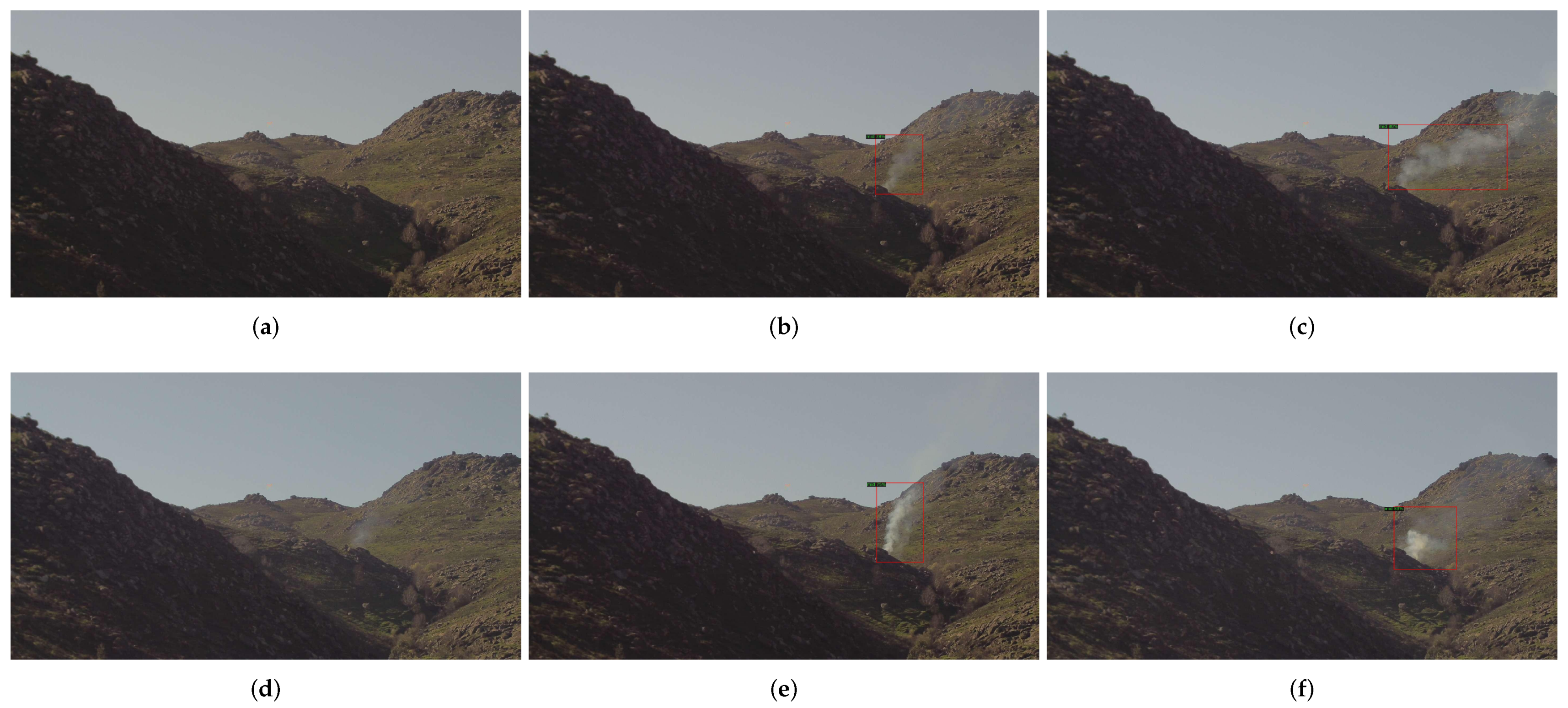

3.1. Dataset

3.2. Architecture of Object Detection Models and Transfer-Learning

3.3. Performance Evaluation

- True positives (TP): the number of images annotated with smoke in which the smoke was correctly detected, i.e., the intersection over union (IoU) between the detection and the annotation is greater than 0.33.

- False positives (FP): the number of images in which smoke was detected at the wrong location, i.e., the IoU between the detection and the annotation is lower than 0.33.

- False negatives (FN): the number of images annotated with smoke in which no smoke was detected.

- True negatives (TNc): the number of images annotated as clouds that were not classified as smoke.

- True negatives (TNe): the number of images without annotations that were not classified as smoke.

- False positives (FPc): the number of images annotated as clouds that were classified as smoke.

- False positives (FPe): the number of images without annotations that were classified as smoke.
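The per-image counts defined above can be sketched in Python as follows. This is an illustrative sketch, not the authors' evaluation code. It assumes boxes given as `(x_min, y_min, x_max, y_max)` pixel coordinates, that an image counts as "classified as smoke" as soon as the model returns at least one smoke box, and that for a smoke image the best-overlapping predicted box decides between TP and FP. The helper names (`iou`, `classify_image`, `summarize`) are hypothetical, and the F1 and false positive rate formulas are the standard ones (F1 = 2TP / (2TP + FP + FN), FPR = FP / (FP + TN)), assumed rather than taken from this section.

```python
from collections import Counter

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format
IOU_THRESHOLD = 0.33  # threshold used in the definitions above


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def classify_image(kind: str, predicted: list[Box], annotated: list[Box]) -> str:
    """Outcome label for one test image; kind is "smoke", "clouds" or "empty"."""
    detected = len(predicted) > 0  # assumption: any predicted smoke box counts
    if kind == "smoke":
        if not detected:
            return "FN"
        # Assumption: the best overlap between any prediction and any annotation decides
        best = max((iou(p, g) for p in predicted for g in annotated), default=0.0)
        return "TP" if best > IOU_THRESHOLD else "FP"
    if kind == "clouds":
        return "FPc" if detected else "TNc"
    if kind == "empty":
        return "FPe" if detected else "TNe"
    raise ValueError(f"unknown image kind: {kind!r}")


def summarize(outcomes: list[str]) -> dict[str, float]:
    """Standard F1 and false positive rates from per-image labels (assumed formulas)."""
    c = Counter(outcomes)

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        "F1": ratio(2 * c["TP"], 2 * c["TP"] + c["FP"] + c["FN"]),
        "FPR_C": ratio(c["FPc"], c["FPc"] + c["TNc"]),
        "FPR_E": ratio(c["FPe"], c["FPe"] + c["TNe"]),
    }


# Usage with toy boxes
outcomes = [
    classify_image("smoke", [(10, 10, 50, 50)], [(12, 12, 48, 52)]),  # IoU ~0.82 -> TP
    classify_image("smoke", [(100, 100, 120, 120)], [(10, 10, 50, 50)]),  # no overlap -> FP
    classify_image("smoke", [], [(10, 10, 50, 50)]),  # nothing detected -> FN
    classify_image("clouds", [(0, 0, 5, 5)], []),  # FPc
    classify_image("clouds", [], []),  # TNc
    classify_image("empty", [], []),  # TNe
]
print(outcomes)
print(summarize(outcomes))  # {'F1': 0.5, 'FPR_C': 0.5, 'FPR_E': 0.0}
```

Separating cloud and empty images into their own counters, as the definitions do, lets the false positive rate be reported per distractor type rather than as a single pooled number.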

4. Results and Discussion

4.1. Test Set with Smoke, Clouds and Empty Images

4.2. Temporal Evolution of Smoke Detection Rate

4.3. Average Precision in Validation Set

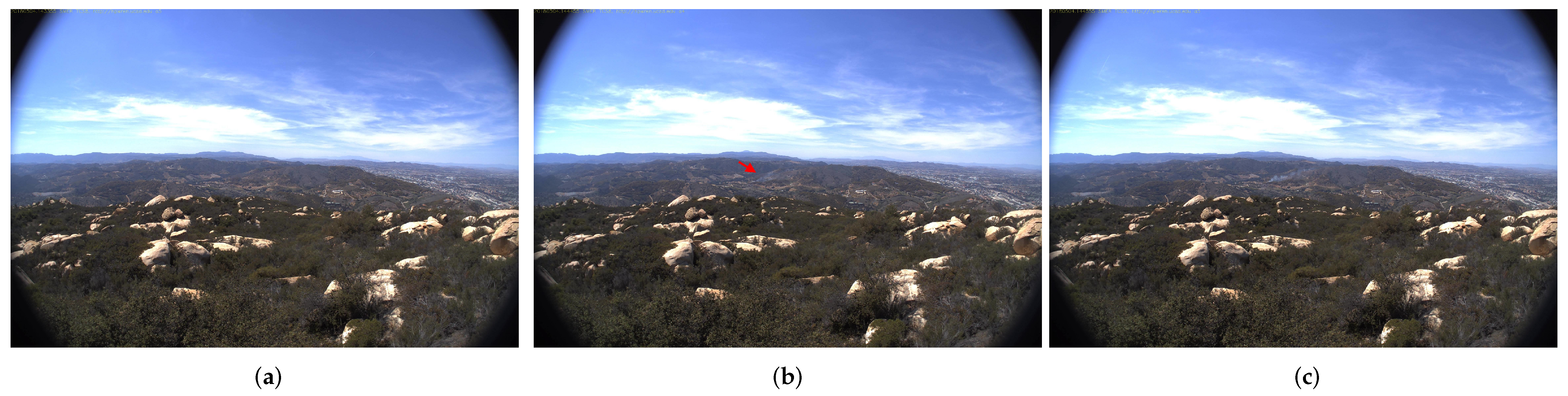

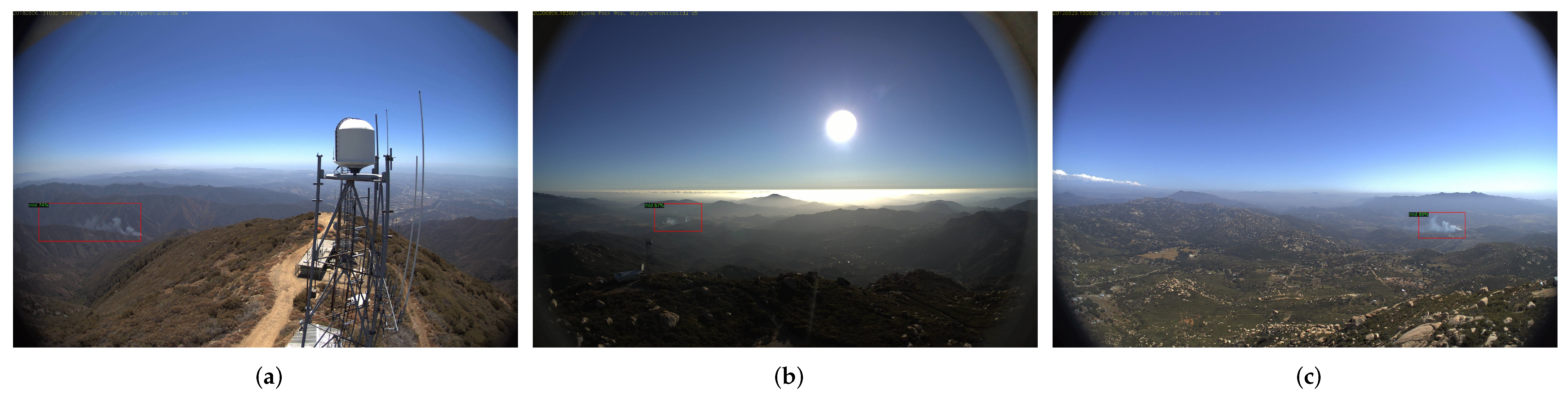

4.4. Comparison with HPWREN Public Database

4.5. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Giglio, L.; Boschetti, L.; Roy, D.P.; Humber, M.L.; Justice, C.O. The Collection 6 MODIS burned area mapping algorithm and product. Remote Sens. Environ. 2018, 217, 72–85.

2. Jain, P.; Coogan, S.C.; Subramanian, S.G.; Crowley, M.; Taylor, S.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505.

3. Milne, M.; Clayton, H.; Dovers, S.; Cary, G.J. Evaluating benefits and costs of wildland fires: Critical review and future applications. Environ. Hazards 2014, 13, 114–132.

4. Finlay, S.E.; Moffat, A.; Gazzard, R.; Baker, D.; Murray, V. Health Impacts of Wildfires. PLoS Curr. 2012, 4, e4f959951cce2c.

5. Haikerwal, A.; Reisen, F.; Sim, M.R.; Abramson, M.J.; Meyer, C.P.; Johnston, F.H.; Dennekamp, M. Impact of smoke from prescribed burning: Is it a public health concern? J. Air Waste Manag. Assoc. 2015, 65, 592–598.

6. Oliveira, M.; Delerue-Matos, C.; Pereira, M.C.; Morais, S. Environmental particulate matter levels during 2017 large forest fires and megafires in the center region of Portugal: A public health concern? Int. J. Environ. Res. Public Health 2020, 17, 1032.

7. Jaffe, D.A.; Wigder, N.L. Ozone production from wildfires: A critical review. Atmos. Environ. 2012, 51, 1–10.

8. Abedi, R. Forest fires (investigation of causes, damages and benefits). New Sci. Technol. 2020, 2, 183–187.

9. Alkhatib, A.A.A. A Review on Forest Fire Detection Techniques. Int. J. Distrib. Sens. Netw. 2014, 10, 597368.

10. Vipin, V. Image Processing Based Forest Fire Detection. Int. J. Emerg. Technol. Adv. Eng. 2012, 2, 87–95.

11. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

12. Yu, L.; Wang, N.; Meng, X. Real-time forest fire detection with wireless sensor networks. In Proceedings of the 2005 International Conference on Wireless Communications, Networking and Mobile Computing, WCNM 2005, Wuhan, China, 26 September 2005; Volume 2, pp. 1214–1217.

13. Martyn, I.; Petrov, Y.; Stepanov, S.; Sidorenko, A.; Vagizov, M. Monitoring forest fires and their consequences using MODIS spectroradiometer data. IOP Conf. Ser. Earth Environ. Sci. 2020, 507, 12019.

14. Manyangadze, T. Forest Fire Detection for Near Real-Time Monitoring Using Geostationary Satellites; International Institute for Geo-Information Science and Earth Observation: Enschede, The Netherlands, 2009.

15. Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

16. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

17. Priya, R.S.; Vani, K. Deep learning based forest fire classification and detection in satellite images. In Proceedings of the 11th International Conference on Advanced Computing, ICoAC 2019, Chennai, India, 18–20 December 2019; pp. 61–65.

18. Hough, G. ForestWatch—A long-range outdoor wildfire detection system. In Proceedings of the WILDFIRE 2007—4th International Wildland Fire Conference, Sevilla, Spain, 13–17 May 2007.

19. Valente De Almeida, R.; Vieira, P. Forest Fire Finder-DOAS application to long-range forest fire detection. Atmos. Meas. Tech. 2017, 10, 2299–2311.

20. Valente de Almeida, R.; Crivellaro, F.; Narciso, M.; Sousa, A.; Vieira, P. Bee2Fire: A Deep Learning Powered Forest Fire Detection System. In Proceedings of the 12th International Conference on Agents and Artificial Intelligence, Valletta, Malta, 22–24 February 2020; SCITEPRESS-Science and Technology Publications: Setúbal, Portugal, 2020; Volume 2, pp. 603–609.

21. Li, P.; Zhao, W. Image fire detection algorithms based on convolutional neural networks. Case Stud. Therm. Eng. 2020, 19, 100625.

22. Larsen, A.; Hanigan, I.; Reich, B.J.; Qin, Y.; Cope, M.; Morgan, G.; Rappold, A.G. A deep learning approach to identify smoke plumes in satellite imagery in near-real time for health risk communication. J. Expo. Sci. Environ. Epidemiol. 2021, 31, 170–176.

23. Toan, N.T.; Thanh Cong, P.; Viet Hung, N.Q.; Jo, J. A deep learning approach for early wildfire detection from hyperspectral satellite images. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications (RiTA), Daejeon, Korea, 1–3 November 2019; pp. 38–45.

24. Tang, Z.; Liu, X.; Chen, H.; Hupy, J.; Yang, B. Deep Learning Based Wildfire Event Object Detection from 4K Aerial Images Acquired by UAS. AI 2020, 1, 166–179.

25. Xiao, Y.; Tian, Z.; Yu, J.; Zhang, Y.; Liu, S.; Du, S.; Lan, X. A review of object detection based on deep learning. Multimed. Tools Appl. 2020, 79, 23729–23791.

26. Zou, Z.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. arXiv 2019, arXiv:1905.05055.

27. Wu, X.; Sahoo, D.; Hoi, S.C. Recent advances in deep learning for object detection. Neurocomputing 2020, 396, 39–64.

28. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; Volume 2016, pp. 779–788.

29. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

30. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

31. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

32. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In European Conference on Computer Vision; Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2016; pp. 21–37.

33. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

34. Lin, T.Y.; Dollar, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

35. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

36. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; Volume 2016, pp. 770–778.

38. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015-Conference Track Proceedings, San Diego, CA, USA, 7–9 May 2015.

39. Barmpoutis, P.; Dimitropoulos, K.; Kaza, K.; Grammalidis, N. Fire Detection from Images Using Faster R-CNN and Multidimensional Texture Analysis. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8301–8305.

40. Zhang, Q.X.; Lin, G.H.; Zhang, Y.M.; Xu, G.; Wang, J.J. Wildland Forest Fire Smoke Detection Based on Faster R-CNN using Synthetic Smoke Images. Procedia Eng. 2018, 211, 441–446.

41. Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217.

42. Valikhujaev, Y.; Abdusalomov, A.; Cho, Y.I. Automatic Fire and Smoke Detection Method for Surveillance Systems Based on Dilated CNNs. Atmosphere 2020, 11, 1241.

43. Pan, H.; Badawi, D.; Cetin, A.E. Computationally efficient wildfire detection method using a deep convolutional network pruned via fourier analysis. Sensors 2020, 20, 2891.

44. Park, M.; Tran, D.Q.; Jung, D.; Park, S. Wildfire-Detection Method Using DenseNet and CycleGAN Data Augmentation-Based Remote Camera Imagery. Remote Sens. 2020, 12, 3715.

45. Witten, I.H.; Frank, E.; Hall, M.A. Data Mining: Practical Machine Learning Tools and Techniques, 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2011; pp. 1–622.

46. University of California San Diego, CA, USA: The High Performance Wireless Research and Education Network, HPWREN Dataset. 2021. Available online: http://hpwren.ucsd.edu/index.html (accessed on 30 August 2021).

47. Wong, K.H. OpenLabeler. 2020. Available online: https://github.com/kinhong/OpenLabeler (accessed on 10 May 2021).

48. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755.

49. Gao, L.; He, Y.; Sun, X.; Jia, X.; Zhang, B. Incorporating negative sample training for ship detection based on deep learning. Sensors 2019, 19, 684.

50. Buslaev, A.; Iglovikov, V.I.; Khvedchenya, E.; Parinov, A.; Druzhinin, M.; Kalinin, A.A. Albumentations: Fast and flexible image augmentations. Information 2020, 11, 125.

51. Wu, Y.; Kirillov, A.; Massa, F.; Lo, W.Y.; Girshick, R. Detectron2. 2019. Available online: https://github.com/facebookresearch/detectron2 (accessed on 1 September 2021).

52. Smith, L.N. Cyclical learning rates for training neural networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision, WACV 2017, Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

| Version | # Smoke Classes | Aug (Y/N) | Clouds (Y/N) | Empty (Y/N) | Images with Smoke | Images with Clouds | Empty Images | Images with Hrz | Images with Mid | Images with Low |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | N | N | N | 750 | 0 | 0 | 0 | 0 | 0 |
| 1A | 1 | Y | N | N | 1500 | 0 | 0 | 0 | 0 | 0 |
| 1C | 1 | N | Y | N | 750 | 750 | 0 | 0 | 0 | 0 |
| 1AC | 1 | Y | Y | N | 1500 | 1500 | 0 | 0 | 0 | 0 |
| 3 | 3 | N | N | N | 0 | 0 | 0 | 111 | 490 | 200 |
| 3A | 3 | Y | N | N | 0 | 0 | 0 | 222 | 980 | 400 |
| 3C | 3 | N | Y | N | 0 | 750 | 0 | 111 | 490 | 200 |
| 3AC | 3 | Y | Y | N | 0 | 1500 | 0 | 222 | 980 | 400 |
| 1E | 1 | N | N | Y | 750 | 0 | 750 | 0 | 0 | 0 |
| 1AE | 1 | Y | N | Y | 1500 | 0 | 1500 | 0 | 0 | 0 |
| 1CE | 1 | N | Y | Y | 750 | 750 | 750 | 0 | 0 | 0 |
| 1ACE | 1 | Y | Y | Y | 1500 | 1500 | 1500 | 0 | 0 | 0 |
| 3E | 3 | N | N | Y | 0 | 0 | 750 | 111 | 490 | 200 |
| 3AE | 3 | Y | N | Y | 0 | 0 | 1500 | 222 | 980 | 400 |
| 3CE | 3 | N | Y | Y | 0 | 750 | 750 | 111 | 490 | 200 |
| 3ACE | 3 | Y | Y | Y | 0 | 1500 | 1500 | 222 | 980 | 400 |
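The version codes in the dataset table follow a regular pattern: the leading digit is the number of smoke classes, `A` marks augmentation, `C` the inclusion of cloud images and `E` the inclusion of empty images. The sketch below expands a code into its image counts. The base counts and the observation that augmentation doubles every count (smoke, clouds and empty alike) are read off the table itself rather than stated elsewhere in this section; the function name `image_counts` is hypothetical.

```python
# Base counts read off the non-augmented rows of the dataset table.
SMOKE_1_CLASS = 750
SMOKE_3_CLASSES = {"Hrz": 111, "Mid": 490, "Low": 200}
CLOUD_IMAGES = 750
EMPTY_IMAGES = 750


def image_counts(version: str) -> dict[str, int]:
    """Expand a dataset version code (e.g. "3ACE") into per-object image counts.

    Leading digit: number of smoke classes (1 or 3).
    A: augmented (every count doubled), C: cloud images added, E: empty images added.
    """
    n_classes, flags = int(version[0]), set(version[1:])
    if n_classes not in (1, 3) or not flags <= {"A", "C", "E"}:
        raise ValueError(f"unrecognised version code: {version!r}")
    factor = 2 if "A" in flags else 1
    counts = dict.fromkeys(["Smoke", "Clouds", "Empty", "Hrz", "Mid", "Low"], 0)
    if n_classes == 1:
        counts["Smoke"] = SMOKE_1_CLASS * factor
    else:
        for name, n in SMOKE_3_CLASSES.items():
            counts[name] = n * factor
    if "C" in flags:
        counts["Clouds"] = CLOUD_IMAGES * factor
    if "E" in flags:
        counts["Empty"] = EMPTY_IMAGES * factor
    return counts


print(image_counts("3ACE"))
# {'Smoke': 0, 'Clouds': 1500, 'Empty': 1500, 'Hrz': 222, 'Mid': 980, 'Low': 400}
```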

| Version | F1_1 (%) | F1_2 (%) | F1_3 (%) | F1_4 (%) | F1_5 (%) | FPR_C (%) | FPR_E (%) | FPR_T (%) | G-Mean (%) |
|---|---|---|---|---|---|---|---|---|---|
| RetinaNet 3500_WUP | | | | | | | | | |
| 3AC | 58.6 | 60.8 | 67.3 | 70.8 | 68.5 | 4.6 | 3.3 | 4.5 | 70.5 |
| 1 | 54.2 | 69.7 | 68.4 | 71.9 | 68.5 | 18.7 | 5.6 | 11.4 | 66.9 |
| 1CE | 29.3 | 31.0 | 29.5 | 30.2 | 24.7 | 0.1 | 0.0 | 0.3 | 41.7 |
| RetinaNet 5000_WUP | | | | | | | | | |
| 1A | 55.1 | 69.7 | 68.4 | 69.6 | 74.3 | 12.1 | 2.4 | 6.9 | 71.7 |
| 1 | 60.0 | 69.7 | 67.3 | 71.9 | 70.9 | 18.3 | 5.2 | 11.0 | 70.4 |
| 3CE | 35.3 | 47.3 | 45.4 | 54.0 | 50.5 | 0.4 | 0.0 | 0.4 | 55.9 |
| FRCNN 5000_WUP | | | | | | | | | |
| 3AE | 64.1 | 75.4 | 68.4 | 84.1 | 78.6 | 6.3 | 1.9 | 4.4 | 78.6 |
| 3CE | 61.4 | 69.7 | 69.6 | 76.3 | 74.3 | 3.1 | 0.7 | 2.6 | 76.3 |
| 3E | 52.6 | 53.6 | 51.5 | 61.0 | 55.1 | 0.8 | 0.2 | 1.2 | 63.4 |
| FRCNN 5000_CYC | | | | | | | | | |
| 3AE | 69.2 | 75.4 | 78.0 | 80.3 | 75.4 | 6.7 | 2.7 | 5.2 | 80.1 |
| 3E | 64.1 | 74.3 | 69.6 | 71.9 | 67.9 | 4.8 | 1.4 | 3.7 | 75.3 |
| 1E | 22.8 | 36.8 | 33.3 | 37.8 | 38.6 | 1.2 | 0.2 | 2.0 | 48.2 |

| Version | DTR_1 (%) | DTR_2 (%) | DTR_3 (%) | DTR_4 (%) | DTR_5 (%) |
|---|---|---|---|---|---|
| RetinaNet_3500_WUP | | | | | |
| 3AC | 38.7 | 57.3 | 68.0 | 77.3 | 85.3 |
| 1 | 34.7 | 60.0 | 72.0 | 78.7 | 85.3 |
| 1CE | 16.0 | 26.7 | 29.3 | 33.3 | 37.3 |
| RetinaNet_5000_WUP | | | | | |
| 1A | 34.7 | 54.7 | 66.7 | 76.0 | 80.0 |
| 1 | 40.0 | 62.7 | 69.3 | 77.3 | 82.7 |
| 3CE | 20.0 | 37.3 | 46.7 | 56.0 | 61.3 |
| FRCNN_5000_WUP | | | | | |
| 3AE | 42.7 | 68.0 | 73.3 | 86.7 | 89.3 |
| 3CE | 41.3 | 61.3 | 68.0 | 77.3 | 85.3 |
| 3E | 33.3 | 46.7 | 49.3 | 60.0 | 62.7 |
| FRCNN_5000_CYC | | | | | |
| 3AE | 48.0 | 69.3 | 80.0 | 86.7 | 92.0 |
| 3E | 44.0 | 66.7 | 72.0 | 80.0 | 82.7 |
| 1E | 12.0 | 28.0 | 34.7 | 40.0 | 44.0 |

| Version | AP (%) | AP50 (%) | AP75 (%) |
|---|---|---|---|
| RetinaNet_3500_WUP | | | |
| 3AC | 38.7 | 75.0 | 36.7 |
| 1 | 39.6 | 83.3 | 31.3 |
| 1CE | 38.0 | 71.3 | 35.7 |
| RetinaNet_5000_WUP | | | |
| 1A | 52.2 | 87.7 | 53.6 |
| 1 | 42.0 | 84.5 | 37.5 |
| 3CE | 38.9 | 72.3 | 39.4 |
| FRCNN_5000_WUP | | | |
| 3AE | 40.1 | 81.2 | 31.6 |
| 3CE | 34.1 | 67.1 | 30.9 |
| 3E | 37.8 | 74.4 | 33.9 |
| FRCNN_5000_CYC | | | |
| 3AE | 36.0 | 73.8 | 32.2 |
| 3E | 38.7 | 73.9 | 36.5 |
| 1E | 24.3 | 55.3 | 16.4 |
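The AP, AP50 and AP75 columns follow the naming of the COCO evaluation protocol [48], in which AP50 and AP75 are the average precision at IoU thresholds of 0.50 and 0.75, and AP is the mean over thresholds from 0.50 to 0.95 in steps of 0.05. Assuming that protocol, a simplified single-class version can be sketched as below: detections are ranked by score, greedily matched to still-unmatched ground-truth boxes, and the monotone precision envelope is sampled at 101 recall points. It omits COCO details such as crowd regions, area ranges and the per-image detection limit, and the function names are hypothetical; it is not the evaluation code behind the table.

```python
import numpy as np

Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr):
    """Single-class AP at one IoU threshold with COCO-style 101-point interpolation.

    detections: list of (image_id, score, box); ground_truth: {image_id: [box, ...]}.
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    hits = []
    # Greedy matching in order of decreasing confidence
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if not matched[img][j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[img][best_j] = True
        hits.append(1.0 if best_j >= 0 else 0.0)
    tp = np.cumsum(hits)
    fp = np.cumsum([1.0 - h for h in hits])
    recall = tp / n_gt
    precision = tp / np.maximum(tp + fp, np.finfo(float).eps)
    # Make precision non-increasing as recall grows (the precision envelope)
    precision = np.maximum.accumulate(precision[::-1])[::-1]
    # Sample at recall = 0.00, 0.01, ..., 1.00; unreachable recall levels count as 0
    idx = np.searchsorted(recall, np.linspace(0.0, 1.0, 101), side="left")
    sampled = [precision[i] if i < len(precision) else 0.0 for i in idx]
    return float(np.mean(sampled))


def coco_ap(detections, ground_truth):
    """Mean AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = np.linspace(0.5, 0.95, 10)
    return float(np.mean([average_precision(detections, ground_truth, t) for t in thresholds]))
```

Because a loosely placed box can pass the 0.50 threshold but fail at 0.75, AP50 is always at least as high as AP75 for the same detections, which is the pattern visible in every row of the table.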

| Video Name | Time Elapsed (min): Method [43] | Time Elapsed (min): FRCNN 5000 CYC 3AE | Time Elapsed (min): FRCNN 5000 WUP 3AE | Time Elapsed (min): RetinaNet 3500 WUP 1CE |
|---|---|---|---|---|
| Lyons Fire | 8 | 5 | 5 | 8 |
| Holy Fire East View | 11 | 3 | 2 | 4 |
| Holy Fire South View | 9 | 2 | 1 | 2 |
| Palisades Fire | 3 | 7 | 5 | 9 |
| Palomar Mountain Fire | 13 | 18 | 10 | 16 |
| Highway Fire | 2 | 4 | 2 | 9 |
| Tomahawk Fire | 5 | 5 | 3 | 5 |
| DeLuz Fire | 11 | 22 | 16 | 136 |
| 20190529_94Fire_lp-s-mobo-c | N.A | 3 | 3 | 3 |
| 20190610_FIRE_bh-w-mobo-c | N.A | 6 | 5 | N.D |
| 20190716_FIRE_bl-s-mobo-c | N.A | 18 | 18 | N.D |
| 20190924_FIRE_sm-n-mobo-c | N.A | 17 | 7 | N.D |
| 20200611_skyline_lp-n-mobo-c | N.A | 5 | 4 | N.D |
| 20200806_SpringsFire_lp-w-mobo-c | N.A | 8 | 1 | 37 |
| 20200822_BrattonFire_lp-e-mobo-c | N.A | 2 | 5 | N.D |
| 20200905_ValleyFire_lp-n-mobo-c | N.A | 4 | 3 | N.D |
| 20160722_FIRE_mw-e-mobo-c | N.A | 3 | 5 | N.D |
| 20170520_FIRE_lp-s-iqeye | N.A | 8 | 2 | N.D |
| 20170625_BBM_bm-n-mobo | N.A | 23 | 21 | 25 |
| 20170708_Whittier_syp-n-mobo-c | N.A | 4 | 5 | 6 |
| 20170722_FIRE_so-s-mobo-c | N.A | 6 | 13 | 27 |
| 20180504_FIRE_smer-tcs8-mobo-c | N.A | 7 | 9 | 16 |
| 20180504_FIRE_smer-tcs8-mobo-c | N.A | 4 | 3 | 9 |
| 20180809_FIRE_mg-w-mobo-c | N.A | 6 | 2 | N.D |
| Mean ± sd for 1–8 | 7.8 ± 3.8 | 8.3 ± 7.7 | 5.5 ± 8.7 | 23.6 ± 9.7 |
| Mean ± sd for 1–24 | N.A | 7.9 ± 6.3 | 6.3 ± 5.4 | 20.8 ± 32.3 |
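The summary rows of the time-elapsed table can be recomputed from the per-video values. In the sketch below, entries marked N.A or N.D are skipped and the population standard deviation (dividing by n rather than n − 1) is used. That choice is an inference, not a convention stated in this section: it reproduces, for example, the 7.8 ± 3.8 entry for Method [43] over videos 1–8 and the 7.9 ± 6.3 and 20.8 ± 32.3 entries over videos 1–24. The function and variable names are hypothetical.

```python
from statistics import mean, pstdev

MISSING = {"N.A", "N.D"}  # entries without a value in the table


def mean_sd(values: list) -> tuple[float, float]:
    """Mean and population standard deviation, skipping missing entries."""
    xs = [float(v) for v in values if v not in MISSING]
    return mean(xs), pstdev(xs)


method_43_videos_1_8 = [8, 11, 9, 3, 13, 2, 5, 11]
retinanet_1ce_videos_1_24 = [8, 4, 2, 9, 16, 9, 5, 136, 3, "N.D", "N.D", "N.D", "N.D", 37,
                             "N.D", "N.D", "N.D", "N.D", 25, 6, 27, 16, 9, "N.D"]

for name, column in [("Method [43], videos 1-8", method_43_videos_1_8),
                     ("RetinaNet 3500 WUP 1CE, videos 1-24", retinanet_1ce_videos_1_24)]:
    m, sd = mean_sd(column)
    print(f"{name}: {m:.1f} ± {sd:.1f}")
```

Note that with a skewed column such as RetinaNet 3500 WUP 1CE, a single slow detection (136 min for the DeLuz Fire) dominates both the mean and the standard deviation.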

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guede-Fernández, F.; Martins, L.; de Almeida, R.V.; Gamboa, H.; Vieira, P. A Deep Learning Based Object Identification System for Forest Fire Detection. Fire 2021, 4, 75. https://doi.org/10.3390/fire4040075

Guede-Fernández F, Martins L, de Almeida RV, Gamboa H, Vieira P. A Deep Learning Based Object Identification System for Forest Fire Detection. Fire. 2021; 4(4):75. https://doi.org/10.3390/fire4040075

Chicago/Turabian Style: Guede-Fernández, Federico, Leonardo Martins, Rui Valente de Almeida, Hugo Gamboa, and Pedro Vieira. 2021. "A Deep Learning Based Object Identification System for Forest Fire Detection" Fire 4, no. 4: 75. https://doi.org/10.3390/fire4040075

APA Style: Guede-Fernández, F., Martins, L., de Almeida, R. V., Gamboa, H., & Vieira, P. (2021). A Deep Learning Based Object Identification System for Forest Fire Detection. Fire, 4(4), 75. https://doi.org/10.3390/fire4040075